Mobile application security flaws caused by developer inattention

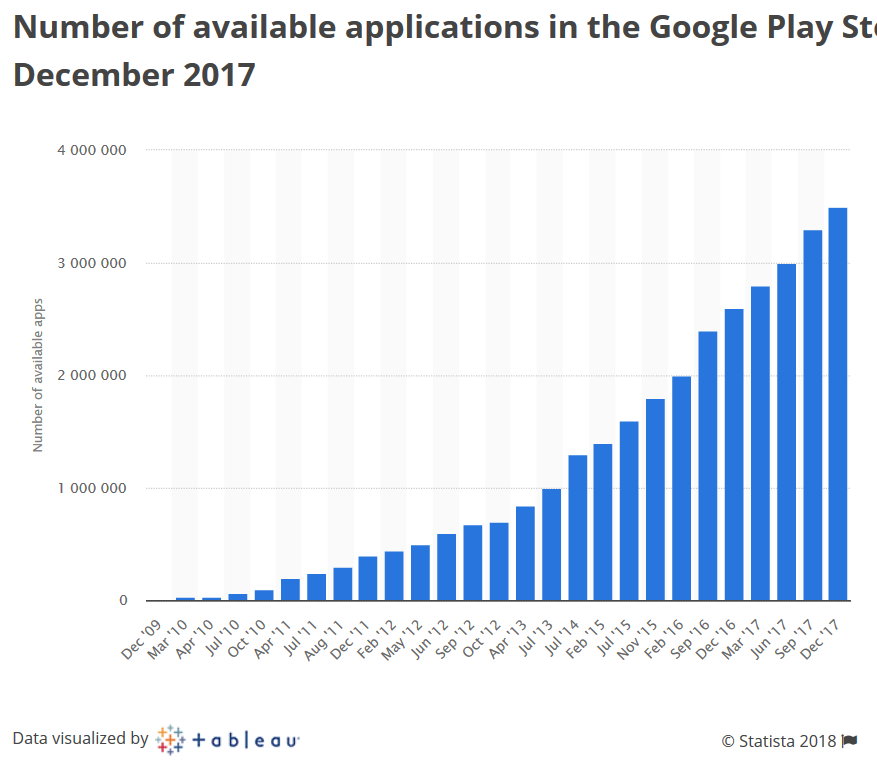

In the second half of 2017, developers published approximately 2,800 apps every day on Google Play. I have not yet found comparable figures for the App Store, but the number is unlikely to be many times lower. Each of these applications handles some amount of data that is stored on the device or transmitted over cellular and Wi-Fi networks.

Unsurprisingly, mobile application data is a primary target for attackers, who not only steal it but also manipulate it for their own ends. The data is also exposed to a number of other problems: fake and look-alike (often untrustworthy) applications, malware, data leakage, weakly protected data or flawed protection, and tools for accessing and decrypting the data.

A little about myself

My name is Yuri. I have been working in various areas of information security for 10 years, including privacy and data breaches. My practical interests are mobile security, cloud computing, forensics, and access and security management. For the past 7 years I have been speaking at national and international conferences (CyberCrimeForum, HackerHalted, DefCamp, NullCon, OWASP, CONFidence, Hacktivity, Hackfest, DeepSec Intelligence, HackMiami, NotaCon, BalcCon, Intelligence Sec, InfoSec NetSysAdmins, etc.), where I draw developers' attention to examples of insecure applications and their consequences, and ask them to think a little more about data security.

How developers can affect security

There are many opinions about how much influence the developer has on security.

- “The developer does not always respond in a timely manner to security reports that highlight application flaws.”

- “The developer did everything in his power to ensure security, so any problems that arise are due solely to user actions.” (This stance can persist until the situation is made public, often with the help of journalists, and even then the effect does not last long.)

- “It's time to stop blaming developers for every security sin.” (This view appeared relatively recently.) In other words, developers are not the only ones involved in creating an application or product.

There are also testers and product managers, for example, and each plays a role; together these roles ensure the overall security of the solution being developed. This opinion was published in an article about a year ago. The point about separation of roles was repeated several times and supplemented with the conclusion that security problems always arise when someone turns a prototype into a commercially successful product. But separating roles also divides responsibility, so security complaints about an application may never reach the right person. Such statements reduce attention to security, and developers do not feel responsible for it until information about the problem becomes public.

Developers have always been, and always will be, the first to work with an application, and they can fix its problems immediately and make it more secure. Being proactive at the early stages also helps developers maintain an adequate level of security. However, keep in mind that fixing a problem takes time that does not depend entirely on the developer. Even to ship a simple update, the developer builds a new version, submits it to the app store and waits for reviewers to approve it (which can take several days); users then have to wait for the update to reach them, and if automatic updates are turned off on their device, install it themselves. So the developer controls this process only partly. Does that justify tolerating and even encouraging such delays? No, it is counterproductive: the developer knows this situation better than anyone and can plan ahead, scheduling new features and security fixes, and can keep a checklist of what must be done in time for each update.

On paper, this all looks like best practice, but look closely and it's a disaster...

People have many needs (personal, professional, work-related), two or more devices and, as a result, more than one service provider and dozens of applications to meet those needs. Despite this flexibility and variety, different software has a lot in common. For example, the technologies used to store, transfer and protect data, even in different implementations, often share the same architectural features.

Some security mechanisms work by default and require no developer involvement, while others do require it. For example, SSL/TLS, which many applications use, is vulnerable to Man-in-the-Middle (MITM) attacks if it is implemented improperly. At the same time, iOS 10 and Android 7 (and later versions) have built-in mechanisms against MITM attacks, which also help avoid government interception of data in countries such as Kazakhstan and Thailand.
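To illustrate what "improper implementation" usually means in practice, here is a minimal sketch that uses Python's standard `ssl` module as a stand-in for a mobile TLS stack (the function names are hypothetical). The typical mistake is switching off certificate and hostname verification, after which any certificate, including one forged by an interceptor, is accepted.

```python
import ssl


def make_insecure_context() -> ssl.SSLContext:
    """Anti-pattern, shown only for contrast: accepts ANY certificate, so a
    MITM proxy with a forged certificate can read and modify the traffic."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be switched off before verify_mode
    ctx.verify_mode = ssl.CERT_NONE  # no certificate chain validation at all
    return ctx


def make_secure_context() -> ssl.SSLContext:
    """Validates the chain against the system trust store and checks that the
    certificate matches the hostname passed as server_hostname."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname=True
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A client would use either context via `ctx.wrap_socket(sock, server_hostname=host)`; only the second one rejects a forged certificate. Note that even the secure context trusts every root in the system store, including a state root certificate the user was told to install, which is the gap SSL pinning is meant to close.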

The relevant law in Kazakhstan was adopted in December 2014, and almost immediately one of the major operators published a notice on its website about the need to install the state root SSL certificate.

Government agencies also engaged with Mozilla representatives about having the state root certificate considered as trusted in Mozilla's list of certificates.

- Mozilla bug report - Add Root Cert of Republic of Kazakhstan

- Mozilla CA Program (in pdf)

- Gov Cert of Kazakhstan

The published report “Who's That Knocking at My Door? Understanding Surveillance in Thailand” states that Microsoft provides access to root certificates in its dealings with Thailand. According to other reports, so far only Microsoft does this (Apple does not), but according to StatCounter, 85% of devices in Thailand run Windows.

These mechanisms are enabled by default regardless of the developer's actions, but they are implemented differently: on Android the mechanism works entirely on its own, while on iOS it is managed, allowing trust in third-party SSL certificates to be enabled.

Besides network problems, there are problems with locally stored data (the internal memory of mobile devices). Most applications make heavy use of local storage for cached data, to reduce network usage and give fast access to user data (conversations, files, multimedia, etc.). Many of these applications store user data with no protection or with protection that is too weak, for example with the secret key stored right next to the data encrypted with it.
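One common alternative to keeping the key next to the ciphertext is not to store the key at all: derive it from a user secret whenever it is needed and persist only a random salt. The sketch below uses PBKDF2 from Python's `hashlib`; the iteration count and function names are illustrative assumptions, and the encryption step itself (e.g. AES-GCM) is omitted because the standard library has no cipher. A derived key is only as strong as the passphrase, and on real devices the platform keystore (Android Keystore, iOS Keychain) is the usual home for keys.

```python
import hashlib
import os

# Illustrative work factor (an assumption); tune it for the target hardware.
PBKDF2_ITERATIONS = 200_000


def new_salt() -> bytes:
    """Random, non-secret salt; it may be stored next to the encrypted data."""
    return os.urandom(16)


def derive_key(passphrase: str, salt: bytes) -> bytes:
    """Derive a 32-byte key (e.g. for AES-256) from the user's passphrase.

    The key is recomputed when needed and never written to storage, so a copy
    of the app folder contains only the salt and the ciphertext."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, PBKDF2_ITERATIONS, dklen=32
    )
```

The design choice is that the only secret is something the user knows, so extracting the app folder (directly or from a backup) no longer hands over key and data together.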

This hole in the fence even has its own page on Wikimapia

There are also specialized forensic solutions that actively turn research into these problems into automated methods of accessing application data and separately stored user data. The many security problems often make data extraction easy and convenient.

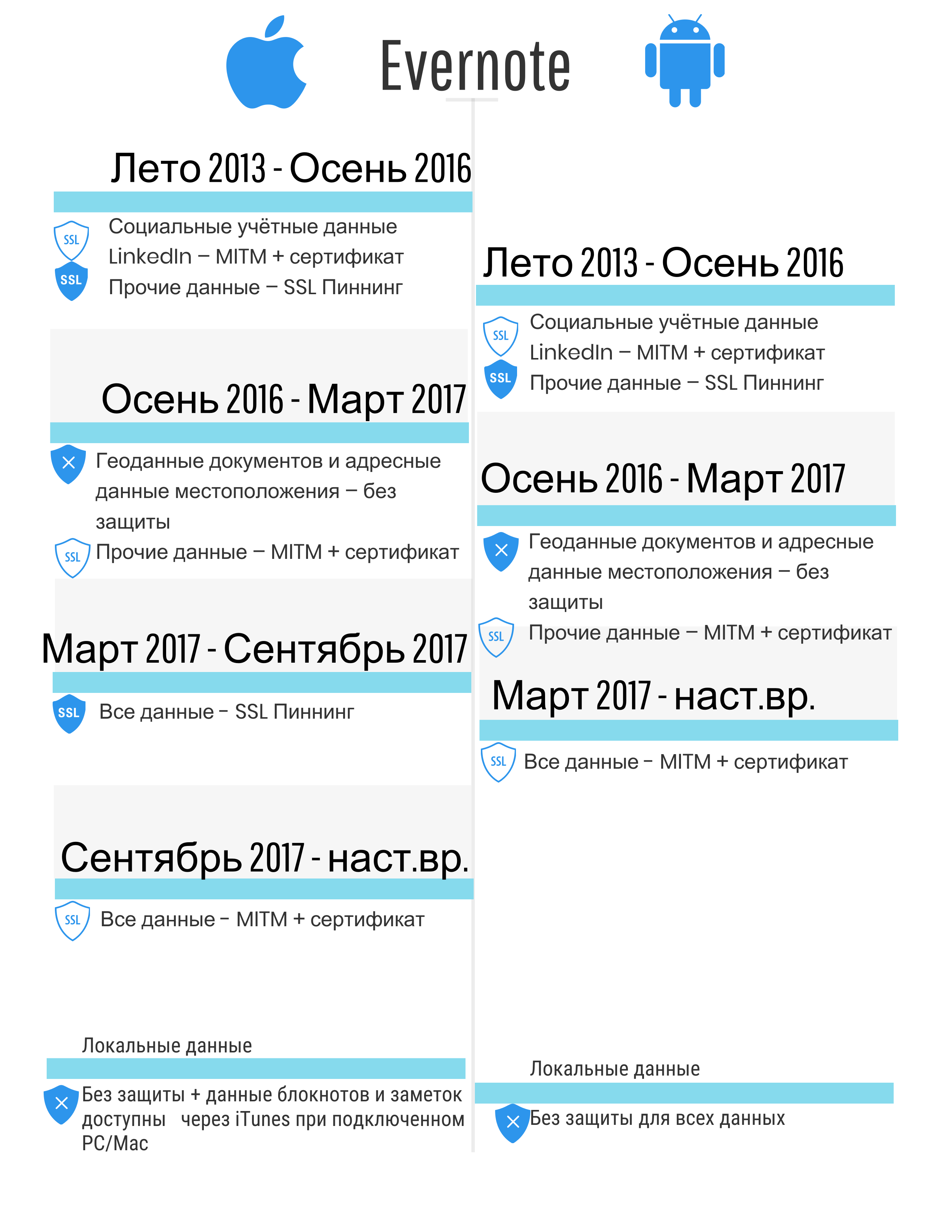

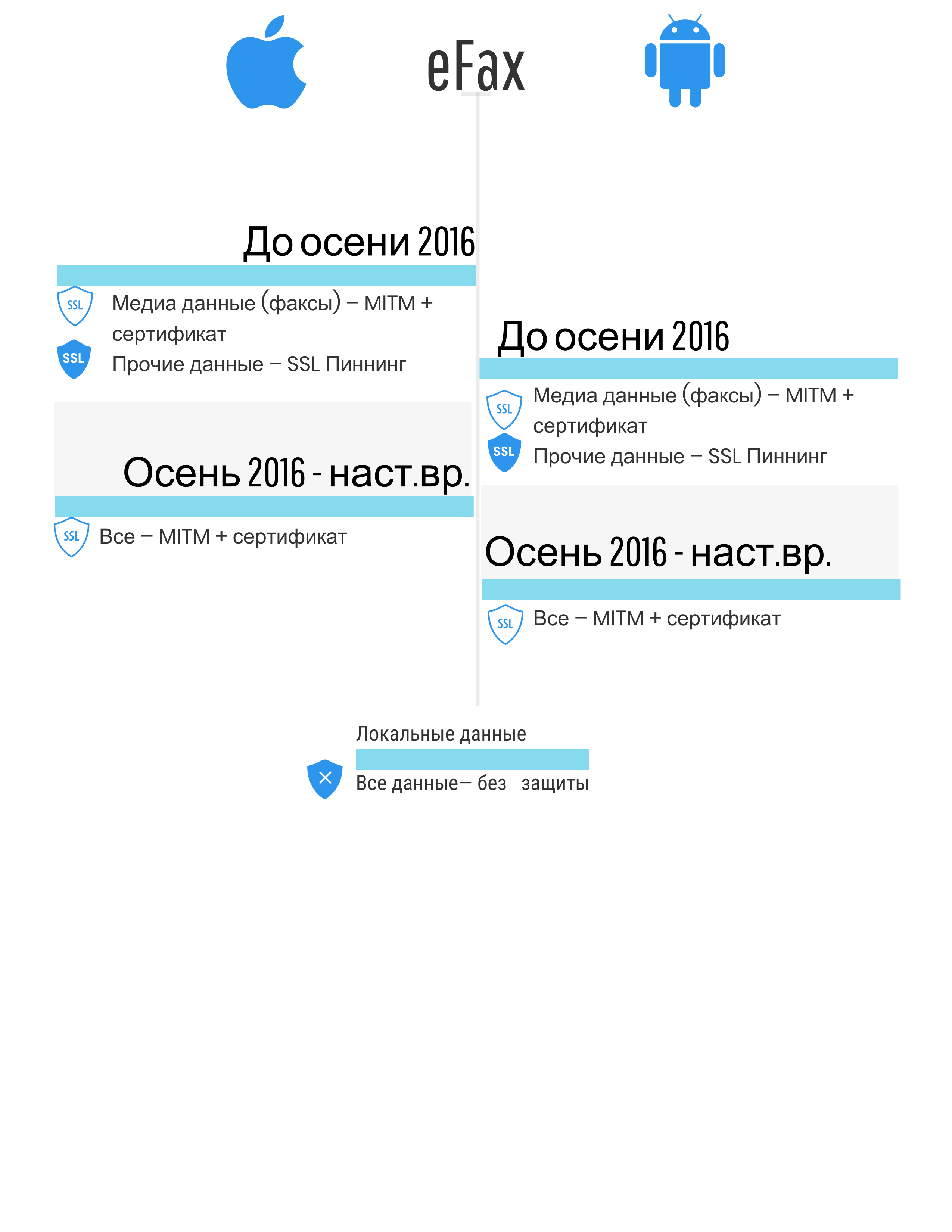

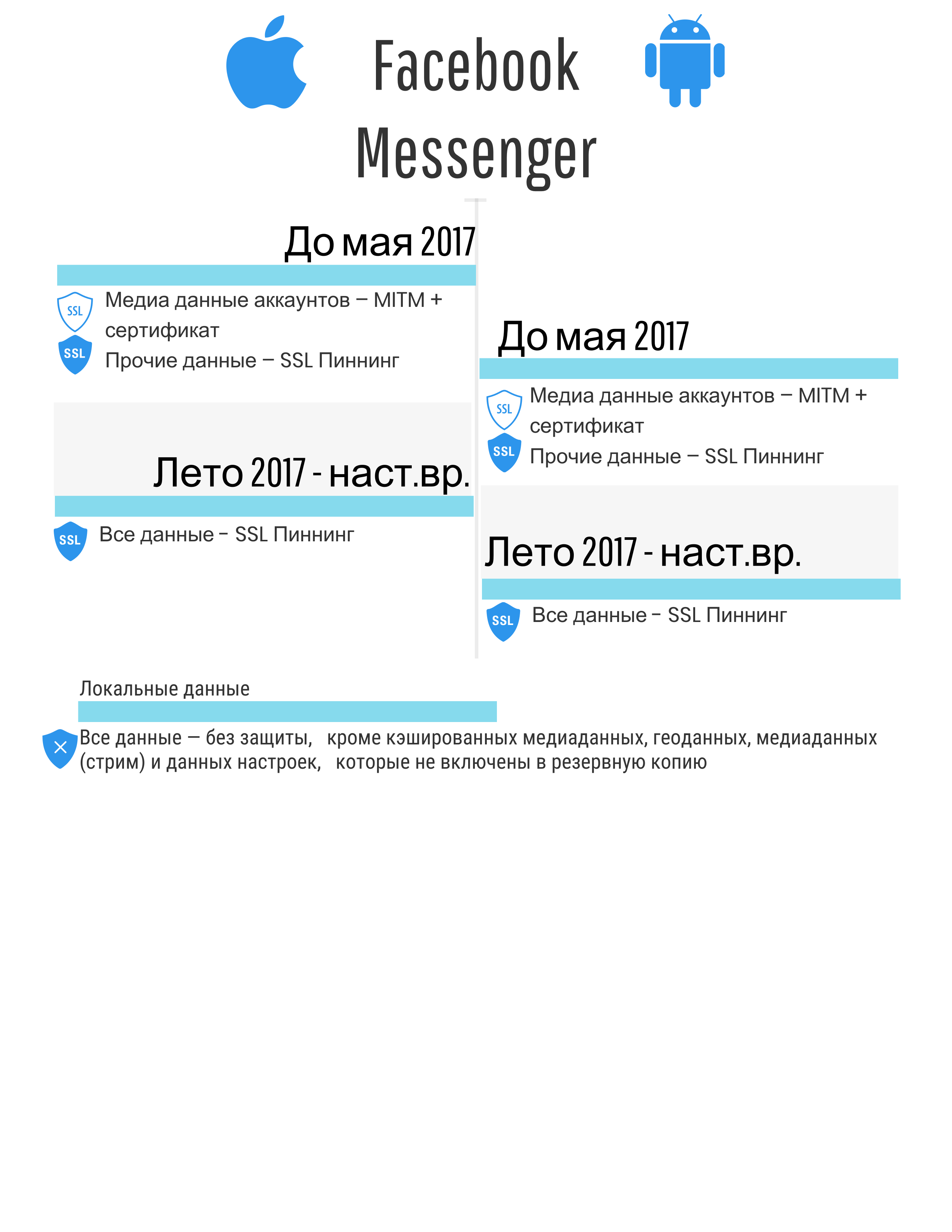

Results of data security checks over 4 years

The figures below summarize the results of a study of protection mechanisms for local and network data. Only the mechanisms actually implemented in the examined applications are described; for example, if an application does not implement data encryption, no encryption information is given for it.

- Without protection - data is stored or transmitted with no protection or encryption at all, "as is", in the clear.

- MITM without a root certificate - data can be intercepted using any fake certificate (none of the examples shown here, but until spring 2017 the AeroExpress app was one).

- MITM + root certificate - a MITM attack is possible when the attacker uses a special certificate, including a fake or stolen root SSL certificate, to intercept and decrypt traffic. Users may be made to install such a certificate themselves, for example on the developers' recommendation or to meet the requirements of a particular state (such as Kazakhstan or Thailand, mentioned above).

- SSL Pinning (here or here) - a MITM attack is impossible unless the device has been compromised (jailbroken or rooted), even with the root certificate described in the previous item.

- Strong protection - broadly speaking, the application is protected against attacks and obtaining its data takes considerable effort; note that this category does not use HTTPS. This matters because the overwhelming majority of applications use HTTPS, since it is easy to implement and well supported on the server side, and there are many interception tools designed specifically around the weaknesses of HTTPS.

- Inclusion in the backup - a copy of the data (up to 100% of the data the application stores) can be accessed with various programs, including forensic solutions, without a jailbroken or rooted device. An application keeps its data in its working folder, and some of that data is included in the backup. Where the data is placed, and whether those folders are backed up, is up to the developer, so the backup can end up duplicating 100% of the data. Getting access to a backup is considered easier than rooting or jailbreaking a device (on new devices; on old ones it makes no difference).
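SSL pinning, listed above, means the application trusts only a certificate (or public key) it already knows, instead of any certificate that chains to a trusted root. Here is a minimal sketch in Python, assuming the app ships Base64-encoded SHA-256 hashes of the server's DER-encoded certificate; production implementations usually pin the public key (SPKI) instead so that certificate renewal does not break the app. All names are hypothetical.

```python
from __future__ import annotations

import base64
import hashlib
import socket
import ssl


def cert_pin(der_cert: bytes) -> str:
    """Base64-encoded SHA-256 hash of a DER-encoded certificate."""
    return base64.b64encode(hashlib.sha256(der_cert).digest()).decode("ascii")


def is_pinned(der_cert: bytes, pins: set[str]) -> bool:
    """True only if the certificate hash is one of the pins shipped with the app."""
    return cert_pin(der_cert) in pins


def fetch_peer_cert(host: str, port: int = 443) -> bytes:
    """Connect with normal chain and hostname verification, then return the
    server certificate in DER form so it can be checked against the pins."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            der = tls.getpeercert(binary_form=True)
    if der is None:
        raise ssl.SSLError("server presented no certificate")
    return der
```

Pinning is an extra check on top of normal validation: a certificate signed by an installed state root passes `create_default_context()` but fails `is_pinned`. In a real client the check runs on the same connection, before any request is sent. As the list notes, on a rooted or jailbroken device the check itself can be patched out.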

A brief explanation of the data types:

- All data - account information, credentials, chats, application and user databases, configuration and cached data: everything the user has seen in the application among his data and documents.

- Media data - all data, including photo, video and audio (except media streams).

- Geo Docs data - geolocation associated with user documents, files and notes, such as coordinates, a description of the location, an address, a city, etc.

- Location address data - geolocation not tied to specific data items, but likewise consisting of coordinates, a description of the place, an address, a city, etc.

- Social credentials - username or email address, and a password or a token instead of a password (the most common implementation), for social networks such as Facebook, Twitter, LinkedIn, etc.

- Photo-location - information about a place and its address attached to photographs of that place, for example an image of the building at X.

- Information about the application - everything available in the application's own settings: changing passwords, setting up credit cards, choosing trusted friends to recover a lost account.

- Account media data - multimedia files related to the accounts of the owner and his friends, in other words profile images and their cached copies kept on the device.

Typical problems

Unsafe data storage

Many applications store account data, geolocation or banking information in the clear. For some people, this may be a reason to delete the application as soon as it becomes widely known.

Data overlapping between applications (each application may hold different data) usually means that additional user accounts can be compromised. For example, an application that is not a social network may offer sign-in through social networks and store the credentials used (for example, a login and an OpenID token) in plain form.
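To show how little effort "stored in the clear" demands from an attacker or a forensic tool, here is a minimal sketch that scans an Android SharedPreferences XML file (the `<map>` of `<string name="...">` entries that apps keep under their `shared_prefs/` folder) for credential-like keys. The keyword list and function name are illustrative assumptions; real tools use much richer heuristics.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Hypothetical keyword list for illustration only.
SUSPICIOUS_KEYS = ("password", "passwd", "token", "secret", "session")


def find_plaintext_credentials(prefs_xml: str) -> dict[str, str]:
    """Return the <string> entries of a SharedPreferences file whose names
    look like credentials; their values are readable exactly as stored."""
    root = ET.fromstring(prefs_xml)  # the root element is <map>
    found: dict[str, str] = {}
    for entry in root.iter("string"):
        name = entry.get("name", "")
        if any(word in name.lower() for word in SUSPICIOUS_KEYS):
            found[name] = entry.text or ""
    return found
```

If the application did not exclude this folder, the same file may also be present in a device backup, so the scan works without root access.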

Unsafe communication

Even among the examples reviewed, the developers of half the applications do not follow the recommendations for implementing security mechanisms correctly. SSL is the most popular way to secure communication in applications, and every security guide says that certificates, including the root certificate, must be checked to prevent data from being compromised. Yet the lack of proper protection often makes it possible to intercept the traffic of mobile applications.

Data leakage

Many popular mobile apps, especially games, collect a huge amount of personal data to profile and personalize the device owner. This includes age, gender, geolocation, social network activity, habits (favorite routes, visited places, music, culinary preferences) and much more. As a result, just 2, 3 or 5 applications together may reveal the maximum amount of information about the user. However, given the purpose and functions of the application, collecting such information violates the requirements of many security documents and recommendations. The problem is aggravated by user agreements that often fail to describe the protection mechanisms or the protected data, or describe them inaccurately. And nobody reads them anyway.

Habr readers, tell your friends that it is worth limiting applications' access to personal data through operating system settings, checking permissions during installation, and reading store descriptions as well as reviews by security specialists.

3rd party code

Using third-party libraries speeds up development and lets you bring other developers' experience into your own application. However, that experience comes with all its problems, security problems included. From a security standpoint, this practice can split protection into two or more parts: the same type of data, such as a password, ends up in several places (for different user scenarios) with different levels of protection (as with Facebook).

Deferred security fix

Creating an application and publishing it in the store does not mean the development cycle is complete. On the contrary, developers are just beginning to add new features, fix security bugs, inadvertently introduce new ones, remove old features and so on, which allows attackers not only to find the resulting problems but also to exploit them effectively.

In the long run, no application stays reliable.

At any given moment, an application may be reliable, weak or unreliable. Updates also break the assumption that each new version fixes problems (a new version may be less secure than the old one), even though for some applications updating is mandatory. In practice, real security concerns continue to be ignored.

As a result, security bugs appear that let attackers compromise users again and again. One would expect that the more experienced the development team, the fewer such problems reach the final product. But studying popular applications shows otherwise. This is because teams treat working software as the primary measure of progress, i.e. functionality is the indicator of success. At the same time, examples show that developers can still build reliable applications and keep them secure over time by using the right tools and sets of requirements.


Source: https://habr.com/ru/post/353112/
