Effective use of AWS spot instances

Spot instances are essentially spare AWS capacity sold at a steep discount. The catch is that AWS can shut an instance down and reclaim it at any time. In this article I will cover the specifics of this offering and the practices for working with it.

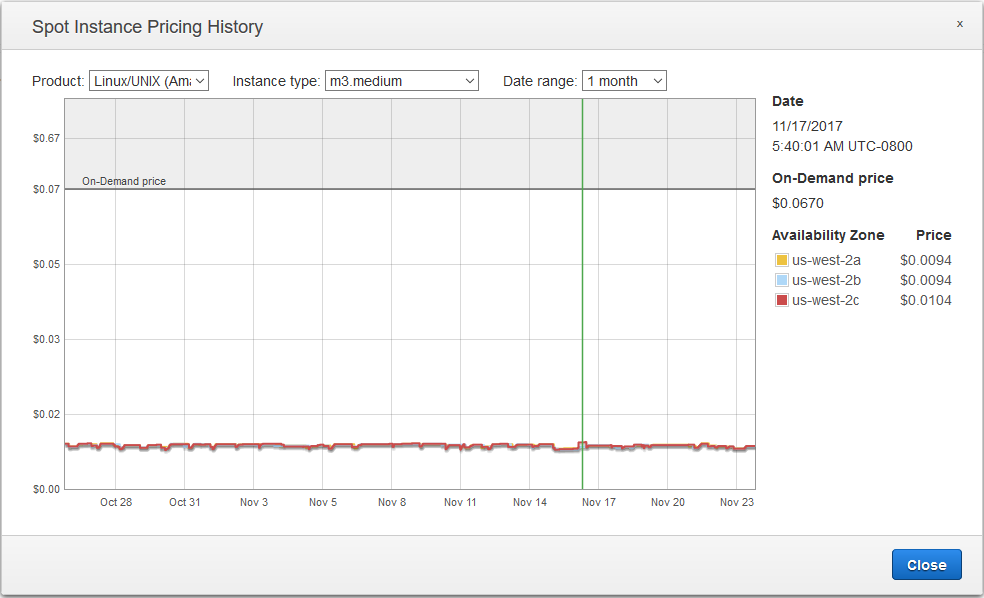

The price of a spot instance changes over time. When placing a request you make a bid: you specify the maximum price you are willing to pay. The final price is determined by the balance between bids and spare capacity, and it differs between regions and even between availability zones within one region.
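To see how much the price differs between zones, current prices can be compared directly. Below is a minimal sketch, assuming the input is a list of "zone price" lines. The sample prices are made up for illustration, and the AWS CLI call in the comment is only one possible way to obtain real data.

```shell
# Pick the cheapest availability zone from "zone price" lines on stdin.
# Real input could come from something like (instance type is an example):
#   aws ec2 describe-spot-price-history --instance-types t2.large \
#       --product-descriptions "Linux/UNIX" \
#       --start-time "$(date -u +%Y-%m-%dT%H:%M:%SZ)" \
#       --query 'SpotPriceHistory[].[AvailabilityZone,SpotPrice]' --output text
cheapest_zone() {
    # Numeric sort on the second field; LC_ALL=C keeps "." as the decimal point.
    LC_ALL=C sort -k2,2n | head -n 1
}

# Hypothetical prices in USD per hour, for illustration only.
printf '%s\n' \
    'eu-west-1a 0.0310' \
    'eu-west-1b 0.0284' \
    'eu-west-1c 0.0342' | cheapest_zone
```

With the sample data the function prints the `eu-west-1b` line, since it has the lowest price.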

At some point the market price may exceed the price of a regular on-demand instance. That is why you should not bid excessively: you really can end up paying $1000 per hour for an instance. If you do not know what to bid, specify the on-demand price (it is the default).
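The rule of thumb "never bid above on-demand" is easy to enforce in a script. This is a small sketch, not part of any AWS tooling: `cap_max_price` is a hypothetical helper, and the prices are invented for the example.

```shell
# Print the smaller of the desired maximum price and the on-demand price.
cap_max_price() {
    awk -v want="$1" -v on_demand="$2" \
        'BEGIN { if (want + 0 < on_demand + 0) print want; else print on_demand }'
}

# Hypothetical numbers: a careless $1000 limit against a $0.0928 on-demand price.
cap_max_price 1000 0.0928   # prints 0.0928
cap_max_price 0.05 0.0928   # prints 0.05
```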

The life cycle of a spot instance

So, we create a request and AWS allocates instances to us. As soon as Amazon needs the capacity back, or the price exceeds the limit we specified, the request is closed. This means our instances will be terminated or hibernated.

A request can also be closed because of an error in the request itself, and here you have to be careful. For example, you created a request and received instances, and then the associated IAM role was deleted. The request will be closed with the status “error”, and the instances will be stopped.

And of course, you can cancel the request yourself at any time, which will also stop the instances.

The request itself may be:

- One-time - as soon as the instances are taken from us, the request is closed.

- Persistent - the request stays open, and instances come back to us once it can be fulfilled again.

- With a guaranteed run time of 1 to 6 hours.
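The three request kinds map onto options of `aws ec2 request-spot-instances`. The helper below is only an illustrative sketch: `spot_request_flags` is a made-up name, and it assumes that defined-duration requests are available to your account.

```shell
# Map a request kind from the list above to request-spot-instances flags.
# Usage: spot_request_flags one-time | persistent | block <hours>
spot_request_flags() {
    case "$1" in
        one-time|persistent)
            echo "--type $1" ;;
        block)
            # The duration is passed in minutes, in 60-minute steps up to 360.
            if [ "$2" -ge 1 ] 2>/dev/null && [ "$2" -le 6 ]; then
                echo "--block-duration-minutes $(( $2 * 60 ))"
            else
                echo "block duration must be 1-6 hours" >&2
                return 1
            fi ;;
        *)
            echo "unknown request kind: $1" >&2
            return 1 ;;
    esac
}

spot_request_flags persistent   # prints: --type persistent
spot_request_flags block 3      # prints: --block-duration-minutes 180

# Possible use (price and spec.json are placeholders):
#   aws ec2 request-spot-instances $(spot_request_flags persistent) \
#       --spot-price 0.05 --instance-count 1 \
#       --launch-specification file://spec.json
```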

As a result, a service that aims for 100% uptime has two main tasks:

- Form optimal spot requests

- Handle instance interruption events

Tools

One of the most powerful tools for working with spot requests is Spot Fleet. It forms requests dynamically so that the conditions you specified are satisfied. For example, if instances have become more expensive in one availability zone, you can quickly launch the same ones in another. There is also a very handy feature: the “weight” of an instance. Say we need 100 single-core nodes to perform a task, or 50 dual-core ones. With T2 instances as an example, this means we can use 100 small, or 50 large, or 25 xlarge. Optimal distribution and redistribution across these options minimizes both the cost and the likelihood that the request goes unfulfilled. Even so, there remains a possibility that no availability zone has the required number of instances matching our parameters.
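The weight arithmetic from the T2 example can be written down explicitly. This is a minimal sketch: `instances_needed` is a hypothetical helper, one capacity unit is assumed to equal one core, and the weights 1, 2 and 4 are the core counts of the small, large and xlarge sizes from the example.

```shell
# Number of instances needed to reach a target capacity, rounding up.
# $1 is the target capacity, $2 is the weight of one instance.
instances_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

target=100   # capacity units; here one unit is one core
for entry in small:1 large:2 xlarge:4; do
    name=${entry%%:*}     # part before the colon
    weight=${entry##*:}   # part after the colon
    echo "t2.$name x $(instances_needed "$target" "$weight")"
done
```

The loop prints 100, 50 and 25 for the three sizes, matching the numbers in the text. Rounding up matters for weights that do not divide the target evenly: with a weight of 8, 13 instances would be needed.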

Fortunately, AWS gives us 2 minutes between deciding to stop an instance and actually shutting it down. From that moment, the scheduled termination time is returned by the instance metadata URL:

http://169.254.169.254/latest/meta-data/spot/termination-time

The simplest handler, which we can run as a daemon, would look something like this:

    #!/usr/bin/env bash
    # Poll the instance metadata service. The endpoint answers 404 until
    # AWS schedules the instance for termination.
    # Note: if IMDSv2 is enforced, the request also needs a session token header.
    URL="http://169.254.169.254/latest/meta-data/spot/termination-time"

    while true; do
        status=$(curl -s -o /dev/null -w '%{http_code}' "$URL")
        if [ "$status" = "200" ]; then
            echo "Instance is going to be terminated soon"
            # Execute pre-shutdown stuff
            break
        fi
        # Spot instance is fine.
        sleep 5
    done

Let's complicate the task a bit: our instances are attached to an Elastic Load Balancer (ELB). You can use the daemon from the snippet above and report the status to the balancer via the API. But there is a more elegant way: the SeeSpot project. In short, the daemon watches /spot/termination-time and, optionally, the health check URL of your service. As soon as AWS is about to reclaim the instance, SeeSpot marks it as OutOfService in the ELB and can optionally run a final cleanup task.
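For the first option, reporting to the balancer from our own daemon, the pre-shutdown step could call the AWS CLI. This is a rough sketch for a Classic Load Balancer: `deregister_from_elb`, the `DRY_RUN` switch and the balancer name `my-elb` are illustrative assumptions, not part of SeeSpot or of the original handler.

```shell
# Deregister an instance from a Classic Load Balancer.
# $1 is the balancer name, $2 is the instance ID.
# With DRY_RUN=1 the command is printed instead of executed.
deregister_from_elb() {
    set -- aws elb deregister-instances-from-load-balancer \
        --load-balancer-name "$1" --instances "$2"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# In the termination handler, before the other pre-shutdown tasks:
#   id=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)
#   deregister_from_elb my-elb "$id"    # "my-elb" is a placeholder name

# Dry run with a made-up instance ID, to show the command that would be run.
DRY_RUN=1 deregister_from_elb my-elb i-0123456789abcdef0
```

The dry-run mode makes it possible to check the generated command on a machine without AWS credentials before wiring the function into the daemon.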

So, we have figured out how to handle the shutdown properly. What remains is to learn how to keep the system at the required capacity if our instances are suddenly taken away. Here the autospotting project will help us. The idea is as follows: we create a regular autoscaling group containing on-demand instances. The autospotting script finds these instances (the search is done by tag) and, one by one, replaces them with equivalent spot instances. Autoscaling groups have their own health check. As soon as one of the members fails it, the group tries to restore its size and creates a “healthy” instance of the original type, which was on-demand. In this way we can wait out, for example, price spikes. Once spot requests can be fulfilled again, autospotting will resume the gradual replacement. I will add that the project is quite well made and, among other things, can be set up with Terraform or CloudFormation.

In conclusion, I recommend using spot instances for stateless services where possible, or keeping state in S3 / EFS. For ECS configurations, you need to think about rebalancing tasks.


Source: https://habr.com/ru/post/353102/
