Applying convolutional neural networks to NLP problems

When we hear about convolutional neural networks (CNNs), we usually think of computer vision. CNNs were behind the breakthroughs in image classification: the famous AlexNet won the ImageNet competition in 2012 and set off the boom of interest in the field. Since then, convolutional networks have achieved great success in image recognition, because they are organized like the visual cortex of the brain: they can concentrate on a small region and pick out the important features in it. As it turns out, CNNs are good not only at this but also at Natural Language Processing (NLP) tasks. Moreover, a recent paper [1] by authors from Intel and Carnegie Mellon University argues that they suit these tasks even better than RNNs, which have reigned supreme in the field in recent years.

First, a little theory. What is convolution? We will not dwell on this in detail, since a ton of materials has already been written about this, but still it’s worth a quick run. There is a beautiful visualization from Stanford that allows you to grasp the essence:

A source

It is necessary to introduce basic concepts, so that later we understand each other. A window that goes through a large matrix is called a filter (in the English version of kernel, filter or feature detector, so you can find translations and tracing of these terms do not worry, it's all the same). The filter is superimposed on a section of a large matrix and each value is multiplied with its corresponding filter value (the red numbers are lower and to the right of the black digits of the main matrix). Then everything turned out is added up and the output (“filtered”) value is obtained.

')

The window goes through a large matrix with some step, which in English is called stride. This step is horizontal and vertical (although the latter will not be useful to us).

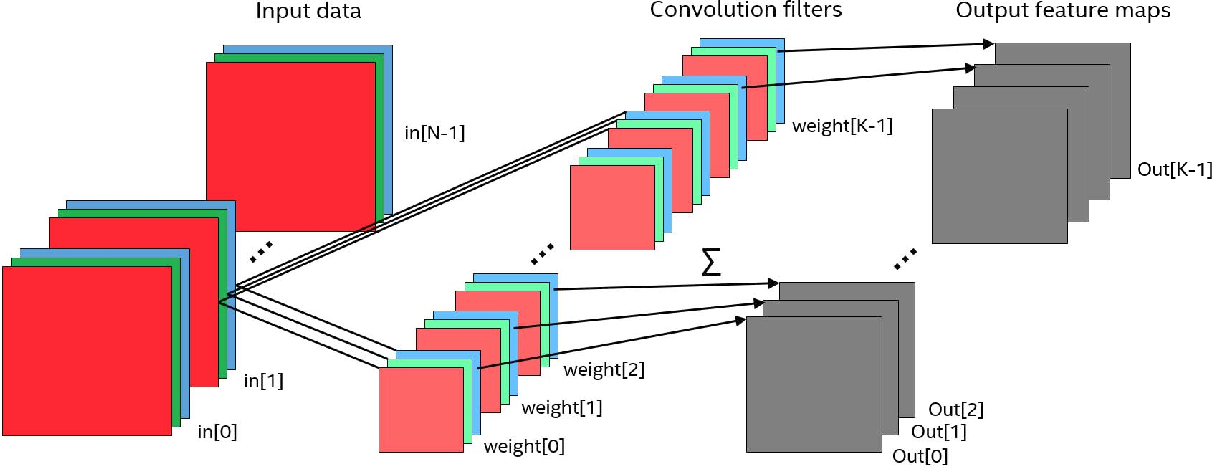

It remains to introduce an important concept of the channel. Channels in images are base colors known to many, for example, if we are talking about a simple and common RGB color coding scheme (Red - red, Green - green, Blue - blue), then it is assumed that of these three basic colors, by mixing them we can get any color. The key word here is “blending”, all three basic colors exist simultaneously, and can be obtained from, for example, the white light of the sun using a filter of the desired color (do you feel the terminology begins to make sense?).

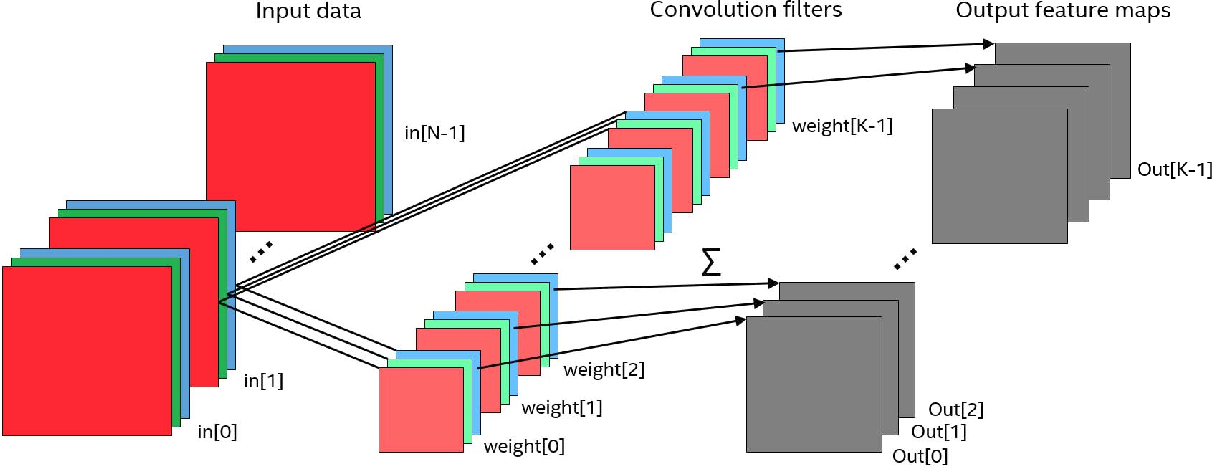

And so it turns out that we have an image, it has channels and our filter walks along it with the necessary step. It remains to understand - what to do with these channels? With these channels we do the following - each filter (that is, a matrix of small size) is superimposed on the original matrix simultaneously on all three channels. The results are simply summarized (which is logical, if you look at it, in the end, channels are our way of working with a continuous physical spectrum of light).

It is necessary to mention one more detail, without which further understanding will be difficult: I will reveal to you a terrible secret, there are much more filters in convolution networks. What does so much more mean? This means that we have n filters that do the same job. They walk with a window on the matrix and are considering something. It would seem, why do one job twice? One and more than one - due to the different initialization of the filter matrices, in the process of learning they begin to pay attention to different details. For example, one filter looks at the line, and the other at a particular color.

Source: cs231n

This is a visualization of different filters of the same layer of the same network. See that they look at completely different features of the image?

All we are armed with terminology in order to move on. In parallel with the terminology, we figured out how the convolutional layer works. It is the base for convolutional neural networks. There is another base layer for CNN - this is the so-called pooling layer.

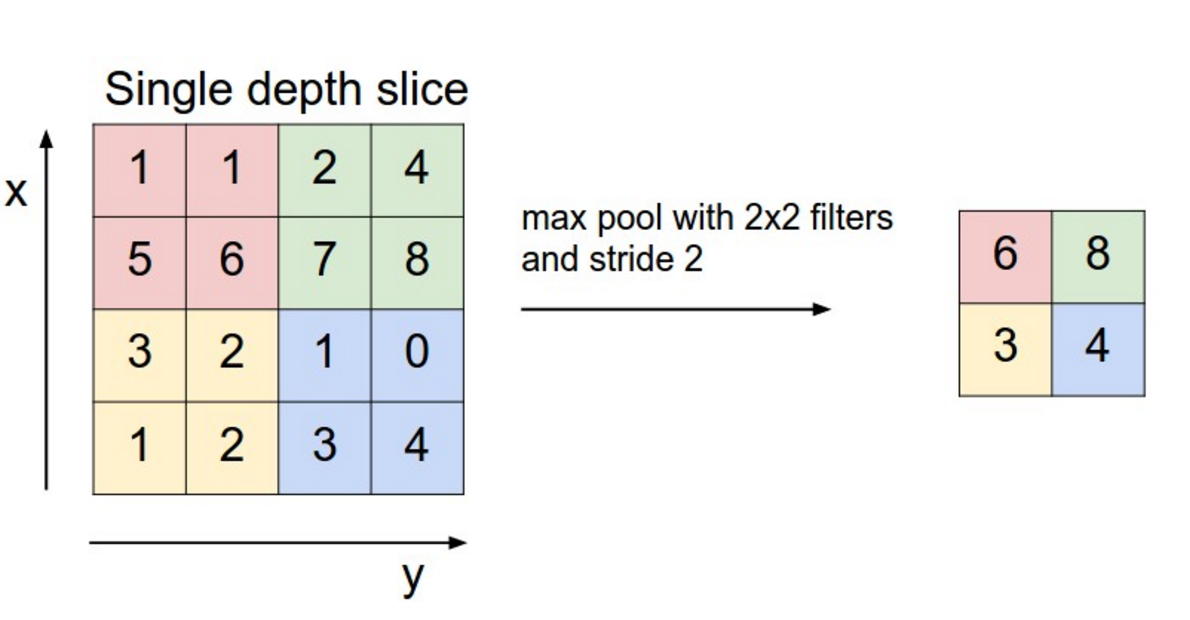

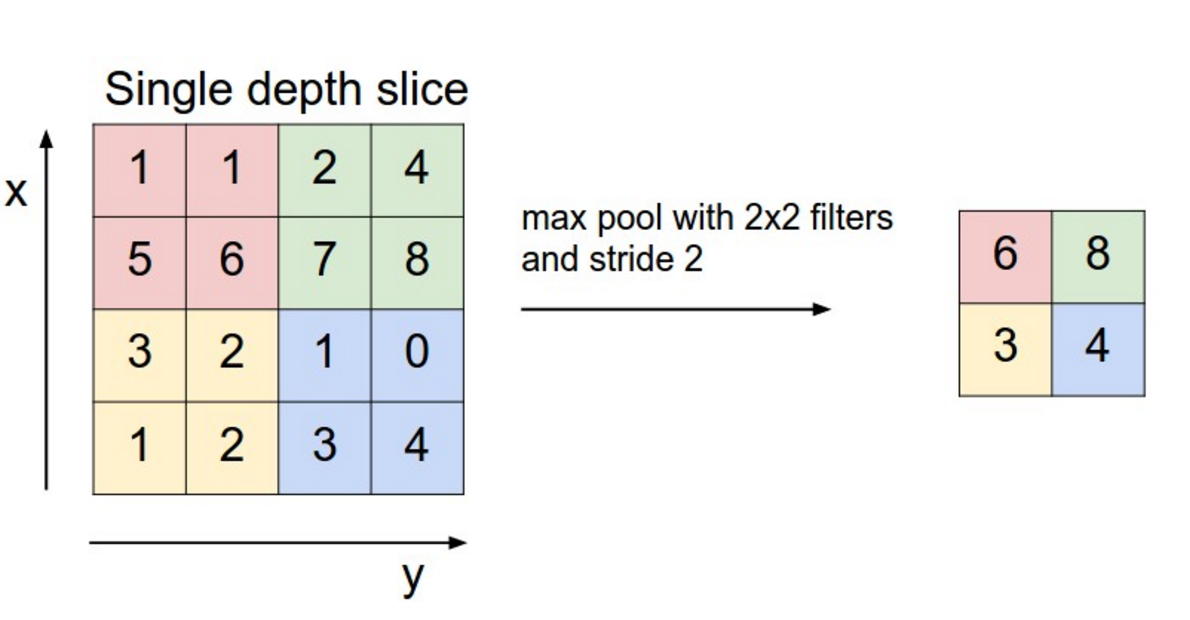

The easiest way to explain it is by the example of max-pooling. So, imagine that in a convolutional layer already known to you, the filter matrix is fixed and is single (that is, multiplication by it does not affect the input data). And instead of summing all the results of multiplication (input data according to our condition), we simply select the maximal element. That is, we will select the pixel from the entire window with the highest intensity. This is max-pooling. Of course, instead of functions, the maximum may be another arithmetic (or even more complex) function.

Source: cs231n

As a further reading on this subject, I recommend the post of Chris Olah (Chris Olah).

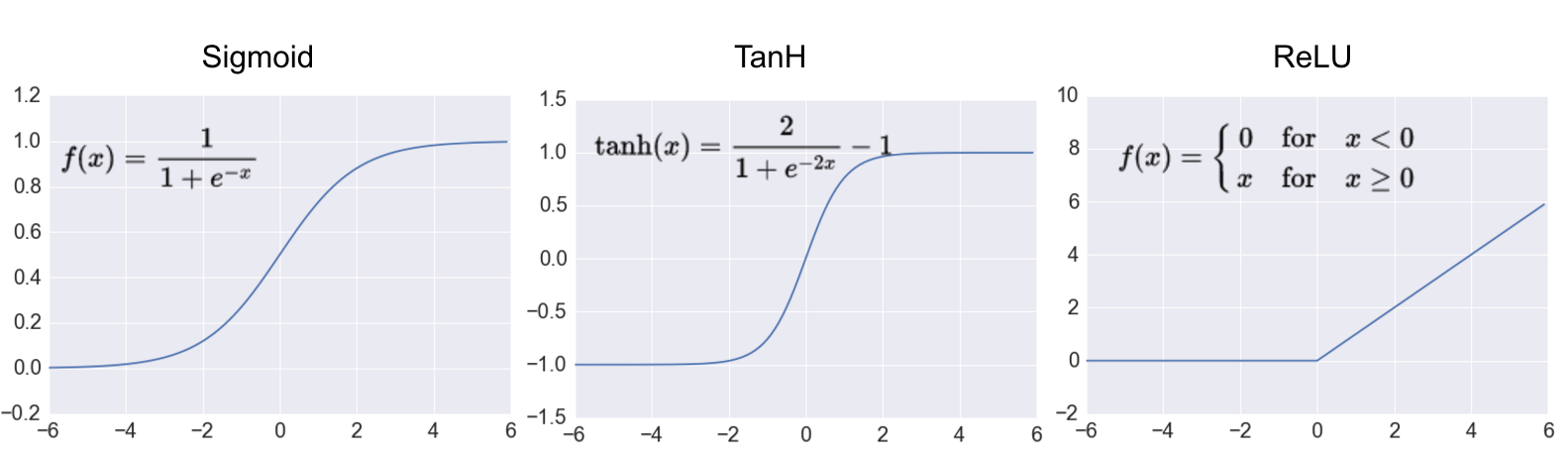

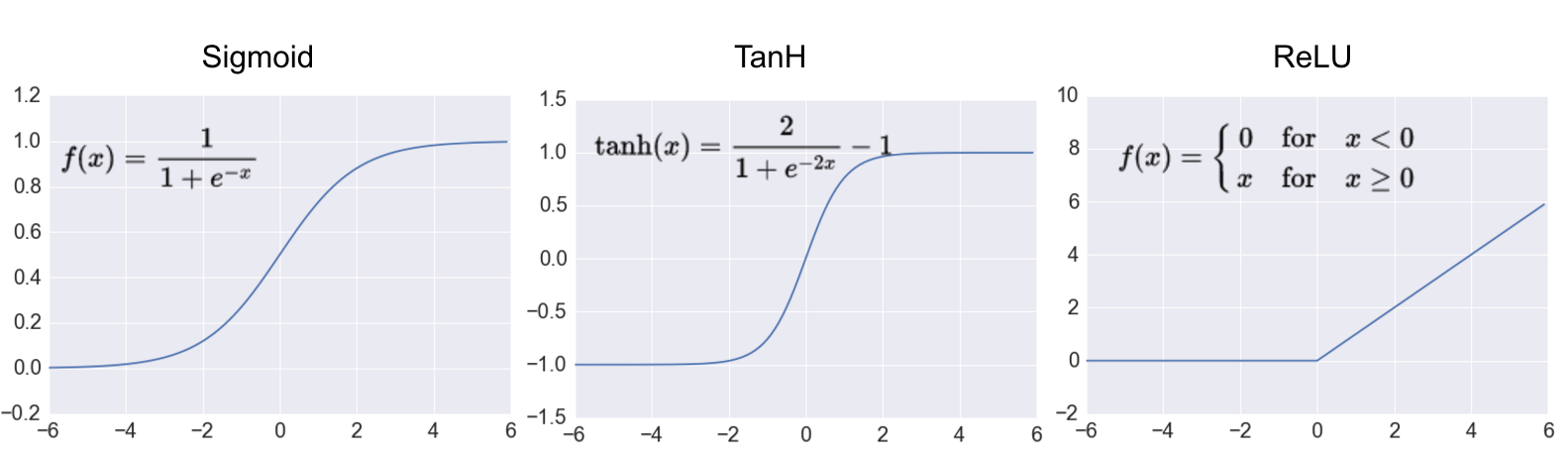

All of the above is good, but without nonlinearity, neural networks will not have the universal approximant property so necessary for us. Accordingly, I must say a few words about them. In recurrent and fully connected networks, balls are governed by such nonlinearities as sigmoid and hyperbolic tangent.

A source

These are good non-linear smooth functions, but they still require significant calculations compared to the one that rules the ball in the CNN: ReLU - Rectified Linear Unit. In Russian, ReLU is called a linear filter (are you not tired of using the word filter?). This is the simplest non-linear function, and is also not smooth. But on the other hand, it is calculated for one elementary operation, for which developers of computing frameworks love it very much.

All right, we have already discussed convolutional neural networks, how vision and all that is arranged. But where about the lyrics, or have I shamelessly deceived you? No, I did not deceive you.

This picture almost completely explains how we work with text using CNN. Unclear? Let's figure it out. First of all, the question is - where do we get the matrix for work from? CNN works with matrices, doesn't it? Here we need to go back a little and remember what embedding is (there is a separate article on this subject).

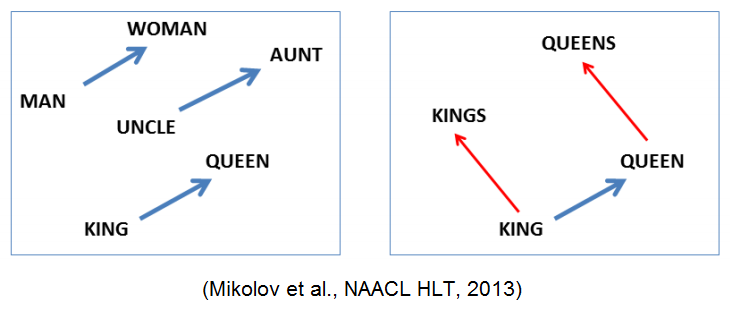

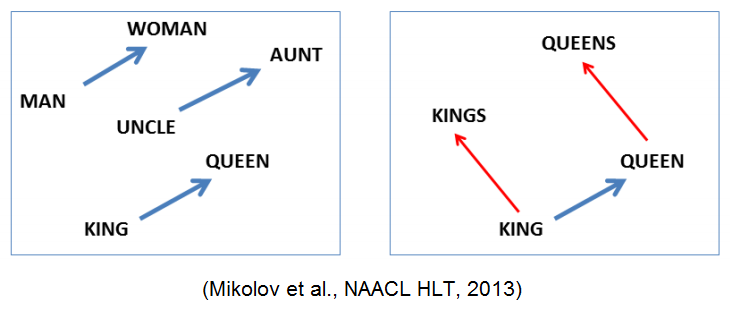

In short, embedding is the mapping of a point in some multidimensional space to an object, in our case, a word. An example of perhaps the most famous such embedding is Word2Vec. By the way, it has semantic properties, like

Now the next step, the matrix is good, and it seems to even look like a picture - the same two dimensions. Or not? Wait, we have more channels in the picture. It turns out that in the matrix of the picture we have three dimensions - width, height and channels. And here? And here we only have the width (the matrix for the convenience of displaying is transposed in the header section of the matrix section) is the sequence of tokens in the sentence. And - no, not height, but channels. Why channels? Because embedding words only makes sense completely, every single measurement of it will not tell us anything.

Well, we figured out the matrix, now more about convolutions. It turns out that the convolution we can walk only on one axis - in width. Therefore, in order to distinguish it from standard convolution, it is called one-dimensional (1D convolution).

And now almost everything is clear, except for the mysterious Max Over Time Pooling. What is this beast? This is the max-pooling already discussed above, only applied to the entire sequence at once (that is, the width of its window is equal to the entire width of the matrix).

An eye-catching picture; in fact, an illustration from [4].


Convolutional neural networks

First, a little theory. What is a convolution? We will not dwell on it in detail, since a ton of material has already been written on the subject, but a quick overview is still worthwhile. There is a beautiful visualization from Stanford that conveys the essence:

Source

We need to introduce some basic concepts so that we understand each other later. The window that slides over the large matrix is called a filter (in English texts you will also meet the terms kernel and feature detector; don't worry, they all mean the same thing). The filter is placed over a region of the large matrix, and each value in that region is multiplied by the corresponding filter value (the red numbers at the lower right of the black digits of the main matrix). The products are then summed to give the output ("filtered") value.


The window moves over the large matrix with a certain step, called the stride. There is a horizontal stride and a vertical one (although we will not need the latter).
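To make the multiply-and-sum step and the stride concrete, here is a minimal sketch in plain Python. The function name `conv2d` and the small input matrices are my own illustration, not code from any library, and no padding is applied.

```python
def conv2d(matrix, kernel, stride=1):
    """Slide `kernel` over `matrix` and return the filtered output (no padding)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = (len(matrix) - k_rows) // stride + 1
    out_cols = (len(matrix[0]) - k_cols) // stride + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Multiply each value in the window by the matching filter value, then sum.
            total = 0
            for a in range(k_rows):
                for b in range(k_cols):
                    total += matrix[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


# Illustrative 5x5 input and 3x3 filter (assumed values).
image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

feature_map = conv2d(image, kernel, stride=1)
print(feature_map)                       # [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
print(conv2d(image, kernel, stride=2))   # [[4, 4], [2, 4]]
```

With a stride of 2 the window skips every other position, so the output shrinks from 3x3 to 2x2.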

One more important concept remains: the channel. In images, the channels are the familiar base colors. In the simple and common RGB color scheme (Red, Green, Blue), for example, it is assumed that any color can be obtained by mixing these three base colors. The key word here is "mixing": all three base colors exist simultaneously, and each can be obtained from, say, the white light of the sun by using a filter of the desired color (can you feel the terminology starting to make sense?).

So we have an image, it has channels, and our filter slides over it with the chosen stride. What do we do with the channels? Each filter (that is, a small matrix) is applied to the original matrix on all three channels simultaneously, and the results are simply summed (which is logical if you think about it: in the end, channels are our way of working with the continuous physical spectrum of light).
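The summation over channels can be sketched like this. It is a simplified illustration with a stride of 1 and square filter slices; the function name `conv2d_multichannel` and the toy data are assumptions of mine.

```python
def conv2d_multichannel(channels, kernels):
    """Apply one filter to a multi-channel input.

    `channels` holds one 2D matrix per channel, `kernels` one k x k slice per channel.
    The per-channel results are summed into a single output map.
    """
    k = len(kernels[0])  # assumes square k x k slices
    height, width = len(channels[0]), len(channels[0][0])
    output = [[0] * (width - k + 1) for _ in range(height - k + 1)]
    for channel, kernel in zip(channels, kernels):
        for i in range(height - k + 1):
            for j in range(width - k + 1):
                for a in range(k):
                    for b in range(k):
                        output[i][j] += channel[i + a][j + b] * kernel[a][b]
    return output


# A toy 3x3 "image" with three channels and a 2x2 filter with one slice per channel.
red = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
green = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
blue = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
filter_slices = [
    [[1, 0], [0, 1]],  # slice applied to the red channel
    [[0, 1], [1, 0]],  # slice applied to the green channel
    [[1, 1], [1, 1]],  # slice applied to the blue channel
]

feature_map = conv2d_multichannel([red, green, blue], filter_slices)
print(feature_map)  # [[8, 4], [4, 8]]
```

Note that three input channels still give a single output map per filter.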

One more detail must be mentioned, without which the rest will be hard to follow. I will let you in on a terrible secret: convolutional networks have many more filters than one. What does that mean? It means that we have n filters all doing the same job: each slides its window over the matrix and computes something. Why do the same job more than once? It is not really the same job: because the filter matrices are initialized differently, during training they learn to pay attention to different details. For example, one filter may respond to lines and another to a particular color.

Source: cs231n

This is a visualization of different filters from the same layer of the same network. See how they respond to completely different features of the image?

That's it, we are now armed with enough terminology to move on. Along the way, we also figured out how a convolutional layer works. It is the basic building block of convolutional neural networks. CNNs have one more basic layer: the so-called pooling layer.

The easiest way to explain it is with max-pooling. Imagine that in the convolutional layer you already know, the filter matrix is fixed and consists of ones (so multiplying by it does not change the input data). And instead of summing the products (which, by our assumption, are just the input values), we simply select the largest one. In other words, from the whole window we pick the pixel with the highest intensity. This is max-pooling. Of course, the maximum can be replaced by another arithmetic (or even more complex) function.

Source: cs231n
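Max-pooling as described above can be sketched in a few lines of plain Python. The function name `max_pool2d` and the 4x4 input are illustrative assumptions.

```python
def max_pool2d(matrix, size=2, stride=2):
    """Keep the largest value from each size x size window."""
    out_rows = (len(matrix) - size) // stride + 1
    out_cols = (len(matrix[0]) - size) // stride + 1
    return [
        [
            max(
                matrix[i * stride + a][j * stride + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(out_cols)
        ]
        for i in range(out_rows)
    ]


feature_map = [
    [1, 1, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]

pooled = max_pool2d(feature_map, size=2, stride=2)
print(pooled)  # [[6, 8], [3, 4]]
```

Replacing `max` with, say, an average gives average pooling with the same windowing logic.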

For further reading on this subject, I recommend Chris Olah's post.

All of this is good, but without nonlinearities neural networks would not have the universal approximation property that we need so much. So a few words about them are in order. In recurrent and fully connected networks, the nonlinearities that rule the roost are the sigmoid and the hyperbolic tangent.

Source

These are good smooth nonlinear functions, but they still require significantly more computation than the one that rules the roost in CNNs: ReLU, the Rectified Linear Unit. In Russian, ReLU is called a linear filter (aren't you tired of the word "filter" yet?). It is the simplest nonlinear function, and it is not smooth. On the other hand, it is computed in a single elementary operation, which is why developers of computing frameworks love it so much.
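For comparison, here are the three nonlinearities written out as scalar functions in plain Python. This is only a sketch: real frameworks apply them element-wise to whole tensors.

```python
import math


def sigmoid(x):
    # Smooth, squashes any input into the range (0, 1); needs an exponential.
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    # Smooth, squashes any input into the range (-1, 1).
    return math.tanh(x)


def relu(x):
    # Not smooth at zero, but just a single comparison.
    return max(0.0, x)


for x in (-2.0, 0.0, 2.0):
    print(x, round(sigmoid(x), 3), round(tanh(x), 3), relu(x))
```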

All right, we have discussed convolutional neural networks, how vision works, and all that. But where are the texts? Have I shamelessly deceived you? No, I have not.

Applying convolutions to texts

This picture almost completely explains how we work with text using a CNN. Unclear? Let's figure it out. The first question is: where do we get the matrix from? CNNs work with matrices, don't they? Here we need to step back a little and recall what an embedding is (there is a separate article on this subject).

In short, an embedding maps an object, in our case a word, to a point in some multidimensional space. Perhaps the most famous example of such an embedding is Word2Vec. Incidentally, it has semantic properties, such as word2vec("king") - word2vec("man") + word2vec("woman") ~= word2vec("queen"). So we take the embedding of each word in our text and simply stack the vectors in a row, obtaining the desired matrix.
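Stacking word vectors into a matrix might look like this. The embedding table below is a made-up toy with four dimensions (real Word2Vec vectors typically have hundreds), and mapping unknown words to a zero vector is my assumption.

```python
# Hypothetical toy embedding table; the numbers are invented for illustration.
toy_embeddings = {
    "the": [0.1, 0.0, 0.2, 0.1],
    "movie": [0.7, 0.3, 0.1, 0.9],
    "was": [0.0, 0.1, 0.1, 0.0],
    "great": [0.9, 0.8, 0.2, 0.4],
}
UNKNOWN = [0.0, 0.0, 0.0, 0.0]  # assumption: unseen words map to a zero vector


def sentence_to_matrix(sentence, embeddings):
    """Return one embedding vector per token: a (tokens x dimensions) matrix."""
    tokens = sentence.lower().split()
    return [embeddings.get(token, UNKNOWN) for token in tokens]


matrix = sentence_to_matrix("The movie was great", toy_embeddings)
print(len(matrix), len(matrix[0]))  # 4 4  (4 tokens, 4 embedding dimensions)
```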

Now the next step. A matrix is good, and it even seems to look like a picture: the same two dimensions. Or does it? Wait, a picture also has channels. So the matrix of a picture has three dimensions: width, height, and channels. And here? Here we only have the width, which is the sequence of tokens in the sentence (in the picture at the top of this section the matrix is transposed for display convenience). And the other dimension is not height but channels. Why channels? Because a word embedding only makes sense as a whole; no single dimension of it tells us anything on its own.

Well, we have sorted out the matrix; now for the convolutions. It turns out that the convolution can move along only one axis: the width. Therefore, to distinguish it from the standard convolution, it is called one-dimensional (1D convolution).
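A one-dimensional convolution over such a matrix can be sketched as follows. The window moves along the token axis only, and every embedding dimension is treated as a channel. The function name `conv1d` and the tiny two-channel data are illustrative assumptions.

```python
def conv1d(sequence, kernel, stride=1):
    """1D convolution without padding.

    `sequence` is a list of token vectors (length x channels);
    `kernel` is a list of weight vectors (window width x channels).
    """
    width = len(kernel)
    outputs = []
    for start in range(0, len(sequence) - width + 1, stride):
        total = 0.0
        for offset in range(width):
            # Each embedding dimension is a channel; all channels are summed.
            for value, weight in zip(sequence[start + offset], kernel[offset]):
                total += value * weight
        outputs.append(total)
    return outputs


sequence = [[1, 0], [0, 1], [1, 1], [2, 0], [0, 2]]  # 5 tokens, 2 channels
kernel = [[1, 0], [0, 1], [1, 1]]                    # window of 3 tokens

feature = conv1d(sequence, kernel)
print(feature)  # [4.0, 3.0, 3.0]
```

One filter turns the whole matrix into a single row of activations, one per window position.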

Now almost everything is clear, except for the mysterious Max Over Time Pooling. What kind of beast is this? It is the max-pooling we discussed above, only applied to the entire sequence at once (that is, the width of its window equals the full width of the matrix).
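In code, max-over-time pooling reduces each filter's row of activations to a single number. The sketch below uses invented activations for three filters.

```python
def max_over_time(feature_maps):
    """Take the maximum of each filter's activations over the whole sequence."""
    return [max(activations) for activations in feature_maps]


# Hypothetical outputs of three filters over a five-position sequence.
feature_maps = [
    [0.1, 0.9, 0.3, 0.0, 0.2],
    [0.0, 0.0, 0.4, 0.7, 0.1],
    [0.5, 0.2, 0.2, 0.1, 0.6],
]

sentence_vector = max_over_time(feature_maps)
print(sentence_vector)  # [0.9, 0.7, 0.6]
```

The output has one value per filter no matter how long the sequence was, which is what lets a fully connected layer follow it.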

Examples of using convolutional neural networks for texts

Convolutional neural networks are good where you need to look at a piece of a sequence, or the whole of it, and draw some conclusion. Examples of such tasks are spam detection, sentiment analysis, and named entity extraction. Working through papers may be difficult if you have only just met neural networks; in that case you can skip this section.

In [2], Yoon Kim shows that CNNs are good at classifying sentences on a variety of datasets. The picture used to illustrate the section above comes from his paper. He works with Word2Vec, but one can also work directly with characters.

In [3], the authors classify texts directly from letters, learning embeddings for them during training. On large datasets, they showed even better results than word-level networks.

Convolutional neural networks have a significant drawback compared to RNNs: they can only work with fixed-size input (since the dimensions of the matrices in the network cannot change during operation). But the authors of the above-mentioned paper [1] managed to solve this problem, so this restriction has now been removed.

In [4], the authors classify texts directly from characters. They used an alphabet of 70 characters, representing each character as a one-hot vector, and fixed the text length at 1014 characters. The text is thus represented by a 70x1014 binary matrix. The network has no notion of words and sees them as combinations of characters; information about the semantic proximity of words is not given to the network, unlike in the case of pre-trained Word2Vec vectors. The network consists of 1D convolutional layers, max-pooling layers, and two fully connected layers with dropout. On large datasets, they showed even better results than word-level networks. In addition, this approach greatly simplifies the preprocessing step, which may help its use on mobile devices.
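The character encoding described above can be sketched as follows. The 70-character alphabet here is my own assumption, built from Python's `string` constants; the exact set used in [4] may differ. Leaving unknown characters as all-zero rows is also an assumption. The matrix is stored as 1014 rows of 70 values, that is, the transpose of the 70x1014 layout.

```python
import string

# Assumed 70-character alphabet for illustration; the exact set in [4] may differ.
ALPHABET = string.ascii_lowercase + string.digits + string.punctuation + " \n"
CHAR_INDEX = {ch: i for i, ch in enumerate(ALPHABET)}
MAX_LEN = 1014  # fixed text length mentioned above


def quantize(text, max_len=MAX_LEN):
    """Return a max_len x len(ALPHABET) one-hot matrix, one row per character."""
    matrix = [[0] * len(ALPHABET) for _ in range(max_len)]
    for position, ch in enumerate(text.lower()[:max_len]):
        index = CHAR_INDEX.get(ch)
        if index is not None:  # assumption: unknown characters stay all-zero
            matrix[position][index] = 1
    return matrix


encoded = quantize("Great movie!")
print(len(encoded), len(encoded[0]))  # 1014 70
```

Texts shorter than 1014 characters are simply followed by all-zero rows, and longer ones are cut off.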

In another paper [5], the authors try to improve the use of CNNs in NLP by borrowing developments from computer vision. The main recent trends in computer vision are increasing network depth and adding so-called skip connections (e.g., ResNet) that link non-adjacent layers. The authors showed that the same principles apply to NLP: they built a character-level CNN with 16-dimensional embeddings learned together with the network. They trained networks of different depths (9, 17, 29, and 49 convolutional layers) and experimented with skip connections to find out how they affect the result. They concluded that increasing depth improves results on the selected datasets, but that networks that are too deep (49 layers) perform worse than moderately deep ones (29 layers). Skip connections improved the results of the 49-layer network, but it still did not surpass the 29-layer network.

Another important feature of CNNs in computer vision is that the weights of a network trained on one large dataset (ImageNet is the typical example) can be reused in other computer vision tasks. In [6], the authors investigate whether these principles carry over to text classification using CNNs with word embeddings. They study how transferring particular parts of the network (the embedding, convolutional, and fully connected layers) affects classification results on the selected datasets. They conclude that in NLP tasks the semantic proximity of the source dataset on which the network was pre-trained plays an important role; that is, a network trained on movie reviews will work well on another dataset of movie reviews. In addition, they note that using the same word embeddings increases the success of the transfer, and they recommend not freezing the layers but fine-tuning them on the target dataset.

Practical example

Let's take a practical look at how to do sentiment analysis with a CNN. We worked through a similar example in the article about Keras, so I refer you to it for all the details; here only the key points needed for understanding are covered.

First of all, you need the concept of a sequence in Keras. This is simply the text in a form convenient for Keras:

    # `tokenizer` was fitted in advance (see the full notebook).
    x_train = tokenizer.texts_to_sequences(df_train["text"])
    x_test = tokenizer.texts_to_sequences(df_test["text"])
    x_val = tokenizer.texts_to_sequences(df_val["text"])

Here, texts_to_sequences is a function that translates text into a sequence of integers by a) tokenizing it, that is, splitting the string into tokens, and b) replacing each token with its number in the dictionary. (The dictionary in this example was compiled in advance; the code that builds it is in the full notebook.)

Next, the resulting sequences need to be aligned: as we remember, CNNs cannot yet work with variable text lengths; that has not yet reached industrial use.

    # Import path may differ between Keras versions.
    from keras.preprocessing.sequence import pad_sequences

    x_train = pad_sequences(x_train, maxlen=max_len)
    x_test = pad_sequences(x_test, maxlen=max_len)
    x_val = pad_sequences(x_val, maxlen=max_len)

After that, all sequences will be either truncated or padded with zeros to the length max_len.

And now the most important part, the code of our model:

    from keras.models import Sequential
    from keras.layers import Embedding, Conv1D, Activation, GlobalMaxPool1D, Dense

    model = Sequential()
    model.add(Embedding(input_dim=max_words, output_dim=128, input_length=max_len))
    model.add(Conv1D(128, 3))            # 128 filters, window width 3
    model.add(Activation("relu"))
    model.add(GlobalMaxPool1D())         # max over time pooling
    model.add(Dense(num_classes))
    model.add(Activation("softmax"))

The first layer is Embedding, which translates integers (in effect, one-hot vectors in which the position of the one corresponds to the word's number in the dictionary) into dense vectors. In our example, the size of the embedding space (the length of the vector) is 128, the number of words in the dictionary is max_words, and the number of words in the sequence is max_len, as we already know from the code above.

After the embedding comes a one-dimensional convolutional layer, Conv1D. It has 128 filters, and the window width of the filters is 3. The activation should be clear: it is our favorite, ReLU.

After the ReLU comes the GlobalMaxPool1D layer. "Global" here means that the maximum is taken over the entire length of the incoming sequence; that is, it is nothing other than the Max Over Time Pooling mentioned above. By the way, why is it called "Over Time"? Because the sequence of words has a natural order: some words reach us earlier in the stream of speech or text, that is, earlier in time.

Here is the model we got in the end:
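To see what the tokenizing and padding steps do to the data, here is a simplified stdlib-only stand-in. The helper names `build_vocab`, `simple_texts_to_sequences` and `simple_pad_sequences` are hypothetical and are not the Keras implementations. I assume whitespace tokenization, that index 0 is reserved for padding, and the Keras default of padding and truncating at the front of the sequence.

```python
from collections import Counter


def build_vocab(texts, max_words):
    """Map the most frequent tokens to integers 1..max_words-1; 0 is kept for padding."""
    counts = Counter(token for text in texts for token in text.lower().split())
    most_common = counts.most_common(max_words - 1)
    return {token: i for i, (token, _) in enumerate(most_common, start=1)}


def simple_texts_to_sequences(texts, vocab):
    # Tokens missing from the vocabulary are dropped (an assumption of this sketch).
    return [[vocab[t] for t in text.lower().split() if t in vocab] for text in texts]


def simple_pad_sequences(sequences, maxlen):
    """Left-pad with zeros, or keep only the last `maxlen` items of longer sequences."""
    padded = []
    for seq in sequences:
        seq = seq[-maxlen:]
        padded.append([0] * (maxlen - len(seq)) + seq)
    return padded


texts = ["good movie", "a very very long and boring movie"]
vocab = build_vocab(texts, max_words=10)
x = simple_pad_sequences(simple_texts_to_sequences(texts, vocab), maxlen=4)
print(x)  # two rows of exactly 4 integers each
```

The short text gets two leading zeros, and the long one keeps only its last four tokens.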

An interesting detail can be seen in the picture: after the convolutional layer, the sequence length became 38 instead of 40. Why? Because we have not discussed or used padding, a technique that virtually "adds" data around the original matrix so that the filter window can go beyond its edges. Without it, a convolution of width 3 with a stride of 1 can take only 38 steps along a matrix 40 wide.
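The arithmetic behind 38 can be checked with the usual output-length formula. The helper name `conv_output_length` is hypothetical, and `padding` here means the number of zeros added on each side.

```python
def conv_output_length(input_length, kernel_size, stride=1, padding=0):
    """Number of positions a window of `kernel_size` can take along the sequence."""
    return (input_length + 2 * padding - kernel_size) // stride + 1


print(conv_output_length(40, 3))             # 38: no padding, as in the model above
print(conv_output_length(40, 3, padding=1))  # 40: one zero on each side keeps the length
```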

So what did we get in the end? In my tests, this classifier achieved a quality of 0.57, which, of course, is not much. But you can easily improve on my result with a little effort. Go for it.

P.S. Thanks to Evgeny Vasilyev (somesnm) and Bulat Suleimanov (khansuleyman) for their help in writing this article.

Literature

[1] Bai, S., Kolter, J. Z., & Koltun, V. (2018). An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling. arxiv.org/abs/1803.01271

[2] Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP 2014), 1746–1751.

[3] Heigold, G., Neumann, G., & van Genabith, J. (2016). Neural Morphological Tagging from Characters for Morphologically Rich Languages. arxiv.org/abs/1606.06640

[4] Zhang, X., Zhao, J., & LeCun, Y. Character-level Convolutional Networks for Text Classification. arxiv.org/abs/1509.01626

[5] Conneau, A., Schwenk, H., Barrault, L., & LeCun, Y. Very Deep Convolutional Networks for Text Classification. arxiv.org/abs/1606.01781

[6] Semwal, T., Mathur, G., Yenigalla, P., & Nair, S. B. A Practitioners' Guide to Transfer Learning for Text Classification using Convolutional Neural Networks. arxiv.org/abs/1801.06480

Source: https://habr.com/ru/post/353060/
