Alice, Google Assistant, Siri, Alexa. How to write applications for voice assistants

The market for voice assistants is expanding, especially for Russian-speaking users. Two weeks ago, Yandex spoke publicly for the first time about the Yandex.Dialogs platform; two months ago, Google made it possible to write dialogs for Google Assistant in Russian; two years ago, Apple launched SiriKit from the stage of the Bill Graham Civic Auditorium. In effect, a new branch of development is emerging, one that will need its own designers, architects, and developers. It is the perfect moment to talk about voice assistants and their APIs.

This article is not a detailed tutorial. It is about the ideas and interesting technical details behind the tools that the main market players offer third-party developers: Apple Siri, Google Assistant, and Yandex's Alice.

Theory without practice is boring to learn. Imagine that we are given a task by the newly invented DoReMi pizzeria. The company's management wants customers to be able to explore the pizzeria's menu and order pizza by voice. We will leave ordering for the second iteration and deal with the menu now. We add the command "What is in <pizza name>?". If the user enters an unrecognized command, the reply is the list of pizzas. The task is simple, which makes it ideal for learning the technology and preparing for further expansion.


Step one: set up the backend

WARNING! An Android developer is about to set up a server on Node.js. The faint of heart may want to skip this part.

We need a server to store information about pizzas and to talk to the assistants' APIs. The backend will be written in Node.js with the Express framework for configuring the web application. We will deploy on the Now platform from Zeit. The platform is free and easy to use: enter the "now" command in the terminal to start the deployment script, and in response we get a link to our web application.

To initialize the project, we use Express Generator. The result is an excellent skeleton for a web application, but it contains a lot that a simple API does not need: page templates, error pages, resource folders. We keep only the essentials.

We will not use a database. Our data is static, so one JavaScript object is enough: a list of pizzas with names and ingredients.

    const pizzas = [
        {
            name: "",
            ingredients: ["", " ", " ", "", ""]
        },
        {
            name: "",
            ingredients: ["", " ", " ", ""]
        },
        {
            name: "",
            ingredients: ["", " ", " ", "", "", " ", " ", "", ""]
        },
        {
            name: " ",
            ingredients: ["", " ", " ", " ", " ", " "]
        },
        {
            name: "",
            ingredients: ["", " ", " ", " ", ""]
        },
    ];

Add a method that takes a pizza object and returns its composition. If the pizza is not found, the answer is the pizzeria's menu.

    const pizzaInfo = {
        getPizzaInfoByPizzaName: function (pizza) {
            const wrapName = name => `"${name}"`;
            if (!pizza) {
                const pizzaNames = pizzas.map(pizza => wrapName(pizza.name)).join(", ");
                return ` "" ${pizzaNames}. .`;
            }
            const ingredients = pizza.ingredients.map(ingredient => ingredient.toLowerCase()).join(", ");
            return ` ${wrapName(pizza.name)} ${ingredients}.`;
        },
    };

Yandex.Alice. Start simple

Yandex.Dialogs is the base trim of a car: you can drive it, but it has no air conditioning. The platform from Yandex is ideal for learning the basics: it is dead simple, yet it contains the concepts on which most assistants are built.

The main unit of the platform is the dialog. Dialogs are skills created by third-party developers. You cannot add new functionality to the main conversation with the assistant. We would love to claim the phrase “Alice, order me a pizza”, but there are many pizzerias. The user has to say an activation command: “Alice, call DoReMi.” The service then understands that it must switch to the DoReMi dialog. From there we take control and manage the process on our own server, through requests and responses, using webhooks.

What is a webhook?

A webhook is, in essence, a POST request sent to a server. The server is configured to receive the request, process it, and send a response to the URL that the client has specified. The client does not waste time waiting for a response.

It works like this.

You come to a store and fill a cart with goods. There is a single, very long checkout line. In an ordinary supermarket you would have to stand in it and lose a huge amount of time. In a parallel universe, you leave your cart in the line and go about your business; the store staff find you and hand you your bags. The first approach is the analogy for a conventional API, the second for a webhook.
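As a rough illustration of the receiving side, here is a minimal sketch that uses only Node's built-in `http` module instead of Express. The `text` and `reply` fields and the `handleWebhook` name are assumptions made up for this example, not part of any assistant's protocol. Note that in the assistant platforms discussed in this article the answer travels back in the HTTP response itself, which is what the sketch does.

```javascript
// Minimal webhook receiver sketch using only Node's standard library.
const http = require('http');

// Pure handler: takes the parsed request body and returns the reply object.
// The `text` and `reply` fields are a hypothetical payload shape.
function handleWebhook(body) {
  const text = typeof body.text === 'string' ? body.text : '';
  return { reply: `You said: ${text}` };
}

// HTTP wrapper: accept a POST with JSON, answer with JSON.
const server = http.createServer((req, res) => {
  if (req.method !== 'POST') {
    res.writeHead(405, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify({ error: 'POST only' }));
    return;
  }
  let raw = '';
  req.on('data', chunk => { raw += chunk; });
  req.on('end', () => {
    let body;
    try {
      body = JSON.parse(raw);
    } catch (err) {
      res.writeHead(400, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: 'invalid JSON' }));
      return;
    }
    res.writeHead(200, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify(handleWebhook(body)));
  });
});

// To run it locally, uncomment the next line (port 3000 is arbitrary):
// server.listen(3000);
```

Keeping the logic in a plain function separate from the HTTP plumbing makes it easy to test without starting a server.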

The settings you need to fill in to create a dialog in your personal account: the name, the topic of the dialog, the activation name, and the URL of the server.

After that, all that remains is setting up the server to process requests. Accept JSON, send JSON. It is even simpler if we set aside all the husk of receiving JSON, parsing it, extracting the data, and doing the reverse when sending the response: we accept the user's text and return Alice's text. Welcome to the 70s, the era of text interfaces.

We have a string with the user's command. To return the composition of a pizza, we need to extract the pizza's name from the command and send a phrase in reply. We will extract it with an ordinary substring check, in the spirit of string.contains(phrase). For this to work, we extend our pizza list with a list of stems (the word without its ending) that may appear in the query.

    const pizzas = [
        {
            name: "",
            base_name: [""],
            ingredients: ["", " ", " ", "", ""]
        },
        {
            name: "",
            base_name: ["", "", ""],
            ingredients: ["", " ", " ", ""]
        },
        {
            name: "",
            base_name: [""],
            ingredients: ["", " ", " ", "", "", " ", " ", "", ""]
        },
        {
            name: " ",
            base_name: ["", ""],
            ingredients: ["", " ", " ", " ", " ", " "]
        },
        {
            name: "",
            base_name: [""],
            ingredients: ["", " ", " ", " ", ""]
        },
    ];

Next, we add a function that returns the composition of a pizza for a user's command.

    getPizzaInfoByUserCommand: function (command) {
        command = command.toLowerCase();
        // Find the first pizza with at least one stem that occurs in the command.
        const pizza = pizzas.find(pizza => (
            pizza.base_name.some(base => (command.indexOf(base) !== -1))
        ));
        return this.getPizzaInfoByPizzaName(pizza);
    },

We process the JSON, send the correct answer, and add a button that takes the user to the pizzeria's website. Buttons are the only way in Alice to add variety to plain text output. A button can either send a phrase on the user's behalf or open a URL in the browser. Use deep linking to tie the assistant and the application into one convenient flow. For example, when ordering a pizza, you can jump straight to the payment screen in the application, where billing information is already saved or where you can pay via Google Pay or Apple Pay.

    var express = require('express');
    var pizzaInfo = require('../pizza/pizza_info.js');
    var router = express.Router();

    /* Webhook endpoint that Alice calls with the user's command. */
    router.use('/', function (req, res, next) {
        const body = req.body;
        const commandText = body.request.command;
        const answer = pizzaInfo.getPizzaInfoByUserCommand(commandText);
        res.json({
            "response": {
                "text": answer,
                "buttons": [{
                    "title": "",
                    "url": "https://doremi.fake/"
                }],
                "end_session": false
            },
            // Echo the session identifiers back to the platform.
            "session": {
                "session_id": body.session.session_id,
                "message_id": body.session.message_id,
                "user_id": body.session.user_id
            },
            "version": body.version
        });
    });

    module.exports = router;

Using the tts (text-to-speech) parameter, you can tune Alice's spoken reply: stress, pronunciation, and pauses. In tts it is better to pass a phonetic transcription rather than the orthographically correct spelling, for example for the word "please". That way Alice's speech sounds more natural.
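A small helper can make the optional parts of the reply explicit. The sketch below builds the same reply shape as the handler above and adds an optional `tts` string plus two kinds of buttons. The helper name `buildAliceResponse`, the sample request values, and the phonetic respelling are invented for illustration; check the exact field rules against the Yandex.Dialogs documentation before relying on them.

```javascript
// Builds a reply in the shape used by the handler above, with optional extras.
function buildAliceResponse(body, text, options = {}) {
  const response = {
    text,
    end_session: false,
  };
  // `tts` carries the spoken variant of the text; it is only set when provided.
  if (options.tts) {
    response.tts = options.tts;
  }
  if (options.buttons) {
    response.buttons = options.buttons;
  }
  return {
    response,
    // Echo the session identifiers from the incoming request.
    session: {
      session_id: body.session.session_id,
      message_id: body.session.message_id,
      user_id: body.session.user_id,
    },
    version: body.version,
  };
}

// Made-up incoming request, reduced to the fields the helper reads.
const sampleRequest = {
  request: { command: 'menu' },
  session: { session_id: 's-1', message_id: 0, user_id: 'u-1' },
  version: '1.0',
};

const reply = buildAliceResponse(sampleRequest, 'Margherita: tomatoes, mozzarella.', {
  tts: 'margareeta: tomatoes, motsarella.',
  buttons: [
    { title: 'Open the site', url: 'https://doremi.fake/' }, // opens the browser
    { title: 'Menu' }, // no url: the title is sent as the user's phrase
  ],
});
console.log(JSON.stringify(reply, null, 2));
```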

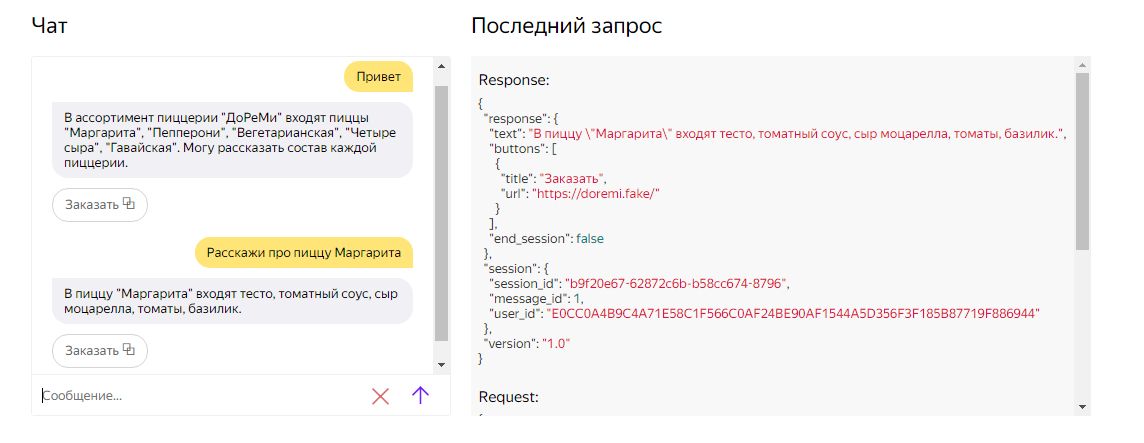

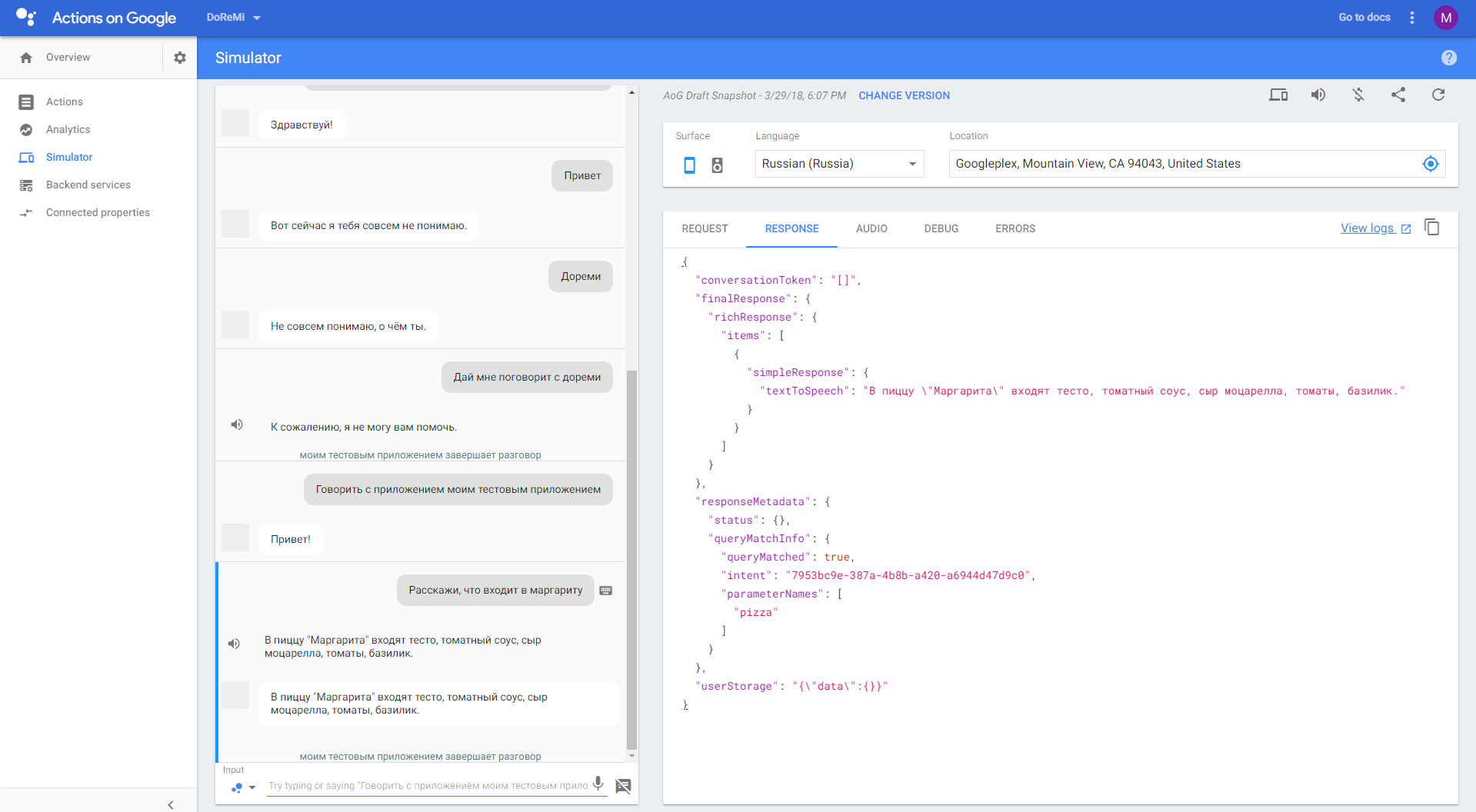

Testing a dialog could not be easier. In your personal account, you can talk to your dialog through the console and read the JSON.

At the moment, the dialog is in the draft stage. The next step is publication in the Yandex catalog. Before publication, it is checked for compliance with Yandex's requirements: accuracy of information, literacy, ethics, and other formal qualities.

Google Assistant. The next level

If Dialogs is the base trim of a car, then Actions on Google is the fully loaded model with a massage seat, autopilot, and a personal driver, whose manual comes in Chinese. Google's tool is more powerful and richer, but harder, and the entry threshold is higher. Yandex has brilliantly concise and simple documentation; I cannot say the same about Google. Actions on Google is built on the same axioms as Dialogs: an activation command, communication through an API, the use of webhooks, and separation of third-party dialogs from the main one.

Simplicity is both the main advantage and the main problem of Dialogs. The problem is that you have to build the whole architecture yourself. The simple algorithm for extracting parts of the user's text, implemented above, does not extend to new commands. You have to reinvent the wheel. At moments like this you understand why graphical UI still rules. Google, however, has built products that free the developer from the boring boilerplate: classifying user commands and handling requests and responses. The first task is solved by the DialogFlow framework (formerly Api.Ai), the second by a library for Node.js. All that remains for us is to connect the API to Actions via Node.js. At first glance this is an unnecessary complication, but I will show that the approach pays off in projects with more than one command.

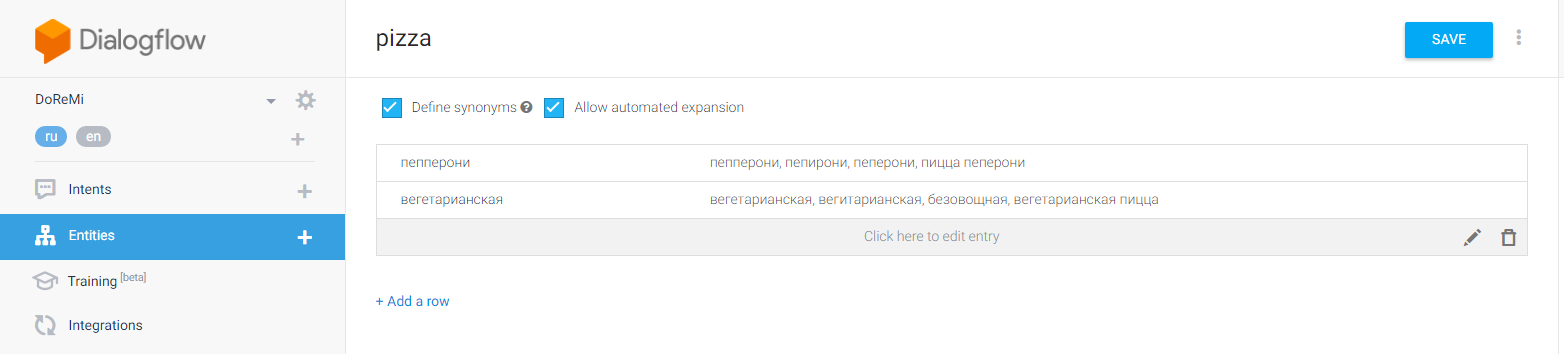

DialogFlow solves a typical machine learning problem: classification, in our case the classification of user commands into categories. To understand and configure the framework, let's go over two concepts from DialogFlow's terminology. The first is Entities. Examples are car makes, cities, or pizza names. In the entity settings, we specify sample values of the entity and their synonyms. The algorithm tries to catch the entity at the level of word stems. If it succeeds, Google sends the entity to the server as an argument.
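To make the idea of an entity with synonyms concrete, here is a deliberately naive sketch in plain JavaScript. It is not how DialogFlow works internally (that relies on trained models); it only shows the data shape of "a canonical value plus synonyms" and a lookup that returns the canonical value. The pizza names, the `pizzaEntity` array, and `resolveEntity` are all invented for this example.

```javascript
// Hypothetical entity definition: a canonical value plus its synonyms.
const pizzaEntity = [
  { value: 'Margherita', synonyms: ['margherita', 'margarita'] },
  { value: 'Pepperoni', synonyms: ['pepperoni', 'peperoni'] },
];

// Naive lookup: return the canonical value of the first entry
// that has a synonym occurring in the text, or null if none does.
function resolveEntity(entity, text) {
  const lower = text.toLowerCase();
  const entry = entity.find(e => e.synonyms.some(s => lower.includes(s)));
  return entry ? entry.value : null;
}

console.log(resolveEntity(pizzaEntity, 'What is in a margarita?')); // Margherita
```

Whatever spelling the user chooses, the server only ever sees the canonical value, which is what makes the argument convenient to work with.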

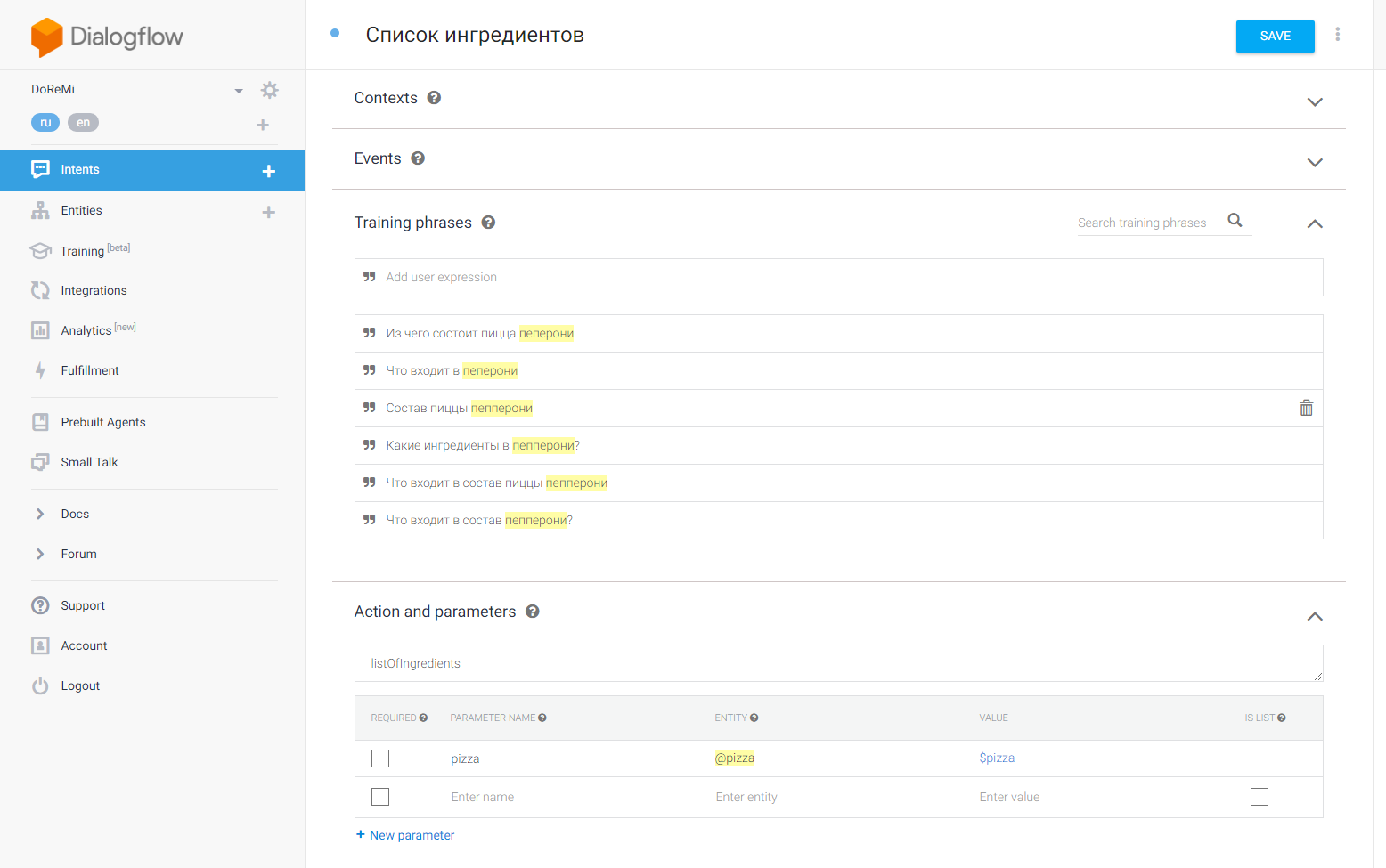

The second concept is Intents: the categories into which DialogFlow classifies user commands. We add sample commands by which the intent will be recognized. In the sample commands it is best to use the entity values added in the first step, so that the algorithm learns more easily to pick out the arguments we need. The main feature of DialogFlow is that Google's neural networks are trained on the patterns we enter and generate new key phrases. The more patterns we add, the more accurately the intent is recognized. Do not forget to give the intent an identifying name, which we will use later in the code.

An intent has a name and a list of parameters. What is missing is a return value. In the settings you can add static answers; dynamic answers are the job of the JavaScript code. Next I will praise the second thing that makes Google's approach even cooler: the official library for Node.js. It spares us the joy of parsing JSON and routing intents through long if chains or switch-case blocks.
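For a sense of the manual work involved, here is a hand-rolled sketch of that routing without any library. It assumes the webhook body follows the Dialogflow v1 (API.AI) layout with `result.action` and `result.parameters`; the `listOfIngredients` action and `pizza` parameter are the names used in this article, while `showMenu`, the sample values, and the reply strings are made up.

```javascript
// Sample webhook body, assuming the Dialogflow v1 (API.AI) layout.
const sampleBody = {
  result: {
    action: 'listOfIngredients',
    parameters: { pizza: 'Margherita' },
  },
};

// One handler per intent action, keyed by the action name.
const handlers = new Map([
  ['listOfIngredients', params => `Ingredients requested for: ${params.pizza}`],
  ['showMenu', () => 'Here is the menu.'],
]);

// Pull the action and parameters out of the JSON and dispatch,
// with a fallback reply for actions that have no handler.
function route(body) {
  const { action, parameters } = body.result;
  const handler = handlers.get(action);
  return handler ? handler(parameters) : 'Sorry, I did not understand that.';
}

console.log(route(sampleBody)); // Ingredients requested for: Margherita
```

A map from action name to handler scales better than a growing switch statement: adding a command means adding one entry.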

We initialize a DialogflowApp object, passing the request and response to its constructor. With the getArgument() method we get the entity from the command, with tell() we send the answer to the user, and with handleRequest() we set up routing depending on the intent.

    const express = require('express');
    const Assistant = require('actions-on-google').DialogflowApp;
    const pizzaInfo = require('../pizza/pizza_info.js');
    const app = express.Router();

    app.use('/', function (req, res, next) {
        // Wrap the request and response in the library's assistant object.
        const assistant = new Assistant({request: req, response: res});
        // Action name of the intent, as set in the DialogFlow console.
        const ASK_INGREDIENTS_ACTION = 'listOfIngredients';
        // Name of the intent parameter that carries the pizza entity.
        const PIZZA_PARAMETER = 'pizza';

        function getIngredients(assistant) {
            // Entity value that DialogFlow extracted from the command.
            let pizzaName = assistant.getArgument(PIZZA_PARAMETER);
            // Reply with the pizza's composition; guard against a missing argument.
            assistant.tell(pizzaInfo.getPizzaInfoByUserCommand(pizzaName || ''));
        }

        // Map each action name to its handler and let the library dispatch.
        let actionRouter = new Map();
        actionRouter.set(ASK_INGREDIENTS_ACTION, getIngredients);
        assistant.handleRequest(actionRouter);
    });

    module.exports = app;

DialogflowApp does all the dirty work for us. All we have to do is prepare the data for output. Now imagine how much easier this makes things when we need to set up pizza ordering, display the menu or an order's status, search for the nearest pizzeria, and add a couple more commands. How many man-hours this technology saves!

Initial testing of the answers can be done right in your personal account.

For more thorough testing, there is a simulator, or a device with Google Now.

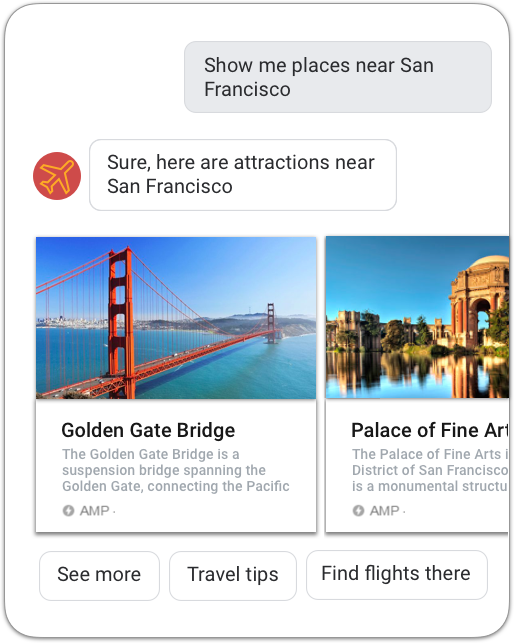

A reply in Google Assistant can consist not only of text but also of various UI elements: buttons, cards, carousels, and lists.

This is a good place to stop. The further subtleties of the technology are material for several articles. The basics covered here already go a long way toward building your application for Google Assistant. The Pareto principle in action.

Apple SiriKit. Briefly about why Siri is lagging behind

If Dialogs is the base trim of a car and Actions on Google is the fully loaded model, then SiriKit is a metro with two stations for all of Moscow.

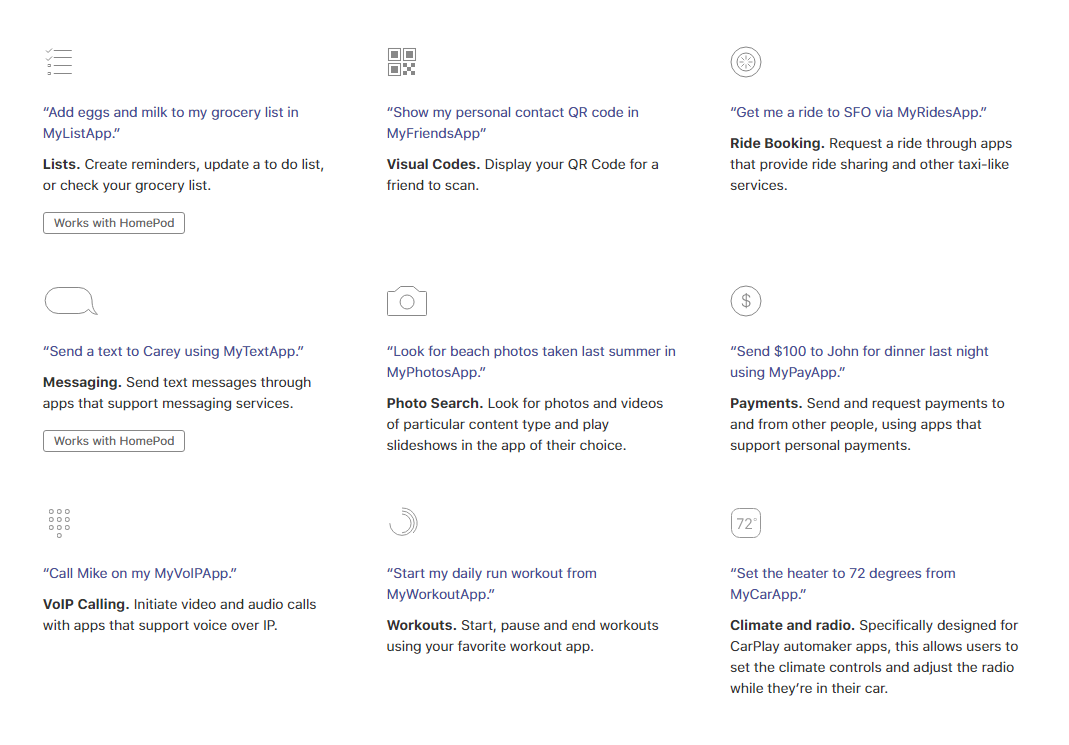

Two features set Apple's approach apart from everyone else's: attachment to a main application, and mandatory conformance to one of the usage scenarios prescribed by Apple, that is, a complete lack of conversation customization. The first point is clear: without the main application on the device, there is no dialog in Siri. Your dialog is only an add-on to the application.

The second point is the main drawback of SiriKit. All the dialogs, all the text, are already written. You can only add a few synonyms to Siri's vocabulary or design the widget that appears on request. That is the only freedom Apple gives.

You're lucky if you want to do something similar to the commands in the screenshot below. We are not lucky.

If Apple does not fundamentally change its approach to custom dialogs at WWDC 2018, Siri will remain at the bottom of the ranking. Voice assistants are the operating systems of the future. Cool applications make a system; when they cannot be built properly, the system loses. That is why iOS is at the top. That is why Siri lags behind in the race.

Expert opinion. About Amazon Alexa, production, and the future

I think that voice development in our market will soon move from an entertaining toy to something serious, to production. The official announcement of the Russian-language Google Assistant, that is, Google I/O 2018, is likely to be the starting point. We need to prepare mentally and learn from our Western colleagues. I asked our friend Maxim Kokosh, Team Lead at Omnigon, who has worked with both Assistant and Alexa.

Maxim Kokosh, Team Lead Omnigon

Tell me in general terms, what did you develop?

I worked on updating one skill for Alexa and porting another from Alexa to Actions on Google using DialogFlow. The deadlines were very tight: a week for the port and a week for updating the Alexa skill.

We know nothing about Amazon's project.

You wrote about Alice, Siri, and Google Assistant in the article, but not about Alexa. That is like comparing Android and Symbian and forgetting about iOS.

Alexa is Google's main competitor. As practice shows, it has significantly more users. The community is much bigger. There is more documentation. And there are far more skills, too.

By the way, I would not call Alice's approach a car. A two-wheeled cart behind a donkey, at most. Compared to Google Actions and Alexa, everything there is very poor. Parsing strings in 2018 sounds barbaric.

Why do you think Alexa’s audience is so much bigger?

I think it is because Google entered the game later and invests very little in advertising. Yet given that the Assistant is, or can be, installed on almost every Android device, they could become more popular.

What are the features of Alexa?

In Alexa, it is convenient to work with state within a session. For example, you ask to turn on the light in the bathroom. The result is the intent “Turn on the light” and the entity “bathroom”. Then you say: “Turn off.” Here we have context within the session. While processing the intent we can store the “bathroom” state and use it when the following intents arrive. Google has follow-up intents that serve a similar purpose, but they are not as flexible.
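The bathroom example can be sketched in a few lines of plain JavaScript. This is not the Alexa SDK: the `handleIntent` function, the intent names, and the `room` slot are invented here, and the `attributes` object stands in for the session state that the platform passes back to the skill on each turn.

```javascript
// Handle one intent; `attributes` is the state carried over from the previous turn.
function handleIntent(intent, slots, attributes = {}) {
  // Use the room the user just named, otherwise fall back to the stored one.
  const room = slots.room || attributes.room;
  if (!room) {
    return { speech: 'Which room?', attributes };
  }
  const state = intent === 'TurnOnLight' ? 'on' : 'off';
  return {
    speech: `The light in the ${room} is now ${state}.`,
    // Remember the room for the following turns of this session.
    attributes: { ...attributes, room },
  };
}

// Turn 1: the user names the room. Turn 2: "Turn off" with no room at all.
const first = handleIntent('TurnOnLight', { room: 'bathroom' });
const second = handleIntent('TurnOffLight', {}, first.attributes);
console.log(second.speech); // The light in the bathroom is now off.
```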

In Alexa you install skills explicitly. This is an approach users know well: a skill store. Google installs skills automatically.

The review process is very strict on both platforms. Reviewers make sure that every response ends with a period, that the user interaction looks natural (each platform has its own guidelines for this), and that there are no grammatical errors, even in the description, which contains a lot of text. Amazon's review usually took 2-3 days; Google managed in 1 day.

Developing for Google Actions itself seemed simpler: I chose Firebase, hooked it up in two clicks, and everything was ready for development. If you want to make outbound requests, you have to pay; if you use only Google services, it can be free. AWS, being more cluttered, looks more confusing.

With Google you can build bots using Google Sheets. The functionality is very limited, but it lets you write bots without programming skills and is suitable for small tasks.

DialogFlow also, in theory, lets you connect your bots to Slack, Telegram, Cortana, and the like in one click. There are a lot of integrations, although the integration with Alexa does not work.

In principle, with knowledge of Actions on Google, you can work with Alexa.

You and I both come from mobile. Do the business processes of mobile and voice development differ?

To my taste, the processes are very similar: intents can be seen as screens, entities as the data in them, and there is also a state system. From a testing point of view everything looks the same: you need correct handling of lost connectivity, unexpected API responses, and so on.

Can the development of dialogues become as popular as mobile development is now?

It seems to me that assistants will not reach the popularity of mobile applications, because not every scenario can be moved to verbal interaction, and information is perceived much faster from a screen than by ear. Also, the user does not always have the opportunity to talk to an assistant, especially when sensitive data is involved. Voice assistants can be quite utilitarian, for example “order a taxi” or “order a pizza”, but they are unlikely to hold your interest for long. They are, rather, a striking addition to the home or car.

What is the future of voice assistants?

Voice assistants will occupy their niche, and a fairly large one. They will become as common as Android Auto and CarPlay in cars. 20 million Amazon Echo devices and 4.6 million Google Home devices have already been sold. And do not forget that many Android phones come with Google Assistant.

Max, thank you very much for the detailed answers.

I hope they somehow help. :)

What awaits us ahead

All the dialog platforms have room to grow; the ceiling is still far away. There has long been no intrigue in the battle for our voice.


Source: https://habr.com/ru/post/352982/
