Apache Kafka Review

Hi, Habr!

Today we offer you a relatively brief, but at the same time sensible and informative article about the device and Apache Kafka application options. We expect to translate and release the book by Nii Nakhid (Neha Narkhede) et. al until the end of summer.

Enjoy reading!

Introduction

')

Today they talk a lot about Kafka. Many leading IT companies are already actively and successfully using this tool. But what is Kafka?

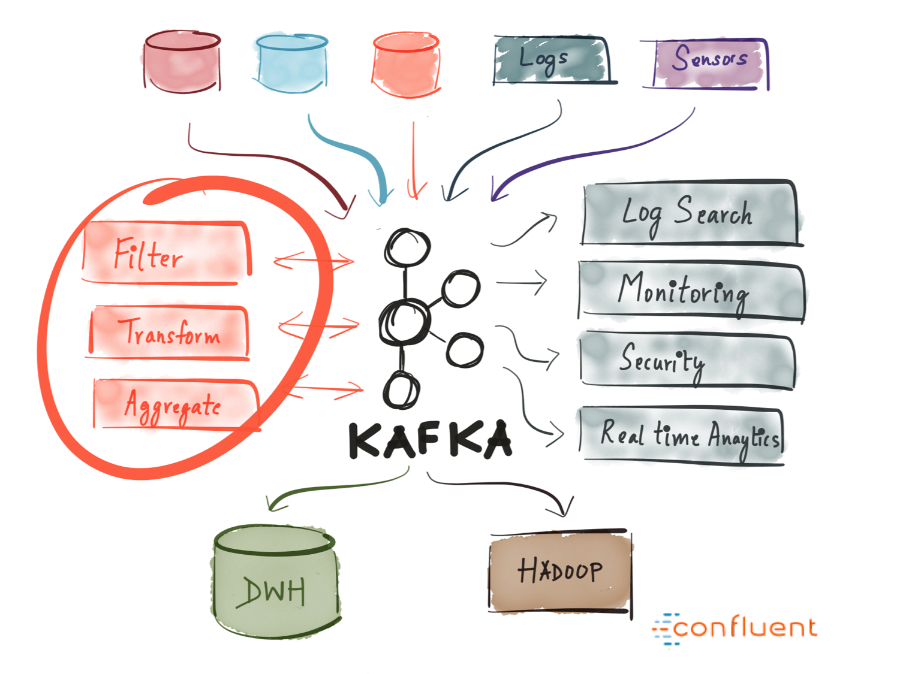

Kafka was developed by LinkedIn in 2011 and has since improved significantly. Today, Kafka is a whole platform providing sufficient redundancy for storing absurdly large amounts of data. It provides a message bus with colossal bandwidth, where you can process absolutely all data passing through it.

All this is cool, however, if you reduce Kafka to a dry residue, you get a distributed, horizontally scalable, fault-tolerant commit log .

It sounds wisely. Let's look at each of these terms and see what it means. And then we examine in detail how it all works.

Distributed

A distributed system is a system that, in a segmented form, works simultaneously on a set of machines that form a whole cluster; therefore, for the end user, they look like a single node. Distribution Kafka is that the storage, receipt and distribution of messages from him is organized on different nodes (the so-called "brokers").

The most important advantages of this approach are high availability and fault tolerance.

Horizontally scalable

Let's first define what vertical scalability is. Suppose we have a traditional database server, and it gradually ceases to cope with the increasing load. To cope with this problem, you can simply increase the resources (CPU, RAM, SSD) on the server. This is vertical scaling - additional resources are hung on the machine. With this "scaling up" there are two serious drawbacks:

Horizontal scalability solves exactly the same problem, we just get more and more cars involved. When adding a new machine, no downtime occurs, while the number of machines that can be added to the cluster is unlimited. The catch is that not all systems support horizontal scalability, many systems are not designed to work with clusters, and those that are designed are usually very difficult to operate.

After a certain threshold, horizontal scaling becomes much cheaper than vertical.

fault tolerance

Unallocated systems are characterized by the presence of a so-called single point of failure. If the only server of your database for some reason fails - you are trapped.

Distributed systems are designed in such a way that their configuration can be adjusted, adapting to failures. The Kafka cluster of five nodes remains operational, even if two nodes fall down. It should be noted that in order to ensure fault tolerance, it is necessary to partially sacrifice performance, because the better your system tolerates failures, the lower its performance.

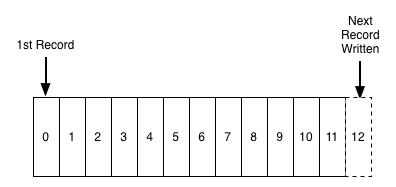

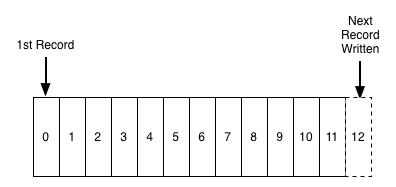

Commit log

The commit log (also referred to as the “lead write log”, “transaction log”) is a long-term ordered data structure, and you can only add data to such a structure. Entries from this log can neither be modified nor deleted. Information is read from left to right; this ensures the correct order of the elements.

Commit log layout

In essence, Kafka stores all its messages on disk (more on this below), and when organizing messages in the form of the structure described above, you can use sequential reading from the disk.

These two points drastically increase performance, since it is completely independent of the size of the data. Kafka works equally well, whether you have 100KB or 100TB of data on your server.

How does all this work?

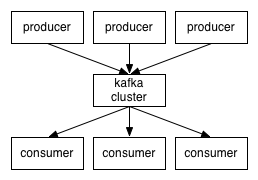

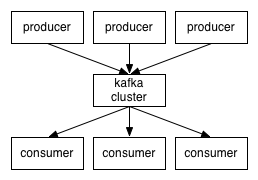

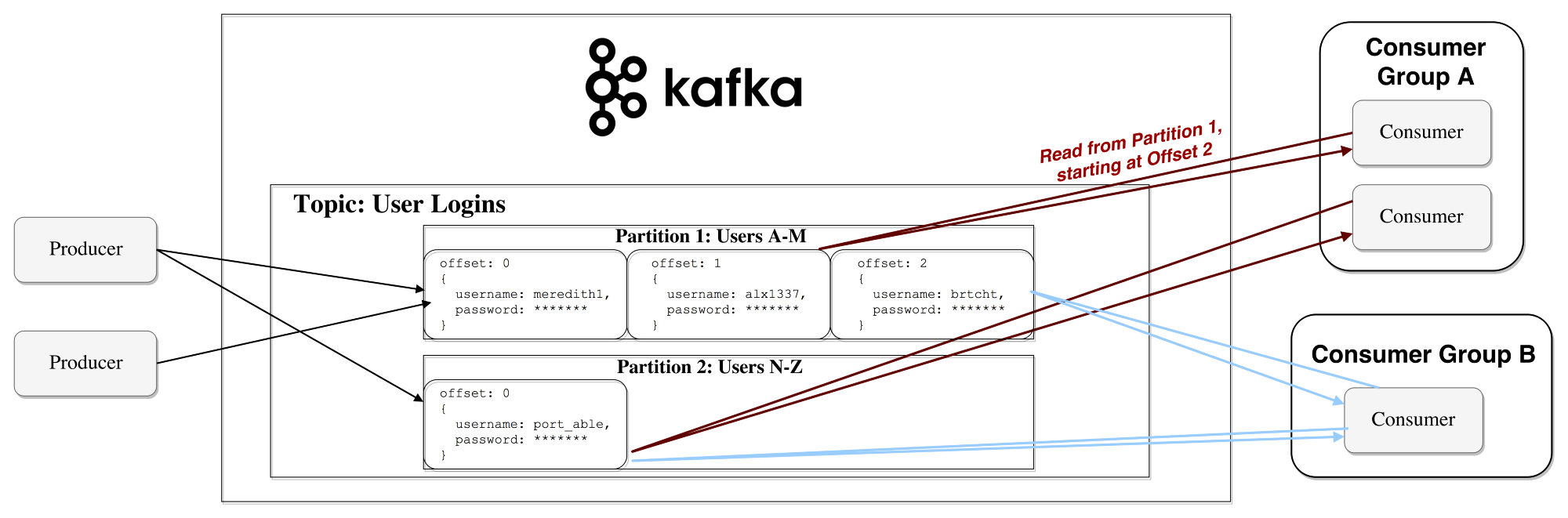

Applications ( generators ) send messages ( records ) to the Kafka node ( broker ), and the specified messages are processed by other applications, so-called consumers . These messages are saved in the thread , and consumers subscribe to the thread to receive new messages.

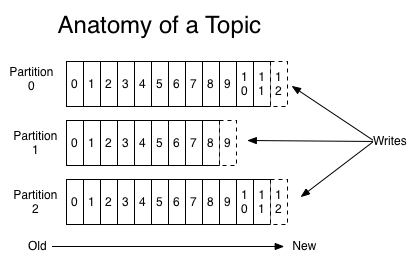

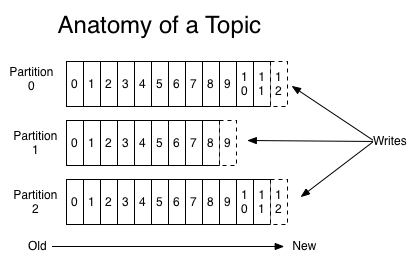

Topics can grow, so large topics are divided into smaller sections to improve performance and scalability. (example: let's say you saved user login requests; in this case, you can distribute them by the first character in the user name )

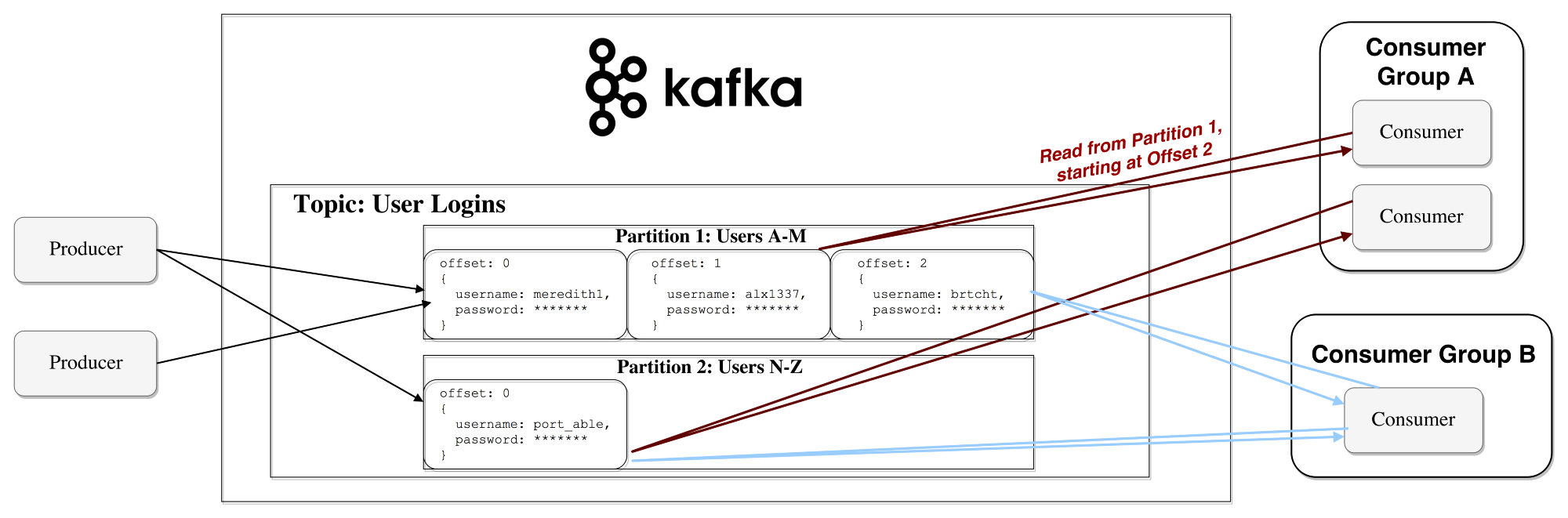

Kafka guarantees that all messages within the section will be ordered exactly in the sequence in which they arrived. A specific message can be found by its offset, which can be considered a normal index in the array, a sequence number that is incremented by one for each new message in this section.

In Kafka, the principle of "stupid broker - smart consumer" is observed. Thus, Kafka does not keep track of which records are read by the consumer and then deleted, but simply keeps them for a predetermined period of time (for example, a day), or until a certain threshold is reached. Consumers themselves questioned Kafka if he had any new messages, and indicate which records they need to read. Thus, they can increase or decrease the offset by moving to the desired record; at the same time events can be replayed or re-processed.

It should be noted that in reality this is not about single consumers, but about groups, in each of which there is one or more consumer processes. In order to prevent a situation where two processes could read the same message twice, each section is tied to only one consumer process within a group.

So the data stream is arranged.

Long-term storage on disk

As mentioned above, Kafka actually stores its records on disk and does not hold anything in RAM. Yes, the question is possible, is there any point in this. But Kafka has many optimizations that make this happen:

Thanks to all these optimizations, Kafka delivers messages almost as quickly as the network itself.

Data distribution and replication

Now let's discuss how fault tolerance is achieved in Kafka and how it distributes data between nodes.

Data replication

The data from the segment is replicated to multiple brokers so that the data is saved if one of the brokers fails.

In any case, one of the brokers always “owns” the section: this broker is the one on which applications perform read and write operations to the section. This broker is called the " leading section ". It replicates the received data to N other brokers, the so-called slaves . Data is also stored on the slaves, and any of them can be selected as the master if the current master fails.

This way, you can configure guarantees to ensure that any message that is successfully published is not lost. When it is possible to change the replication ratio, you can partially sacrifice performance for the sake of increased data protection and durability (depending on how critical they are).

Thus, if one of the leaders ever fails, the follower can take his place.

However, it is logical to ask:

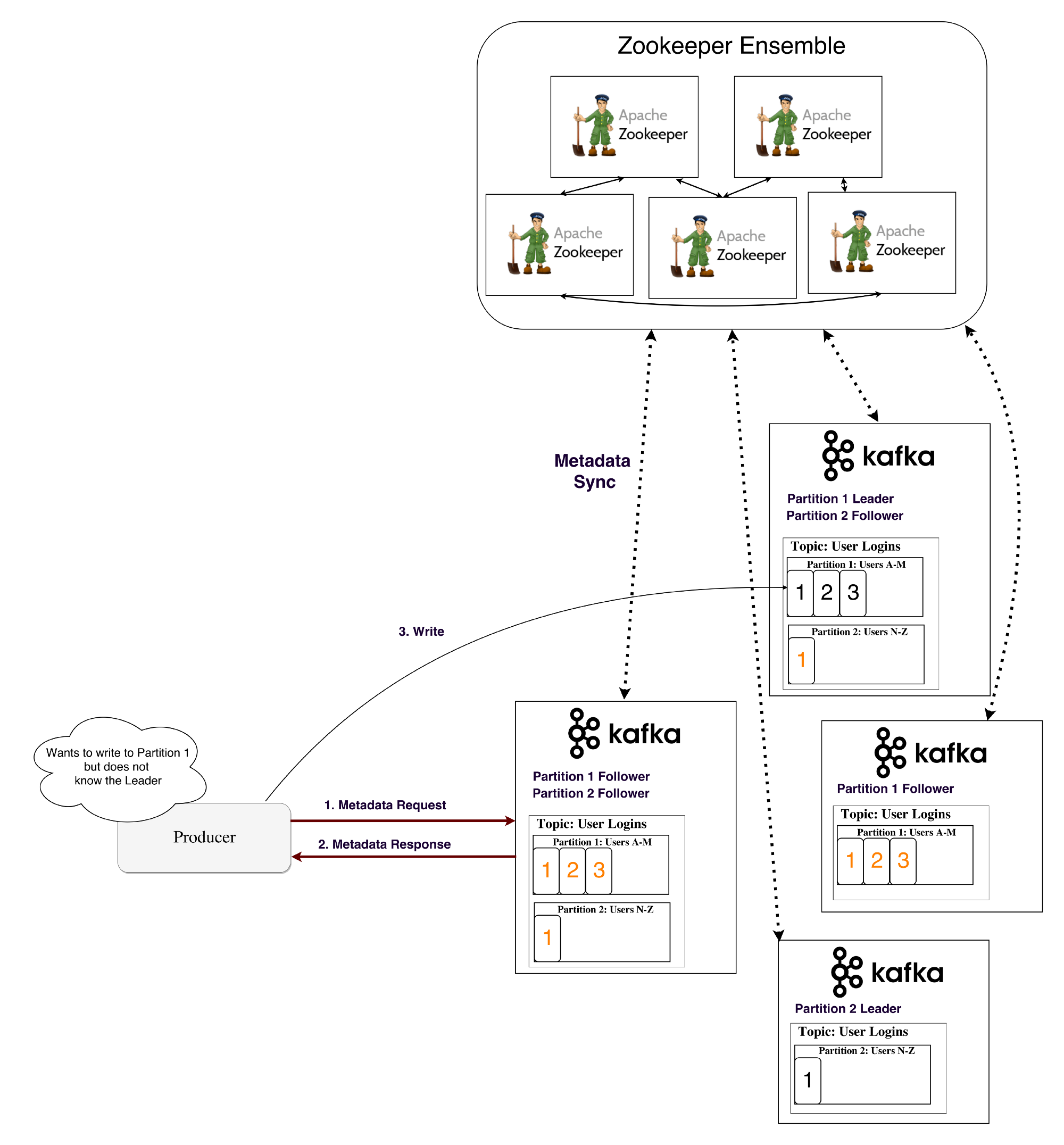

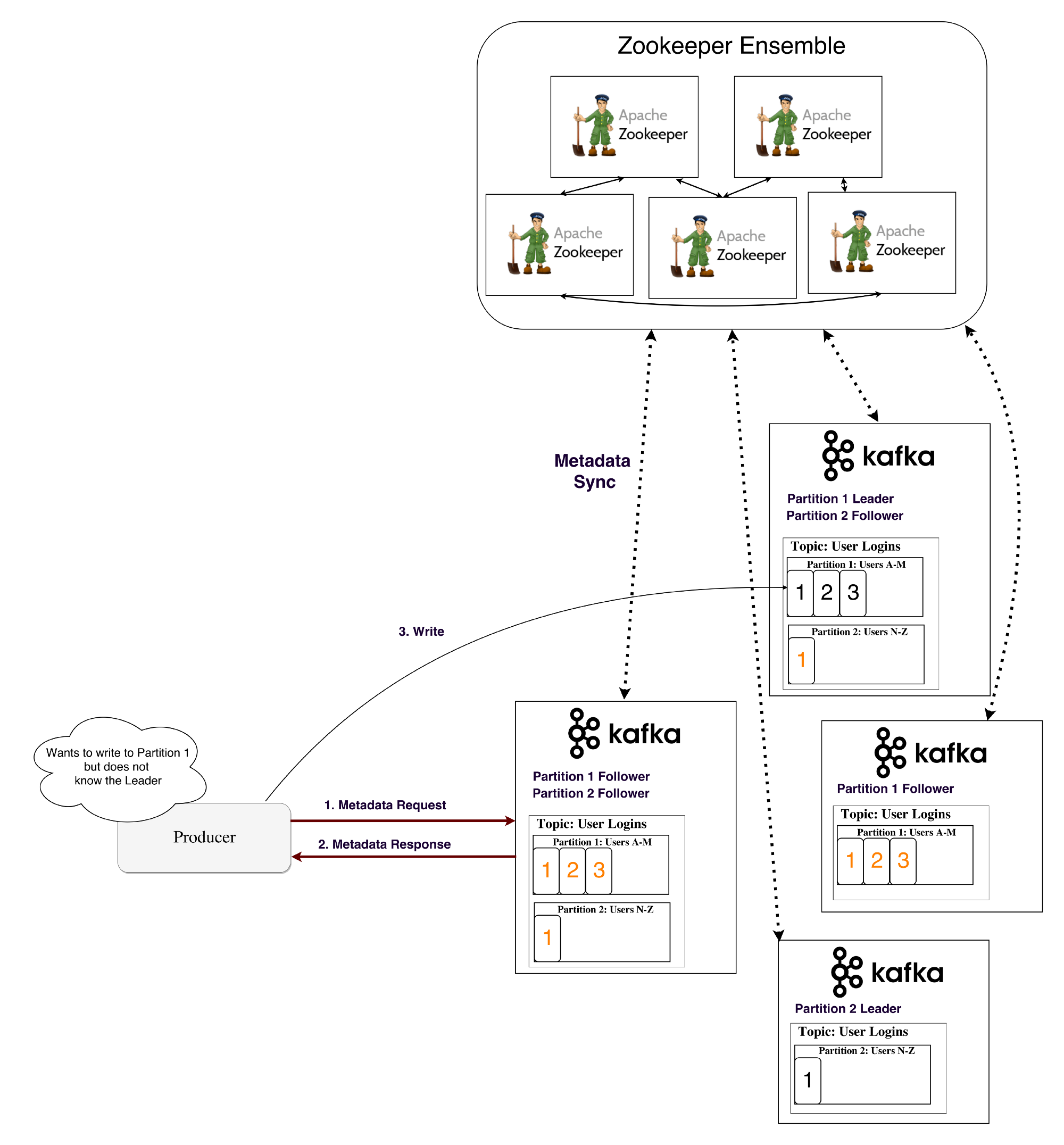

Kafka uses a service called Zookeeper to store such metadata.

What is a zookeeper?

Zookeeper is a distributed repository of keys and values. It is highly optimized for reading, but writing to it is slower. Most often, Zookeeper is used to store metadata and process clustering mechanisms (heart rate, distributed update / configuration operations, etc.).

Thus, clients of this service (Kafka brokers) can subscribe to it - and will receive information about any changes that may occur. This is how brokers will know when a leader in a section changes. Zookeeper is exceptionally fault tolerant (as it should be), since Kafka depends heavily on it.

It is used to store all kinds of metadata, in particular:

How does the generator / consumer determine the lead broker for this section?

Previously, the Generator and Consumers directly connected to Zookeeper and learned from him this (and also other) information. Now Kafka is moving away from such a bundle and, starting, respectively, from versions 0.8 and 0.9, clients firstly select metadata directly from Kafka brokers, and brokers turn to Zookeeper.

Metadata Stream

Streams

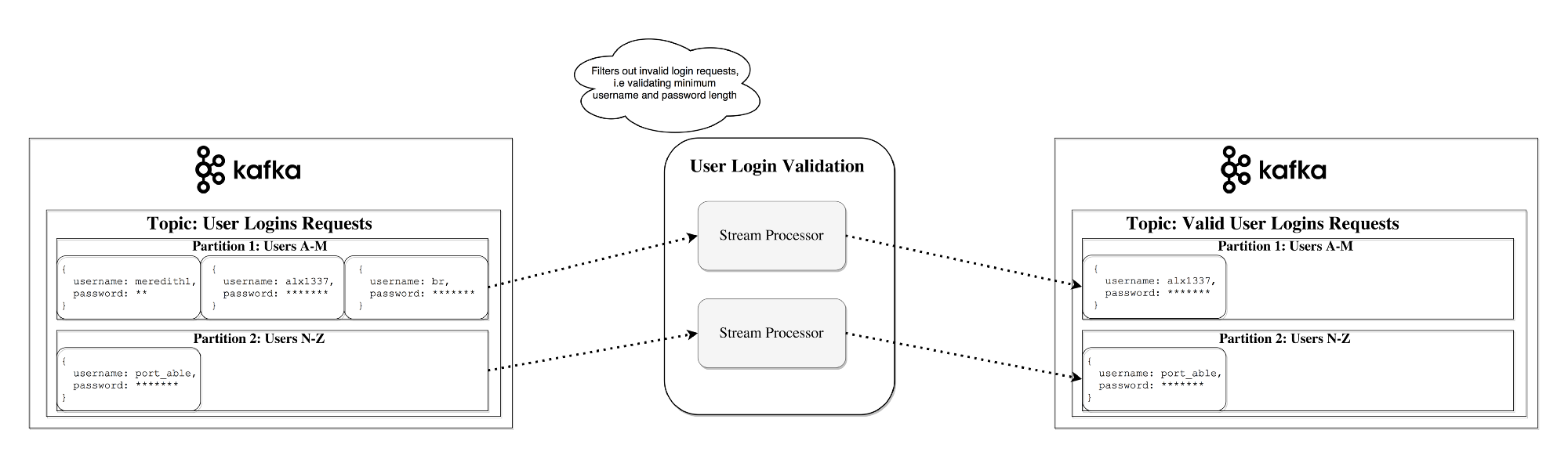

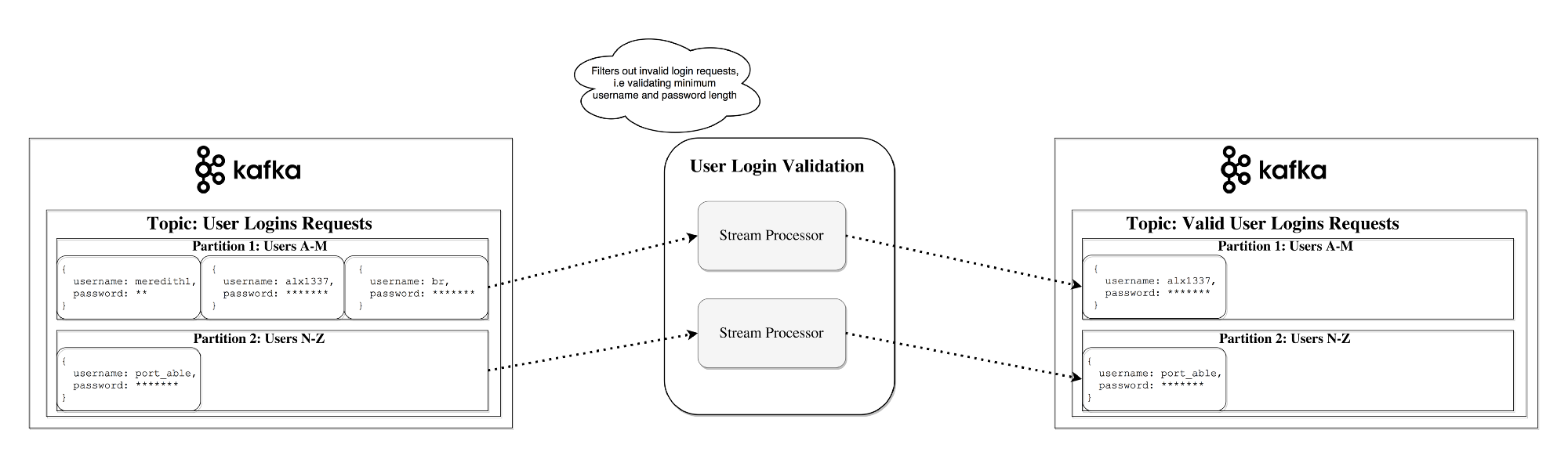

The stream processor in Kafka is responsible for all the following work: it receives continuous data streams from the input topics, somehow processes this input and feeds the data stream to the output topics (either to external services, databases, to the recycle bin, and anywhere ...)

Simple processing can be performed directly on the generators / consumers API, however, more complex transformations — for example, thread integration, in Kafka are performed using the Streams API integrated library.

This API is intended for use within your own code base, it does not work on a broker. Functionally, it is similar to the consumer API, facilitates the horizontal scaling of thread processing and its distribution among several applications (similar to groups of consumers).

Stateless processing

Stateless processing is a deterministic processing flow that does not depend on any external factors. As an example, consider the following simple data conversion: attach information to a string

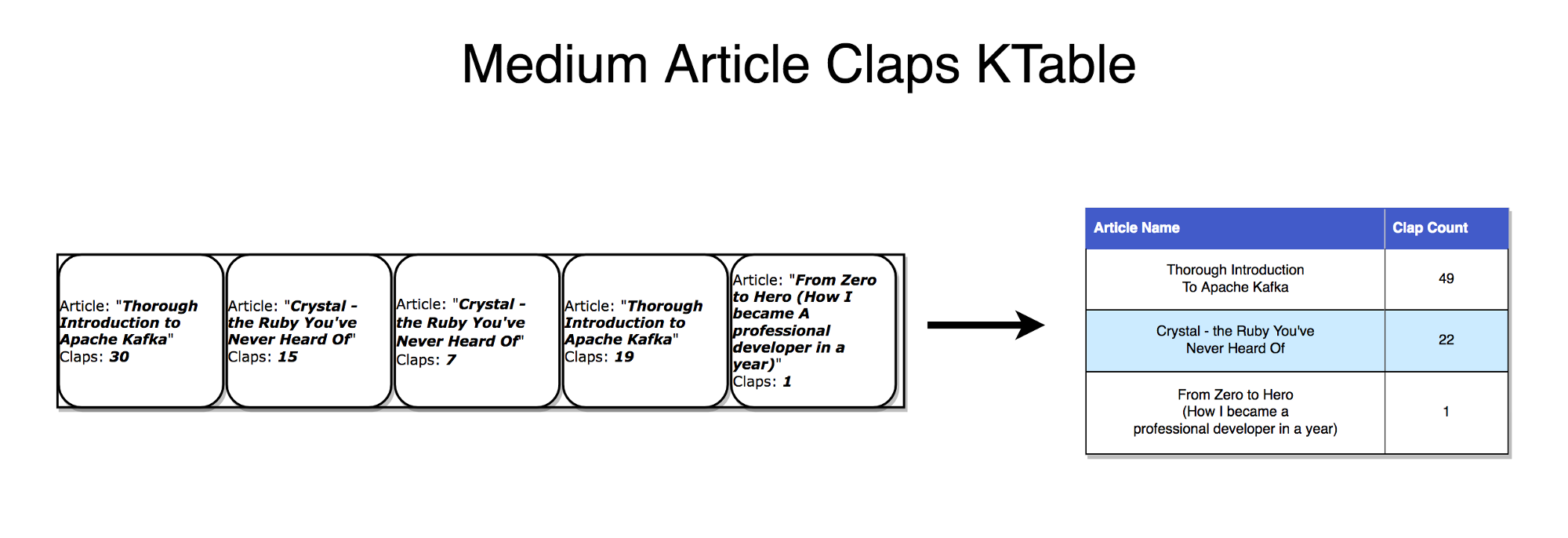

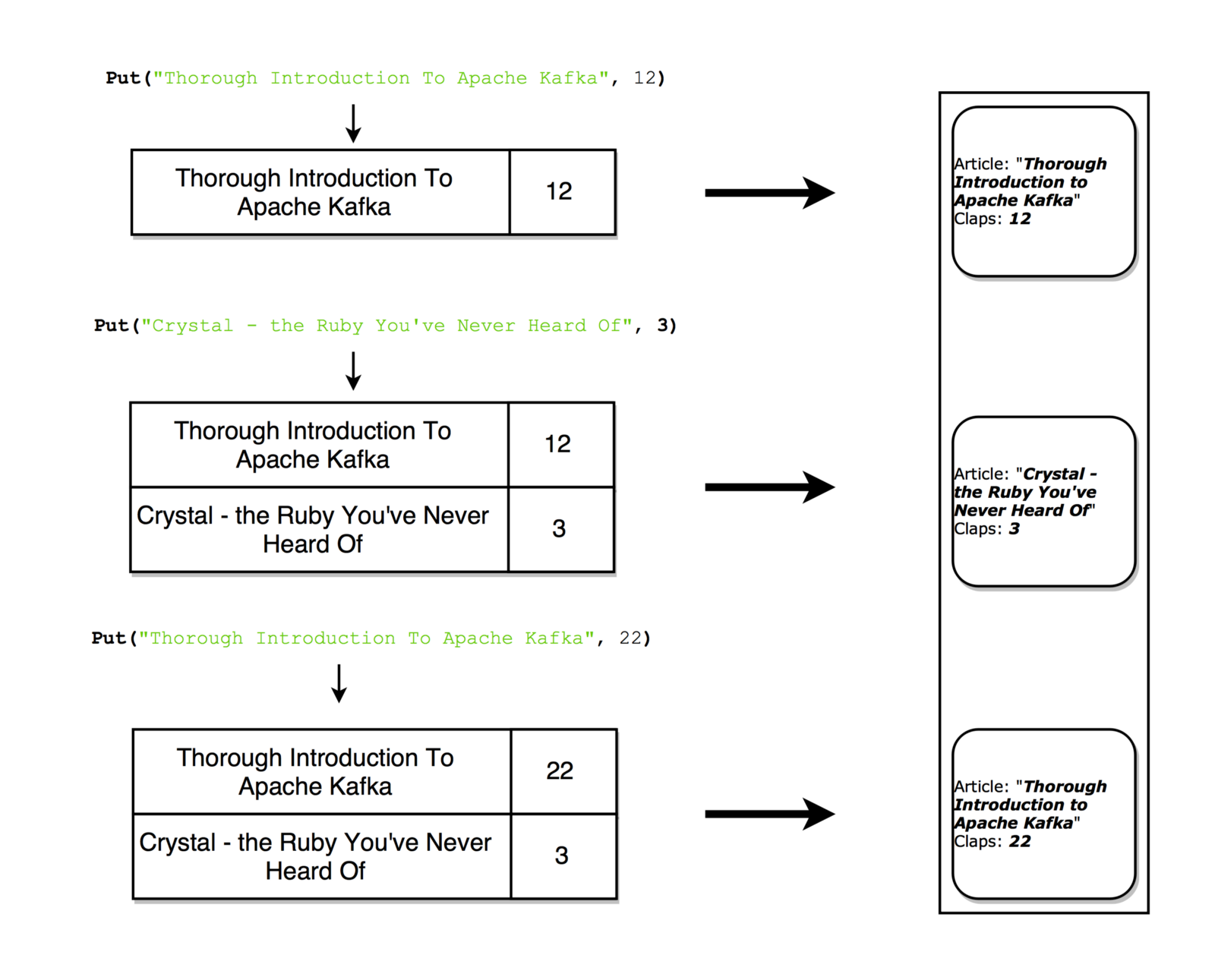

Stream-tabular dualism

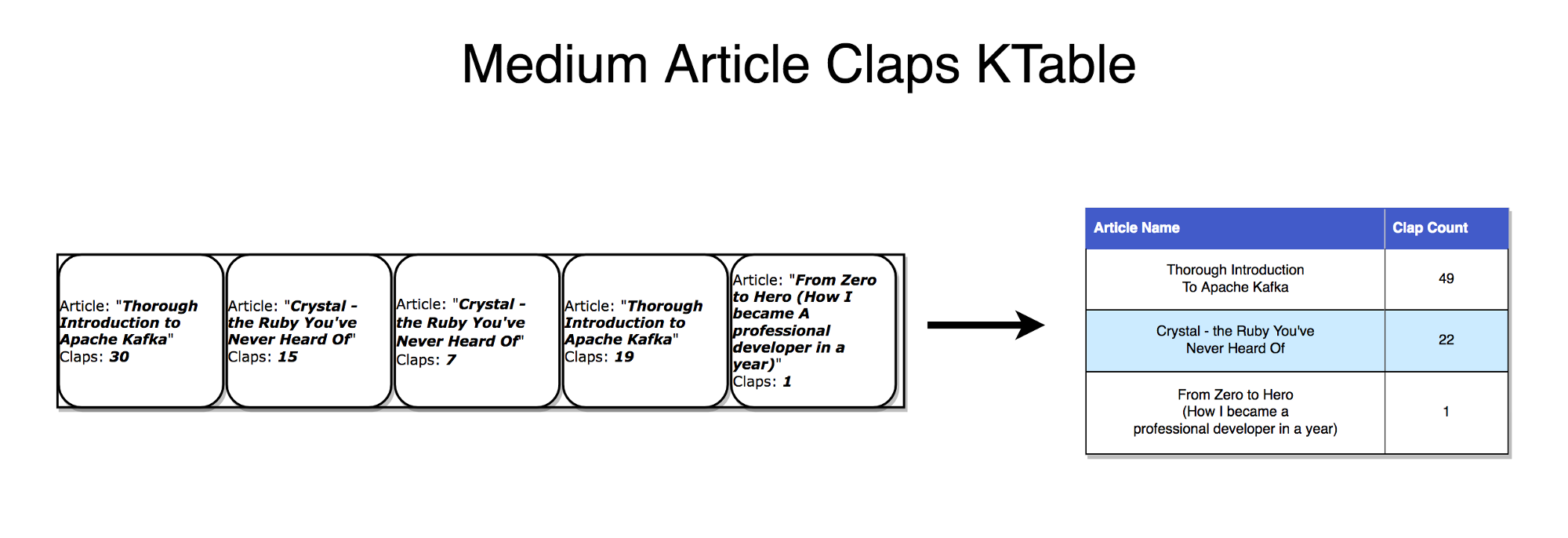

It is important to understand that threads and tables are essentially the same thing. A stream can be interpreted as a table, and a table as a stream.

Stream as a table

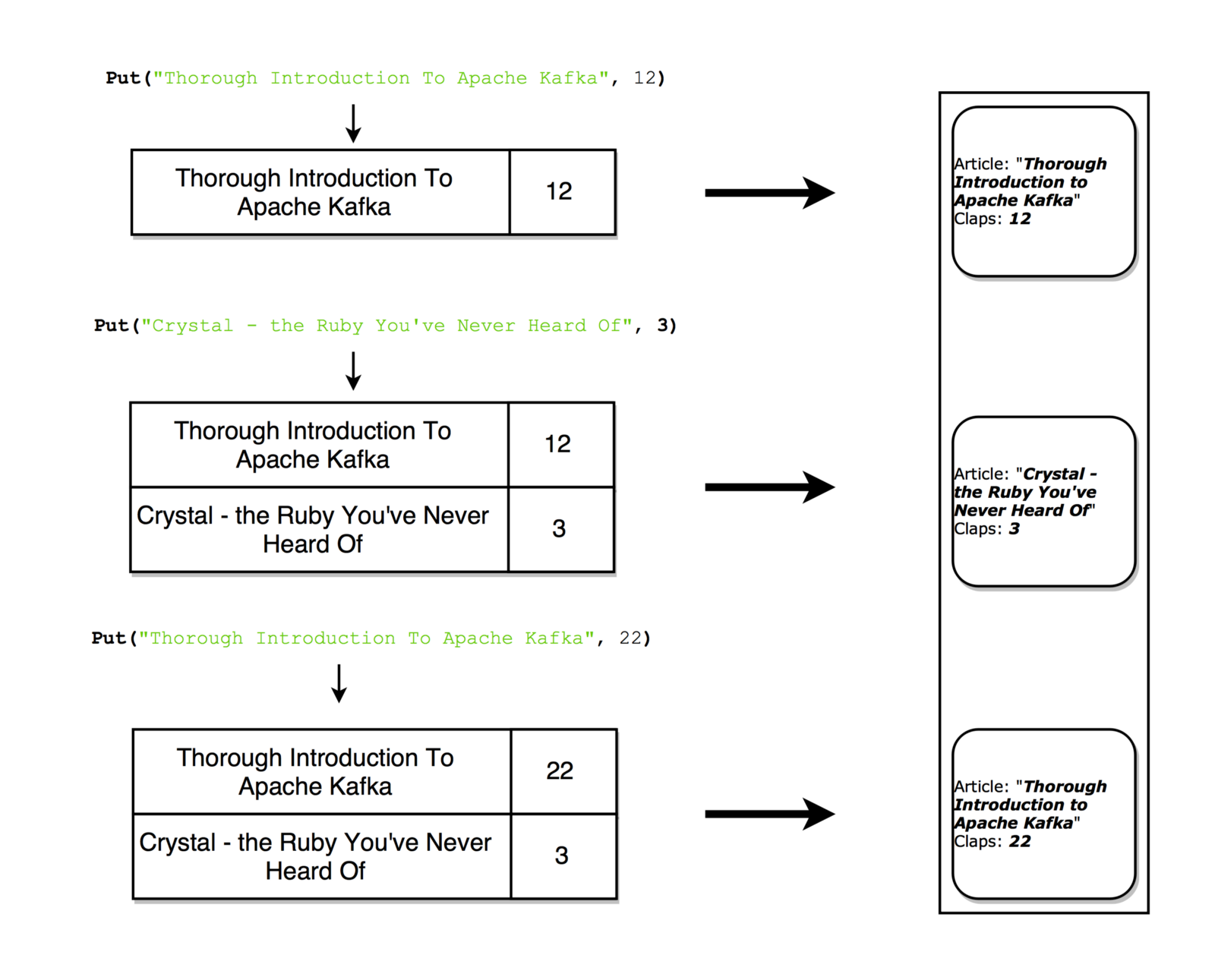

If you pay attention to how synchronous replication of the database is performed, it is obvious that we are talking about streaming replication , where any changes in the tables are sent to the replica server (replica). The Kafka stream can be interpreted in the same way - as a stream of updates for data that aggregates and gives the final result appearing in the table. Such streams are stored in the local RocksDB (by default) and are called KTable .

Table as a stream

A table can be considered an instant snapshot, reflecting the last value for each key in the stream. Similarly, you can create a table from stream entries, and from table updates you can create a stream with a change log.

With each update, you can take a snapshot of the stream (record)

Stateful processing

Some simple operations, such as

The problem with maintaining state in stream processors is that these processors sometimes fail! Where to store this state to provide fault tolerance?

A simplified approach is to simply store all state in a remote database and connect to this repository over the network. The problem is that the data locality is then lost, and the data itself is repeatedly distilled over the network - both factors significantly slow down your application. A more subtle, but important problem is that the activity of your stream processing task will depend heavily on the remote database - that is, this task will not be self-sufficient (all your processing may fail if another team makes any changes to the database) .

So which approach is better?

Recall the dualism of tables and streams. It is through this property that streams can be converted to tables located exactly where the processing takes place. Also, we get a mechanism for ensuring fault tolerance - we store the threads on the Kafka broker.

The stream processor can save its state in a local table (for example, in RocksDB), which the input stream will update (possibly, after any arbitrary transformations). If this process fails, we can recover the relevant data by reproducing the stream.

You can even ensure that the remote database generates a stream and, in fact, broadcasts the change log, on the basis of which you will rebuild the table on the local machine.

Stateful processing, connecting KStream with KTable

KSQL

As a rule, the code for stream processing is written in one of the languages for the JVM, since the only official client of the Kafka Streams API works with it.

Sample KSQL installation

KSQL is a new feature that allows you to write simple stream jobs in a familiar language that resembles SQL.

We configure the KSQL server and interactively request it through the CLI to control the processing. It works exactly with the same abstractions (KStream and KTable), guarantees the same advantages as the Streams API (scalability, fault tolerance) and greatly simplifies working with streams.

It may not sound inspiring, but in practice it is very useful for testing the material. Moreover, this model allows you to join streaming processing even to those who are not involved in the development as such (for example, product owners). I recommend to watch a small introductory video - see for yourself how simple everything is here.

Alternative to stream processing

Kafka flows - the perfect combination of strength and simplicity. Perhaps, Kafka is the best tool for performing streaming tasks available on the market, and integrating with Kafka is much easier than with alternative tools for streaming processing ( Storm , Samza , Spark , Wallaroo ).

The problem with most other stream processing tools is that they are difficult to deploy (and difficult to handle). A batch framework, such as Spark, requires:

Unfortunately, when trying to solve all these problems within one framework, this framework turns out to be too invasive. The framework attempts to control all sorts of aspects of deploying, configuring code, monitoring it, and packaging the code.

Kafka Streams allows you to formulate your own deployment strategy when you need it, moreover, you can work with the tool to your taste: Kubernetes , Mesos , Nomad , Docker Swarm , etc.

Kafka Streams is designed primarily for you to organize stream processing in your application, however, without the operational difficulties associated with supporting the next cluster. The only potential drawback of such an instrument is its close connection with Kafka, however, in the current reality, when stream processing is mainly performed with the help of Kafka, this small drawback is not so terrible.

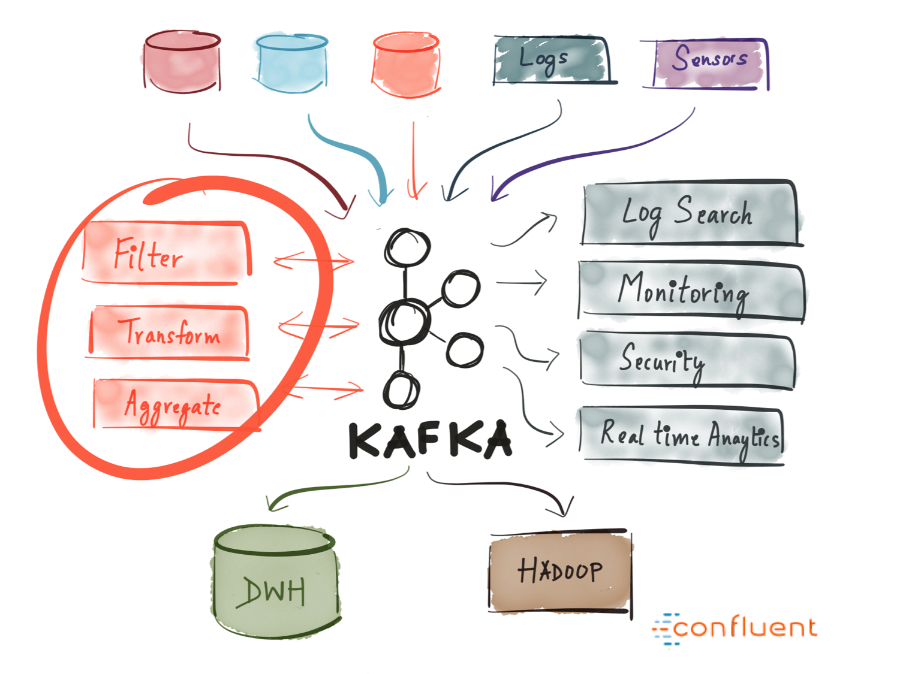

When should I use Kafka?

As mentioned above, Kafka allows you to pass a huge number of messages through a centralized environment, and then store them without worrying about performance and not fearing that data will be lost.

Thus, Kafka will be perfectly arranged in the very center of your system and will work as a link that ensures the interaction of all your applications. Kafka can be a central element of an event-oriented architecture, which will allow you to properly detach applications from each other.

Kafka allows you to easily distinguish between communication between different (micro) services. Working with Streams API, it has become easier than ever to write business logic that enriches data from the Kafka topic before services begin to consume them. This opens up tremendous opportunities - so I strongly recommend to study how Kafka is used in different companies.

Results

Apache Kafka is a distributed streaming platform that allows you to handle trillions of events per day. Kafka guarantees minimal delays, high throughput, provides fault tolerant pipelines operating on the “publish / subscribe” principle and allows to handle event streams.

In this article, we got acquainted with the basic semantics of Kafka (we learned what a generator, broker, consumer, topic is), learned about some optimization options (page cache), learned what fault tolerance Kafka guarantees in data replication and briefly discussed its powerful streaming capabilities.

Today we offer you a relatively brief, but at the same time sensible and informative article about the device and Apache Kafka application options. We expect to translate and release the book by Nii Nakhid (Neha Narkhede) et. al until the end of summer.

Enjoy reading!

Introduction

')

Today they talk a lot about Kafka. Many leading IT companies are already actively and successfully using this tool. But what is Kafka?

Kafka was developed by LinkedIn in 2011 and has since improved significantly. Today, Kafka is a whole platform providing sufficient redundancy for storing absurdly large amounts of data. It provides a message bus with colossal bandwidth, where you can process absolutely all data passing through it.

All this is cool, however, if you reduce Kafka to a dry residue, you get a distributed, horizontally scalable, fault-tolerant commit log .

It sounds wisely. Let's look at each of these terms and see what it means. And then we examine in detail how it all works.

Distributed

A distributed system is a system that, in a segmented form, works simultaneously on a set of machines that form a whole cluster; therefore, for the end user, they look like a single node. Distribution Kafka is that the storage, receipt and distribution of messages from him is organized on different nodes (the so-called "brokers").

The most important advantages of this approach are high availability and fault tolerance.

Horizontally scalable

Let's first define what vertical scalability is. Suppose we have a traditional database server, and it gradually ceases to cope with the increasing load. To cope with this problem, you can simply increase the resources (CPU, RAM, SSD) on the server. This is vertical scaling - additional resources are hung on the machine. With this "scaling up" there are two serious drawbacks:

- There are certain limits related to equipment capabilities. Infinitely can not grow.

- Such work is usually associated with downtime, and large companies can not afford to downtime.

Horizontal scalability solves exactly the same problem, we just get more and more cars involved. When adding a new machine, no downtime occurs, while the number of machines that can be added to the cluster is unlimited. The catch is that not all systems support horizontal scalability, many systems are not designed to work with clusters, and those that are designed are usually very difficult to operate.

After a certain threshold, horizontal scaling becomes much cheaper than vertical.

fault tolerance

Unallocated systems are characterized by the presence of a so-called single point of failure. If the only server of your database for some reason fails - you are trapped.

Distributed systems are designed in such a way that their configuration can be adjusted, adapting to failures. The Kafka cluster of five nodes remains operational, even if two nodes fall down. It should be noted that in order to ensure fault tolerance, it is necessary to partially sacrifice performance, because the better your system tolerates failures, the lower its performance.

Commit log

The commit log (also referred to as the “lead write log”, “transaction log”) is a long-term ordered data structure, and you can only add data to such a structure. Entries from this log can neither be modified nor deleted. Information is read from left to right; this ensures the correct order of the elements.

Commit log layout

- Do you mean that the data structure in Kafka is so simple?In many ways, yes. This structure forms the very core of Kafka and is absolutely priceless, because it provides orderliness, and orderliness - deterministic processing. Both of these problems in distributed systems are difficult to solve.

In essence, Kafka stores all its messages on disk (more on this below), and when organizing messages in the form of the structure described above, you can use sequential reading from the disk.

- Read and write operations are performed at a constant O (1) time (if the record ID is known), which, compared to O (log N) operations on a disk in a different structure, is an incredible time saver, since each feeding operation is costly.

- Read and write operations do not affect each other (the read operation does not block the write operation and vice versa, which cannot be said about operations with balanced trees).

These two points drastically increase performance, since it is completely independent of the size of the data. Kafka works equally well, whether you have 100KB or 100TB of data on your server.

How does all this work?

Applications ( generators ) send messages ( records ) to the Kafka node ( broker ), and the specified messages are processed by other applications, so-called consumers . These messages are saved in the thread , and consumers subscribe to the thread to receive new messages.

Topics can grow, so large topics are divided into smaller sections to improve performance and scalability. (example: let's say you saved user login requests; in this case, you can distribute them by the first character in the user name )

Kafka guarantees that all messages within the section will be ordered exactly in the sequence in which they arrived. A specific message can be found by its offset, which can be considered a normal index in the array, a sequence number that is incremented by one for each new message in this section.

In Kafka, the principle of "stupid broker - smart consumer" is observed. Thus, Kafka does not keep track of which records are read by the consumer and then deleted, but simply keeps them for a predetermined period of time (for example, a day), or until a certain threshold is reached. Consumers themselves questioned Kafka if he had any new messages, and indicate which records they need to read. Thus, they can increase or decrease the offset by moving to the desired record; at the same time events can be replayed or re-processed.

It should be noted that in reality this is not about single consumers, but about groups, in each of which there is one or more consumer processes. In order to prevent a situation where two processes could read the same message twice, each section is tied to only one consumer process within a group.

So the data stream is arranged.

Long-term storage on disk

As mentioned above, Kafka actually stores its records on disk and does not hold anything in RAM. Yes, the question is possible, is there any point in this. But Kafka has many optimizations that make this happen:

- Kafka has a protocol that unites messages into groups. Therefore, when network requests are, messages are added to groups, which reduces network costs, and the server, in turn, saves a batch of messages in one go, after which consumers can immediately select large linear sequences of such messages.

- Linear reads and writes to disk occur quickly. There is a known problem: modern disks work relatively slowly because of the need to supply heads, however, with large linear operations, this problem disappears.

- These linear operations are highly optimized by the operating system by read ahead (large groups of blocks are selected in advance) and late writing (small logical write operations are combined into large physical write operations).

- Modern operating systems cache the disk in free RAM. This technique is called page cache .

- Since Kafka saves messages in a standardized binary format that does not change throughout the entire chain (generator-> broker-> consumer), zero-copy optimization is appropriate here. In this case, the OS copies the data from the page cache directly to the socket, practically bypassing the broker application related to Kafka.

Thanks to all these optimizations, Kafka delivers messages almost as quickly as the network itself.

Data distribution and replication

Now let's discuss how fault tolerance is achieved in Kafka and how it distributes data between nodes.

Data replication

The data from the segment is replicated to multiple brokers so that the data is saved if one of the brokers fails.

In any case, one of the brokers always “owns” the section: this broker is the one on which applications perform read and write operations to the section. This broker is called the " leading section ". It replicates the received data to N other brokers, the so-called slaves . Data is also stored on the slaves, and any of them can be selected as the master if the current master fails.

This way, you can configure guarantees to ensure that any message that is successfully published is not lost. When it is possible to change the replication ratio, you can partially sacrifice performance for the sake of increased data protection and durability (depending on how critical they are).

Thus, if one of the leaders ever fails, the follower can take his place.

However, it is logical to ask:

- How does the generator / consumer know which broker is leading this section?In order for the generator / consumer to record / read information in this section, the application needs to know which broker is leading here, right? This information needs to be taken somewhere.

Kafka uses a service called Zookeeper to store such metadata.

What is a zookeeper?

Zookeeper is a distributed repository of keys and values. It is highly optimized for reading, but writing to it is slower. Most often, Zookeeper is used to store metadata and process clustering mechanisms (heart rate, distributed update / configuration operations, etc.).

Thus, clients of this service (Kafka brokers) can subscribe to it - and will receive information about any changes that may occur. This is how brokers will know when a leader in a section changes. Zookeeper is exceptionally fault tolerant (as it should be), since Kafka depends heavily on it.

It is used to store all kinds of metadata, in particular:

- Offset of consumer groups within the section (although modern clients store offsets in a separate Kafka topic)

- ACL (Access Control Lists) - used to restrict access / authorization

- Generator and consumer quotas - maximum message limits per second

- Leading sections and their level of performance

How does the generator / consumer determine the lead broker for this section?

Previously, the Generator and Consumers directly connected to Zookeeper and learned from him this (and also other) information. Now Kafka is moving away from such a bundle and, starting, respectively, from versions 0.8 and 0.9, clients firstly select metadata directly from Kafka brokers, and brokers turn to Zookeeper.

Metadata Stream

Streams

The stream processor in Kafka is responsible for all the following work: it receives continuous data streams from the input topics, somehow processes this input and feeds the data stream to the output topics (either to external services, databases, to the recycle bin, and anywhere ...)

Simple processing can be performed directly on the generators / consumers API, however, more complex transformations — for example, thread integration, in Kafka are performed using the Streams API integrated library.

This API is intended for use within your own code base, it does not work on a broker. Functionally, it is similar to the consumer API, facilitates the horizontal scaling of thread processing and its distribution among several applications (similar to groups of consumers).

Stateless processing

Stateless processing is a deterministic processing flow that does not depend on any external factors. As an example, consider the following simple data conversion: attach information to a string

"Hello" -> "Hello, World!"

Stream-tabular dualism

It is important to understand that threads and tables are essentially the same thing. A stream can be interpreted as a table, and a table as a stream.

Stream as a table

If you pay attention to how synchronous replication of the database is performed, it is obvious that we are talking about streaming replication , where any changes in the tables are sent to the replica server (replica). The Kafka stream can be interpreted in the same way - as a stream of updates for data that aggregates and gives the final result appearing in the table. Such streams are stored in the local RocksDB (by default) and are called KTable .

Table as a stream

A table can be considered an instant snapshot, reflecting the last value for each key in the stream. Similarly, you can create a table from stream entries, and from table updates you can create a stream with a change log.

With each update, you can take a snapshot of the stream (record)

Stateful processing

Some simple operations, such as

map() or filter() , are performed statelessly, and we don’t have to store any data regarding their processing. However, in practice, most operations are performed with state saving (for example, count() ), so you naturally have to store the state that is currently in place.The problem with maintaining state in stream processors is that these processors sometimes fail! Where to store this state to provide fault tolerance?

A simplified approach is to simply store all state in a remote database and connect to this repository over the network. The problem is that the data locality is then lost, and the data itself is repeatedly distilled over the network - both factors significantly slow down your application. A more subtle, but important problem is that the activity of your stream processing task will depend heavily on the remote database - that is, this task will not be self-sufficient (all your processing may fail if another team makes any changes to the database) .

So which approach is better?

Recall the dualism of tables and streams. It is through this property that streams can be converted to tables located exactly where the processing takes place. Also, we get a mechanism for ensuring fault tolerance - we store the threads on the Kafka broker.

The stream processor can save its state in a local table (for example, in RocksDB), which the input stream will update (possibly, after any arbitrary transformations). If this process fails, we can recover the relevant data by reproducing the stream.

You can even ensure that the remote database generates a stream and, in fact, broadcasts the change log, on the basis of which you will rebuild the table on the local machine.

Stateful processing, connecting KStream with KTable

KSQL

As a rule, the code for stream processing is written in one of the languages for the JVM, since the only official client of the Kafka Streams API works with it.

Sample KSQL installation

KSQL is a new feature that allows you to write simple stream jobs in a familiar language that resembles SQL.

We configure the KSQL server and interactively request it through the CLI to control the processing. It works exactly with the same abstractions (KStream and KTable), guarantees the same advantages as the Streams API (scalability, fault tolerance) and greatly simplifies working with streams.

It may not sound inspiring, but in practice it is very useful for testing the material. Moreover, this model allows you to join streaming processing even to those who are not involved in the development as such (for example, product owners). I recommend to watch a small introductory video - see for yourself how simple everything is here.

Alternative to stream processing

Kafka flows - the perfect combination of strength and simplicity. Perhaps, Kafka is the best tool for performing streaming tasks available on the market, and integrating with Kafka is much easier than with alternative tools for streaming processing ( Storm , Samza , Spark , Wallaroo ).

The problem with most other stream processing tools is that they are difficult to deploy (and difficult to handle). A batch framework, such as Spark, requires:

- Manage a large number of tasks on a pool of machines and effectively distribute them in a cluster.

- To do this, you need to dynamically package the code and physically deploy it to the nodes where it will be executed (plus configuration, libraries, etc.)

Unfortunately, when trying to solve all these problems within one framework, this framework turns out to be too invasive. The framework attempts to control all sorts of aspects of deploying, configuring code, monitoring it, and packaging the code.

Kafka Streams allows you to formulate your own deployment strategy when you need it, moreover, you can work with the tool to your taste: Kubernetes , Mesos , Nomad , Docker Swarm , etc.

Kafka Streams is designed primarily for you to organize stream processing in your application, however, without the operational difficulties associated with supporting the next cluster. The only potential drawback of such an instrument is its close connection with Kafka, however, in the current reality, when stream processing is mainly performed with the help of Kafka, this small drawback is not so terrible.

When should I use Kafka?

As mentioned above, Kafka allows you to pass a huge number of messages through a centralized environment, and then store them without worrying about performance and not fearing that data will be lost.

Thus, Kafka will be perfectly arranged in the very center of your system and will work as a link that ensures the interaction of all your applications. Kafka can be a central element of an event-oriented architecture, which will allow you to properly detach applications from each other.

Kafka allows you to easily distinguish between communication between different (micro) services. Working with Streams API, it has become easier than ever to write business logic that enriches data from the Kafka topic before services begin to consume them. This opens up tremendous opportunities - so I strongly recommend to study how Kafka is used in different companies.

Results

Apache Kafka is a distributed streaming platform that allows you to handle trillions of events per day. Kafka guarantees minimal delays, high throughput, provides fault tolerant pipelines operating on the “publish / subscribe” principle and allows to handle event streams.

In this article, we got acquainted with the basic semantics of Kafka (we learned what a generator, broker, consumer, topic is), learned about some optimization options (page cache), learned what fault tolerance Kafka guarantees in data replication and briefly discussed its powerful streaming capabilities.

Source: https://habr.com/ru/post/352978/

All Articles