Apache Ignite 2.4 BaselineTopology Concept

At the time of the appearance of the Ignite project in the Apache Software Foundation, it was positioned as a pure in-memory-solution: a distributed cache that picks up data from a traditional DBMS in memory in order to gain access time. But already in release 2.1, a module of built-in persistence ( Native Persistence ) appeared, which allows to classify Ignite as a full-fledged distributed database. Since then, Ignite has ceased to depend on external systems for ensuring persistent data storage, and the configuration and administration rake bundling, which users have repeatedly attacked, has disappeared.

However, the persistent mode raises its scripts and new questions. How to prevent intractable data conflicts in a split-brain situation? Can we refuse to rebalance the partitions, if the node output now does not mean that the data on it is lost? How to automate additional actions like cluster activation ? BaselineTopology to help us.

BaselineTopology: first acquaintance

Conceptually, a cluster in in-memory-mode is simple: there are no dedicated nodes, everyone has equal rights, you can assign a cache partition to each, send a computational task, or deploy a service. If the node leaves the topology, then user requests will be serviced by other nodes, and the data of the output node will no longer be available.

With the help of cluster groups and user attributes, the user can, based on the selected attributes, distribute the nodes into classes. However, restarting any node causes it to “forget” what was happening to it before restarting. The cache data will be re-queried in the cluster, the computational tasks will be re-executed, and the services will be re-deployed. Once the nodes do not store states, they are completely interchangeable.

In the persistence mode, the nodes retain their state even after a restart: during the start process, the node data is read from the disk, and its state is restored. Therefore, rebooting a node does not lead to the need to completely copy data from other nodes of the cluster (a process known as rebalancing): the data will be restored from the local disk at the time of the fall. This opens up opportunities for very attractive network interaction optimizations, and as a result, the performance of the entire cluster will increase. So, we need to somehow distinguish the set of nodes that are able to maintain their state after the restart, from all the others. This task serves BaselineTopology.

It is worth noting that the user can use persistent-caches simultaneously with in-memory-caches. The latter will continue to live the same life as before: consider all nodes equal and interchangeable, begin the redistribution of partitions when the nodes exit to maintain the number of copies of data - BaselineTopology will regulate only the behavior of persistent caches.

BaselineTopology (further BLT) at the topmost level is simply a collection of node identifiers that have been configured to store data. How to create a BLT in a cluster and manage it, we will understand a little later, but now let's see what is the use of this concept in real life.

Persisted data: no entry allowed

The problem of distributed systems, known as the split brain, is already complicated when using persistence becomes even more insidious.

A simple example: we have a cluster and a replicated cache.

The standard approach to ensuring fault tolerance is to maintain the redundancy of a resource. Ignite uses this approach to ensure reliable data storage: depending on the configuration, the cache can store more than one copy of the key on different nodes of the cluster. Replicated cache stores one copy on each node, partitioned - a fixed number of copies.

More details about this feature can be found in the documentation .

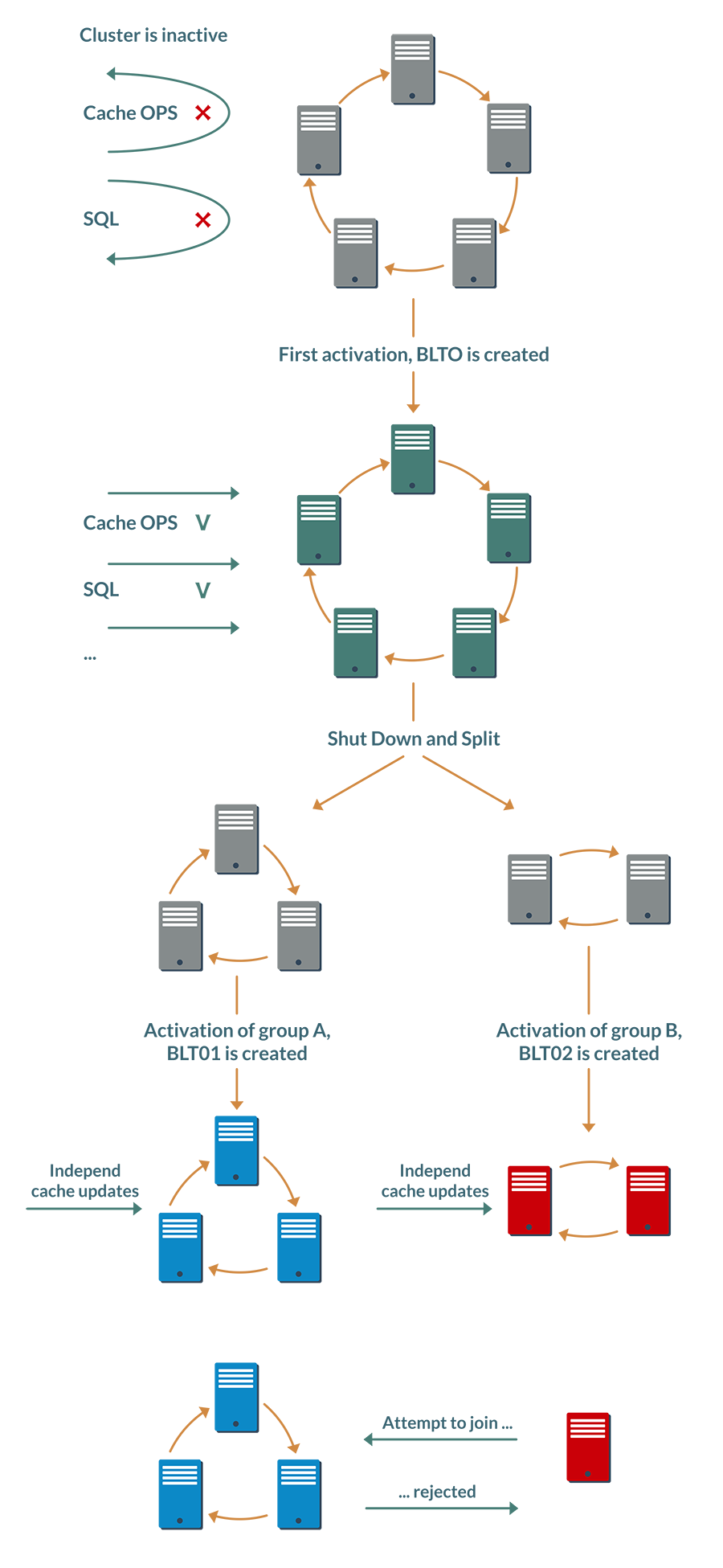

We will perform simple manipulations on it in the following sequence:

- Stop the cluster and start the node group A.

- Update any keys in the cache.

- Stop group A and run group B.

- Apply other updates for the same keys.

Once Ignite is working in database mode, then when the nodes of the second group of updates are stopped, they will not be lost: they will be available as soon as we start the second group again. So after restoring the original cluster state, different nodes may have different values for the same key.

Without much folly, just stopping and starting nodes, we were able to bring the data in a cluster into an indefinite state, which is automatically impossible to resolve.

Preventing this situation is one of the tasks of the BLT.

The idea is that in the persistence mode, the launch of the cluster goes through an additional stage, activation.

At the very first activation, the first BaselineTopology is created and stored on the disk, which contains information about all the nodes present in the cluster at the time of activation.

This information also includes a hash calculated on the basis of online site identifiers. If, upon subsequent activation, some nodes are missing in the topology (for example, the cluster rebooted and one node was brought up for maintenance), then the hash is recalculated, and the previous value is stored in the activation history within the same BLT.

Thus, BaselineTopology supports a chain of hashes that describe the composition of the cluster at the time of each activation.

At stages 1 and 3, after starting the node groups, the user will have to explicitly activate the incomplete cluster, and each online node will update the BLT locally, adding a new hash to it. All nodes of each group will be able to calculate the same hashes, but they will be different in different groups.

You could already guess what happens next. If a node tries to join a “foreign” group, it will be determined that the node is activated independently of the nodes of this group, and it will be denied access.

It is worth noting that this validation mechanism does not provide complete protection against conflicts in a Split-Brain situation. If the cluster is divided into two halves in such a way that at least one copy of the partition remains in each half, and the halves are not reactivated, the situation will occur when half of the conflicting changes in the same data occur. BLT does not refute the CAP-theorem , but protects against conflicts with obvious administrative errors.

Buns

In addition to preventing data conflicts, BLT allows you to implement a couple of optional, but pleasant options.

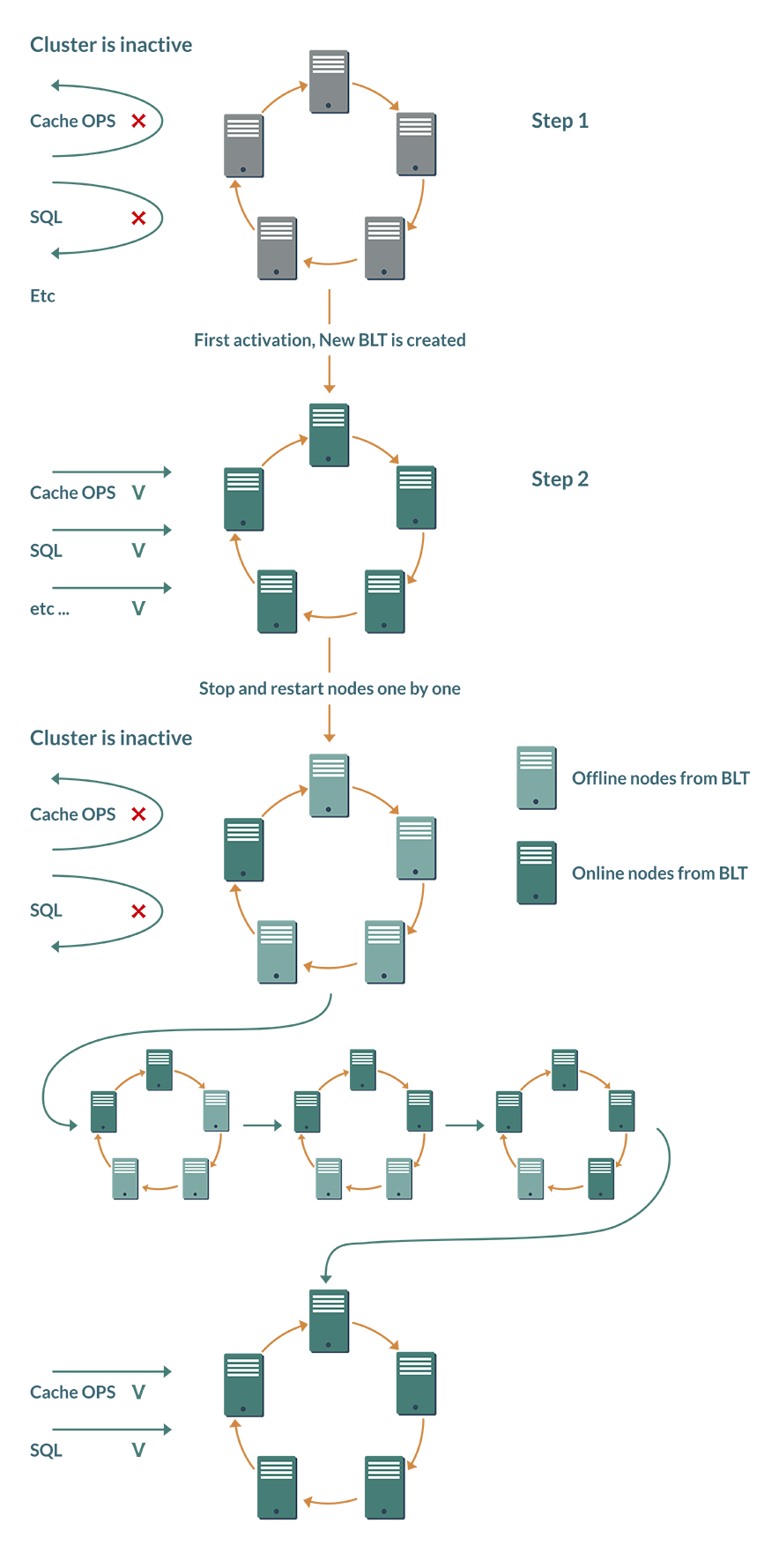

Bun №1 - minus one manual action. The activation already mentioned above had to be performed manually after each cluster reboot; out-of-the-box automation tools were missing. If there is a BLT, the cluster can independently decide on activation.

Although the Ignite cluster is an elastic system, and nodes can be added and displayed dynamically, BTL comes from the concept that in database mode, the user maintains a stable cluster composition.

When the cluster is first activated, the newly created BaselineTopology remembers which nodes should be present in the topology. After a reboot, each node checks the status of the other BLT nodes. Once all the nodes are online, the cluster is automatically activated.

Bun number 2 - savings on network interaction. The idea, again, is based on the assumption that the topology will remain stable for a long time. Previously, leaving a node from the topology even for 10 minutes led to the launch of rebalancing of cache partitions to maintain the number of backups. But why waste network resources and slow down the cluster if problems with a node are resolved in a matter of minutes, and it will be online again. BaselineTopology optimizes this behavior.

Now the cluster by default assumes that the problem node will soon be back in operation. Part of the caches during this time will work with fewer backups, but it will not lead to interruption or slowdown of the service.

Managing BaselineTopology

Well, we already know one way: BaselineTopology is automatically created when the cluster is first activated. In this case, all server nodes that were online at the time of activation will be included in BLT.

Manual BLT administration is performed using the control-script from the Ignite distribution, more about which can be found on the documentation page on cluster activation.

The script provides a very simple API and supports only three operations: adding a node, deleting a node and installing a new BaselineTopology.

Moreover, if adding nodes is a fairly simple operation without any special implications, then removing the active node from the BLT is a more subtle task. Its performance under the load is fraught with races, in the worst case - the hang of the entire cluster. Therefore, deletion is accompanied by an additional condition: the node to be deleted must be offline. If you try to delete an online node, the control script will return an error and the operation will not start.

Therefore, when deleting a node from the BLT, one manual operation is still required: stop the node. However, this usage scenario is clearly not the main one, so the additional labor costs are not too large.

The Java interface for managing BLT is even simpler and provides just one method to set BaselineTopology from the list of nodes.

An example of changing BaselineTopology using the Java API:

Ignite ignite = /* ... */; IgniteCluster cluster = ignite.cluster(); // BaselineTopology. Collection<BaselineNode> curBaselineTop = cluster.baselineTopology(); for (ClusterNode node : cluster.topology(cluster.currentTopologyVersion())) { // , BaselineTopology // (shouldAdd(ClusterNode) - ) if (shouldAdd(node) curTop.add(node); } // BaselineTopology cluster.setBaselineTopology(curTop); Conclusion

Ensuring data integrity is the most important task that any data warehouse should solve. In the case of distributed DBMS, to which Apache Ignite belongs, the solution of this problem becomes significantly more difficult.

The concept of BaselineTopology allows you to close the part of real-world scenarios in which the integrity of the data can be broken.

Another priority Ignite is performance, and here BLT also saves resources and improves the response time of the system.

The functionality of Native Persistence appeared in the project quite recently, and, no doubt, it will develop, become more reliable, more productive and more convenient to use. And along with it, the concept of BaselineTopology will develop.

')

Source: https://habr.com/ru/post/352916/

All Articles