Data Center for Technopark: from “Concrete” to Tier Facility Certification

During the construction of the technical support center for one of the largest Russian technology parks, I was responsible for the engineering infrastructure. The facility became the seventh in Russia to receive Tier Facility certification from the Uptime Institute, a reputable international body. In this photo post I will describe what it cost us, what solutions we used, and how the data center was tested for compliance with international standards.

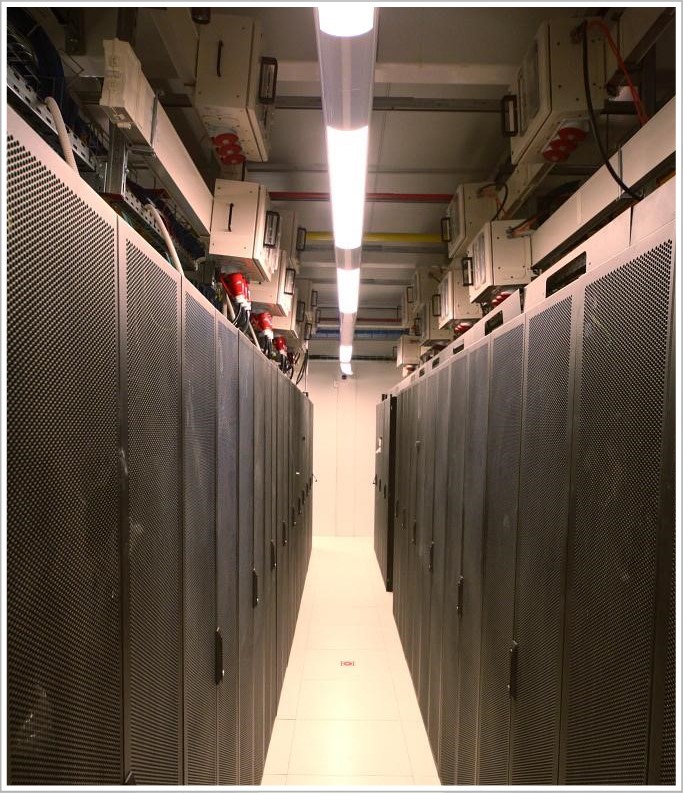

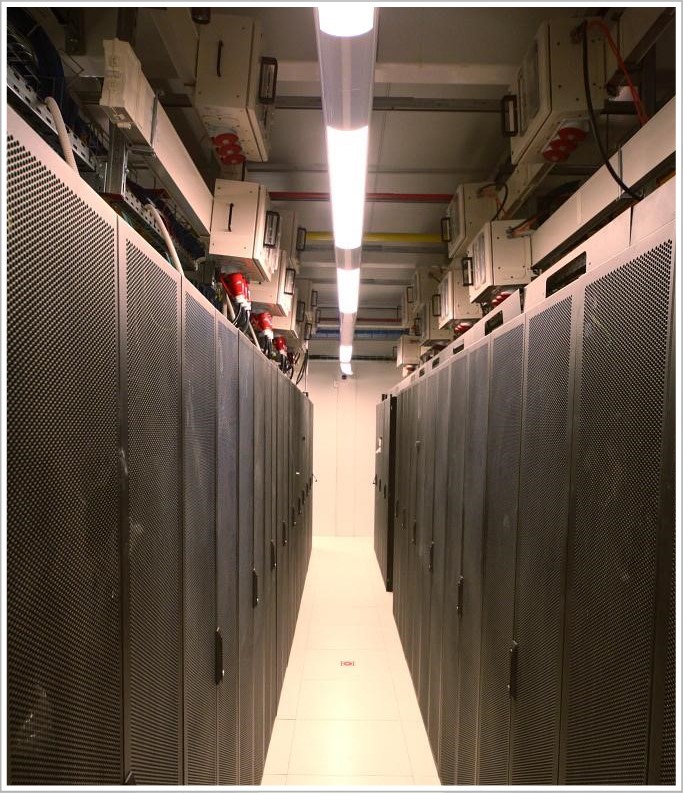

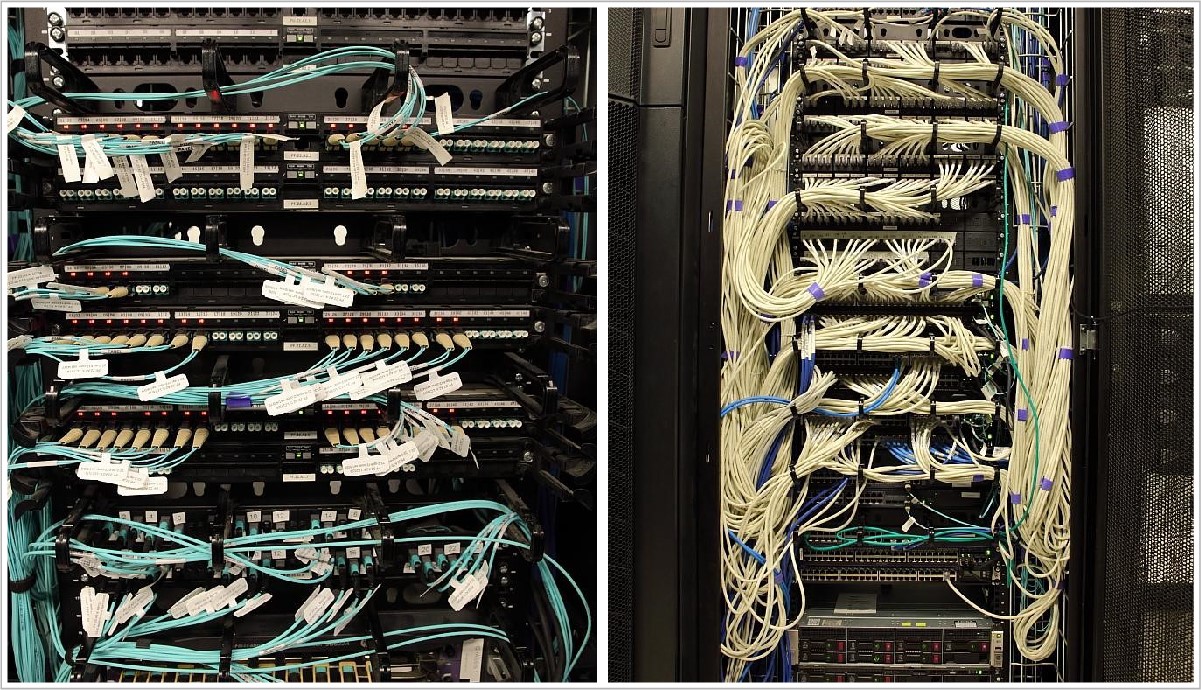

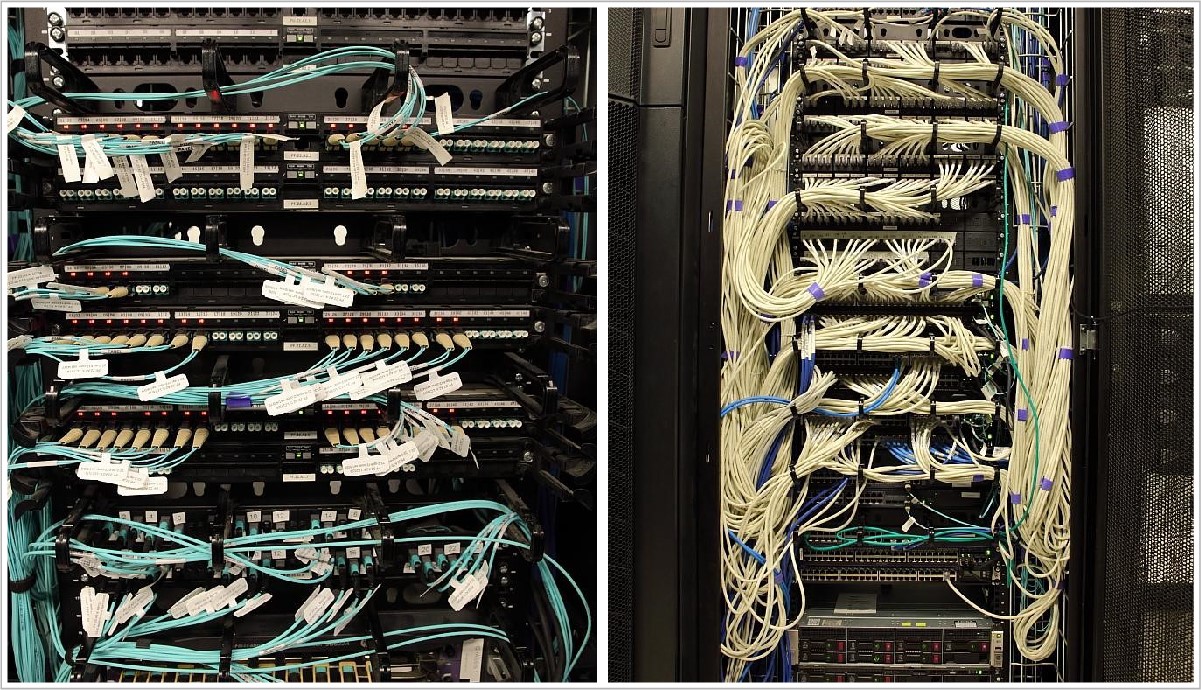

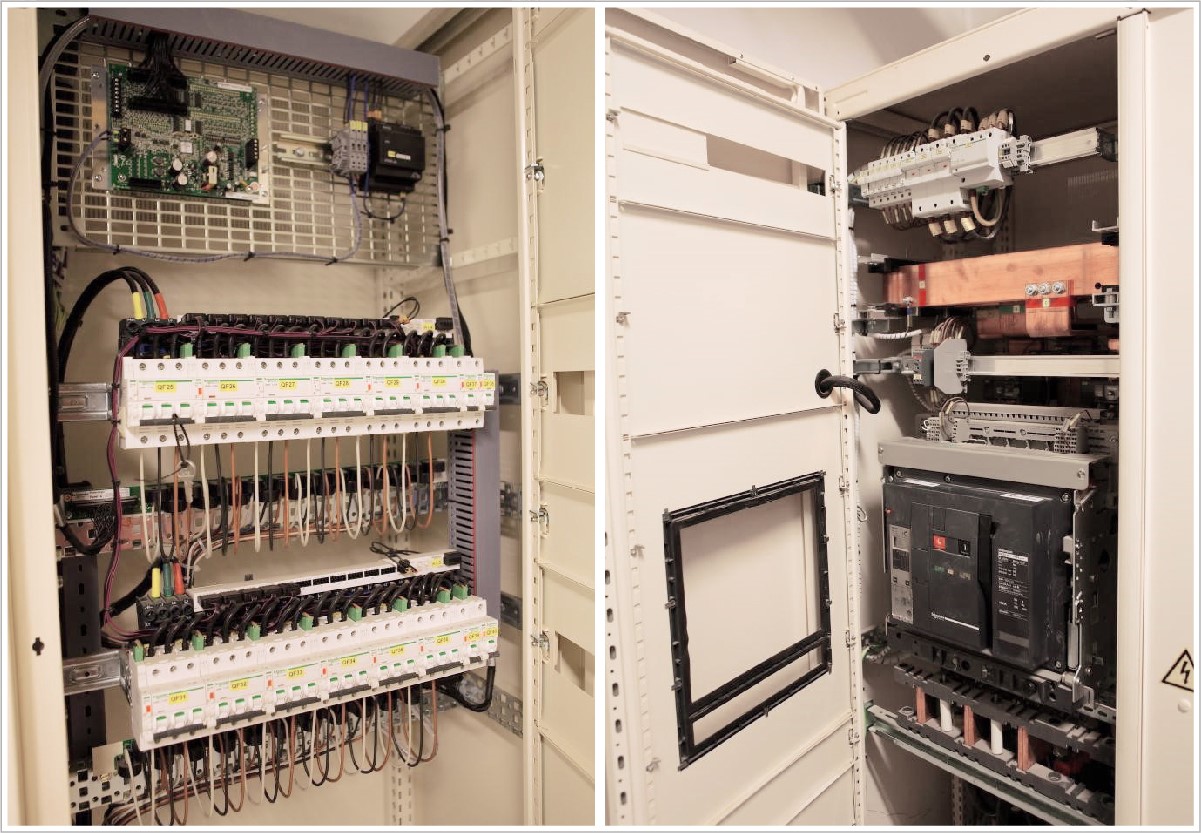

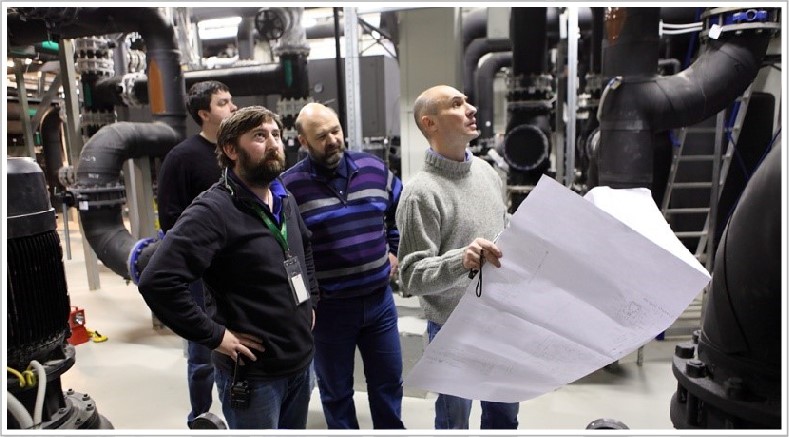

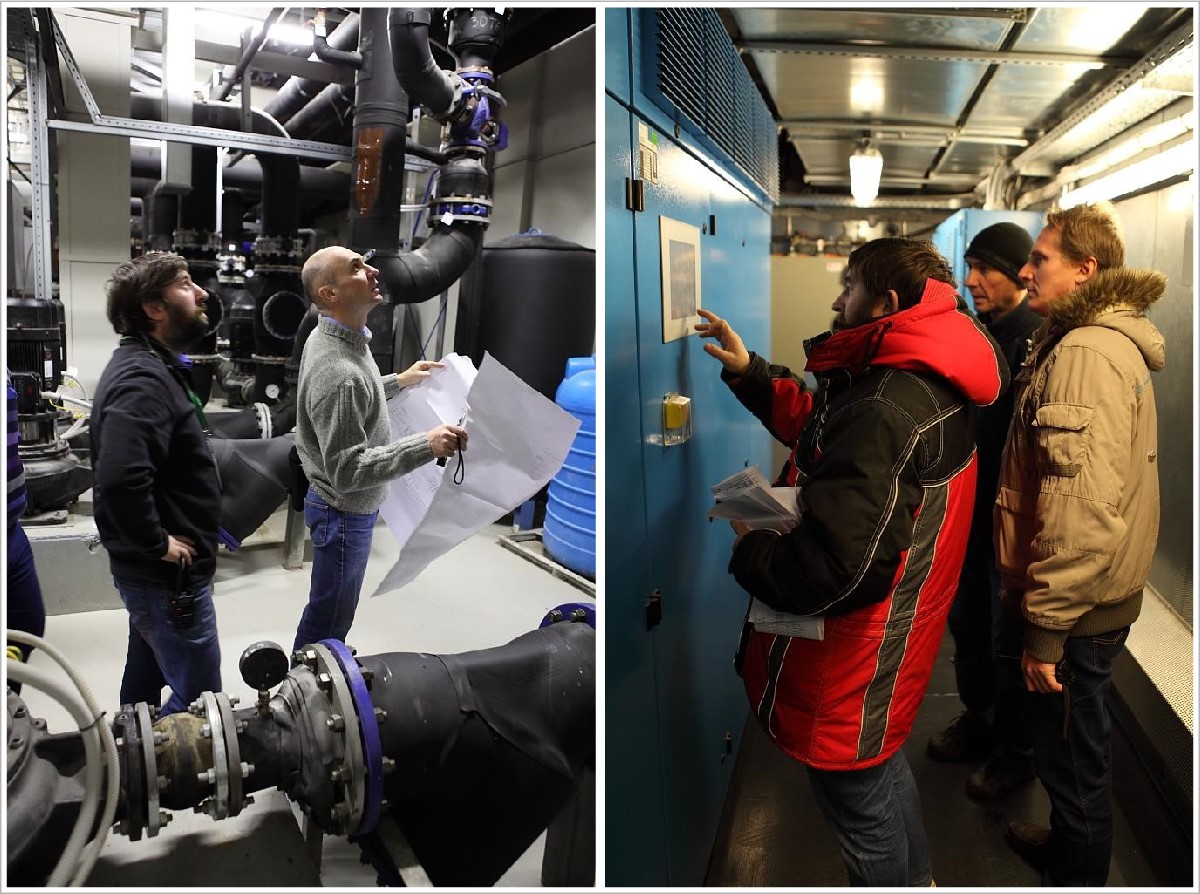

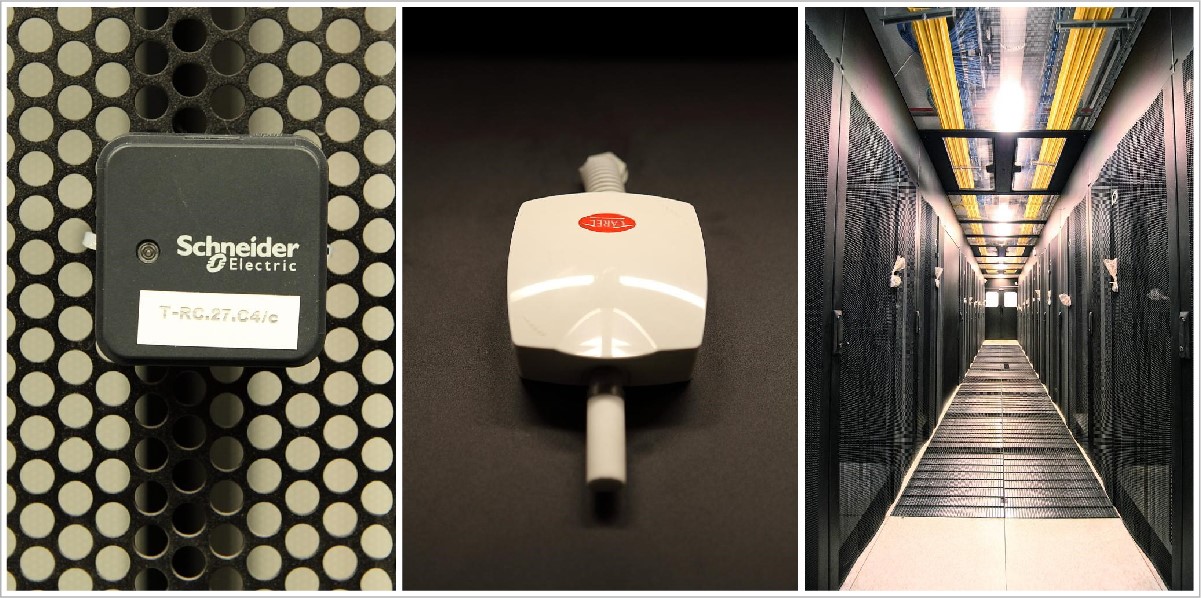

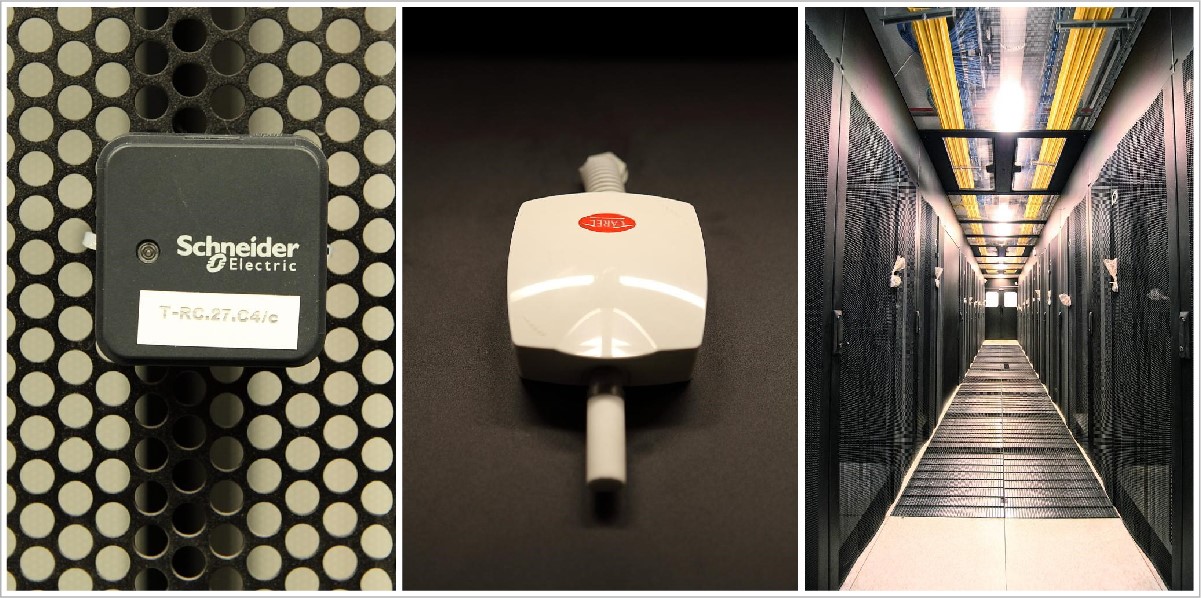

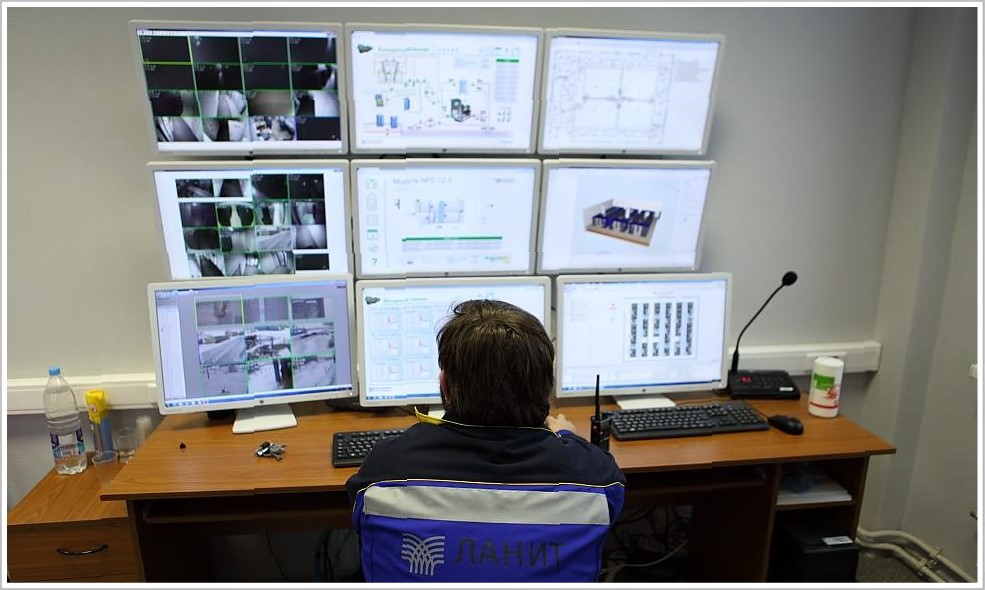

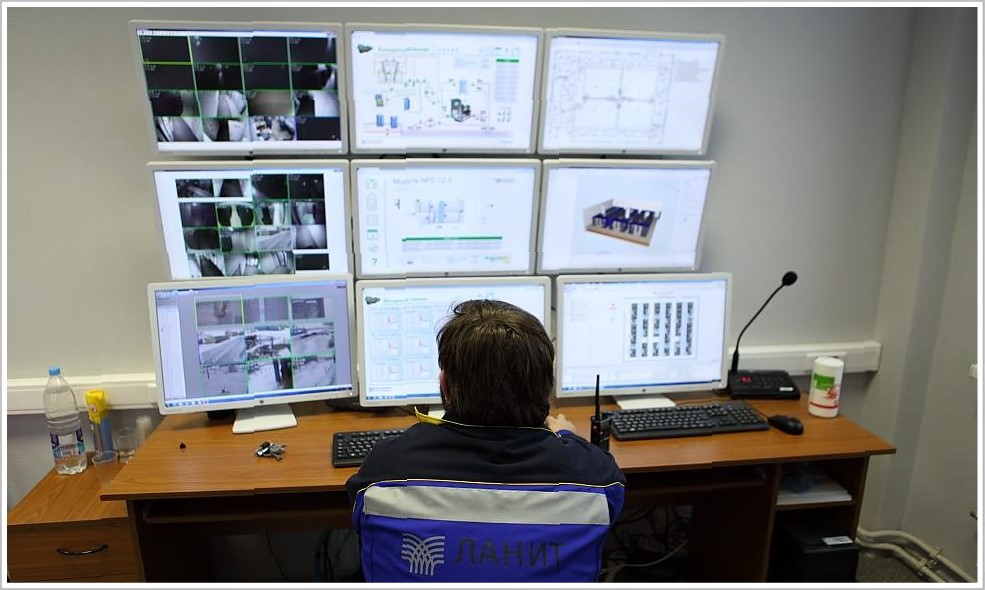

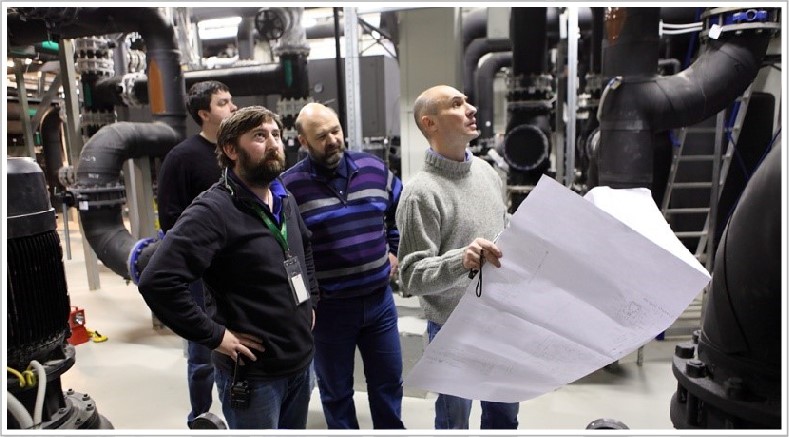

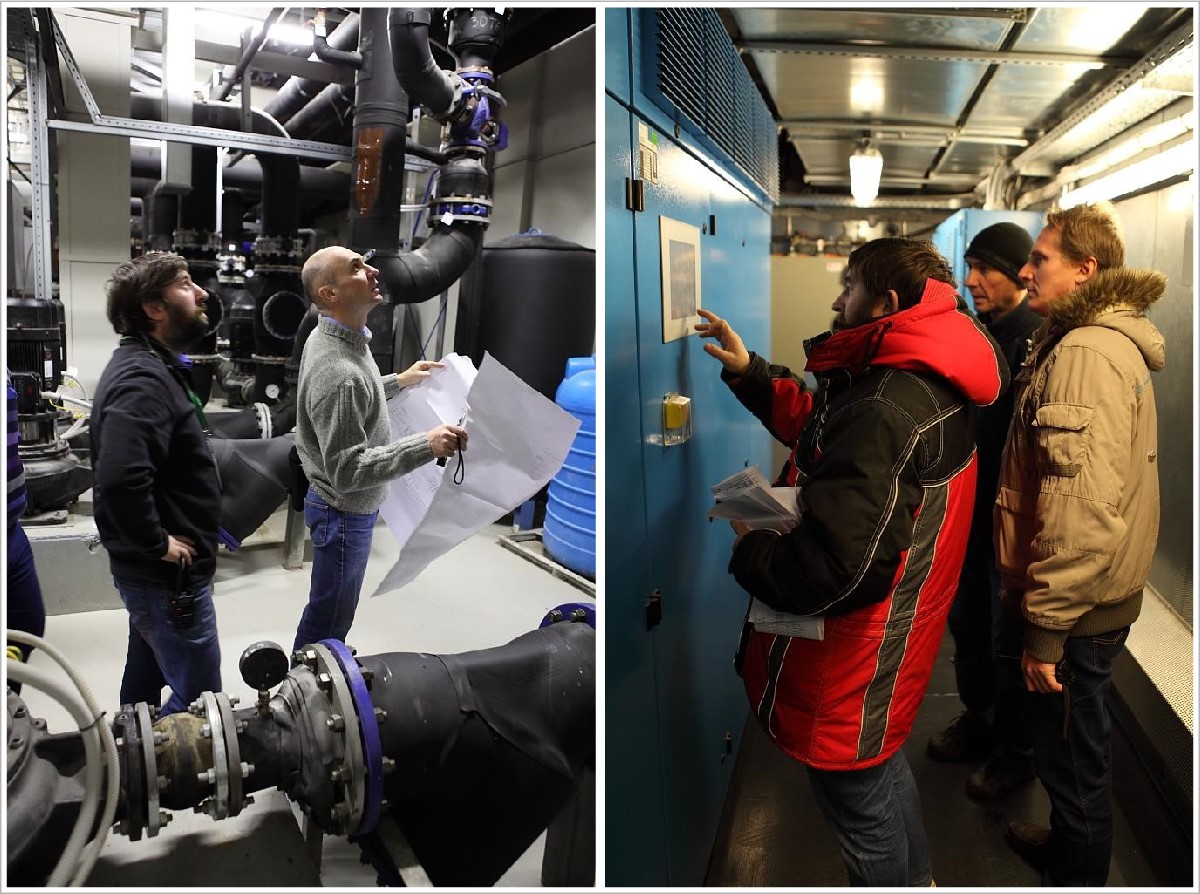

The Technical Support Center (TEC) of the Zhiguli Valley Technopark is a two-story building. It has six machine rooms with a total area of 843 sq. m and holds 326 racks with an average power of 7 kW each (the maximum load per rack is 20 kW). We, the specialists of LANIT-Integration, first saw it when it was still a bare concrete frame with no utilities. We had to create the entire engineering and network infrastructure, as well as part of the computing infrastructure for the automation of engineering systems.

To begin, I will say a little about the project as a whole, and then I will show what is inside.

The technical support center of the Zhiguli Valley had to house servers, storage systems and other equipment needed by residents of the Samara region's innovation center. Such equipment requires special operating conditions (power supply, temperature, humidity and so on), so it made economic sense to install it all in one place.

We surveyed the building, studied the documentation the customer had, and began developing the design documentation (which had to pass a state expert review). The biggest difficulty was that we were given only three months to develop both the design and the working documentation. In parallel with the working documentation, we had to prepare part of the documentation in English for review by the Uptime Institute.

As part of the project, we had to:

- make the data center energy efficient;

- build it in a short time;

- pass the Uptime Institute exam and get a Tier III certificate.

Because the density of engineering systems in a data center is very high and the deadlines were tight, we had to plan the work and the deliveries of materials and equipment in detail.

Again because of the tight deadlines, we decided to use high-tech prefabricated modular structures. With them we avoided additional construction work: we did not have to build fireproof partitions or heavy waterproofing. This solution protects the premises from water, fire, dust and other factors dangerous to IT equipment, and assembling a modular structure takes only 1-2 weeks.

After the project passed the expert review, we began developing the working documentation and ordering equipment. A note on the equipment: the project fell in a crisis year and there were difficulties with financing, so we had to align all the work with the financial plan and buy the expensive large-sized equipment first, since the manufacturer had fixed its price.

Changes in equipment delivery plans required even more careful planning. To organize the work of 100-150 people at the facility without downtime, the project team paid a lot of attention to change management and risk management procedures. We bet on communication: any information about problems and delays was passed almost immediately along the whole chain, from the team leaders to the project manager and the engineers at the company's head office.

The project team and purchasing managers worked in Moscow, and the core team worked directly at the technopark. Engineers had to travel to the site more than once to clarify and correct design decisions, and it is almost 1000 km by road from our Moscow office to the technopark. On site we set up a construction headquarters: regular meetings were held with the customer and the work managers, after which all updated information was passed to the engineering team.

We recruited most of the trade workers in the region (up to 150 people); specialists in narrow fields and engineers had to be sent on long business trips from Moscow.

In the end, everything worked out. I have saved the story of the main test, the Uptime Institute certification, for the very end. For now, let's see how everything is set up.

To get into the data center, you must have an RFID pass and be identified at the entrance. All doors in the building are also controlled, and some rooms admit an employee only after double identification.

Structured Cabling Systems

For the data network, we used HP FlexFabric and Juniper QFabric switches. Panduit's intelligent SCS with the PanView iQ physical infrastructure management system makes it possible to remotely monitor and automatically document the state of the physical network layer, and to manage the patch field intelligently.

The system can verify that equipment is connected correctly and detect abnormal connections to SCS ports. Pre-terminated cables make it possible to change the SCS configuration wherever required.
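The connection check described above can be pictured as a comparison between a documented patch plan and what is actually detected on the ports. The sketch below is only an illustration under that assumption: it does not use the PanView iQ API, and the dictionary format, port labels and device names are all hypothetical.

```python
from typing import Optional

# Hypothetical data format: patch-panel port -> device documented / detected on it.
PortMap = dict[str, str]


def find_mismatches(
    expected: PortMap, observed: PortMap
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return ports whose detected connection differs from the documented one."""
    mismatches = {}
    for port in sorted(expected.keys() | observed.keys()):
        want = expected.get(port)  # None: nothing documented on this port
        got = observed.get(port)   # None: nothing detected on this port
        if want != got:
            mismatches[port] = (want, got)
    return mismatches


if __name__ == "__main__":
    plan = {"A01": "srv-01", "A02": "srv-02", "A03": "storage-01"}
    seen = {"A01": "srv-01", "A02": "storage-01", "A04": "unknown"}
    for port, (want, got) in find_mismatches(plan, seen).items():
        print(f"{port}: expected {want}, detected {got}")
```

A missing connection and an undocumented one are reported the same way, as a pair in which one side is `None`.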

Data Center Power Supply

The TEC's own transformer substation houses 4 independent transformers, which supply electricity to the distribution equipment (IU1-4) of the TEC.

The total allocated power at the data center is 4.7 MW.
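To put the allocated power in context, it can be compared with the IT load implied by the rack figures given earlier in the article. The sketch below is simple arithmetic on those published numbers; the ratio it prints is not a measured PUE, only allocated site power divided by the average IT load.

```python
RACKS = 326            # from the article
AVG_RACK_KW = 7.0      # from the article
MAX_RACK_KW = 20.0     # from the article
ALLOCATED_KW = 4700.0  # 4.7 MW, from the article


def average_it_load_kw(racks: int = RACKS, avg_kw: float = AVG_RACK_KW) -> float:
    """IT load if every rack draws its average power."""
    return racks * avg_kw


def allocated_to_it_ratio(allocated_kw: float = ALLOCATED_KW) -> float:
    """Allocated site power divided by average IT load (not a measured PUE)."""
    return allocated_kw / average_it_load_kw()


def racks_at_max_within(budget_kw: float, max_kw: float = MAX_RACK_KW) -> int:
    """How many racks could run at the 20 kW maximum inside a given budget."""
    return int(budget_kw // max_kw)


if __name__ == "__main__":
    it_load = average_it_load_kw()
    print(f"average IT load: {it_load:.0f} kW")
    print(f"allocated / IT:  {allocated_to_it_ratio():.2f}")
    print(f"racks at 20 kW within that load: {racks_at_max_within(it_load)}")
```

The average IT load comes to 2282 kW, so the 4.7 MW allocation is roughly twice the IT load, leaving the remainder for cooling and other engineering systems.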

8 independent feeders run from the transformer substation to the IU DTE and, through the guaranteed power system based on diesel-rotary uninterruptible power supply units (DDIBP), feed 8 IGS of the data center, 4 IGN of the uninterruptible power supply system and 4 IGs of the guaranteed power supply system. The power supply system follows the 2N scheme: cables enter the data center building from two different sides and terminate in two physically separated switchboard rooms.

The 2N scheme provides power supply redundancy. Any feeder can be taken out of service for maintenance while the data center remains fully operational.
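The 2N property can be expressed as a simple check: each of the two supply sides must be able to carry the full load alone, so losing any single feeder still leaves one intact side. The sketch below is a toy model under stated assumptions: the feeder ratings (600 kW each) and the even 4 + 4 split between sides are hypothetical, and the 2282 kW load is 326 racks at the 7 kW average.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feeder:
    name: str
    side: str           # "A" or "B": one of the two independent supply paths
    capacity_kw: float  # hypothetical rating, not taken from the article


def side_capacity(feeders: list[Feeder], side: str) -> float:
    """Total capacity of all feeders on one side."""
    return sum(f.capacity_kw for f in feeders if f.side == side)


def is_2n(feeders: list[Feeder], load_kw: float) -> bool:
    """True if at least two sides exist and each can carry the full load alone."""
    sides = {f.side for f in feeders}
    return len(sides) >= 2 and all(
        side_capacity(feeders, s) >= load_kw for s in sides
    )


def survives_maintenance(feeders: list[Feeder], load_kw: float, out: str) -> bool:
    """True if some side still covers the load with feeder `out` removed."""
    remaining = [f for f in feeders if f.name != out]
    sides = {f.side for f in remaining}
    return any(side_capacity(remaining, s) >= load_kw for s in sides)


def example_feeders() -> list[Feeder]:
    """Eight hypothetical feeders, four per side, 600 kW each."""
    return [Feeder(f"{side}{i}", side, 600.0) for side in "AB" for i in range(1, 5)]


if __name__ == "__main__":
    feeders = example_feeders()
    load = 2282.0
    print("2N:", is_2n(feeders, load))
    print("any single feeder out:",
          all(survives_maintenance(feeders, load, f.name) for f in feeders))
```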

For uninterruptible and guaranteed power supply, we installed Piller diesel-dynamic uninterruptible power supplies. Each unit provides 1 MW of uninterruptible power and 650 kW of guaranteed power, which is enough to keep the data center running at full capacity.

If the mains supply fails, the rotor keeps spinning by inertia and the unit continues to generate current for a few more seconds. This short period is enough for the diesel engine to start and reach operating speed. As a result, the equipment does not stop for even a second.
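The "few more seconds" of ride-through follow from the kinetic energy stored in the rotating mass, E = ½·I·ω². The sketch below estimates how long a flywheel can carry a load while slowing from its nominal speed to a minimum usable speed. The moment of inertia and the speeds are hypothetical round numbers, not Piller specifications, and conversion losses are ignored.

```python
import math


def rpm_to_rad_s(rpm: float) -> float:
    """Convert revolutions per minute to angular velocity in rad/s."""
    return rpm * 2.0 * math.pi / 60.0


def usable_energy_j(inertia_kg_m2: float, rpm_start: float, rpm_min: float) -> float:
    """Kinetic energy released while slowing from rpm_start to rpm_min."""
    w_start = rpm_to_rad_s(rpm_start)
    w_min = rpm_to_rad_s(rpm_min)
    return 0.5 * inertia_kg_m2 * (w_start ** 2 - w_min ** 2)


def ride_through_s(inertia_kg_m2: float, rpm_start: float,
                   rpm_min: float, load_w: float) -> float:
    """Seconds the stored energy can carry a constant load (losses ignored)."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    return usable_energy_j(inertia_kg_m2, rpm_start, rpm_min) / load_w


if __name__ == "__main__":
    # Hypothetical rotor: 100 kg*m^2, 3000 -> 1500 rpm, carrying 1 MW.
    seconds = ride_through_s(100.0, 3000.0, 1500.0, 1_000_000.0)
    print(f"ride-through: {seconds:.1f} s")
```

With these illustrative values the rotor bridges a few seconds, which is the order of magnitude the article describes.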

The units are arranged in an N+1 scheme. At this output a diesel engine consumes a lot of fuel, more than 350 liters per hour, which required an additional external fuel store holding more than 100 tons of diesel fuel.
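The fuel figures translate into running time with a short calculation. The sketch below converts the 100-ton store into liters and divides by the hourly burn. The diesel density (0.84 kg/L) and the number of engines running at once are assumptions; the article gives only the per-engine consumption and the storage mass.

```python
DIESEL_DENSITY_KG_PER_L = 0.84  # assumption: typical diesel fuel density


def autonomy_hours(fuel_tonnes: float, burn_l_per_h: float, engines_running: int,
                   density_kg_per_l: float = DIESEL_DENSITY_KG_PER_L) -> float:
    """Hours of operation from a fuel store at a constant per-engine burn rate."""
    if engines_running <= 0 or burn_l_per_h <= 0:
        raise ValueError("engines_running and burn_l_per_h must be positive")
    litres = fuel_tonnes * 1000.0 / density_kg_per_l
    return litres / (burn_l_per_h * engines_running)


if __name__ == "__main__":
    for engines in (1, 2, 4):  # hypothetical numbers of engines running
        hours = autonomy_hours(100.0, 350.0, engines)
        print(f"{engines} engine(s): {hours:.0f} h")
```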

The data center building has two physically separated switchboard rooms. Each contains 2 VSU of the uninterruptible power system and 2 VSU of the guaranteed power system.

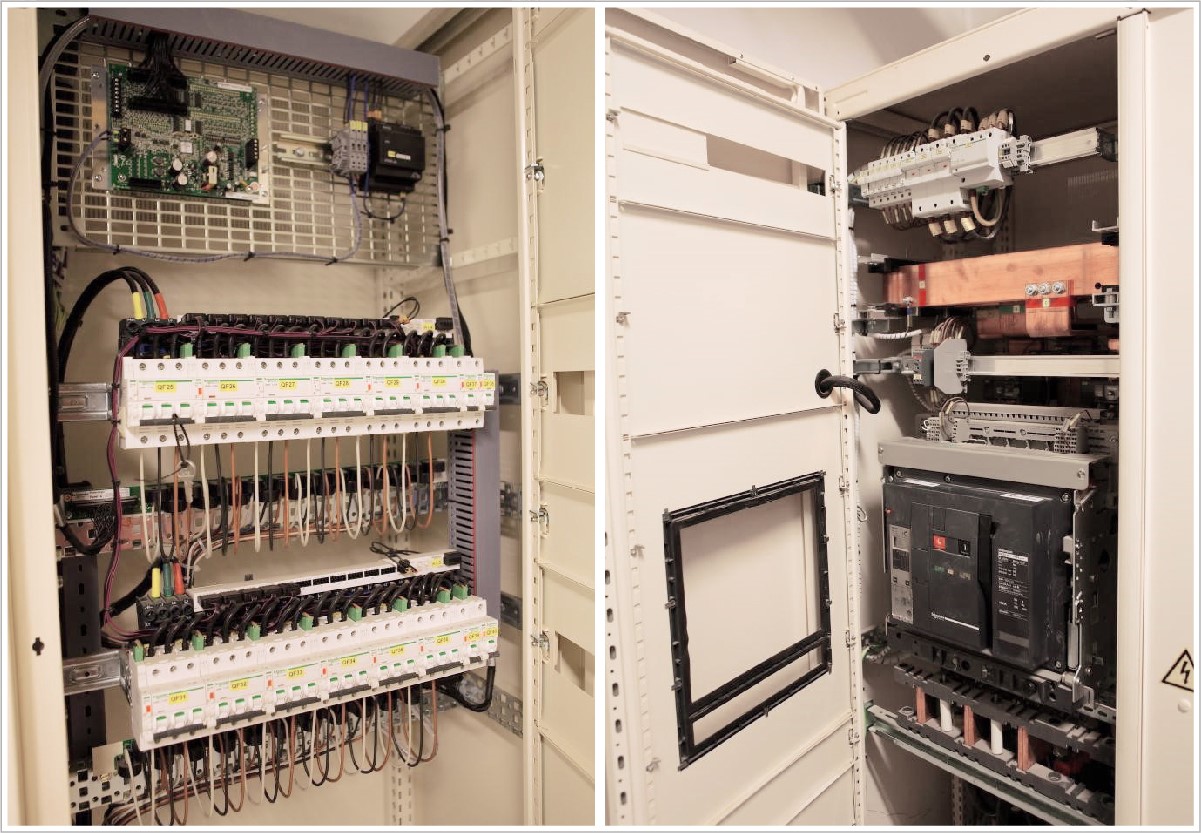

The dispatch system monitors the status of all circuit breakers. Process metering devices are also installed, which makes it possible to track electricity consumption on each supply line. To keep auxiliary systems running without interruption, a cabinet with a static electronic bypass is installed.

The distribution system is also monitored by the dispatch system, and each line is equipped with a process metering device.

In the small halls, distribution cabinets are installed; lines run from them to each server cabinet, which contains two power distribution units.

In the large halls, busbars are used to distribute electricity. Each circuit breaker in the tap-off boxes is also monitored by the dispatch system, which gives a complete, continuous picture of the data center power supply system.

Power distribution units are installed directly in the server cabinets for power distribution.

Cooling the machine rooms

On the ground floor, in the equipment and machine rooms of the TEC, the equipment is cooled by Emerson in-row precision air conditioners (previously purchased by the customer), with water chillers and drycoolers preparing the chilled water.

To cool the halls on the second floor (which carry the main load), we used air-to-air conditioners.

An innovative cooling system from AST Modular is deployed in the four machine rooms on the second floor; it includes 44 Natural Free Cooling modules that cool with outdoor air.

The system consists of two open circuits. Air from the machine room circulates in one of them, and cool outdoor air is fed into the other through the process ventilation system. Both streams pass through a recuperative heat exchanger, where heat is transferred between the indoor and outdoor air. As a result, the equipment can be cooled with outdoor air for up to 90% of the year, which cuts the cost of traditional active cooling with water chillers and drycoolers by 20–30%. In summer, the air is cooled with chilled water from the chillers.
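The "up to 90% of the year" figure is the kind of number that comes from counting the hours in which the outdoor air is cool enough to do the job. The sketch below shows that counting on a series of hourly temperatures. The 22 °C cut-off and the sample temperatures are hypothetical; the article does not state the actual threshold or climate data used.

```python
from typing import Iterable

HOURS_PER_YEAR = 8760


def free_cooling_fraction(hourly_temps_c: Iterable[float],
                          max_outdoor_c: float) -> float:
    """Share of hours in which outdoor air is cool enough for free cooling."""
    temps = list(hourly_temps_c)
    if not temps:
        raise ValueError("no temperature samples given")
    usable = sum(1 for t in temps if t <= max_outdoor_c)
    return usable / len(temps)


def hours_per_year(fraction: float) -> int:
    """Convert a fraction of the year into whole hours."""
    return round(fraction * HOURS_PER_YEAR)


if __name__ == "__main__":
    sample = [-10.0, 0.0, 10.0, 20.0, 30.0]  # hypothetical hourly readings
    share = free_cooling_fraction(sample, max_outdoor_c=22.0)
    print(f"free cooling share: {share:.0%}")
    print(f"90% of a year is {hours_per_year(0.9)} hours")
```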

By the start of the project we already had experience building similar cooling systems: we used Natural Free Cooling in the high-tech modular data center for VimpelCom in Yaroslavl. But every new project brings its own difficulties.

To coordinate the MNFC air conditioners with the air supply units (which condition the outdoor air and feed it into the technical corridor for the MNFC units), we had to derive a mathematical relationship between the air volume delivered by the supply units and the volume consumed by the MNFC air conditioners. The volume of air entering the technical corridor must not exceed a certain limit (so as not to choke the air conditioners' fans) and at the same time must be sufficient (so that the air in the corridor does not become rarefied).
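The two-sided constraint on corridor air volume can be illustrated with a tolerance band around the demand of the running MNFC units. The sketch below assumes the simplest possible relationship, demand proportional to the number of running units; the relationship the team actually derived is not given in the article, and the per-unit airflow and the 5% tolerance are hypothetical.

```python
def required_supply_m3h(units_running: int, per_unit_m3h: float) -> float:
    """Air demand of the running MNFC units (assumed proportional to their count)."""
    return units_running * per_unit_m3h


def classify_supply(supply_m3h: float, demand_m3h: float,
                    tolerance: float = 0.05) -> str:
    """Compare supplied air volume with demand inside a tolerance band."""
    lower = demand_m3h * (1.0 - tolerance)
    upper = demand_m3h * (1.0 + tolerance)
    if supply_m3h < lower:
        return "too_low"   # corridor air becomes rarefied
    if supply_m3h > upper:
        return "too_high"  # excess air chokes the air conditioner fans
    return "ok"


if __name__ == "__main__":
    demand = required_supply_m3h(units_running=11, per_unit_m3h=5000.0)
    for supply in (50000.0, 55000.0, 60000.0):
        print(f"{supply:.0f} m3/h -> {classify_supply(supply, demand)}")
```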

Ground floor cooling center and equipment on the roof

Chillers, pump groups, heat exchangers and other equipment are located in the refrigeration center (HC).

Water chillers and drycoolers supply chilled water to the Natural Free Cooling units on the second floor in the hot season and to the air conditioners on the ground floor all year round. In winter, the water is cooled through a heat exchanger in the HC and the cooling towers on the roof, so the chillers can stay off and electricity is saved.

The design called for a large amount of equipment on the roof of the TEC, so we practically had to build a third floor in the form of a hundred-ton frame and install about 60 tons of equipment on it: cooling towers up to 14 m long and powerful ventilation units taller than a person.

The cooling towers certainly made us sweat: try lifting a giant like that onto the roof! Because of the size of the equipment, a special beam had to be welded for unloading, and we used a 160-ton crane with a long boom; even that crane flexed in places.

Fire safety

For fire safety, the site has a fire alarm system, early fire detection (an aspiration system with sensors that analyze air composition), automatic gas fire extinguishing, and a gas removal system that clears residual gas after the extinguishing system has discharged.

The fire alarm system works as follows: fire sensors in the machine rooms, equipment rooms and auxiliary premises send signals to the central automatic fire alarm station, which in turn triggers the automatic gas fire extinguishing system, the fire alarm and the warning system, depending on the fire zone.
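The zone-dependent behaviour of the central station can be sketched as a lookup from zone type to the set of systems it starts. The zone table below is purely hypothetical (the article does not say which zones trigger which systems); it only illustrates the dispatch idea, including a cautious default for unknown zones.

```python
from enum import Enum


class Action(Enum):
    GAS_SUPPRESSION = "gas_suppression"
    FIRE_ALARM = "fire_alarm"
    WARNING = "warning"


# Hypothetical zone table: which systems the central station starts per zone type.
ZONE_ACTIONS: dict[str, tuple[Action, ...]] = {
    "machine_room": (Action.GAS_SUPPRESSION, Action.FIRE_ALARM, Action.WARNING),
    "equipment_room": (Action.GAS_SUPPRESSION, Action.FIRE_ALARM, Action.WARNING),
    "auxiliary": (Action.FIRE_ALARM, Action.WARNING),
}

# Assumed default for a zone type missing from the table: alarm and warn only.
DEFAULT_ACTIONS: tuple[Action, ...] = (Action.FIRE_ALARM, Action.WARNING)


def actions_for(zone_type: str) -> tuple[Action, ...]:
    """Return the systems to start when a sensor in this zone type reports fire."""
    return ZONE_ACTIONS.get(zone_type, DEFAULT_ACTIONS)


if __name__ == "__main__":
    for zone in ("machine_room", "auxiliary", "corridor"):
        names = ", ".join(a.value for a in actions_for(zone))
        print(f"{zone}: {names}")
```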

The system is built on ESMI (Schneider Electric) equipment. It covers all fire safety tasks, and its status is displayed on the operator’s workstation in the control room.

Data center automation and dispatching system

Most data centers have only local automation with a minimal set of monitoring functions. In our case, all subsystems are integrated into a single technical solution with a common monitoring system. The control system monitors the status of more than 10 systems and displays more than 20 thousand parameters. Want to know the temperature and humidity in the machine rooms, or the electricity consumption of each IT rack? No problem.

The monitoring and dispatching system combines a SCADA system with a data center monitoring and management system. The SCADA (Supervisory Control And Data Acquisition) system makes it possible to view data and control the automation of installed equipment: chillers, air conditioners, diesel-rotary UPS units, ventilation, pumps and so on.

The data center resource accounting system keeps complete records of all equipment installed in the racks. It displays alarm messages and equipment status data in real time, visualized on a detailed layout plan. Thanks to this level of detail, we can see any device located in the data center.
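At its core, turning thousands of monitored parameters into alarm messages is a comparison of each reading with its allowed range. The sketch below shows that step only; it is not the API of the SCADA or monitoring products used on the project, and the parameter names and limits are hypothetical.

```python
from typing import NamedTuple


class Limit(NamedTuple):
    low: float
    high: float


def find_alarms(readings: dict[str, float], limits: dict[str, Limit]) -> list[str]:
    """Return one message per reading that falls outside its configured limits."""
    alarms = []
    for name in sorted(readings):
        limit = limits.get(name)
        if limit is None:
            continue  # parameter is displayed but has no alarm limits configured
        value = readings[name]
        if value < limit.low:
            alarms.append(f"{name}: {value} below {limit.low}")
        elif value > limit.high:
            alarms.append(f"{name}: {value} above {limit.high}")
    return alarms


if __name__ == "__main__":
    limits = {
        "hall1.temp_c": Limit(18.0, 27.0),        # hypothetical limits
        "hall1.humidity_pct": Limit(20.0, 80.0),
    }
    readings = {
        "hall1.temp_c": 29.5,
        "hall1.humidity_pct": 45.0,
        "rack17.power_kw": 6.2,
    }
    for message in find_alarms(readings, limits):
        print(message)
```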

The system can simulate emergencies and give recommendations for resolving problems, and it also helps plan and optimize the use of the data center's technical resources.

It also monitors and controls the ventilation systems (including the temperature of the air they supply, since they take part in cooling the IT equipment). All parameters of the air conditioners, ventilation systems and cooling systems are taken into account.

The system monitors the status of the equipment and controls it (along with various valves). It also provides additional protection of the equipment against critical operating modes.

The system monitors the status of all the main protection devices in electrical cabinets, as well as the power consumption on these lines.

The system keeps records of the equipment installed in IT racks and stores information about its components. Data from the fire protection and building security systems also flows into it.

The building is managed using solutions built on Schneider Electric equipment. The automated system for dispatching, monitoring and managing the engineering systems delivers all the necessary information to the dispatcher's workstation.

The systems are interconnected through a dedicated process network segment. The software runs on virtual machines, and data backup is provided.

A centralized Schneider Electric solution was also installed to ensure security, video surveillance and access control in the data center. It provides the necessary information to the data center dispatching system.

All the equipment I have described is assembled in a fault-tolerant configuration and housed in modular Smart Shelter rooms, which reliably protect the TEC from fire, moisture, vibration and other external influences. This solution shortened the project. On average, it took two weeks to assemble one room.

Through fire, water and ... the Uptime Institute exam

To pass the exam for compliance with the Tier III Design and Tier III Facility standards, we had to prepare all the documentation according to Uptime Institute requirements, get it certified, and then install and commission all systems in accordance with that documentation. In the final stage, the data center systems were tested for Tier III Facility compliance. That is about 50 tests!

Preparing for the tests was not easy: while documentation certification is well understood, information on how the facility itself is certified was extremely hard to find (at that time, the Zhiguli Valley data center was only the seventh in Russia to undergo certification).

A lot of effort went into an internal audit (load tests had been carried out for the customer beforehand). For two weeks we checked everything item by item against the project documentation and the tests received from the Uptime Institute, and still, of course, we were nervous during certification.

When the Uptime Institute experts arrived at the data center and began inspecting the halls, I drew an important conclusion for the future: the team should have one leader who handles communication with the "examiners". Who that person will be should be decided when the team is formed. We tried to do just that, and yet during testing some of our employees tried to take the initiative. The Uptime Institute representatives noticed the side discussions and immediately asked additional questions.

The first day was introductory: the inspector examined the data center for compliance with project documentation.

The next day, for a key Uptime Institute test, the data center had to be put under full load, both thermal and electrical. The load was simulated by specially installed fan heaters rated at 30-100 kW.
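A rough sense of the scale of such a test comes from dividing the target load by the heater rating. The sketch below assumes that "full load" means every rack at its 7 kW average (326 × 7 kW = 2282 kW), which the article does not state explicitly, and that all heaters share a single rating from the 30-100 kW range.

```python
import math

RACKS = 326        # from the article
AVG_RACK_KW = 7.0  # from the article


def heaters_needed(target_kw: float, heater_kw: float) -> int:
    """Smallest number of identical heaters whose total rating covers the target."""
    if heater_kw <= 0:
        raise ValueError("heater_kw must be positive")
    return math.ceil(target_kw / heater_kw)


if __name__ == "__main__":
    target = RACKS * AVG_RACK_KW  # assumed definition of full load
    for rating in (30.0, 100.0):
        print(f"{rating:.0f} kW heaters: {heaters_needed(target, rating)}")
```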

For some tests the equipment was put into a critical mode, and there were fears that the systems would not cope. To protect the equipment from possible consequences (better safe than sorry), we invited engineers from the equipment manufacturers.

Fred Dickerman, one of the inspectors of the Uptime Institute, observed everything that was happening from the control room in real time. The automated dispatching control system and a variety of sensors made it possible to monitor the current state of the equipment and the temperature change in the machine rooms.

The certification results showed that we had chosen the right technical solutions from the start and had installed and commissioned everything correctly. According to the experts, this data center was the only one in Russia to pass Uptime Institute testing without any remarks.

And finally - a video about our project in the "Zhiguli Valley"

Source: https://habr.com/ru/post/352824/