To steal in 1100 seconds - the strangest bug that I saw

Two days ago, I received a strange message from a client: the video call mysteriously broke off after exactly 18 minutes. And then it happened again, also after the 18th minute. Coincidence?

This bug was not only strange, but terribly awkward. Our goal is to make a video call tool so simple that a conditional doctor or psychologist would like to use our service. Need I say that a permanent gap after 18 minutes is inconsistent with this goal?

WebRTC is when you always deal with bugs.

Bugs are not a phenomenon for us. Our product is based on WebRTC, a fairly new web standard that allows two browsers to directly exchange data (for example, video and audio in real time).

In September 2017, WebRTC was finally supported in all major browsers . In recent months, we snatched our fair share of bugs , while we made WebRTC work for everyone. (Working with advanced technologies is a good way to get familiar with how to write bug reports to browser makers).

')

However, this last bug was the strangest in my 5 years of working with WebRTC.

Tools for catching bugs WebRTC

Fortunately, we have a lot of bug catching tools (thanks to some cool contributors). It is naive to think that someone will dump the chrome: // webrtc-internals and send it to you along with a script that is easy to reproduce.

Sample output based on WebRTC dump . Green lines indicate a successful connection, the red line indicates a connection failure.

One of the best tools is rtcstats , which gives output from getStats and stores it in regular files (along with other useful tokens).

Bug confirmation

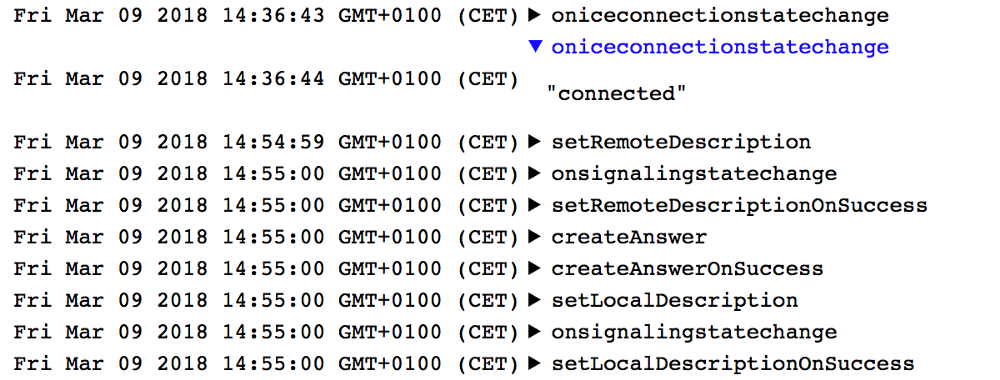

We quickly found the required files from two failed calls. These were the video calls between Chrome 64 and Edge 16. Chrome fell at 18 minutes 20 seconds. The subsequent restart of ICE also failed, which automatically closed RTCPeerConnection.

The riddle is that Edge did not agree with Chrome - on the Edge side, iceConnectionState remained connected until the ICE restart, the browser broke the connection, but the ICE restart failed. What was it?

Screen from Edge, look at the connected timestamps and get a description of iceRestart, between them about 18 minutes 20 seconds. Moreover, iceConnectionStateChange has not even changed to failed!

Reproduce bug: magic number “18 minutes 20 seconds”

A couple of months before we bought a cheap laptop with Windows to run Edge 16 and see the bugs in action. We made a video call between this laptop and MacBook with Chrome 64.

And then we waited. And of course, exactly after 18 minutes and 20 seconds, the ICE status changes to failed, the ICE restarts and the connection is broken. We have never seen anything like it, it was a shock.

What to do next? Where to begin? In our code, there was no magic timer that would break connections after 18 minutes 20 seconds in some browsers. It all seemed insurmountable, but we could only do one thing.

The team is blissfully unaware of the bugs that await it. Picture taken in Alesund, Norway, during a working trip.

One step at a time - methodical localization of the bug

We found a playable script, but still had to reduce the search area. A series of tests was supposed to test our assumptions.

First, will this happen between two Chrome browsers, which usually have the least problems with WebRTC? On the same laptops, with the same initial conditions, we checked the call from Chrome to Chrome. After 18 minutes 20 seconds, the call was still active, the ICE connection status remained stable. That is, the bug did not happen.

However, when testing from Firefox to the Edge, the call again failed.

The main question: is it a bug in Edge or a bug in our code? The appear.in service is also built on WebRTC and much has been done in our product in the same way, because half of our team used to work in appear.in. We decided to test the call from Chrome to Edge through this platform in peer-to-peer mode (free of charge), all on the same machines. Not a single break!

We did not know to cry or laugh. The scope of the search has narrowed and indicated an error in our code. Confrere could not work for a bunch of chrome-edge.

When expectations fall

Since each test took 20 minutes, we decided that we should pause and continue from home.

For the sake of superfluous verification, we tested Chrome-Edge from home. What a surprise it was when, after 40 minutes of the test, the call was still active. What?!

We checked again and again. Chrome logged that the office connection did this: relay <-> stun. This means that one shoulder of the call must go through our servers (relayed), while the second shoulder can send data directly (STUN). This is not uncommon for modern network configurations.

The RTCStats dump shows the connection status of each ICE candidate pair. Look at the very top: the local address is in relay status, and the remote address is in stun status.

Due to the specific network configuration in our office, my machine running Chrome was connected via cable to allow direct connections. A laptop with an Edge clung over a very unstable Wi-Fi, and used a relay-server. However, in the home network, the parties to the call were connected through local candidates, that is, they did not leave the home network.

IceTransportPolicy to the rescue!

The next step is to determine why the test was unsuccessful in the office, but now it’s working. Could the reason be in stun or relay candidates? Fortunately, we can force PeerConnection to use only relay candidates — you need to set the appropriate value for iceTransportPolicy when PeerConnection is created. And of course, after 18 minutes and 20 seconds the call is terminated.

Was it a coincidence that the Chrome-Edge bundle worked in appear.in? Maybe our connection through appear.in used STUN, but TURN won in our service? A quick test showed that it was our mistake, since the forced relay ( TURN ) for appear.in did not cause any bugs.

Finally, we tried something else. We collected a special version of Confrere and, with the permission of appear.in, used their TURN servers for one test. After 25 minutes, we concluded that the code in our client application was fine (because this code did not provoke a bug when using other TURN servers).

When each test takes at least 18 minutes, it requires patience. On the left I, on the right - Svein (CEO)

Logs rush to the rescue!

When we localized the problem - our configuration of the TURN servers was to blame - could we fix everything? Not. We still did not know what exactly caused this bug. Our TURN infrastructure is mostly based on what we learned over 4 years of work in appear.in, it uses the same open source components, works in the same cloud and with the same configuration.

When you are at a loss and do not know where to go next, you can always refer to the logs . Logging was for us the main tool in the search for a bug, because it allows us to monitor the running system in real time. Launching a new video call, we closely followed the logs.

Somewhere on the 10-minute mark, messages such as:

turnserver: 1054: session 000000000000000061: realm <confrere.com> user <confrere:1520714985>: incoming packet message processed, error 438: Stale nonce When the call ended after 18 minutes and 20 seconds, we looked through the logs again and found the first message mentioning Stale nonce :

turnserver: 1053: session 000000000000000034: refreshed, realm=<confrere.com>, username=<redacted>, lifetime=600 turnserver: 1053: session 000000000000000034: realm <confrere.com> user <redacted>: incoming packet REFRESH processed, success turnserver: 1053: handle_udp_packet: New UDP endpoint: local addr <redacted>:443, remote addr <redacted>:56031 turnserver: 1053: session 000000000000000061: realm <confrere.com> user <redacted>: incoming packet message processed, error 438: Wrong nonce turnserver: 1053: session 000000000000000061: realm <confrere.com> user <redacted>: incoming packet message processed, error 438: Stale nonce Why are there so many messages about Stale nonce ? What is this one-time code (nonce) and how can it be overdue (stale)?

Understand the bug: Hi, “stale nonce”

If you, like me, did not know beforehand what a one-time code is (nonce):

Nonce (from nonce - “number that can only be used once” is a number that can be used once) in cryptography is a one-time code, selected randomly or pseudo-randomly, which is used to securely transmit the main password, preventing replaying attacks .- Wikipedia

And if this number is overdue:

Stale nonce is more of a warning than an error. For SIP, your authorization data is encrypted in SIP headers. To prevent this data from being intercepted by other people and make calls at your expense, a one-time code (nonce) is used.- from the Internet

The SIP RFC standard requires that nonce be changed periodically. If the client uses the old nonce, then it is “stale nonce”. In this case, the client must use the current nonce instead of the old one. Such a message means that the client is trying to use stale nonce, that is, either a replay attack occurs, or the client has failed to receive a new nonce

Confrere uses coturn open source software, it manages TURN servers. If you read the documentation carefully, you will see that there is a parameter in the configuration for stale nonce:

# Uncomment if extra security is desired, # with nonce value having limited lifetime. # By default, the nonce value is unique for a session, # and has unlimited lifetime. # Set this option to limit the nonce lifetime. # It defaults to 600 secs (10 min) if no value is provided. After that delay, # the client will get 438 error and will have to re-authenticate itself. # #stale-nonce=600 In our configuration, we turned on the stale-nonce and everything worked flawlessly until the client base with the Edge browser started to grow.

The logs of the stale nonce were familiar to Philip Hanke , and he also helped us understand what was happening. Shijun Sung reported on a similar issue in May 2017 that allowed appear.in to avoid problems.

We had the opportunity to change the configuration of the TURN server and remove the stale-nonce flag so that nonce has no time limit.

50 minutes in the test environment - and we were finally able to say: “We found a bug!”

And yet, why 18 minutes 20 seconds?

You must have noticed the title of this post. Hijack in 1100 seconds. I wrote “18 minutes 20 seconds” in this text, but do you know how much it is in seconds? 1100

We know that after 600 seconds from the beginning of the conversation, the initial nonce becomes invalid. There is a message stale nonce and after another 500 seconds the connection is broken. Where do another 500 seconds come from?

If you look at the number 500 in the coturn sorts , then something interesting will be found.

It looks like a built-in sleep timer 500 seconds after some kind of connection check. I’m not too familiar with the coturn internal device, but apparently, if the nonce becomes invalid, then after 500 seconds, the coturn will stop transmitting the packets to another member. Of course, another participant will see that the connection is broken (after all, he no longer receives packets).

This explains what is happening on the Chrome side, as it stops receiving packets. But why Edge does not notice this?

The story of two packages

Connections in WebRTC can be of two types: UDP and TCP. UDP packets do not require acknowledgment of receipt, delivery is not guaranteed and they may arrive in the wrong order. In real-time communications, this is not a big problem, since codecs do a pretty good job with packet loss.

TCP is different. TCP is very useful when you need to deliver all the data to the other side, in the correct order and with delivery confirmation. Most WebRTC traffic is UDP .

In our history, Edge sent UDP packets through a TURN server to the other side . When the WebRTC server stopped sending packets, Edge could not know if Chrome was receiving packets or not , so Edge continued to happily send data. On the other hand, Chrome behaved correctly and sent packets via STUN. So when iceRestart was happening, Edge did not know what to do and just fell silently .

Results

The conclusions are as follows: we will send a bug report to the Edge bug tracker and for a while we will deploy new TURN servers without the stale-nonce flag. New servers must ensure that in the future there will be no failures, and our users will receive long and high-quality video calls in our service .

I am also extremely happy to be part of a community of people who willingly share knowledge and help each other. From browser developers who responded to our bug reports and fixed bugs, to individual contributors who make an excellent and free toolkit for the WebRTC community.

Special thanks to Philip Hanke for pointing out the stale-nonce flag and enduring my endless lamentations when it all seemed nonsense. In addition, I express my gratitude to Shijun Sun for having discovered this problem in May 2017.

We hope this story was helpful to you. The author of the original article can be contacted via Twitter or mail , and I am ready to share our experience using WebRTC in the comments!

Source: https://habr.com/ru/post/352730/

All Articles