Rumors about the cancellation of the Kotelnikov theorem are greatly exaggerated

tl;dr:

Scientists from Columbia University, led by Ken Shepard and Rafa Yuste, claim to have circumvented the century-old sampling theorem (also known as the Nyquist-Shannon theorem or, in Russian-language literature, the Kotelnikov theorem): 1, 2. According to them, anti-aliasing filters are now optional, because the noise from aliased spectra can be recovered after sampling. Sounds crazy? Yes. I offer $1000 to the first person who proves that it is not. To claim the reward, be sure to read to the end.

“Filter before sampling!”

This mantra has been drilled into the heads of generations of engineering students. Here, "sampling" means converting a continuous function of time into a series of discrete values. This happens wherever a computer digitizes a signal from the real analog world. To “filter” means to remove high-frequency components from the signal. Since this step takes place in the analog world, it requires real analog hardware: circuits of resistors, capacitors and amplifiers. Building such a circuit can be tedious and costly, for example when space on the chip is scarce. Shepard's research team ran into this limitation in the context of a device for recording signals from nerve cells.

Now the authors state that they have invented a “data acquisition paradigm that does not require anti-aliasing filters for each channel, thereby overcoming the scaling limitations of existing systems.” In effect, they say that the hardware circuits can be replaced by software that runs on the digital side, after sampling. “Another advantage of this approach to data acquisition is that all steps of signal processing (channel separation and removal) are implemented in digital form,” the paper says.

If this were true, it would be a discovery of the first importance. Not only would it overturn conventional wisdom that has held for almost a century, it would also make a great deal of hardware unnecessary. Anti-aliasing filters are used throughout electronics. Your cell phone contains several digital radio chips and an analog-to-digital audio converter, and between them about half a dozen anti-aliasing filter chips. If all these components could be replaced with a few lines of code, manufacturers would gladly do it. So this is potentially a billion-dollar idea.

Unfortunately, this is all a big mistake. I will show that these papers do nothing to shake the sampling theorem. They do not undo aliasing through post-processing. They do not make analog filters before digitizing unnecessary. And they do not even come close to the best systems for extracting neural signals from noise.

Why aliasing is bad

[skip this part if you know the answer]

Sampling is the crucial stage of data acquisition, in which a continuous signal is turned into a discrete series of numbers. Most often the continuous signal is a voltage. It is sampled and digitized at regular intervals by an electronic circuit called an “analog-to-digital converter” (ADC). At first glance, sampling loses information. A continuous voltage curve contains “infinitely many” points, and after sampling we keep only some of them, so the infinitely many points in between are lost. This is where the central statement of the sampling theorem comes in: under appropriate conditions, the individual samples contain all the information needed to perfectly reconstruct the continuous voltage function that produced them. In that case, sampling loses no information.

The appropriate conditions are simple: the input signal must change slowly enough that its shape is captured well by the sequence of discrete samples. Specifically, the signal must have no sinusoidal components at frequencies above the so-called Nyquist frequency, which equals half the sampling rate:

$$f_{\text{Nyquist}} = \frac{1}{2} f_{\text{sample}} \tag{1}$$

For example, if the ADC takes 10,000 samples per second, the input signal must not contain frequencies above 5000 Hz. What happens if the input signal changes faster? Those frequency components cannot be reconstructed from the samples, because their origin is completely ambiguous (Fig. 1).

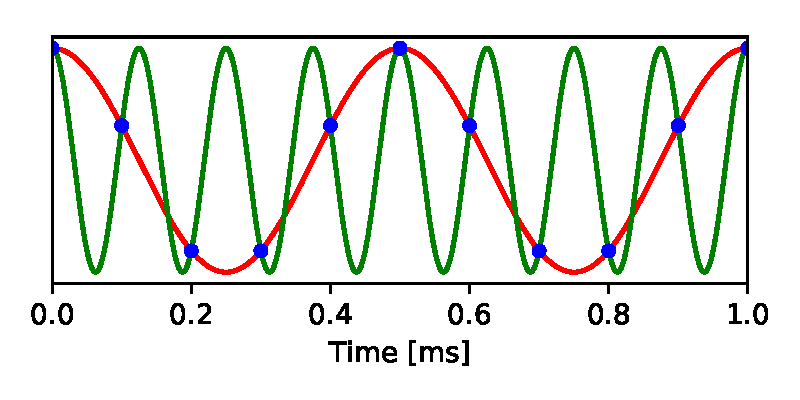

Fig. 1. Aliasing. A 2 kHz sine wave (red) is sampled at 10 kS/s (blue dots). An 8 kHz sine wave produces exactly the same sample sequence. Thus, after sampling it is impossible to tell whether the original signal was at 2 kHz or at 8 kHz.

The same set of samples can come from a signal below the Nyquist frequency and from any number of possible signals above it. This phenomenon is called aliasing: in sampled form, a high-frequency signal can masquerade as a low-frequency one. If there are no constraints on the incoming signal, aliasing causes a loss of information, because the original signal can no longer be reconstructed from the discrete samples.
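The ambiguity in Fig. 1 is easy to check numerically. The sketch below is only an illustration, not code from the article's notebook; the helper name `sample_cosine` is made up for this example. It uses cosines so that the two sequences match sample for sample; with sines, the 8 kHz wave would need its sign flipped to produce the same samples.

```python
import math

F_SAMPLE = 10_000.0  # samples per second, as in Fig. 1


def sample_cosine(freq_hz, n_samples, f_sample=F_SAMPLE):
    """Return n_samples of cos(2*pi*f*t) taken at times t = n / f_sample."""
    return [math.cos(2.0 * math.pi * freq_hz * n / f_sample) for n in range(n_samples)]


low = sample_cosine(2_000.0, 50)   # below the 5 kHz Nyquist frequency
high = sample_cosine(8_000.0, 50)  # above it: 8 kHz = 10 kHz - 2 kHz

# The two sample sequences agree up to floating-point rounding.
max_diff = max(abs(a - b) for a, b in zip(low, high))
print(f"largest difference between the two sample sequences: {max_diff:.2e}")
```

Once the samples are all you have, nothing distinguishes the 2 kHz source from the 8 kHz one.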

How can aliasing be avoided? Simply remove the frequency components above the Nyquist frequency from the analog signal before sampling. Most often this is done with an electronic low-pass filter, which passes low frequencies and cuts off high ones. Because such filters are so widely used, a great deal of engineering effort has gone into improving them. As a result, the usual approach to designing a data acquisition system looks like this (Fig. 2):

1. Determine the bandwidth of the signal you want to record, namely the highest frequency you will need to reconstruct later. This is the Nyquist frequency f_Nyquist of your system.

2. Pass the signal through a low-pass filter that cuts off all frequencies above f_Nyquist.

3. Sample the filtered signal at the rate f_sample = 2 f_Nyquist.

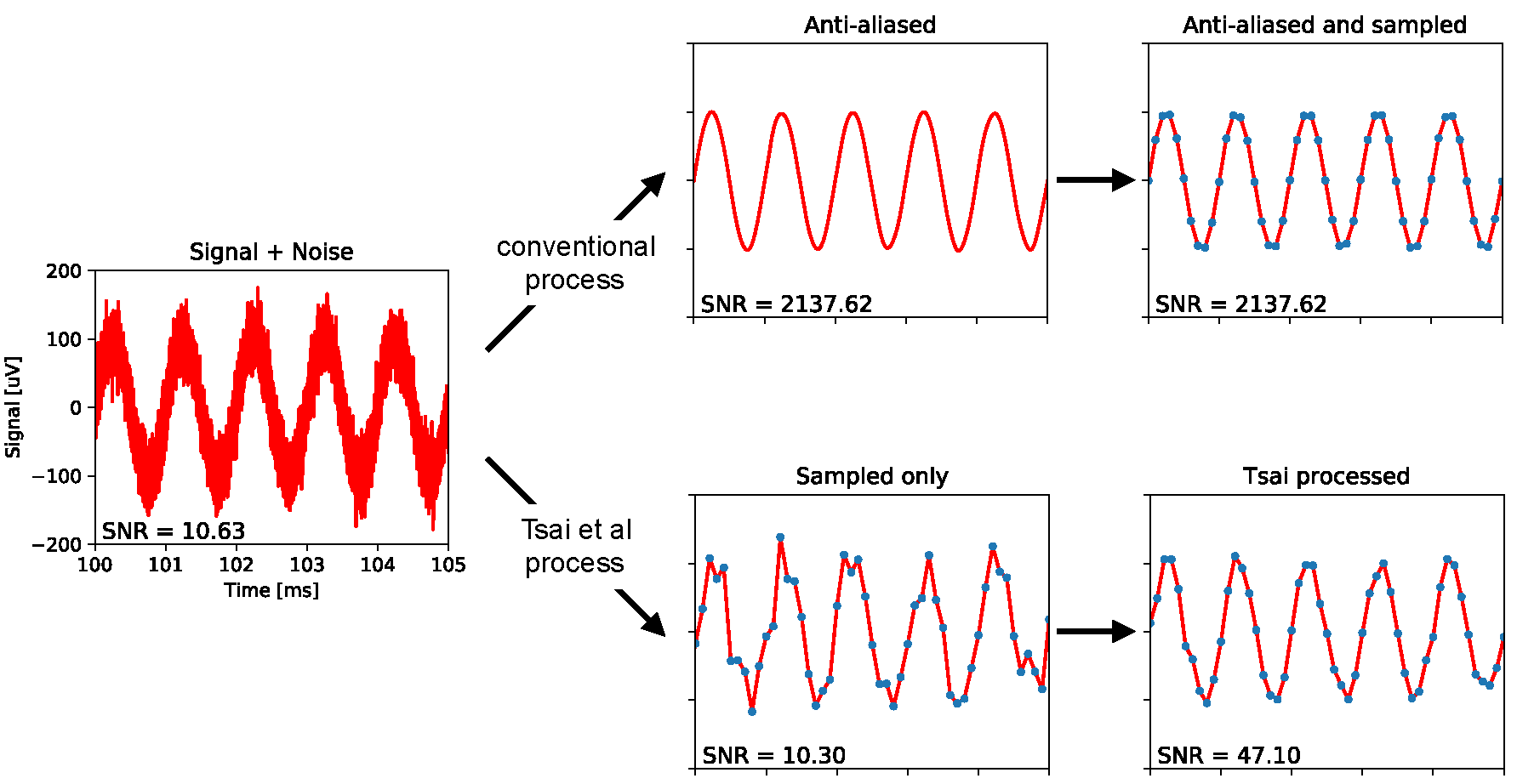

Fig. 2. Comparison of conventional data acquisition with the procedure described by Tsai et al. Left: a 1 kHz sine wave with a peak-to-peak amplitude of 200 µV, as in the test recordings from the paper, plus white Gaussian noise with a bandwidth of 1 MHz and a root-mean-square amplitude of 21.7 µV. Top: the standard approach, with an anti-aliasing filter that has a cutoff frequency of 5 kHz. The filter removes most of the noise and thus improves the signal-to-noise ratio (SNR) by a factor of 200. The signal is then sampled at 10 kS/s. Bottom: in the paper by Tsai et al., the signal is sampled directly, at the same low SNR. Digital processing follows, which increases the SNR slightly. All data and processing are simulated with this code.

What Tsai et al. claim

Now consider the specific situation in the paper by David Tsai et al. They want to record electrical signals from neurons, which requires a bandwidth of 5 kHz.

So they chose a sampling rate of 10 kS/s. Unfortunately, the neural signal is corrupted by broadband thermal noise, an inevitable by-product of the recording. The noise spectrum extends to 1 MHz, with a typical root-mean-square (rms) amplitude of 21.7 µV. The authors approximate it as white Gaussian noise, that is, noise with a constant power density up to the cutoff at 1 MHz. (Sources for these figures: p. 5 and Fig. 11 of the first article; pp. 2, 5, 9 and Fig. 2e in the appendix of the second article.)

The standard procedure would be to pass signal and noise through an analog anti-aliasing filter with a cutoff frequency of 5 kHz (Fig. 2). This leaves the neural signal intact while reducing the noise power by a factor of 200, because the noise spectrum is cut from a 1 MHz bandwidth down to only 5 kHz. The remaining noise below the Nyquist frequency then has an rms amplitude of just 21.7 µV / √200 = 1.53 µV. This amount of thermal noise is completely harmless; it is less than the noise from other sources in the experiment. The signal is then sampled at 10 kS/s.

Instead, the authors omit the filter entirely and sample the wideband signal plus noise directly. Their article explains in detail the technical constraints, chiefly the lack of space on the silicon device, that led them to drop the filter. But as a result, all the noise power up to 1 MHz ends up in the sampled signal through aliasing. In effect, every sample is contaminated with Gaussian noise of 21.7 µV rms amplitude. This noise level is unacceptable, because we want to resolve neural signals of the same or lower amplitude. For reference, some popular systems for multi-neuron recording have a noise level of no more than 4 µV.
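The two options just described can be compared in a small simulation. This is a rough sketch under stated assumptions, not the author's notebook code: the 1 MHz white noise is represented by independent Gaussian samples at 2 MS/s, and the analog anti-aliasing filter is replaced by a crude stand-in that averages each block of 200 wideband samples (a boxcar filter whose equivalent noise bandwidth is 5 kHz). A real analog filter has a different frequency response, but the noise reduction by √200 comes out the same.

```python
import math
import random

NOISE_RMS_UV = 21.7  # wideband noise rms from the text, in microvolts
RATIO = 200          # 2 MS/s wideband rate divided by the 10 kS/s sampling rate
N_OUT = 2_000        # number of 10 kS/s output samples to simulate


def rms(values):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


rng = random.Random(0)
wideband = [rng.gauss(0.0, NOISE_RMS_UV) for _ in range(RATIO * N_OUT)]

# No filter: keep every 200th wideband sample, as in direct sampling.
unfiltered = wideband[::RATIO]

# Stand-in for the filter (an assumption): average each block of 200 samples,
# then keep one value per block.
filtered = [sum(wideband[i:i + RATIO]) / RATIO for i in range(0, len(wideband), RATIO)]

rms_unfiltered = rms(unfiltered)
rms_filtered = rms(filtered)
rms_predicted = NOISE_RMS_UV / math.sqrt(RATIO)

print(f"noise rms without filter: {rms_unfiltered:.2f} uV")
print(f"noise rms with filter:    {rms_filtered:.2f} uV (predicted {rms_predicted:.2f} uV)")
```

Without the filter, the full wideband noise amplitude lands in every sample; with it, the noise drops to roughly the predicted 1.53 µV.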

Here Tsai and his colleagues present a striking "innovation": they say that a clever data processing algorithm can be applied after sampling to reconstruct the original broadband noise, which is then subtracted from the sampled data to leave a clean signal. Here are the relevant quotes, in addition to those above: "We can digitally recover the spectral contribution from high-frequency thermal noise and then remove it from the sparsely sampled data, thereby minimizing the effects of aliasing, without using anti-aliasing filters on each channel." And another: "We present multiplexing architectures without anti-aliasing filters on each channel. The sparsely sampled data are recovered using a compressed sensing strategy that includes statistical reconstruction and removal of the undersampled thermal noise."

What evidence do they present for this claim? Only one experiment tests the noise removal on real data. The authors recorded a 1 kHz sinusoidal test signal with a peak-to-peak amplitude of 200 µV, then applied their processing scheme to "remove the thermal noise." The output signal looks cleaner than the input (Fig. 2e of the paper; see also the simulation in Fig. 2). Quantitatively, the noise was reduced from an rms amplitude of 21.7 µV to 10.02 µV. That is a rather modest improvement in the signal-to-noise ratio (SNR), by a factor of 4.7 (more on this below). A proper anti-aliasing filter before sampling, or a system that truly removed the aliased noise after sampling, would increase the SNR by a factor of 200. (For the results cited here, see Fig. 2e-f of the second article and Fig. 7 of the first article.)
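The factor of 4.7 follows from the two rms noise values if the SNR is taken as a power ratio and the signal amplitude is assumed to be unchanged by the processing (an assumption made here for the arithmetic):

$$\frac{\mathrm{SNR}_{\text{out}}}{\mathrm{SNR}_{\text{in}}} = \left(\frac{21.7\ \mu\text{V}}{10.02\ \mu\text{V}}\right)^{2} \approx 4.7$$

By the same measure, cutting the noise bandwidth from 1 MHz to 5 kHz reduces the noise power, and so raises the SNR, by a factor of 1 MHz / 5 kHz = 200.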

Why the scheme of Tsai et al. cannot work in principle

Before analyzing the data processing scheme proposed in these papers in detail, it is worth considering why it cannot work in principle. Most importantly: if it worked, it would overturn the sampling theorem, which generations of engineering students have been taught. The white noise in these experiments is sampled at a rate 200 times lower than the one that would allow it to be reconstructed according to the theorem. So why did Tsai and his colleagues think the scheme could work?

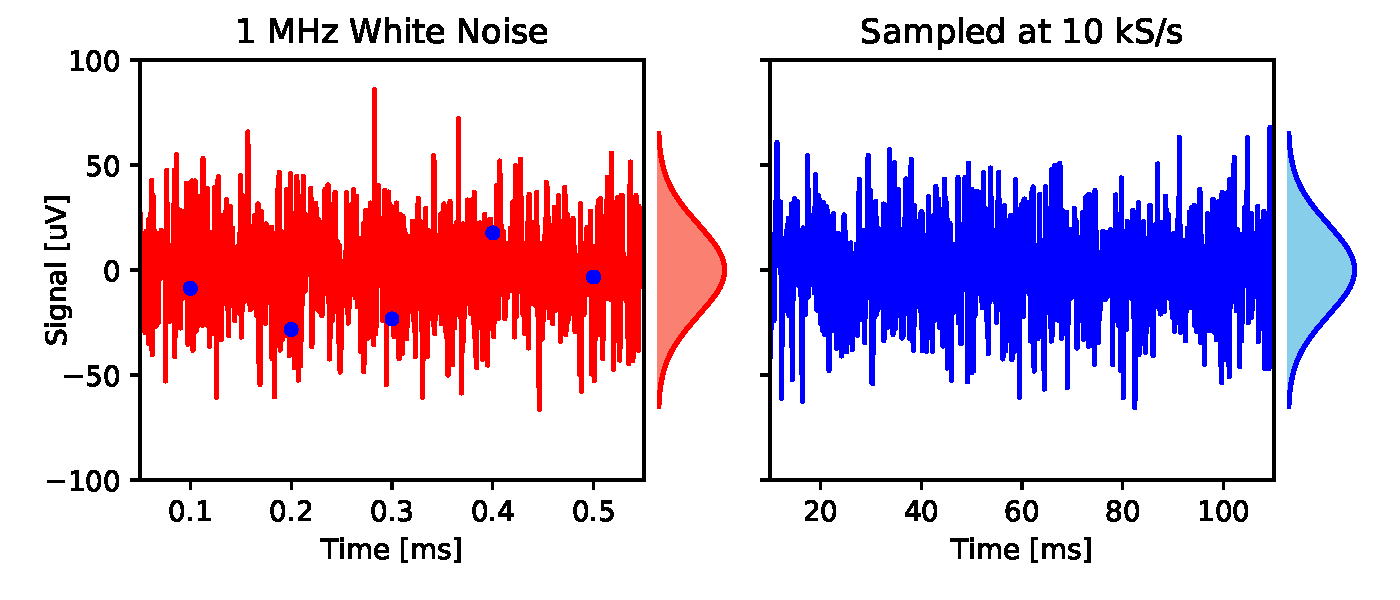

The authors claim that the aliased high-frequency noise retains a certain signature that allows it to be removed afterwards: "Using the above characteristics, we can digitally recover the spectral contribution from high-frequency thermal noise and then remove it from the sparsely sampled data, thereby minimizing the effects of aliasing." It is hard to see what these characteristics might be. This is easiest to assess in the time domain. White Gaussian noise in a 1 MHz band can be represented by a time series of independent samples from an identical Gaussian distribution at a rate of 2 million samples per second. Now take this time series and subsample it to 10 thousand samples per second, i.e. keep every 200th of the original samples. You again get independent, identically distributed samples, i.e. white Gaussian noise at 10 kS/s. Nothing in this series of samples indicates that it came from a high-bandwidth noise source. It could just as well be properly sampled white Gaussian noise with a 5 kHz bandwidth.

Fig. 3. Subsampling white Gaussian noise. Left: white Gaussian noise (red line) with an rms amplitude of 21.7 µV and a 1 MHz bandwidth, sampled at 10 kS/s (blue dots). Right: the sampled signal on a time axis stretched 200-fold. This is also white Gaussian noise with the same amplitude distribution (shown in the margin of the plot), but with a bandwidth of 5 kHz.
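The time-domain argument can be checked with a few lines of code. The following is an illustrative sketch, not the author's notebook: it generates independent Gaussian samples at the wideband rate, keeps every 200th one, and looks for any remaining structure in the form of correlations between neighboring samples. The helper `autocorr` is written out for this example.

```python
import math
import random

NOISE_RMS_UV = 21.7  # rms amplitude of the wideband noise, in microvolts
RATIO = 200          # keep every 200th sample (2 MS/s down to 10 kS/s)
N_OUT = 2_000        # number of subsampled points

rng = random.Random(3)
wideband = [rng.gauss(0.0, NOISE_RMS_UV) for _ in range(RATIO * N_OUT)]
subsampled = wideband[::RATIO]


def autocorr(values, lag):
    """Normalized autocorrelation of a sequence at the given lag."""
    mean = sum(values) / len(values)
    centered = [v - mean for v in values]
    num = sum(a * b for a, b in zip(centered, centered[lag:]))
    den = sum(c * c for c in centered)
    return num / den


sub_rms = math.sqrt(sum(v * v for v in subsampled) / len(subsampled))
lag_corrs = [autocorr(subsampled, lag) for lag in range(1, 6)]

print(f"rms of subsampled noise: {sub_rms:.2f} uV")
print("autocorrelation at lags 1-5:", [f"{c:+.3f}" for c in lag_corrs])
```

The subsampled series keeps the full 21.7 µV amplitude and shows no correlation between samples beyond statistical fluctuation, which is what white noise at 10 kS/s looks like.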

The authors also claim that their algorithm for recovering white Gaussian noise "avoids aliasing by using concepts from compressed sensing." The principle of compressed sensing is that a signal with known regularities can be sampled without loss at a rate below the Nyquist rate. In particular, such a signal has a sparse distribution along certain directions in data space, which opens up opportunities for compression. This applies to many natural signal sources, such as photographs. Unfortunately, white Gaussian noise is a completely incompressible signal with no regularities at all. The probability distribution of this signal is a spherically symmetric Gaussian, which looks the same from every direction in data space and offers no opportunity for compression. Of course, the neural signals riding on top of this noise do contain statistical regularities (more on this below). But those do not help to recover the noise.

How the scheme of Tsai et al. fails in practice

I wrote code for the algorithm described in the paper by Tsai et al. When applied to the authors' test case (a 200 µV sine wave with 21.7 µV noise), it produces a cleaned sine wave with only 10.2 µV of noise (Fig. 2), which is remarkably close to the paper's result of 10.02 µV. So I have reproduced the scheme correctly.

Along the way, I came across what looks like a serious mathematical error. It concerns the authors' belief that undersampled noise retains a certain signature as a result of aliasing. Referring to the Fourier representation of white Gaussian noise, they write (Section III.G): "In Fourier space, the vector angles of thermal noise (of infinite length) have a uniform distribution with zero mean. Again, any deviation from this ideal in signals of finite length is averaged out by the folding of spectra when the content is added into the first Nyquist zone (as a result of which the angles converge to zero)." And similarly, "the spectral angles converge to zero in the aliased version of the thermal noise" (p. 9, bottom left). This is not true. The phase angles of the Fourier vectors described here have a uniform circular distribution, and such a distribution has no mean angle. After several of these vectors are averaged, the phase of the mean vector again has a uniform circular distribution. This statement in Fourier space matches what is easier to see in direct space: subsampling white Gaussian noise again gives white Gaussian noise (Fig. 3). The justification of Tsai's algorithm rests on this mistaken idea that the phase angles somehow average to zero.
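The claim about phases can be tested directly. The sketch below is an illustration under simple assumptions, not the paper's or the notebook's code: each Fourier vector of the noise is modeled as a complex number with independent Gaussian real and imaginary parts, 200 of them (one per folded Nyquist zone) are summed, and the phase of the sum is recorded over many trials. Dividing the sum by 200 to form an average would not change its phase.

```python
import cmath
import math
import random

N_ZONES = 200     # Nyquist zones folded onto one frequency bin (1 MHz / 5 kHz)
N_TRIALS = 2_000  # independent repetitions

rng = random.Random(1)


def folded_phase():
    """Phase of the sum of N_ZONES independent complex Gaussian Fourier vectors."""
    total = sum(complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(N_ZONES))
    return cmath.phase(total)


phases = [folded_phase() for _ in range(N_TRIALS)]

# Mean resultant length: 1 if all phases coincide, near 0 if they are uniform on the circle.
resultant = abs(sum(cmath.exp(1j * p) for p in phases)) / N_TRIALS

# Fraction of phases in each quadrant; a uniform distribution gives about 0.25 each.
quadrants = [0, 0, 0, 0]
for p in phases:
    quadrants[int((p + math.pi) // (math.pi / 2)) % 4] += 1
fractions = [q / N_TRIALS for q in quadrants]

print(f"mean resultant length: {resultant:.3f}")
print("fraction of phases per quadrant:", fractions)
```

If folding made the angles converge to zero, the phases would cluster and the mean resultant length would approach 1. Instead they stay spread evenly around the circle.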

So how does Tsai's algorithm clean up the sinusoidal test signal? That is not so hard. If you know that the signal is a pure sine wave, only three unknowns remain: amplitude, phase and frequency. Obviously, these three unknowns can be extracted from the 10,000 noisy samples you get every second. And it can be done much more efficiently: find the largest Fourier component in the sampled record and set all the others to zero. This removes the noise almost completely (see here for more details). Tsai's algorithm does something similar: it suppresses the off-peak frequencies in the Fourier transform more strongly than the peak ones, and ends up with a mixture of the sinusoid and suppressed noise (see also Fig. 9 in the paper). As we will see below, the claimed performance does not carry over to realistic signals.
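The "keep only the largest Fourier component" approach mentioned above can be sketched as follows. This is not Tsai's algorithm and not the author's notebook code, just a minimal illustration with assumed parameters: 0.1 s of data at 10 kS/s, a 1 kHz sine of 100 µV amplitude (200 µV peak to peak) and 21.7 µV of white noise in every sample. A plain discrete Fourier transform is written out to keep the example free of dependencies.

```python
import cmath
import math
import random

F_SAMPLE = 10_000.0  # sampling rate in samples per second
N = 1_000            # number of samples (0.1 s of data)
F_SIGNAL = 1_000.0   # test sine frequency in Hz
AMPLITUDE_UV = 100.0 # 200 uV peak to peak
NOISE_RMS_UV = 21.7  # aliased noise in every sample

rng = random.Random(2)
clean = [AMPLITUDE_UV * math.sin(2.0 * math.pi * F_SIGNAL * n / F_SAMPLE) for n in range(N)]
noisy = [c + rng.gauss(0.0, NOISE_RMS_UV) for c in clean]

# Precomputed complex exponentials exp(-2*pi*i*m/N) for the DFT.
twiddle = [cmath.exp(-2j * math.pi * m / N) for m in range(N)]


def dft_bin(x, k):
    """Discrete Fourier transform of x at frequency bin k."""
    return sum(v * twiddle[(k * n) % N] for n, v in enumerate(x))


# Evaluate the positive-frequency bins and keep only the largest one.
spectrum = {k: dft_bin(noisy, k) for k in range(1, N // 2)}
peak = max(spectrum, key=lambda k: abs(spectrum[k]))
coeff = spectrum[peak]

# Inverse transform of the single bin and its complex-conjugate partner.
estimate = [(2.0 / N) * (coeff * twiddle[(peak * n) % N].conjugate()).real for n in range(N)]

residual_rms = math.sqrt(sum((e - c) ** 2 for e, c in zip(estimate, clean)) / N)
print(f"peak at {peak * F_SAMPLE / N:.0f} Hz, residual noise {residual_rms:.2f} uV rms")
```

The residual error is far below the 21.7 µV in the raw samples, but only because the signal is known to be a single sinusoid. Nothing here recovers the noise itself.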

What would be a reasonable processing scheme?

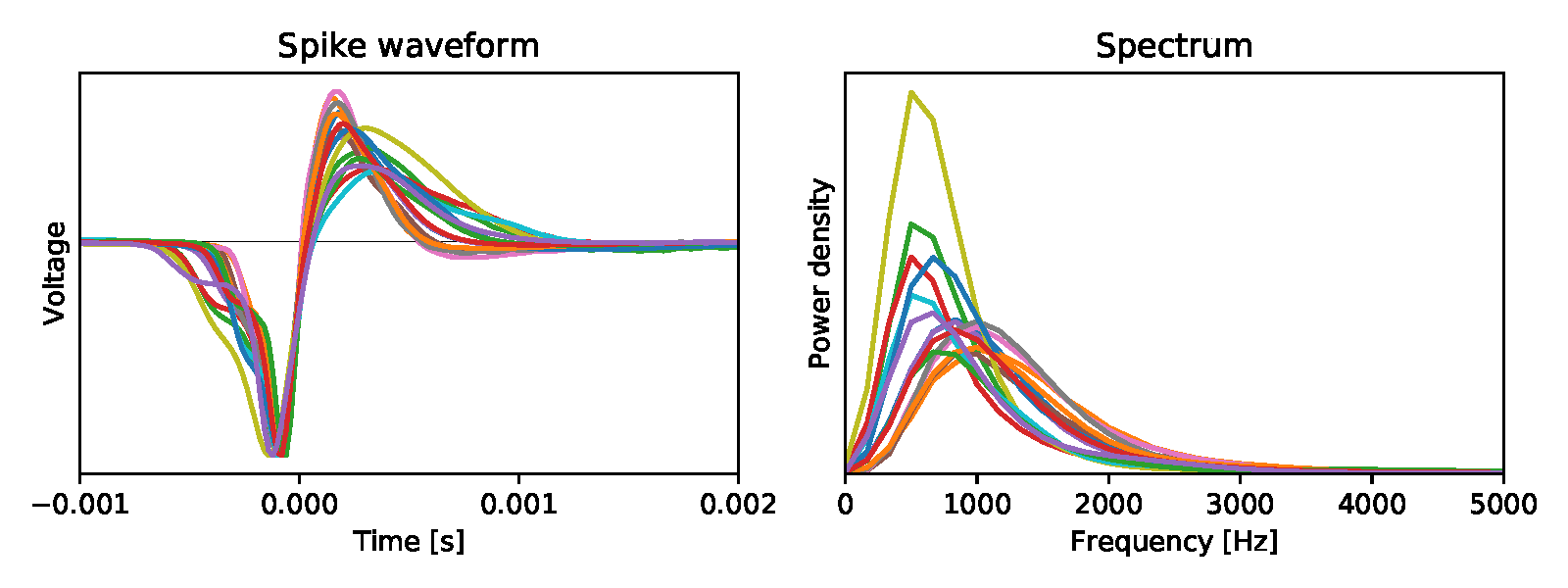

The sine wave example shows how the data should actually be post-processed. Trying to recover the original noise is hopeless for all the reasons given above; you simply have to live with the noise level that results from all the aliased spectra, and focus instead on the properties of the signal you want to extract. If the signal has statistical regularities, you can use them to your advantage. A sine wave is, of course, extremely regular, which means it can be extracted from the noise with almost unlimited accuracy. Usually we have some partial knowledge of the signal statistics, for example the shape of the power spectrum. Neuronal action potentials have frequency components up to ~5 kHz, but the spectrum is not flat over the whole range (Fig. 4).

Fig. 4. Left: average action potentials of 15 mouse retinal ganglion cells [source]. Right: their power spectrum.

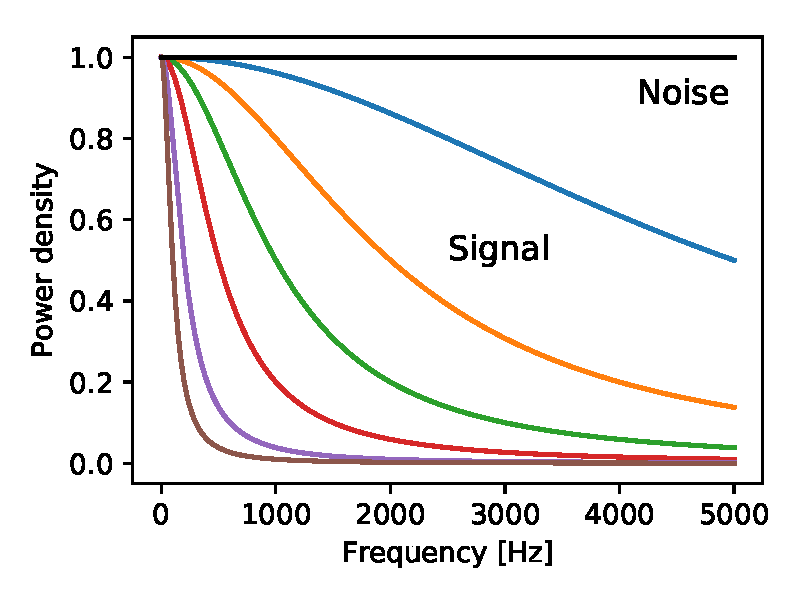

Suppose we know the power spectrum of the signal, and it differs from the noise spectrum. How should the sampled data be processed to reconstruct the signal optimally? This is a classic problem from signal processing courses. The optimal linear filter for reconstruction with minimal mean square error is called the Wiener filter. In essence, it suppresses the frequency components where noise is relatively stronger. But Tsai's algorithm is a non-linear operation (see the details here), so in theory it could outperform the Wiener filter. In addition, because of the nonlinearity, the algorithm's performance on more general signals cannot be predicted from its performance on sinusoidal signals (see above). I therefore compared Tsai's algorithm with the classic Wiener filter under various assumptions about the spectrum. Specifically, the signal was band-limited with a cutoff frequency between 100 Hz and 5000 Hz, while the noise had a uniform spectrum across the 5 kHz band:

Fig. 5. The power spectrum of the signal (colored lines) and noise (black) used in the calculations of Fig. 6. The noise is Gaussian and white over the whole range. The signal is obtained from a white Gaussian process passed through a single-pole low-pass filter with a cutoff frequency of 5000, 2000, 1000, 500, 200 or 100 Hz.

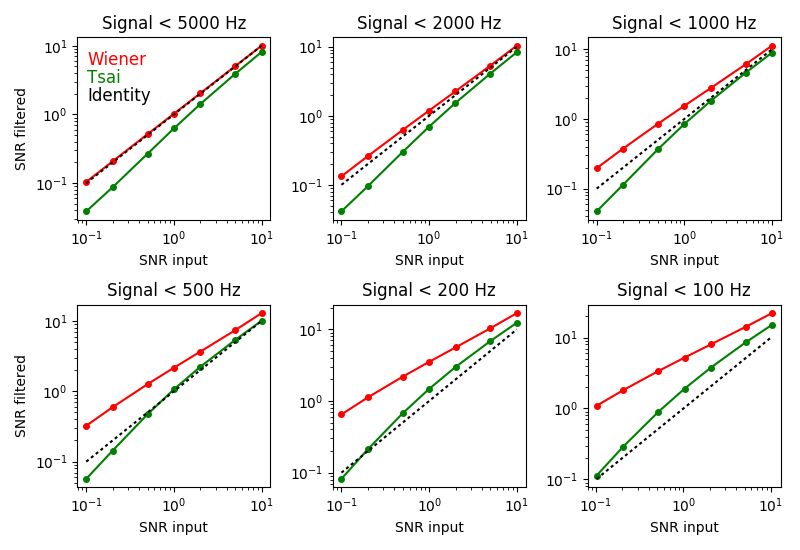

For each of these combinations of signal and noise, I applied the Wiener filter and Tsai's algorithm and measured the resulting signal-to-noise ratio (SNR) (Fig. 6).

Fig. 6. How Tsai's algorithm and the Wiener filter change the SNR. Each panel corresponds to a different signal power spectrum (see Fig. 5). The SNR of the input mixture of signal and noise is plotted on the horizontal axis. The mixture was passed through the Wiener filter or Tsai's algorithm, and the SNR of the output is plotted on the vertical axis. Red: Wiener filter; green: Tsai's algorithm; dashed line: identity (output equal to input).

As expected, the Wiener filter always improves the SNR (compare the red curve with the dashed line), and the improvement grows with the difference between the signal and noise spectra (panels from top left to bottom right). In every case the Wiener filter outperforms Tsai's algorithm in improving the SNR (compare the red and green curves). Moreover, in many cases Tsai's algorithm actually degrades the SNR (compare the green curve with the dashed line). Given that the algorithm is based on mathematical errors, this is not surprising.
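The behavior of the Wiener filter reported above can be reproduced in outline from its textbook form. The sketch below makes several assumptions that may differ from the author's notebook: the signal spectrum is the single-pole shape 1 / (1 + (f / f_c)^2) described for Fig. 5, the noise spectrum is flat up to 5 kHz, the filter gain at each frequency is S(f) / (S(f) + N(f)), and the output SNR is defined as signal power divided by the power of the remaining reconstruction error. The function name `wiener_output_snr` is made up for this example.

```python
def wiener_output_snr(cutoff_hz, snr_in, f_max=5000.0, n_bins=5000):
    """Output SNR of the ideal Wiener filter for a single-pole signal spectrum.

    Assumed definition: signal power divided by the minimum mean square error.
    """
    df = f_max / n_bins
    freqs = [(i + 0.5) * df for i in range(n_bins)]
    noise_density = 1.0                      # flat noise spectrum (arbitrary units)
    noise_power = noise_density * f_max
    shape = [1.0 / (1.0 + (f / cutoff_hz) ** 2) for f in freqs]
    # Scale the signal spectrum so that the input SNR (power ratio) equals snr_in.
    scale = snr_in * noise_power / (sum(shape) * df)
    signal_density = [scale * s for s in shape]
    signal_power = sum(signal_density) * df
    # With gain S / (S + N), the residual error density is S * N / (S + N).
    error_power = sum(s * noise_density / (s + noise_density) for s in signal_density) * df
    return signal_power / error_power


cutoffs_hz = [5000.0, 2000.0, 1000.0, 500.0, 200.0, 100.0]
input_snrs = [0.1, 1.0, 10.0]
results = {(fc, snr): wiener_output_snr(fc, snr) for fc in cutoffs_hz for snr in input_snrs}

for fc in cutoffs_hz:
    row = ", ".join(f"{snr:g} -> {results[(fc, snr)]:.2f}" for snr in input_snrs)
    print(f"cutoff {fc:6.0f} Hz: SNR in -> out: {row}")
```

Under these assumptions the output SNR always exceeds the input SNR, and the gain is larger when the signal spectrum is narrower and therefore more different from the flat noise spectrum.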

There are noise suppression schemes more sophisticated than the Wiener filter. For example, we know that the neural recordings of interest are a superposition of pulse-like events, action potentials, whose waveforms are described by just a few parameters. Within this statistical model one can develop algorithms that determine the optimal timing and amplitude of each event amid the noise. This is an active area of research.

Why is it important for neuroscience?

Researchers across the neurosciences are eager to increase the number of neurons that can be recorded simultaneously. Progress in this area is exponential but rather slow: the number doubles every seven years. Several projects aim to accelerate this by building large-scale CMOS chips with arrays of thousands of densely packed electrodes and multiplexers [including the work of Tsai et al. - translator's note]. This is expensive research. Bringing such electrode arrays to the prototype stage requires investments in the millions. If the success of such a device rests on misconceptions about aliasing and unfounded expectations of software, the result can be costly failures and a waste of valuable resources.

A painful failure of this kind was the Fromherz array. Developed in collaboration with Siemens at a cost of millions of Deutschmarks, it was the largest bioelectrode array of its time, with 16,384 sensors at a pitch of 7.8 × 7.8 µm. To save space, and for other reasons, the developers left out anti-aliasing filters. Aliasing and other artifacts ultimately produced a noise level of 250 µV, unsuitable for any interesting experiments, so the innovative device was never put to practical use. Yet this outcome could have been predicted with a simple calculation.

If the consequences of aliasing are anticipated rationally, useful compromises are possible. For example, a recently developed silicon probe device also has no anti-aliasing filters in its multiplexers. But its undersampling ratio is only 8:1, so the excess noise stays below critical levels. Combined with proper signal recovery, such a scheme is quite viable.

Who checked this work?

As always, the question arises: were these dramatic claims by Tsai et al. peer-reviewed? Was nobody surprised by the obvious contradiction with the sampling theorem? Did anyone review these manuscripts at all? Well, I reviewed them, for another journal, where the article was eventually rejected. Before that there was a long exchange with the authors, in which I challenged them to remove the aliased noise from a simulated recording (the challenge below, only without the cash prize). The authors accepted the challenge as a valid test of the algorithm, but failed the test completely. Somehow this did not shake their confidence, and they submitted the very same work to two other journals. These journals, apparently after so-called peer review, happily agreed to publish.

How to win $1000

Of course, it is possible that I misunderstood Tsai's algorithm or that, despite everything, some other scheme exists for recovering aliased white noise after sampling. To encourage creative work in this area, I propose a challenge: if you can do what Tsai and colleagues claim, I will give you $1,000. Here is how it works:

First you send me $10 for "postage" (Paypal meister4@mac.com, thanks). In return, I send you a data file containing signal plus noise sampled at 10 kS/s, where the signal is limited to the 5 kHz Nyquist frequency and the noise is white Gaussian noise with a 1 MHz bandwidth. You use Tsai's algorithm or any other scheme of your choice to remove as much of the aliased noise as possible, and send me a file with your best estimate of the signal. If you improve the SNR by a factor of two or more, I will pay you $1,000. Note that a proper anti-aliasing filter increases the SNR by a factor of 200 (Fig. 2), and Tsai claims an improvement of more than a factor of four, so I am not asking for much. For more technical information, see the code and comments in my Jupyter notebook.

A few more rules: the offer is valid for 30 days from the date of publication [March 20, 2018 - translator's note]. Only the first qualifying entry wins. You must disclose the algorithm you used so that I can reproduce its results. Instead of battling the sampling theorem, you may be tempted by some other approach, for example a cryptographic attack on the random seed I used to create the data file. I would be interested to hear about such alternative solutions, but I will not pay $1,000 for them; I will only refund your $10.

Finally, I do not want to take money from novices. Before you send me $10, be sure to test your algorithm thoroughly using the code in my Jupyter notebook: I will use it to evaluate the algorithm.

Results

So far no one has claimed the $1,000, and the conclusions are as follows:

- For now, I suggest that hardware developers stick to the age-old wisdom: “filter before sampling!”

Source: https://habr.com/ru/post/352628/