How to switch to microservices and not break production

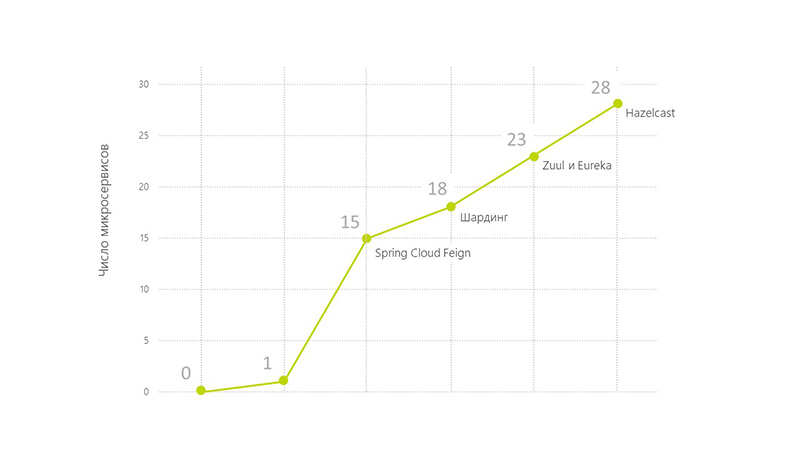

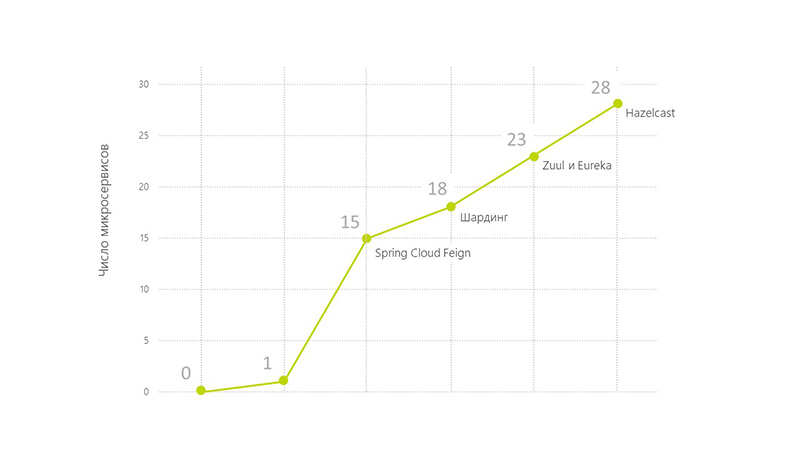

Today we will describe how we moved a monolithic solution to microservices. Our application processes between 20 and 120 thousand transactions per day, around the clock, and its users work in 12 time zones. At the same time, new functionality was added often and in large amounts, which is hard to do in a monolith. The system therefore had to run stably 24/7, which meant meeting HighLoad, High Availability and Fault Tolerance requirements.

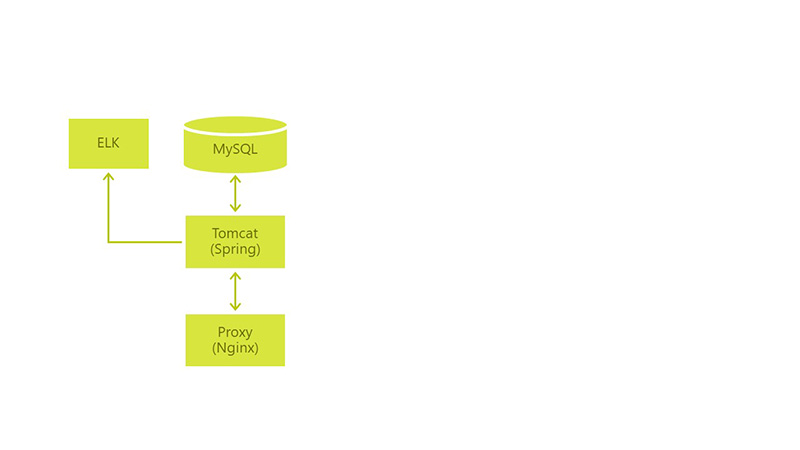

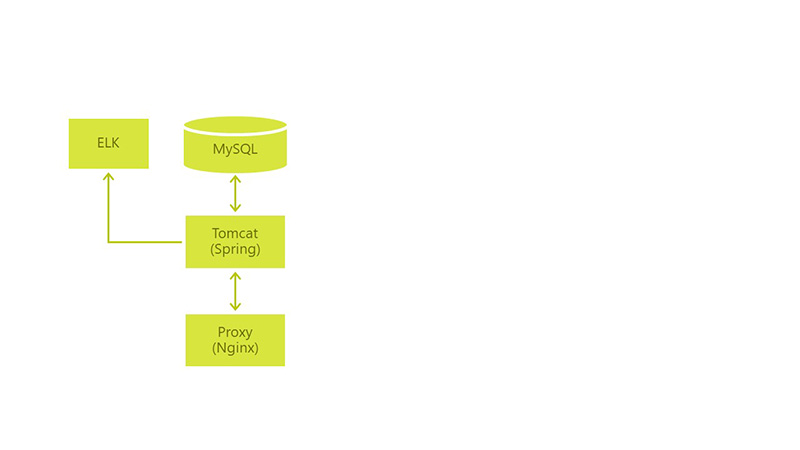

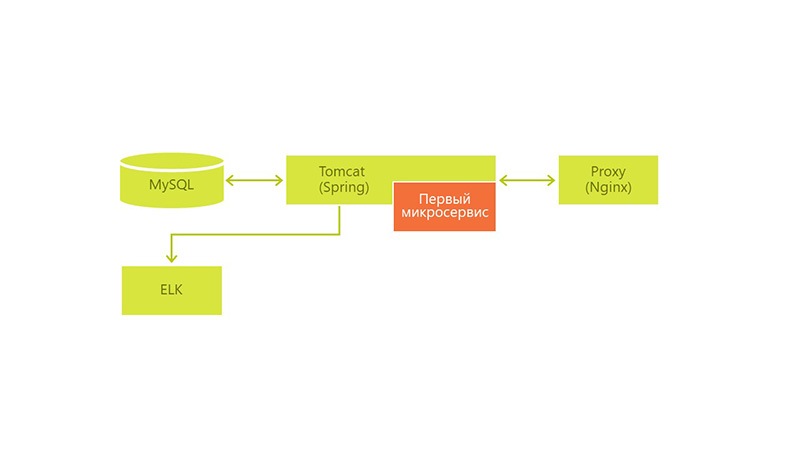

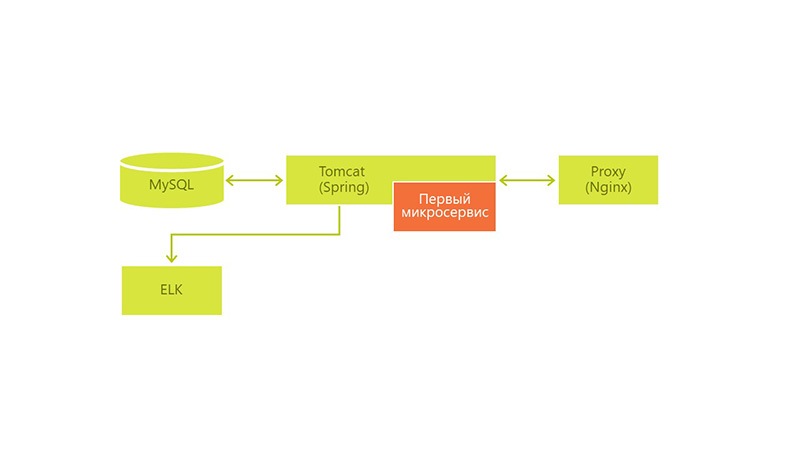

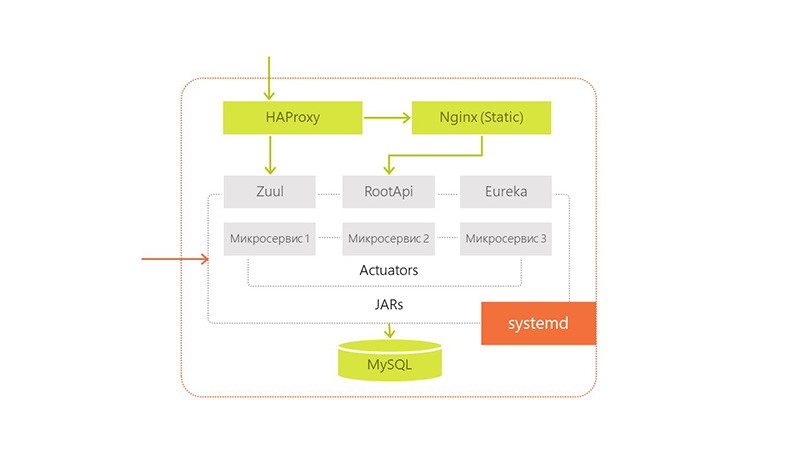

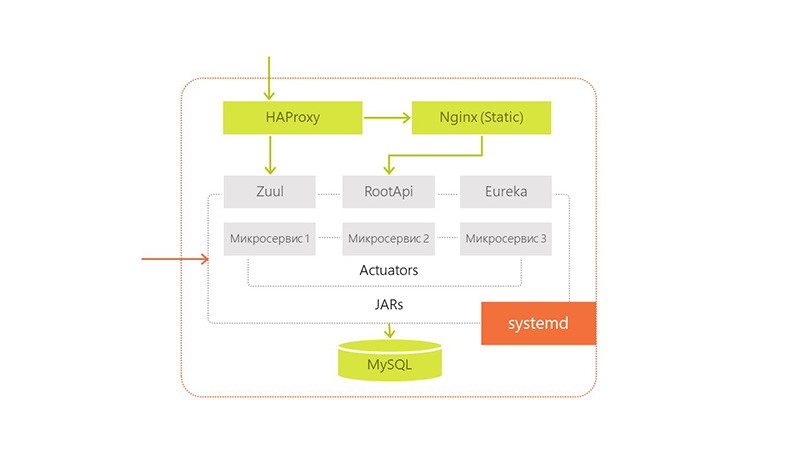

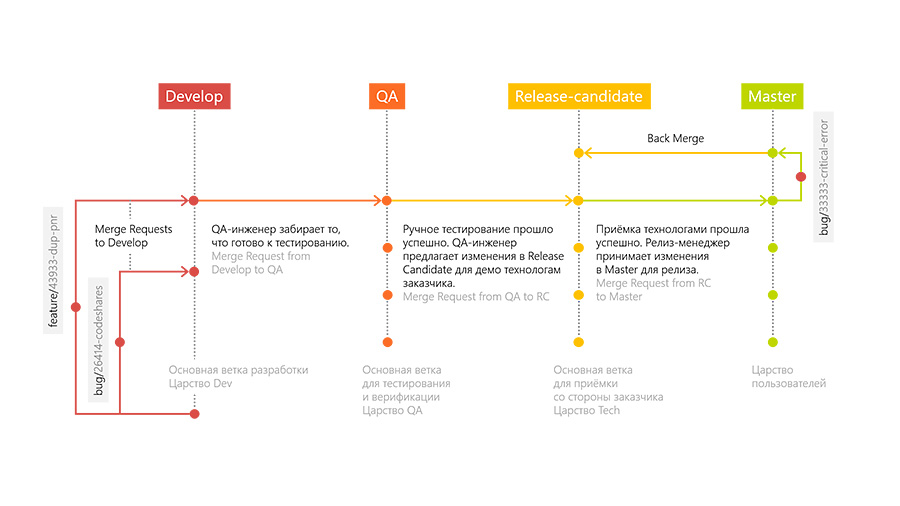

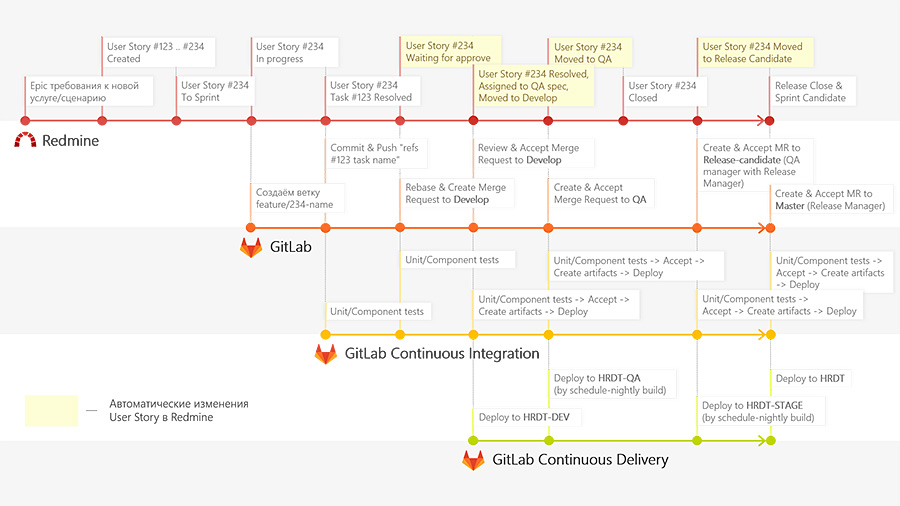

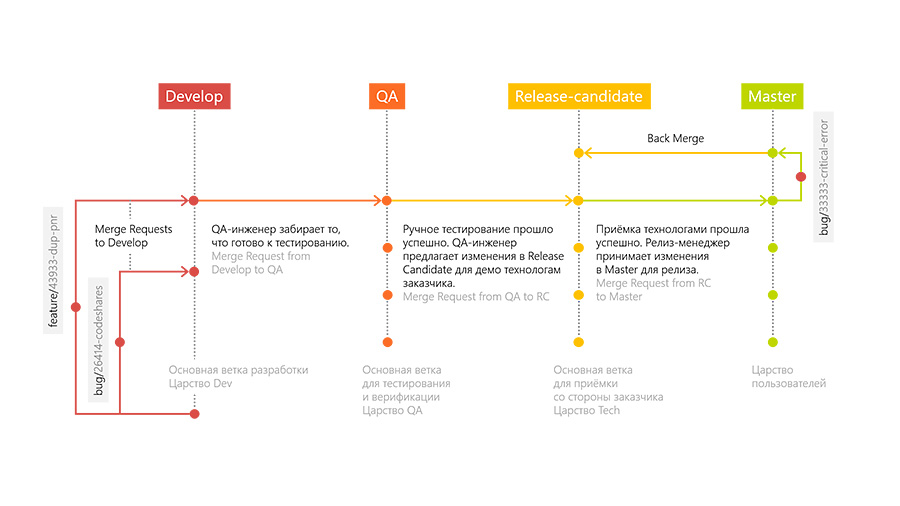

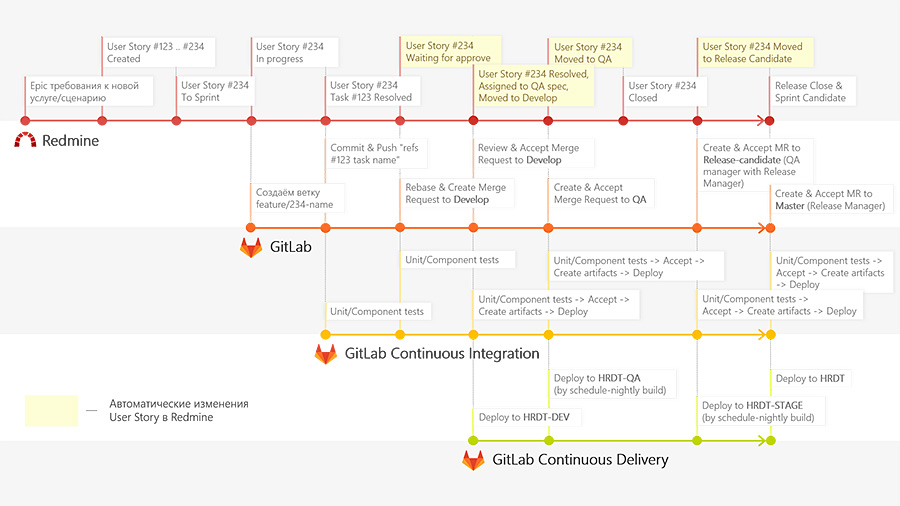

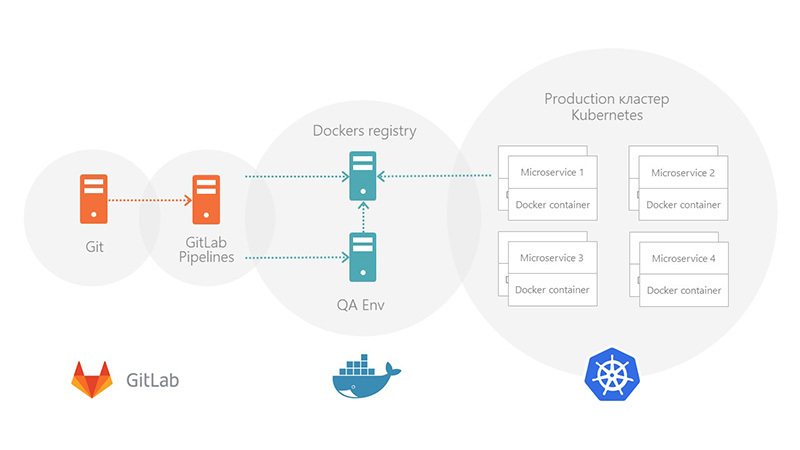

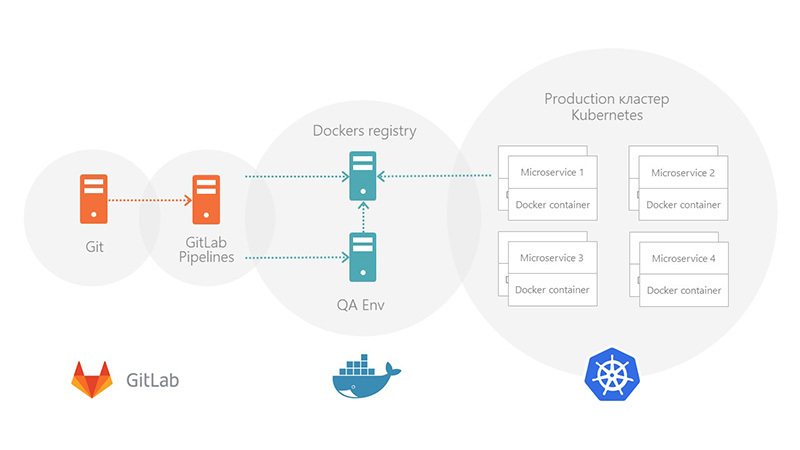

We develop this product following the MVP model. The architecture changed in several stages, following business requirements. It was not possible to design everything up front, because no one knew what the final solution should look like. We worked in an Agile way, adding and extending functionality iteratively.

Initially, the architecture looked like this: MySQL, a single WAR deployed in Tomcat, and Nginx proxying user requests.

Environments (and minimal CI/CD):

- Dev: deployed on every push to develop,

- QA: deployed from develop once a day,

- Prod: deployed from master at the push of a button,

- integration tests were run manually,

- everything ran on Jenkins.

Development was driven by user scenarios. Even at the start of the project, most scenarios fit into a well-defined workflow. Not all of them did, however, and this complicated development and prevented us from doing a deep up-front design.

In 2015 our application went into production. Running it in production showed that we lacked flexibility in operating the application, in developing it, and in shipping changes to the prod server. We wanted to achieve High Availability (HA), Continuous Delivery (CD) and Continuous Integration (CI).

Here are the problems we had to solve in order to get to HA, CD and CI:

- downtime when rolling out new versions: deploying the application took too long,

- changing product requirements and new use cases: testing and verification took too long even for small fixes,

- restoring sessions in Tomcat: we managed sessions for the booking system and third-party services, and Tomcat did not restore them when the application restarted,

- releasing resources: memory leaked, so sooner or later Tomcat had to be restarted.

We started solving these problems one by one. The first one we took on was changing product requirements.

First microservice

Challenge: Changing product requirements and new use cases.

Technical answer: the first microservice. We moved part of the business logic into a separate WAR file and deployed it in Tomcat.

We received yet another task of the form "update the business logic in the service by the end of the week", and decided to move that part into a separate WAR file deployed in the same Tomcat. We used Spring Boot to speed up configuration and development.

We built a small business function that handled periodically changing user parameters. When its business logic changed, we no longer had to restart the whole Tomcat and lose our users for half an hour; we reloaded only that small part.

After this first successful extraction, we kept changing the application on the same principle. Whenever a task required a radical change to some part of the system, we moved that part out into a separate service. This way we steadily accumulated new microservices.

Our main criterion for extracting a microservice was a business function or an entire business service. This let us quickly separate out the services that integrate with third-party systems such as 1C.

The first problem: typing

Challenge: there are already 15 microservices. The typing problem.

Technical answer: Spring Cloud Feign.

The problems did not go away by themselves just because we started cutting our solution into microservices. On the contrary, new problems appeared:

- typing and versioning of the DTOs shared between modules,

- how to deploy not one WAR file in Tomcat, but many.

These new problems increased the time it took to restart the whole Tomcat during maintenance. In other words, we had made our own work harder.

The typing problem, of course, did not appear out of nowhere. Most likely we simply ignored it for several releases, because we caught these errors during development or testing and had time to fix them. But once several errors were discovered in production and required urgent fixes, we had to introduce rules and start using tools that address the problem. We turned to Spring Cloud Feign, a client library for HTTP requests.

github.com/OpenFeign/feign

cloud.spring.io/spring-cloud-netflix/multi/multi_spring-cloud-feign.html

We chose it because:

- it adds little overhead to the project,

- it generates the client itself,

- the same interface can be used on both the server and the client.

Feign solved our typing problems by generating the clients for us. For the controllers of our services we used the same interfaces as for generating the clients, so the typing problems went away.
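
The idea of sharing one interface between the controller and the client can be sketched with the JDK alone. This is not Feign's API: `UserParamsApi`, `UserParamsDto`, `UserParamsController` and `ClientFactory` are hypothetical names, and the "transport" is an in-process call standing in for the HTTP request a real client library would make. The sketch only shows why the approach helps: both sides compile against the same interface, so a changed signature or DTO breaks the build rather than production.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

// Shared contract: lives in a module that both the service and its callers depend on.
interface UserParamsApi {
    UserParamsDto getParams(long userId);
}

// DTO shared by both sides, so field changes are caught at compile time.
record UserParamsDto(long userId, String timeZone) {}

// Server side: the controller implements the shared interface.
class UserParamsController implements UserParamsApi {
    @Override
    public UserParamsDto getParams(long userId) {
        return new UserParamsDto(userId, "UTC+3"); // placeholder data for the sketch
    }
}

// Client side: a proxy is generated from the same interface at runtime.
class ClientFactory {
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> api, T remote) {
        InvocationHandler handler = (proxy, method, args) -> {
            // A real client would turn this call into an HTTP request here.
            return method.invoke(remote, args);
        };
        return (T) Proxy.newProxyInstance(api.getClassLoader(), new Class<?>[] {api}, handler);
    }
}

class SharedInterfaceDemo {
    public static void main(String[] args) {
        UserParamsApi client = ClientFactory.create(UserParamsApi.class, new UserParamsController());
        System.out.println(client.getParams(42L));
    }
}
```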

Downtime. Round one. Operability

Business challenge: 18 microservices; downtime is no longer acceptable.

Technical answer: change the architecture and add servers.

We still had the problems of downtime when rolling out new versions, of restoring Tomcat sessions, and of releasing resources. Meanwhile the number of microservices kept growing.

Deploying all microservices took about an hour. From time to time we had to restart the application because Tomcat did not release resources, and there was no easy way to do this faster.

We began to think about how to change the architecture. Together with the infrastructure solutions department, we built a new solution based on what we already had.

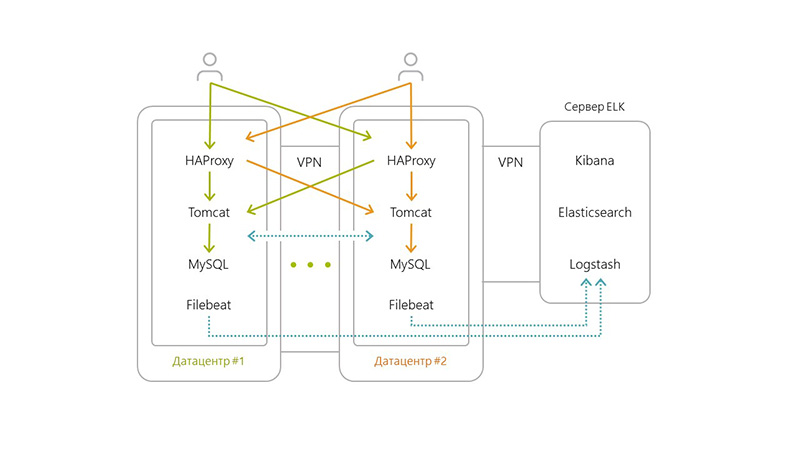

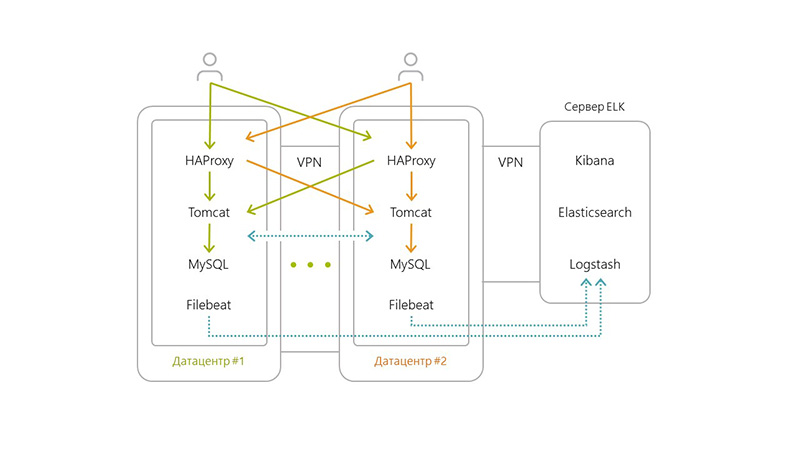

The architecture changed as follows:

- we split the application horizontally across several data centers,

- we added Filebeat to each server,

- we added a separate server for ELK, as the number of transactions and logs grew,

- we ran several HAProxy + Tomcat + Nginx + MySQL servers (this is how we ensured High Availability).

The technologies used were:

- HAProxy handled routing and load balancing between servers,

- Nginx served static content, and Tomcat was the application server,

- a peculiarity of the solution was that the MySQL instance on each server knew nothing about the other MySQL instances,

- because of latency between data centers, replication at the MySQL level was impossible, so we decided to implement sharding at the microservice level.

Accordingly, when a user request reached the services in Tomcat, they simply queried their local MySQL. Data that had to be complete was collected from all servers and merged (all such requests went through the API).
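
Collecting data from all servers and merging it can be sketched as a scatter-gather call. This is an illustration under assumptions, not the project's code: `ShardApi`, `findBookings` and `ShardGatherer` are hypothetical names, and each shard is a plain in-process object where the article describes calls to the other servers' APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// One shard = one server with its own independent database (hypothetical interface).
interface ShardApi {
    List<String> findBookings(String userId);
}

class ShardGatherer {
    private final List<ShardApi> shards;

    ShardGatherer(List<ShardApi> shards) {
        this.shards = List.copyOf(shards);
    }

    // Query every shard in parallel and merge the partial results into one list.
    List<String> findBookingsEverywhere(String userId) {
        List<CompletableFuture<List<String>>> calls = new ArrayList<>();
        for (ShardApi shard : shards) {
            calls.add(CompletableFuture.supplyAsync(() -> shard.findBookings(userId)));
        }
        List<String> merged = new ArrayList<>();
        for (CompletableFuture<List<String>> call : calls) {
            merged.addAll(call.join()); // join() waits for this shard's answer
        }
        return merged;
    }
}

class ShardGatherDemo {
    public static void main(String[] args) {
        ShardApi dc1 = userId -> List.of("booking-1");
        ShardApi dc2 = userId -> List.of("booking-2", "booking-3");
        ShardGatherer gatherer = new ShardGatherer(List.of(dc1, dc2));
        System.out.println(gatherer.findBookingsEverywhere("user-7"));
    }
}
```

Because no shard knows about the others, a read that needs the full picture costs one call per server, which is the consistency and latency trade-off the approach accepts.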

With this approach we gave up some data consistency, but we solved the problem at hand: users could work with our application in any situation.

- Even if one of the servers went down, we still had 3-4 others that kept the whole system running.

- We stored backups not in the data center where they were made, but in neighboring ones. This helped us with disaster recovery.

- Fault tolerance was likewise achieved by having several servers.
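
How several servers behind a balancer keep the system available can be sketched as round-robin selection that skips servers marked as down. The article does not say which balancing algorithm or health checks were configured in HAProxy, so round-robin is an assumption here, and `FailoverBalancer` and the server names are hypothetical.

```java
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

class FailoverBalancer {
    private final List<String> servers;
    private final Set<String> down = ConcurrentHashMap.newKeySet();
    private final AtomicInteger next = new AtomicInteger();

    FailoverBalancer(List<String> servers) {
        this.servers = List.copyOf(servers);
    }

    void markDown(String server) {
        down.add(server);
    }

    void markUp(String server) {
        down.remove(server);
    }

    // Round-robin over the server list, skipping servers marked as down.
    String pick() {
        for (int i = 0; i < servers.size(); i++) {
            int index = Math.floorMod(next.getAndIncrement(), servers.size());
            String candidate = servers.get(index);
            if (!down.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("no healthy servers");
    }
}

class FailoverDemo {
    public static void main(String[] args) {
        FailoverBalancer lb = new FailoverBalancer(List.of("dc1", "dc2", "dc3", "dc4"));
        lb.markDown("dc2"); // one server fails, the remaining three keep serving
        for (int i = 0; i < 5; i++) {
            System.out.println(lb.pick());
        }
    }
}
```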

So the major problems were solved. Users could work without interruption and no longer noticed when we rolled out an update.

Downtime. Round two. Full operation

Business challenge: 23 microservices. Data consistency issues.

Technical solution: run services separately from each other, improve monitoring, introduce Zuul and Eureka, and simplify the development and delivery of individual services.

Problems kept appearing. This is what our redeploy looked like:

- We had no data consistency during a redeploy, so some (not the most important) functionality took a back seat. For example, statistics did not work properly while a new version was being rolled out.

- We had to move users from one server to another in order to restart the application. This took about 15-20 minutes, and on top of that users had to log in again when they were switched to another server.

- We restarted Tomcat more and more often as the number of services grew, and we now had to keep track of a large number of new microservices.

- Redeploy time grew in proportion to the number of services and servers.

After some thought, we decided that our problem could be solved by running services separately from each other: not all in one Tomcat, but each in its own instance on the same server.

But this raised other questions: how would services now communicate with each other, and which ports should be open to the outside?

We picked a range of ports and assigned them to our modules. To avoid keeping all this port information in a pom file or a shared configuration, we chose Zuul and Eureka.

Eureka: service discovery.

Zuul: proxy (to preserve the context URLs that we had in Tomcat).

Eureka also improved our High Availability / Fault Tolerance, since services could now find and call each other. We configured it so that if the required service is not available in the current data center, the request goes to another one.
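
The lookup rule described here (use the local data center first, otherwise go to another one) can be sketched with an in-memory registry. This is not Eureka's API; `ServiceInstance`, `ServiceRegistry`, the addresses and the ports are hypothetical and only illustrate the idea of services registering themselves instead of having ports written into a shared configuration.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// A registered instance: where a service can be reached and in which data center.
record ServiceInstance(String service, String dataCenter, String host, int port) {}

class ServiceRegistry {
    private final Map<String, List<ServiceInstance>> byService = new ConcurrentHashMap<>();

    // Services register themselves on startup, so ports need not be hard-coded elsewhere.
    void register(ServiceInstance instance) {
        byService.computeIfAbsent(instance.service(), k -> new CopyOnWriteArrayList<>()).add(instance);
    }

    // Prefer an instance in the caller's data center; otherwise fall back to any other one.
    Optional<ServiceInstance> lookup(String service, String localDataCenter) {
        List<ServiceInstance> instances = byService.getOrDefault(service, List.of());
        return instances.stream()
                .filter(i -> i.dataCenter().equals(localDataCenter))
                .findFirst()
                .or(() -> instances.stream().findFirst());
    }
}

class DiscoveryDemo {
    public static void main(String[] args) {
        ServiceRegistry registry = new ServiceRegistry();
        registry.register(new ServiceInstance("billing", "dc2", "10.0.2.15", 8101));
        registry.register(new ServiceInstance("users", "dc1", "10.0.1.10", 8102));
        // "billing" is not deployed in dc1, so the lookup falls back to dc2.
        System.out.println(registry.lookup("billing", "dc1"));
    }
}
```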

To improve monitoring, we added Spring Boot Admin, which fit our existing stack, to understand what was happening in each service.

We also began moving our extracted services to a stateless architecture, to get rid of the problems with deploying several identical services on one server. This gave us horizontal scaling within a single data center. During an upgrade we ran different versions of the same application side by side on one server, so that even that server had no downtime.

As a result, we got closer to Continuous Delivery / Continuous Integration by simplifying the development and delivery of individual services. We no longer had to fear that delivering one service would leak resources and force us to restart everything.

Downtime when rolling out new versions remained, but only partly. Updating a few JARs on a server one after another was fast, and updating a large number of modules caused no problems on the server. Restarting all 25 microservices during an update still took a long time, though less than inside Tomcat, which starts applications sequentially.

We also solved the resource release problem by running everything as standalone JARs and letting the system's out-of-memory killer deal with leaks and similar problems.

Round three. Information management

Business challenge: 28 microservices. A lot of information that needs to be managed.

Technical solution: Hazelcast.

We kept rolling out our architecture and realized that our core business transaction spans several servers at once. Sending a request to a dozen systems was inconvenient, so we decided to use Hazelcast for event messaging and for system-level work with users. For later services we also used it as a layer between the service and the database.

We finally got rid of our data consistency problem. We could now save any data to all databases at once without extra steps: we told Hazelcast which databases incoming information should be stored in, and it did so on every server. This simplified our work and let us drop sharding, which moved us to replication at the application level.
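
Replication at the application level can be sketched as a layer that applies each write to every configured database. This is a stand-in, not Hazelcast's API: `LocalDatabase` and `ReplicatingStore` are hypothetical in-memory classes, and the `route` step is only an illustration of "telling the layer which databases to store incoming information in".

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Stand-in for one server's local database (hypothetical, in-memory).
class LocalDatabase {
    final String name;
    final Map<String, String> rows = new HashMap<>();

    LocalDatabase(String name) {
        this.name = name;
    }
}

// Stand-in for the layer between a service and the databases.
class ReplicatingStore {
    private final Map<String, List<LocalDatabase>> targetsByMap = new HashMap<>();

    // Configuration step: which databases should receive entries of a given map.
    void route(String mapName, List<LocalDatabase> targets) {
        targetsByMap.put(mapName, List.copyOf(targets));
    }

    // One write from the service is applied to every configured database.
    void put(String mapName, String key, String value) {
        for (LocalDatabase db : targetsByMap.getOrDefault(mapName, List.of())) {
            db.rows.put(mapName + ":" + key, value);
        }
    }
}

class ReplicationDemo {
    public static void main(String[] args) {
        LocalDatabase dc1 = new LocalDatabase("mysql-dc1");
        LocalDatabase dc2 = new LocalDatabase("mysql-dc2");
        ReplicatingStore store = new ReplicatingStore();
        store.route("users", List.of(dc1, dc2));
        store.put("users", "42", "alice");
        // Both databases now hold the same row, so a read can be served from either.
        System.out.println(dc1.name + " and " + dc2.name + " in sync: " + dc1.rows.equals(dc2.rows));
    }
}
```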

We also began storing sessions in Hazelcast and using it for authorization. This let us move users between servers without them noticing.
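
Why a shared session store removes the need to log in again can be shown with a small sketch. The store below is a plain `ConcurrentHashMap` standing in for a cluster-wide map; `SharedSessionStore` and `AppServer` are hypothetical names, not part of Hazelcast or of the project.

```java
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Stand-in for a cluster-wide map; in the article this role is played by Hazelcast.
class SharedSessionStore {
    private final Map<String, String> userBySessionId = new ConcurrentHashMap<>();

    String create(String userName) {
        String sessionId = UUID.randomUUID().toString();
        userBySessionId.put(sessionId, userName);
        return sessionId;
    }

    Optional<String> userFor(String sessionId) {
        return Optional.ofNullable(userBySessionId.get(sessionId));
    }
}

// Each application server keeps no session state of its own.
class AppServer {
    private final String name;
    private final SharedSessionStore sessions;

    AppServer(String name, SharedSessionStore sessions) {
        this.name = name;
        this.sessions = sessions;
    }

    String login(String userName) {
        return sessions.create(userName);
    }

    // Any server can authorize a request, because the session lives outside the server.
    String handle(String sessionId) {
        return sessions.userFor(sessionId)
                .map(user -> name + " serves " + user)
                .orElse(name + " asks for login");
    }
}

class SessionDemo {
    public static void main(String[] args) {
        SharedSessionStore store = new SharedSessionStore();
        AppServer first = new AppServer("server-1", store);
        AppServer second = new AppServer("server-2", store);
        String sessionId = first.login("alice");
        // The user is moved to another server and keeps working with the same session.
        System.out.println(second.handle(sessionId));
    }
}
```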

From microservices to CI / CD

Business challenge: speed up the release of updates to production.

Technical solution: a deployment pipeline for our application, and GitFlow for working with code.

Our internal infrastructure grew along with the number of microservices. We wanted to speed up the delivery of our services to production, so we introduced a new deployment pipeline and moved to GitFlow for working with code. For each commit, CI now built the application, ran unit and integration tests, and published the deliverable artifacts.

To do this quickly and on demand, we deployed several GitLab runners that started all these jobs on every developer push. Following the GitLab Flow approach, we ended up with several servers: Develop, QA, Release-candidate and Production.

Development proceeds as follows. A developer implements a new feature in a separate branch (a feature branch). When the work is done, the developer creates a request to merge that branch into the main development branch (a Merge Request to the Develop branch). Other developers review the merge request and either accept it or reject it, in which case the review comments are addressed. After the merge into the main branch, a dedicated environment is deployed, and tests check that the environment comes up correctly.

When all these stages are complete, a QA engineer pulls the changes into the "QA" branch and runs the previously written test cases for the feature, along with exploratory tests.

If the QA engineer approves the work, the changes move to the Release-Candidate branch and are deployed to an environment that is accessible to external users. There the customer performs acceptance and verifies our work. After that we promote everything to Production.

If bugs are found at some stage, we fix them in the corresponding branch and merge the fixes back into Develop. We also wrote a small plugin so that Redmine can tell us what stage a feature is at.

This helps testers see at what stage they need to join a task, and helps developers fix bugs: they can see at which stage the error occurred, switch to the specific branch, and reproduce it there.
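
The promotion path described above can be modelled as a small state machine. This is only a sketch of the flow: `Stage` and `FeatureTicket` are hypothetical names and do not come from the pipeline or from the Redmine plugin.

```java
import java.util.Optional;

// Stages a change passes through, in the order described above.
enum Stage {
    FEATURE_BRANCH, DEVELOP, QA, RELEASE_CANDIDATE, PRODUCTION;

    // The next stage after a successful check, or empty once in production.
    Optional<Stage> next() {
        int i = ordinal() + 1;
        return i < values().length ? Optional.of(values()[i]) : Optional.empty();
    }
}

class FeatureTicket {
    private final String id;
    private Stage stage = Stage.FEATURE_BRANCH;

    FeatureTicket(String id) {
        this.id = id;
    }

    Stage stage() {
        return stage;
    }

    // A passed review, QA run or customer acceptance moves the change one stage forward.
    void approve() {
        stage = stage.next()
                .orElseThrow(() -> new IllegalStateException(id + " is already in production"));
    }

    // What a tracker could show so that testers and developers know where the change is.
    String status() {
        return id + " is at " + stage;
    }
}

class PromotionDemo {
    public static void main(String[] args) {
        FeatureTicket ticket = new FeatureTicket("FEATURE-1");
        ticket.approve(); // merge request accepted, change is in Develop
        ticket.approve(); // environment tests passed, change is taken into QA
        System.out.println(ticket.status());
    }
}
```

A bug found at a given stage does not move the ticket: the fix lands in that stage's branch, so the recorded stage also tells a developer which branch to check out to reproduce the problem.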

Further development

Business Challenge: Switch between servers without downtime.

Technical Solution: Packaging in Kubernetes.

Currently, at the end of a deployment, technical specialists copy the JARs to the PROD server and restart them. This is not very convenient. We want to automate the system further by introducing Kubernetes and tying it to the data centers, so that they are all updated and rolled out at once.

To move to this model, we need to complete the following work.

- Bring our current services to a stateless architecture, so that a user can send requests to any server indiscriminately. Some of our services still keep some session data. This work also involves replicating database data.

- Split up the last small monolith, which still contains several business processes. This will bring us to the last major step: Continuous Delivery.

P.S. What changed with the move to microservices

- We got rid of the problem of changing requirements.

- We got rid of the problem of restoring Tomcat sessions by moving them to Hazelcast.

- Users no longer have to log in again when they are moved from one server to another.

- We solved all the problems with releasing resources by shifting them onto the operating system.

- The problems of typing and versioning were solved thanks to Feign.

- We are confidently moving towards Continuous Delivery using GitLab Pipelines.

Source: https://habr.com/ru/post/352618/
