Network physics in virtual reality

Introduction

About a year ago, Oculus approached me with an offer to sponsor my research. They said, in effect: “Hello, Glenn, there is a lot of interest in networked physics for VR, and you gave an excellent talk at GDC. Do you think you could put together a networked physics sample in VR that we could show to developers? Maybe you could make use of the Touch controllers?”

I replied,

The people at Oculus agreed, and this article is the result! The source code of the networked physics sample is posted here. The code I wrote for it is released under the BSD license. Hopefully, the next generation of programmers will be able to learn something from my research into networked physics and create something truly remarkable. Good luck!

What will we build?

When I first started discussing the project with Oculus, we imagined creating something like a table at which four players could sit and interact with physically simulated cubes lying on the table. For example, throwing and catching them, building towers, and maybe knocking down each other’s towers with a swipe of the hand.


But after a few days of exploring Unity and C#, I was finally inside the Rift. In VR, scale is very important. When the cubes were small, nothing felt very interesting, but once they grew to about a meter across, a wonderful sense of scale appeared. The player could build huge towers of cubes, 20 to 30 meters tall. It felt awesome!

It is impossible to visually convey how everything looks in VR, but it looks something like this:

Here you can pick up, drag, and drop cubes using the Touch controllers. Every cube the player releases interacts with the other cubes in the simulation. You can throw a cube at a tower of cubes and knock it down. You can take a cube in each hand and juggle them. You can stack cubes to see how high you can build.

All of this is great fun, but it was not all smooth sailing. With Oculus as a client, I had to define the tasks and deliverables before starting work.

I suggested the following criteria as an assessment of success:

- Players must be able to pick up, throw, and catch cubes without latency.

- Players should be able to stack cubes into towers, and these towers should become stable (come to rest) without noticeable jitter.

- When cubes thrown by any player interact with the simulation, those interactions must happen without latency.

At the same time, I put together a list of tasks ordered from highest risk to lowest. Since this was a research project, there was no guarantee that we would succeed at what we were trying to do.

Network models

First we had to choose a network model. In essence, a network model is a strategy for exactly how we hide latency and keep the simulation in sync.

We could choose one of three basic network models:

- Deterministic lockstep

- Client/server with client-side prediction

- Distributed simulation with an authority scheme

I was immediately sure of the correct network model: a distributed simulation in which players take authority over the cubes they interact with. But let me share my reasoning.

First, I can trivially rule out the deterministic lockstep model, since the Unity physics engine (PhysX) is not deterministic. Moreover, even if PhysX were deterministic, I could still rule this model out, because player interactions with the simulation must be free of latency.

The reason is that to hide latency with deterministic lockstep, I would have to keep two copies of the simulation and simulate the authoritative one ahead with local inputs before each render (GGPO style). At a simulation rate of 90 Hz and with up to 250 ms of latency to hide, this meant roughly 25 physics simulation steps for every rendered frame. A 25X cost is simply not realistic for a CPU-intensive physics simulation.

That leaves two options: a client/server network model with client-side prediction (perhaps with a dedicated server), and a less secure distributed simulation network model.

Since the sample is not competitive, I found few arguments for taking on the cost of running dedicated servers. Without dedicated servers, security is essentially the same whichever of the two models I implement. The only difference is whether just one of the players could theoretically cheat, or all of them could.

For this reason, distributed simulation was the more logical choice. It provides effectively the same level of security, without requiring expensive rollback and resimulation, since players simply take authority over the cubes they interact with and send the state of those cubes to the other players.

Authority scheme

Intuitively, taking authority (acting like the server) over the objects you interact with can hide latency: you are the server, so you experience no lag, right? What is less obvious is how to resolve conflicts in this case.

What if two players interact with the same tower? What if two players, because of latency, grab the same cube? In a conflict, who wins, whose state gets corrected, and how are such decisions made?

My intuition at this stage was that since we would be exchanging object state very rapidly (up to 60 times per second), it would be best to implement this as an encoding in the state sent between players over my network protocol, rather than as events.

I thought about it for a while and came to two basic concepts:

- Authority

- Ownership

Each cube has authority, either set to the default (white) or to the color of the player who last interacted with it. If another player interacts with the object, authority switches to that player. I planned to use authority for interactions between thrown objects and the scene. I imagined that a cube thrown by player 2 could take authority over every object it interacted with, and in turn, recursively, over every object those objects interacted with.

Ownership is a bit different. Once a cube is owned by a player, no other player can take ownership of it until the first player gives it up. I planned to use ownership for players picking up cubes, because I didn’t want players to be able to snatch cubes out of other players’ hands.

I had an intuition that I could represent and communicate authority and ownership as state, by including two sequence numbers with each cube whenever it is sent: an authority sequence and an ownership sequence. This intuition ultimately proved correct, but it turned out to be much harder to implement than I expected. More on this below.
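As a rough illustration of how two sequence numbers could travel with each cube, here is a minimal sketch. The struct layout, the 16-bit width, and the acceptance rule (ownership sequence first, then authority sequence) are all assumptions made for the example, not the rules used in the sample's source.

```cpp
#include <cstdint>

// Hypothetical per-cube networking state; field names are illustrative only.
struct CubeAuthority {
    int           authority_player   = 0; // 0 = default (white), otherwise a player number
    std::uint16_t authority_sequence = 0; // incremented whenever authority changes hands
    std::uint16_t ownership_sequence = 0; // incremented whenever a cube is grabbed or released
};

// True if sequence a is more recent than b, tolerating 16-bit wrap-around.
inline bool sequence_newer(std::uint16_t a, std::uint16_t b) {
    const std::uint16_t diff = static_cast<std::uint16_t>(a - b);
    return diff != 0 && diff < 0x8000;
}

// One plausible rule (an assumption): a remote update replaces local state
// only if it carries a newer ownership sequence, or the same ownership
// sequence and a newer authority sequence.
inline bool should_accept(const CubeAuthority& local, const CubeAuthority& remote) {
    if (remote.ownership_sequence != local.ownership_sequence)
        return sequence_newer(remote.ownership_sequence, local.ownership_sequence);
    return sequence_newer(remote.authority_sequence, local.authority_sequence);
}
```

Because the numbers are part of the state itself, a lost packet costs nothing: the next state update for the cube carries the same information again.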

State synchronization

Trusting that I could implement the authority rules described above, I decided my first task would be to prove that synchronizing physics in one direction is possible with Unity and PhysX. In previous work I had networked simulations built on ODE, so I really had no idea whether this would work.

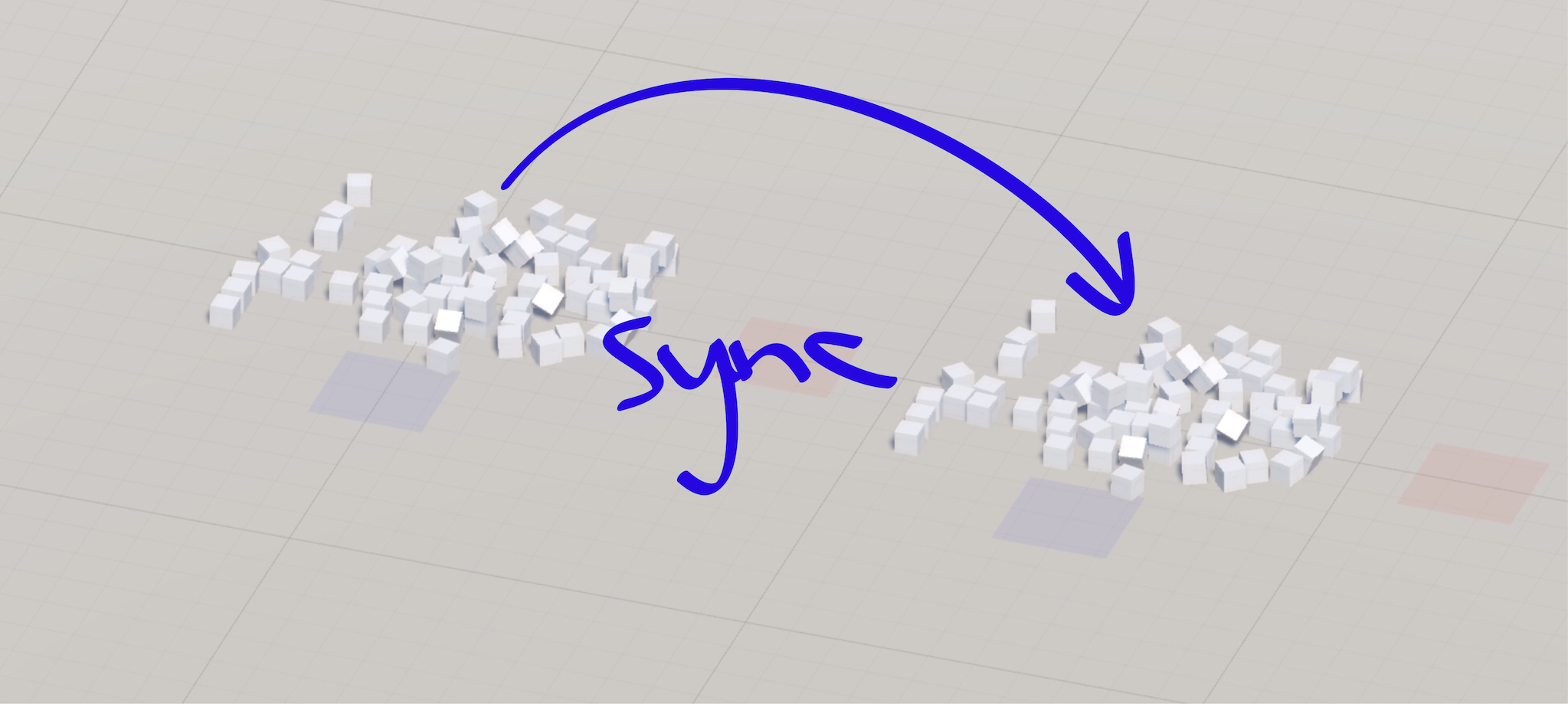

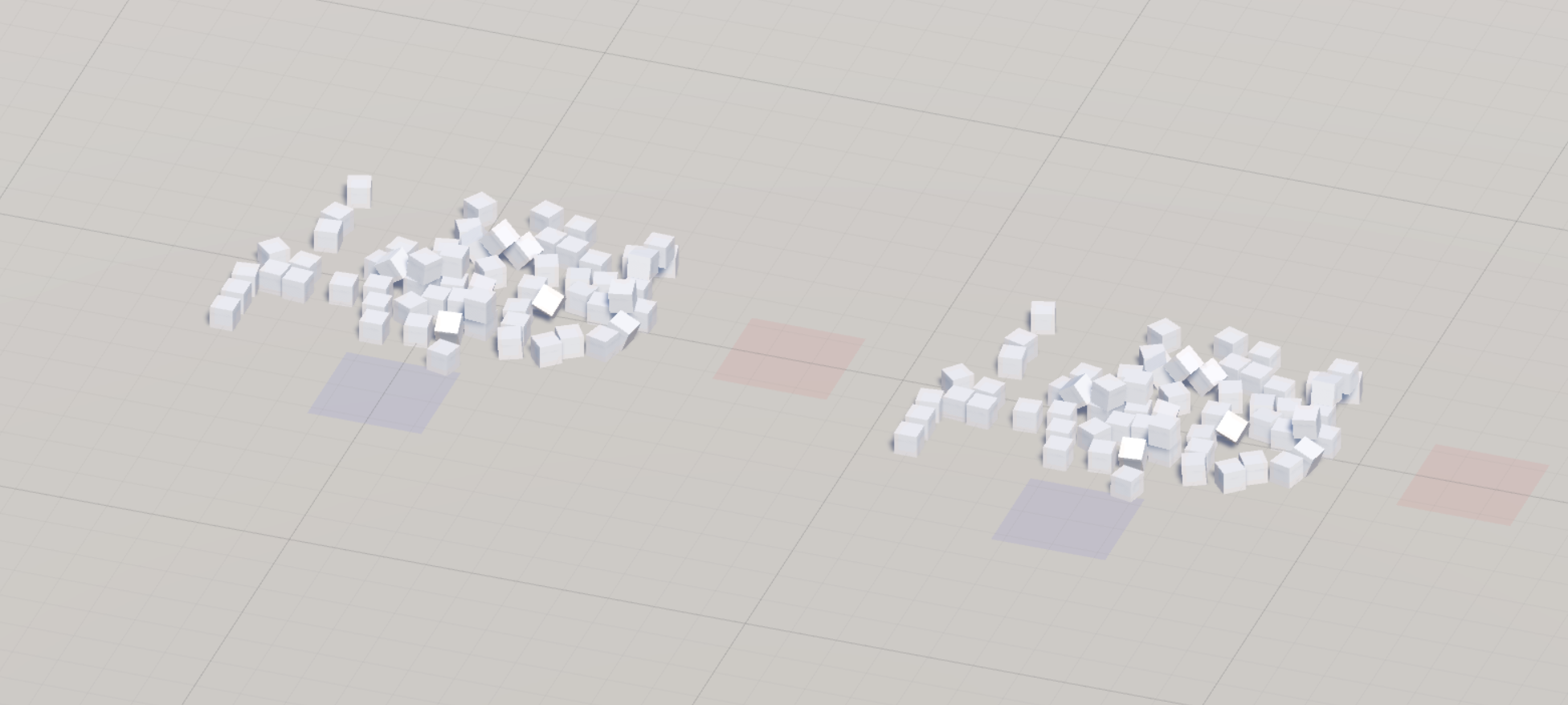

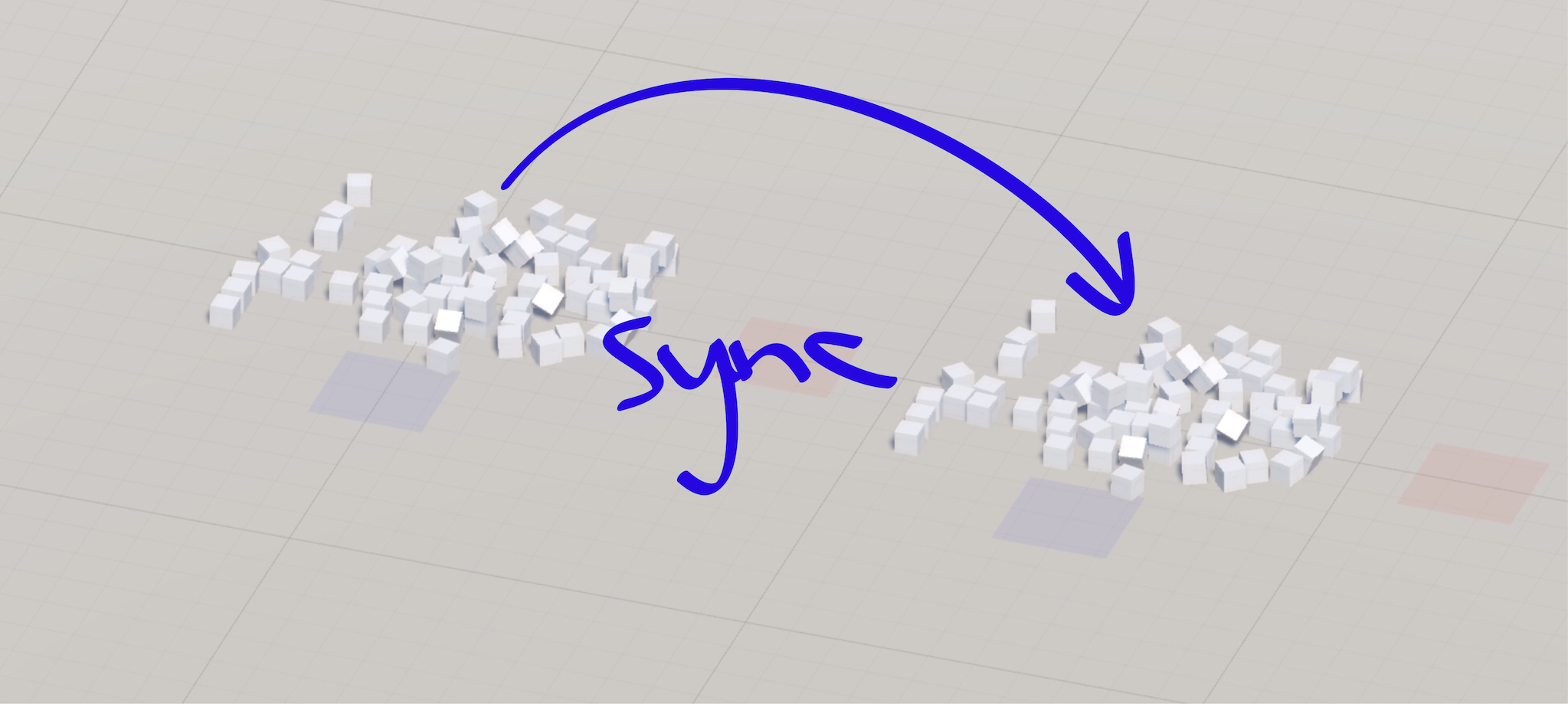

To find out, I set up a loopback scene in Unity in which a pile of cubes falls in front of the player. There are two sets of cubes. The cubes on the left represent the authority side. The cubes on the right represent the non-authority side, which we want to keep in sync with the cubes on the left.

At first, with nothing in place to keep the cubes in sync, the two sets ended up in slightly different final states even though they started from the same initial state. This is easiest to see from the top view:

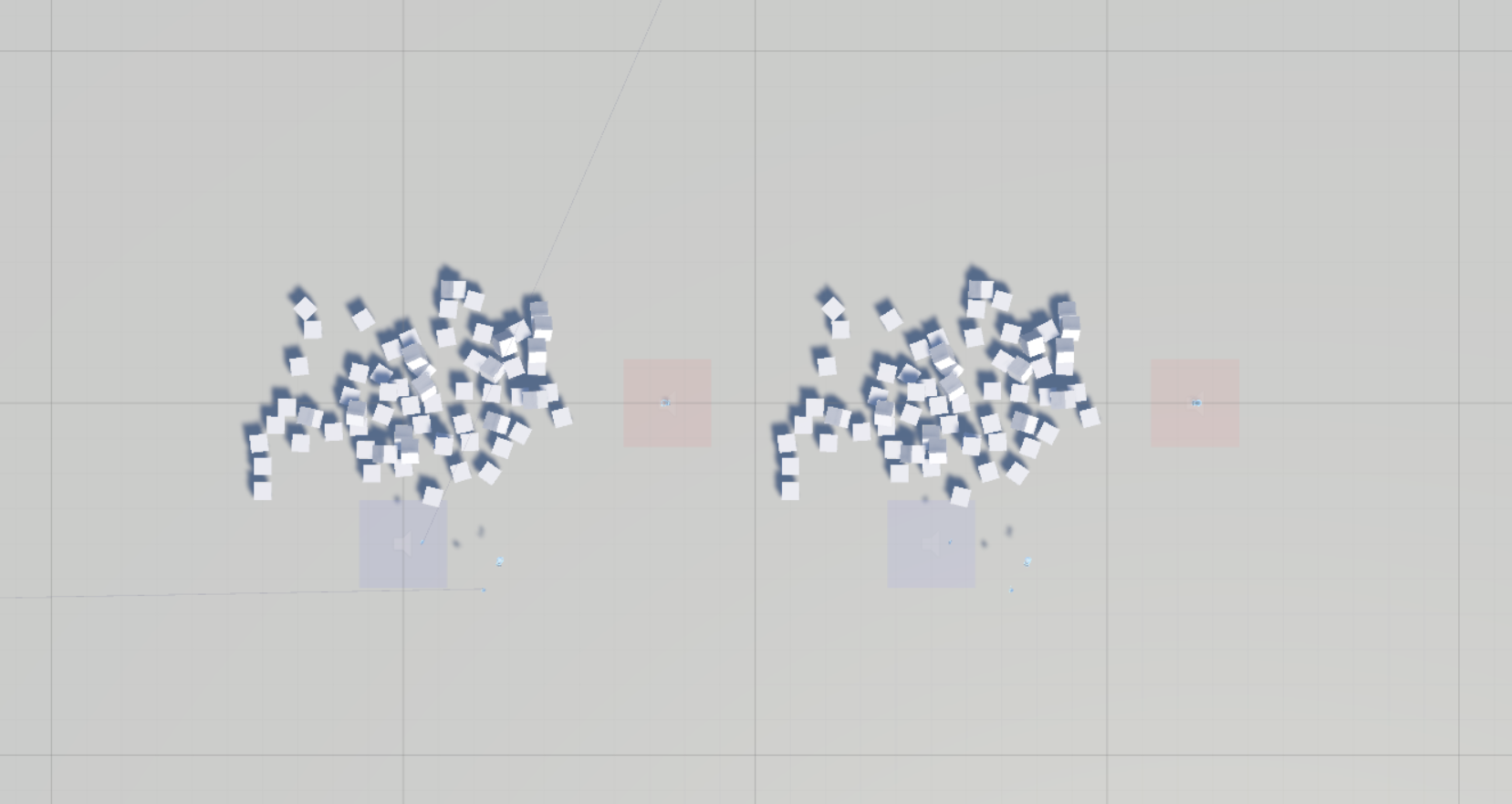

This happened because PhysX is not deterministic. Rather than tilting at non-deterministic windmills, I beat non-determinism by grabbing the state from the left side (authority) and applying it to the right side (non-authority) 10 times per second.

The state grabbed from each cube looks like this:

```
struct CubeState
{
    Vector3 position;
    Quaternion rotation;
    Vector3 linear_velocity;
    Vector3 angular_velocity;
};
```

I then apply this state to the simulation on the right: I simply snap the position, rotation, linear velocity, and angular velocity of each cube to the state captured from the left side.

This simple change is enough to keep the left and right simulations in sync. In the 1/10 of a second between updates, PhysX does not diverge enough to show any noticeable pops.

This proves that a state synchronization approach to networking can work with PhysX. (Sigh of relief.) The only problem, of course, is that sending uncompressed physics state uses far too much bandwidth...

Bandwidth optimization

To make the networked physics sample playable over the Internet, I needed to get bandwidth under control.

The easiest gain I found was simply to encode cubes at rest more efficiently. For example, instead of repeatedly sending (0,0,0) for the linear velocity and (0,0,0) for the angular velocity of cubes at rest, I send just one bit:

```
[position] (vector3)
[rotation] (quaternion)
[at rest] (bool)
<if not at rest>
{
    [linear_velocity] (vector3)
    [angular_velocity] (vector3)
}
```

This is a lossless technique, because it does not change the state sent over the network in any way. It is also extremely effective, because statistically most cubes are at rest most of the time.
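The layout above could be serialized along these lines. This is a minimal sketch: `BitStream`, `write_cube`, and `read_cube` are hypothetical names, floats are written as raw 32-bit values, and the stream stores one entry per bit for clarity rather than speed.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };

struct CubeState {
    Vec3 position;
    Quat rotation;
    Vec3 linear_velocity;
    Vec3 angular_velocity;
};

// Minimal bit stream: one vector entry per bit, written for clarity, not speed.
struct BitStream {
    std::vector<bool> bits;
    std::size_t read_pos = 0;

    void write_bool(bool b) { bits.push_back(b); }
    bool read_bool() { return bits[read_pos++]; }

    void write_float(float f) {
        std::uint32_t u;
        std::memcpy(&u, &f, sizeof u);
        for (int i = 0; i < 32; ++i) bits.push_back(((u >> i) & 1u) != 0);
    }
    float read_float() {
        std::uint32_t u = 0;
        for (int i = 0; i < 32; ++i)
            if (bits[read_pos++]) u |= (1u << i);
        float f;
        std::memcpy(&f, &u, sizeof f);
        return f;
    }
    void write_vec3(const Vec3& v) { write_float(v.x); write_float(v.y); write_float(v.z); }
    Vec3 read_vec3() {
        Vec3 v;
        v.x = read_float();
        v.y = read_float();
        v.z = read_float();
        return v;
    }
};

inline bool is_zero(const Vec3& v) { return v.x == 0.0f && v.y == 0.0f && v.z == 0.0f; }

// Writes position and rotation, then a single "at rest" bit; velocities are
// written only when the cube is moving.
void write_cube(BitStream& s, const CubeState& c) {
    s.write_vec3(c.position);
    s.write_float(c.rotation.x);
    s.write_float(c.rotation.y);
    s.write_float(c.rotation.z);
    s.write_float(c.rotation.w);
    const bool at_rest = is_zero(c.linear_velocity) && is_zero(c.angular_velocity);
    s.write_bool(at_rest);
    if (!at_rest) {
        s.write_vec3(c.linear_velocity);
        s.write_vec3(c.angular_velocity);
    }
}

CubeState read_cube(BitStream& s) {
    CubeState c{};  // velocities default to zero, which is the at-rest case
    c.position = s.read_vec3();
    c.rotation.x = s.read_float();
    c.rotation.y = s.read_float();
    c.rotation.z = s.read_float();
    c.rotation.w = s.read_float();
    if (!s.read_bool()) {
        c.linear_velocity = s.read_vec3();
        c.angular_velocity = s.read_vec3();
    }
    return c;
}
```

With uncompressed floats, a cube at rest costs 225 bits instead of 417, which is where the saving comes from before any lossy technique is applied.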

To optimize bandwidth further, we have to use lossy techniques. For example, we can reduce the precision of the physics state sent over the network by bounding position in some min/max range, quantizing it to a resolution of 1/1000 of a centimeter, and sending that quantized position as an integer value in a known range. The same basic approach works for linear and angular velocity. For rotation I used the smallest three representation of a quaternion.
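For readers unfamiliar with the smallest three representation, here is a minimal sketch of the general technique: drop the largest component of a unit quaternion, send its index plus the other three components quantized, and rebuild the dropped component on the other side. The 9 bits per component and the names `compress` and `decompress` are assumptions for the example, not values from the sample.

```cpp
#include <cmath>
#include <cstdint>

struct Quat { float x, y, z, w; };

struct SmallestThree {
    std::uint32_t largest;  // index of the dropped component (fits in 2 bits)
    std::uint32_t a, b, c;  // remaining components, quantized to kQuatBits each
};

constexpr int   kQuatBits = 9;            // assumption: bits per component
constexpr float kQuatMax  = 0.70710678f;  // the three smaller components lie in [-1/sqrt(2), 1/sqrt(2)]

SmallestThree compress(const Quat& q) {
    const float v[4] = { q.x, q.y, q.z, q.w };
    int largest = 0;
    for (int i = 1; i < 4; ++i)
        if (std::fabs(v[i]) > std::fabs(v[largest])) largest = i;
    // q and -q are the same rotation, so flip signs to make the dropped component positive.
    const float sign  = v[largest] < 0.0f ? -1.0f : 1.0f;
    const float scale = float((1u << kQuatBits) - 1);
    std::uint32_t out[3];
    int n = 0;
    for (int i = 0; i < 4; ++i) {
        if (i == largest) continue;
        float normalized = (v[i] * sign + kQuatMax) / (2.0f * kQuatMax);  // map to 0..1
        normalized = std::fmin(1.0f, std::fmax(0.0f, normalized));
        out[n++] = std::uint32_t(std::floor(normalized * scale + 0.5f));
    }
    return { std::uint32_t(largest), out[0], out[1], out[2] };
}

Quat decompress(const SmallestThree& s) {
    const float scale = float((1u << kQuatBits) - 1);
    const std::uint32_t in[3] = { s.a, s.b, s.c };
    float v[4];
    float sum = 0.0f;
    int n = 0;
    for (int i = 0; i < 4; ++i) {
        if (std::uint32_t(i) == s.largest) continue;
        v[i] = float(in[n++]) / scale * 2.0f * kQuatMax - kQuatMax;
        sum += v[i] * v[i];
    }
    // A unit quaternion has length 1, so the dropped component can be rebuilt.
    v[s.largest] = std::sqrt(std::fmax(0.0f, 1.0f - sum));
    return { v[0], v[1], v[2], v[3] };
}
```

At 9 bits per component this packs a rotation into 29 bits instead of 128, at the price of a small reconstruction error in every component.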

But while this saves bandwidth, it also adds risk. My concern was that when networking a tower of cubes (for example, 10 to 20 cubes stacked on top of each other), quantization could introduce errors that make the tower jitter. It might even make the tower unstable, and in a particularly annoying and hard-to-debug way: the tower looks fine to you and is unstable only in the remote view (that is, in the non-authority simulation), where another player is watching what you do.

The best solution I found was to quantize the state on both sides. That is, before each physics simulation step, I capture and quantize the physics state exactly as it would be when sent over the network, then apply this quantized state back to the local simulation.

Extrapolation from the quantized state on the non-authority side then exactly matches the authority simulation, minimizing jitter in tall towers. At least in theory.
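A minimal sketch of bounded position quantization and of snapping the local state to network precision might look like this. The ±64 m bounds and the function names are assumptions for the example; only the 1/1000 cm resolution comes from the text.

```cpp
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

constexpr double kMinPosition   = -64.0;     // hypothetical world bounds, in meters
constexpr double kMaxPosition   =  64.0;
constexpr double kUnitsPerMeter = 100000.0;  // 1/1000 cm resolution

// Clamp to the known range and convert to an integer number of steps.
std::uint32_t quantize(float meters) {
    const double clamped = std::fmin(kMaxPosition, std::fmax(kMinPosition, double(meters)));
    return std::uint32_t(std::llround((clamped - kMinPosition) * kUnitsPerMeter));
}

float dequantize(std::uint32_t q) {
    return float(kMinPosition + double(q) / kUnitsPerMeter);
}

// Applied on the authority side before each physics step, so both sides
// simulate forward from exactly the values that travel over the network.
void snap_to_network_precision(Vec3& p) {
    p.x = dequantize(quantize(p.x));
    p.y = dequantize(quantize(p.y));
    p.z = dequantize(quantize(p.z));
}
```

With these bounds a coordinate fits in 24 bits. Snapping is idempotent here: quantizing an already snapped value gives back the same integer, which is the property the both-sides approach relies on.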

Coming to rest

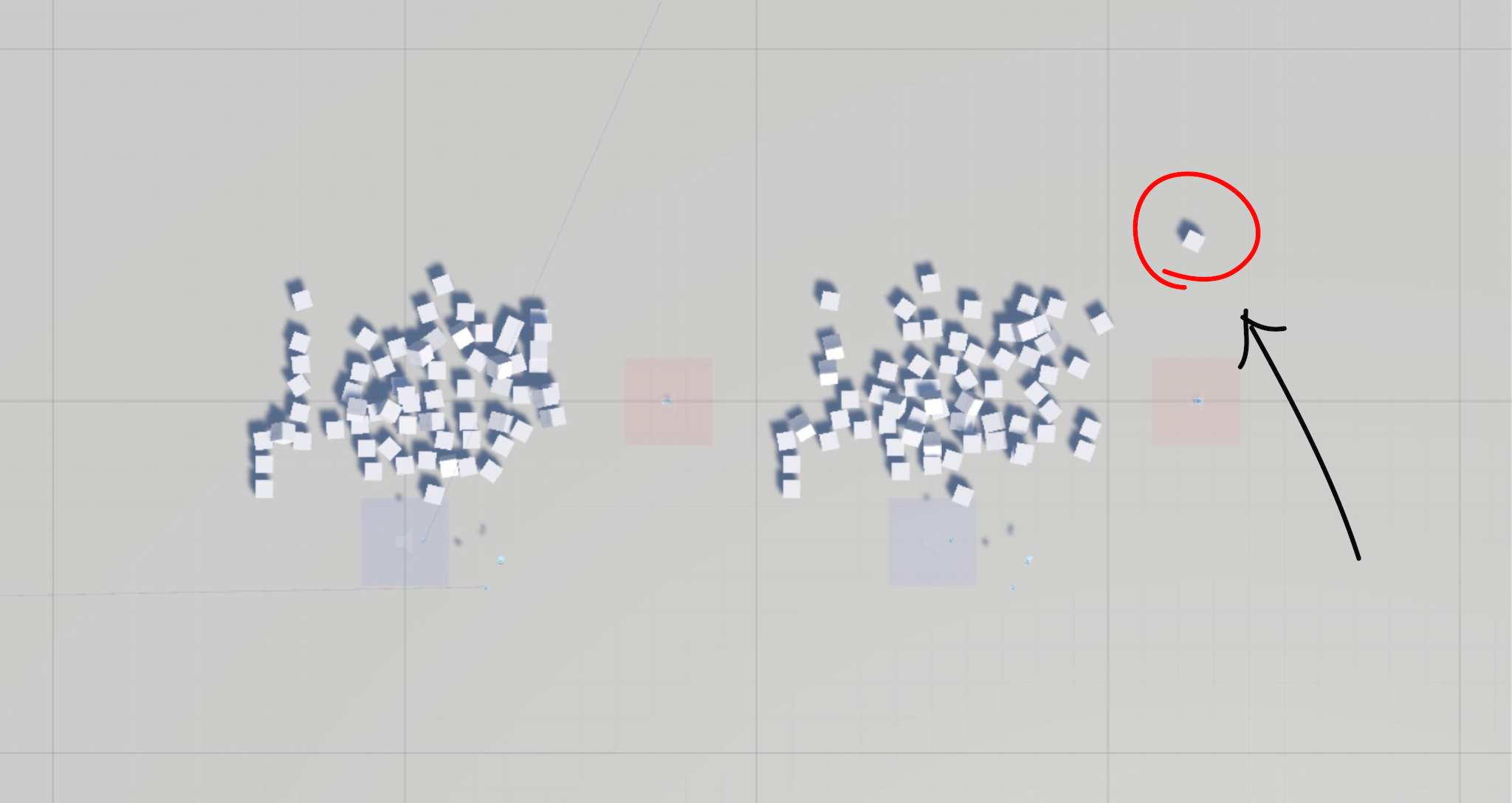

But quantizing the physics state had some very interesting side effects!

- PhysX does not really like being forced to change the state of every rigid body at the start of every frame, and it lets you know by eating a large share of CPU time.

- Quantization adds error to position, which PhysX tries very hard to correct, immediately pushing cubes out of penetration with huge pops!

- Rotations cannot be represented exactly either, which again causes cubes to penetrate each other. Interestingly, in this case cubes can get stuck in a feedback loop and start sliding across the floor!

- Although cubes in large towers appear to be at rest, careful inspection in the editor shows that they are actually jittering by tiny amounts, as each cube is quantized to just above the surface and falls back onto it.

There was not much I could do about PhysX CPU usage, but I did find a fix for the depenetration. I set maxDepenetrationVelocity on each rigid body, limiting the velocity at which cubes are pushed apart. One meter per second turned out to work well.

Getting cubes to come to rest reliably was much harder. The solution I found was to disable the PhysX at-rest calculation entirely and replace it with a ring buffer of positions and rotations per cube. If a cube has not moved or rotated by a significant amount in the last 16 frames, I force it to rest. Boom! The result: perfectly stable towers with quantization.
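The ring-buffer rest test could be sketched as follows. The 16-frame window comes from the text; the movement and rotation thresholds and the `RestDetector` name are assumptions for the example.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };
struct Pose { Vec3 position; Quat rotation; };

constexpr std::size_t kRestFrames = 16;      // window length from the article
constexpr float       kMaxMove    = 0.001f;  // hypothetical threshold, meters
constexpr float       kMaxTurn    = 0.0001f; // hypothetical threshold on 1 - |dot(q1, q2)|

class RestDetector {
public:
    // Records this frame's pose. Returns true when the cube has stayed within
    // both thresholds for the whole window and should be forced to rest.
    bool update(const Pose& pose) {
        history_[count_ % kRestFrames] = pose;
        ++count_;
        if (count_ < kRestFrames) return false;  // not enough history yet
        for (const Pose& old : history_) {
            const float dx = old.position.x - pose.position.x;
            const float dy = old.position.y - pose.position.y;
            const float dz = old.position.z - pose.position.z;
            const float moved = std::sqrt(dx * dx + dy * dy + dz * dz);
            const float dot = old.rotation.x * pose.rotation.x +
                              old.rotation.y * pose.rotation.y +
                              old.rotation.z * pose.rotation.z +
                              old.rotation.w * pose.rotation.w;
            const float turned = 1.0f - std::fabs(dot);
            if (moved > kMaxMove || turned > kMaxTurn) return false;
        }
        return true;
    }

private:
    std::array<Pose, kRestFrames> history_{};
    std::size_t count_ = 0;
};
```

One detector per cube is updated every physics frame; when `update` returns true, the caller would zero the velocities and put the rigid body to sleep.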

This may seem like a hack, but without access to the PhysX source code, and without the expertise to rewrite the PhysX solver and at-rest calculations, I did not see any other option. I would be happy to be proven wrong, so if you find a better solution, please let me know.

Priority accumulator

Another major bandwidth optimization was to send only a subset of cubes in each packet. This gave me precise control over the amount of data sent: I could set a maximum packet size and send only the set of updates that fit into each packet.

Here is how it works in practice:

- Each cube has a priority score, which is calculated every frame. The higher the value, the more likely the cube is to be sent. Negative values mean "this cube does not need to be sent".

- If the priority score is positive, it is added to the cube's priority accumulator. This value persists between simulation updates, so the accumulator grows every frame, and the values of higher priority cubes grow faster than those of lower priority cubes.

- Negative priority scores reset the priority accumulator to -1.0.

- When a packet is sent, cubes are sorted from the highest priority accumulator value to the lowest. The first n cubes become the set of cubes that can potentially be included in the packet. Objects with negative priority accumulator values are excluded from the list.

- The packet is written, and cubes are serialized into it in order of importance. Not every state update will necessarily fit, since cubes have a variable-size encoding that depends on their current state (at rest, not at rest, and so on). Packet serialization therefore returns a flag per cube indicating whether it was included in the packet.

- The priority accumulator values of cubes sent in the packet are reset to 0.0, giving other cubes a fair chance of being included in the next packet.
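The steps above can be sketched as two small functions. The names, the per-cube bit sizes passed in as `encoded_bits`, and the decision to restart a reset accumulator from zero are assumptions for the example.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Apply this frame's priority scores. A negative score resets the accumulator
// to -1; a positive score is added. Restarting from zero after a reset is an
// assumption of this sketch.
void accumulate(std::vector<float>& acc, const std::vector<float>& scores) {
    for (std::size_t i = 0; i < acc.size(); ++i) {
        if (scores[i] < 0.0f) acc[i] = -1.0f;
        else                  acc[i] = std::max(acc[i], 0.0f) + scores[i];
    }
}

// Pick cubes for one packet. encoded_bits[i] is the (hypothetical) size of
// cube i's update; returns the indices actually written and resets their
// accumulators to zero.
std::vector<std::size_t> build_packet(std::vector<float>& acc,
                                      const std::vector<int>& encoded_bits,
                                      std::size_t max_candidates,
                                      int packet_budget_bits) {
    std::vector<std::size_t> order;
    for (std::size_t i = 0; i < acc.size(); ++i)
        if (acc[i] >= 0.0f) order.push_back(i);  // negative means "do not send"
    std::stable_sort(order.begin(), order.end(),
                     [&acc](std::size_t a, std::size_t b) { return acc[a] > acc[b]; });
    if (order.size() > max_candidates) order.resize(max_candidates);

    std::vector<std::size_t> sent;
    int used = 0;
    for (std::size_t i : order) {
        if (used + encoded_bits[i] > packet_budget_bits) continue;  // does not fit
        used += encoded_bits[i];
        sent.push_back(i);
        acc[i] = 0.0f;  // sent cubes start over, so others get their turn
    }
    return sent;
}
```

Cubes that were candidates but did not fit keep their accumulated value, so they move toward the front of the queue for the next packet.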

For this demo, I found it worthwhile to significantly boost the priority of cubes recently involved in high-energy collisions, since, because of their non-deterministic results, high-energy collisions were one of the largest sources of divergence. I also boosted the priority of cubes recently thrown by players.

Somewhat counterintuitively, reducing the priority of cubes at rest gave bad results. My theory is that because the simulation runs on both sides, at-rest cubes could get slightly out of sync and not be corrected quickly enough, causing divergence in other cubes that collided with them.

Delta compression

Even with all of the techniques above, bandwidth was still not optimized well enough. For a four-player game, I wanted to bring the cost per player below 256 kbps, so that the whole simulation could fit into 1 Mbps for the host.

I had one last trick up my sleeve: delta compression.

Delta compression is often used in first-person shooters: the entire state of the world is compressed relative to a previous state. In this technique, a previous complete world state, or “snapshot”, is used as the reference point, and the set of differences, or delta, between the reference point and the current snapshot is generated and sent to the client.

This technique is (relatively) easy to implement, because the state of every object is included in each snapshot. It is enough for the server to track the most recent snapshot received by the client and generate a delta between that snapshot and the current one.

However, with the priority accumulator, packets do not contain updates for every object, and delta encoding becomes more complicated. The server (or the authority side) can no longer simply encode cubes relative to a previous snapshot number. Instead, the reference point must be specified per cube, so that the receiver knows which state each cube is encoded relative to.

The supporting systems and data structures also have to become much more complicated:

- A reliability system is needed that can report back to the sender which packets were received, not just the number of the most recently received snapshot.

- The sender must track the states included in each packet it sends, so that it can map packet-level acknowledgments to the states that were sent and update the most recently acknowledged state per cube. The next time a cube is sent, its delta is encoded relative to this state as the reference point.

- The receiver must keep a ring buffer of received states per cube, so that it can reconstruct the current state of a cube from a delta by looking up the reference point in this ring buffer.
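The sender-side bookkeeping in the second bullet could be sketched like this. The class name, the 16-bit sequence numbers, and the container choices are assumptions for the example; a real implementation would also need to expire packets that are never acknowledged.

```cpp
#include <cstdint>
#include <unordered_map>
#include <utility>
#include <vector>

// Which cube state went into a packet (hypothetical record layout).
struct SentEntry {
    int           cube_id;
    std::uint16_t state_sequence;
};

class BaselineTracker {
public:
    // Remember what each outgoing packet contained.
    void on_packet_sent(std::uint16_t packet_sequence, std::vector<SentEntry> entries) {
        in_flight_[packet_sequence] = std::move(entries);
    }

    // Map a packet-level ack back to per-cube states, advancing each cube's
    // most recently acknowledged state (never moving it backwards).
    void on_packet_acked(std::uint16_t packet_sequence) {
        auto it = in_flight_.find(packet_sequence);
        if (it == in_flight_.end()) return;
        for (const SentEntry& e : it->second) {
            auto b = baseline_.find(e.cube_id);
            if (b == baseline_.end() || newer(e.state_sequence, b->second))
                baseline_[e.cube_id] = e.state_sequence;
        }
        in_flight_.erase(it);
    }

    // Returns false if the cube has no acknowledged state yet, in which case
    // it would have to be sent in full rather than as a delta.
    bool baseline_for(int cube_id, std::uint16_t& out) const {
        auto it = baseline_.find(cube_id);
        if (it == baseline_.end()) return false;
        out = it->second;
        return true;
    }

private:
    static bool newer(std::uint16_t a, std::uint16_t b) {
        const std::uint16_t diff = static_cast<std::uint16_t>(a - b);
        return diff != 0 && diff < 0x8000;  // wrap-around aware comparison
    }
    std::unordered_map<std::uint16_t, std::vector<SentEntry>> in_flight_;
    std::unordered_map<int, std::uint16_t> baseline_;
};
```

The receiver mirrors this with a small ring buffer of past states per cube, indexed by the same state sequence, so it can look up the reference point named in each delta.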

But in the end the extra complexity is worth it, because this system combines the flexibility of dynamically adjusting bandwidth usage with the order-of-magnitude bandwidth improvement that delta encoding brings.

Delta encoding

Now that all the supporting structures are in place, I need to actually encode the difference between a cube and its previous state at the reference point. How is this done?

The simplest case is a cube whose state has not changed relative to the reference point, which is encoded with just one bit: no change. This is also the easiest way to reduce bandwidth, because at any given time most cubes are at rest and so do not change state.

A more advanced strategy is to encode the difference between the current and reference values, aiming to encode small differences with as few bits as possible. For example, the position delta might be (-1, +2, +5) relative to the reference point. I found that this works well for linear values but breaks down for deltas of the smallest three quaternion representation, because the largest component of a quaternion often changes between the reference point and the current rotation.
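A minimal sketch of this kind of encoding for a quantized position: one bit for "unchanged", otherwise a small signed delta per component when it fits, and the full value when it does not. The bit widths and names are assumptions for the example, and full values are assumed to be non-negative quantized coordinates.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct IVec3 { std::int32_t v[3]; };  // quantized position

// Tiny bit buffer for the example: one vector entry per bit.
struct Bits {
    std::vector<bool> data;
    std::size_t pos = 0;
    void write(std::uint32_t value, int count) {
        for (int i = 0; i < count; ++i) data.push_back(((value >> i) & 1u) != 0);
    }
    std::uint32_t read(int count) {
        std::uint32_t value = 0;
        for (int i = 0; i < count; ++i)
            if (data[pos++]) value |= (1u << i);
        return value;
    }
};

constexpr int          kSmallBits  = 6;                      // hypothetical: deltas in [-32, 31]
constexpr std::int32_t kSmallRange = 1 << (kSmallBits - 1);
constexpr int          kFullBits   = 24;                     // hypothetical absolute coordinate size

void encode_delta(Bits& out, const IVec3& base, const IVec3& cur) {
    std::int32_t d[3];
    bool unchanged = true, small = true;
    for (int i = 0; i < 3; ++i) {
        d[i] = cur.v[i] - base.v[i];
        unchanged = unchanged && d[i] == 0;
        small = small && d[i] >= -kSmallRange && d[i] < kSmallRange;
    }
    out.write(unchanged ? 1u : 0u, 1);  // the one-bit "no change" case
    if (unchanged) return;
    out.write(small ? 1u : 0u, 1);
    for (int i = 0; i < 3; ++i) {
        if (small) out.write(std::uint32_t(d[i] + kSmallRange), kSmallBits);
        else       out.write(std::uint32_t(cur.v[i]), kFullBits);
    }
}

IVec3 decode_delta(Bits& in, const IVec3& base) {
    if (in.read(1) == 1u) return base;
    const bool small = in.read(1) == 1u;
    IVec3 cur{};
    for (int i = 0; i < 3; ++i) {
        cur.v[i] = small ? base.v[i] + std::int32_t(in.read(kSmallBits)) - kSmallRange
                         : std::int32_t(in.read(kFullBits));
    }
    return cur;
}
```

With these widths, the (-1, +2, +5) example costs 20 bits, an unchanged position costs 1 bit, and a position that moved too far falls back to 74 bits.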

Furthermore, while encoding differences gives some gains, it does not deliver the order-of-magnitude improvement I was hoping for. Grasping at straws, I came up with a delta encoding strategy that adds prediction. In this approach, I predict the current state from the reference point, assuming the cube moves ballistically under acceleration due to gravity.

The prediction was complicated by the fact that the predictor had to be written in fixed point, because floating-point calculations are not guaranteed to be deterministic. But after a few days of experimentation, I was able to write a ballistic predictor for position, linear velocity, and angular velocity that matched the PhysX integrator within quantization resolution about 90% of the time.

These lucky cubes were encoded with another single bit: perfect prediction, which gave another order-of-magnitude improvement. For cases where the prediction did not match exactly, I encoded a small error offset relative to the prediction.
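To illustrate the idea, here is a minimal integer-only ballistic predictor. The units, the 60 steps per second, and the velocity-then-position update order are assumptions for the example; the predictor in the sample was tuned to match PhysX and is not reproduced here.

```cpp
#include <cstdint>

// Quantized state in integer units (hypothetical: 1000 units per meter).
struct FixedState {
    std::int64_t px, py, pz;  // position, units
    std::int64_t vx, vy, vz;  // velocity, units per second
};

constexpr std::int64_t kStepsPerSecond = 60;    // hypothetical simulation rate
constexpr std::int64_t kGravity        = 9810;  // units per second squared (about 9.81 m/s^2)

// Advance the reference state by a number of steps using only integer math,
// so sender and receiver compute bit-identical results.
FixedState predict(FixedState s, int steps) {
    for (int i = 0; i < steps; ++i) {
        s.vy -= kGravity / kStepsPerSecond;  // update velocity first,
        s.px += s.vx / kStepsPerSecond;      // then move by the new velocity
        s.py += s.vy / kStepsPerSecond;
        s.pz += s.vz / kStepsPerSecond;
    }
    return s;
}

// One bit on the wire when this returns true; otherwise a small error offset
// (actual minus predicted) is sent instead.
bool is_perfect_prediction(const FixedState& predicted, const FixedState& actual) {
    return predicted.px == actual.px && predicted.py == actual.py &&
           predicted.pz == actual.pz && predicted.vx == actual.vx &&
           predicted.vy == actual.vy && predicted.vz == actual.vz;
}
```

The receiver runs the same `predict` on its copy of the reference state, so a single "perfect prediction" bit is enough to reconstruct the full state.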

Despite all the time I spent on it, I could not find a good way to predict rotation. I blame the smallest three quaternion representation, which is numerically very unstable, especially in fixed point. In the future, I would not use the smallest three representation for quantized rotations.

It was also painfully obvious to me that when encoding differences and error offsets, a bit packer is not the best way to read and write these values. I am sure that something like a range coder or an arithmetic compressor, which can represent fractional bits and dynamically adapt its model to the differences, would give much better results, but at this point I was already within my bandwidth budget and could not justify further experiments.

Synchronizing avatars

After a few months of work, I had made the following progress:

- Proof that state synchronization works with Unity and PhysX

- Stable cube towers in the remote view when state is quantized on both sides

- Bandwidth reduced to the point where four players fit into 1 Mbps

The next thing I needed to implement was interaction with the simulation through the Touch controllers. This part was a lot of fun and was my favorite stage of the project.

I hope you enjoy these interactions. It took a lot of experimentation and fine-tuning to make simple actions such as picking up, throwing, and passing cubes from hand to hand feel right. Even the settings for throwing took an insane amount of tuning to work well while still allowing tall towers to be built with great accuracy.

As far as networking is concerned, though, the game code does not matter. All that matters for networking is that avatars are represented by a head and two hands, driven by the tracked headset and by the positions and orientations of the Touch controllers.

To synchronize them, I captured the position and orientation of the avatar components in FixedUpdate along with the rest of the physics state, then applied this state to the avatar components in the remote view.

But when I first tried this, it looked absolutely awful. Why?

After some debugging, I found that the avatar state was sampled from the tracking hardware at the render frame rate in Update and applied on the other machine in FixedUpdate, which caused jitter because the avatar sample time did not match the current time in the remote view.

To fix this, I stored the difference between physics time and render time when sampling the avatar state and included it with the avatar state in every packet. I then added a jitter buffer with 100 ms of delay to received packets, which both removed the network jitter caused by variation in packet delivery times and made it possible to interpolate between avatar states to reconstruct a sample at the correct time.
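A jitter buffer with interpolation can be sketched in a few lines. This version handles only position and uses linear interpolation; the class and field names are assumptions for the example, and orientation would additionally need quaternion interpolation.

```cpp
#include <deque>

// One received avatar sample, stamped with the sender-side time at which it
// was taken (in seconds). Only position is shown here.
struct AvatarSample {
    double time;
    float x, y, z;
};

class JitterBuffer {
public:
    explicit JitterBuffer(double delay_seconds = 0.1) : delay_(delay_seconds) {}

    // Store a received sample; out-of-order or duplicate samples are dropped.
    void push(const AvatarSample& s) {
        if (samples_.empty() || s.time > samples_.back().time) samples_.push_back(s);
    }

    // Reconstruct the avatar at (now - delay) by interpolating between the two
    // samples that bracket that time. Returns false if they are not available.
    bool sample(double now, AvatarSample& out) {
        const double t = now - delay_;
        while (samples_.size() >= 2 && samples_[1].time <= t) samples_.pop_front();
        if (samples_.size() < 2 || samples_.front().time > t) return false;
        const AvatarSample& a = samples_[0];
        const AvatarSample& b = samples_[1];
        const float u = float((t - a.time) / (b.time - a.time));
        out.time = t;
        out.x = a.x + (b.x - a.x) * u;
        out.y = a.y + (b.y - a.y) * u;
        out.z = a.z + (b.z - a.z) * u;
        return true;
    }

private:
    double delay_;
    std::deque<AvatarSample> samples_;
};
```

Playing back 100 ms in the past trades a little latency on remote avatars for smooth motion, since a late packet usually still arrives before its sample time is needed.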

To synchronize cubes held by avatars, while a cube is parented to an avatar's hand I set its priority score to -1, so that its state is not sent in the regular physics state updates. While a cube is attached to a hand, I include its id and its relative position and rotation as part of the avatar state. In the remote view, cubes are attached to the avatar's hand when the first avatar state arrives in which the cube is parented to it, and detached when regular physics state updates resume, which corresponds to the cube being thrown or released.

Bidirectional flow

Now that the player could interact with the scene using the Touch controllers, I started thinking about how a second player could interact with the scene.

To avoid going insane from constantly swapping between two headsets, I extended my Unity test scene with the ability to switch between the contexts of the first player (left) and the second player (right).

I called the first player the "host" and the second the "guest". In this model, the host is the “real” simulation and by default synchronizes all cubes to the guest player, but when the guest interacts with the world, the guest takes authority over the objects involved and sends their state back to the host player.

For this to work without creating obvious conflicts, both the host and the guest must check the local state of a cube before taking authority or ownership. For example, the host will not take ownership of a cube that the guest already owns, and vice versa.


Source: https://habr.com/ru/post/352382/
