You don't need autotest developers.

Now that agile methodologies and DevOps are being adopted everywhere, no one doubts that regression testing should be automated, especially if the company talks about Continuous Delivery. Everyone has rushed to hire autotest developers, and the market has overheated as a result.

In this article I will argue that the autotest developer is actually not such an important role in a team. You don't need one, especially if you are adopting Scrum, and you can implement agile and DevOps without these people. So if someone tells you that their team tests everything manually because, for some reason, they have no autotest developer, don't believe them. They test manually because they are too lazy to do it any other way, or don't know how.

The problem with a separate autotest development team

At Alfa-Bank I was responsible for developing test automation, and within a little over two years we managed to abandon the autotest developer position entirely. A caveat up front: I am talking about the divisions that build digital products, not about the bank as a whole.

When I arrived, the organizational structure and the process closely resembled a picture familiar to many:

There is:

- a development department / team;

- a testing department / team;

- an analysis department / team.

All of them work on several products / projects at once. I was a little luckier: people had already started thinking about test automation by then, so there was also a test automation team.

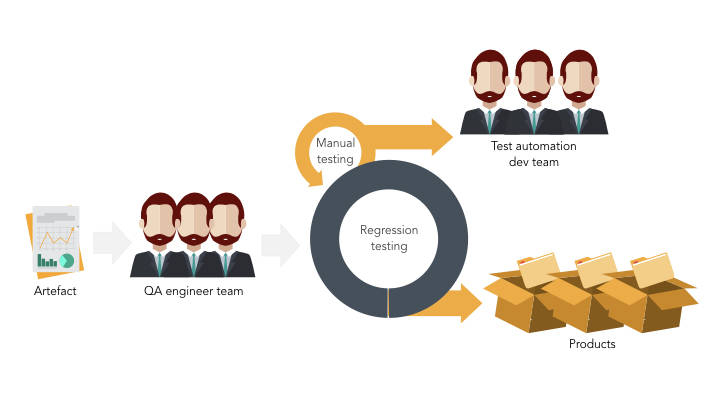

Managing these functional silos usually requires a Project Manager. With this setup, our testing process looked like this:

- The testing team received an artifact from the development team and ran functional testing. At the same time, the automated regression suite was launched if one existed for this product, but most cases were tested manually.

- The artifact was then deployed to production, where customers were already using it.

- Only after that were tasks created in Jira for the autotester team (from here on I will call autotest developers "autotesters").

- Those tasks were completed 1 to 4 iterations later.

As a rule, test automation was the joint work of a tester and an autotest developer: one designs the test cases and tests the application manually, the other automates those test cases. Their interaction is coordinated by the project manager (PM) and a whole army of other managers: test managers, test leads and so on.

I won't go into what is wrong with functional silos. Plenty is wrong with them, if only because each department produces "puzzle-piece artifacts" that are part of a future feature / product, and team leads and PMs constantly have to keep track of the process: hand the puzzle piece over to the next department, make sure departments talk to each other, and so on.

So I won't dwell on it here.

Let's go straight to the more common setup: product teams plus a separate service team of autotest developers. By then some of our teams worked in Kanban and some in Scrum, yet the problems stayed the same. The teams now had all the roles needed to develop a product / project, but the autotest developers still sat in a separate automation team. This arrangement was a necessary evil for us, because:

- we did not have enough autotesters to provide every team with test automation;

- because of a large technical debt, the autotesters could not just drop it and switch to what the teams needed right now;

- some systems had automated tests written long ago that needed maintenance, and the teams lacked the expertise for it.

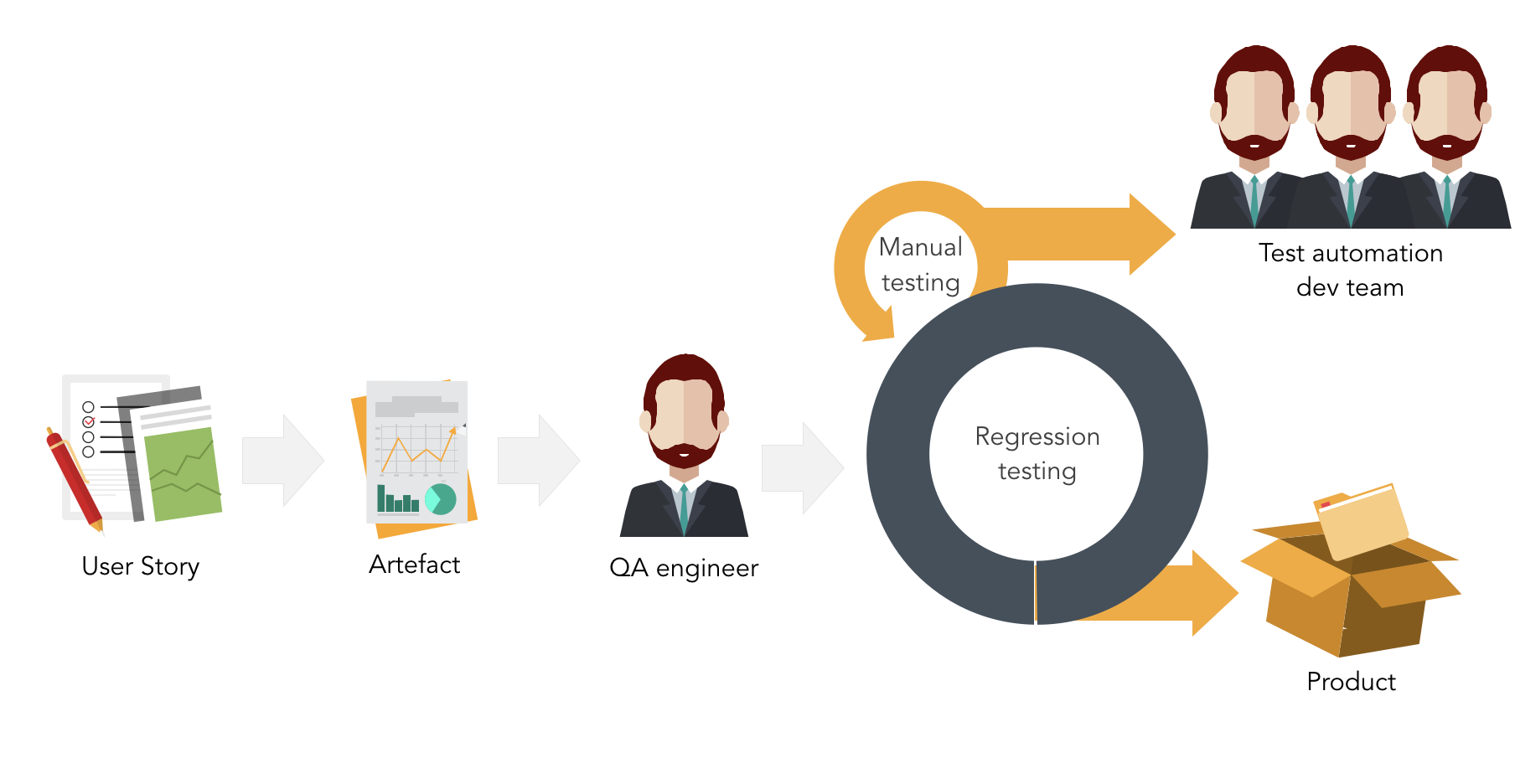

Our testing process did change, and now looked like this:

- The team's tester receives an artifact, which is a small part of a user story.

- The tester performs acceptance testing and launches the automated regression.

- If the regression is not fully automated, the rest of it is tested manually.

- The artifact is then deployed to production, and the tester hands the task over to the autotesters for automation.

After that came the same wait for the test cases to be automated. So the problems remained; in fact, there were more of them, because now the teams could not close their sprints either, with test automation tasks still pending.

Let's sum up the problems that a separate autotester team left unresolved.

Problems with a dedicated autotester team

- Autotests deliver no quick value. They were usually written one or more sprints late because there was a queue for them, so the team got value from them only as automated regression. Very often, by the time a feature was automated, the team had already reworked it, and the autotests quickly became irrelevant.

- The cost of the product grew. This follows from the first point: because manual testing shrank only after a significant delay, the team had to pay for test automation while relying on its deferred value. On top of that, the intermediate layer of management lengthened the feedback loop, which also raised the cost of the product.

- The testing process was not transparent. No one in the teams knew what the autotesters were doing, and no one understood their workload or how they set priorities.

- The autotesters were "bought-in" people (outsourced). The Scrum Master (SM) or Product Owner (PO) saw them as visitors who should not be part of the team. And the autotesters themselves didn't really want to join the team: what guarantee was there that their employer wouldn't move them to another project yet again?

- The business did not understand why it should pay now in the hope of a long-term payoff.

Now, a question: what should a PM / SM do if their PO has not budgeted for automation? Or if the product has been in development for about three months and they still cannot find an autotest developer for it? Or if the autotester team is short of people because someone is on vacation or sick? Forget about automation, and long live a two-week manual regression? Or wait until enough people turn up, and meanwhile take financial losses from launching the product late, or, conversely, from releasing it in a buggy state?

So clearly a dedicated autotester team does not work in an agile environment, and we have to find other ways to restructure the process to achieve test automation.

To do that, let's look at how autotest developers work and interact with other members of the product teams.

How testers work with autotesters

- Testers are customers for autotest developers.

- The autotest team is a service team for the product teams. It has its own team lead, who relays requests between the testers and the autotesters. In the worst case, the testers also have their own team lead, who relays the testers' requests to the autotest team lead. What we actually get is a game of broken telephone, a long feedback loop and close to zero efficiency. It is usually people from organizations like this who give conference talks about how test automation is slow and expensive and how there is no shame in testing only manually.

- Even if communication is not that bad, you can run into other problems, such as the autotest developer(s) being busy with other projects at the moment and unable to respond to the tester's requests right away.

- Testers do not trust the autotests and repeat the automated suites manually.

How developers "collaborate" with autotesters

- In theory, developers are also customers of the test automation team, but in practice they rarely sent it any requests.

When a developer creates a library to solve a problem in their product and it could be useful for testing, it is in their interest to hand this tool over to the autotesters. But with a separate team, and especially one with its own team lead, many developers simply don't want to get drawn into the back-and-forth. As a result, we get the following problems:

- Duplication of automation tools / frameworks due to the lack of communication with developers.

- The same problems are often solved with different tools. For example, a test needs to pull logs from Elasticsearch. The developers already have their own library for this, but instead of reusing and extending the existing tool, the autotesters usually write their own or find a new one.

- Autotesters use tools that developers may later, for one reason or another, refuse to support or develop. For example, our autotest developers used Maven as the build tool. When we needed to integrate test automation into the development process, we had to abandon the old framework completely, because migrating it to Gradle was harder than writing a new one, and the developers could not switch to Maven: our entire infrastructure and environment were already built around Gradle.

How management "collaborates" with autotesters

Management interacts with autotest developers mainly by requesting regular performance reports that should answer standard questions, such as:

- What do we get from test automation?

- How much does it cost the product?

- Given the cost, do we gain enough from test automation?

- What could go wrong if funding for automation is suspended?

- How much does it cost to maintain the autotests already written?

As a result, these are the main ways test automation fails when dedicated autotesters do it:

- No visibility into how much is covered by automated tests.

- We build things that are not used when testing the product. For example, test cases were written or automated but never added to the regression suite that actually gets run. Such autotests bring no value.

- We don't analyze test results: developed -> ran -> never looked at the results. Then, after a while, the team discovers autotests that have been failing because the test data is badly outdated. And that is the best case.

- Unstable test cases: a lot of money is constantly spent on stabilizing them. This comes from not being immersed in the context of the application we are trying to test automatically.
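The last two failures share a root cause: nobody looks at the run history. As an illustration only, here is a minimal Java sketch of the kind of triage a team could run over its own results, assuming a hypothetical in-memory history of pass/fail outcomes per test (not the format of any real reporting tool). Tests that always fail are flagged as broken (for example, by outdated test data), and tests that both pass and fail are flagged as flaky.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class RunTriage {

    /** Outcome of looking at a test's recent runs. */
    enum Verdict { STABLE, BROKEN, FLAKY }

    /**
     * Classifies each test by its recent results (true = passed):
     * only passes -> STABLE, only failures -> BROKEN (e.g. outdated test data),
     * a mix of both -> FLAKY. Tests with no recorded runs are skipped.
     */
    static Map<String, Verdict> triage(Map<String, List<Boolean>> history) {
        Map<String, Verdict> verdicts = new TreeMap<>(); // sorted by test name for stable output
        history.forEach((test, runs) -> {
            if (runs.isEmpty()) {
                return; // nothing to judge yet
            }
            boolean anyPass = runs.contains(true);
            boolean anyFail = runs.contains(false);
            Verdict verdict = anyPass && anyFail ? Verdict.FLAKY
                    : anyFail ? Verdict.BROKEN
                    : Verdict.STABLE;
            verdicts.put(test, verdict);
        });
        return verdicts;
    }

    public static void main(String[] args) {
        // Hypothetical results of the last five nightly regression runs
        Map<String, List<Boolean>> lastRuns = Map.of(
                "loginWorks", List.of(true, true, true, true, true),
                "exchangeRateShown", List.of(false, false, false, false, false),
                "transferCompletes", List.of(true, false, true, true, false));
        System.out.println(triage(lastRuns));
        // {exchangeRateShown=BROKEN, loginWorks=STABLE, transferCompletes=FLAKY}
    }
}
```

A team that owns its tests would look at the BROKEN list first (usually stale data or a changed feature) and treat FLAKY tests as a signal that the test does not yet understand the application well enough.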

Finding a solution to the problem

Attempt #1: one tester and one autotester per team

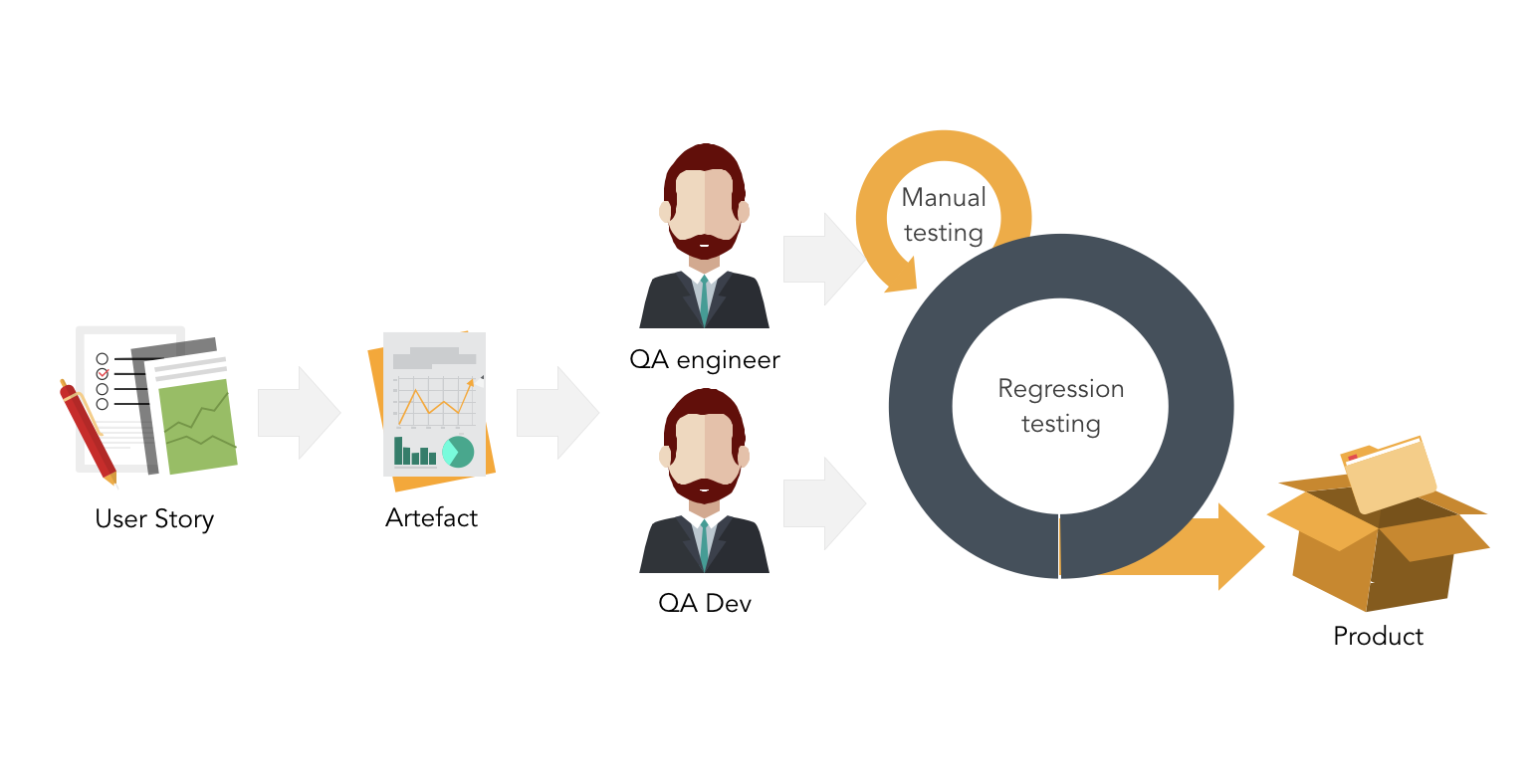

The first thing that occurred to me was to try to give each team an autotest developer. The key word is "try": over a whole year I interviewed more than 200 candidates, and only three of them joined us. Nevertheless, we decided to at least pilot a process with the autotest developer inside the team. Our testing process changed again:

- When an artifact came in for testing, the tester did acceptance testing.

- The tests for this artifact were then automated immediately.

- As a result, our entire regression was automated.

- The artifact was deployed to production with its autotests already in place.

Everything seemed perfect. But after a couple of sprints we found that:

- The product / project did not generate enough work to keep the autotester busy, even counting the team's many meetings, which took about 10 hours of a one-week sprint on average.

- At the same time, the autotester refused to do functional testing if you tried to make them the only tester on the team.

- The tester, on the other hand, was not skilled enough to write autotests on their own.

- When we offered the autotest developer work on the application's services, he declined because he lacked the skills. And, oddly enough, not all autotesters are interested in growing as developers.

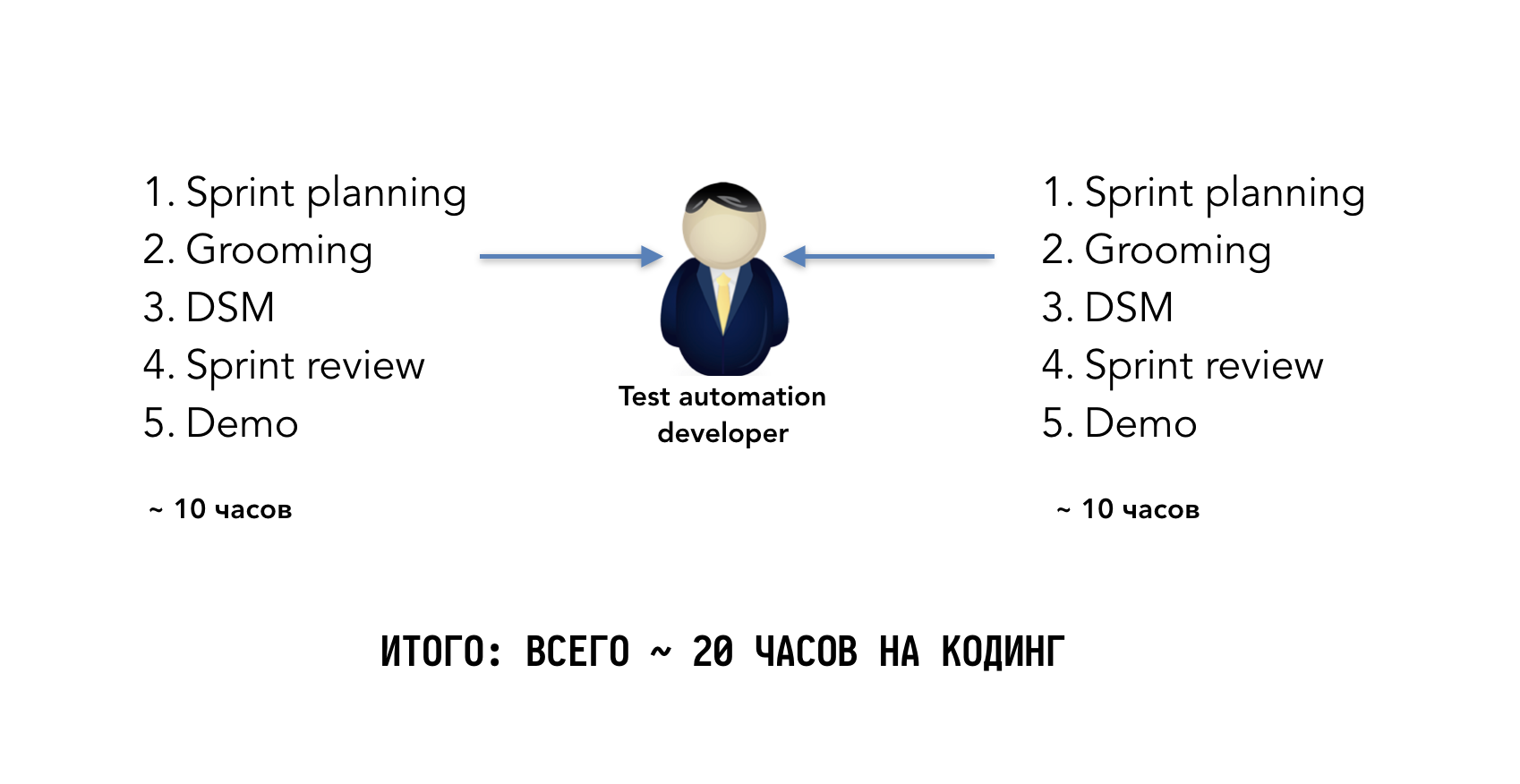

Attempt #2: the autotest developer is part of 2-3 teams

Then I thought: if the autotester is only about 50% busy, why not share them between two teams? Here is what happened:

The developer ended up with about 20 hours of coding per one-week sprint, and the problem was simple: he just didn't have enough time. Switching context between products meant he could no longer get into the automation work quickly, and once again autotest development lagged behind product development. On top of that, it was very hard for the teams to coordinate their schedules so that meetings didn't overlap and the developer could attend all of them.

Yet he could not skip these meetings either: without them he lost the context of the application being developed, and automation became less effective.
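The time squeeze follows from simple arithmetic. Assuming a 40-hour working week (the article gives only the meeting load, about 10 hours per team per one-week sprint), an autotester who attends the meetings of n teams has this much time left for coding:

$$
\begin{aligned}
T_{\text{coding}} &= T_{\text{week}} - n \cdot T_{\text{meetings}} \\
n = 2:\quad T_{\text{coding}} &= 40 - 2 \cdot 10 = 20\ \text{h} \;(\approx 10\ \text{h per team}) \\
n = 3:\quad T_{\text{coding}} &= 40 - 3 \cdot 10 = 10\ \text{h} \;(\approx 3.3\ \text{h per team})
\end{aligned}
$$

With two teams this matches the roughly 20 hours of coding described above; with three, meetings would take three quarters of the week.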

Attempt #3, the successful one: teach testers to write autotests

Then I hypothesized that if we trained testers to develop autotests on their own, we would solve all our pain points. So where did we start?

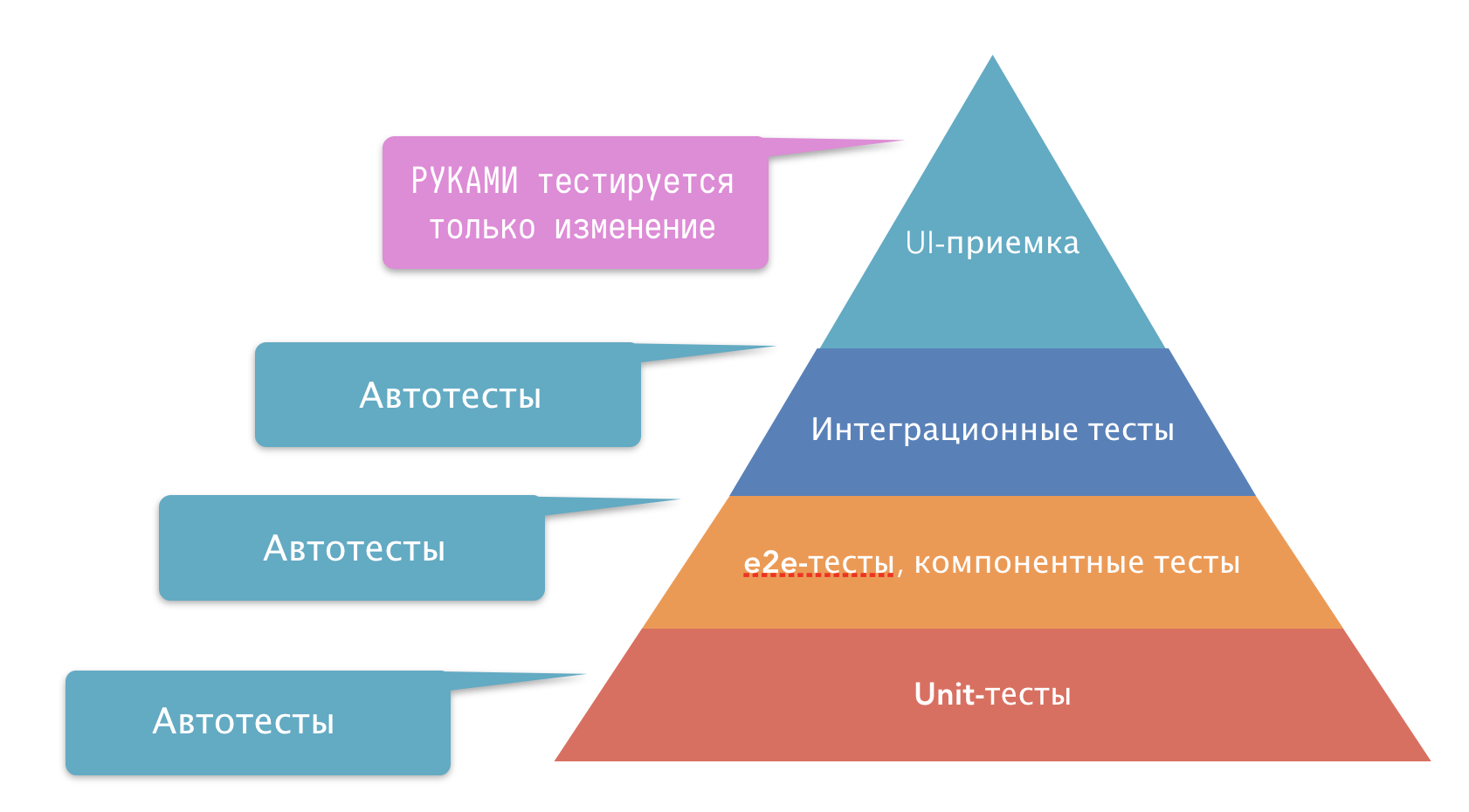

- First, we built a proper testing pyramid. Following it, our test strategy was to have tests on each layer of the application, with integration tests between the layers. Only acceptance tests are run manually, and they are automated immediately after acceptance. So in the next iteration the tester tests ONLY the change.

- We "spread" test automation across the team. Since there were different kinds of tests, different team members developed them: API tests were written by the API developer, the front-end developer covered their UI components with tests, and the tester designed the test model and implemented the integration tests that run in the browser (Selenium tests).

- We used simple test automation tools. We decided not to complicate our lives and chose the simplest wrapper over Selenium, which at the moment is Selenide, if your autotests are written in Java.

- We created a tool that lets non-programmers write autotests. There is already an article about this library (Akita BDD) on our blog: https://habrahabr.ru/company/alfa/blog/350238/. And because we use BDD, we were able to involve analysts in writing autotests. The library is open source: https://github.com/alfa-laboratory/akita and https://github.com/alfa-laboratory/akita-testing-template

- We taught testers a bit of programming. Training took from 2 weeks to 2 months.
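The testing pyramid in the first point is usually read as a rule about proportions: many fast tests at the base, fewer at each layer above. As an illustration, here is a minimal Java sketch of a check a team could run over its own suite, assuming each test is tagged with one of three hypothetical layers (`UNIT`, `API`, `UI`). The layer names and tagging scheme are illustrative and are not part of Akita or Selenide. Records require Java 16 or later.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

class PyramidCheck {

    /** Hypothetical layers, from the base of the pyramid (most tests) to the top (fewest). */
    enum Layer { UNIT, API, UI }

    /** A test case tagged with the layer it exercises. */
    record TestCase(String name, Layer layer) {}

    /** Counts tests per layer; layers without tests are reported as 0. */
    static Map<Layer, Integer> countByLayer(List<TestCase> tests) {
        Map<Layer, Integer> counts = new EnumMap<>(Layer.class);
        for (Layer layer : Layer.values()) {
            counts.put(layer, 0);
        }
        for (TestCase test : tests) {
            counts.merge(test.layer(), 1, Integer::sum);
        }
        return counts;
    }

    /** True when no layer has more tests than the layer beneath it. */
    static boolean isPyramid(List<TestCase> tests) {
        Map<Layer, Integer> counts = countByLayer(tests);
        Layer[] layers = Layer.values();
        for (int i = 1; i < layers.length; i++) {
            if (counts.get(layers[i]) > counts.get(layers[i - 1])) {
                return false; // top-heavy: an "ice cream cone" instead of a pyramid
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<TestCase> suite = List.of(
                new TestCase("parsesAmount", Layer.UNIT),
                new TestCase("rejectsNegativeAmount", Layer.UNIT),
                new TestCase("roundsToKopecks", Layer.UNIT),
                new TestCase("transferEndpointReturns201", Layer.API),
                new TestCase("balanceEndpointReturnsJson", Layer.API),
                new TestCase("userCanSendTransfer", Layer.UI));
        System.out.println(countByLayer(suite)); // {UNIT=3, API=2, UI=1}
        System.out.println(isPyramid(suite));    // true
    }
}
```

The strict "no layer above outweighs the one below" rule is one simple choice; a team might instead set target ratios per layer.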

Because we spread test automation across the team, the tester managed to do both manual testing and automation. Thanks to this cross-functionality, some testers leveled up so much that they sometimes even started helping the team develop microservices.

When the team itself takes part in developing autotests, it owns the test coverage: it knows how many tests have already been written and what still needs to be added to cut the time spent on manual regression. The same scenarios are not automated twice, because the developers know about them and, when writing their own e2e and unit tests, can tell the tester which scenarios don't need to be automated.
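The agreement between developers and tester boils down to a set difference: scenarios already covered by the developers' own unit or e2e tests are dropped from the list the tester plans to automate in the browser. A minimal Java sketch, assuming scenarios are identified by hypothetical string IDs the team agrees on (the article does not describe how scenarios were tracked):

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class ScenarioPlanner {

    /**
     * Returns the candidate scenarios that still need a browser-level test,
     * i.e. those not already covered by the developers' unit or e2e tests.
     * The order of the candidate list is preserved; duplicates are dropped.
     */
    static List<String> toAutomateInUi(List<String> candidates, Set<String> coveredBelow) {
        Set<String> remaining = new LinkedHashSet<>(candidates);
        remaining.removeAll(coveredBelow);
        return List.copyOf(remaining);
    }

    public static void main(String[] args) {
        // Hypothetical acceptance scenarios for one user story
        List<String> acceptance = List.of(
                "login-with-valid-password",
                "login-with-wrong-password",
                "transfer-between-own-accounts",
                "transfer-amount-validation");
        // Hypothetical scenarios the developers said they already cover in their own tests
        Set<String> coveredByDevelopers = Set.of(
                "login-with-wrong-password",
                "transfer-amount-validation");
        System.out.println(toAutomateInUi(acceptance, coveredByDevelopers));
        // [login-with-valid-password, transfer-between-own-accounts]
    }
}
```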

The problem of outdated autotests is also solved quickly. When a product develops rapidly, having a separate person responsible for automation creates a lot of pointless work for them: while they are automating a new set of test cases against prototypes, the designer and the PO may decide that the logic will be completely different, and the next day the tests have to be redone. Only when you are inside the team all the time can you keep your finger on the pulse and understand which tests are worth automating now and which can wait.

Meanwhile, the core library is maintained by the testers themselves, specifically those who got interested in writing code and now contribute to akita-bdd. As a rule, new features and steps come from teams that tried something internally and decided to share it by opening a pull request to the library. All communication happens in the bank's internal community in Slack, where people find out that a particular step is needed and then share it.

Will the quality of the tests suffer?

Some of you may be wondering: what if the team can't manage to build a new test framework? Or the autotests turn out to be of poor quality? After all, the team lacks the unique expertise of an autotest developer. My view is that autotesters are not unicorns, and the ideal candidate to write such a framework is a member of the team that needs the automation.

Let me tell you a story. When I joined Alfa-Bank, it already had its own test automation framework, developed over 2-3 years by the dedicated autotest team I described above. It was a monstrous Frankenstein that even an experienced developer found hard to learn. Naturally, every attempt to teach testers to automate tests with it failed, as did every attempt to bring the tool into the teams.

So we decided to pilot the development of a new tool, but one built by a product team rather than by a person or team isolated from production. After one project we had a prototype, which we then brought into a couple of new teams. By the time their products shipped, the prototype had grown considerably, with many additions the teams had built themselves to solve problems in a similar context. We analyzed what they had done, picked the best parts, and turned them into the library.

In other words, it was the same prototype, just polished a little by a Java developer from one of those teams. He tidied up the architecture and improved the quality of the library's code so that it wouldn't turn into a dumping ground.

Today the library is used by more than 20 teams, and it evolves on its own: testers from the teams constantly contribute to it and add new steps when needed.

As a rule, all innovations happened within a team, and the team's diverse mix of experience led to better understanding and better solutions.

You might ask: why abandon autotest developers, when you've just described teamwork on autotests? The point is that having both an autotest developer and developers writing tests within one team is a case of too many cooks in the kitchen: team members start stepping on each other's toes (or each other's code). As a result, application developers stop writing autotest code, which means they lose the context of the problems that come up when writing autotests, and of how they could solve or prevent those problems when writing their own part of the code.

Another reason to have teams write autotests without a dedicated person: people who work together in a team have a better picture of the entire stack and context of the application. That means they will build it knowing that they will later have to write autotests for it as well.

Here is a concrete example. When our front-end developer started writing autotests and felt the pain of writing XPath queries for the various UI components on a page, he suggested adding a unique CSS class name to each element at layout time so that it could be found easily. This let us stabilize the tests and write them faster, since finding elements became simpler. The front-end developer just got one new rule in his work, which did not complicate his workflow one iota.
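One way to make such a rule mechanical is a shared naming helper that both the layout code and the tests use. A minimal Java sketch, assuming a hypothetical `qa-` prefix and `component__element` scheme (the article does not say which class names the team actually chose):

```java
import java.util.Locale;

class TestLocators {

    /** Hypothetical prefix marking a CSS class as a test hook rather than a styling class. */
    static final String PREFIX = "qa-";

    /**
     * Builds the class name the front-end developer adds at layout time,
     * e.g. ("Login Form", "Submit") -> "qa-login-form__submit".
     */
    static String className(String component, String element) {
        return PREFIX + slug(component) + "__" + slug(element);
    }

    /** The CSS selector a test uses to find that element. */
    static String selector(String component, String element) {
        return "." + className(component, element);
    }

    /** Lower-cases the name, turns every run of other characters into '-', trims edge hyphens. */
    private static String slug(String name) {
        return name.toLowerCase(Locale.ROOT)
                .replaceAll("[^a-z0-9]+", "-")
                .replaceAll("^-|-$", "");
    }

    public static void main(String[] args) {
        // Before: a brittle XPath tied to page structure, e.g. //div[3]/form/div[2]/button[1]
        // After: a selector that depends only on the class added at layout time
        System.out.println(selector("Login Form", "Submit")); // .qa-login-form__submit
    }
}
```

In a Selenide test the resulting string would be passed to `$(...)`, which accepts a CSS selector, for example `$(TestLocators.selector("Login Form", "Submit")).click();`. Because the class is unrelated to styling and DOM position, redesigns that move the button do not break the test.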

And when everything is brought into a cross-functional team, all these dependencies live inside it and need no external coordination. Much less control is needed.

Findings

- Having a dedicated autotester team is not agile.

- Having dedicated autotest developers is expensive for a product / team.

- Finding and hiring such people is expensive and slow, and that holds back product development.

- Autotest development lags far behind product development. As a result, we don't get the full value of test automation.

I'd like to add that my story is not a silver bullet. But many of our approaches may well work for you if you keep the following in mind:

- A tester is an engineer too. Until the team starts treating testers as engineers, they will have no motivation to grow or learn to program.

- The team is responsible for quality. Not the tester alone, but the whole team, including the customer (PO).

- Autotests are part of the product. As long as you treat autotests as a separate product serving another product, sprints will be closed without them, and autotests will become technical debt that usually never gets paid off. It is important to understand that autotests are what guarantee the quality of your product.

And finally, the team itself should want to write autotests and decide for itself who does what, without coercion from above.

P.S. If you are interested in my experience, visit my blog (http://travieso.me), where I publish all my talks, articles, lectures and notes.

Source: https://habr.com/ru/post/352312/
