C ++ cycling for professionals

Classes that people write on their own, and then copy from one project to another, although they already exist in standard libraries, are called bicycles in the common people. The first question that arises when meeting with such a "bicycle" - why do people rewrite something again? There may be several options.

Today we will not say that bicycles are bad , this is not necessarily the case. We'll talk about what's really bad:

And also we will touch upon “harmful” tips , discuss the newest programming practices (C ++ 11 and later), think about what to do with the “perfect” bike.

')

About the speaker: Anton Polukhin ( @antoshkka ) Yandex employee, active Boost library developer, by TypeIndex, DLL, Stacktrace, Maintainer Boost Any, Conversion, Lexicalast, Variant, co-chair of the National Standardization Group C ++. All this together means that Anton sees a lot of code every day , both open-source and commercial, and knows very well how often developers invent bicycles.

So, the theme is bicycles. What class will we start from? Correctly - from the line.

Imagine that in the courtyard of the deep Middle Ages, people in armor jump on horses. You are a developer of a standard library, and the only standard that is is C ++ 98. You need to write your own line.

You will start writing something very simple without any templates. You will have a pointer to a dynamically allocated piece of memory (data), a variable size that stores the number of characters in an allocated piece of memory, and a variable capacity that sets the size of this dynamic piece of memory.

For your string class, you write a copy constructor and a constructor from an array of characters. Both of them will dynamically allocate a piece of memory, move the characters there. Here, it says new + memmove, but in fact there may be slight variations like malloc, memcpy, the meaning remains the same.

Since you are a good developer of a standard library, you are wondering how well such a string class will work. You open custom code, look at popular recipes, and find something like the example below, where the map is created from the string and int, and a couple is inserted there.

And then you realize that things are bad - slowly , very slowly.

You have a friend Vasya, who is also developing a standard library and his line class works faster. Where exactly the performance is lost :

It turns out a very sad picture - 3 new calls, 3 memmove calls, a couple delete calls, and all this is terribly slow. It annoys you that Vasya is faster, and you start thinking about how to do better.

Here you either invent yourself or read about Copy-On-Write (COW) technology somewhere. It allows you to work more effectively with temporary objects . Most of the losses were due to the fact that temporary objects are being created that are not modified in any way: they were simply created, some of them were copied and immediately destroyed.

By using Copy-On-Write technology, instead of the line uniquely owning the dynamic object that was created on the heap, you can have several lines simultaneously referring to a dynamic object . Then in this dynamic object there will be a reference count - how many lines simultaneously work with it. The code will look like this:

You write class string_impl (you can write it better) and add use_count , i.e. reference count — how many lines refer to a dynamic object at the same time. You also change the copy constructor; now it will take a pointer to a dynamic object from the input string and increase the reference count.

Little will have to tinker with non-constant functions that potentially change the line. If two lines own a dynamic object, and one of them starts changing it, the other will see the changes. This is a side effect that users will be dissatisfied with, because they first worked with a single line, and then it suddenly changed.

We need to add a check : if we uniquely own a dynamic object, that is, the reference count is 1, then we can do whatever we want with it. If the reference count is not 1, we must call new and memmove, create another dynamic object and copy the data from the old object.

Destructor also change. It will reduce the reference count and look: if we are the last owner of a dynamic object, then it needs to be removed.

There is nothing better in the constructor from the array of characters, still new and memmove. But then the constructor will be called to copy the string when creating a pair, which now does not call either new or memmove. When the pair is inserted into the map, the string copy constructor will again be invoked, which again does not invoke new and memmove.

All temporary object destructors will not cause delete either - beauty!

Total, COW more than 2 times speeds up code in C ++ 98 .

If someone tells me now that he knows a technology that increases the performance of my code by 2-3 times, I will use it everywhere.

In the 1990s, many libraries did this. For example, there is a QT library, where COW is present in almost all base classes .

But technology does not stand still. The 2000s are coming, there are processors with Hyper-threading and multi-core processors, not only server-side, but also user ones:

Now each user can have more than 1 core. And our line is already bad, because we have a common reference count.

For example, there are two streams, in which the string refers to a common dynamic object. If threads work with strings and at the same time decide to remove them, it turns out that we from two threads will try to simultaneously change the dynamic reference count use_count. This will cause either a memory leak or an application to crash .

To fix this, we introduce an atomic variable - let's pretend that C ++ 98 has std :: atomic. Now our class looks like this:

Everything works - great! Nearly…

Now we have both the copy constructor and the destructor have atomic instructions in themselves , which is not very good. Non-constant methods turn into a terrible disgrace, because boundary conditions appear that rarely arise, but which we are obliged to handle.

For example, two threads own lines. These lines refer to the same dynamic object. One thread decides to change the string. The line looks at that it non-uniquely owns the dynamic object and starts to clone the object. At this point, the second thread says that it no longer needs the line and calls its destructor.

And the first thread still clones, calls new, memmove. He finished his business and suddenly realizes that he has two lines, but he needs one.

It is necessary to provide logic for this as well, and these are additional checks, perhaps an additional call to new and memmove, and again many atomic operations, which is bad.

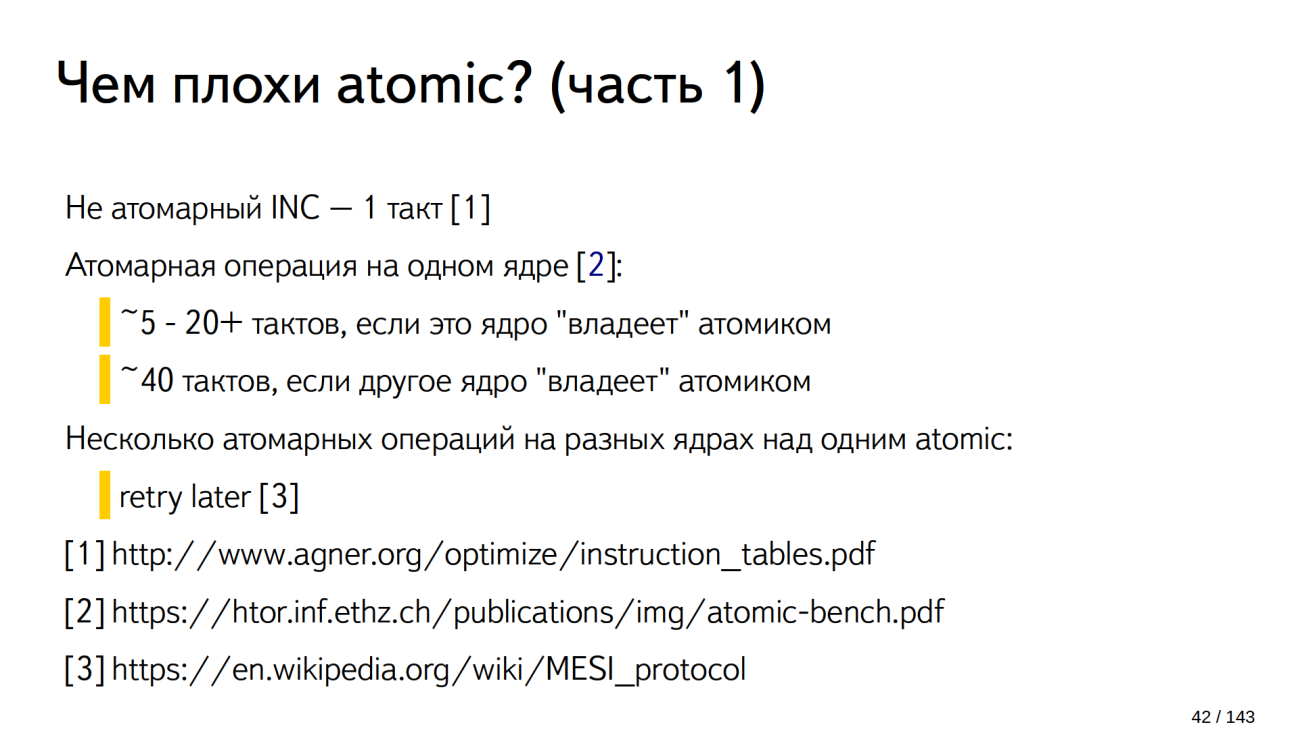

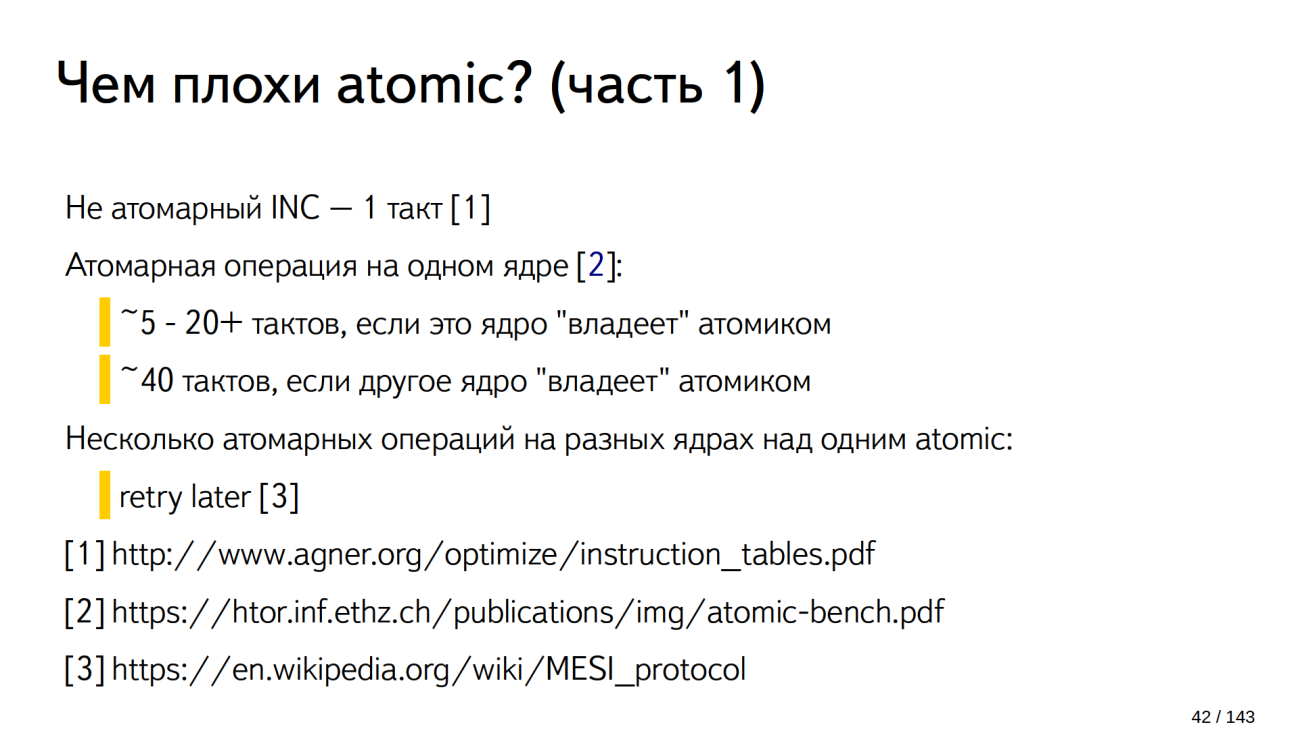

X86 is not an atomic increment - a simple increase in the integer by one takes one processor cycle [ 1 ]. If this operation is made atomic, it will take from 5 to 20 cycles if only one core makes an atomic instruction and if this core changes the atomic variable [ 2 ]. If the nucleus is not the last to change the atomic variable, then around 40 cycles.

So, this solution is 5-40 times slower only due to the fact that an atomic variable is used. Further worse.

If several cores simultaneously work with an atomic variable, for example, three cores simultaneously want to increment an atomic variable, we will have a lucky kernel that will start to make an atomic increment, the other two cores will wait from 5 to 40 cycles until the core finishes all its actions.

Then a second lucky core appears, which begins to perform an atomic operation, since it was not the latter that changed the atomic variable, it would take 40 cycles. The third core is still waiting. The more nuclei at the same time they try to perform an atomic operation on the same variable, the longer the last nucleus will wait.

This is how the last kernel waits:

In this example, 16 cores and the last core waits around 600 cycles . This is the easiest kernel unluckiest. If we sum up the downtime, then the idle picture will turn out to be quadratic.

At 16 cores, about 6000 clock cycles went nowhere .

There are systems containing more than 90 kernels, and if all of them 90 kernels decide to work simultaneously with the same atomic variable, everything will be very sad.

COWmore than 2 times speeds up code in C ++ 98. Anyway, Copy-On-Write is faster and that's it.

In C ++ 11, rvalue reference appears, which allows you to write special classes and special methods that work with temporary objects.

For example, a move constructor for a string works equally well if the string is COW and if the string is the most common baseline from the Middle Ages, where the object is dynamically allocated, and only one line owns it.

What's going on here? At the entrance comes the object s . It is temporary, we know that the compiler will delete it soon, that no one else will use it, and we can take resources from it . With it, you can do anything at all, the main thing is that for him you can then call the destructor.

Took away resources. We are an empty string, we received a string with the result, we make a swap . Now we are a string with the result, and the time string with no resources is empty.

Let's see how it works in our example, if we have a string without COW.

A string is created from the “Hello” character array. Here new and memmove are the same as with COW.

The next is better. When copying a string into a pair, we will call the move-constructor of the string (

When inserting a pair into a map, the map sees that the pair is also a temporary object (

Without new and memmove, the line will move inside the map: 1 call to new and 1 call to memmove - the same as with COW, but without atomic operations .

Well, what if we want to copy a line? We are all reasonable people, and we want to copy a line, as a rule, if we want to change it.

Without COW we copied the line, immediately called new and memmove. We can change the line and do anything.

If we have a COW line:

The result is the same as without COW, but at least a pair of unnecessary atomic operations appears.

Non-constant methods are still terrible. If we want to go through our line (the first ten characters in a line) using indices, the COW line is 10 atomic operations on the same atomic variable. If two streams simultaneously pass through the same line - a big trouble.

As a result, the COW constructor has atomic operators, the destructor will most likely call atomic instructions — there are many atomic instructions that we don’t need. We did not ask them, and this is against the rules of C ++. We pay for what we do not use.

Beginning with C ++ 11 COW, lines are not allowed in the standard. There are special conditions imposed on the line that COW cannot be implemented. All modern implementations of standard libraries do not have COW lines.

COW is outdated, more careful with its use.

Careful use COW in new projects. Do not trust articles that are more than 10 years old, recheck them. COW does not show such good results as 20 years ago.

If you had a COW line and said to all its users: “We have a COW line - it copies everything perfectly, everything is fine, we have COW everywhere”, users could sharpen it and write code taking into account that your classes use COW.

For example, a user could write a class bimap — this is a class that can search both by key and by value.

He could write it through two std :: map, each of which has a line. Here is the COW line, the user knows about this, as well as the fact that there are two copies of the line in the map. The user knows that they will dynamically refer to the same memory object, and that this is effective from memory. If here the COW line is changed to the usual one, the cost of memory will double.

In C ++ 17, this can be corrected, for example, as in the example above, replacing one of the lines with string_view. From memory it will turn out exactly the same, but atomic operations will be removed and the code will be slightly faster.

It turns out that we added a move constructor to that ancient line of the year 98, which uniquely owned a dynamic object, and that’s the perfect line? Nothing better can be done?

That's what people came up with. They looked at one of our first examples where a map is created from a string and an int, and a couple is inserted into it, shook their heads for a long time and said how bad things are:

People have come up with how you can, without changing the size of the string — the variable std :: string — to store characters in it without dynamically allocating memory . They looked at the very first class of the line:

Now inside the string in the example above is a union. The first part is a pointer to data and the size_t variable. The second part of the union, the structure, contains data (a small buffer of the size of char * and size_t). Approximately 16 bytes fit into it.

If capacity = 0 - this means that we have not dynamically allocated memory and we need to go to impl small_buffer_t, and our data will be in data without our dynamic allocation.

If capacity ≠ 0, it means that we have dynamically allocated memory somewhere, and we need to go to impl no_small_buffer_t. There is a pointer to a dynamically allocated piece of memory and the variable size.

Of course, in the example a slightly simplified version. Some standard libraries, such as Boost, can store 22 characters and, in addition, terminate 0 without dynamic allocations without increasing the size of the string. All modern standard libraries use this trick. This is called Small String Optimization .

This thing is good, firstly, on empty lines. They are now guaranteed not to allocate memory, and guaranteed to call the c_str () method will no longer allocate memory.

I know companies that have rewritten std :: string, because their empty string dynamically allocates memory. Do not do this anymore.

Secondly, for example, our “Hello!” Will fit without dynamic allocation. If the usernames are stored in the lines, their names and surnames are separate from the names, some identifiers, cities, countries, names of currencies - as a rule, everything will fit into the line without dynamic allocations.

But people looked and again thought that slowly. Starting with C ++ 17, the compiler is able to optimize memmove , its alternative is the one that is in the line at the compilation stage. But this was not enough. In C ++ 17 there is Guaranteed copy elision , which guarantees that in some cases there will be no copies at all and there will be no memmove. Just in a map, by some miracle, this line is formed without intermediate variables.

Using the example of std :: variant, consider what happens if people try to cycle-build without looking at other people's decisions.

In C ++ 17, the std :: variant class is adopted - this is a smart union that remembers what it stores. We can write std :: variant <std :: string, std :: vector <int >>. This means that the variant can store in itself either a string or a vector. If we write the word “Hello” in such a variant, the variant remembers the index of the template parameter in the variable t_index (which contains a line), locates the line in place and will store it.

If we now try to write a vector there, std :: variant will see that it stores the line at the moment, not the vector, will call delete for the line, construct a vector in place of the line and update t_index at some point.

The class was adopted in C ++ 17, and people all over the world immediately rushed to write it again, rewrite, and cycle building .

Above - the real code from the open source project, do you see an error in it? Here, instead of union, was a struct.

Below is the code from the commercial project:

Here the error is much worse and in many implementations of the variant it is present. There will be big problems with exceptions.

For example, we have a variant that stores std :: string and we are trying to write a vector into it. The destroy line will invoke delete for the string. From this point on, the variant is empty, it does not store anything.

Then in place of the line we are trying to create a vector. The vector is transmitted by a constant link and we have to copy it. Call placement newand trying to copy the vector. The vector is a large class, it can store user data, which when copying can throw exceptions. Suppose we threw an exception. It will exit the current block, causing the destructors of all local variables, and will exit the block above. There, an exception will also cause the destructors of all local variables. It will reach the block where the variant was declared and cause the variant destructor.

The destructor of the variant looks at what is written to it in the variable index, which has not yet been updated. It contains std :: string, i.e. variant thinks that it has a string to be deleted. But in fact, there is nothing at this place, the line has already been deleted. At this point , your entire program will crash .

To avoid this, it is enough to open the interface of the standard library and see that std :: variant has the valueless_by_exception function , wonder what it is, and see why it is needed. You can open the source Boost - there 2/3 of the code is devoted to this problem.

I say this all so that you and all your subordinates watch how other people have bikes written, in order to avoid such sad consequences. This is a very popular mistake. She slips everywhere.

I give an example of a variant only, but in many other classes there is a similar one. People rewrite, think that something is superfluous, do not look into someone else's code, and this leads to disastrous consequences.

It's time to jump on sick corns.

You can find projects where all methods are labeled as FORCE_INLINE - literally the entire project up and down in gigabytes of code. You ask people:

And from this point on, it is very difficult to argue with people, because they have a benchmark, and you cannot write such a benchmark.

Modern processors are very complex devices. Nobody knows until the end how they work, even the developers of processors.

A modern processor cannot run your program directly from disk or straight from RAM. Before he starts executing the program, he must pull it up in the instruction cache. Only from there the processor can execute instructions.

Some processors have a cunning instruction cache, which makes additional processing of commands that fall into it. But the instruction cache is small, there is not enough code in it. If you use a compiler that FORCE_INLINE really does, when you ask him to do FORCE_INLINE, your binary code increases.

Imagine that in the picture above your program. Green indicates what fit into the cache. This is additionally your hot path - the place where your program spends most of its time. Now it all fits into the processor’s cache, there will be no idle time in the instruction cache.

If you add FORCE_INLINE, on benchmarks everything will be fine, but the code will no longer fit into the instruction cache.

When you get to the red line, the processor will say: "I do not have new instructions, I have to wait for them to catch up with the memory." If it is somewhere in the loop, it will constantly flip through some instructions, pull them from memory to cache. It is slow.

In order for your benchmark to correctly reflect the real state of affairs, it must take into account all 3 caches - data, instructions and addresses.. To do this, the benchmark should:

In other words, it is impossible to write such a benchmark . You can test only your production code.

If you have added FORCE_INLINE and your production code has not become better - it is better to remove FORCE_INLINE. Modern compilers can inline. 10 years ago, they did not know how to do this well, and FORCE_INLINE really did help in some cases, for example, with GCC. Not now.

I say that FORCE_INLINE is bad, it is worth giving it up in most cases, although it can sometimes be useful.

However, compiler developers are smart people. They see that users write FORCE_INLINE everywhere and programs get slower. Therefore, modern compilers have different heuristics to ignore FORCE_INLINE. Some compilers simply count the lines inside the function - if your function is longer than three lines of code, FORCE_INLINE will be ignored.

There is a legend that there is a compiler, where people are very concerned about FORCE_INLINE, he got them so hard that they came up with the following heuristics:

If you suddenly wrote a program, added a dozen FORCE_INLINE there and everything became remarkably faster, it is possible that you use this compiler. See the assembler code if you really need something to be embedded so that FORCE_INLINE really is in this place. I myself was surprised that in some cases compilers silently ignore FORCE_INLINE.

Conversely, the compiler can silently ignore your "not inline". He will think: “You don’t know what you’re doing, wrote not inline, but I know better - I’m in line”.

I know a very smart programmer who wrote the implementation of B-Tree. Everything was done in C ++, on templates, perfect forwarding everywhere , without any dynamic allocation - just perfect. When this code was checked on one of the Performance Analyze, it turned out that execution was not fast enough because it took most of the time to wait for instructions in the instruction cache.

The programmer thought, slightly simplified B-tree, removed perfect forwarding, replaced it everywhere with constant links, because B-tree did not change the data that came to him, removed a couple of templates, made the code a little more compact, added noexcept, which also reduces the volume binary code.

As a result, the code began to work 2 times faster.. The algorithm was not changed - everything is the same, but the code began to work faster.

If you do not believe me that FORCE_INLINE is bad, then I recommend watching the lectures ( 1 , 2 ) of the Clang developer Chandler Karrut . He says the same thing as me, but in short, faster and in English.

From “harmful” benchmarks, we turn to “harmful” advice.

Rarely, when development goes under one platform. More often it happens that once upon a time they developed for one platform, and now for several.

Usually, if a company starts as a small startup, it can choose one platform for itself, for which it already has customers. Gradually, the company grows, the code becomes more popular, and the thought comes to port it to other platforms .

But at the moment when people start porting to GCC, the program stops working: everything compiles, but tests do not pass, the program gives some inadequate output. People climb Stack Overflow or other programming resources ask this question and get an answer:

And really - everything starts to work.

Let me explain with an example what this option does (I did not bother with the function name here).

The qwe function takes two variables: foo by reference, bar by constant reference. These are two different types of data. Inside the body of the function, the value b is added to f and the square from b is displayed.

Below is a slightly assembler pseudocode that compiles the compiler if you slip the -fno-strict-aliasing option.

Compiler:

Here, most people wonder why we loaded b a second time into the register, which is already in the register.

Because they added the option -fno-strict-aliasing. It tells the compiler that two types of data, absolutely different, not intersecting with each other, can still be in the same memory cell . Based on this, the compiler starts generating code.

When you do f + = b, the compiler thinks that perhaps f and b are in the same memory location, and writing to f changes the value of b. And this is in the whole of your project - an option was added, in the whole project the code began to be generated worse .

According to different measurements (for example, this and this), depending on the code and optimization, it gives from 5% to 30% of performance drawdown . It all depends on your code. If you just have system calls and networking, perhaps nothing will change for you with -fno-strict-aliasing. But just writing it when building your project is not entirely correct.

It is more correct to enable the compiler warning (GCC can warn when strict-aliasing is violated) and fix the strict-aliasing violation at this place. Typically, this violation occurs when you use reinterpret_cast for two types that are not related to each other.

Instead of reinterpret_cast it is better to use memcopy or memmove. GCC compilers can optimize them. The performance drawdown in the place where you make memcpy instead of reinterpret_cast should not be.

From fierce theory, we turn to tips that can be used in everyday practice.

Most likely, you know all this, and, most likely, do not use it.

Below is an example of the function foo, in which a vector is created from shared_ptr. We know shared_ptr is exactly a thousand. They are inserted into the vector, and it returns.

The first drawback: if we know how many elements our vector will hold, it is worth calling res. reserve (1000) . This will preallocate the required amount of memory , and the vector, when inserted into it, will not try to guess how much memory is needed. Without reserve in C ++ 11, the vector will first take a value under 2 elements, then under 4 elements, 8, 16, etc. All this time, when resizing, it will call new, delete, copy or move your data type, which is slow.

If you call res. reserve (1000), then the vector knows for sure that it is 1000 elements, there will be no additional move, new, delete .

Starting with C ++ 14 compilers are allowed to optimize calls to new. Some compilers can do this, but they should be lucky - not in all cases it works. If you want to be sure that the compiler will generate the optimal code, it is better to insert a reserve - it will not be worse.

The compiler will not be able to optimize another problematic place in this example. This is a call to std :: shared_ptr <int>. In this line there are two dynamic allocations:

And in memory they are in different places. If your application does not use weak pointers, or does not use them much, instead of std :: shared_ptr <int> (new int), it makes sense to write std :: make_shared <int> () .

Make_shared immediately allocates a chunk of memory where it can store both an int and a reference count. Due to the fact that these two variables will be next, it is more cash-friendly. Working with shared_ptr will be a little faster, there will be not two, but one dynamic allocation.

So, we accelerated the code in the first example a little more than twice, simply by adding reserve and replacing the constructor shared_ptr with make_shared .

The code in the example above is terrible. Let's improve it gradually, and, most likely, not from the place that you noticed.

V is a copy of an element from a vector. Outside the loop, and after iterations of the loop, we don’t need it. We can tell the compiler that the variable v is no longer in use, replacing v with std :: move (v) , turning it into an rvalue, and then inserting it into the resulting res container will occur via the rvalue reference. The resources from v will simply be thrown inside the container, which is faster.

The vector data array is no longer used; everything that it contains will be destroyed when the function exits. Instead of copying v , we can write for (auto &&v: data), i.e. make a link to v (&& - here it is also a link).

The result is the following: v - the link to the element inside the data. When we apply std :: move to this link, the resource will be taken from the data vector - the string will be dragged directly from the vector directly from the vector. There will be no dynamic allocations in the end. Compared to the original code, it became 100,500 times faster .

The situation, as in the example above, occurs more often - the same, but from the vector we memorize the last element, and then we retrieve this last element.

You can also optimize: we say std :: string res = std :: move ( data. Back () ) . After that in res is the value of the last element (we dragged the resources from the vector into the variable res). Now back inside the vector is an empty string, data.pop_back () will simply change one of the internal variables of the vector. No dynamic allocations, no delete calls - all faster.

Parsers and protocols often have arrays of previously known strings. People often write them like this:

It seems that this is only a little not optimal, but, in principle, not so scary. This will happen once when you start your program or when loading a DLL.

But when a project is very large, a large number of such pieces of code can accumulate in different parts of it - 10, 100, 1000. The initialization of the CONSTANTS variable occurs when the program is started or when the dynamic library is loaded. At this moment, this single-threaded code will call new, allocate, initialize the vector of lines, and this can slow down the start of your program or load the dynamic library.

For those who write realtime applications and applications that need to restart quickly, if they have fallen, this can be critical. If you have thousands and millions of such objects, it will slow the start of the program.

If you need an array of constants, and you refer to it only by index or use it only in range-based for, you can replace the vector with a regular array. That is, instead of std :: vector <std :: string> CONSTANTS, you can write std :: string_view CONSTANTS [] .

As a result, the start of your application will not do additional operations , call new, additionally fragment the memory.

If you need a strong guarantee that this will be fulfilled even before your program starts, you can

Above the base base class, in it the act method is nothing interesting. It is interesting when we inherit from this base. Imagine that you are a developer in a company and you were told to figure out someone else’s code, and you see only what goes below.

You only see this, you have not looked into the base description yet and you are wondering what the act method is, whether it is declared in the base class, whether we are overloading it, and in general what is happening here.

You can make the code more readable by simply adding the word override :

It will be not only more readable, but also more secure . When a new person reads your code, he sees override and thinks: “Aha, so this method is declared in the base class, we are overloading it, everything is in order”.

A new person will come and refactor the base class, change the signature of the act method there, add an integer there and at the compilation stage catch the error that in the heir class we are trying to rotate a class that is not already declared in the base class, which is not present.

As a result, it will not break your code . Without override, everything will compile, it will somehow work, but there will be an error at runtime. It can take a lot of time to catch it. Override will break on compiletime. The person who will do refactoring will notice and correct the act method here.

You can also add final to the first line:

The compiler sees that the class is final. When he sees some_implementation and a variable of this class, and act is called on this variable, the compiler understands that nobody else inherits from this class, and there is an act in it. It will call act without passing through virtual tables , there will be no different dynamic things — it will call act, as if it were not virtual.

You can still slightly improve by stuffing everything into an anonymous namespace:

An anonymous namespace is sort of static on steroids. You can declare a variable or function as static, then it will not be visible outside. Anything you declare inside the anonymous namespace will only be visible in this translation unit.

This is good because it unleashes the optimizer . It can do more optimizations based on the fact that no one else uses this class from the outside. The optimizer might also think that since no one uses this class from the outside, it does not need to be exported, which will increase the speed of loading and linking the application at runtime .

For example, in OpenOffice there was a problem that the stage of dynamic loading of libraries and the linker, which connected all the dependencies to each other, took 10 seconds, because a lot of characters stuck out. If you use an anonymous namespace, this problem will become smaller.

Below are the move constructors for the class my_data_struct. It only has the data vector.

Only move forgot. And noexcept . Let's rewrite:

A number of standard containers, such as vector, do complex compile-time calculations based on whether the move constructors are marked as noexcept or not. If your move constructors are not marked as noexcept, the vector can additionally allocate memory when it expands and copy the elements instead of moving them. This is a disgrace, because it is very slow.

To make it faster, add noexcept.

This post does not like most people. Here it is not clear what is written and you need to know:

A bunch of some parts that we do not need.

All you need to do is write here and all this will become the concern of the compiler:

Now the compiler will arrange itself noexcept, constexpr , if it can. The code from the standard library will work faster - it's easier for you.

I want to talk about one thing that I recently came across, and which surprised me.

People cached division of units by number . They created an extern array of 256 elements, in which they remembered:

Further work with this array wherever part of 1 is needed.

Do you think it will be faster or slower? Let's find out.

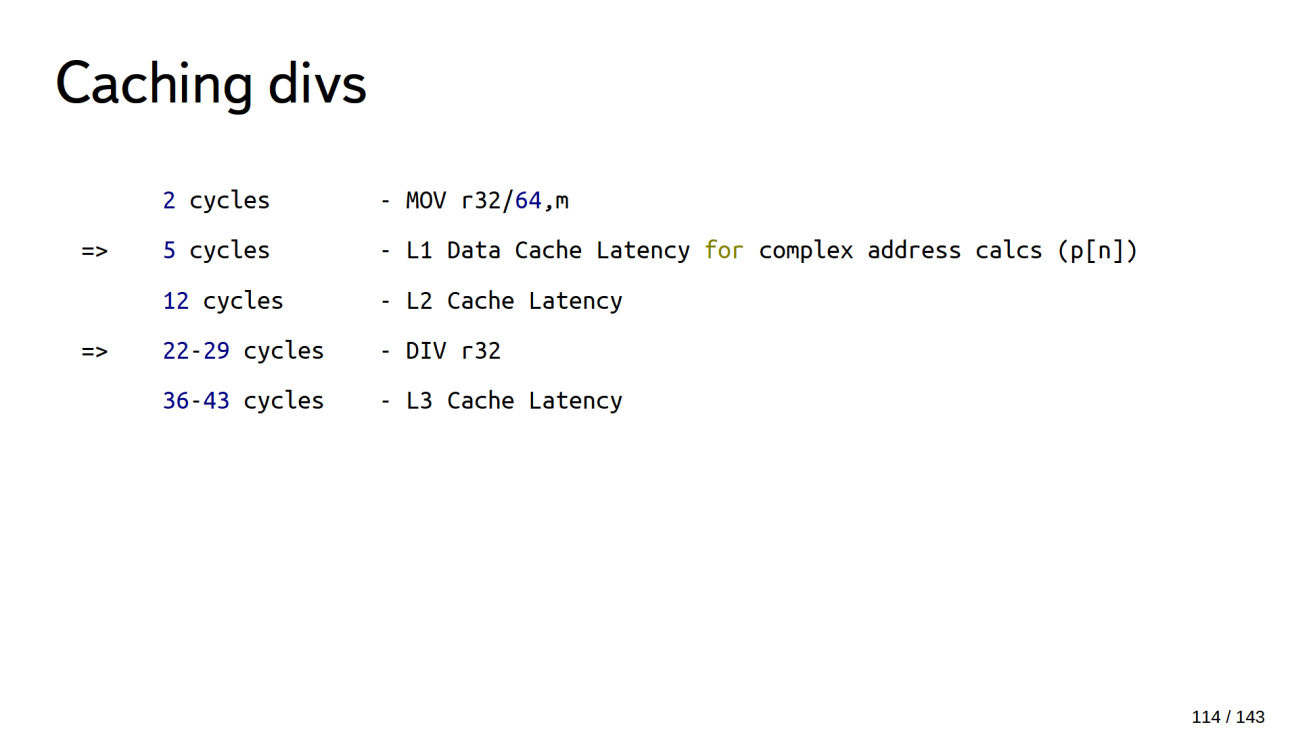

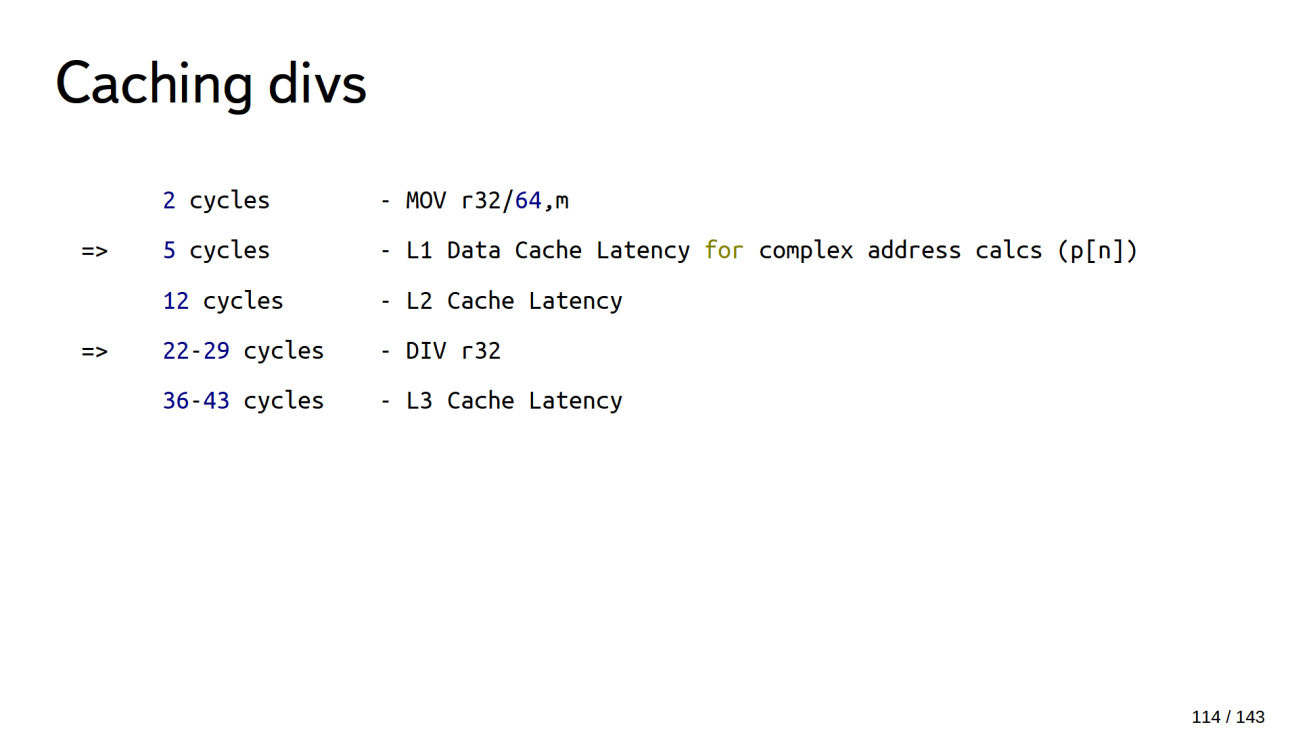

We climb into the Talmuds, where it is described how many clock cycles the instructions take, and we find that access to the element of the array, which is located in the L1 cache, takes 5 cycles, and the division is 22-29 cycles. In total, such caching can be 2-4 times faster, but ...

1. The optimizer does not see the value.

This is a serious negative impact on performance.

At the same time, the compiler will not even believe you if you write const everywhere, because it does not optimize based on const. If you wrote const somewhere, the compiler will ignore it, because somewhere else you could write const_cast.

2. We spent a lot of time discussing this ...

The fact that we spent a lot of time discussing it is also bad in this example. When you see it in someone else's code, you will also spend a lot of time to figure out what a mess this is.

3. We spent 1Kb L1 cache of 16 / 32Kb

People who develop compilers, write books on this topic and protect complex theories, say that in the modern world, system performance is not limited by processor speed, but often by working with memory:

We spent 1Kb cache of the 1st level to save the array, and other useful data will not fit there now. Ulrich Drepper,

developer of the standard C library for Linux, wrote “What Every Programmer Should Know About Memory”, that is, about what every programmer should know about memory. It contains serious theory and physics, but there is a wonderful simple example of the importance of cache. On a piece of code with matrix multiplication, practically without changing the algorithm and assembler instructions, Ulrich Drepper accelerated productivity 10 times simply due to more efficient work with the data cache .

Whether it becomes faster with such caching of units or not is not clear:

But people wrote a lot of code, and we spent a lot of time analyzing it. When you meet him in someone else's production code, you also spend a lot of time analyzing, your productivity will fall. The employer will be unhappy.

We have already talked about the "harmful", let's move on to the good.

How to write your perfect bike, your perfect program, I will not tell you. There are a lot of books for this, some of them are correct, some are not. But, if you wrote a program, a library that:

Welcome to the working group WG21 , we:

From the first time, to make a proposal is not easy, because there are special terms that must beware, and certain formalities that must be observed. If you just write a proposal and send it to the Committee, most likely nothing will happen next unless you (or a trusted person) arrive at the Committee and do not defend your idea before an international meeting. Many offers come in, and first of all, priority is given to those who are personally represented and protected.

Visit the website of the working group https://stdcpp.ru/ , where you can offer your idea, see what others offer, and we will discuss the most interesting proposals together.

You can learn about Anton’s other reports, see his presentations, publications, and lots of other useful information here - http://apolukhin.imtqy.com/

- Some do it for self-study : they take the class of the standard library, write it themselves from scratch, compare what happened with what is in the standard library - they learn something new for themselves in the process.

- Some projects have special code requirements . In embedded-development it is customary to work without RTTI and without exception, therefore parts of the standard library that use RTTI and exception must be rewritten without them.

- Rarely, but it happens when they write a bicycle, because they can write better than in a standard library. As a rule, such innovations sooner or later fall into the standard library.

- It only seems to others that they can write better , and there are more such people. But in the process they learn, find out for themselves something new and discover something interesting.

- There may be other reasons .

Today we will not say that bicycles are bad , this is not necessarily the case. We'll talk about what's really bad:

- mindlessly transfer outdated technologies of 20-30 years old into modern projects;

- use "harmful" benchmarks and optimizations.

And also we will touch upon “harmful” tips , discuss the newest programming practices (C ++ 11 and later), think about what to do with the “perfect” bike.

')

About the speaker: Anton Polukhin ( @antoshkka ) Yandex employee, active Boost library developer, by TypeIndex, DLL, Stacktrace, Maintainer Boost Any, Conversion, Lexicalast, Variant, co-chair of the National Standardization Group C ++. All this together means that Anton sees a lot of code every day , both open-source and commercial, and knows very well how often developers invent bicycles.

So, the theme is bicycles. What class will we start from? Correctly - from the line.

std :: string

string: C ++ 98

Imagine that in the courtyard of the deep Middle Ages, people in armor jump on horses. You are a developer of a standard library, and the only standard that is is C ++ 98. You need to write your own line.

class string { char* data; size_t size; size_t capacity; // ... }; You will start writing something very simple without any templates. You will have a pointer to a dynamically allocated piece of memory (data), a variable size that stores the number of characters in an allocated piece of memory, and a variable capacity that sets the size of this dynamic piece of memory.

class string { char* data; // ... public: string(const string& s) :data(s. clone()) // new + memmove {} // ... string(const char* s); // new + memmove }; For your string class, you write a copy constructor and a constructor from an array of characters. Both of them will dynamically allocate a piece of memory, move the characters there. Here, it says new + memmove, but in fact there may be slight variations like malloc, memcpy, the meaning remains the same.

Since you are a good developer of a standard library, you are wondering how well such a string class will work. You open custom code, look at popular recipes, and find something like the example below, where the map is created from the string and int, and a couple is inserted there.

std::map<string, int> m; m.insert( std::pair<string, int>("Hello!", 1) ); And then you realize that things are bad - slowly , very slowly.

You have a friend Vasya, who is also developing a standard library and his line class works faster. Where exactly the performance is lost :

- First, the string constructor from the character array is called — this is a call to new and memmove (

string("Hello!")). - Then the constructor of the pair is called, i.e. the copy-constructor of the string will be called. This is another call to new and another call to memmove (

make_pair(string("Hello!"), 1)). - Then this pair is inserted into the map. The pair and its contents are copied - another call to new and memmove (

m.insert(make_pair(string("Hello!"), 1))). - After that, the temporary object of the pair and the temporary object of the line will be deleted.

It turns out a very sad picture - 3 new calls, 3 memmove calls, a couple delete calls, and all this is terribly slow. It annoys you that Vasya is faster, and you start thinking about how to do better.

string: COW

Here you either invent yourself or read about Copy-On-Write (COW) technology somewhere. It allows you to work more effectively with temporary objects . Most of the losses were due to the fact that temporary objects are being created that are not modified in any way: they were simply created, some of them were copied and immediately destroyed.

std::map<string, int> m; m.insert( std::pair<string, int>("Hello!", 1) // ); // By using Copy-On-Write technology, instead of the line uniquely owning the dynamic object that was created on the heap, you can have several lines simultaneously referring to a dynamic object . Then in this dynamic object there will be a reference count - how many lines simultaneously work with it. The code will look like this:

class string_impl { char* data; size_t size; size_t capacity; size_t use_count; // <=== // ... }; You write class string_impl (you can write it better) and add use_count , i.e. reference count — how many lines refer to a dynamic object at the same time. You also change the copy constructor; now it will take a pointer to a dynamic object from the input string and increase the reference count.

class string { string_impl*impl; public: string(const string& s) :impl(s.impl) { ++impl->use_count; } }; Little will have to tinker with non-constant functions that potentially change the line. If two lines own a dynamic object, and one of them starts changing it, the other will see the changes. This is a side effect that users will be dissatisfied with, because they first worked with a single line, and then it suddenly changed.

We need to add a check : if we uniquely own a dynamic object, that is, the reference count is 1, then we can do whatever we want with it. If the reference count is not 1, we must call new and memmove, create another dynamic object and copy the data from the old object.

class string { string_impl*impl; public: char& operator[](size_t i) { if (impl->use_count > 1) *this = clone(); } return impl->data[i]; } }; Destructor also change. It will reduce the reference count and look: if we are the last owner of a dynamic object, then it needs to be removed.

class string { string_impl*impl; public: ~string() { --impl->use_count; if (!impl->use_count) { delete impl; } } }; Insert performance in std :: map

There is nothing better in the constructor from the array of characters, still new and memmove. But then the constructor will be called to copy the string when creating a pair, which now does not call either new or memmove. When the pair is inserted into the map, the string copy constructor will again be invoked, which again does not invoke new and memmove.

All temporary object destructors will not cause delete either - beauty!

Total, COW more than 2 times speeds up code in C ++ 98 .

If someone tells me now that he knows a technology that increases the performance of my code by 2-3 times, I will use it everywhere.

In the 1990s, many libraries did this. For example, there is a QT library, where COW is present in almost all base classes .

But technology does not stand still. The 2000s are coming, there are processors with Hyper-threading and multi-core processors, not only server-side, but also user ones:

- Hyper-threading in Pentium 4 processors.

- AMD64 2-core Opteron processor designed for servers.

- Pentium D x86-64 is the first 2-core processor for personal computers.

Now each user can have more than 1 core. And our line is already bad, because we have a common reference count.

For example, there are two streams, in which the string refers to a common dynamic object. If threads work with strings and at the same time decide to remove them, it turns out that we from two threads will try to simultaneously change the dynamic reference count use_count. This will cause either a memory leak or an application to crash .

string: COW MT fixes

To fix this, we introduce an atomic variable - let's pretend that C ++ 98 has std :: atomic. Now our class looks like this:

class string_impl { char* data; size_t size; size_t capacity; atomic<size_t> use_count; //<=== Fixed // ... }; Everything works - great! Nearly…

Now we have both the copy constructor and the destructor have atomic instructions in themselves , which is not very good. Non-constant methods turn into a terrible disgrace, because boundary conditions appear that rarely arise, but which we are obliged to handle.

class string { string_impl*impl; public: char& operator[](size_t i) { if (impl->use_count > 1) { // atomic string cloned = clone(); // new + memmove + atomic — swap(*this, cloned); // atomic in ~string for cloned; delete in ~string if other thread released the string } // ... } }; For example, two threads own lines. These lines refer to the same dynamic object. One thread decides to change the string. The line looks at that it non-uniquely owns the dynamic object and starts to clone the object. At this point, the second thread says that it no longer needs the line and calls its destructor.

And the first thread still clones, calls new, memmove. He finished his business and suddenly realizes that he has two lines, but he needs one.

It is necessary to provide logic for this as well, and these are additional checks, perhaps an additional call to new and memmove, and again many atomic operations, which is bad.

Atomic

X86 is not an atomic increment - a simple increase in the integer by one takes one processor cycle [ 1 ]. If this operation is made atomic, it will take from 5 to 20 cycles if only one core makes an atomic instruction and if this core changes the atomic variable [ 2 ]. If the nucleus is not the last to change the atomic variable, then around 40 cycles.

So, this solution is 5-40 times slower only due to the fact that an atomic variable is used. Further worse.

If several cores simultaneously work with an atomic variable, for example, three cores simultaneously want to increment an atomic variable, we will have a lucky kernel that will start to make an atomic increment, the other two cores will wait from 5 to 40 cycles until the core finishes all its actions.

Then a second lucky core appears, which begins to perform an atomic operation, since it was not the latter that changed the atomic variable, it would take 40 cycles. The third core is still waiting. The more nuclei at the same time they try to perform an atomic operation on the same variable, the longer the last nucleus will wait.

This is how the last kernel waits:

In this example, 16 cores and the last core waits around 600 cycles . This is the easiest kernel unluckiest. If we sum up the downtime, then the idle picture will turn out to be quadratic.

At 16 cores, about 6000 clock cycles went nowhere .

There are systems containing more than 90 kernels, and if all of them 90 kernels decide to work simultaneously with the same atomic variable, everything will be very sad.

COW

C ++ 11

In C ++ 11, rvalue reference appears, which allows you to write special classes and special methods that work with temporary objects.

For example, a move constructor for a string works equally well if the string is COW and if the string is the most common baseline from the Middle Ages, where the object is dynamically allocated, and only one line owns it.

string::string(string&& s) { swap(*this, s); } What's going on here? At the entrance comes the object s . It is temporary, we know that the compiler will delete it soon, that no one else will use it, and we can take resources from it . With it, you can do anything at all, the main thing is that for him you can then call the destructor.

Took away resources. We are an empty string, we received a string with the result, we make a swap . Now we are a string with the result, and the time string with no resources is empty.

Let's see how it works in our example, if we have a string without COW.

std::map<string, int> m; m.insert( std::pair<string, int>("Hello!", 1) // string("Hello!") ); A string is created from the “Hello” character array. Here new and memmove are the same as with COW.

The next is better. When copying a string into a pair, we will call the move-constructor of the string (

pair(string&&, int) ). The pair sees that the string is temporary, and takes resources from the temporary object. New and memmove will not.When inserting a pair into a map, the map sees that the pair is also a temporary object (

m.insert(pair&&) ). Map calls the move constructor for the pair, the couple calls the move constructor for its content, the move constructor for the string.Without new and memmove, the line will move inside the map: 1 call to new and 1 call to memmove - the same as with COW, but without atomic operations .

Well, what if we want to copy a line? We are all reasonable people, and we want to copy a line, as a rule, if we want to change it.

Without COW we copied the line, immediately called new and memmove. We can change the line and do anything.

If we have a COW line:

- create a new row object with an atomic increment of the total reference count;

- one of these lines is starting to change;

- the line will atomically look that we do not uniquely own the line, will cause new, memmove, perform additional checks so that the second thread doesn’t destroy this line, that we have the right number of lines, etc.

The result is the same as without COW, but at least a pair of unnecessary atomic operations appears.

char& operator[](size_t i) { if (impl->use_count > 1) { // atomic string cloned = clone(); // atomic— swap(*this, cloned); // atomic in ~string for cloned } return impl->data[i]; } Non-constant methods are still terrible. If we want to go through our line (the first ten characters in a line) using indices, the COW line is 10 atomic operations on the same atomic variable. If two streams simultaneously pass through the same line - a big trouble.

As a result, the COW constructor has atomic operators, the destructor will most likely call atomic instructions — there are many atomic instructions that we don’t need. We did not ask them, and this is against the rules of C ++. We pay for what we do not use.

Beginning with C ++ 11 COW, lines are not allowed in the standard. There are special conditions imposed on the line that COW cannot be implemented. All modern implementations of standard libraries do not have COW lines.

COW is outdated, more careful with its use.

Careful use COW in new projects. Do not trust articles that are more than 10 years old, recheck them. COW does not show such good results as 20 years ago.

Cases where COW is still burning

If you had a COW line and said to all its users: “We have a COW line - it copies everything perfectly, everything is fine, we have COW everywhere”, users could sharpen it and write code taking into account that your classes use COW.

For example, a user could write a class bimap — this is a class that can search both by key and by value.

// COW legacy class bimap { std::map<string, int> str_to_int; std::map<int, string> int_to_str; public: void insert(string s, int i) { str_to_int.insert(make_pair(s, i)); int_to_str.insert(make_pair(i, std::move(s))); } He could write it through two std :: map, each of which has a line. Here is the COW line, the user knows about this, as well as the fact that there are two copies of the line in the map. The user knows that they will dynamically refer to the same memory object, and that this is effective from memory. If here the COW line is changed to the usual one, the cost of memory will double.

// COW legacy fix class bimap { std::map<string, int> str_to_int; std::map<int, string_view> int_to_str; public: void insert(string s, int i) { auto it = str_to_int.insert(make_pair(std::move(s), i)); int_to_str.insert(make_pair(i, *it.first))); } In C ++ 17, this can be corrected, for example, as in the example above, replacing one of the lines with string_view. From memory it will turn out exactly the same, but atomic operations will be removed and the code will be slightly faster.

One optimization for string

It turns out that we added a move constructor to that ancient line of the year 98, which uniquely owned a dynamic object, and that’s the perfect line? Nothing better can be done?

That's what people came up with. They looked at one of our first examples where a map is created from a string and an int, and a couple is inserted into it, shook their heads for a long time and said how bad things are:

- Here new and memmove is called. For new there can be a system call and atomic operations, but we still do not have C ++ 14, where the compiler can optimize new. Everything is very bad - let's do something better!

People have come up with how you can, without changing the size of the string — the variable std :: string — to store characters in it without dynamically allocating memory . They looked at the very first class of the line:

—We have a pointer on a 64-bit platform here - this is 8 bytes, we have a size on a 64-bit platform too - this is again 8 bytes. This is 16 bytes - you can save 15 characters! What if to use these two variables not as a pointer variable and a size variable, but to place data directly on top of them?

class string { size_t capacity; union { struct no_small_buffer_t { char* data; size_t size; } no_small_buffer; struct small_buffer_t { char data[sizeof(no_small_buffer_t)]; }small_buffer; }impl; } Now inside the string in the example above is a union. The first part is a pointer to data and the size_t variable. The second part of the union, the structure, contains data (a small buffer of the size of char * and size_t). Approximately 16 bytes fit into it.

If capacity = 0 - this means that we have not dynamically allocated memory and we need to go to impl small_buffer_t, and our data will be in data without our dynamic allocation.

If capacity ≠ 0, it means that we have dynamically allocated memory somewhere, and we need to go to impl no_small_buffer_t. There is a pointer to a dynamically allocated piece of memory and the variable size.

Of course, in the example a slightly simplified version. Some standard libraries, such as Boost, can store 22 characters and, in addition, terminate 0 without dynamic allocations without increasing the size of the string. All modern standard libraries use this trick. This is called Small String Optimization .

This thing is good, firstly, on empty lines. They are now guaranteed not to allocate memory, and guaranteed to call the c_str () method will no longer allocate memory.

I know companies that have rewritten std :: string, because their empty string dynamically allocates memory. Do not do this anymore.

Secondly, for example, our “Hello!” Will fit without dynamic allocation. If the usernames are stored in the lines, their names and surnames are separate from the names, some identifiers, cities, countries, names of currencies - as a rule, everything will fit into the line without dynamic allocations.

But people looked and again thought that slowly. Starting with C ++ 17, the compiler is able to optimize memmove , its alternative is the one that is in the line at the compilation stage. But this was not enough. In C ++ 17 there is Guaranteed copy elision , which guarantees that in some cases there will be no copies at all and there will be no memmove. Just in a map, by some miracle, this line is formed without intermediate variables.

With the line figured out, but the answers to some clarifying questions from the audience here

- More is not needed!

- I have a real case from practice. Replacing the COW line with a string with Small String Optimization sped up the project by 7-15%, because for some reason we work most of the time with small lines that get into std :: string. Therefore it becomes faster.

Is it possible to use a line that will dynamically store size and capacity in memory? Can. There will be problems with the optimizer. Seeing that we have a pointer to some part of the memory, he will not be able to better optimize these variables. The optimizer will not be able to do heuristics based on size and capacity. If size and capacity are located on the stack and the optimizer sees this, it will be able to make heuristics on it and better optimize the code.

- Yes, worse. But the number of bytes, which is in small_buffer, is the same.

- So far, everything looks like a bicycle ad in fact. For example, the COW in the GCC was until 2015, before the release of 5.1. So you had to write bikes?

- More is not needed!

- How? For example, everyone says that the cool thing is Small String Optimization. But, let's say, when a line is dynamically allocated, size and capacity can be thrust into the same buffer as the line. Then the string will be only 8 bytes. And if I have many lines in the object, will it be more profitable?

- I have a real case from practice. Replacing the COW line with a string with Small String Optimization sped up the project by 7-15%, because for some reason we work most of the time with small lines that get into std :: string. Therefore it becomes faster.

Is it possible to use a line that will dynamically store size and capacity in memory? Can. There will be problems with the optimizer. Seeing that we have a pointer to some part of the memory, he will not be able to better optimize these variables. The optimizer will not be able to do heuristics based on size and capacity. If size and capacity are located on the stack and the optimizer sees this, it will be able to make heuristics on it and better optimize the code.

- Does this optimization work worse with wide unicode strings?

- Yes, worse. But the number of bytes, which is in small_buffer, is the same.

Development without regard to ready-made solutions. std :: variant

Using the example of std :: variant, consider what happens if people try to cycle-build without looking at other people's decisions.

In C ++ 17, the std :: variant class is adopted - this is a smart union that remembers what it stores. We can write std :: variant <std :: string, std :: vector <int >>. This means that the variant can store in itself either a string or a vector. If we write the word “Hello” in such a variant, the variant remembers the index of the template parameter in the variable t_index (which contains a line), locates the line in place and will store it.

union { T0 v0; T1 v1; T2 v2; // ... }; int t_index; If we now try to write a vector there, std :: variant will see that it stores the line at the moment, not the vector, will call delete for the line, construct a vector in place of the line and update t_index at some point.

The class was adopted in C ++ 17, and people all over the world immediately rushed to write it again, rewrite, and cycle building .

template <class... T> class variant { // ... std::tuple<T...> data; }; Above - the real code from the open source project, do you see an error in it? Here, instead of union, was a struct.

Below is the code from the commercial project:

variant<T...>& operator=(const U& value) { if (&value == this) { return *this; } destroy(); // data->~Ti() construct_value(value); // new (data) Tj(value); t_index = get_index_from_type<U>(); // t_index = j; return *this; } Here the error is much worse and in many implementations of the variant it is present. There will be big problems with exceptions.

For example, we have a variant that stores std :: string and we are trying to write a vector into it. The destroy line will invoke delete for the string. From this point on, the variant is empty, it does not store anything.

Then in place of the line we are trying to create a vector. The vector is transmitted by a constant link and we have to copy it. Call placement newand trying to copy the vector. The vector is a large class, it can store user data, which when copying can throw exceptions. Suppose we threw an exception. It will exit the current block, causing the destructors of all local variables, and will exit the block above. There, an exception will also cause the destructors of all local variables. It will reach the block where the variant was declared and cause the variant destructor.

variant<T...>& operator=(const U& value) { if (&value == this) { return *this; } destroy(); // data->~Ti() construct_value(value); // throw; ... // ... // ~variant() { // data->~Ti() // Oops!!! The destructor of the variant looks at what is written to it in the variable index, which has not yet been updated. It contains std :: string, i.e. variant thinks that it has a string to be deleted. But in fact, there is nothing at this place, the line has already been deleted. At this point , your entire program will crash .

To avoid this, it is enough to open the interface of the standard library and see that std :: variant has the valueless_by_exception function , wonder what it is, and see why it is needed. You can open the source Boost - there 2/3 of the code is devoted to this problem.

I say this all so that you and all your subordinates watch how other people have bikes written, in order to avoid such sad consequences. This is a very popular mistake. She slips everywhere.

I give an example of a variant only, but in many other classes there is a similar one. People rewrite, think that something is superfluous, do not look into someone else's code, and this leads to disastrous consequences.

Please look at the code that you are rewriting or inventing

again. This approach will greatly simplify your life.

FORCE_INLINE

It's time to jump on sick corns.

You can find projects where all methods are labeled as FORCE_INLINE - literally the entire project up and down in gigabytes of code. You ask people:

- Why are you all tagged FORCE_INLINE?

- We wrote a small benchmark, checked - with FORCE_INLINE faster. We wrote and here is a small benchmark - checked, with FORCE_INLINE faster. We tested everything with small benchmarks and everywhere faster with FORCE_INLINE - we pushed FORCE_INLINE into the whole project.

- You did the wrong benchmark.

And from this point on, it is very difficult to argue with people, because they have a benchmark, and you cannot write such a benchmark.

The processor is not only the data cache

Modern processors are very complex devices. Nobody knows until the end how they work, even the developers of processors.

" : , (TLB) () , , " [ 1 ].

A modern processor cannot run your program directly from disk or straight from RAM. Before he starts executing the program, he must pull it up in the instruction cache. Only from there the processor can execute instructions.

Some processors have a cunning instruction cache, which makes additional processing of commands that fall into it. But the instruction cache is small, there is not enough code in it. If you use a compiler that FORCE_INLINE really does, when you ask him to do FORCE_INLINE, your binary code increases.

Imagine that in the picture above your program. Green indicates what fit into the cache. This is additionally your hot path - the place where your program spends most of its time. Now it all fits into the processor’s cache, there will be no idle time in the instruction cache.

If you add FORCE_INLINE, on benchmarks everything will be fine, but the code will no longer fit into the instruction cache.

When you get to the red line, the processor will say: "I do not have new instructions, I have to wait for them to catch up with the memory." If it is somewhere in the loop, it will constantly flip through some instructions, pull them from memory to cache. It is slow.

In order for your benchmark to correctly reflect the real state of affairs, it must take into account all 3 caches - data, instructions and addresses.. To do this, the benchmark should:

- , production ;

- , production ;

- , production .

In other words, it is impossible to write such a benchmark . You can test only your production code.

If you have added FORCE_INLINE and your production code has not become better - it is better to remove FORCE_INLINE. Modern compilers can inline. 10 years ago, they did not know how to do this well, and FORCE_INLINE really did help in some cases, for example, with GCC. Not now.

I say that FORCE_INLINE is bad, it is worth giving it up in most cases, although it can sometimes be useful.

However, compiler developers are smart people. They see that users write FORCE_INLINE everywhere and programs get slower. Therefore, modern compilers have different heuristics to ignore FORCE_INLINE. Some compilers simply count the lines inside the function - if your function is longer than three lines of code, FORCE_INLINE will be ignored.

There is a legend that there is a compiler, where people are very concerned about FORCE_INLINE, he got them so hard that they came up with the following heuristics:

If the user marks the FORCE_INLINE function, which is guaranteed not to be done by FORCE_INLINE, the compiler remembers and considers how many times this has happened. If a certain threshold value is exceeded, the compiler in itself puts a tick: “The user does not know what FORCE_INLINE is and does not use it where it is necessary to ignore all FORCE_INLINE from the user”.

If you suddenly wrote a program, added a dozen FORCE_INLINE there and everything became remarkably faster, it is possible that you use this compiler. See the assembler code if you really need something to be embedded so that FORCE_INLINE really is in this place. I myself was surprised that in some cases compilers silently ignore FORCE_INLINE.

Conversely, the compiler can silently ignore your "not inline". He will think: “You don’t know what you’re doing, wrote not inline, but I know better - I’m in line”.

Case from practice

I know a very smart programmer who wrote the implementation of B-Tree. Everything was done in C ++, on templates, perfect forwarding everywhere , without any dynamic allocation - just perfect. When this code was checked on one of the Performance Analyze, it turned out that execution was not fast enough because it took most of the time to wait for instructions in the instruction cache.

The programmer thought, slightly simplified B-tree, removed perfect forwarding, replaced it everywhere with constant links, because B-tree did not change the data that came to him, removed a couple of templates, made the code a little more compact, added noexcept, which also reduces the volume binary code.

As a result, the code began to work 2 times faster.. The algorithm was not changed - everything is the same, but the code began to work faster.

If you do not believe me that FORCE_INLINE is bad, then I recommend watching the lectures ( 1 , 2 ) of the Clang developer Chandler Karrut . He says the same thing as me, but in short, faster and in English.

From “harmful” benchmarks, we turn to “harmful” advice.

Aliasing

Rarely, when development goes under one platform. More often it happens that once upon a time they developed for one platform, and now for several.

Usually, if a company starts as a small startup, it can choose one platform for itself, for which it already has customers. Gradually, the company grows, the code becomes more popular, and the thought comes to port it to other platforms .

But at the moment when people start porting to GCC, the program stops working: everything compiles, but tests do not pass, the program gives some inadequate output. People climb Stack Overflow or other programming resources ask this question and get an answer:

- Add the magic compiler option -fno-strict-aliasing and everything will work for you.

And really - everything starts to work.

Let me explain with an example what this option does (I did not bother with the function name here).

bar qwe(foo& f, const bar& b) { f += b; return b * b; } The qwe function takes two variables: foo by reference, bar by constant reference. These are two different types of data. Inside the body of the function, the value b is added to f and the square from b is displayed.

Below is a slightly assembler pseudocode that compiles the compiler if you slip the -fno-strict-aliasing option.

bar qwe(foo& f, const bar& b) { // load b // load f f += b; // load b return b * b; } Compiler:

- loads into the register the value of the variable b,

- loads into the register the value of the variable f,

- will perform the operation f + = b,

- write the value of f to memory

- will load the value of b again into memory,

- executes b 2 .

Here, most people wonder why we loaded b a second time into the register, which is already in the register.

Because they added the option -fno-strict-aliasing. It tells the compiler that two types of data, absolutely different, not intersecting with each other, can still be in the same memory cell . Based on this, the compiler starts generating code.

When you do f + = b, the compiler thinks that perhaps f and b are in the same memory location, and writing to f changes the value of b. And this is in the whole of your project - an option was added, in the whole project the code began to be generated worse .

According to different measurements (for example, this and this), depending on the code and optimization, it gives from 5% to 30% of performance drawdown . It all depends on your code. If you just have system calls and networking, perhaps nothing will change for you with -fno-strict-aliasing. But just writing it when building your project is not entirely correct.

It is more correct to enable the compiler warning (GCC can warn when strict-aliasing is violated) and fix the strict-aliasing violation at this place. Typically, this violation occurs when you use reinterpret_cast for two types that are not related to each other.

Instead of reinterpret_cast it is better to use memcopy or memmove. GCC compilers can optimize them. The performance drawdown in the place where you make memcpy instead of reinterpret_cast should not be.

From fierce theory, we turn to tips that can be used in everyday practice.

Daily tips

Most likely, you know all this, and, most likely, do not use it.

Example # 1, vector

Below is an example of the function foo, in which a vector is created from shared_ptr. We know shared_ptr is exactly a thousand. They are inserted into the vector, and it returns.

auto foo() { std::vector< std::shared_ptr<int> > res; for (unsigned i = 0; i < 1000; ++i) res.push_back( std::shared_ptr<int>(new int) ); return res; } The first drawback: if we know how many elements our vector will hold, it is worth calling res. reserve (1000) . This will preallocate the required amount of memory , and the vector, when inserted into it, will not try to guess how much memory is needed. Without reserve in C ++ 11, the vector will first take a value under 2 elements, then under 4 elements, 8, 16, etc. All this time, when resizing, it will call new, delete, copy or move your data type, which is slow.

If you call res. reserve (1000), then the vector knows for sure that it is 1000 elements, there will be no additional move, new, delete .

Starting with C ++ 14 compilers are allowed to optimize calls to new. Some compilers can do this, but they should be lucky - not in all cases it works. If you want to be sure that the compiler will generate the optimal code, it is better to insert a reserve - it will not be worse.

The compiler will not be able to optimize another problematic place in this example. This is a call to std :: shared_ptr <int>. In this line there are two dynamic allocations:

- The first is a new int.

- The second is hidden inside the shared_ptr constructor. He needs a reference counter, which he dynamically allocates somewhere on the heap.

And in memory they are in different places. If your application does not use weak pointers, or does not use them much, instead of std :: shared_ptr <int> (new int), it makes sense to write std :: make_shared <int> () .

Make_shared immediately allocates a chunk of memory where it can store both an int and a reference count. Due to the fact that these two variables will be next, it is more cash-friendly. Working with shared_ptr will be a little faster, there will be not two, but one dynamic allocation.

So, we accelerated the code in the first example a little more than twice, simply by adding reserve and replacing the constructor shared_ptr with make_shared .

Example # 2, for (auto v: data)

auto foo() { std::vector<std::string> data = get_data(); // ... for (auto v: data) res. insert( v ); return res; } The code in the example above is terrible. Let's improve it gradually, and, most likely, not from the place that you noticed.

V is a copy of an element from a vector. Outside the loop, and after iterations of the loop, we don’t need it. We can tell the compiler that the variable v is no longer in use, replacing v with std :: move (v) , turning it into an rvalue, and then inserting it into the resulting res container will occur via the rvalue reference. The resources from v will simply be thrown inside the container, which is faster.

The vector data array is no longer used; everything that it contains will be destroyed when the function exits. Instead of copying v , we can write for (auto &&v: data), i.e. make a link to v (&& - here it is also a link).

The result is the following: v - the link to the element inside the data. When we apply std :: move to this link, the resource will be taken from the data vector - the string will be dragged directly from the vector directly from the vector. There will be no dynamic allocations in the end. Compared to the original code, it became 100,500 times faster .

Example # 3, vector

auto foo() { std::vector<std::string> data = get_data(); // ... std::string res = data.back(); data.pop_back(); // ... return res; } The situation, as in the example above, occurs more often - the same, but from the vector we memorize the last element, and then we retrieve this last element.

You can also optimize: we say std :: string res = std :: move ( data. Back () ) . After that in res is the value of the last element (we dragged the resources from the vector into the variable res). Now back inside the vector is an empty string, data.pop_back () will simply change one of the internal variables of the vector. No dynamic allocations, no delete calls - all faster.

Example # 4 global constants

Parsers and protocols often have arrays of previously known strings. People often write them like this:

#include <vector> #include <string> static const std::vector<std::string> CONSTANTS = { "Hello", "Word", "!" }; It seems that this is only a little not optimal, but, in principle, not so scary. This will happen once when you start your program or when loading a DLL.

But when a project is very large, a large number of such pieces of code can accumulate in different parts of it - 10, 100, 1000. The initialization of the CONSTANTS variable occurs when the program is started or when the dynamic library is loaded. At this moment, this single-threaded code will call new, allocate, initialize the vector of lines, and this can slow down the start of your program or load the dynamic library.

For those who write realtime applications and applications that need to restart quickly, if they have fallen, this can be critical. If you have thousands and millions of such objects, it will slow the start of the program.

If you need an array of constants, and you refer to it only by index or use it only in range-based for, you can replace the vector with a regular array. That is, instead of std :: vector <std :: string> CONSTANTS, you can write std :: string_view CONSTANTS [] .

As a result, the start of your application will not do additional operations , call new, additionally fragment the memory.

If you need a strong guarantee that this will be fulfilled even before your program starts, you can

static constreplace it with constexpr. It will oblige your compiler to execute this piece of code before runtime.Example # 5 Inheritance

// base. hpp struct base { // ... virtual void act() = 0; virtual ~base(){} }; Above the base base class, in it the act method is nothing interesting. It is interesting when we inherit from this base. Imagine that you are a developer in a company and you were told to figure out someone else’s code, and you see only what goes below.

// something. cpp struct some_implementation: base { // ... void act() { /* ... */ } }; You only see this, you have not looked into the base description yet and you are wondering what the act method is, whether it is declared in the base class, whether we are overloading it, and in general what is happening here.

You can make the code more readable by simply adding the word override :