How to become a GPU engineer in an hour

Does a non-gaming iOS developer need to be able to work with the GPU? Do they even need to know that there is a GPU in the iPhone? Many people work successfully in iOS development without ever thinking about this topic. But the GPU can be useful both for 3D graphics and for other tasks, in some cases leaving the CPU far behind.

When Andrei Volodin (Prisma AI) spoke about using GPUs in iOS at the Mobius 2017 Moscow conference, his talk became one of the conference favorites and received high ratings from the audience. Now, based on that talk, we have prepared a habrapost that presents all the same information in text form. It will be interesting even to those who do not work with iOS: the talk begins with things that are not tied specifically to this platform.

The plan is as follows. First, we will look at the history of computer graphics: how it all began and how we got to where we are now. Then we will figure out how rendering works on modern video cards, what Apple offers us as a hardware vendor, what GPGPU is, and why Metal Compute Shaders is a cool technology that changed everything. At the very end we will talk about the hype train, that is, what is popular right now: Metal Performance Shaders, CoreML, and the like.


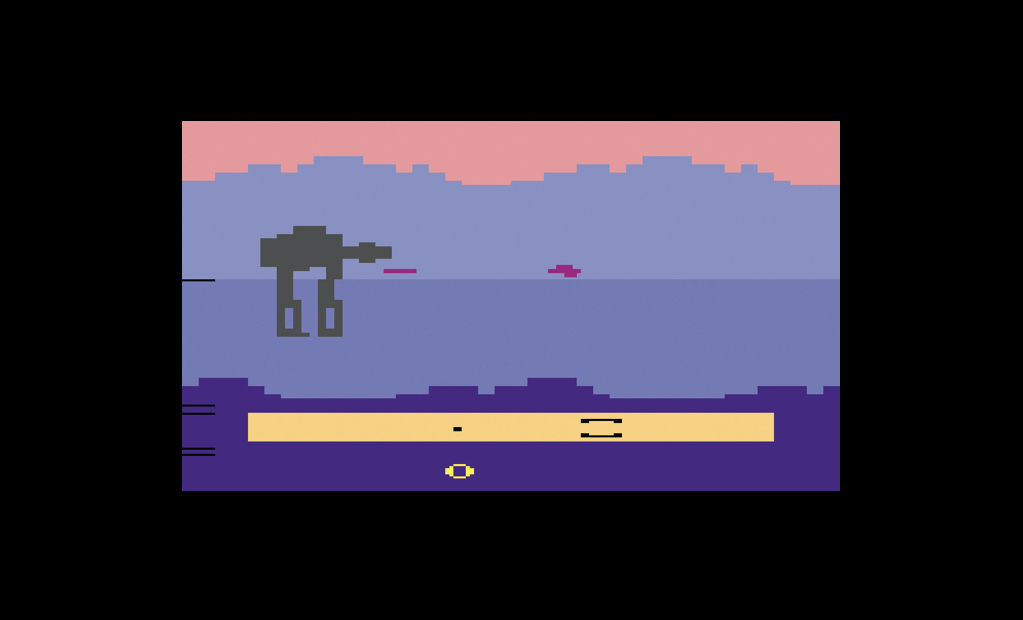

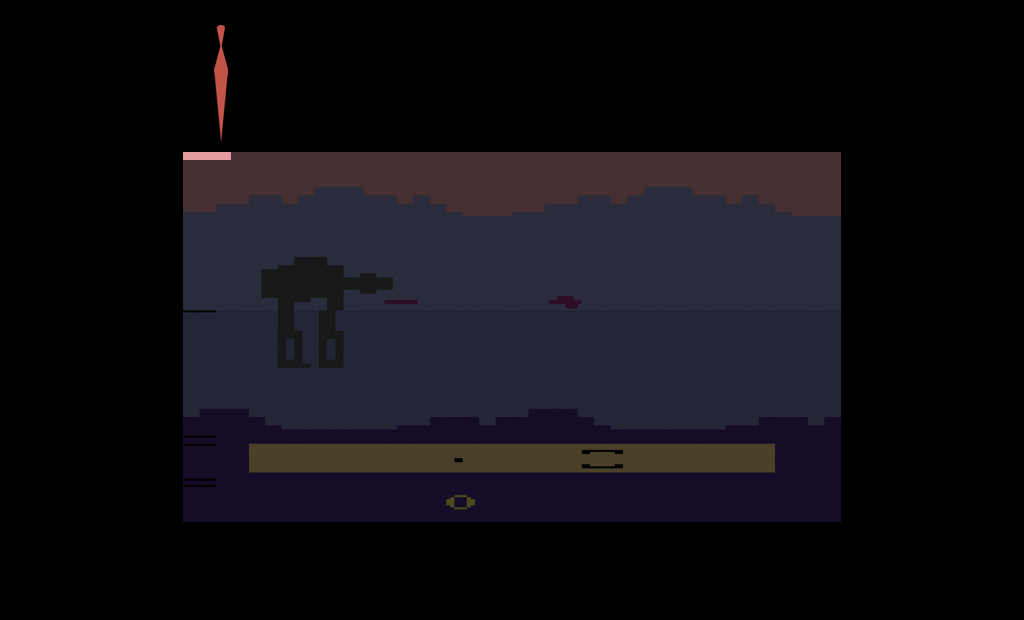

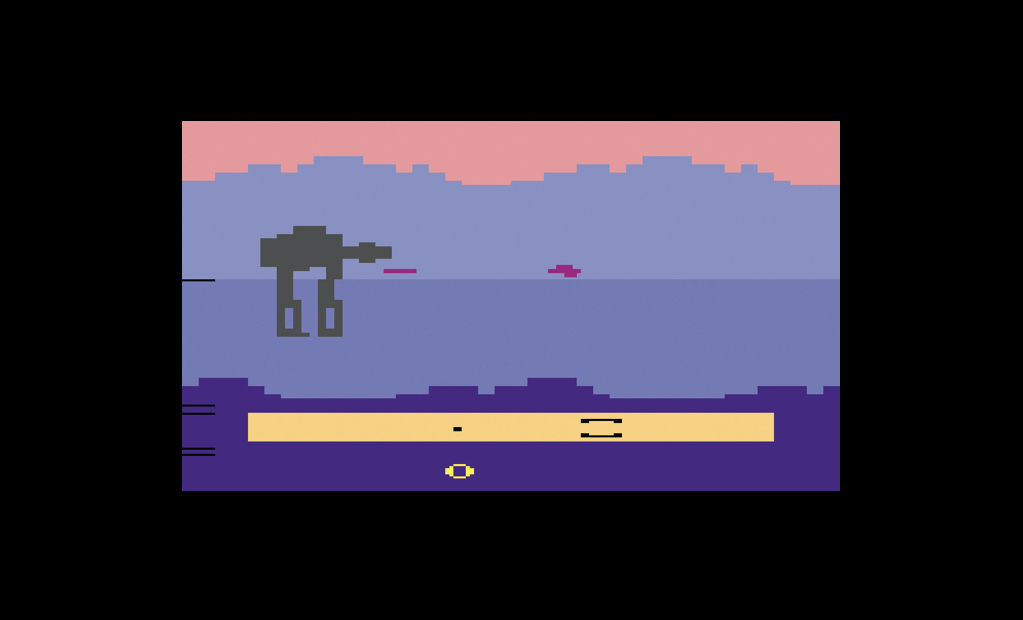

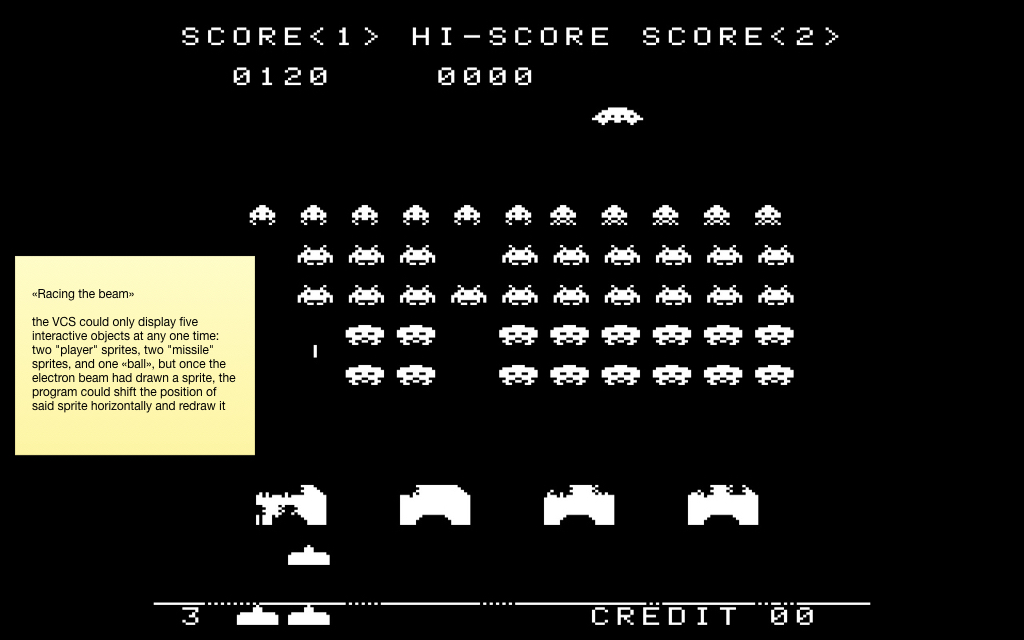

The first known system with dedicated video hardware was the Atari 2600, a well-known classic gaming console released in 1977. Its distinguishing feature was that it had only 128 bytes of RAM, and not all of that was available to the developer: it had to hold the game's data, the console's own system needs, and the entire call stack.

Games were typically rendered at 160x192 resolution, with 128 colors in the palette. It is easy to calculate that storing a single frame would have required many times more RAM than the console had. This console therefore went down in history as one big hack: all the graphics in it were generated in real time (in the truest sense of the word).
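The "easy to calculate" step can be spelled out. This is a back-of-the-envelope sketch in Python that assumes a hypothetical densely packed frame buffer with the minimum number of bits per pixel; the resolution, palette size and RAM size are the figures quoted above.

```python
WIDTH, HEIGHT = 160, 192   # resolution quoted in the text
COLORS = 128               # palette size quoted in the text
RAM_BYTES = 128            # total RAM of the console

# Minimum number of bits needed to address every palette entry (7 for 128 colors).
bits_per_pixel = (COLORS - 1).bit_length()

# Size of one fully stored frame, assuming pixels are packed with no padding.
frame_bytes = WIDTH * HEIGHT * bits_per_pixel // 8

print(frame_bytes)               # 26880
print(frame_bytes // RAM_BYTES)  # 210
```

Under these assumptions a single frame would need about 26 KB, roughly 210 times the RAM available.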

At that time, televisions were cathode-ray tubes with electron guns. The image was scanned line by line, and as the TV scanned the image arriving over an analog cable, the developers had to tell it what color to draw for the current pixel. This is how the complete image appeared on the screen.

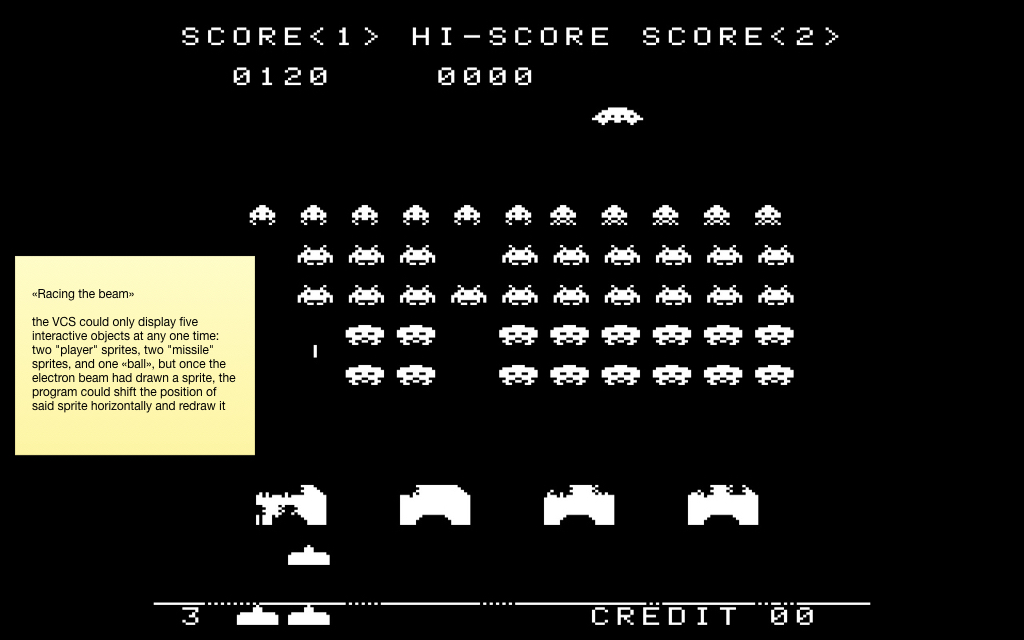

Another feature of this console was that at the hardware level it supported only five sprites at a time: two sprites for the players, two for the so-called "missiles", and one for the ball. Clearly this is not enough for most games, because there are usually more interactive objects on the screen.

So developers used a technique that later went down in history as "racing the beam". As the beam scanned the image coming from the console, the pixels that had already been drawn stayed on the screen until the next frame. The developers therefore moved the sprites while the beam was moving, and could thus draw more than five objects on the screen.

This is a screenshot from the famous Space Invaders game, which has far more than five interactive objects. Effects such as parallax (with wave-like animations) were drawn in exactly the same way. A whole book, Racing the Beam, was written about all this. I took this curious illustration from it:

The fact is that the TV scanned the image non-stop, so the developers had no time to read the joystick, compute game logic, and so on. The resolution was therefore made larger than what was visible on the screen. The “vertical blank”, “overscan” and “horizontal blank” zones are a fake resolution that the TV scanned while no video signal was being sent, and the developers used that time to compute the game logic. In Activision's Pitfall, the logic was so complex for its time that the developers had to draw extra treetops at the top and black ground at the bottom just to buy more time to compute it.

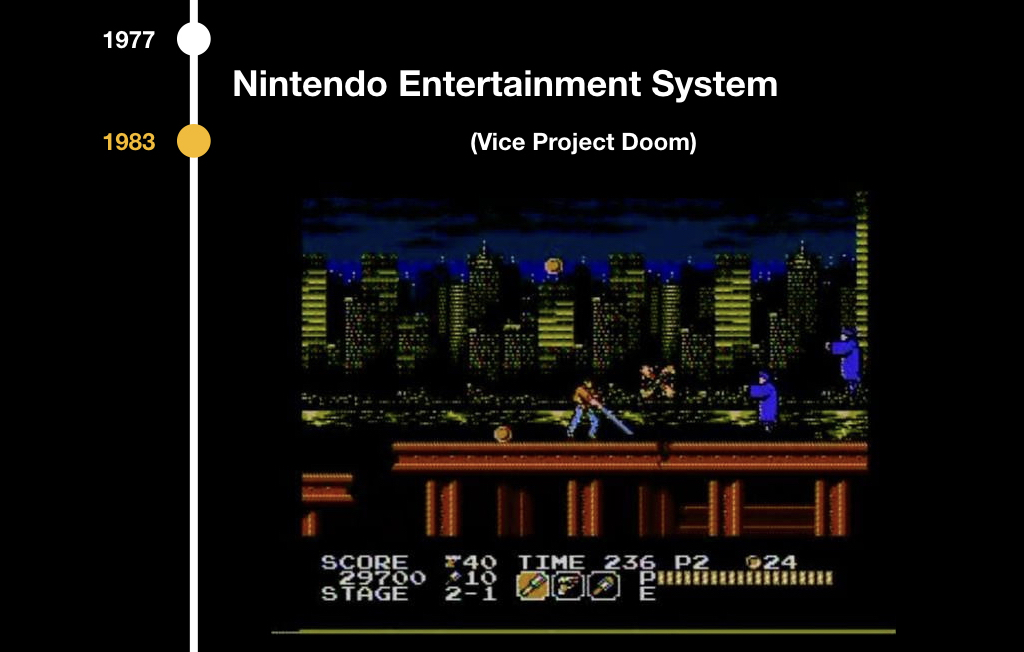

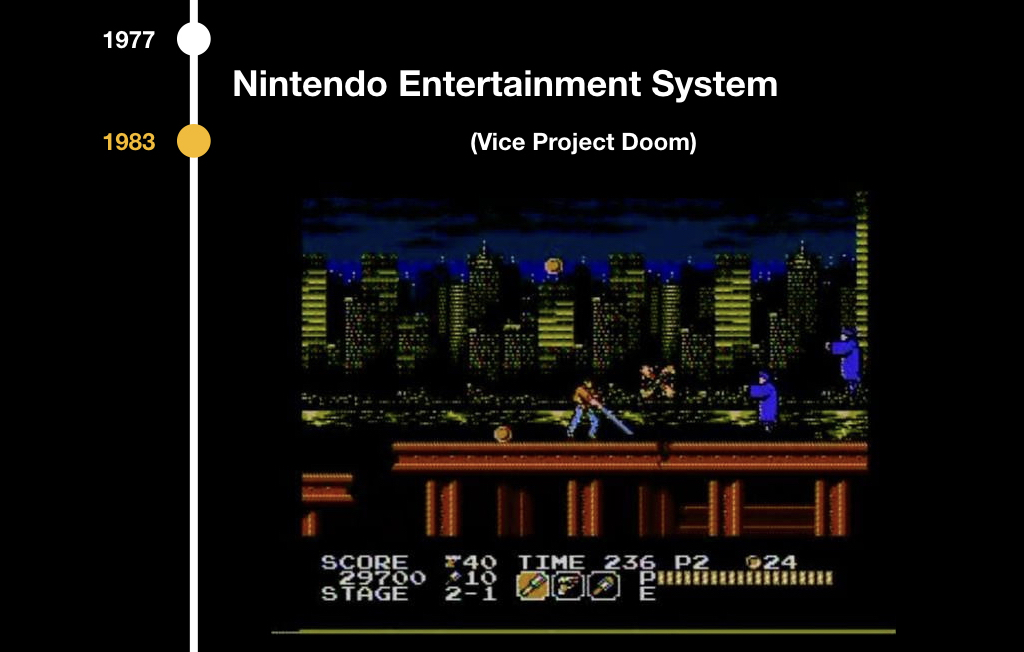

The next stage was the Nintendo Entertainment System in 1983, which had similar problems: it already had an 8-bit palette, but there was still no frame buffer. It did have a PPU (picture processing unit), a separate chip responsible for video output, and it used tile graphics. Tiles are blocks of pixels, most often 8x8 or 8x16. That is why all games of that period look a little blocky:

The system scanned the frame in such blocks and worked out which parts of the image to draw. This saved a great deal of video memory, and an additional advantage was collision detection out of the box. Gravity appeared in games because it became possible to tell which squares intersect with which: you could collect coins, lose lives on contact with enemies, and so on.

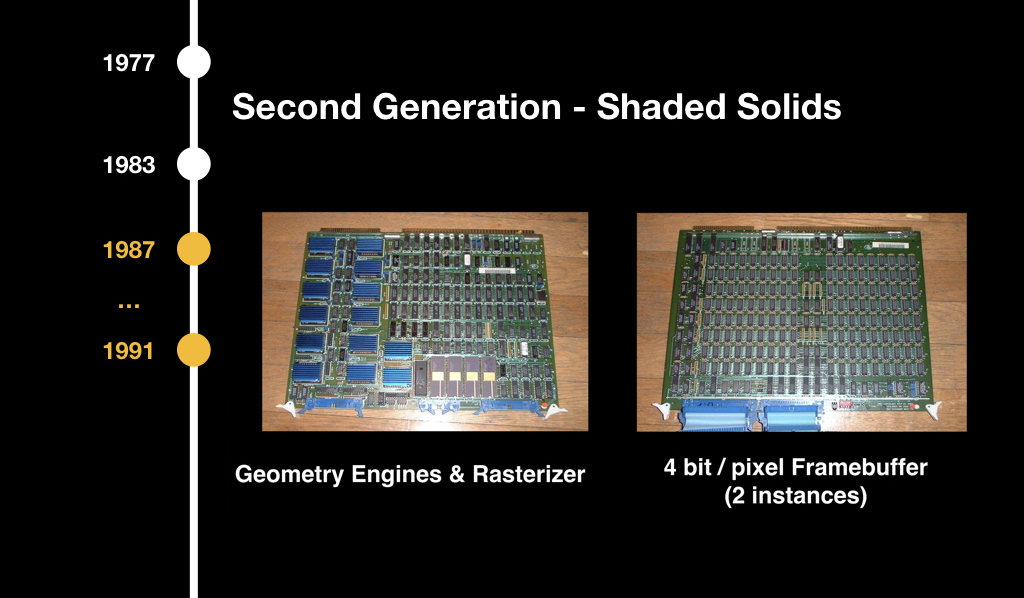

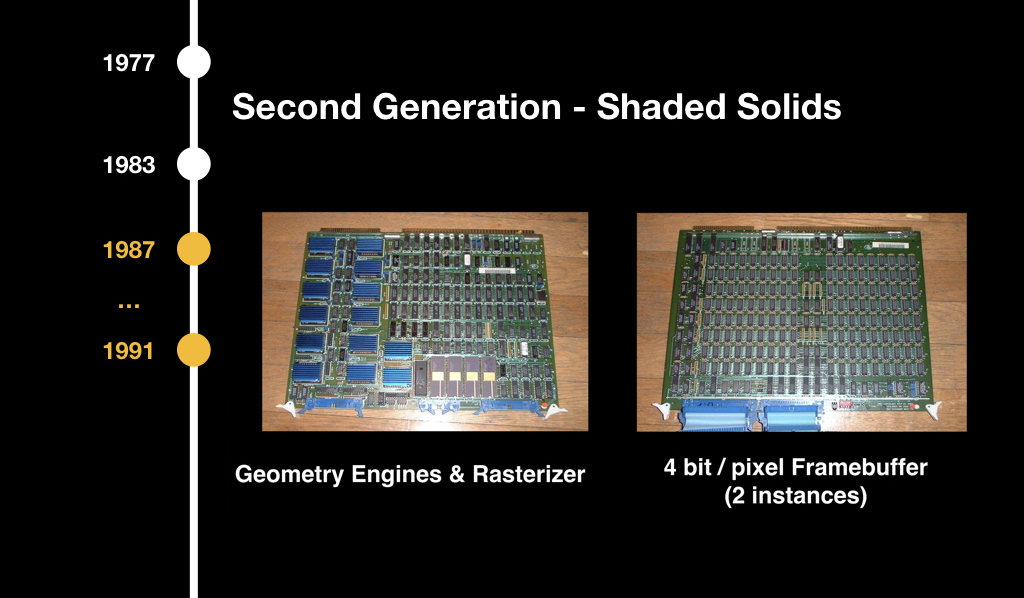

Then came 3D graphics, but at first it was insanely expensive and was used mainly in flight simulators and some entertainment systems. It ran on monstrous, huge chips and did not reach ordinary consumers.

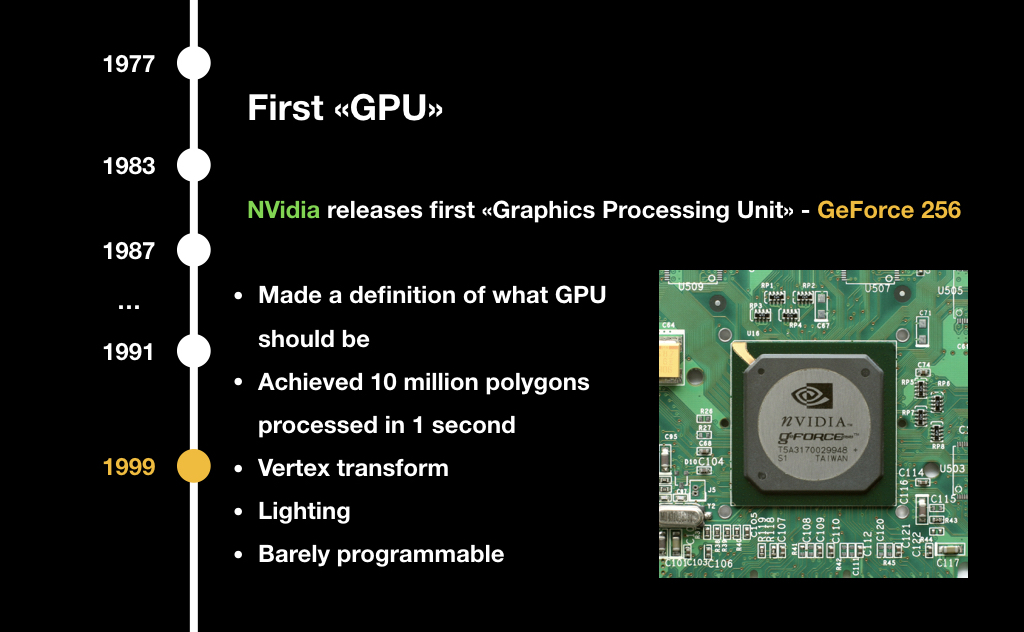

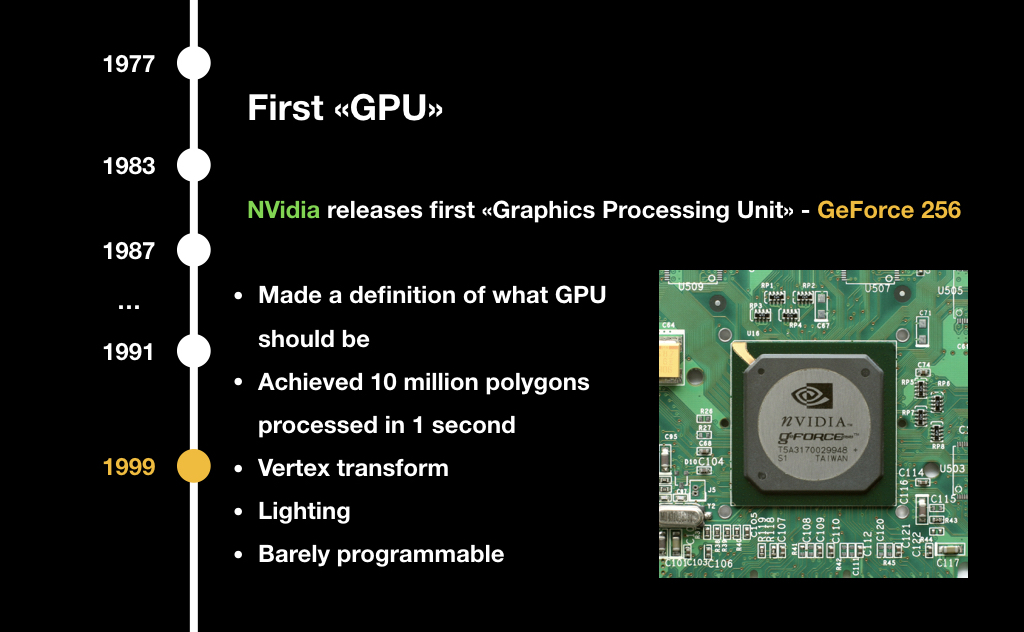

In 1999, the well-known company NVidia introduced the term GPU (graphics processing unit) with the release of a new device. At that time it was a very highly specialized chip: it handled a number of tasks that sped up 3D graphics a little, but it could not be programmed. You could only tell it what to do, and it returned the answer computed by one of its built-in algorithms.

In 2001, NVidia released the GeForce 3 with the nfiniteFX engine, in which shaders first appeared. We will definitely talk about them today. It was this concept that turned all of computer graphics upside down, although at that time shaders were still written in assembler, which was quite difficult.

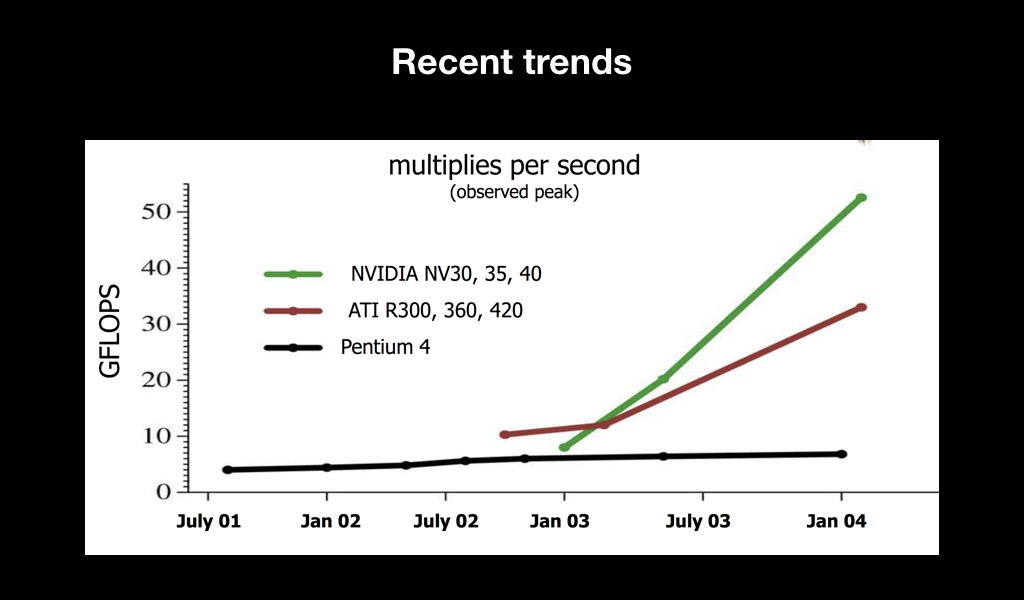

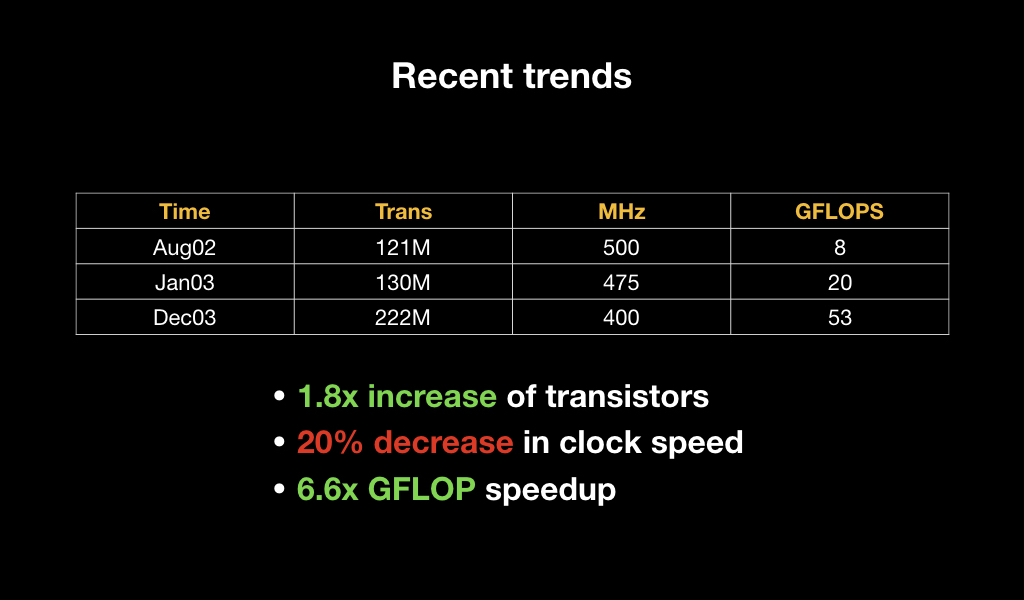

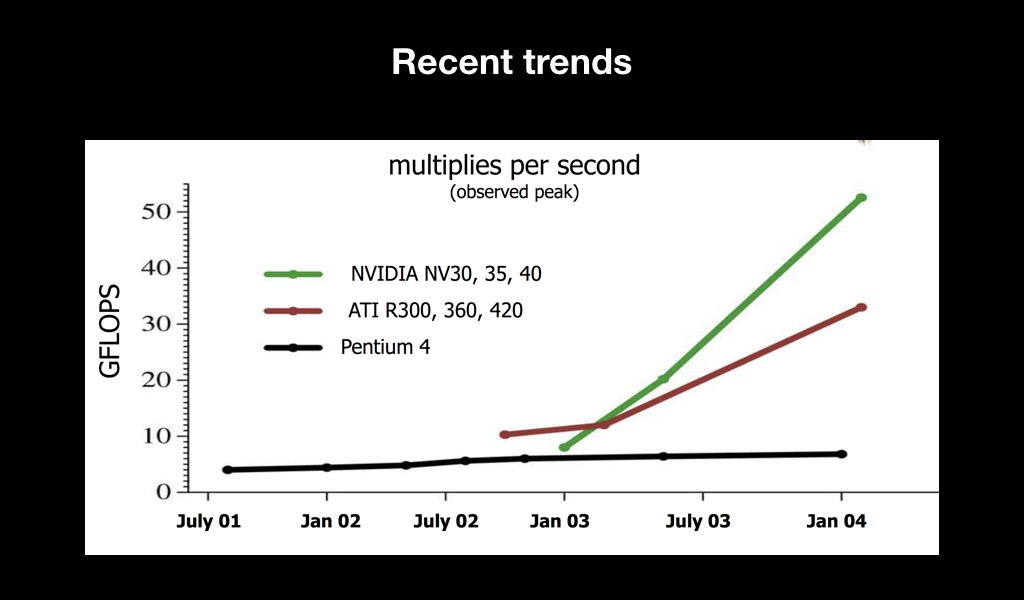

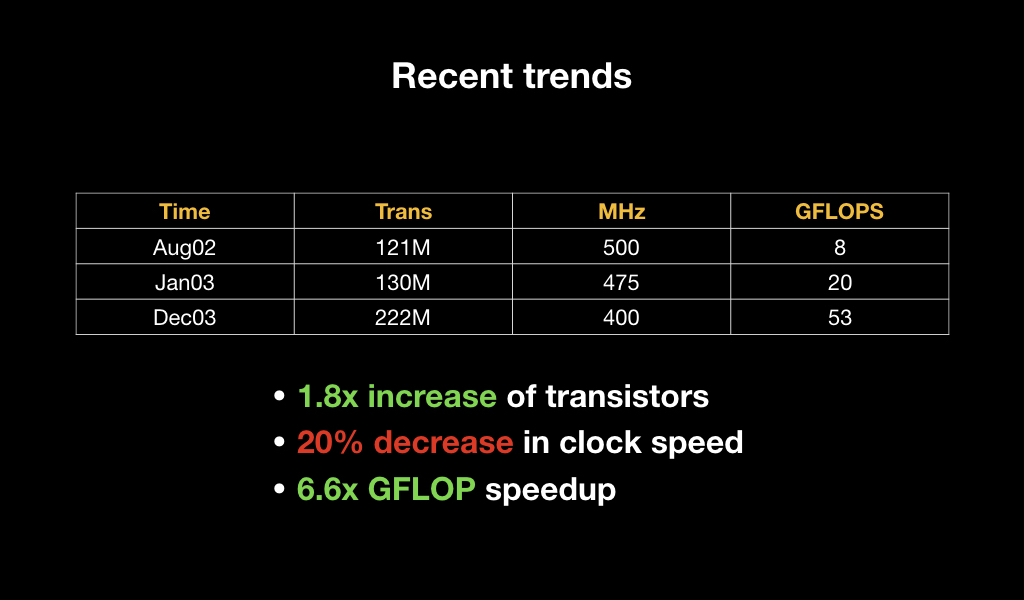

The main thing that happened after that was a trend. You have probably heard of FLOPS as a metric of hardware performance. It became clear that over time video cards simply fly off into space in terms of performance compared with central processors:

And if we look at their specifications, we see that in literally one year the number of transistors doubled and the clock frequency went down, yet overall performance grew almost 7 times. This suggests that the bet was placed on parallel computing.

To figure out why a GPU needs to compute so many things in parallel, let's see how rendering currently works on most video cards, both desktop and mobile.

For many of you it may be a major disappointment that a GPU is a very dumb piece of hardware. It can only draw triangles, lines and points, nothing else. It is heavily optimized for working with floating-point numbers. It cannot compute in double precision and, as a rule, works very poorly with int. There are no abstractions on it such as an operating system. It is as close to the hardware as it gets.

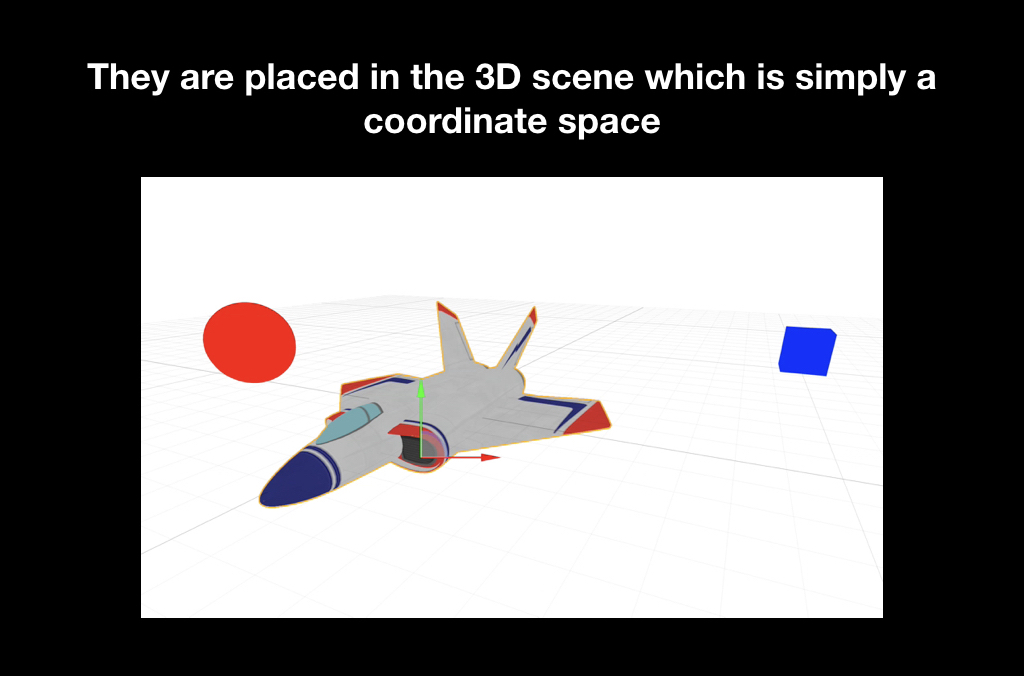

For this reason, all 3D objects are stored as a set of triangles, onto which a texture is most often stretched. These triangles are often called polygons (those who play games are familiar with phrases like “there are twice as many polygons in Kratos' model”).

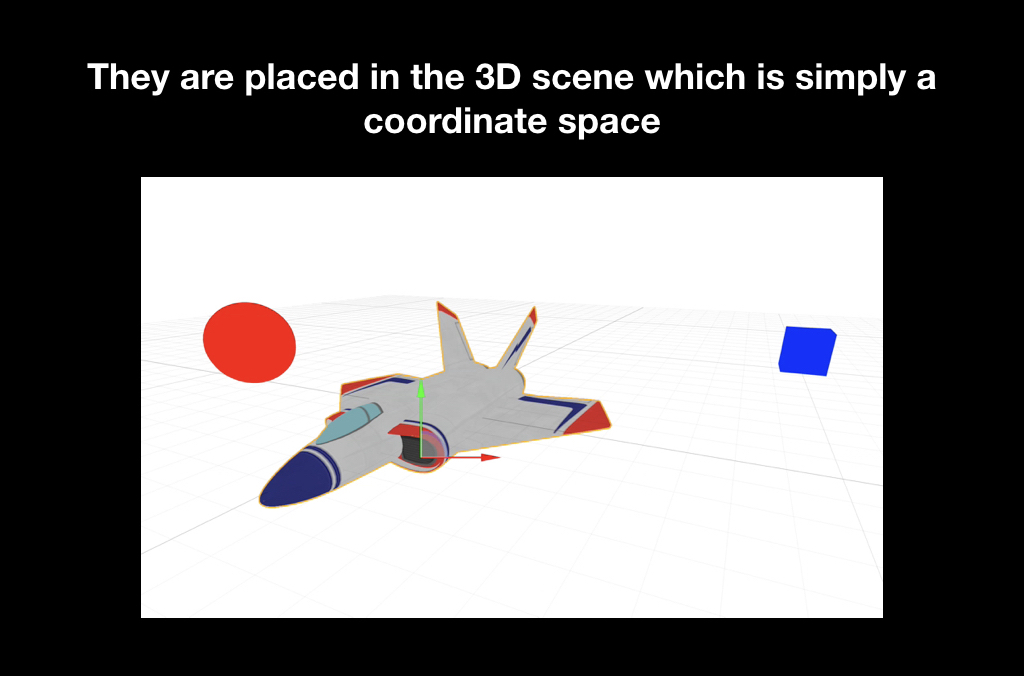

To render all this, the triangles are first placed in the game world. The game world is an ordinary three-dimensional coordinate system in which we place them, and each object has, as a rule, its own position, rotation, scale, and so on.

There is often the concept of a camera, which lets us look at the game world from different sides. But of course there is no real camera there, and it is not the camera that moves but the whole game world: it turns toward the monitor so that you see it from the right angle.

The last stage is projection, when these triangles land on your screen.

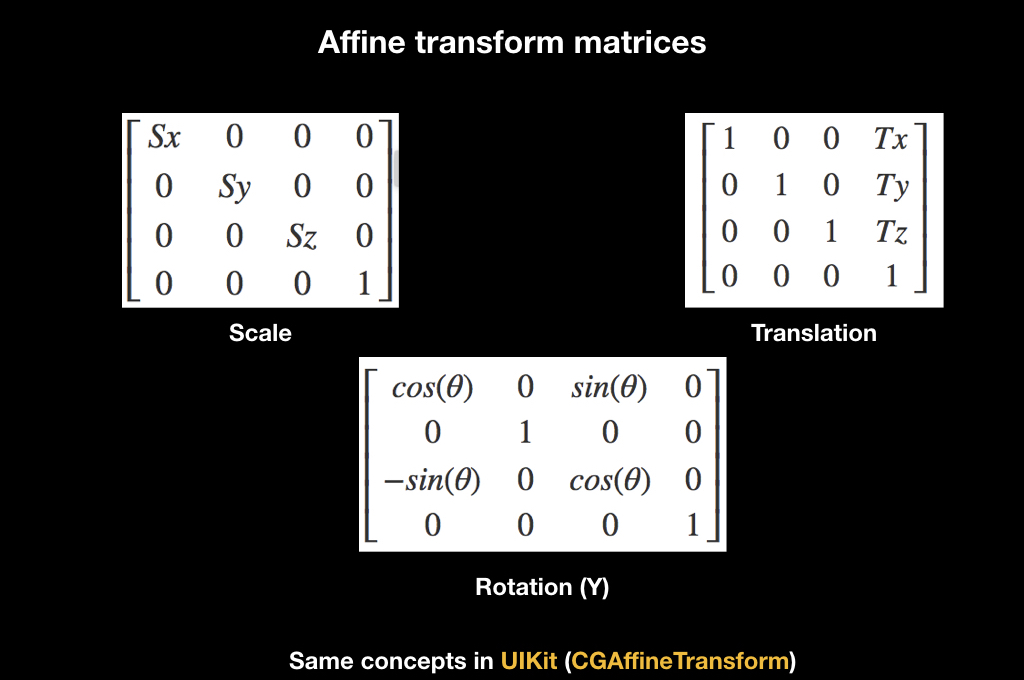

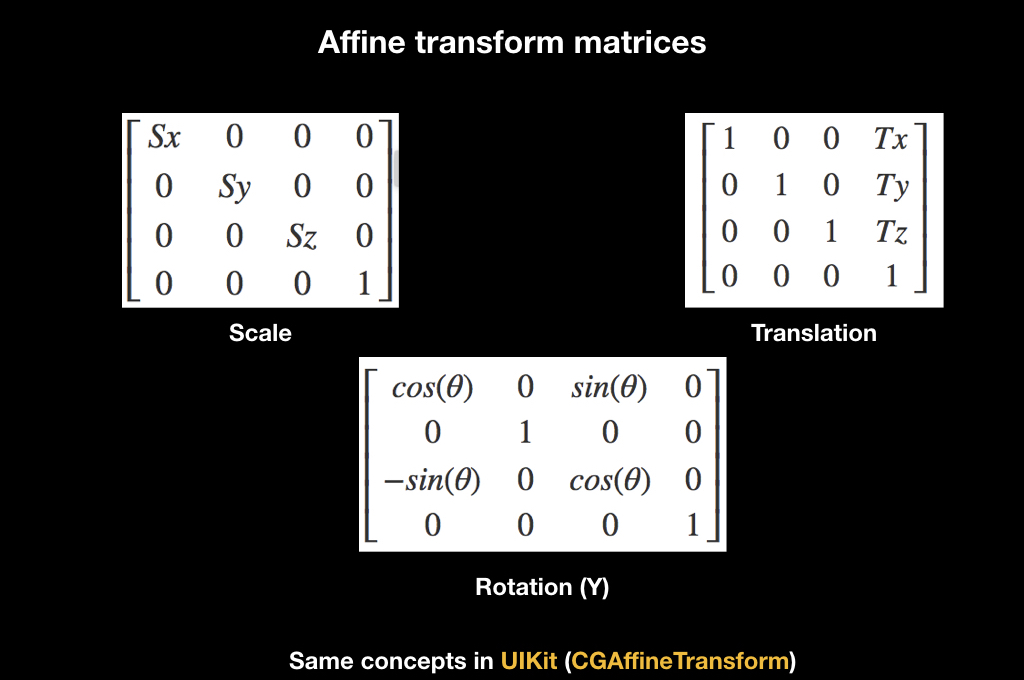

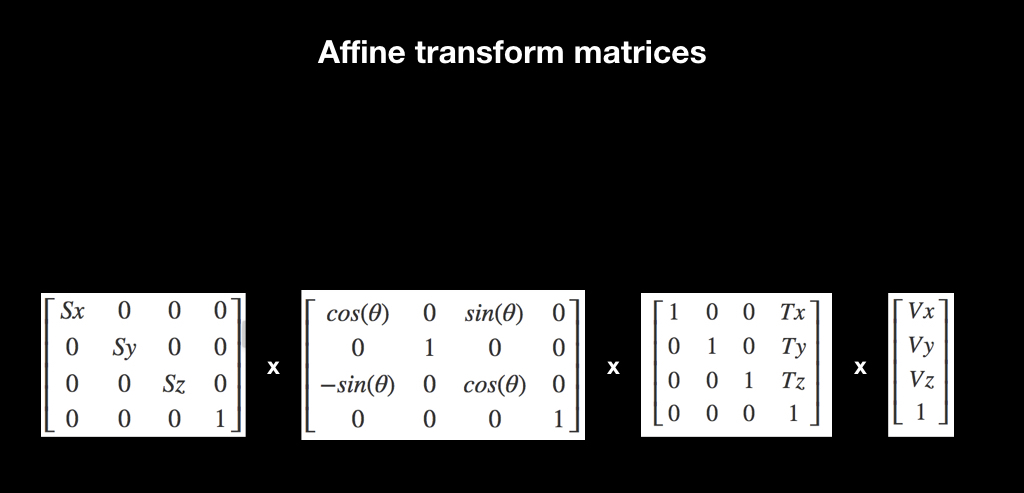

For all this there is an excellent mathematical abstraction: affine transformations. Anyone who has worked with UIKit knows this concept from CGAffineTransform, through which all animations are done there. There are different affine transformation matrices; here are the ones for scale, rotation and translation:

They work like this: if you multiply the matrix by a vector, the transformation is applied to that vector. For example, the translation matrix shifts the vector when multiplied by it.

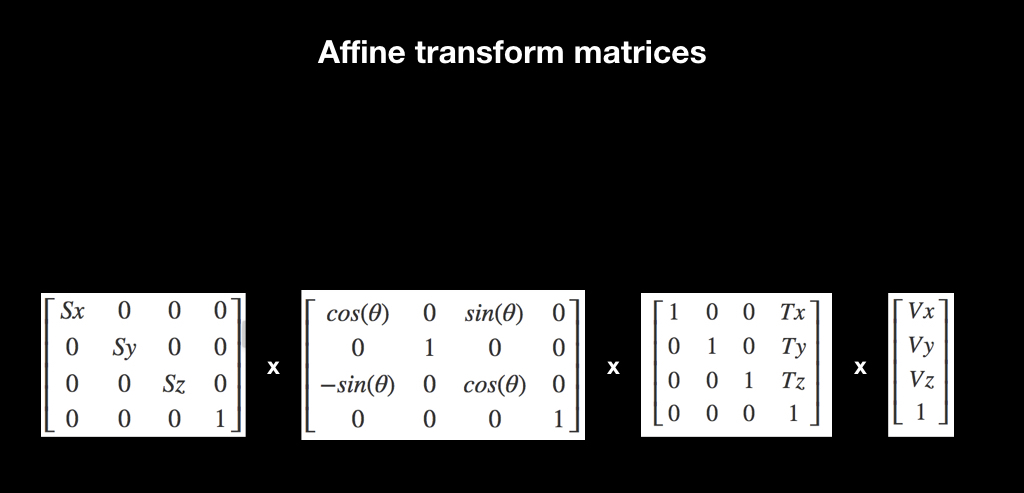

Another interesting fact about them: if you multiply several such matrices together and then multiply the result by a vector, the effects accumulate. If there is first a scale, then a rotation, and then a translation, all of them are applied to the vector in a single multiplication.
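The accumulation property is easy to check numerically. Below is a minimal sketch in plain Python (not UIKit or Metal code), assuming 2D points in homogeneous coordinates and the column-vector convention, where the transformation applied first stands rightmost in the product. All function names are illustrative.

```python
import math

def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(m, point):
    """Apply a 3x3 affine matrix to a 2D point written as (x, y, 1)."""
    v = (point[0], point[1], 1.0)
    out = [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]
    return (out[0], out[1])

def scale(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]

def rotation(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def translation(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]

S, R, T = scale(2, 2), rotation(math.pi / 2), translation(10, 0)

# One combined matrix: scale first, then rotate, then translate.
combined = mat_mul(T, mat_mul(R, S))

# Applying the three matrices one after another gives the same point.
step_by_step = apply(T, apply(R, apply(S, (1, 0))))
at_once = apply(combined, (1, 0))
```

Both `step_by_step` and `at_once` come out at approximately (10, 2): the point (1, 0) is scaled to (2, 0), rotated to (0, 2) and then shifted by 10 along x.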

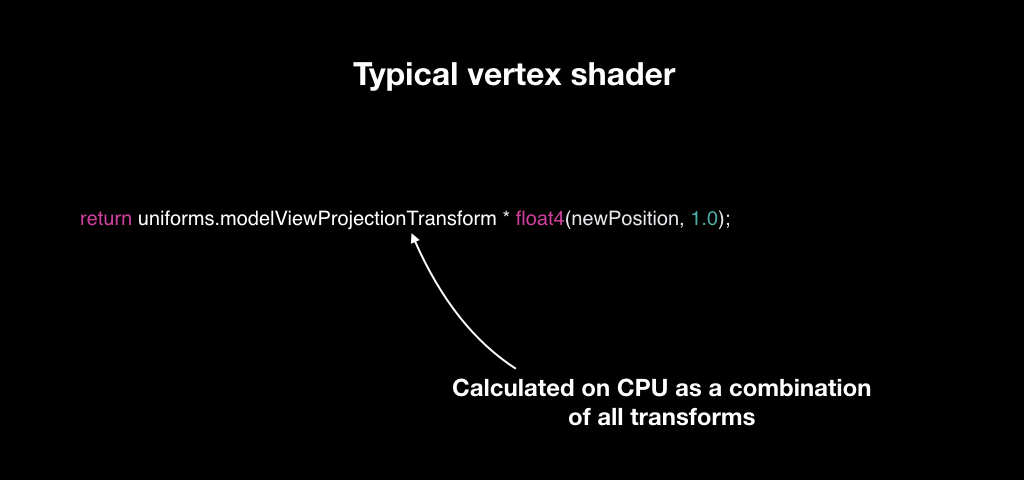

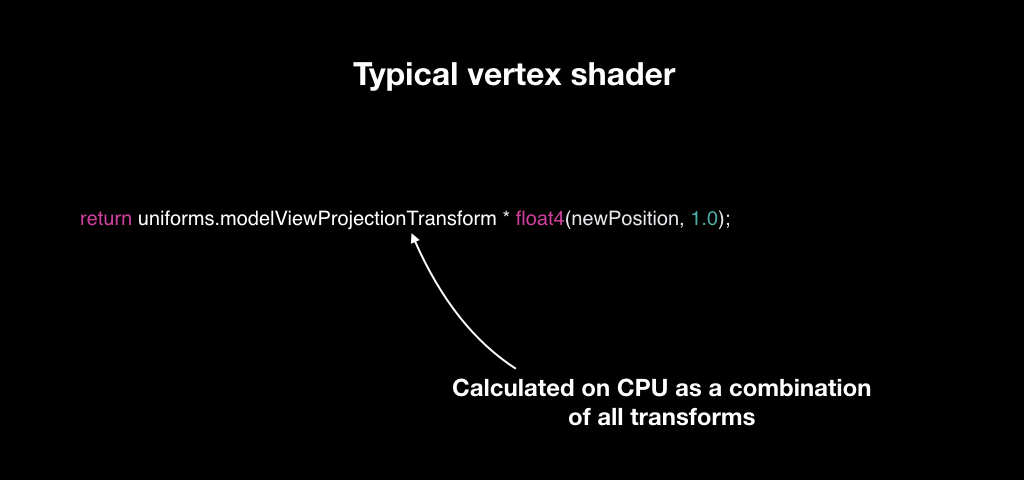

To do this efficiently, vertex shaders were invented. A vertex shader is a small program that runs for every point of your 3D model. You have triangles, each with three points, and for each point a vertex shader is launched that takes the point's position in the coordinate system of the 3D model and returns its position in the coordinate system of the screen.

Most often it works like this: for each object we compute a unique transformation matrix. We have the camera matrix, the world matrix and the object matrix; we multiply them all together and pass the result to the vertex shader. The shader takes each vector, multiplies it by this matrix and returns a new one.
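The per-vertex work can be imitated on the CPU. This is a rough sketch in plain Python rather than real shader code: the matrices are hypothetical placeholders (a uniform scale for the object, simple translations for the world and the camera), and projection is left out for brevity.

```python
def mat4_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(4))

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def scaling(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]

def vertex_shader(position, matrix):
    """Runs once per vertex: model-space position in, transformed position out."""
    x, y, z = position
    return transform(matrix, (x, y, z, 1.0))

# Placeholder matrices, multiplied once per object on the CPU side.
object_matrix = scaling(2.0)
world_matrix = translation(1.0, 0.0, 0.0)
camera_matrix = translation(0.0, 0.0, -5.0)
combined = mat4_mul(camera_matrix, mat4_mul(world_matrix, object_matrix))

triangle = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
transformed = [vertex_shader(vertex, combined) for vertex in triangle]
```

The point of the design is that the three matrices are multiplied once per object, while the shader itself does a single matrix-vector multiplication per vertex.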

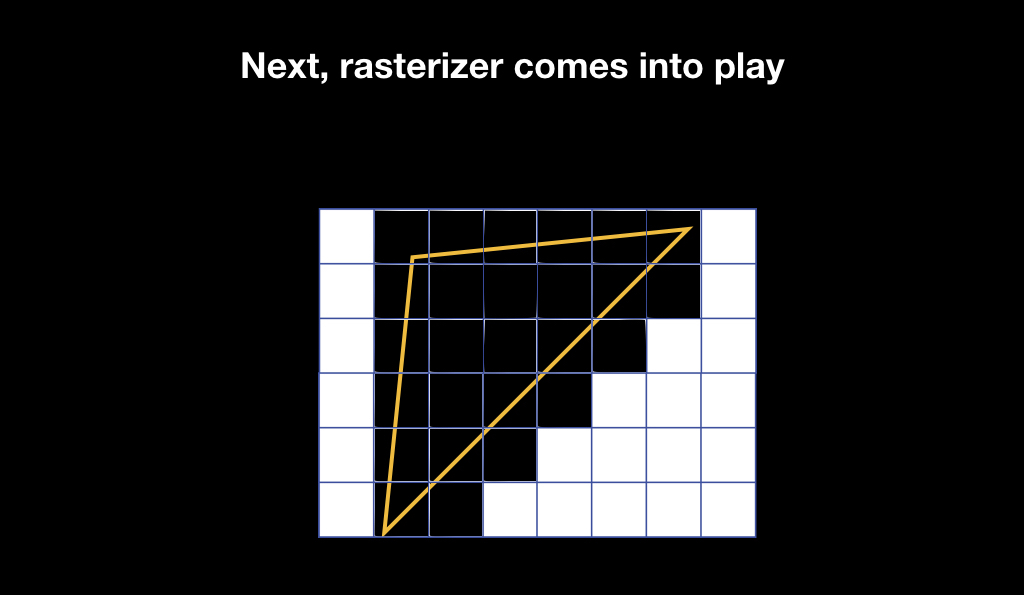

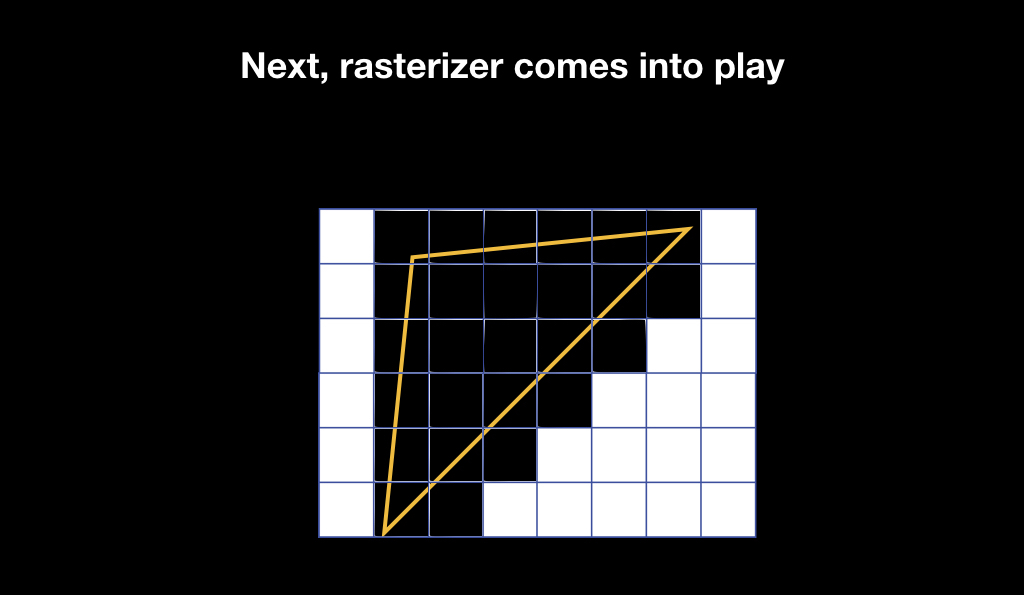

Our triangles appear on the screen, but this is still vector graphics. This is where the rasterizer comes into play. It does a very simple thing: it overlays the screen's pixel grid on your vector geometry and selects the pixels that intersect with it.
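As an illustration of the idea only, here is a naive rasterizer in plain Python. It assumes a pixel counts as covered when its center lies inside the triangle or on its border, and it tests every pixel with edge functions; real hardware uses stricter fill rules and far more efficient traversal.

```python
def edge(a, b, p):
    """Signed area of (a, b, p): its sign tells which side of edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(triangle, width, height):
    """Return the (x, y) pixels whose centers fall inside the triangle."""
    a, b, c = triangle
    covered = []
    for y in range(height):
        for x in range(width):
            center = (x + 0.5, y + 0.5)
            w0, w1, w2 = edge(a, b, center), edge(b, c, center), edge(c, a, center)
            # Inside if the center is on the same side of all three edges
            # (either winding order is accepted).
            same_side = (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
                        (w0 <= 0 and w1 <= 0 and w2 <= 0)
            if same_side:
                covered.append((x, y))
    return covered

# A right triangle on a 4x4 pixel screen.
pixels = rasterize([(0, 0), (4, 0), (0, 4)], width=4, height=4)
print(len(pixels))  # 10
```

Each pixel returned here is what would later be handed to a fragment shader.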

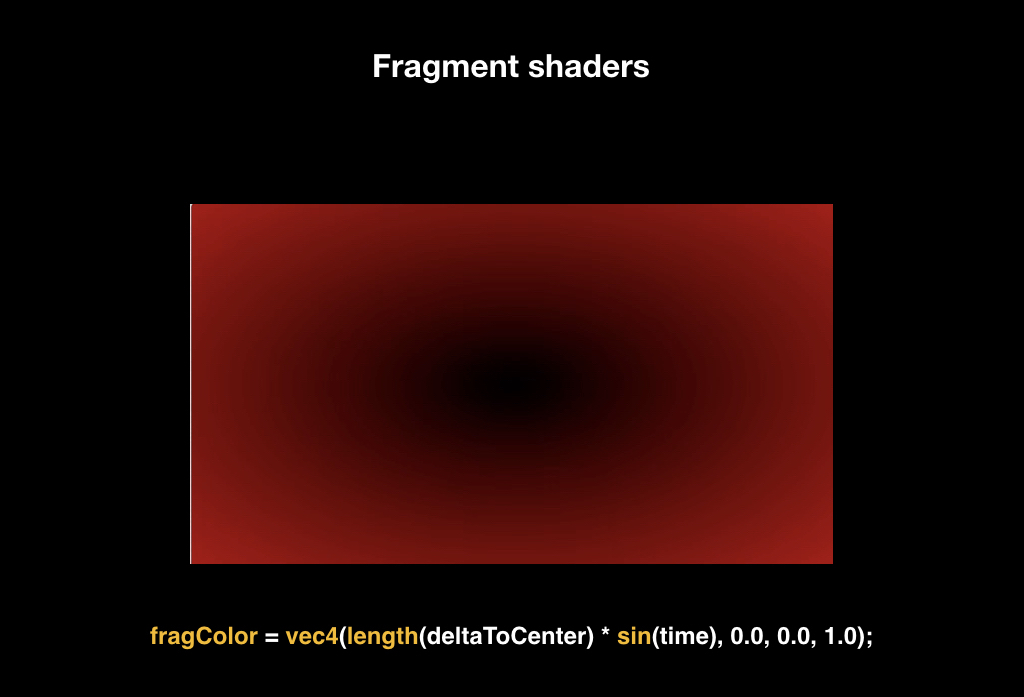

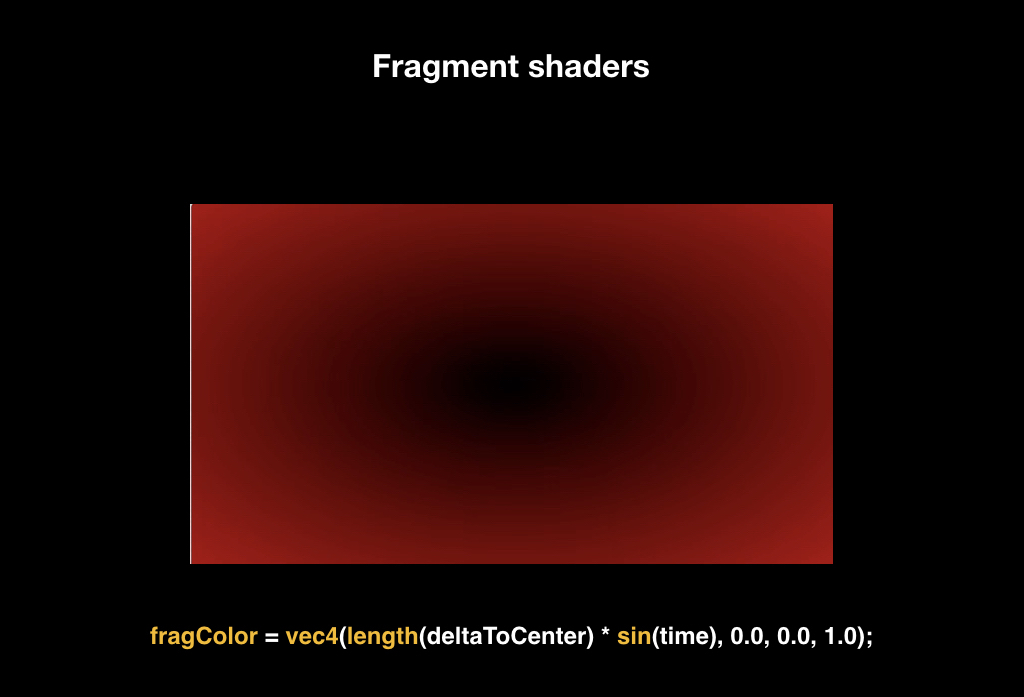

After that, fragment shaders run. A fragment shader is also a small program; it runs for each pixel that falls inside the area of your geometry and returns the color that will later be displayed on the screen.

To explain how this works, imagine that we draw two triangles that cover the entire screen (just for clarity).

The simplest fragment shader you can write is one that returns a constant, for example red, and the entire screen becomes red. But that is quite boring.

Fragment shaders take the pixel coordinate as input, so we can, for example, calculate the distance to the center of the screen and use this distance as the red channel. A gradient then appears on the screen: pixels close to the center are black, because the distance tends to zero, and the edges of the screen are red.

Next we can add, for example, a uniform. A uniform is a constant that we pass to the fragment shader and that it applies identically to every pixel. Most often such a uniform is time. As you know, time is stored as a number of seconds since a certain moment, so we can take its sine and get some value.

If we multiply our red channel by this value, we get a dynamic animation: when the sine of time goes to zero, everything turns black, and when it goes to one, everything returns to its place.
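The shader described above can be mimicked on the CPU. This is a sketch in plain Python, not shader code; the normalization of the distance and the use of `abs` to keep the sine factor non-negative are assumptions made for the illustration.

```python
import math

def fragment_shader(x, y, width, height, time):
    """Runs once per pixel and returns an (r, g, b) color with channels in [0, 1]."""
    # Offset of the pixel center from the screen center, in normalized coordinates.
    dx = (x + 0.5) / width - 0.5
    dy = (y + 0.5) / height - 0.5
    distance = math.sqrt(dx * dx + dy * dy)
    red = min(1.0, distance * 2.0)   # black in the center, red toward the edges
    # `time` is the uniform: the same value for every pixel of the frame.
    pulse = abs(math.sin(time))
    return (red * pulse, 0.0, 0.0)

def render(width, height, time):
    """Call the shader for every pixel, as the GPU would do in parallel."""
    return [[fragment_shader(x, y, width, height, time) for x in range(width)]
            for y in range(height)]

dark_frame = render(8, 8, time=0.0)            # sin(0) = 0: everything is black
bright_frame = render(8, 8, time=math.pi / 2)  # sin = 1: the full gradient is visible
```

On a GPU the loop in `render` does not exist: every pixel gets its own parallel invocation of the shader body.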

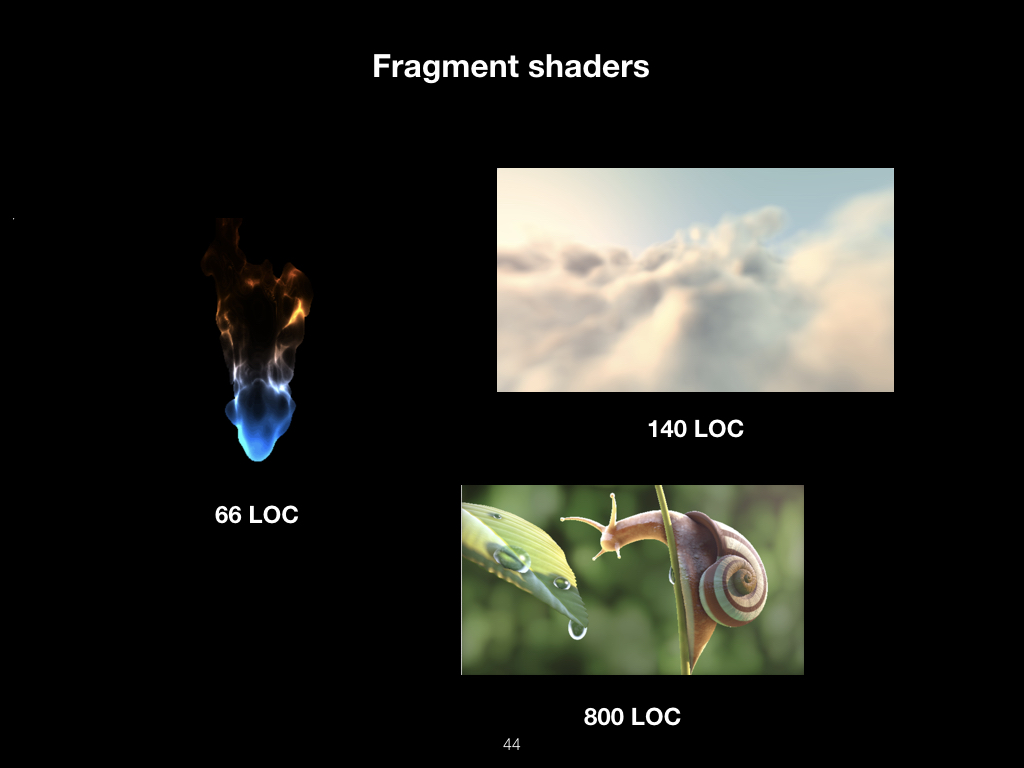

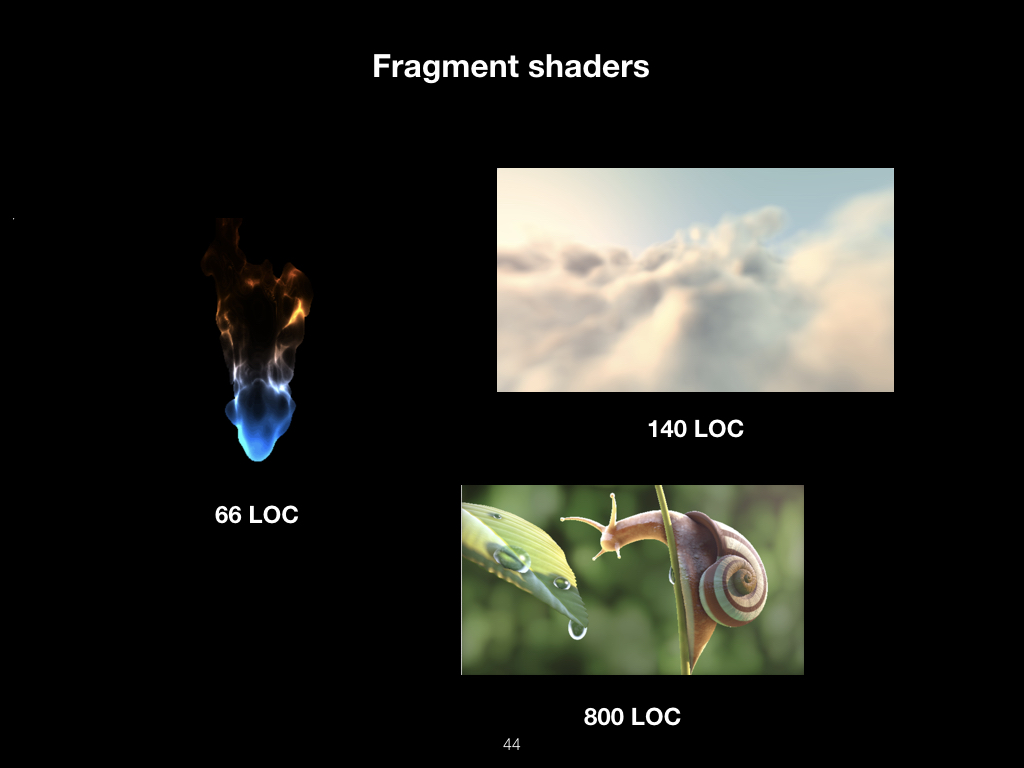

Writing shaders has itself grown into a whole culture. These images are drawn using mathematical functions:

They use no 3D models or textures. It is all pure math.

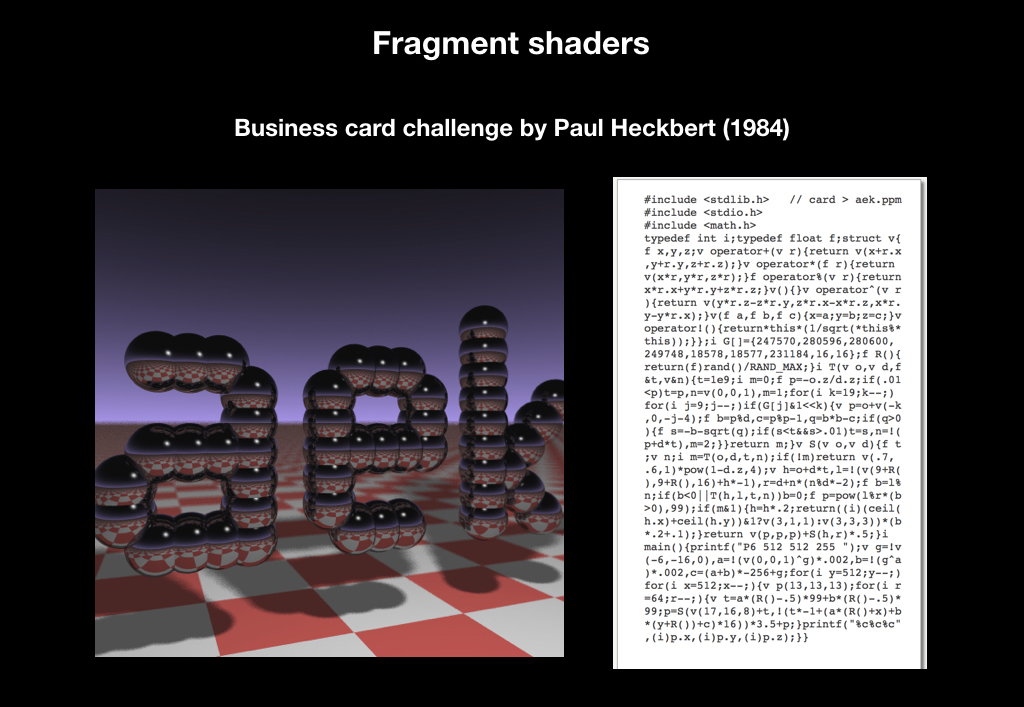

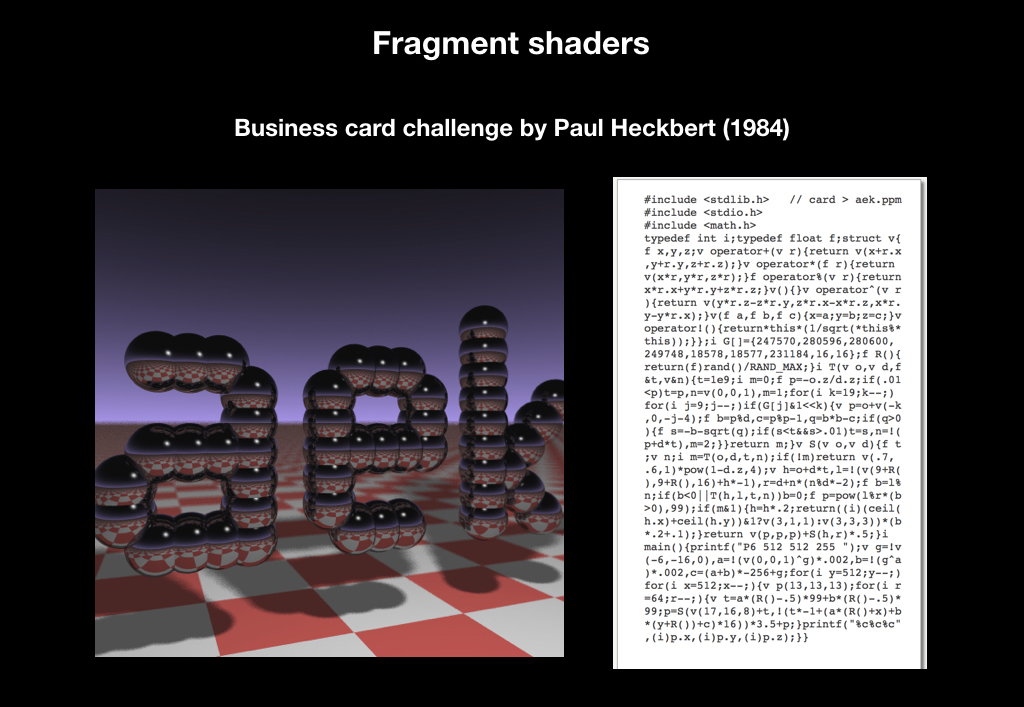

In 1984, Paul Heckbert even launched a challenge: he handed out business cards with code printed on them, and by running that code you could get a picture like this:

This challenge is still alive at SIGGRAPH, CVPR and other major conferences in California. You can still see business cards there with code that renders something.

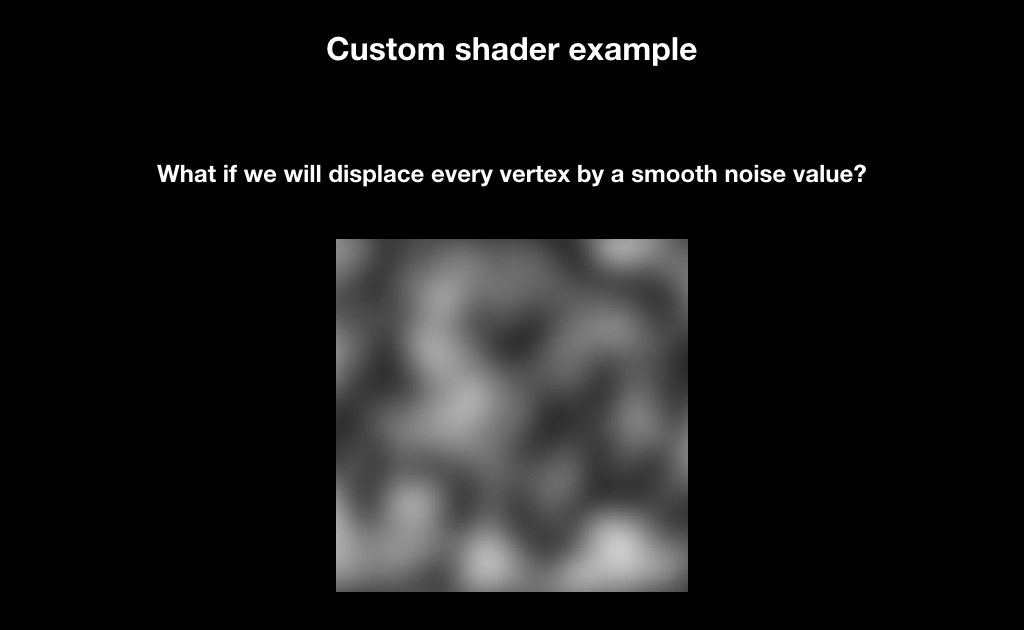

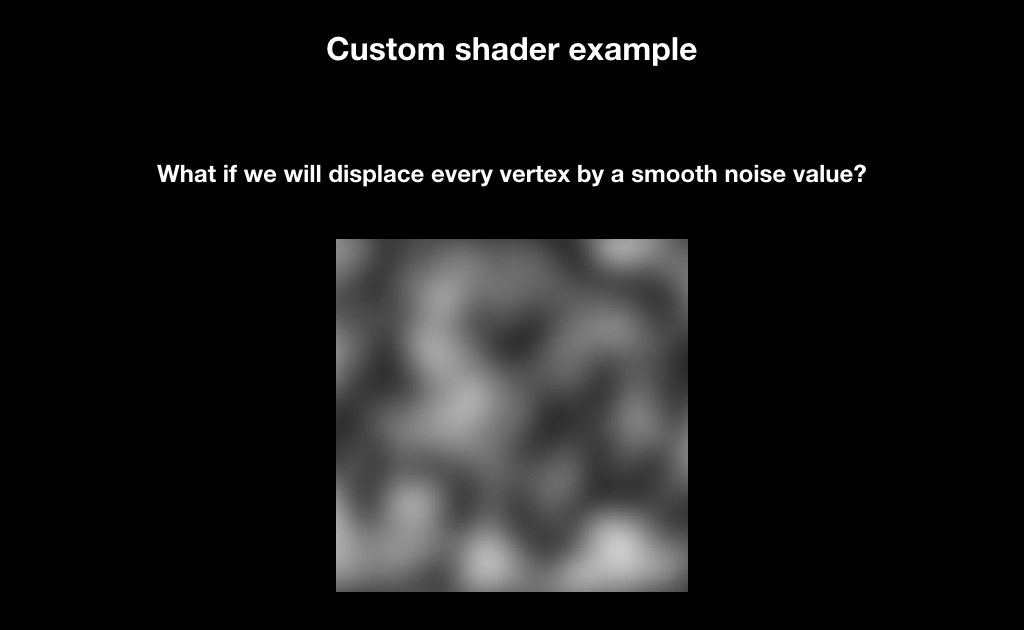

But all this hardly went mainstream just because it was beautiful. To understand what possibilities it opens up, let's see what can be done with an ordinary sphere. Suppose we have a 3D model of a sphere (of course, in reality it is not a sphere but a set of triangles that approximates a ball to some degree). We take this texture, which is often called smooth noise:

It is just some random pixels that fade gradually from white to black. We stretch this texture over our ball, and in the vertex shader we do the following: points that land on darker pixels are shifted less, and points on whiter pixels are shifted more.

We do roughly the same in the fragment shader, only slightly differently. We take the gradient texture shown on the right:

The whiter the noise pixel, the higher up the gradient we sample, and the darker the pixel, the lower.

On each frame we shift the sampling zone slightly and loop it. As a result, an ordinary sphere turns into an animated fireball:

Shaders turned the world of computer graphics upside down, because they opened up tremendous possibilities for creating cool effects in 30-40 lines of code.

This whole process is repeated for each object on the screen. In the following example you can see that the GPU cannot draw fonts, because it lacks the precision, so each character is drawn with two triangles onto which the letter's texture is stretched:

After that, we get a frame.

Now let's talk about what Apple provides us as a vendor of not only software but also hardware.

In general, things have always been good for us: starting with the very first iPhone, the OpenGL ES standard has been supported, which is a subset of desktop OpenGL for mobile platforms. Already on the first iPhone there were 3D games whose graphics were comparable to the PlayStation 2, and everyone started talking about a revolution.

In 2010, the iPhone 4 came out. By then there was already the second version of the standard, and Epic Games boasted a great deal about its game Infinity Blade, which made a lot of noise.

In 2016, the third version of the standard was released, and it turned out to be of little interest to anyone.

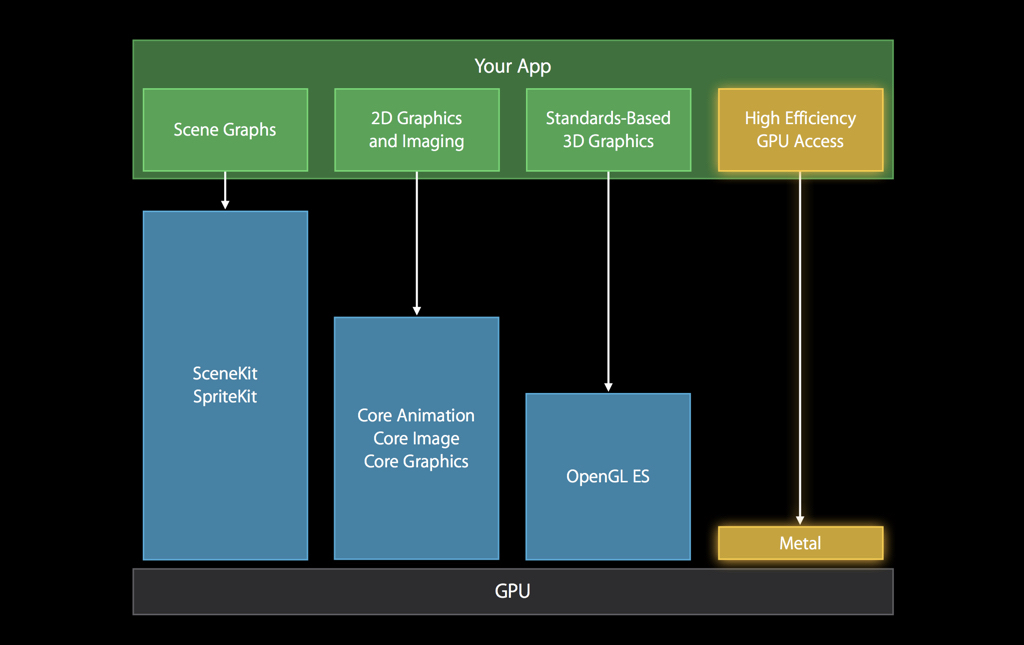

Why? Over this time, many frameworks had been released for the ecosystem: low-level ones, open-source ones, engines from Apple itself, engines from large vendors:

In 2015, the Khronos organization, which maintains the OpenGL standard, announced Vulkan, the next-generation graphics API. Apple was initially a member of the working group on this API, but left it. This was mainly because OpenGL, contrary to a common misconception, is not a library but a standard. Roughly speaking, it is a large protocol or interface that says the device must have such-and-such functions that do this and that with the hardware, and the vendor must implement them itself.

And since Apple is famous for its tight integration of software and hardware, any standardization causes certain difficulties. So instead of supporting Vulkan, the company announced Metal in 2014, with a name that hints at being “very close to the metal”.

It was a graphics API made exclusively for Apple's hardware. It is now clear that this was done with a view to releasing Apple's own GPU, but at the time there were only rumors about that. Today there are no devices left that do not support Metal.

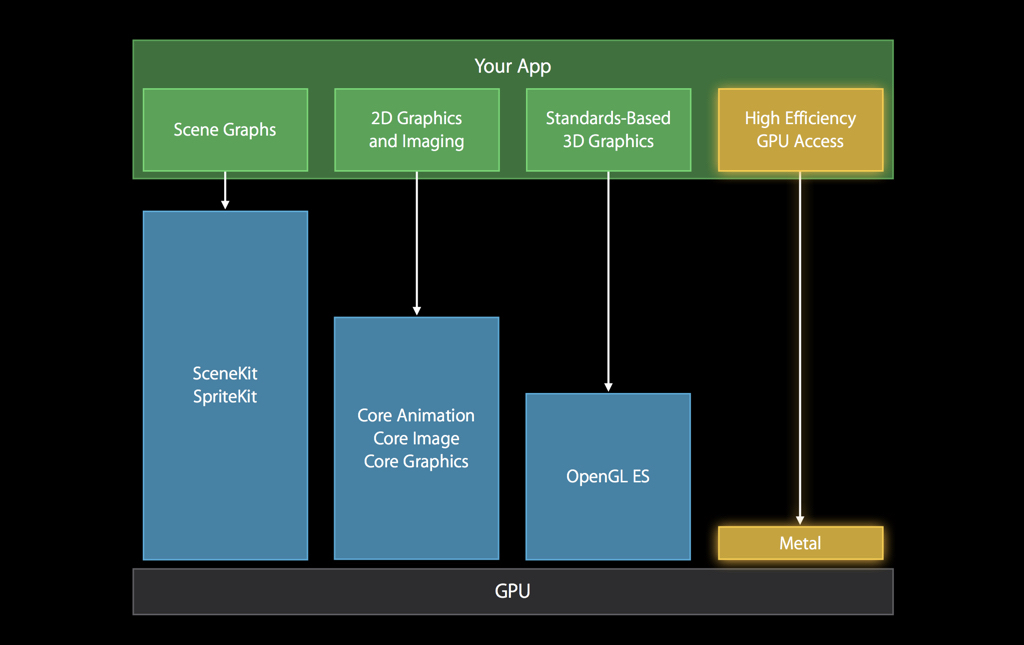

A diagram from Apple itself compares the overhead: Metal performs much better even than OpenGL, and access to the GPU is much faster:

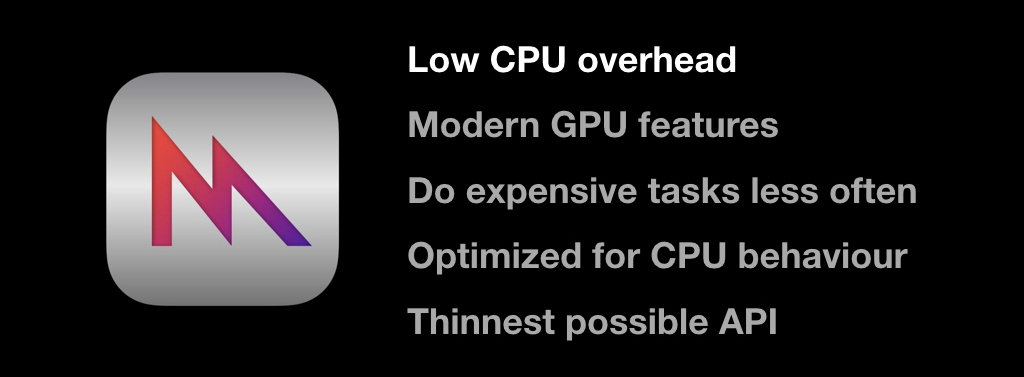

It had all the latest features, such as tessellation, which we will not discuss in detail now. The main idea was to shift most of the work to the initialization stage of the application and to avoid repeating the same work over and over. Another important feature is that this API is very careful with your CPU time.

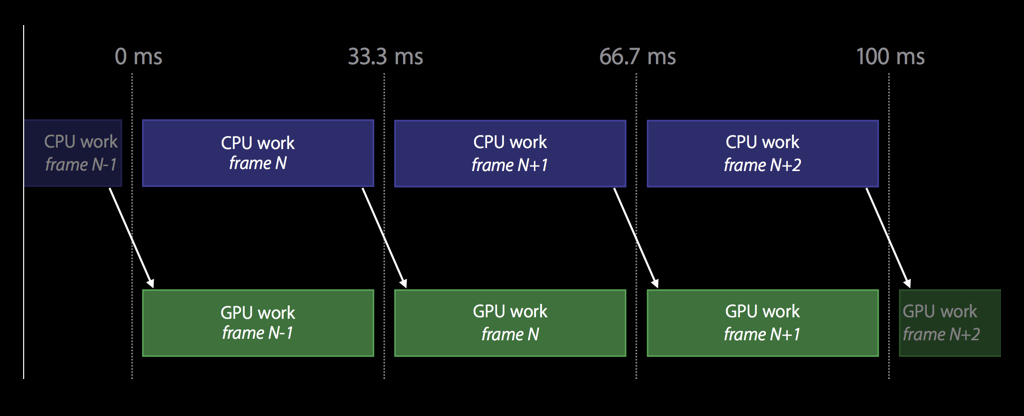

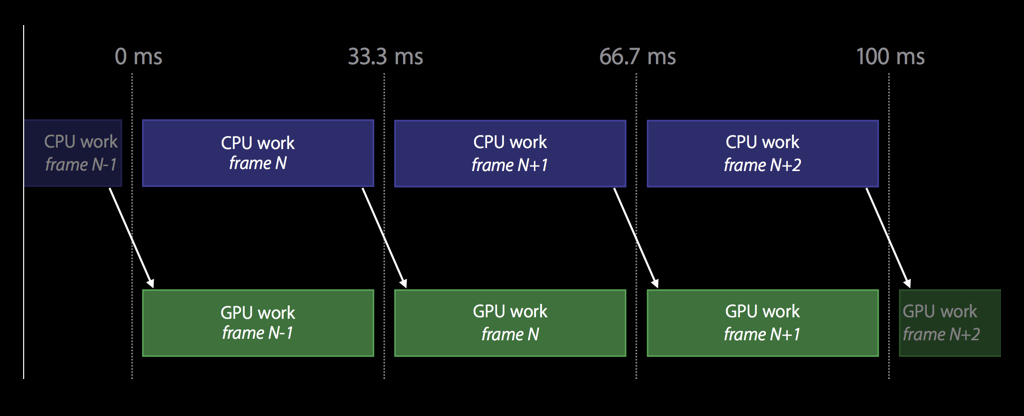

Therefore, unlike in OpenGL, the work here is arranged so that the CPU and GPU run in parallel. While the CPU prepares frame N, the GPU renders the previous frame N-1, and so on: you do not need to synchronize, and while the GPU is rendering you can keep doing something useful.

Metal has a rather thin API, and it is the only graphics API that is object-oriented.

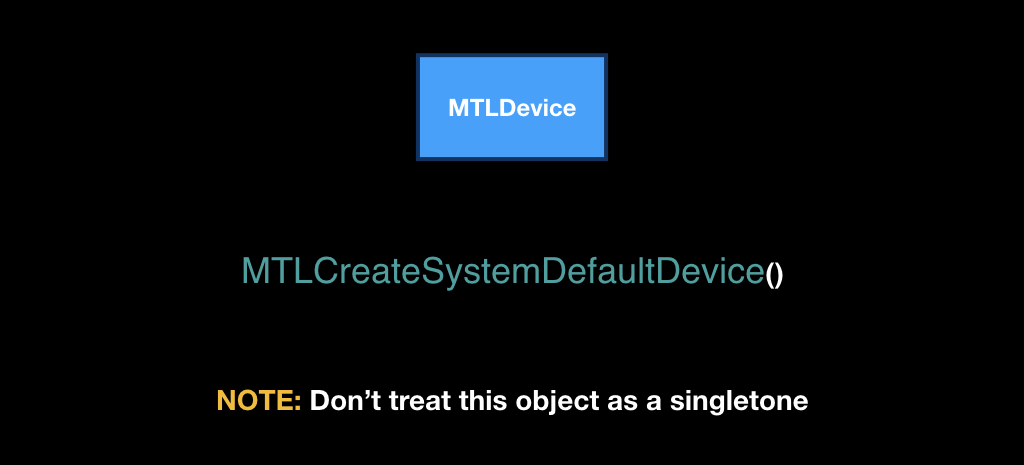

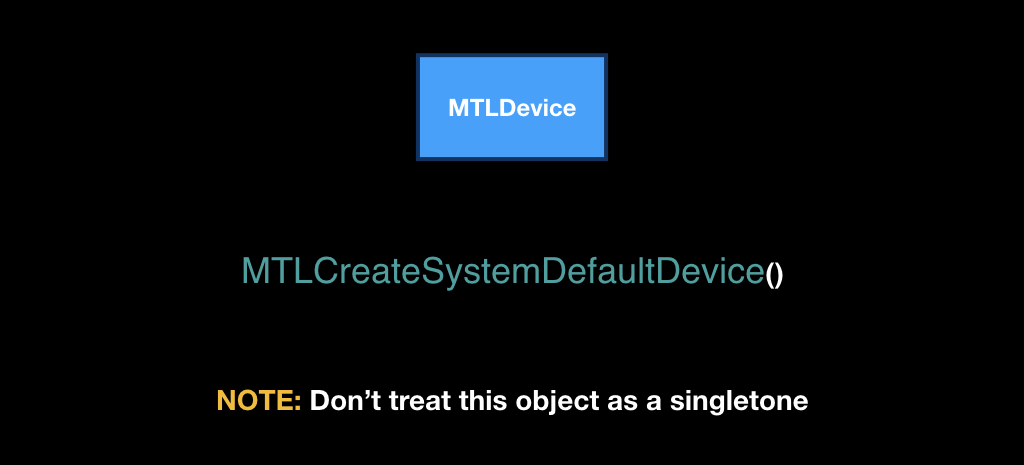

Let's now walk through the API. At its heart lies MTLDevice, which represents a single GPU. On iOS you most often obtain it with the MTLCreateSystemDefaultDevice function.

Even though it is obtained through a global function, you should not treat this object as a singleton. On iOS there really is only one video card, but Metal also exists on the Mac, where there may be several video cards and you may want to use a specific one: for example, the integrated one, to save the user's battery.

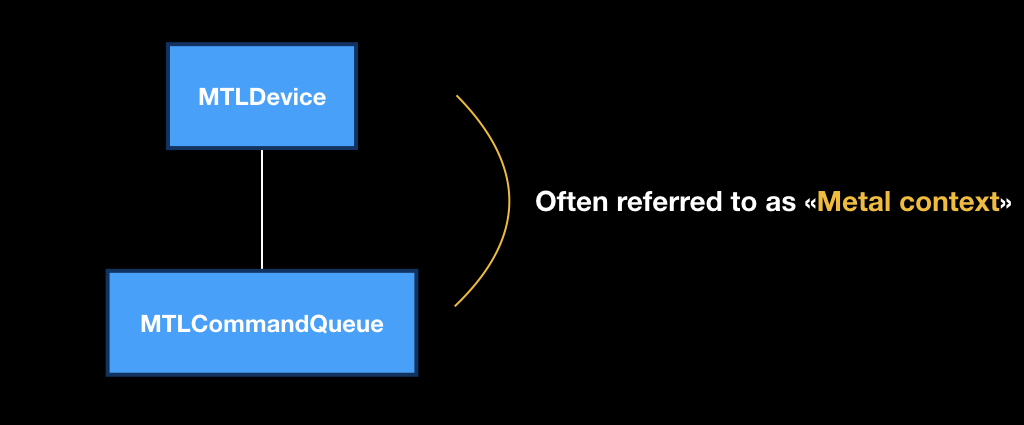

Keep in mind that Metal's architecture is very different from all other Apple frameworks. It has end-to-end dependency injection, that is, all objects are created in the context of other objects, and it is very important to follow this philosophy.

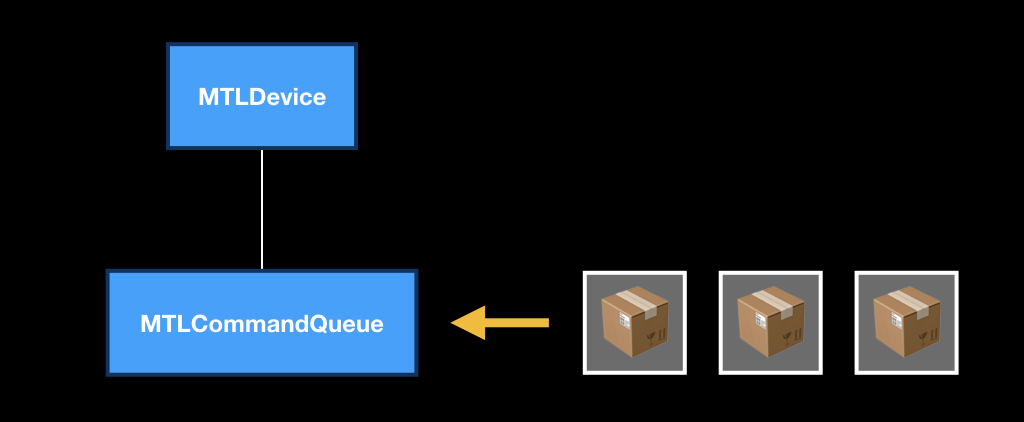

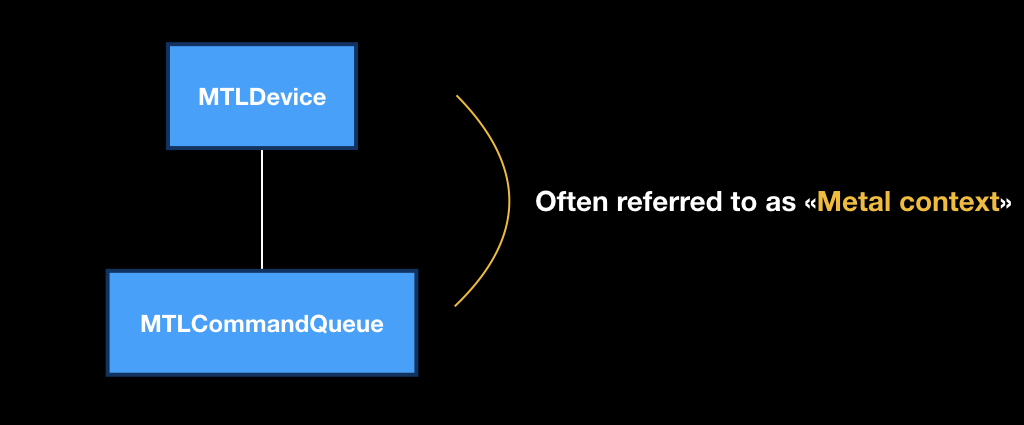

Each device has its own command queue, MTLCommandQueue, which can be obtained with the makeCommandQueue method.

This pair of a device and a command queue is very often called the “Metal context”: all our operations will be done in the context of these two objects.

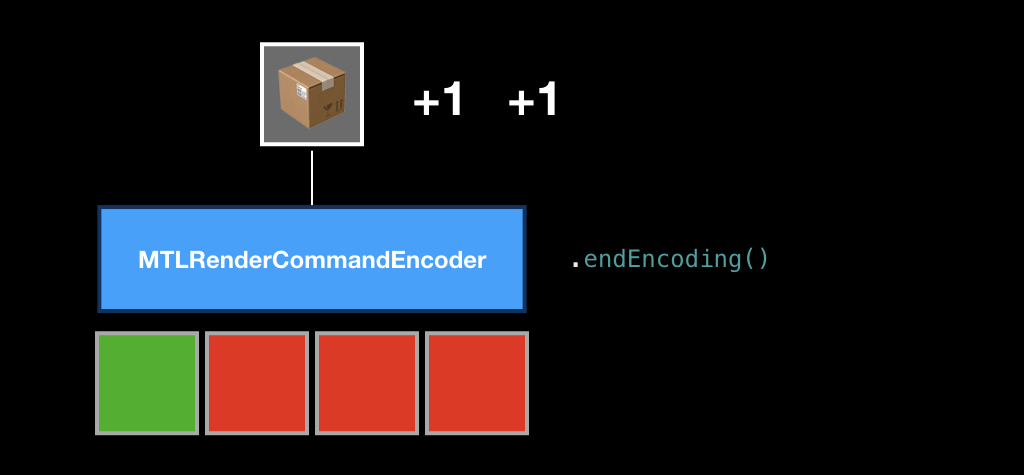

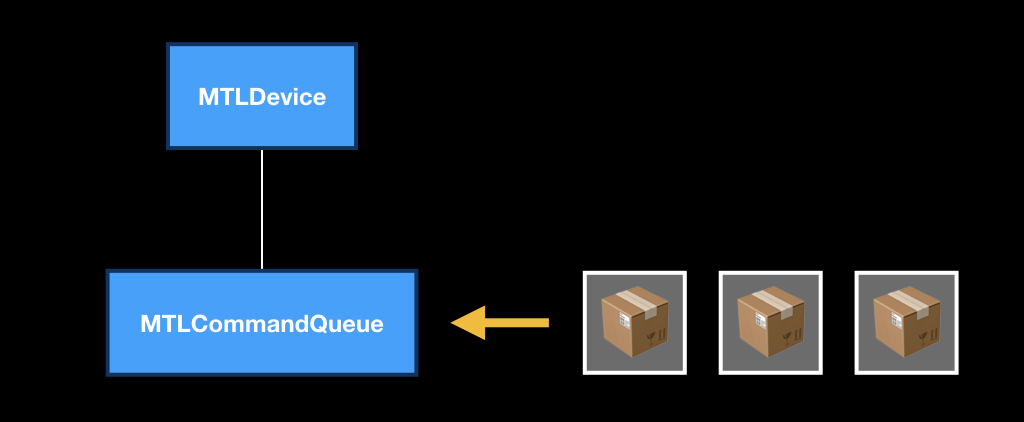

MTLCommandQueue itself works like an ordinary queue. "Boxes" arrive in it, containing instructions on what the GPU should do. As soon as the device becomes free, the next box is taken and the rest move up. You are not the only one putting commands into this queue from your thread: iOS itself and frameworks such as UIKit and MapKit put them there too.

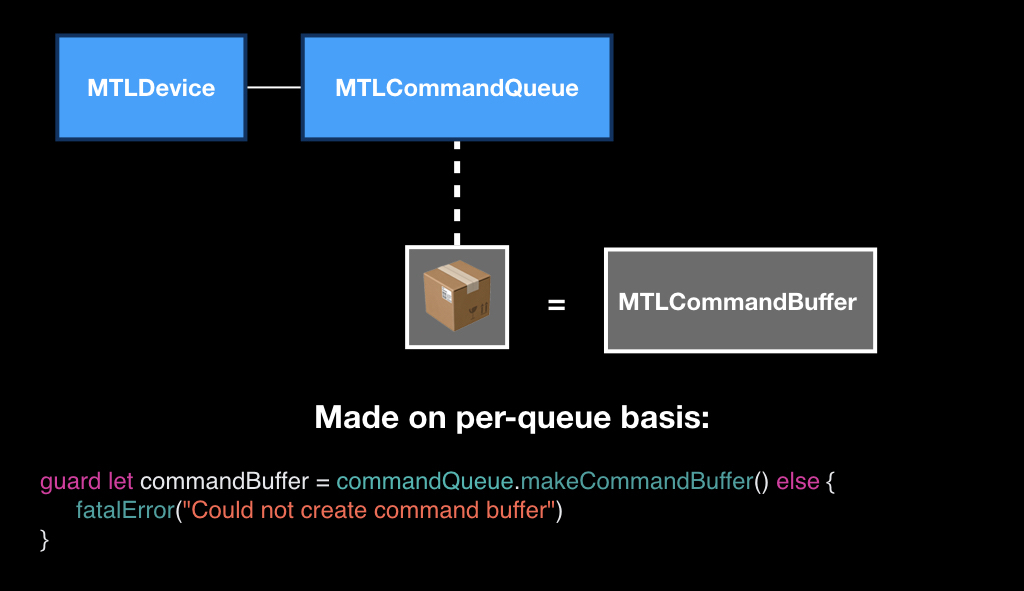

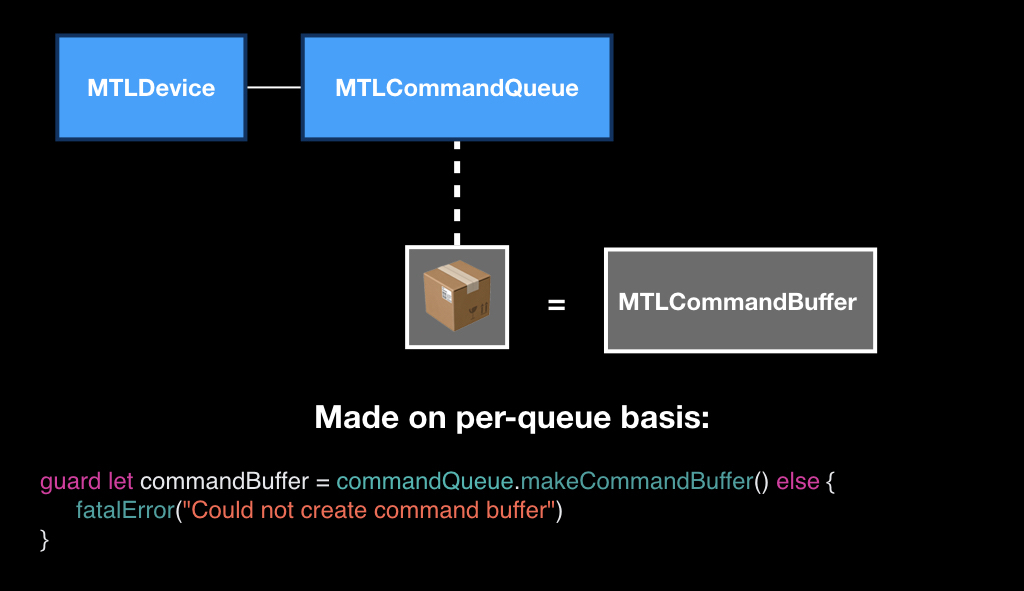

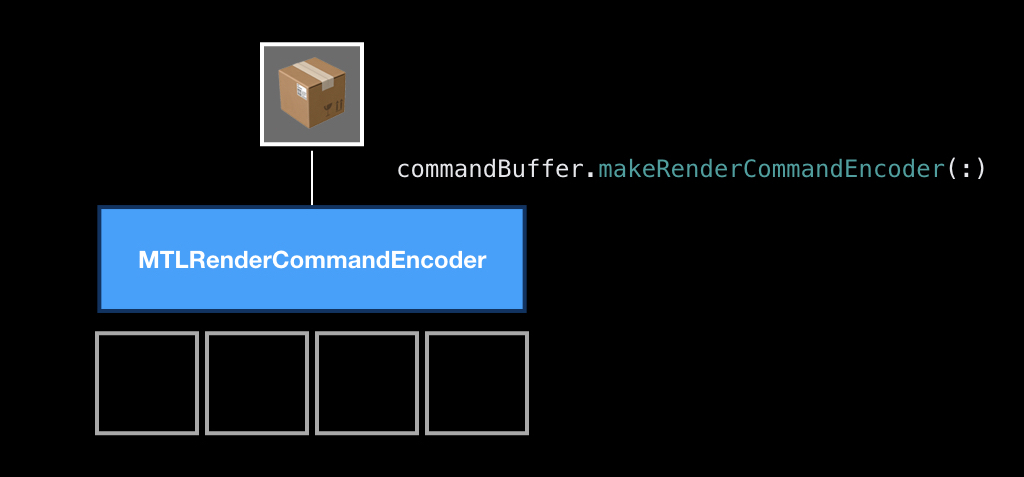

These boxes are instances of MTLCommandBuffer, and they too are created in the context of a queue, that is, each queue has its own empty boxes. You call a special method, as if to say: "Give us an empty box, we will fill it."

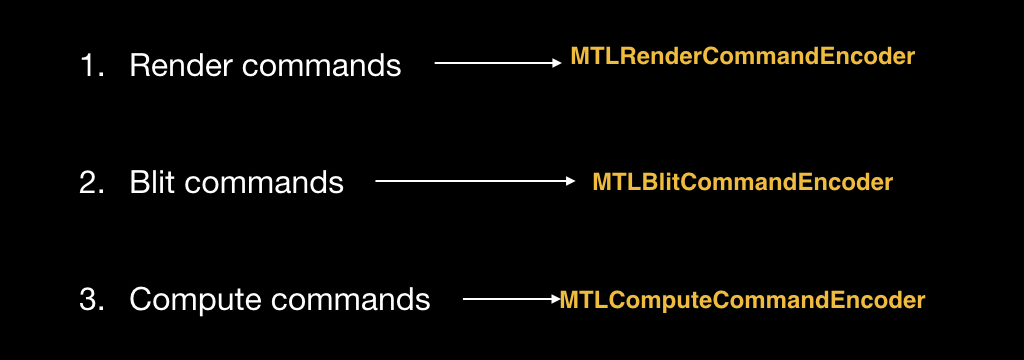

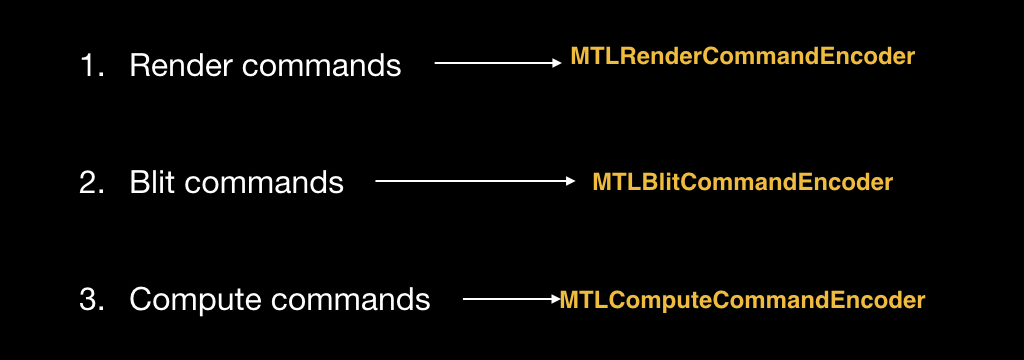

You can fill this box with three types of commands. Render commands draw primitives. Blit commands copy data, for example when we need to transfer some pixels from one texture to another. And there are compute commands, which we will talk about later.

To put these commands in the box there are special objects, one for each type of command:

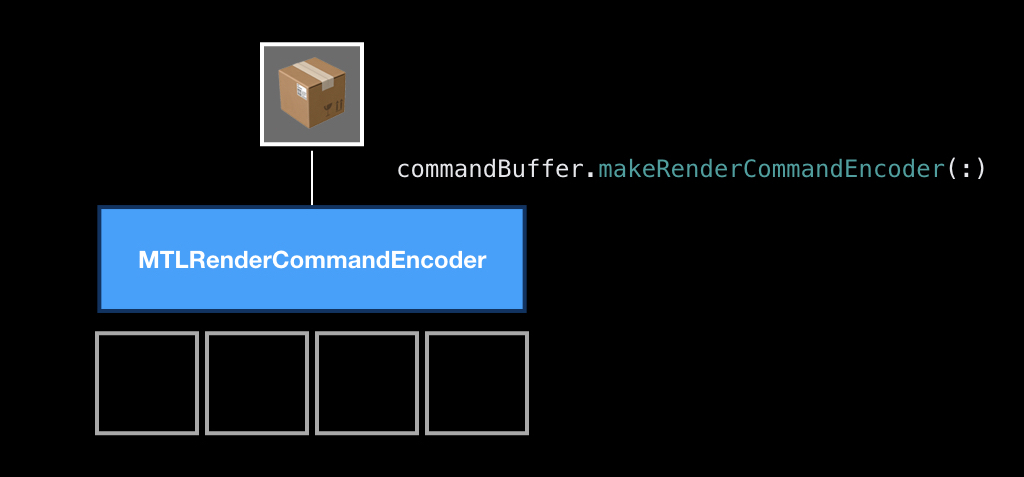

These objects are also created in the context of the box. That is, we get a box from the queue, and in the context of this box we create a special object called an encoder. In this example we will create an encoder for render commands, because that is the most classic case.

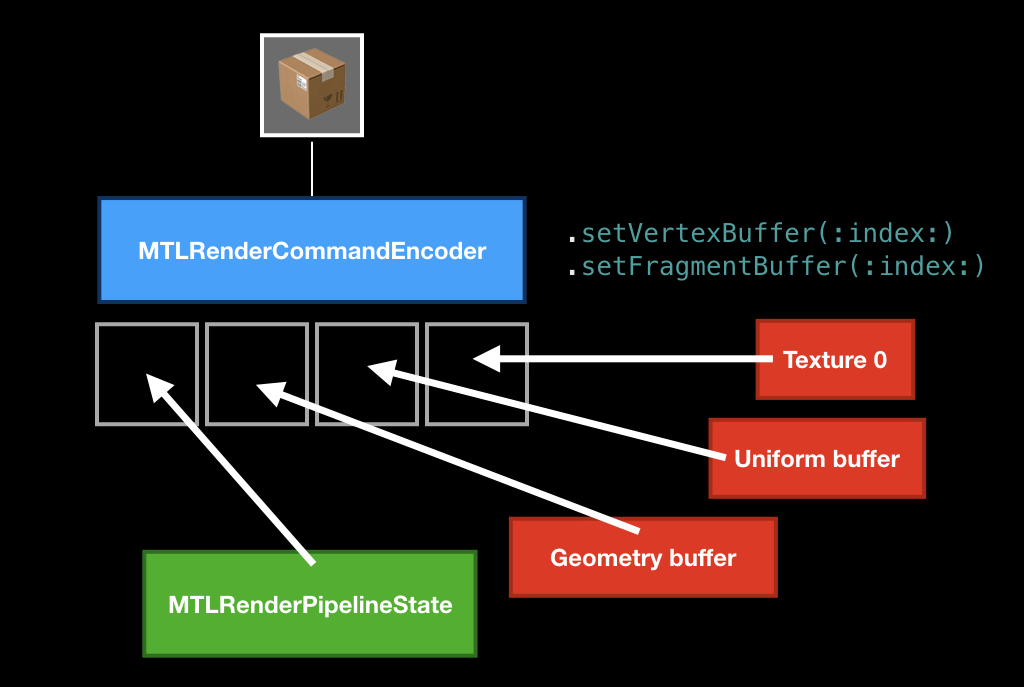

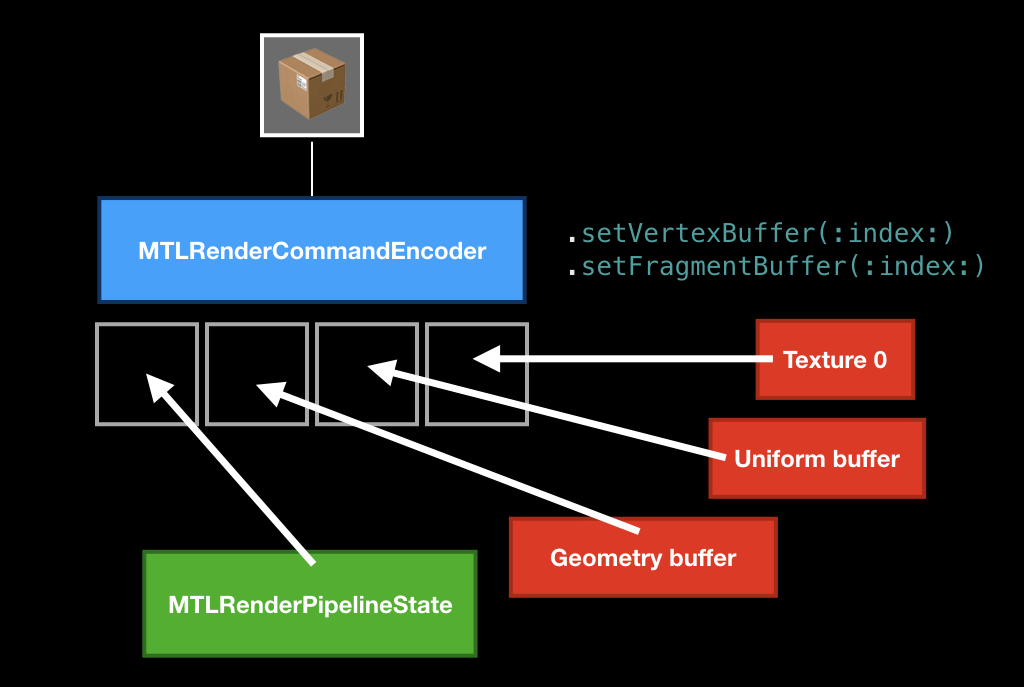

The process of encoding commands is itself very similar to crafting in games. When encoding, you have slots; you put something into them and craft a command from the contents.

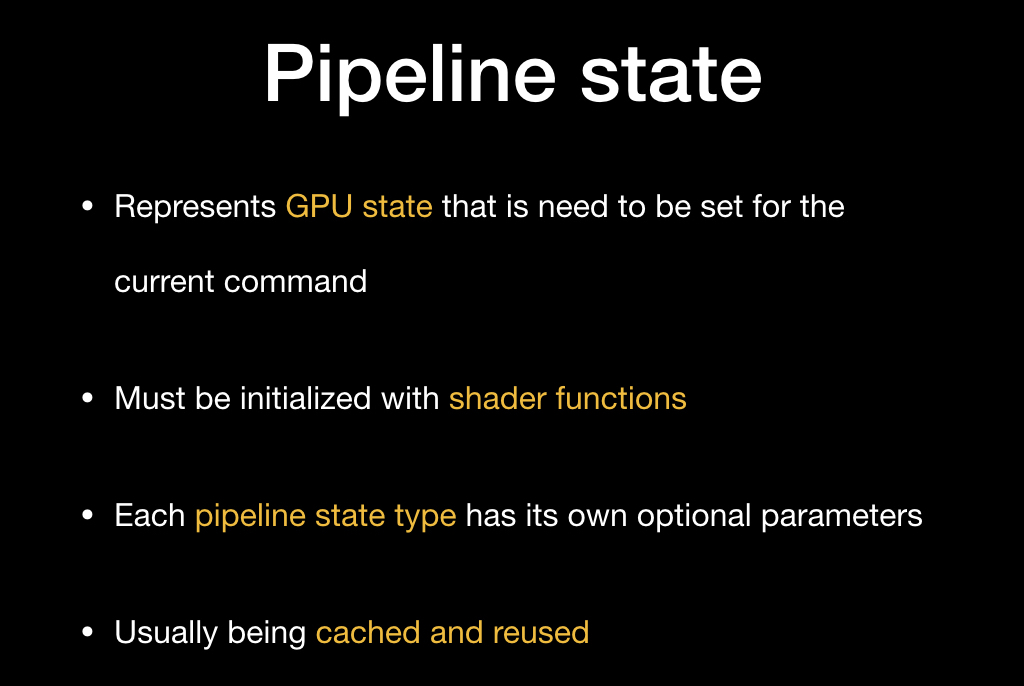

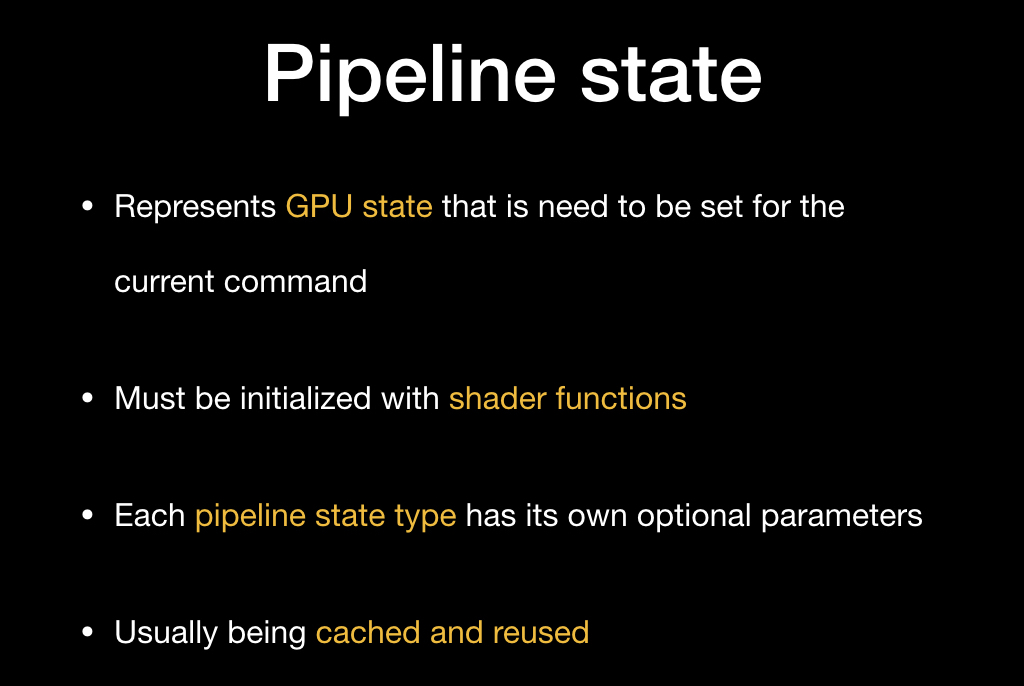

The main ingredient, which is mandatory, is the pipeline state: an object that describes the state the video card must be put into in order to draw your primitives.

The main characteristic of this state is a unique pair of a vertex shader and a fragment shader, though there are also other parameters that you may or may not change. Most often (and Apple recommends this) you should create and cache the pipeline state somewhere at application startup and then simply reuse it.

It is created using a descriptor, a simple object whose fields you fill in with the necessary parameters.

Then, with the help of the device, you create the pipeline state:

We put it in the slot, then we usually add some geometry that we want to draw using special methods, and optionally we can add uniforms (for example, the same time value we talked about earlier), pass textures, and so on.

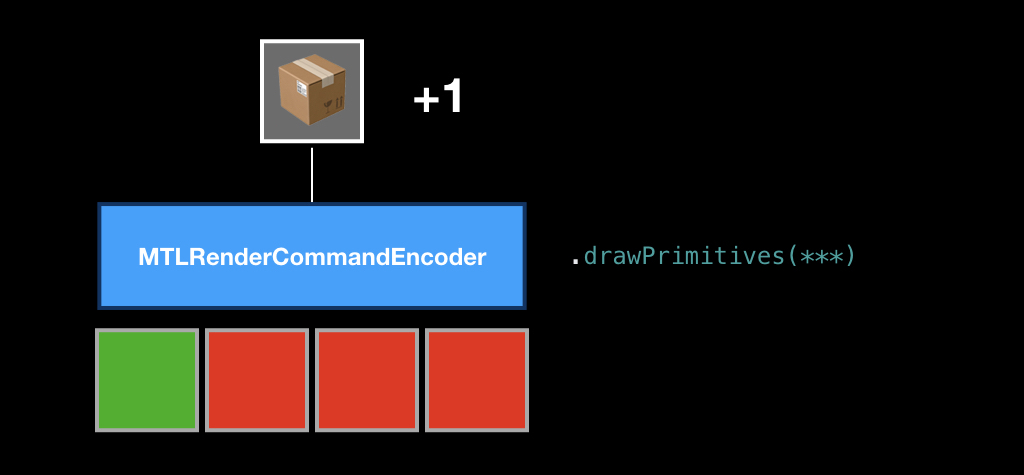

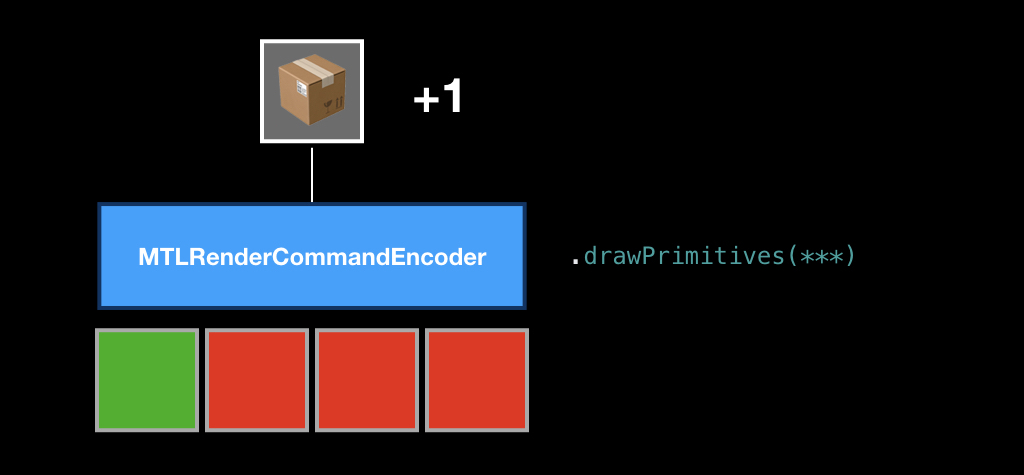

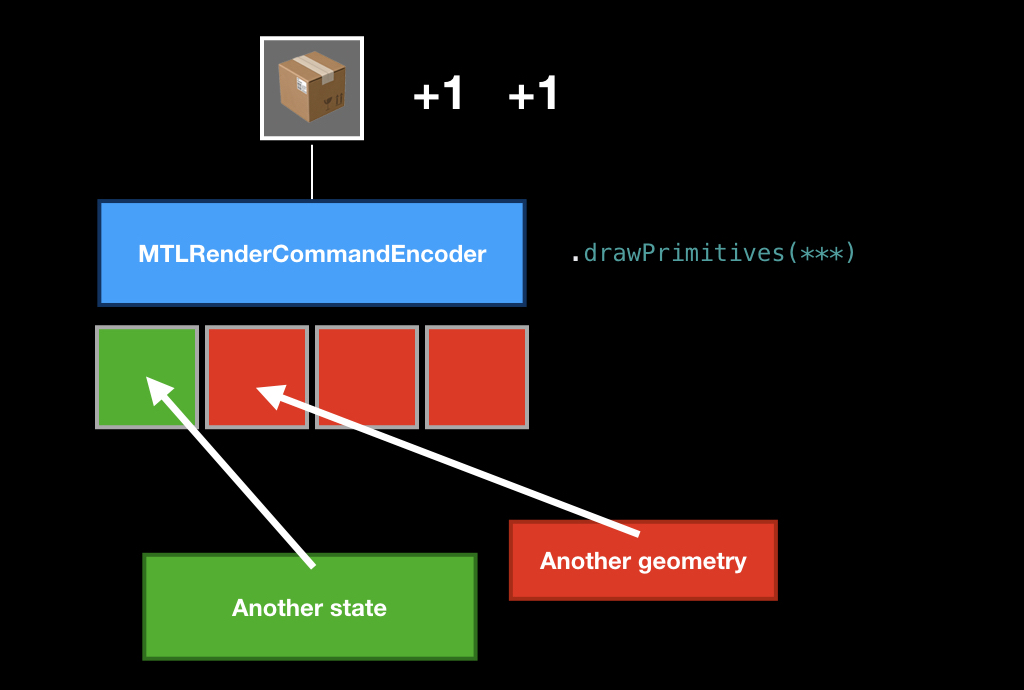

After that we call the drawPrimitives method, and the command is put into our box.

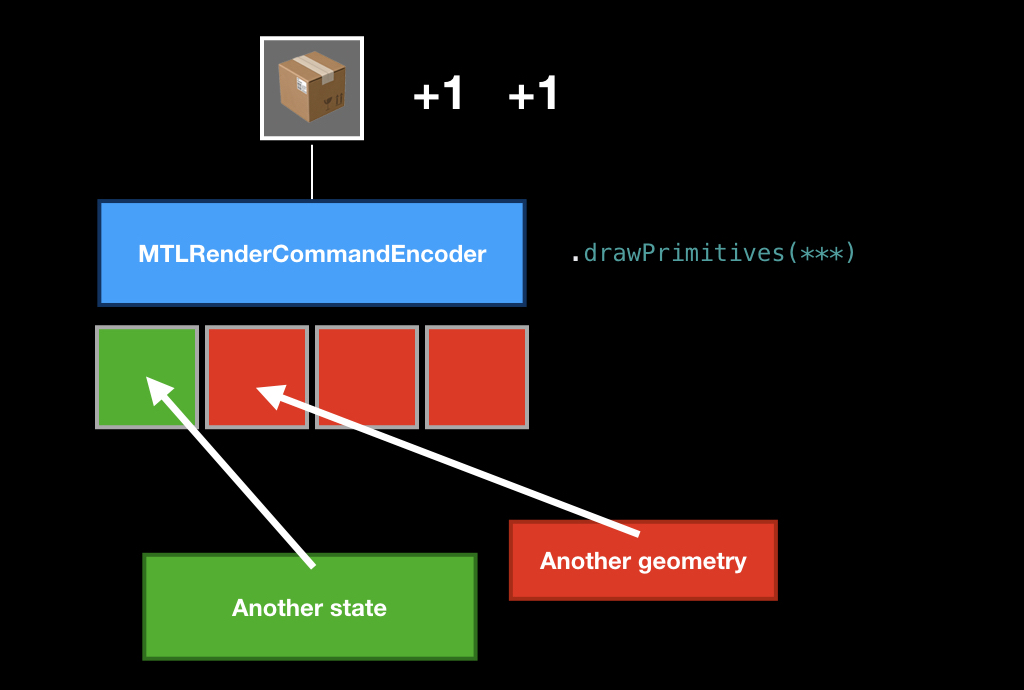

Then we can swap some ingredients, put in different geometry or a different pipeline state, call this method again, and another command appears in our box.

Once we have encoded all the commands for our frame, we call the endEncoding method, and the box closes.

After that we send the box to our queue using the commit method, and from that moment its fate is unknown to us. We do not know when it will start executing, because we do not know how loaded the GPU is or how many commands are in the queue. In principle, you can call a synchronous method that forces the CPU to wait until all the commands in the box have executed. But that is very bad practice, so as a rule you should subscribe with addCompletedHandler, whose handler is called asynchronously once every command in the box has executed.

I think few people in the audience came to this talk for the sake of rendering. So let's look at a technology called Metal Compute Shaders.

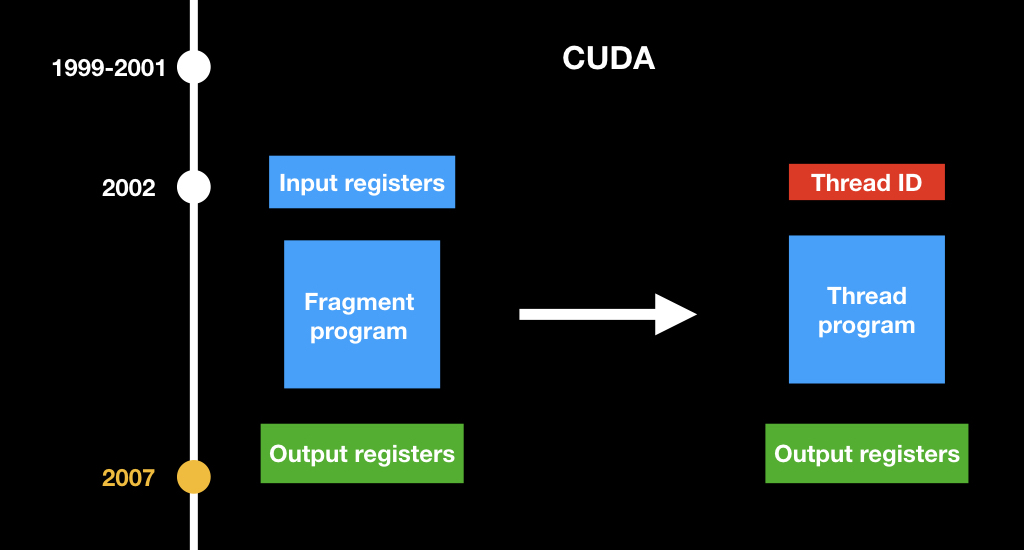

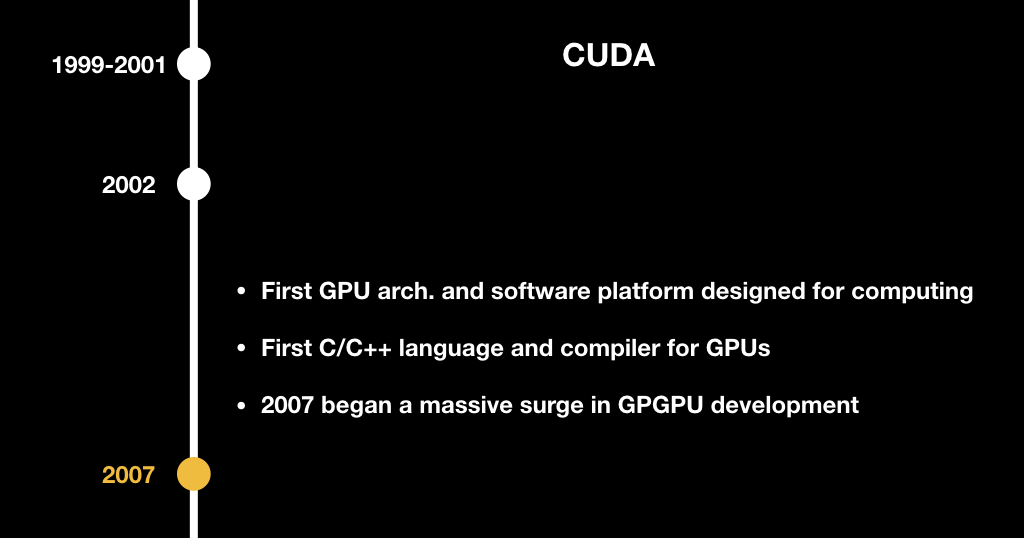

To understand what this is, you need to understand where it all came from. As early as 1999, as soon as the first GPU appeared, research papers began to appear on how video cards could be used for general tasks (that is, tasks unrelated to computer graphics).

You have to understand that it was hard back then: you needed a PhD in computer graphics to do it. Financial companies at the time hired game developers to analyze data, because only they could figure out what was going on.

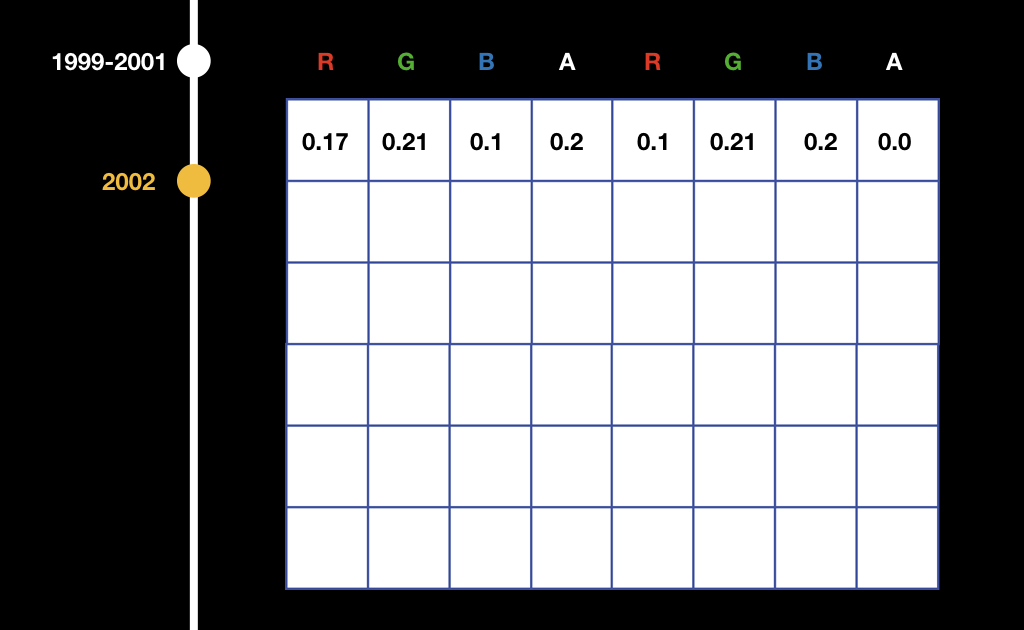

In 2002, Stanford graduate Mark Harris founded the GPGPU website and coined the term itself: General Purpose GPU.

At that time it was mainly scientists who were interested, and they mapped problems from chemistry, biology and physics onto something that could be represented as graphics: for example, chemical reactions that can be depicted as textures and computed step by step.

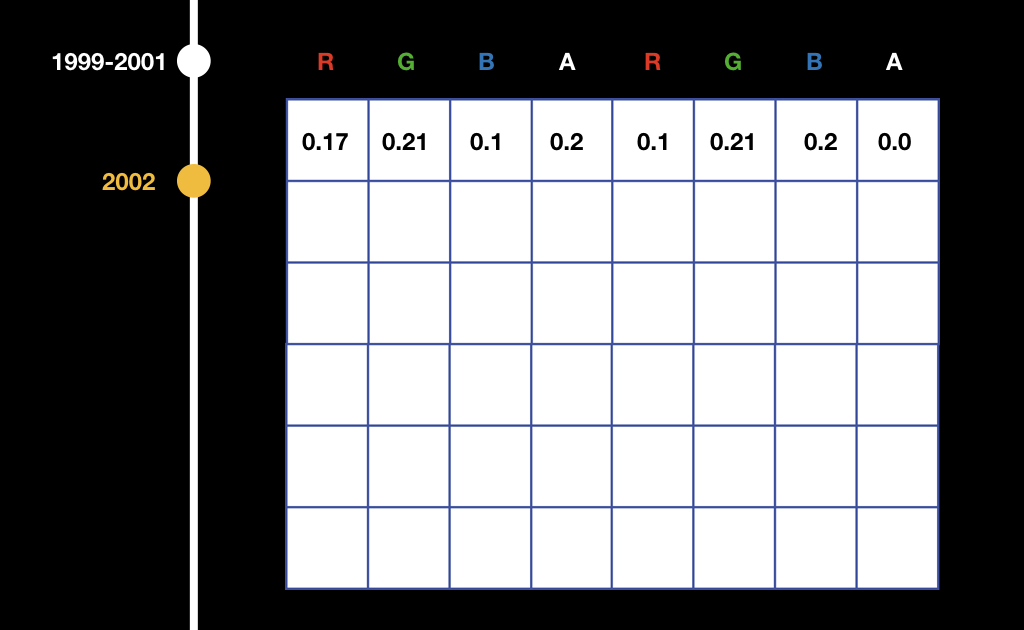

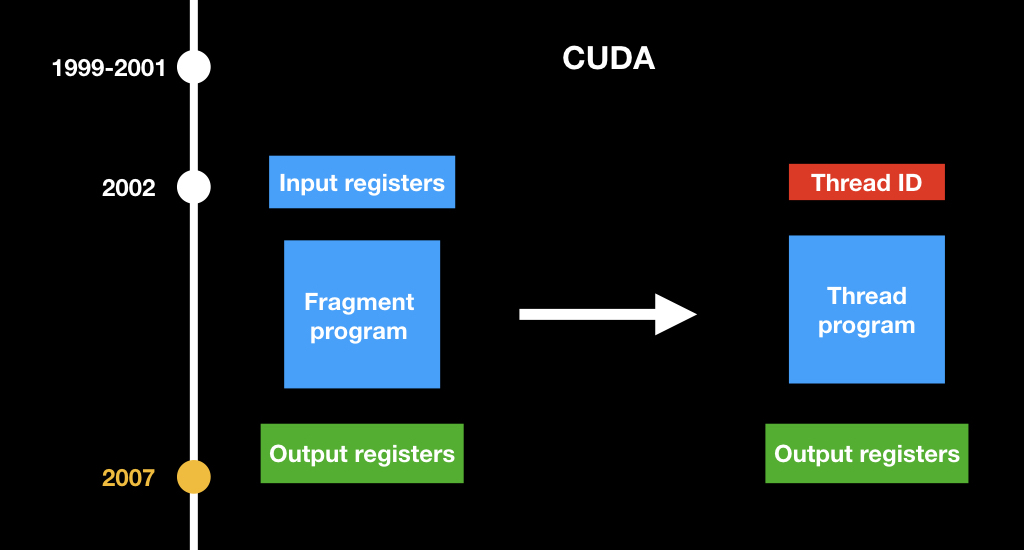

It was all rather hacky. All data was written into textures and then somehow interpreted in a fragment shader.

For example, if we have an array of floats, we write it into a texture channel by channel: the first number goes into the red channel of the first pixel, the second number into the green channel of the first pixel, and so on. Then the fragment shader had to read it back and branch on position: if we are rendering the top-left pixel, we do one thing, if we are rendering the top-right pixel, another, and so on.
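The channel-by-channel layout boils down to index arithmetic. A small sketch in plain Python, assuming four channels per pixel (RGBA), row-major pixel order and zero padding for the unused tail; the function names are illustrative.

```python
CHANNELS = 4  # R, G, B, A

def pack_into_texture(values, width):
    """Lay a flat list of floats out as rows of RGBA pixels, padding with 0.0."""
    pixel_count = (len(values) + CHANNELS - 1) // CHANNELS
    height = (pixel_count + width - 1) // width
    padded = list(values) + [0.0] * (width * height * CHANNELS - len(values))
    texture = []
    for row in range(height):
        pixels = []
        for col in range(width):
            start = (row * width + col) * CHANNELS
            pixels.append(tuple(padded[start:start + CHANNELS]))
        texture.append(pixels)
    return texture

def locate(index, width):
    """Map an array index to (row, column, channel) inside the texture."""
    pixel, channel = divmod(index, CHANNELS)
    row, col = divmod(pixel, width)
    return row, col, channel

texture = pack_into_texture([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], width=2)
row, col, channel = locate(5, width=2)
print(texture[row][col][channel])  # 6.0
```

The element with index 5 ends up in the green channel (channel 1) of the second pixel, and a fragment shader of that era had to perform the reverse mapping by hand.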

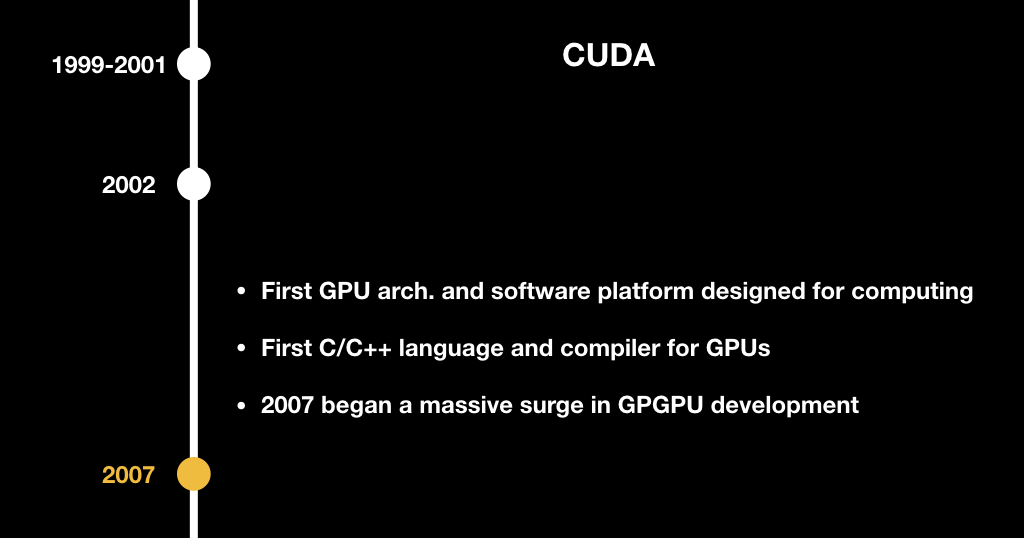

All this was difficult until CUDA appeared. Surely many of you have come across this abbreviation. CUDA is also an NVidia project, introduced in 2007. It is a C++-like language that made it possible to write general-purpose programs for the GPU and perform computations unrelated to computer graphics.

The most important concept they changed: they invented a new kind of program. Where before there were fragment shaders that take a pixel coordinate as input, now there is simply an abstract program that takes the index of its thread and computes its own part of the data.

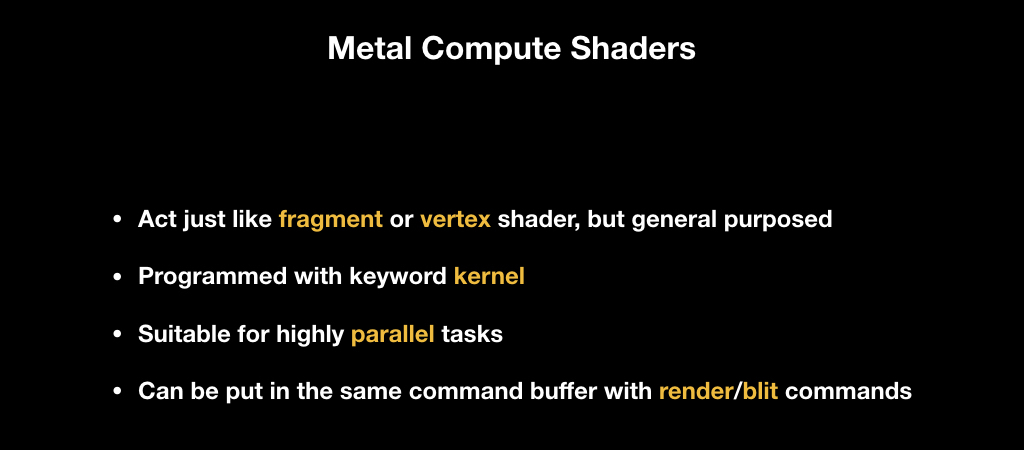

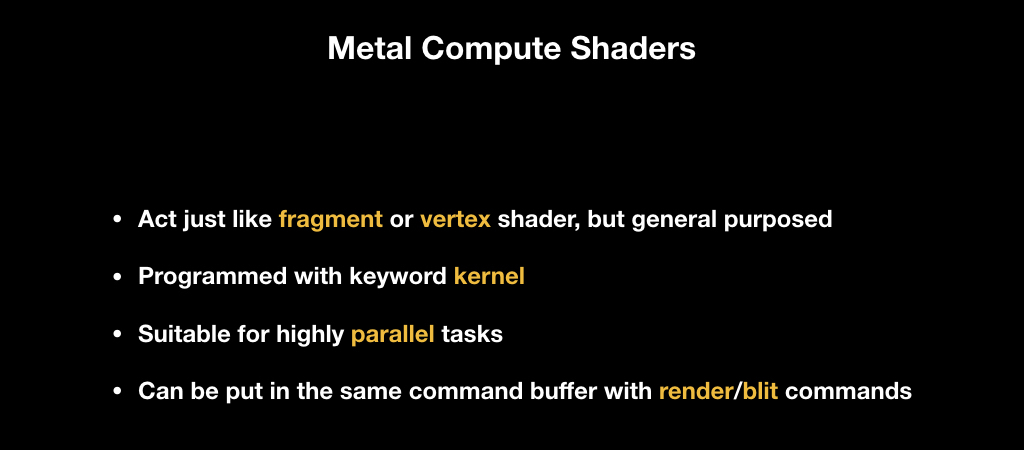

The same technology is implemented in Metal Compute Shaders. These are the same kind of shaders as on desktop GPUs, and they work the same way as fragment and vertex shaders, that is, they run in parallel. They are marked with the special keyword kernel. They are suitable only for tasks whose algorithm can be parallelized very heavily, and they can sit in a single command buffer, that is, in the same box, alongside other commands. You can have a render command first, then a compute command, and then a render command that uses the results of the compute command.

To show how Metal Compute Shaders work, let's make a small demo. The scenario is fairly close to reality. I work at an AI company, and it often happens that the R&D people come and say that something needs to be done with this buffer. We will simply multiply it by a number, although in real life you would need to apply some function. This task is well suited to parallelization: we have a buffer, and each number in it needs to be multiplied by a constant independently of the others.

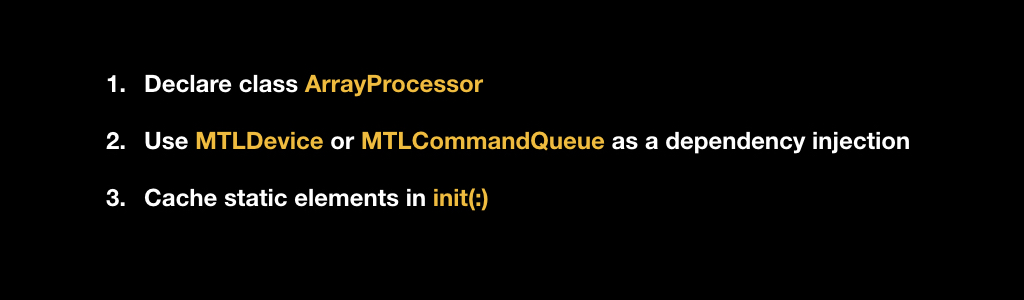

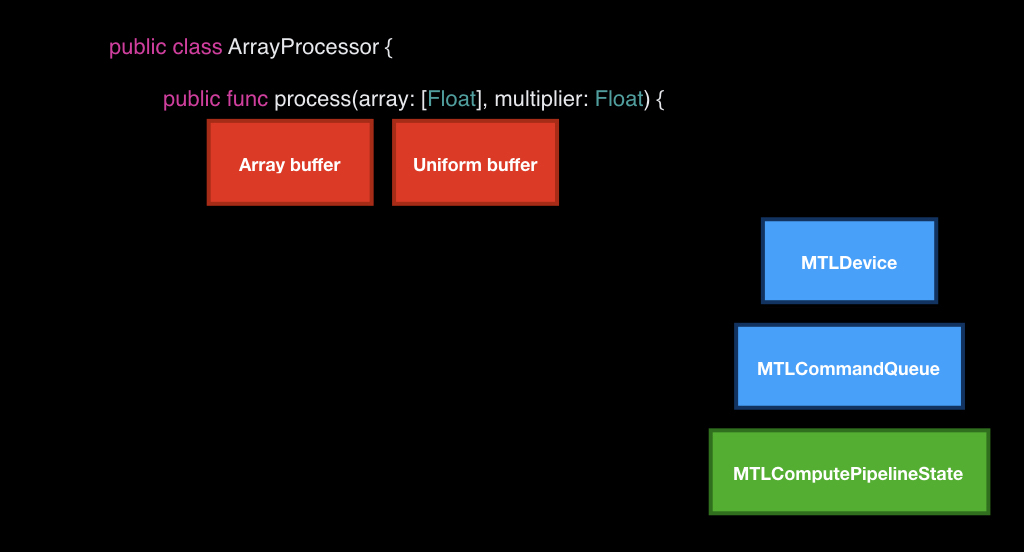

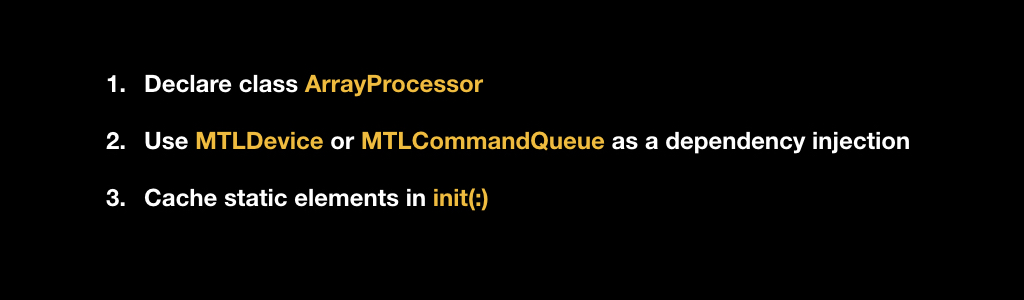

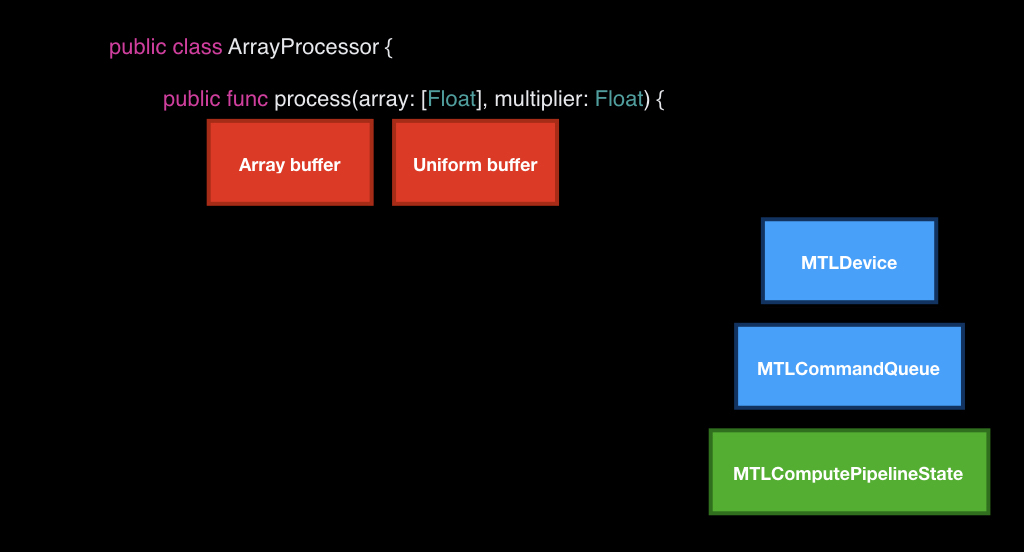

We will write our class in the Metal paradigm, so its constructor will accept a reference to either an MTLDevice or an MTLCommandQueue as dependency injection.

In the constructor we will cache all of this and, right at initialization, create a pipeline state with our kernel, which we will write later.

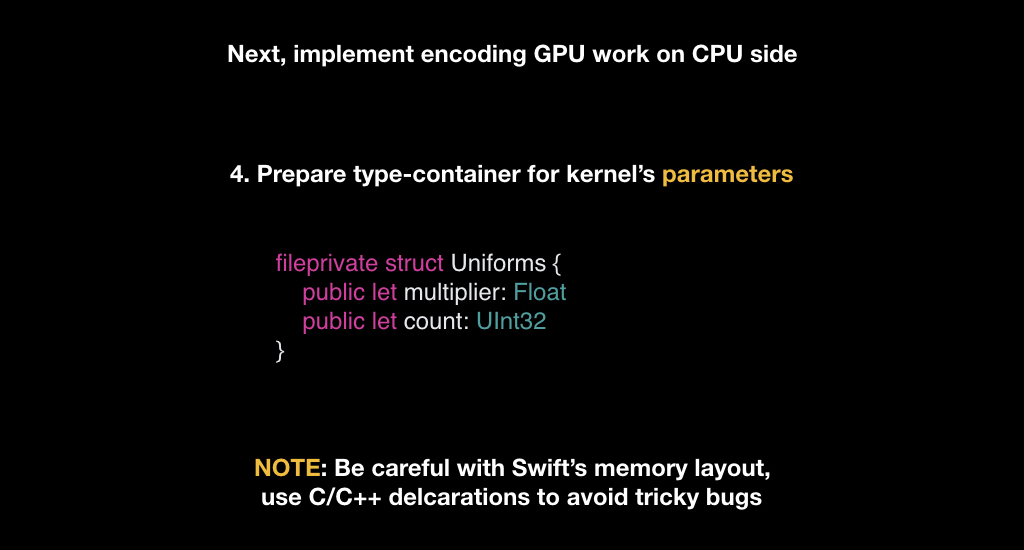

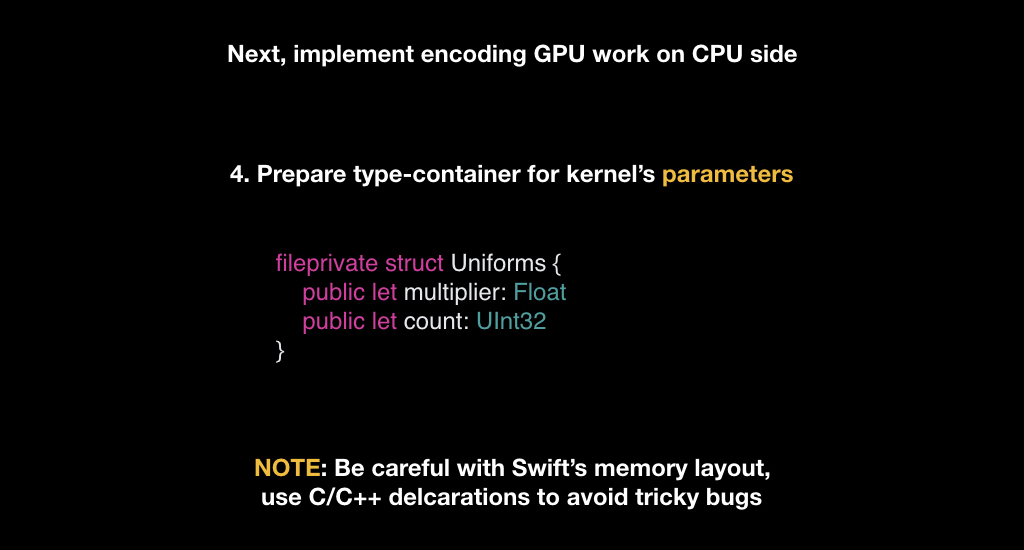

After that we will prepare the types. We need only one type, Uniforms: a structure that stores the constant by which we will multiply and a count field holding the number of elements to process.

You need to be very careful here. Many people, especially C++ programmers, know about data alignment: the compiler may add padding between the fields of a structure or class so that they can be read efficiently. Swift also chooses its own layout and does not guarantee that it matches C, so you need to be very careful when declaring your types in Swift. Metal uses the C++ type layout, which may not match Swift's, so it is good practice to describe these types once in a C/C++ header and share those declarations between Swift and the shaders.
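The effect of alignment can be seen without any GPU. Here is a small sketch using Python's standard `struct` module, whose `@` mode follows the platform's native C alignment rules and whose `=` mode packs fields with no padding. The field order of Uniforms (a float constant followed by an unsigned count) is an assumption based on the description above, and the sizes in the comments hold on mainstream platforms where float and unsigned int are 4 bytes.

```python
import struct

# Hypothetical C-side declaration shared with the shader:
#   struct Uniforms { float constant; unsigned int count; };
UNIFORMS_FORMAT = "@fI"   # native C layout: float, then unsigned int

def pack_uniforms(constant, count):
    """Produce the raw bytes that would be copied into a GPU buffer."""
    return struct.pack(UNIFORMS_FORMAT, constant, count)

raw = pack_uniforms(2.0, 1000)   # 8 bytes: both fields are 4-byte aligned already

# Alignment demo: one byte followed by a float.
packed_size = struct.calcsize("=Bf")    # 5 bytes, no padding between fields
aligned_size = struct.calcsize("@Bf")   # 8 bytes, 3 padding bytes before the float
```

If the two sides of a buffer disagree about such padding, the shader reads garbage, which is why a single shared declaration is the safer route.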

We only need to implement one method, which takes our array and the number to multiply by as input. By this point we have already cached our device, queue and pipeline state.

The first thing we do is take our device and use it to create two buffers that will be passed to the GPU.

We cannot just hand over raw data; we need to create special objects using the device. We put in the bytes from our array, and we create a uniforms structure into which we put the array's count and the incoming constant.

After that we take an empty box from the queue. We take a special encoder for compute commands and load everything into it.

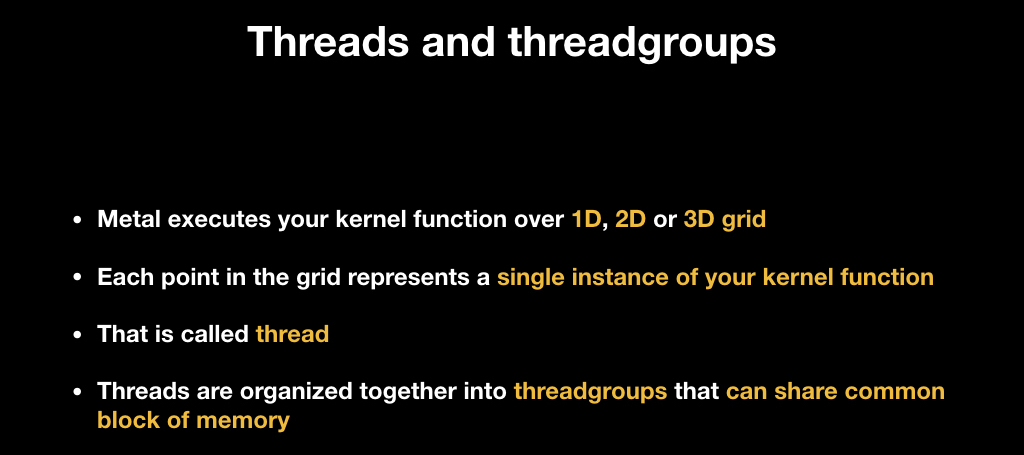

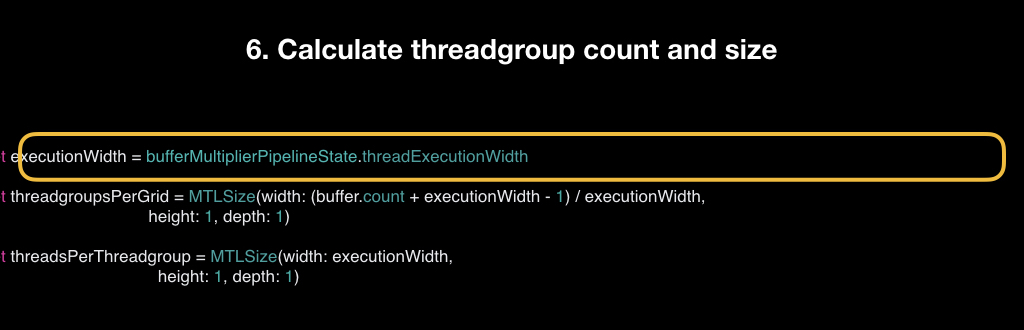

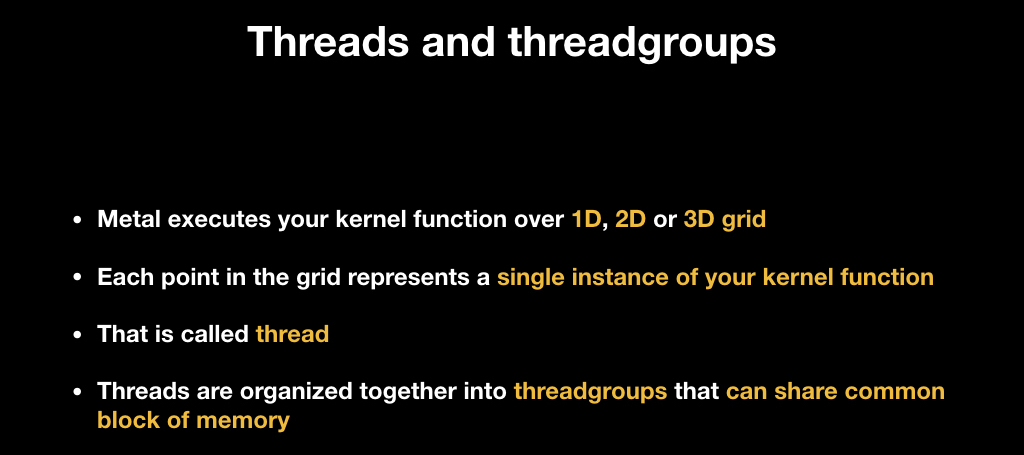

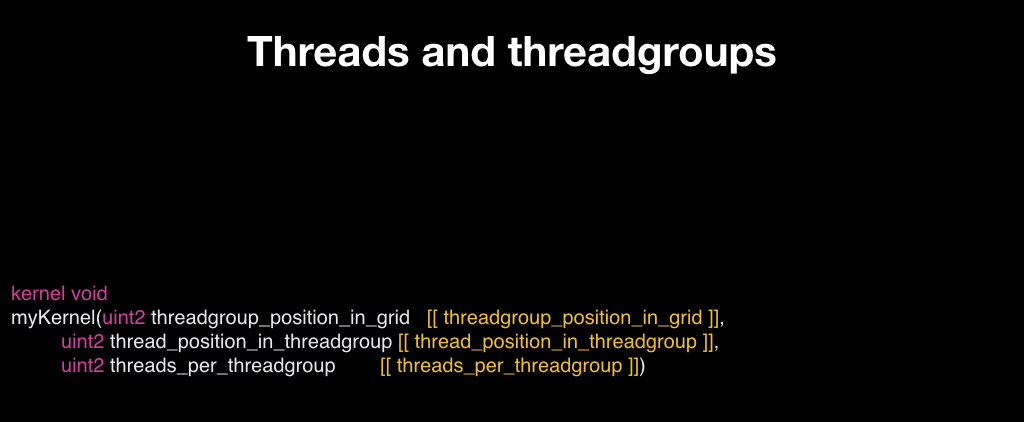

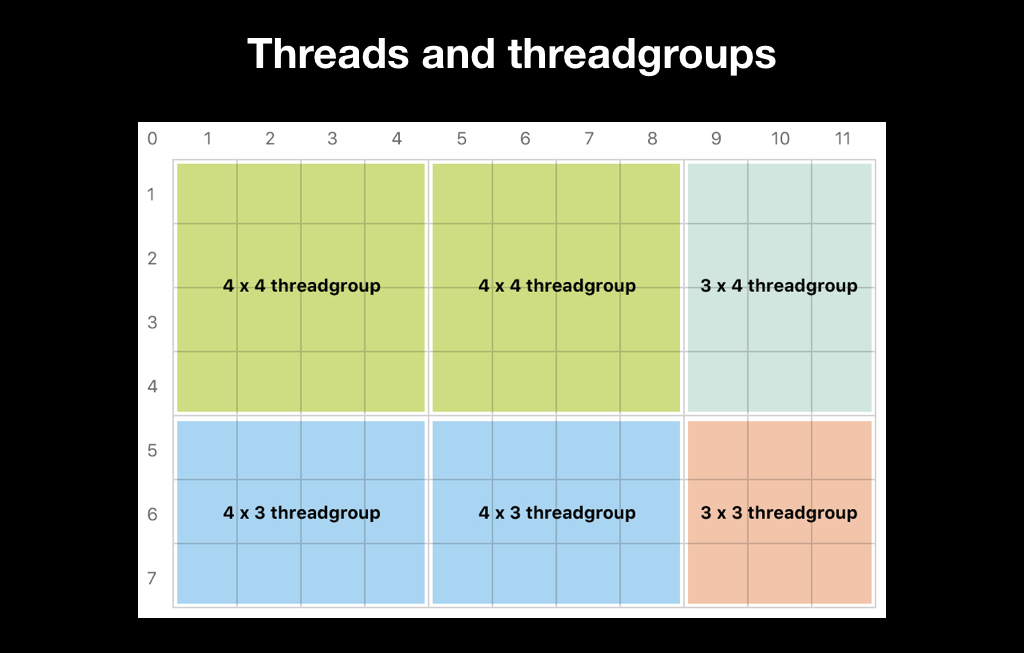

The question is what to do next. With render commands we called drawPrimitives and everything was clear, but how do we create a command for a compute shader? To do that, you need to get acquainted with the concepts of threads and threadgroups.

All compute shaders run on top of a grid, which can be one-, two- or three-dimensional; to make it easier to picture, think of it as an array. Each element of this grid is an instance of your function that takes its coordinate in the grid as input. If the grid is one-dimensional, this is just an index; if it is two-dimensional, it is a two-dimensional coordinate. Such an instance is called a thread. But as a rule there is a lot of data and the GPU cannot run all the threads in parallel at once, so they are usually organized into groups and launched group by group.

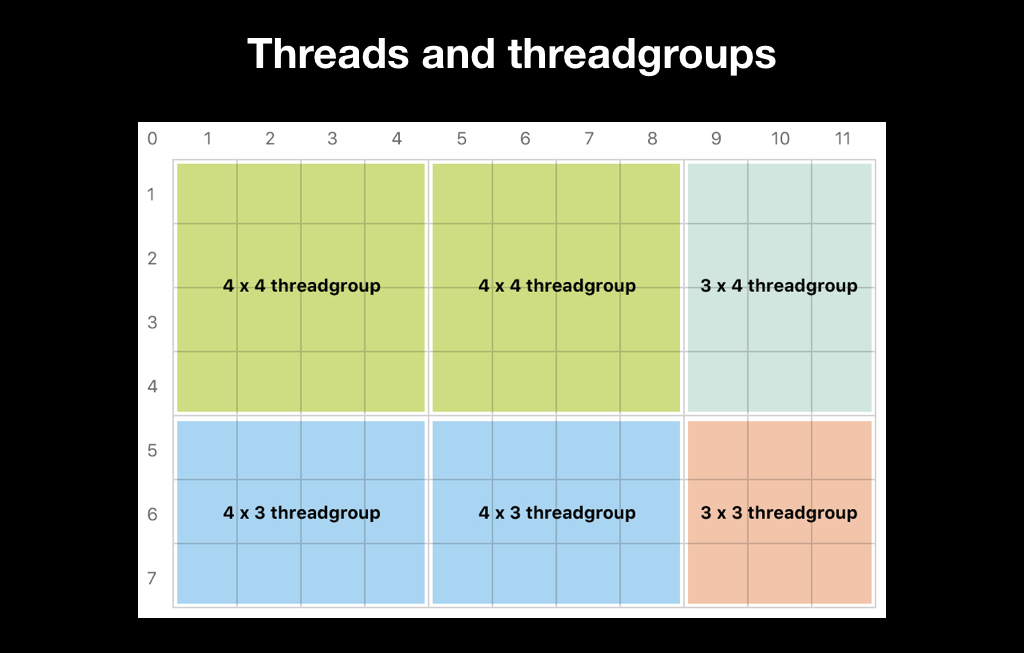

For example, say we want to run one thread for each pixel of our picture. We make a threadgroup of 32x16 threads that will process the image in rectangles of that size, with each pixel getting its own thread:

Then in the shader code we can get the current index of our thread and process either the corresponding pixel (as in this case) or any part of the data, depending on your business logic.

Splitting into threadgroups can be done in two ways. The first is to entrust it to Apple. This is a bad idea, because most often it is inefficient, and sometimes the algorithm does not even allow it. Algorithms such as blur often require a threadgroup of exactly 3x3, and many algorithms require all threadgroups to be the same size. Apple, however, splits the threadgroups so that together they cover exactly all the data, and if your data size does not divide evenly, the threadgroup sizes will differ:

Therefore, most often you choose the threadgroup size yourself and make sure that there are at least as many threads as data elements:

It is also important to know how threadgroups work. Internally they run on the SIMD principle, that is, Single Instruction Multiple Data. All threads execute the same set of machine instructions in lockstep, only with different input data. Problems begin when one of your threads hits a branch.

If the GPU had to work out which branch each thread takes, the rest would have to wait. Instead, the GPU executes both branches: however many ifs are encountered, all the code is executed so that everything keeps running in parallel. Branching in SIMD code is therefore very bad, and it is important to minimize it. There are mathematical techniques that help avoid it in certain cases.
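One such technique is to compute both candidate results and blend them with a 0-or-1 mask instead of branching. The sketch below emulates this in plain Python; the helpers `step` and `mix` are written here to mirror the shader built-ins of the same names, and the functions being compared are made up for the illustration.

```python
def step(edge, x):
    """1.0 if x >= edge, otherwise 0.0 (emulates the shader built-in)."""
    return float(x >= edge)

def mix(a, b, t):
    """Linear blend: returns a when t == 0 and b when t == 1."""
    return a * (1.0 - t) + b * t

def branching(x, threshold):
    """The version with an explicit branch."""
    if x >= threshold:
        return x * 2.0
    return x * 0.5

def branchless(x, threshold):
    """Same result: both values are computed, the mask selects one of them."""
    return mix(x * 0.5, x * 2.0, step(threshold, x))

samples = [0.25, 1.0, 3.0]
results = [branchless(x, 1.0) for x in samples]
print(results)  # [0.125, 2.0, 6.0]
```

Every thread executes exactly the same instructions, so nothing diverges. Whether this is actually faster depends on how expensive the two sides are, since both are always evaluated.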

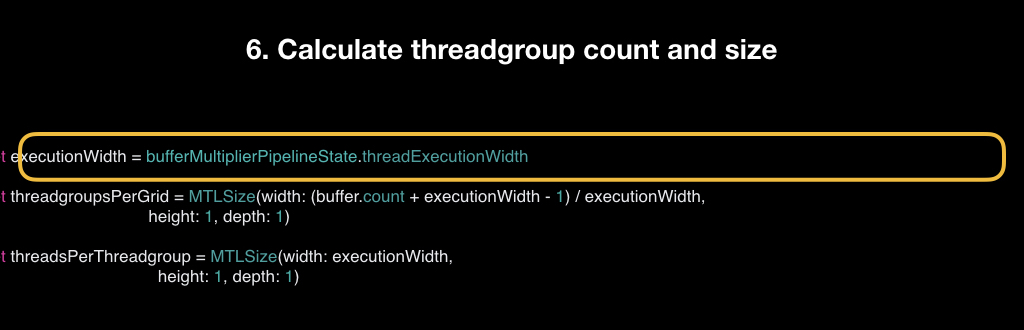

The division into SIMD groups itself is beyond our control; all we can find out is the so-called execution width, that is, how many threads a SIMD group can hold at once. This is needed when we want to split the data as efficiently as possible. Sometimes we split the threads according to the algorithm, as in the blur example, and sometimes we just need the most efficient split, and we use the width for that.

We take the width from the pipeline state and compute the number of threadgroups: take the count of our buffer, add the width minus 1, and divide by the width. This simple formula guarantees that there are enough threads, with some to spare. We also compute the number of threads in one group.
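The formula is a rounded-up integer division. A quick check in Python, where the width of 32 is only an assumed example value (the real one comes from the pipeline state):

```python
def threadgroup_count(element_count, width):
    """Smallest number of groups such that groups * width >= element_count."""
    return (element_count + width - 1) // width

width = 32                                # assumed execution width
groups = threadgroup_count(1000, width)   # 32 groups
total_threads = groups * width            # 1024 threads
spare_threads = total_threads - 1000      # 24 threads with nothing to do
```

For 1000 elements this launches 1024 threads, so the last 24 have no data to process. A kernel dispatched this way therefore has to check its index against the element count.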

We then call the dispatchThreadgroups method, passing it the number of threadgroups and the number of threads in each group.

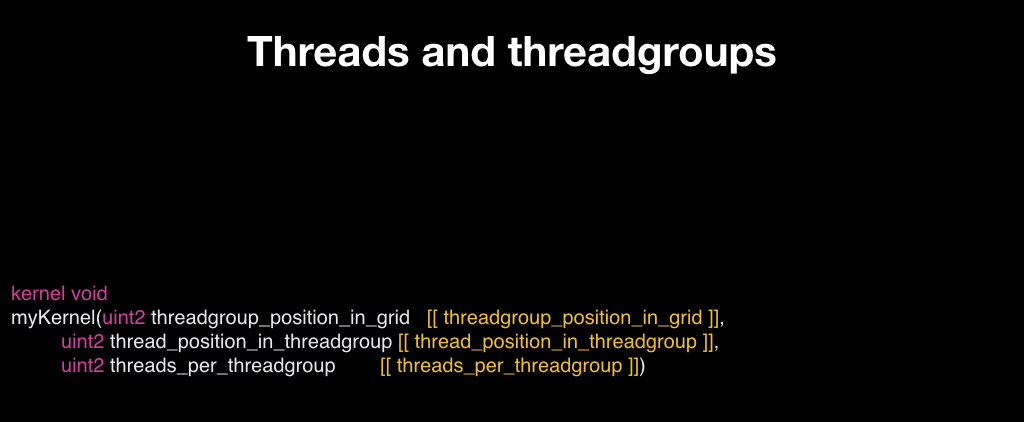

After that, our box flies off to the GPU. The very last step is to write the shader itself. As input it takes a pointer to the start of the float buffer, our uniforms as a structure, and the index of the thread being launched.

The first thing to do is check that we are not going beyond the bounds of our buffer (if we are, we return immediately). Otherwise we read the required element, that is, each thread reads only one element, and we write it back already multiplied.
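The logic of the kernel can be simulated on the CPU. This is a sketch in plain Python rather than Metal shader code: `multiply_kernel` stands in for the body that one GPU thread would execute, and `dispatch` is a hypothetical sequential stand-in for launching the rounded-up number of threads.

```python
def multiply_kernel(buffer, constant, count, thread_index):
    """What a single thread does: scale exactly one element in place."""
    if thread_index >= count:
        return  # surplus thread from the rounded-up dispatch: nothing to do
    buffer[thread_index] = buffer[thread_index] * constant

def dispatch(buffer, constant, width):
    """Run the kernel for every thread index, one after another."""
    count = len(buffer)
    groups = (count + width - 1) // width
    for thread_index in range(groups * width):
        multiply_kernel(buffer, constant, count, thread_index)

data = [1.0] * 10
dispatch(data, 3.0, width=4)   # 3 groups of 4 threads; threads 10 and 11 return early
print(data[0], len(data))      # 3.0 10
```

Without the bounds check, the two surplus threads would touch memory past the end of the buffer.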

We have a benchmark of what happened. We will have an array with a million elements, each of which contains a unit, and a constant by which we will multiply. We will compare here with how this same task would be solved on the CPU (this would be solved in three lines):

Let's hope it got faster. And indeed it did: Metal takes 0.006 seconds on average, and the CPU takes 0.1. That is, the CPU is about 17 times slower, which should please us.

But the picture changes if we replace the million elements with a thousand. In this case, Metal finishes only twice as fast as with a million, while the CPU finishes exactly 1000 times faster, because there are exactly 1000 times fewer elements. And now the CPU is 30 times faster than the GPU.

This factor must be taken into account: even if your algorithm parallelizes perfectly, when it is not computationally heavy enough, the overhead of handing work from the CPU to the GPU can be so large that it makes no sense. You may not need to write all that code at all; three lines on the CPU may be enough. But you will never know until you try: it is very difficult to estimate this in advance.
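To get a rough feel for where the overhead stops paying off, the figures quoted above can be fed into a simple linear model. This is only a sketch under a strong assumption that is not in the talk: GPU time is modeled as a fixed overhead plus a per-element cost, and CPU time as a pure per-element cost. The `Sample` type and all variable names are hypothetical.

```swift
/// One timing sample: element count and measured seconds.
struct Sample { let n: Double; let seconds: Double }

// Figures quoted in the text above.
let gpuLarge = Sample(n: 1_000_000, seconds: 0.006)
let gpuSmall = Sample(n: 1_000, seconds: 0.003) // "twice as fast as with a million"
let cpuLarge = Sample(n: 1_000_000, seconds: 0.1)

// Assumption: GPU time = fixedOverhead + n * gpuPerElement, CPU time = n * cpuPerElement.
let gpuPerElement = (gpuLarge.seconds - gpuSmall.seconds) / (gpuLarge.n - gpuSmall.n)
let fixedOverhead = gpuSmall.seconds - gpuSmall.n * gpuPerElement
let cpuPerElement = cpuLarge.seconds / cpuLarge.n

// Element count at which both models predict the same time.
let breakEven = fixedOverhead / (cpuPerElement - gpuPerElement)
print("fixed GPU overhead ≈ \(fixedOverhead) s, break-even ≈ \(Int(breakEven)) elements")
```

Under this assumed model the fixed cost of a GPU round trip is about 3 ms and the break-even point is around 30,000 elements. These are extrapolations from two data points, not measurements, so the real threshold still has to be found by trying.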

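In general, the tips are:

- remember the memory layout

- remember the overhead that always comes with handing work to the GPU and getting the results back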

The Metal Performance Shaders framework was built on top of Metal Compute Shaders. It was announced back in iOS 9 and was originally a collection of ready-made solutions for common tasks, for example converting an image to grayscale. They were super-optimized: a separate shader was written for each iPhone, private Metal APIs were used, and everything ran super-fast.

In iOS 10, neural network support was added to it. It so happens that all of today's artificial intelligence and machine learning is just matrix multiplication, and neural network inference is a perfect fit for the GPU.

That is why this framework is now often called MPSCNN: it is used for neural network inference, and it has allowed companies, including ours, to run computations on the device rather than in the cloud.

But the code was very scary. Not complicated, but very cumbersome. For example, to encode a network in the style of Inception-v3, you need about 1000 lines of repetitive code.

That is, conceptually it is not complicated, but it is very easy to get lost in. Therefore iOS 11, in addition to Metal 2, brought several new frameworks.

One of them is the NN Graph API, which makes it very convenient to build a graph of your neural network computations and then simply hand the finished box, that is, the command buffer, directly to the GPU.

After that, CoreML was announced. It made a lot of noise and you have probably heard more than enough about it already, but I will still say a few words.

Its main drawback, and at the same time its main strength, is that it is proprietary. It is quite fast: roughly comparable in speed to an implementation you could write yourself using MPS. But CoreML uses its own internal analysis to decide where to run the code, on the GPU or the CPU. It estimates the overhead with its own heuristics, and sometimes, when it decides that sending the work to the GPU is not worthwhile, it computes on the CPU. This is both good and bad, because you have no control over it: you do not know what will happen or where your network will end up being computed.

The advantage is that it is very easy to use, and many desktop frameworks are supported. If your company does machine learning, you most likely have an R&D team that trains your networks in some accessible framework such as TensorFlow, Torch, or something like that. Before Apple's solution appeared, everyone had their own approach: for example, some kind of encoder that takes JSON and assembles your network in Metal at runtime. Now CoreML does this.

Another advantage is that it is fast enough. But at the same time it does not lend itself to customization: if you need to implement a custom operator in your network, you will not be able to. Therefore, CoreML is suitable only for a narrow class of tasks, mainly long-solved ones such as classification, that is, neural networks that have been available on the Internet for a long time. It also has bugs, sometimes serious ones. It is still quite raw, at least judging by our experience, and we report a lot of bugs. And you have no control over what happens under the hood. In general, the final conclusions are:

When at the Mobius 2017 Moscow conference, Andrei Volodin (Prisma AI) spoke about the use of GPUs in iOS, his report was one of the favorites of the conference, receiving high ratings from the audience. And now, on the basis of this report, we have prepared a habrapost, which allows us to receive all the same information in text. It will be interesting even to those who do not work with iOS: the report begins with things that are not tied specifically to this platform.

Careful traffic: under the cut a lot of images from the slides.

The plan is as follows. First, look at the history of computer graphics: how it all began and how we came to what is now. Then let's figure out how to render on modern video cards. What does Apple offer us as an iron vendor? What is GPGPU. Why Metal Compute Shaders is a cool technology that changed everything. And at the very end we'll talk about the hype train, that is, the popular now: Metal Performance Shaders, CoreML, and the like.

')

Let's start with the story

The first known system with a separate iron for the video was the Atari 2600. This is a fairly well-known classic gaming console, released in 1977. Its feature was that the amount of RAM was only 128 bytes, and not only available to the developer: it was for the game, for the operating system of the console itself, and for the entire call stack.

On average, the games were rendered in 160x192 resolution, and there were 128 colors in the palette. It is easy to calculate that storing one frame of the game required many times more RAM. Therefore, this console went down in history as one big hack: all the graphics in it were generated in real time (in the truest sense of the word).

At that time, televisions worked with ray guns, through electronic heads. The image was scanned line by line, and the developers had to, as the TV scans the image through an analog cable, tell it what color of the current pixel to draw. Thus the image appeared on the screen completely.

Another feature of this console was that at the iron level, it supported only five sprites at a time. Two sprites for the player, two for the so-called "missiles" and one sprite for the ball. It is clear that for most games this is not enough, because there are usually more interactive objects on the screen.

Therefore, there was used the technique, subsequently went down in history under the name "race with the beam." As the beam scanned the image from the console, those pixels that had already been drawn remained on the screen until the next frame. Therefore, the developers moved the sprites while the beam was moving, and thus could draw more than five objects on the screen.

This is a screenshot from the famous Space Invaders game, in which there are much more than five interactive objects. Exactly in the same way, effects like parallax (with wave-like animations) and others were drawn. Racing the Beam was written based on all of this fever. And from it I took this curious illustration:

The fact is that the TV scanned the image in a non-stop mode, and the developers had no time to read the joystick, calculate some kind of game logic, etc. Therefore, the resolution was made higher than on the screen. And the “vertical blank”, “overscan” and “horizontal blank” zones are a fake resolution that the TV scanned, but the video signal was not given at that time, and the developers considered the game logic. And in the Pitfall game from Activision, the logic was so complicated in those times that all the time they had to draw more treetops above and black ground below in order to have more time to cheat it.

The next stage of development was the Nintendo Entertainment System in 1983, and there were similar problems: there was already an 8-bit palette, but still there was no frame buffer. But there was a PPU (picture processing unit) - a separate chip that was responsible for the video series, and there was used tile graphics. Tiles are such pieces of pixels, most often they were 8x8 or 8x16. Therefore, all the games of that period look a little square:

The system scanned the frame with such blocks and analyzed which parts of the image to draw. This made it possible to save video memory very seriously, and an additional advantage was the collision detection out of the box. Gravity appeared in games because it was possible to understand which squares intersect with which ones, it was possible to collect coins, take lives, when we come in contact with enemies, and so on.

Subsequently, 3D-graphics began, but at first it was insanely expensive, mainly used in flight simulators and some entertaining solutions. It was considered to be such terrible, huge chips and did not reach ordinary consumers.

In 1999, the well-known company NVidia introduced the term GPU (graphics processing unit) with the release of the new device. At that time, it was a very highly specialized chip: it solved a number of tasks that allowed a little to speed up the 3D graphics, but it could not be programmed. One could only say what to do, and he returned the answer for some pre-built algorithms.

In 2001, NVidia released the GeForce 3 with the GeForceFX package, in which the shaders first appeared. We will definitely talk about them today. It was this concept that turned all computer graphics, although at that time it was still programmed in assembler and was quite difficult.

The main thing that happened after that was a trend. You have probably heard about such a metric of iron productivity as flops. And it became clear that over time, video cards, in comparison with central processors, simply fly off into space in terms of performance:

And if we look at their specifications, we will see that literally over the year the number of transistors has increased 2 times, while the clock frequency of each of them has decreased, but the overall performance has increased almost 7 times. This suggests that there was a bet on parallel computing.

Rendering today

To figure out why a GPU needs to take so many things in parallel, let's see how rendering is currently happening on most video cards, both desktop and mobile.

For many of you, it can be a major disappointment that a GPU is a very stupid piece of hardware. She can only draw triangles, lines and points, nothing else. And it is very optimized for working with floating point numbers. It does not know how to count double and, as a rule, works very poorly with int. There are no abstractions on it in the form of the operating system and others. It is as close to the gland as possible.

For this reason, all 3D objects are stored as a set of triangles, on which the texture is most often stretched. These triangles are often called polygons (those who play games are familiar with words like “there are twice as many polygons in Kratos' model).

In order to render it all, these triangles are first placed in the game world. The game world is the usual three-dimensional coordinate system, where we put them, and each object has, as a rule, its own position, rotation, distortion, and so on.

Often there is such a concept as a camera, when we can look at the game world from different sides. But it is clear that in reality there is no camera there, and in reality it is not she who moves, but the whole game world: he turns to the monitor so that you can see it from the right angle.

And the last stage is the projection, when these triangles fall on your screen.

For all this, there is an excellent mathematical abstraction in the form of affine transformations. Who worked with UIKit, is familiar with this concept thanks to the CGAffineTransform, there all animations are made through it. There are different matrices of affine transformations, here for scale, for rotation and for transfer:

They work like this: if you multiply the matrix by some vector, then the transformation will be applied to it. For example, the Translation matrix shifts the vector when multiplied.

And another interesting fact related to them: if you multiply several such matrices, and then multiply them by a vector, the effect accumulates. If at first there is a scale, then a turn, and then a transfer, then when all this is applied to the vector, all of this is done right away.

In order to do this efficiently, vertex shaders were invented. Vertex shaders are such a small program that runs for every point of your 3D model. You have triangles, each with three points. And for each of them, a vertex shader is launched, which accepts the vector's position in the coordinate system of the 3D model, and returns in the coordinate system of the screen.

Most often it works like this: we calculate for each object a unique transformation matrix. We have the matrix of the camera, the matrix of the world and the matrix of the object, we multiply them all and give it to the vertex shader. And for each vector it takes, multiplies it and returns a new one.

Our triangles appear on the screen, but it's still vector graphics. And then the rasterizer is included in the game. It does a very simple thing: it takes pixels on the screen and imposes a pixel grid on your vector geometry, selecting those pixels that intersect with your geometry.

After that, run fragment shaders. Fragment shaders - this is also a small program, it starts already for each pixel that falls into the area of your geometry, and returns the color, which will later be displayed on the screen.

In order to explain how this works, imagine that we will draw two triangles that cover the entire screen (just for clarity).

The simplest fragmentary shader you can write is a shader that returns a constant. For example, red, and the entire screen becomes red. But it is quite boring.

Fragment shaders take the pixel coordinate as an input, so we can, for example, calculate the distance to the center of the screen, and use this distance as the red channel. Thus, a gradient appears on the screen: the pixels close to the center will be black, because the distance will tend to zero, and the edges of the screen will be red.

Further we can add, for example, a uniform. The uniform is a constant that we pass to the fragment shader, and he applies it equally for each pixel. Most often, such a uniform becomes time. As you know, time is stored as a number of seconds from a certain point, so we can take the sine from it, getting some value.

And if we multiply our red channel by this value, we get dynamic animation: when the sine of time goes to zero, everything becomes black, when the unit returns to its place.

By itself, writing shaders has grown into a whole culture. These images are drawn using mathematical functions:

They do not use 3D models or textures. It's all pure math.

In 1984, one Paul Heckbert even launched a Challenge, when he distributed business cards with a code, by running which, you could get just such a picture:

And this challenge is still alive at SIGGRAPH, CVPR, major conferences in California. Until now, you can see business cards that print something.

But it is unlikely that all this became part of the masses just because it was beautiful. And in order to understand what possibilities this all opens up, let's see what can be done with a conventional sphere. Suppose we have a 3D model of a sphere (it is clear that in reality it is not a sphere, but a set of triangles, forming a ball with some degree of approximation). We will take this texture, which is often called smooth noise:

These are just some random pixels that gradually flow from white to black. We will stretch this texture on our ball, and in the vertex shader we will do the following: we will shift the points falling on the darker pixels more weakly, and the points on the white pixels move more strongly.

And about the same we will do in the fragment shader, only slightly different. We will take the gradient texture shown on the right:

And the whiter the pixel, the more we read, and the darker the pixel that falls, the lower.

And each frame will slightly shift the reading zone and loop it. As a result, an animated fireball will turn out from the usual sphere:

The use of these shaders has turned the world of computer graphics, because it has opened up tremendous possibilities for creating cool effects by writing 30-40 lines of code.

This whole process is repeated several times for each object on the screen. In the following example, you can notice that the GPU cannot draw fonts, because it lacks accuracy, and each character is depicted using two triangles on which the letter texture is stretched:

After that, get a frame.

What Apple provides

Now let's talk about what Apple provides us as a vendor of not only software, but also hardware.

In general, we have always had everything going well: from the very first iPhone, the OpenGL ES standard has been maintained, it is such a subset of the desktop OpenGL for mobile platforms. Already on the first iPhone 3D games appeared that were comparable in level to the PlayStation 2 in terms of graphics, and everyone started talking about revolution.

In 2010, the iPhone 4 came out. There was already the second version of the standard, and Epic Games very much boasted about their game Infinity Blade, which made a lot of noise.

And in 2016, the third version of the standard was released, which turned out to be not particularly interesting to anyone.

Why? During this time, a lot of frameworks have been released under the ecosystem, low-level, open-source - engines from Apple itself, engines from large vendors:

In 2015, the Khronos organization, which certifies the OpenGL standard, announced Vulkan, the next generation graphics API. And initially Apple was a member of the working group on this API, but left it. Mainly due to the fact that OpenGL, contrary to a common misconception, is not a library, but a standard. That is, roughly speaking, this is a large protocol or interface that says that the device should have such functions that do this and that with iron. And the vendor must implement them himself.

And since Apple is famous for its tight integration of software and hardware, any standardization causes certain difficulties. Therefore, instead of supporting Vulkan, the company in 2014 announced Metal, as if hinting at its name “very close to iron”.

It was an API for graphics, made exclusively for Apple's hardware. Now it is clear that this was done to release its own GPU, but at that time there were only rumors about it. Now there are no devices that do not support Metal.

The diagram from Apple itself shows an overhead comparison: even compared to OpenGL, Metal has a much better performance, access to the GPU is much faster:

There were all the latest features like tessellations, we will not discuss them in detail now. The main idea was to shift most of the work to the initialization stage of the application and not to do many repetitive things. Another important feature is that this API is very careful about the time of the central processor that you have.

Therefore, unlike OpenGL, the work here is arranged in such a way that the CPU and GPU work in parallel with each other. As long as the CPU reads frame number N, at this time the GPU renders the previous frame N-1, and so on: you do not need to synchronize, and while the GPU renders something, you can continue to work on something useful.

Metal has a rather thin API, and this is the only graphical API that is object-oriented.

Metal API

And just on the API, we now go through. At its heart lies the MTLDevice class, which is a single GPU. Most often on iOS, you can get it using the MTLCreateSystemDefaultDevice function.

Despite the fact that it is obtained through a global function, you do not need to treat this class as if it were a singleton. On iOS, the video card is really only one, but there is also Metal on the Mac, and there may be several video cards, and you will want to use some specific one: for example, an integrated one to save the user's battery.

It is necessary to take into account that Metal is very different in architecture from all other Apple frameworks. It has end-to-end dependency injection, that is, all objects are created in the context of other objects, and it is very important to follow this ideology.

Each device has its own MTLCommandQueue software queue, which can be obtained using the makeCommandQueue method.

This pair of the device and the software queue is very often called the “Metal context”, that is, in the context of these two objects we will do all our operations.

The MTLCommandQueue itself works like a normal queue. It comes "boxes", which are the instructions "what to do with the GPU." As soon as the device is released, the next box is taken, and the rest move up. At the same time, not only you from your stream put commands into this queue: they are also placed by iOS itself, some UIKit frameworks, MapKit, and so on.

These boxes themselves are a class MTLCommandBuffer, and they are also created in the context of a queue, that is, each queue has its own empty boxes. You call a special method, as if to say: "Give us an empty box, we will fill it."

You can fill this box with three types of commands. Render commands for drawing primitives. Blit commands are commands for streaming data, when we need to transfer part of the pixels from one texture to another. And compute-teams, talk about them later.

In order to put these commands in a box, there are special objects, each type of command has its own:

Objects are also created in the context of the box. That is, we get a box from the queue, and in the context of this box we create a special object called Encoder. In this example, we will create an encoder for the render command, because it is the most classic.

In itself, the process of encoding teams is very similar to the process of crafting in games. That is, when encoding, you have slots, you put something in there and craft a command from them.

The main ingredient that must be required is the pipeline state, an object that describes the state of the video card into which it needs to be transferred in order to draw your primitives.

The main characteristic of this state is a unique pair of a vertex shader and a fragment shader, but there are still some parameters that can be changed, but you can not change it.Most often (and Apple recommends doing so) you have to cache this pipeline state somewhere at the beginning of the application, and then just reuse it.

It is created using a descriptor - this is a simple object in which you write the necessary parameters to the fields.

And then with the help of the device you create this pipeline state:

We put it, then we often put some kind of geometry that we want to draw using special methods, and optionally we can put some uniforms (for example, in the form of the same time, which we talked about today), pass on textures and stuff.

After that, we call the drawPrimitives method, and the command is put in our box.

Then we can replace some ingredients, put another geometry or another pipeline state, call this method again, and another command appears in our box.

As soon as we file all the commands in our frame, we call the endEncoding method, and this box closes.

After that, we send the box to our queue using the commit method, and from that moment on, our fate is unknown to us. We don’t know when it will start to run, because we don’t know how loaded the GPU is and how many teams are in the queue. In principle, you can call a synchronous method that will force the CPU to wait until all the commands inside the box are executed. But this is a very bad practice, so, as a rule, you need to subscribe to the addCompletionHandler, which will be called asynchronously at the moment when each of the commands in this box is executed.

In addition to rendering

I think it’s unlikely that many in the audience came to this report for the sake of rendering. Therefore, we look at a technology such as Metal Compute Shaders.

To understand what it is, you need to understand where it all came from. Already in 1999, as soon as the first GPU appeared, scientific research began to appear on how video cards can be used for common tasks (that is, for tasks not related to computer graphics).

It is necessary to understand that then it was hard: you had to have a PhD in computer graphics to do it. Then financial companies hired game developers to analyze the data, because only they could figure out what was going on.

In 2002, Stanford graduate Mark Harris founded the GPGPU website and invented the term General Purpose GPU itself, that is, a general purpose GPU.

At that time, it was mainly scientists who were interested in this, who shifted tasks from chemistry, biology and physics to something that could be represented as graphics: for example, some chemical reactions that can be depicted as textures and somehow progressively count them.

It worked a little sloppy. All data was recorded in textures and then somehow interpreted in a fragment shader.

That is, for example, if we have an array of float, we write it into the texture channel-by-channel: write the first number in the red channel of the first pixel, write the second number in the green channel of the first pixel, and so on. Then in the fragment shader it was necessary to read and watch it: if we render the leftmost upper pixel, then we do one, if we render the rightmost upper one, another, etc.

All this was difficult until the moment when CUDA appeared. Surely many of you have met this abbreviation. CUDA is also a project from NVidia, introduced in 2007. It is such a C ++-like language that allowed general-purpose programs to be written on the GPU and to do some calculations not related to computer graphics.

The most important concept that they changed: they invented a new kind of program. If earlier there were fragmentary shaders that take a pixel coordinate as an input, now there is just some abstract program that takes just the index of its stream and counts a certain part of the data.

Actually, the same technology is implemented in the Metal Compute Shaders. These are the same shaders that are in the GPU on the desktop, they work the same way as fragment and vertex, that is, run in parallel. For them there is a special keyword kernel. They are suitable only for tasks that at the level of the algorithm can be very strongly parallelized and can lie in one command buffer, that is, in the same box with other commands. You can have a render command first, then a compute command, and then a render command that uses the results of the compute command.

Code time

In order to show how Metal Compute Shaders work, let's make a small demo. The scenario is pretty close to reality. I work in an AI company, and it often happens that R & D people come in and say that you need to do something with this buffer. We will simply multiply it by the number, but in real life you need to apply some function. This task is very suitable for parallelization: we have a buffer, and we need to multiply each number independently of each other by a constant.

We will write our class in the Metal paradigm, so it will accept in the constructor an input link either to MTLDevice or to MTLCommandQueue as dependency injection.

In our constructor, we will cache all of this and create a pipeline state at the beginning of the initialization with our kernel, which we will write later.

After that we will prepare types. We need only one type of Uniforms - this will be the structure that stores the constant, which we will multiply, and the count field, which will store the number of elements that we will need to calculate.

With this you need to be very careful. Many, especially C ++ programmers, know what data alignment is when the compiler changes the sequence of your fields in a structure or class, or, for example, changes the markup in order to most effectively read them by-byte. And Swift does the same thing, so when you declare your types in Swift, you need to be very careful. On Metal, C ++ type markup is used, and it may not coincide with SWIFT, so it is good practice when you describe these types somewhere in one C / C ++ header, and then fumble these descriptions between commands.

We only need to implement one method that will accept our array and the number to be multiplied as input. At the same time, we have already cached our device, the queue and the pipeline state.

The first thing we will do is take our device and use it to create two buffers that will be passed to the GPU.

We can not just transfer data, we need to create special objects using a device. We put the bytes from our array and create a structure from the uniform, where we put the count field from the array and our incoming constant.

After that in the queue we take an empty box. We take a special encoder for the compute-team and load it all there.

The question arises, what will we do next. If we called drawPrimitives in render commands, then everything was clear, but how to create a command for Compute Shader? To do this, you need to get acquainted with such a concept as threads and threads.

All Compute Shaders run on top of such a grid, it can be one-dimensional, two-dimensional and three-dimensional, it looks like arrays to make it easier to imagine. Each element of this grid is an instance of your function that accepts a coordinate in this grid as input. That is, if the grid is one-dimensional, then it’s just an index, if it’s two-dimensional, then, of course, it’s two-dimensional. Such an instance is called thread, that is, “thread”. But, as a rule, there is a lot of data, and the GPU cannot run them all at once in parallel, therefore, most often they are organized into groups and run into groups.

For example, we want to run one stream for each pixel in our picture, and we will make a thread group of 32x16 threads that will process the image with such rectangles, where each pixel will have its own thread:

Afterwards in the shader code we can get the current index of our stream, and process either the required pixel (as in this case), or any part of the data, depending on your business logic.

Tradable partitioning can be done in two ways. The first is to entrust it to Apple. This is a bad idea, because most often it is inefficient, and sometimes it does not even allow the algorithm. Often, algorithms, such as blur, require a thread group of exactly 3x3. Many algorithms require that all trade groups be the same size. And Apple itself breaks up the trade groups so that they contain all the data, and if the amount of your data is not completely divided, the size of the thread groups will differ:

Therefore, most often you choose the size of the thread group yourself and make it impossible to divide There were certainly more threads than your data:

It is also important to know how work groups work. Inside, they run on the principle of SIMD, that is, Single Instruction Multiple Data. All threads synchronously execute the same set of machine instructions, only different data is received at the input, that is, they take a step synchronously. And problems begin if one of your threads has a branch.

If the GPU understood which of the branches is correct, everyone else would have to wait. Instead, the GPU executes both branches: no matter how much if if it is encountered, all the code is executed so that it all works in parallel. Therefore, branching in a SIMD code is very bad, and it is important to minimize it. There are mathematical techniques that in certain cases help to avoid this.

The division itself into SIMD groups is beyond our control; we can only know the so-called width of computations, that is, how many threads can be simultaneously contained in a SIMD group. This is necessary for cases where we need to split the data as efficiently as possible. Sometimes we break down threads according to an algorithm, as in the given example with blur, and sometimes you just need to do it as efficiently as possible, and we use width for this.

We take it from the pipeline state, count the number of thread groups, that is, take the count of our buffer, add the width to it minus 1, and divide it by this width. This is a simple formula to ensure that it works out with a margin. And count the number of threads in the same group.

We then call the dispatchThreadgroups method, passing it the number of thread groups and the number of threads in each group.

After that, our box flies off to the GPU. The very last step is to write the shader itself. As input, it takes a pointer to the start of our float buffer, our uniforms in the form of a structure, and a thread index, that is, the index of the thread being launched.

The first thing we have to do is check that we are not going beyond the bounds of our buffer (if we are, we return immediately). Otherwise, we read the necessary element; each thread reads exactly one element. We read it and write it back multiplied by the constant.
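The logic of the shader described above can be emulated on the CPU in plain Swift. This is only a sketch of what each thread does, not the actual Metal shader from the talk: the Uniforms layout (including the count field), the function names, and the group width of 4 are all assumptions made for illustration.

```swift
// Hypothetical stand-in for the uniforms structure passed to the shader.
struct Uniforms {
    var constant: Float   // the multiplier
    var count: Int        // number of valid elements in the buffer
}

// What a single GPU thread does, written as an ordinary function.
func kernelBody(_ buffer: inout [Float], _ uniforms: Uniforms, threadIndex: Int) {
    // Spare threads from the rounded-up dispatch must not touch memory.
    if threadIndex >= uniforms.count { return }
    // Each thread reads exactly one element and writes it back multiplied.
    buffer[threadIndex] = buffer[threadIndex] * uniforms.constant
}

// Emulates a dispatch of groups * width threads, one after another.
// On a real GPU these invocations would run in parallel.
func emulateDispatch(_ buffer: inout [Float], constant: Float, width: Int) {
    let uniforms = Uniforms(constant: constant, count: buffer.count)
    let groups = (buffer.count + width - 1) / width
    for threadIndex in 0..<(groups * width) {
        kernelBody(&buffer, uniforms, threadIndex: threadIndex)
    }
}

var data = [Float](repeating: 1, count: 10)
emulateDispatch(&data, constant: 3, width: 4)   // 12 threads for 10 elements
print(data)
```

With 10 elements and a width of 4, twelve threads are launched; the last two hit the bounds check and return without touching the buffer.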

Let's benchmark the result. We take an array of a million elements, each equal to one, and a constant by which we multiply. We compare this with how the same task would be solved on the CPU (which takes three lines of code).
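The CPU baseline mentioned above might look roughly like this. It is one possible version, not necessarily the code from the talk; the multiplier value of 2 is an assumption.

```swift
let constant: Float = 2   // hypothetical multiplier
var array = [Float](repeating: 1, count: 1_000_000)
for i in array.indices { array[i] *= constant }
```

There is no buffer allocation, no pipeline state, and no command encoding here, which is exactly why the CPU version is so much shorter.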

Let's hope that it has become faster. And indeed it has: Metal takes 0.006 seconds on average, and the CPU takes 0.1. That is, the CPU is about 17 times slower, which should please us.

But the results change somewhat if we replace a million elements with a thousand. In this case, Metal finishes only twice as fast as with a million. The CPU, however, finishes exactly 1000 times faster, because there are exactly 1000 times fewer elements. And now the CPU is 30 times faster than the GPU.
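The ratios quoted above follow directly from the measured times. A small sketch of the arithmetic, using only the numbers given in the text (the variable names are illustrative):

```swift
// Timings for one million elements, in seconds, as quoted in the text.
let gpuMillion = 0.006
let cpuMillion = 0.1
let gpuAdvantage = cpuMillion / gpuMillion    // about 16.7, "about 17 times"

// For a thousand elements the GPU time only halves,
// while the CPU time shrinks by a factor of 1000.
let gpuThousand = gpuMillion / 2
let cpuThousand = cpuMillion / 1000
let cpuAdvantage = gpuThousand / cpuThousand  // about 30

print(gpuAdvantage, cpuAdvantage)
```

The GPU time barely moves because it is dominated by a roughly fixed cost of handing the work over, while the CPU time scales with the amount of data.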

This factor must be taken into account: even if your algorithm parallelizes perfectly, if it is not computationally heavy enough, the overhead of transferring work from the CPU to the GPU can be so large that using the GPU makes no sense. You would not even need to write that much code; three lines on the CPU would be enough. But you will never know until you try. It is very difficult to estimate this in advance.

In general, the tips are:

- remember the memory layout

- remember the overhead that always comes with transferring work to the GPU and getting the results back

- avoid branching

- use 16-bit floats if possible (the GPU has a special type for this, half)

- if possible, do not use ints (the GPU struggles with them and computes them through magic)

- calculate the size of the thread groups correctly

- cache everything properly on the CPU side

- and never wait

The Metal Performance Shaders framework was built on top of Metal Compute Shaders. It was announced back in iOS 9 and was originally a collection of solutions to general-purpose tasks, for example, converting your image to grayscale. They were super-optimized: a separate shader was written for each iPhone, private Metal APIs were used, and everything worked super-fast.

In iOS 10, neural network support was added to it. It so happens that all of today's artificial intelligence and machine learning boils down to matrix multiplication, and neural network inference is a perfect fit for the GPU.

Therefore, this framework is now often called MPSCNN: it is used for neural network inference, and it allowed companies, including ours, to run computations on the device rather than in the cloud.

But the code was very scary. Not complicated, but very cumbersome. For example, to encode a network like Inception-v3, you need about 1000 lines of repetitive code.

That is, conceptually it is not complicated, but it is very easy to get lost in it. Therefore, in iOS 11, in addition to Metal 2, several new frameworks appeared.

One of them is the NN Graph API, which makes it very convenient to build a graph of your neural network computations and then simply hand the finished box, that is, the command buffer, directly to the GPU.

After that, CoreML was announced. It made a lot of noise, and you have probably heard more than enough about it already, but I will still say a few words.

Its main drawback and its main advantage at the same time is that it is proprietary. It works quite fast, roughly comparable in speed to an implementation you could write yourself using MPS. But CoreML uses its own internal analysis to decide where to run the code, on the GPU or on the CPU. It estimates the overhead using some heuristics of its own, and sometimes, when it decides that sending the work to the GPU is not worthwhile, it computes on the CPU. This is both good and bad, because you have no control over it: you do not know what will happen or where your network will end up being computed.

The advantage is that it is very easy to use. A lot of desktop frameworks are supported. If your company does machine learning, you most likely have an R&D team that trains your networks in some accessible framework such as TensorFlow, Torch, or something similar. Before Apple's solutions appeared, everyone had their own approach: for example, some kind of encoder that takes JSON and assembles your neural network in Metal at runtime. Now CoreML does this.

Another advantage is that it works fast enough. But at the same time it cannot be customized. If you need to implement a custom operator in your neural network, you will not be able to. Therefore, CoreML is only suitable for a narrow class of tasks, mainly problems that were solved long ago, such as classification, that is, neural networks that have been available on the Internet for a long time. Sometimes it crashes, and badly. It is still quite raw, at least judging by our experience, and we report a lot of bugs. And you have no control over what happens under the hood. In general, the final conclusions are:

A minute of advertising. If you liked this talk from last year's Mobius, please note that Mobius 2018 Piter will be held in April. Its program is already known, and there are many interesting things there too, both about iOS and about Android.

Source: https://habr.com/ru/post/352192/
