REST services on ASP.NET Core under Linux in production

This article is based on a talk by Denis Ivanov (@DenisIvanov) at RIT++ 2017, in which he shared his experience of developing a REST service on ASP.NET Core and running it on Kubernetes. Judging by the experience of 2GIS, this can already be done without any particular problems, and there is no reason to be afraid of .NET Core.

Configuration: ASP.NET Core on Linux not only made it possible to use the existing on-premise platform but also brought several additional advantages, in particular full-fledged Docker and Kubernetes, which greatly simplify life.

On April 1, 2017, a new icon appeared in 2GIS products: click it and a video starts playing. Advertisers listed in the directory can now buy this new form of advertising, and all of our company's products (mobile, online, API) call the service that I will talk about today.

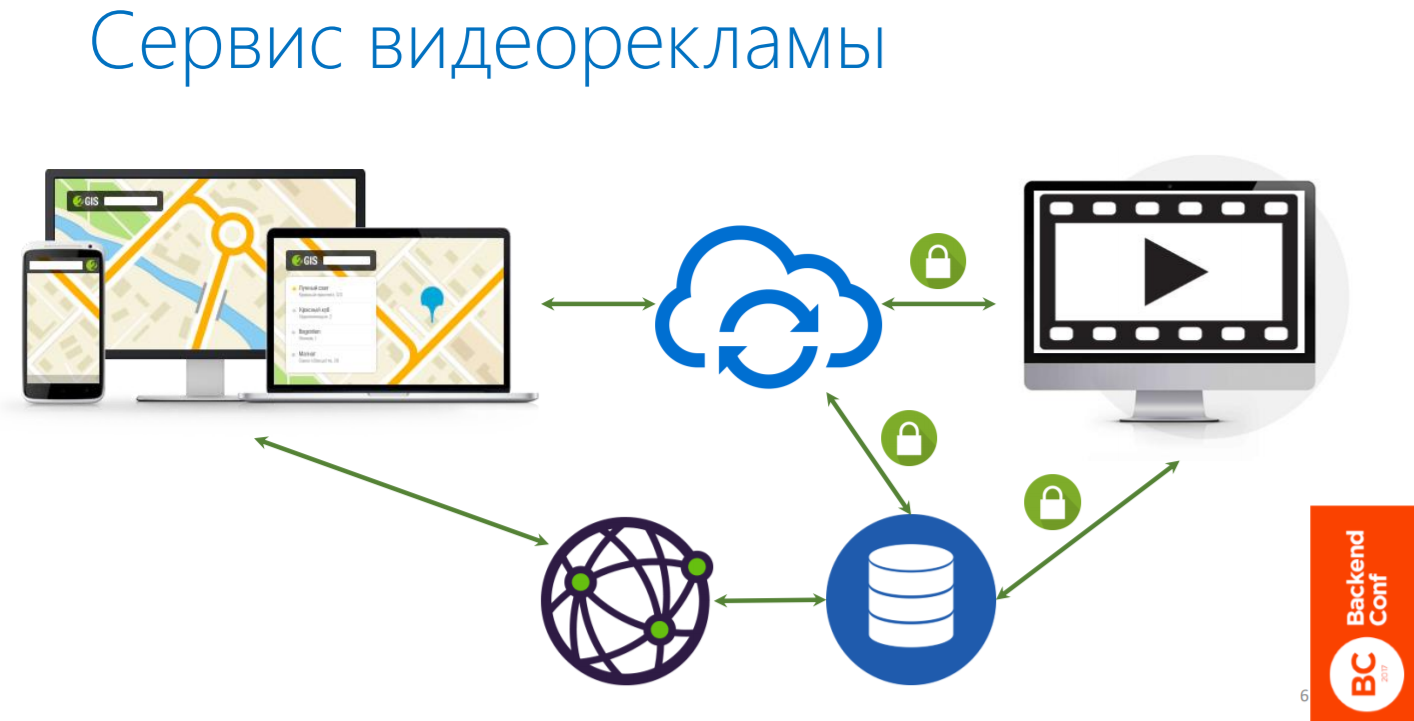

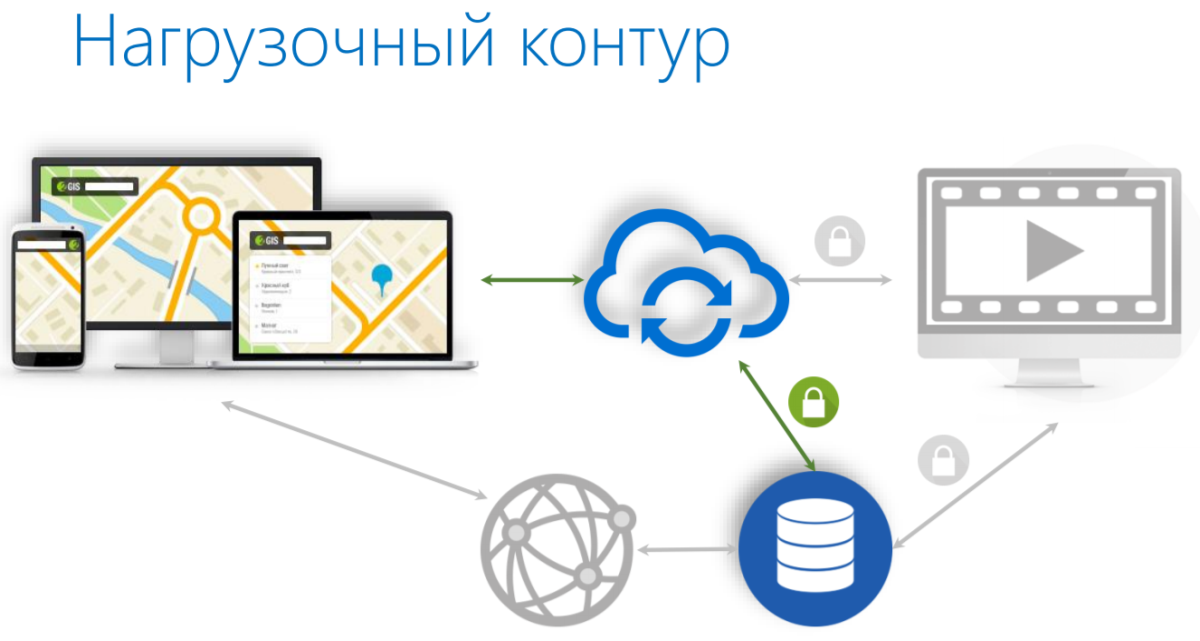

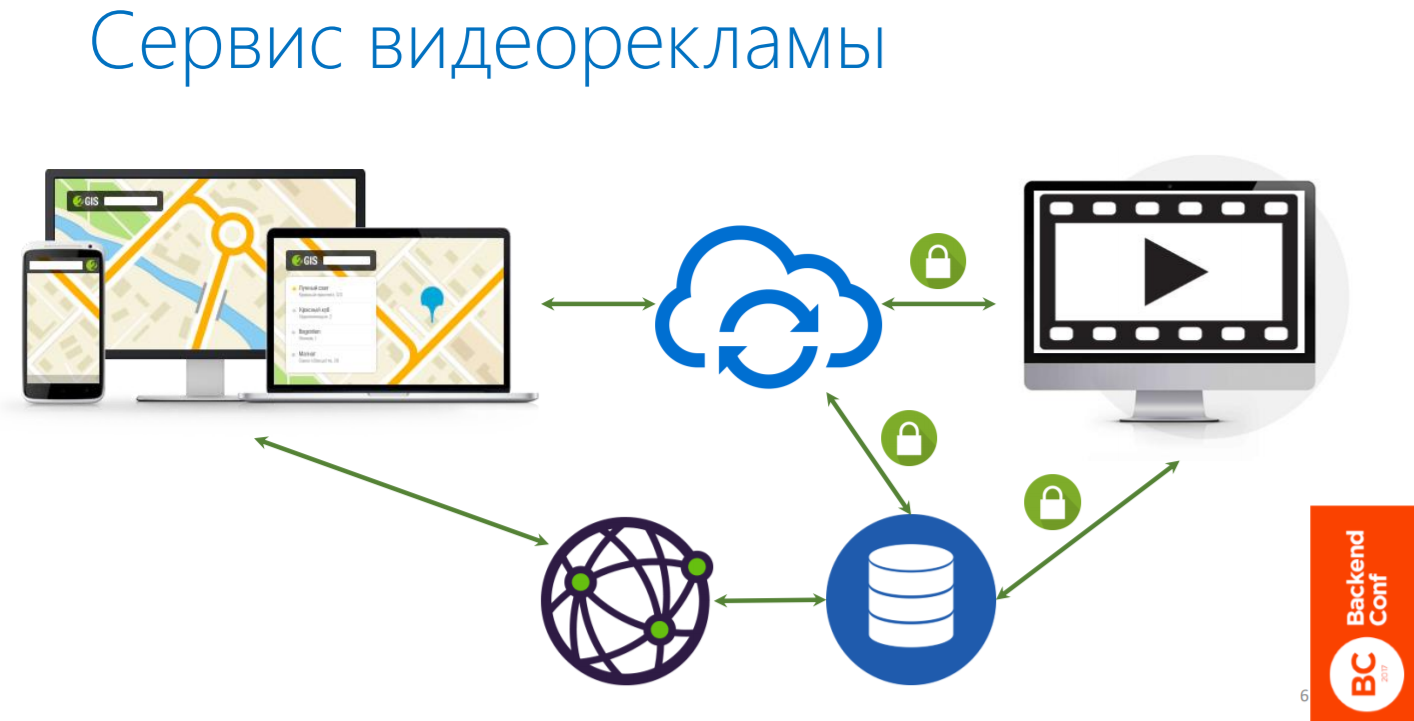

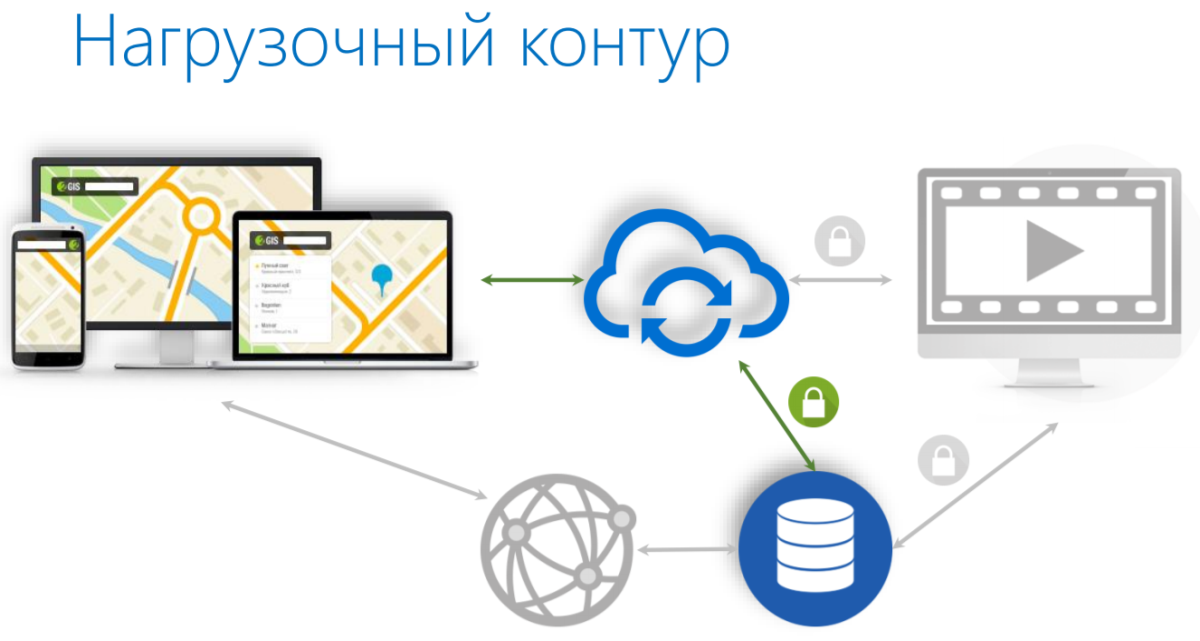

The topology of this service is shown in the diagram. The service is the cloud in the center; it is essentially a backend for the products. When a product comes in and says, “Give me all the information about video advertising for this advertiser,” the service obediently returns it. The information looks like this: the cover image is on such and such a CDN, the video file is available in these resolutions at these locations, the video lasts this long, and so on.

Caution: a lot of information and code.

About the speaker: Denis Ivanov works at 2GIS and is a Microsoft MVP (Most Valuable Professional) in Visual Studio and Development Technologies; his main technology stack is Microsoft's.

So, let's continue. Based on this information, our end products decide which video file to show: in the online version, if the user has a large screen and a fast connection, a higher-resolution video is played; on a mobile device, a lower-resolution one.

To distribute and transcode videos we use a separate API. We will not talk about it today, nor about anything related to processing media files, placing them on the CDN, or their availability. For now our partner does this, but we may start doing it ourselves later.

Our service has another component (marked with locks on the diagram) with its own API, which is closed to external access. It serves internal company processes that let its users, our sales managers, sell this advertising: upload videos and images, choose covers, and fully customize how the ad will look in the end product.

Requirements for the video advertising service:

When we started developing the service, the first requirement was high availability, and worldwide at that. We have users in Russia and the CIS countries, and there are several international projects, even in Chile.

The response time should be as short as possible, as far as the API we depend on allows. We chose a figure of 200 ms simply because we did not want any problems: we need to respond very quickly.

Our development team at 2GIS works on advertising services and builds the company's internal sales system. This project is our first experience of developing a service specifically for public access. As a team we know .NET very well: we have been building applications on this stack for 10 years (I have been with the company for 7).

For this project we chose ASP.NET Core. Because of the high availability requirements already mentioned, we need to host the service exactly where our end products are hosted, that is, on the same platform. Besides being able to use the existing on-premise platform at 2GIS, we also gained several additional advantages:

GitLab CI is, in my opinion, a good solution that helps set up continuous integration and continuous deployment and keep the entire infrastructure as code. All build steps are described in a YAML file.

The company has a CI starting kit, which is open source, by the way. It is based on make: colleagues inside the company have written many scripts that simply make routine tasks easier.

We can use Docker to the full, since this is Linux. Docker also exists on Windows, of course, but the technology is quite new there, and so far there are few examples of production applications.

In addition, on Linux you can build applications on any technology stack, follow a microservice approach, and so on. Some components can be written in .NET and others on different platforms. We used this advantage for load testing our application.

Kubernetes is also available to us simply because this is Linux.

That is how the configuration took shape: ASP.NET Core on Linux. We started studying what Microsoft and the community had to offer. At the beginning of last year everything was already in good shape: .NET Core had been released in version 1, and the libraries we needed were available. So we got started.

We went to our colleagues who run the application hosting platform and asked what requirements an application must meet to be comfortable to operate later. They said it should at least be 12-factor. I'll describe that in more detail.

There is a set of 12 rules. The idea is that if you follow them, your application will be well behaved and isolated, and it can be brought up with all the tooling around Docker and the like.

Of the factors of The Twelve-Factor App, I would like to highlight a few.

Dependencies ship with the application. This means the application must not require any pre-configuration of the environment we deploy it to. For example, it must not expect a particular version of .NET to be installed, as we are used to on Windows. We have to ship the application, the runtime, and the libraries we use together, so that we can simply copy everything and run it.

Configuration through the environment. We all use config files to configure an application at runtime. We would like those files to hold preset values, and to pass additional parameters of the environment itself through environment variables, so that the application runs in a particular mode: dev, staging, or production.

The application code and the config files themselves must not change between environments. Classic .NET on Windows has config transformations (XDT) for this; they are no longer needed here, and everything becomes much simpler.
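As an illustration of configuration through the environment, here is a minimal sketch that layers environment variables over preset defaults with `Microsoft.Extensions.Configuration`. The key names, the default values, and the `VSTORE_` prefix are assumptions made up for this example, not the settings used at 2GIS.

```csharp
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;

public static class AppConfiguration
{
    // Sources added later override earlier ones, so an environment
    // variable wins over a preset value with the same key.
    public static IConfigurationRoot Build()
    {
        return new ConfigurationBuilder()
            // Preset values (hypothetical keys and defaults).
            .AddInMemoryCollection(new Dictionary<string, string>
            {
                ["VideoApi:Endpoint"] = "http://localhost:5000",
                ["VideoApi:TimeoutMs"] = "200"
            })
            // Optional config file shipped with the application.
            .AddJsonFile("appsettings.json", optional: true)
            // The "VSTORE_" prefix is an assumption; it is stripped from
            // the key, and "__" in a variable name maps to ":".
            .AddEnvironmentVariables(prefix: "VSTORE_")
            .Build();
    }
}
```

With this setup, starting the process with `VSTORE_VideoApi__TimeoutMs=500` changes `VideoApi:TimeoutMs` without touching the code or the config file, which is the behavior the factor asks for.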

Another important property that 12-factor brings: we must be able to stop processes and start new ones quickly. If something happens to the application in any environment, we should not have to wait. It is better for the environment itself to kill the application and start it again. In particular, the environment itself may be reconfigured, and that must not affect the application.

Logging to stdout is another important point. All logs that the application produces should be written to the console; the platform on which the application runs then decides what to do with them: put them in a file, send them to ELK (Elasticsearch), or simply show them in the console.

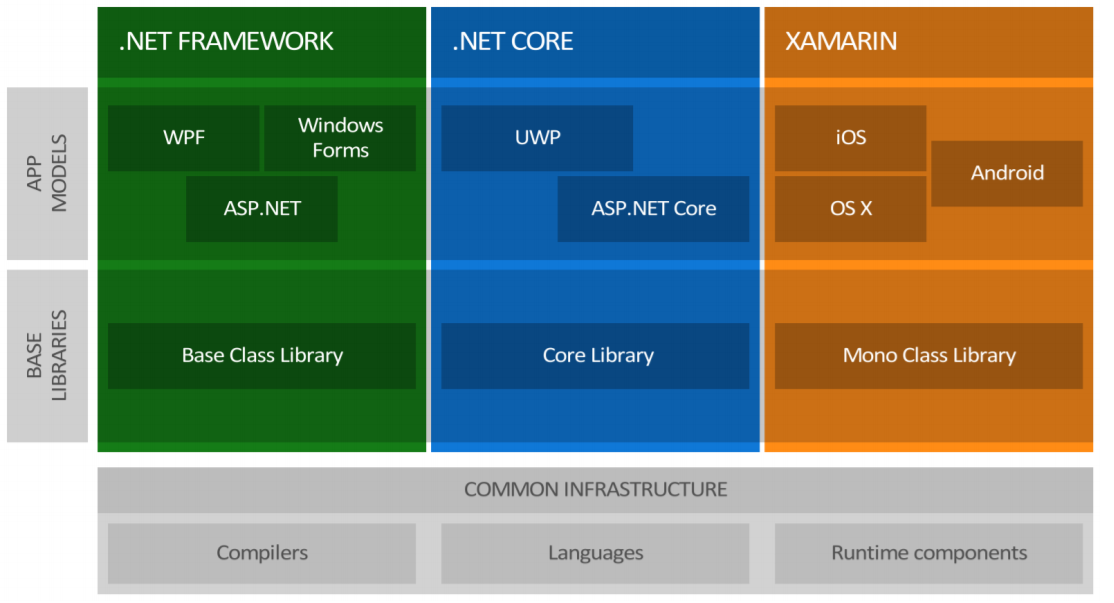

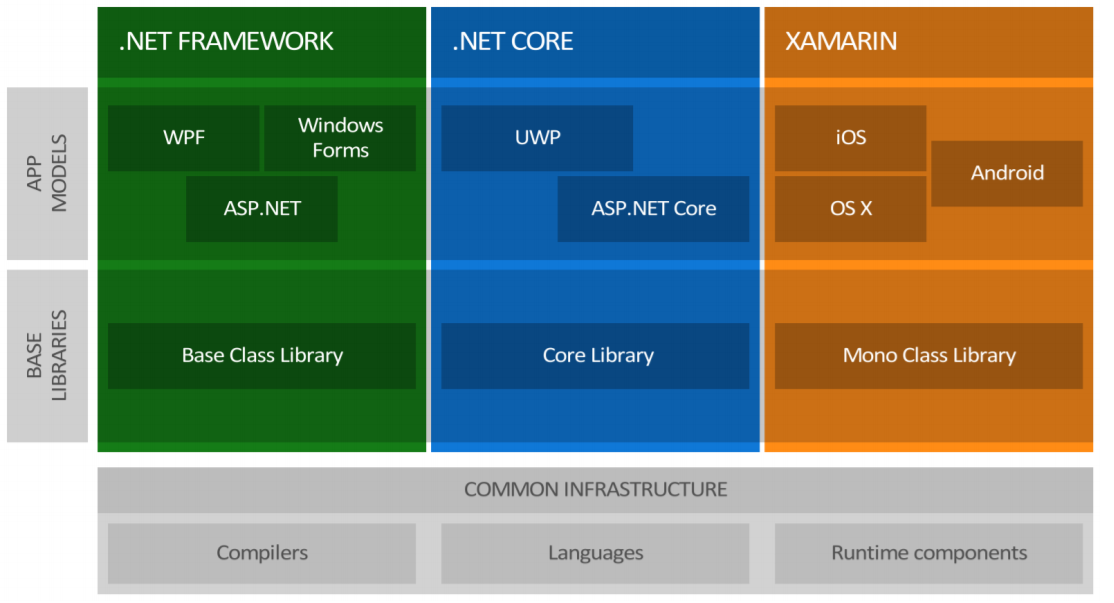

Microsoft has long had the good old .NET on Windows, with WPF, Windows Forms, and ASP.NET, and beneath them the Base Class Library, the runtime, and so on.

On the other hand, there has always been an alternative: take Mono and run code written in C# on other platforms. Microsoft then built another stack, .NET Core, for writing applications that are cross-platform from the start.

They did quite a lot to create a common infrastructure: the compilers, languages, and runtime are all cross-platform.

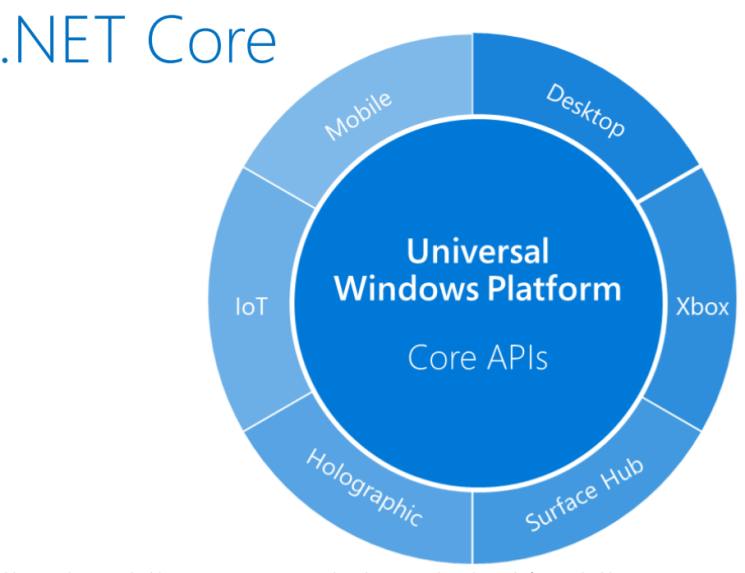

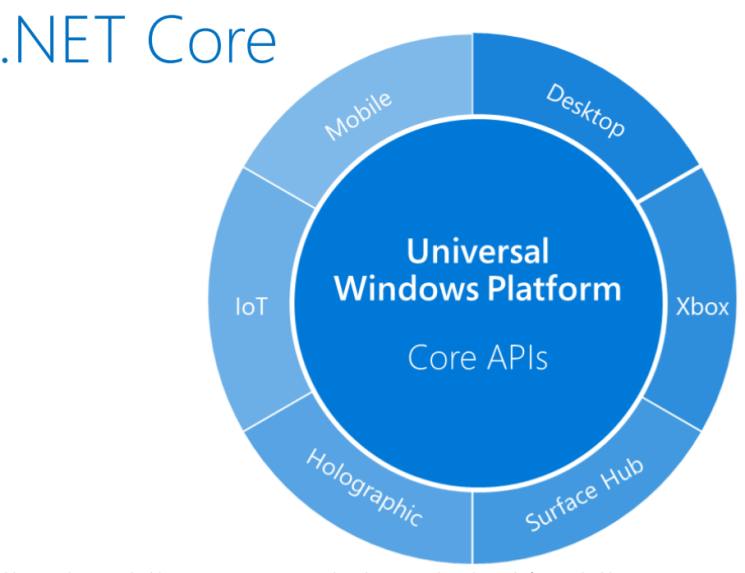

On top of .NET Core there is the UWP block, which is a world of its own. It lets you run .NET applications on different platforms, from IoT devices to the desktop. For example, I have seen people run .NET Core applications on a Raspberry Pi.

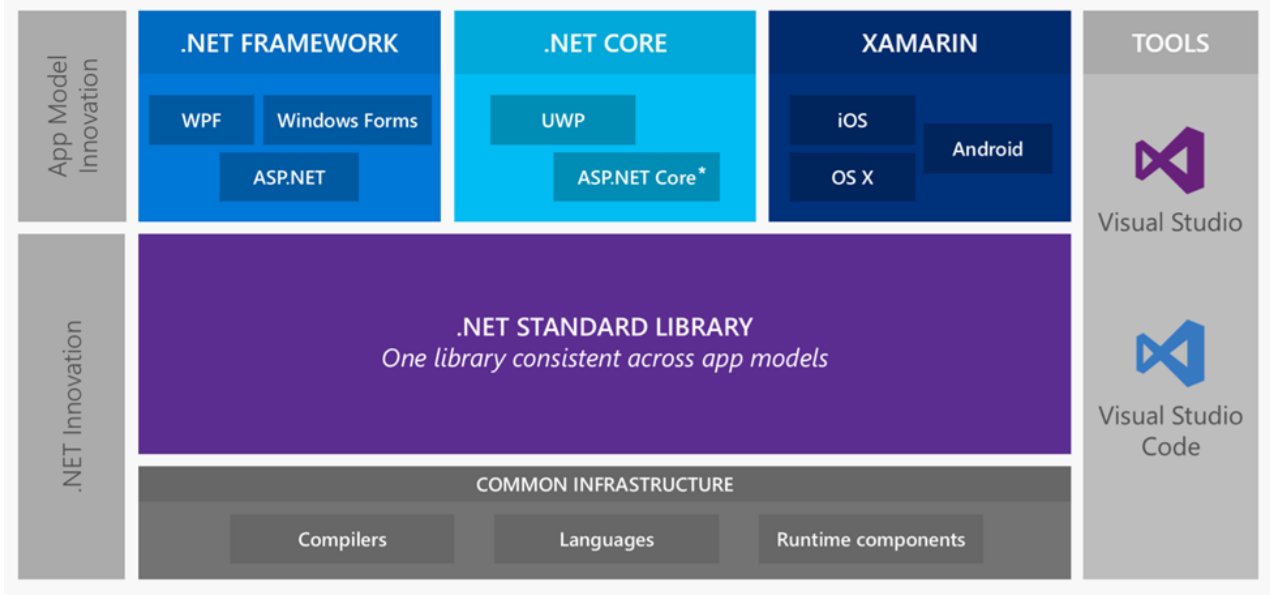

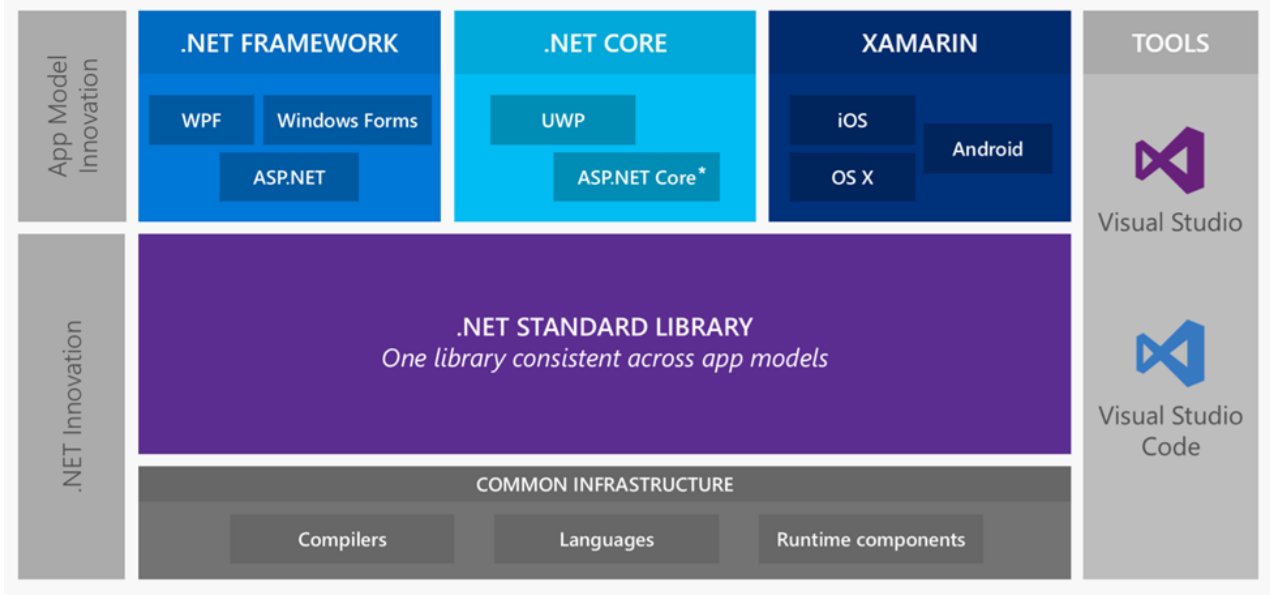

There used to be several base libraries: the Base Class Library, the Core Library, and the Mono Class Library. Living with that was pretty hard. Then the Portable Class Library appeared, and so on. So Microsoft moved towards unifying the APIs that are cross-platform and work on all of these stacks, and created the .NET Standard Library.

Currently this is .NET Standard 2.0, in which practically everything from the old classic .NET works cross-platform.

We will talk about .NET Core and the part of it that concerns ASP.NET Core.

What does .NET Core give us in terms of 12-factor? In fact, 12-factor is implemented here quite well.

There is complete dependency control: we can build the application and fully control its dependencies.

We can configure a .NET application so that the target platform (win10-x64 / ubuntu.16.04-x64 / osx.10.12-x64) is specified explicitly at build time, and the output folder then contains everything the application needs, the runtime and the libraries included, all built for that specific platform. We only need to choose it, be it Windows, Linux, or OS X.

As I said, we need to select a framework (currently netstandard1.6) and include 2 libraries:

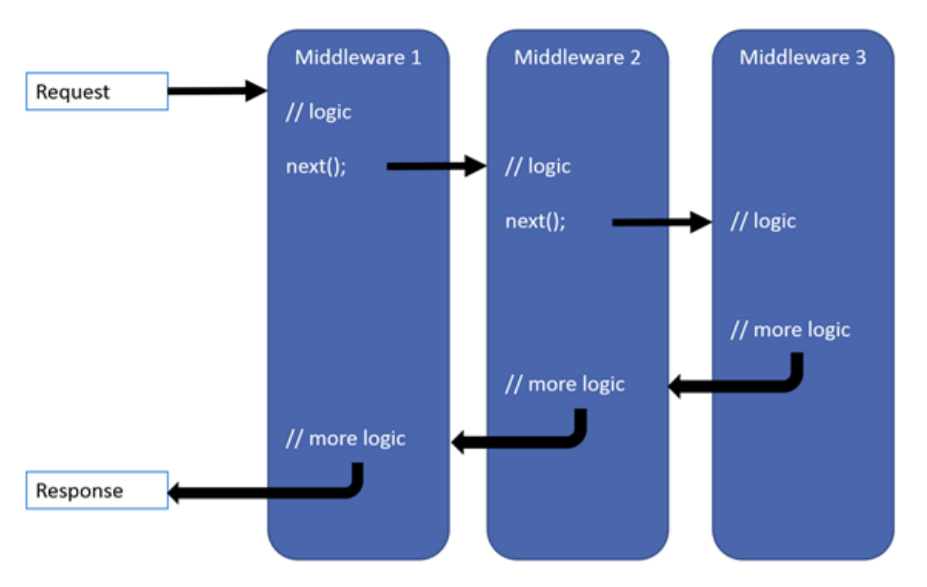

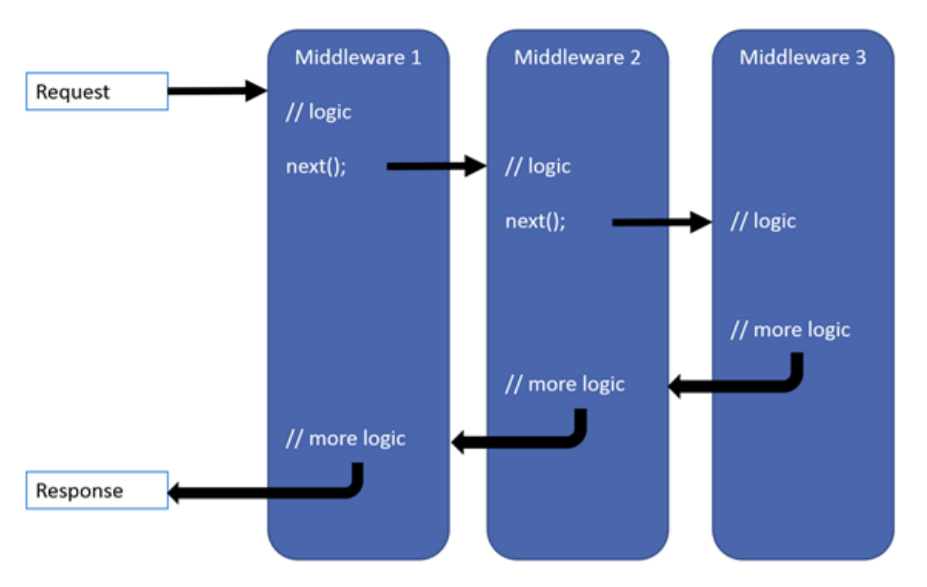

The whole ideology of ASP.NET Core is built around middleware. There are a number of layers: when a request enters the application, the first middleware in its path runs its logic and passes the request to the next one. That one runs, passes it on, and so on until the pipeline ends. Then the response travels back to the user.

As for the practical side of all this:

The simplest example of middleware is the one we wrote in order to host the application in Kubernetes. Let's take a closer look at it.

Its constructor takes RequestDelegate next. This is the next middleware in the pipeline, injected through the constructor so that we can pass the request on to it. This particular middleware is responsible for answering all requests that arrive at /healthcheck.

If the request did not come to this path, we hand control to the next middleware, that is, pass the request on. If it did, we answer 200, meaning everything is fine and the application is working, and output OK. The request goes no further; it ends here.

This is very fast. In our tests all of this code runs in literally 2-3 ms.
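The description above can be sketched roughly as follows. This is a reconstruction from the text, not the speaker's original listing: the class name is hypothetical, and it is an assumption that OK is written to the response body as plain text.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;

public class HealthCheckMiddleware
{
    private static readonly PathString HealthCheckPath = new PathString("/healthcheck");
    private readonly RequestDelegate _next;

    // The framework injects the next middleware in the pipeline here.
    public HealthCheckMiddleware(RequestDelegate next)
    {
        _next = next ?? throw new ArgumentNullException(nameof(next));
    }

    public async Task Invoke(HttpContext context)
    {
        // Any other path: pass the request further down the pipeline.
        if (!context.Request.Path.Equals(HealthCheckPath, StringComparison.OrdinalIgnoreCase))
        {
            await _next(context);
            return;
        }

        // /healthcheck: answer 200 and stop; the next middleware is never called.
        context.Response.StatusCode = StatusCodes.Status200OK;
        context.Response.ContentType = "text/plain";
        await context.Response.WriteAsync("OK");
    }
}
```

A middleware like this would typically be registered early in the pipeline with `app.UseMiddleware<HealthCheckMiddleware>()`, so that health checks are answered before any heavier layers run.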

Here are the basic features of REST services that are usually needed when building such applications:

Logging, if you visualize it, looks like this.

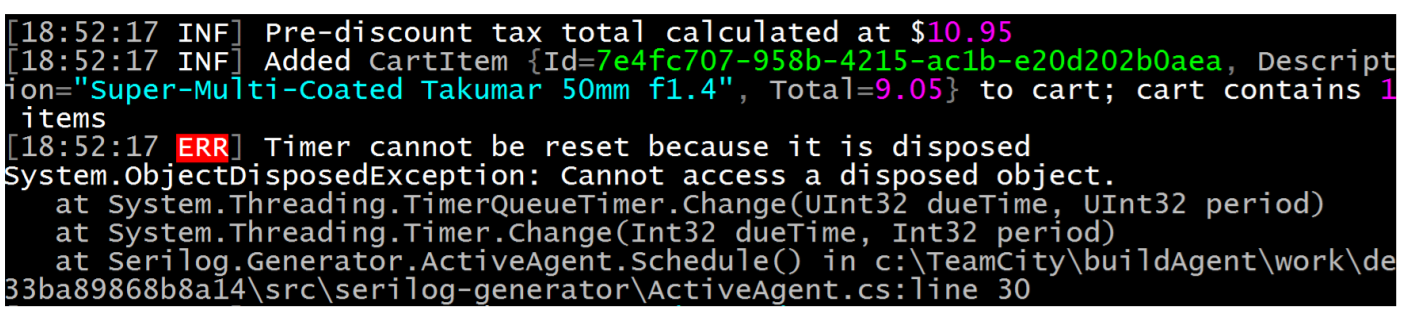

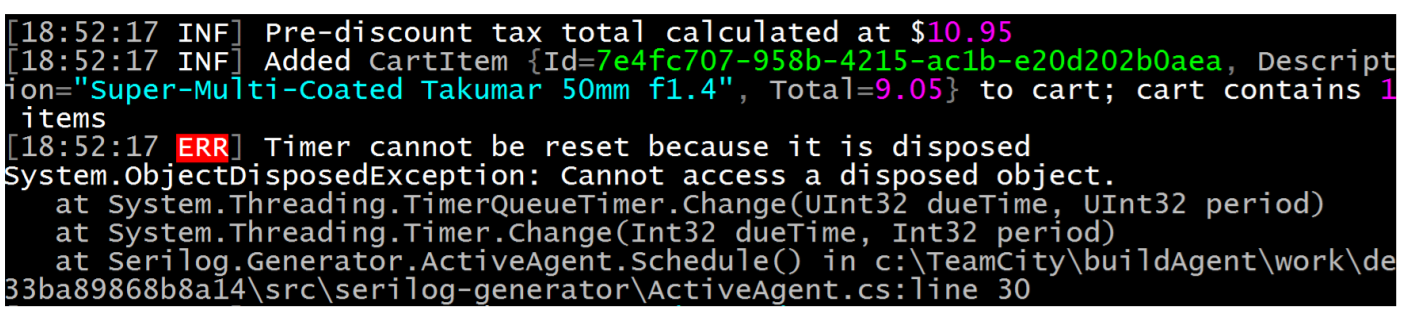

There is a console with multi-colored lines. Looking closer, each message written to the log has a date, a level (error, warning, info), and so on. In addition, some parameters are highlighted in different colors.

Besides the log text, we can attach key/value pairs that can be indexed explicitly. If, say, we want to log everything the application writes while handling a specific request, we can add the request id to the log as a key/value pair. The logs can then be indexed by this request id somewhere, and by filtering on it we can find every message that the request produced. This is what structured logging gives us.

For logging, ASP.NET Core has the excellent Serilog, log4net, and NLog libraries. We settled on Serilog because everything works out of the box, including writing logs in any format, not only to a plain or colorized console but also, if you need it, straight to Elasticsearch or to a file.

Configuration is straightforward. We set the logging level, say that logs should be written to the console, and specify the format in which they appear there. When the application runs in staging or production, we want the logs written as JSON so that the environment can pick them up and index them. Again, all of this works out of the box, and the logs can be enriched declaratively with additional parameters such as ThreadId or RequestId.
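A minimal sketch of such a setup in code, assuming the `Serilog`, `Serilog.Sinks.Console`, and `Serilog.Enrichers.Thread` packages. The talk describes a declarative configuration; the code-based form, the helper name, and the `jsonOutput` switch below are assumptions chosen to keep the example short.

```csharp
using Serilog;
using Serilog.Events;
using Serilog.Formatting.Json;

public static class LoggingSetup
{
    // jsonOutput would be true in staging/production, so that the platform
    // can pick the log lines up from stdout and index them.
    public static ILogger CreateLogger(bool jsonOutput)
    {
        var configuration = new LoggerConfiguration()
            .MinimumLevel.Is(LogEventLevel.Information)
            .Enrich.FromLogContext()   // properties pushed via LogContext
            .Enrich.WithThreadId();    // adds a ThreadId property to every event

        return jsonOutput
            ? configuration.WriteTo.Console(new JsonFormatter()).CreateLogger()
            : configuration.WriteTo.Console().CreateLogger();
    }
}
```

A request id can then travel with every message as a key/value pair, for example `logger.ForContext("RequestId", requestId).Information("Fetched {Count} media files", count)`, which is what makes filtering by request possible later.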

We wanted the API of our service to support versioning out of the box. When you work with public clients and do not know how many there are or what they are, versioning is very useful.

In our case it was essential, because we have different clients. The life cycle of a specific version of the 2GIS mobile application can be six months or even longer; that is, users may not update the application on their device for more than half a year.

Nevertheless, when that particular version of the application calls the backend, we must return data under exactly the contract expected by that device and that version. If over time (and this usually happens) we change something in the API, whether we add data or, worse, remove data from the response, or change the interaction contract altogether, we do not want that to break the old application.

There is an excellent library from Microsoft, ASP.NET API Versioning. It is fully open source, follows the guidelines Microsoft has published for its own services, and lets you version an API in various ways, including SemVer or by date, as Netflix does.

There are also various ways to pass the API version: in the query string, in the URL path, or in a header. All are supported out of the box.

Suppose we have a controller.

First, we declare that the API version the controller provides is 1.0. Next, we define the routes so that requests include the API version.

In this configuration there are two ways to include the API version in a request: in the URL path or in the query string. Clients can choose whichever is convenient, and all requests arrive at the same endpoint.
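A controller of this kind might look like the sketch below. It assumes the `Microsoft.AspNetCore.Mvc.Versioning` package with `services.AddApiVersioning()` called at startup; the controller name, routes, and response shape are hypothetical.

```csharp
using Microsoft.AspNetCore.Mvc;

[ApiVersion("1.0")]
[Route("api/medias")]                        // version in the query string: /api/medias/7?api-version=1.0
[Route("api/{version:apiVersion}/medias")]   // version in the URL path:     /api/1.0/medias/7
public class MediasController : Controller
{
    // Both routes above lead to this single action.
    [HttpGet("{id}")]
    public IActionResult Get(long id)
    {
        return Ok(new { Id = id, ApiVersion = "1.0" });
    }
}
```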

If a second version of the API appears over time, little needs to be done: we go to the same controller and declare that it also serves the second version of the API.

We leave the existing method exactly as it was, changing nothing in it. It keeps returning data to the clients that knew about that version of the API at the time.

We can then add a new controller method and have all requests for API 2.0 routed to it. This way we do not break old clients and still add functionality for new ones.
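Continuing with the same assumptions (the `Microsoft.AspNetCore.Mvc.Versioning` package, hypothetical names and response shapes), adding a second version to the existing controller could be sketched like this:

```csharp
using Microsoft.AspNetCore.Mvc;

[ApiVersion("1.0")]
[ApiVersion("2.0")]                          // the controller now serves both versions
[Route("api/{version:apiVersion}/medias")]
public class MediasController : Controller
{
    // Untouched 1.0 action: old clients keep their contract.
    [HttpGet("{id}")]
    public IActionResult Get(long id)
    {
        return Ok(new { Id = id, ApiVersion = "1.0" });
    }

    // New action that receives only requests for API 2.0.
    [HttpGet("{id}"), MapToApiVersion("2.0")]
    public IActionResult GetV2(long id)
    {
        return Ok(new { Id = id, ApiVersion = "2.0", Formats = new[] { "mp4" } });
    }
}
```

The alternative mentioned in the talk, a separate controller marked only with `[ApiVersion("2.0")]`, keeps the two contracts in different classes at the cost of some duplication.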

It is not necessary to do this in one controller. We can create a new controller and declare it as the controller for API version 2.0.

I also want to mention the Swashbuckle.AspNetCore library, which lets you enable Swagger.

Many have heard of Swagger, so I will only say that by adding Swashbuckle.AspNetCore to an ASP.NET Core application we again get this functionality out of the box, and it works well with API versioning.

That is, when configuring the application we take a VersionDescriptionProvider and simply iterate over its ApiVersionDescriptions with foreach, adding a Swagger document, that is, a formal description of our API, for each version.

After that we add SwaggerUI and register a SwaggerEndpoint for each of these JSON documents, again one per API version. The result is API versioning that Swagger knows about: there is a switch for choosing a version and seeing which methods it has. All of this is in the demo, where you can take a look.
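The configuration described above can be sketched roughly as follows. This is an assumption-laden illustration: it presumes the `Microsoft.AspNetCore.Mvc.Versioning.ApiExplorer` package and a Swashbuckle.AspNetCore version that uses `OpenApiInfo` (older releases use a different info type), and the helper class, title, and group name format are made up for the example.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Mvc.ApiExplorer;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.OpenApi.Models;

public static class SwaggerSetup
{
    // Call from Startup.ConfigureServices, after MVC has been registered.
    public static void AddVersionedSwagger(IServiceCollection services)
    {
        services.AddApiVersioning();
        services.AddVersionedApiExplorer(options => options.GroupNameFormat = "'v'VVV");
        services.AddSwaggerGen(options =>
        {
            // Resolve the provider that knows every API version in the application.
            var provider = services.BuildServiceProvider()
                .GetRequiredService<IApiVersionDescriptionProvider>();

            // One Swagger document per API version.
            foreach (var description in provider.ApiVersionDescriptions)
            {
                options.SwaggerDoc(description.GroupName, new OpenApiInfo
                {
                    Title = "Video advertising API",   // hypothetical title
                    Version = description.ApiVersion.ToString()
                });
            }
        });
    }

    // Call from Startup.Configure; the provider is injected by the framework.
    public static void UseVersionedSwagger(IApplicationBuilder app, IApiVersionDescriptionProvider provider)
    {
        app.UseSwagger();
        app.UseSwaggerUI(options =>
        {
            // One endpoint per document; these become the version switch in the UI.
            foreach (var description in provider.ApiVersionDescriptions)
            {
                options.SwaggerEndpoint(
                    $"/swagger/{description.GroupName}/swagger.json",
                    description.GroupName);
            }
        });
    }
}
```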

Let's move on. We have written an application with all the necessary functionality, and even some business logic. How do we build it all so that it can then be deployed?

In fact, everything is quite simple. As I said, we use GitLab CI. Let's look at one fragment of the GitLab CI file, a single build step.

We have a build step in which we want to build the application. Again, not much is needed for this: the base image provided by Microsoft. This image has everything required: all the tools, the .NET command line, a specific version of .NET itself, the runtime, and so on. The image is quite large, so it must never be dragged into production. It should be used only to build the application.

This is exactly the 12-factor principle that the build and run stages of an application must be separated.

Using this image, we say: “Please restore all the dependencies of this application for exactly the platform we want to build for.”

Next, of course, we want to run the unit tests before building the application. If the unit tests fail, there is no point in building anything, because the application does not work. If everything is fine, we say

We also specify where the resulting artifacts should be placed. In this example, the folder

After we have built the application, we need to build the Docker image, and we build an image for every change, since CI_TAG is available at the GitLab CI level. In effect, this is a step towards continuous deployment.

Next we configure other parameters, take the artifacts produced in the previous step, and run docker build. Once docker build has finished, we have the image locally and can push it to a Docker hub, local or global, wherever we like.

So we have an application built for the OS we need, and an image of that application in the Docker hub.

All that remains is to deploy it, so it is time to talk about Kubernetes.

It is a container orchestration tool that frees you from worrying too much about how to launch containers, bring them up, monitor them, collect their logs, and so on. Our company runs as many as four Kubernetes clusters that we can use.

Kubernetes has a few basic concepts.

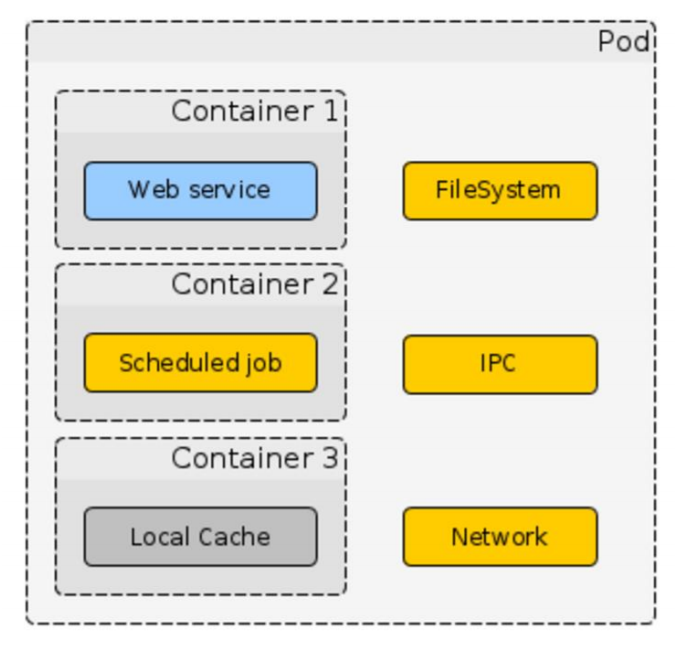

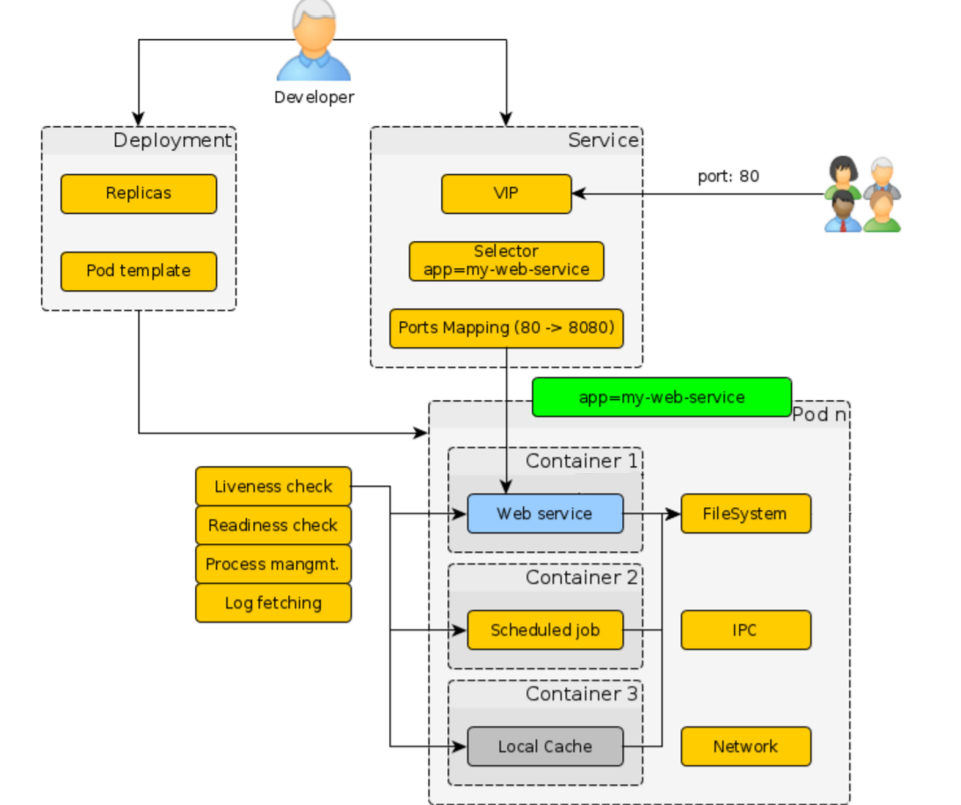

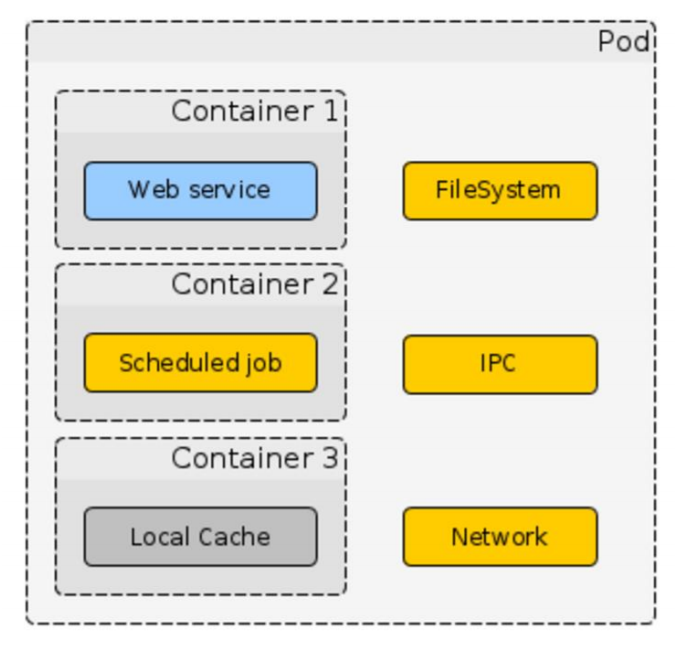

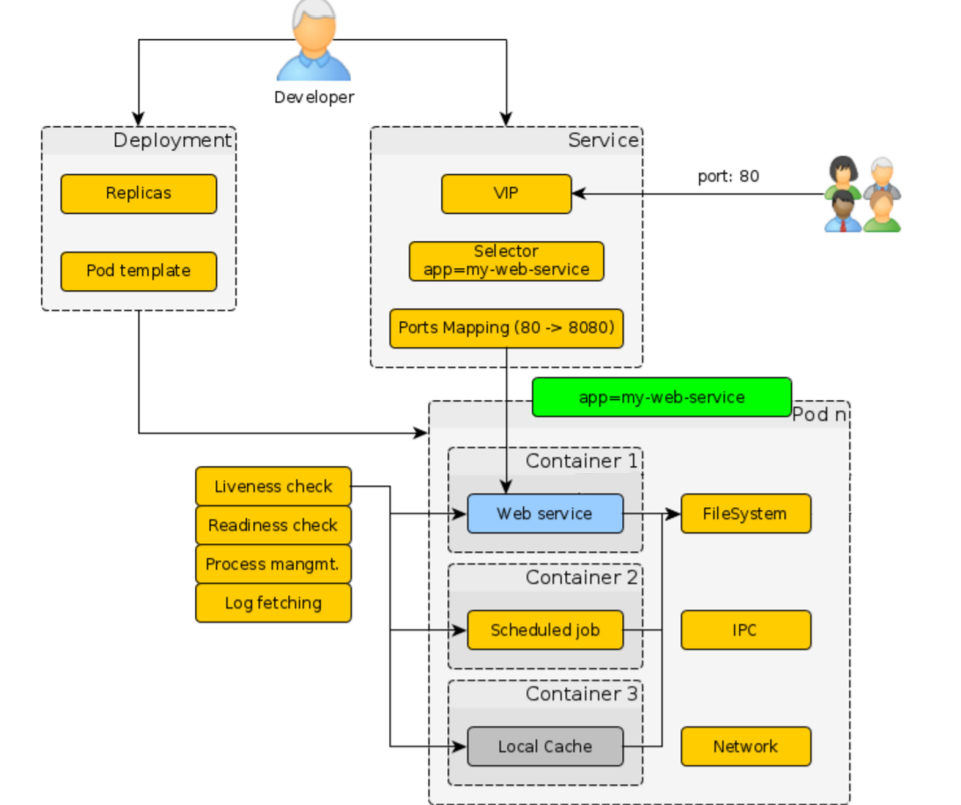

Pod

A Pod is a logical group of containers. It may hold one container or several, and all containers in a Pod are assumed to be tightly coupled and to share some resources.

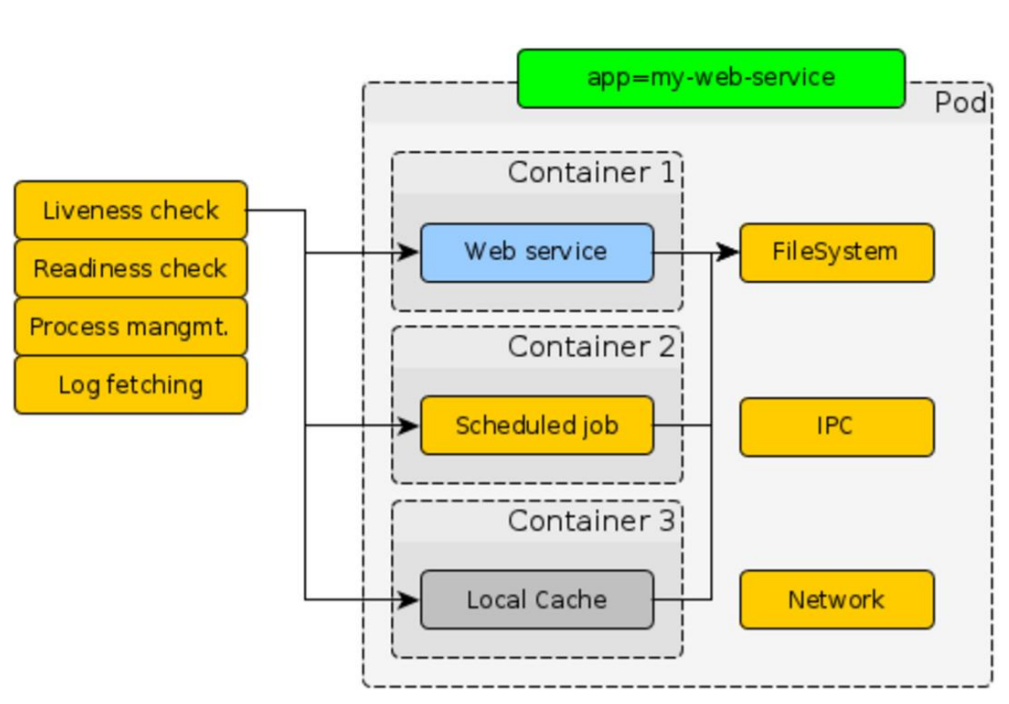

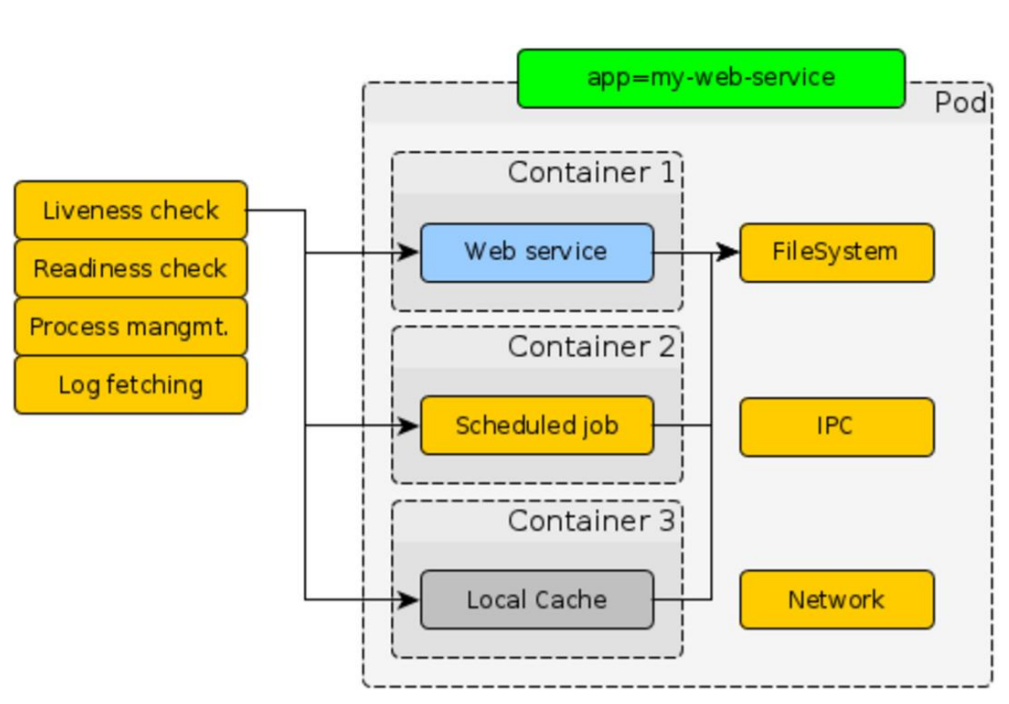

Kubernetes checks our application out of the box: liveness checks, readiness checks, log collection, and so on. All of these container management concerns are handled for us by Kubernetes.

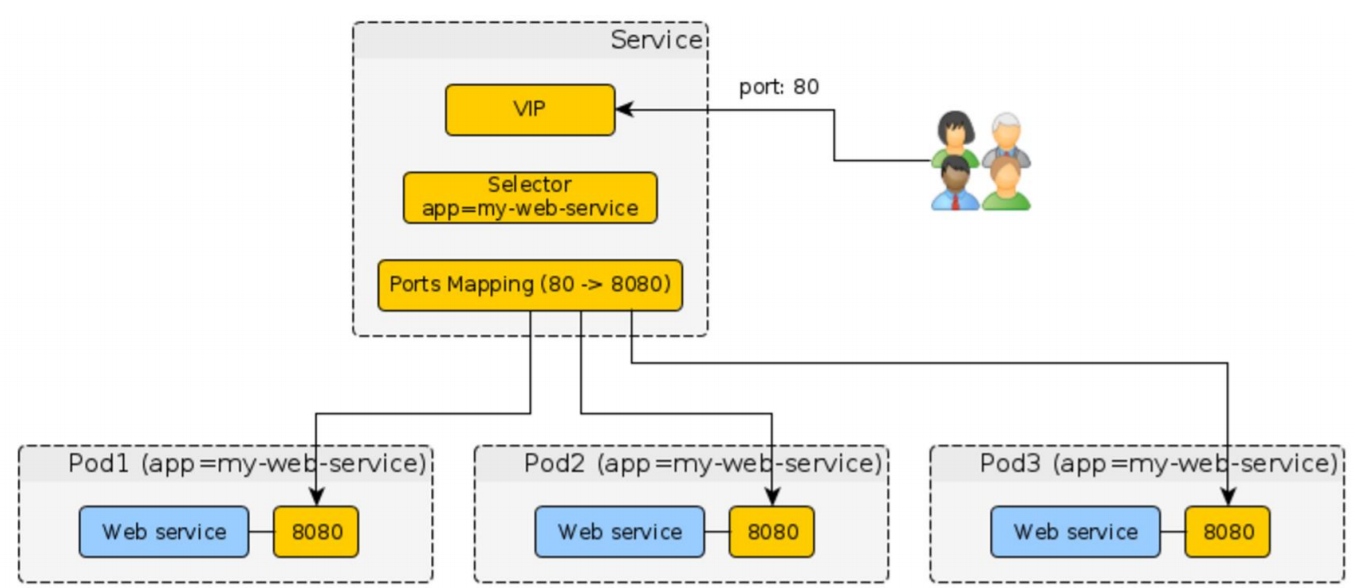

Service

The second thing that is important to know when working with Kubernetes is the Service. It receives external traffic into Kubernetes and says where that traffic should be directed inside the cluster: to which Pods and which containers.

For this, it needs 2 things:

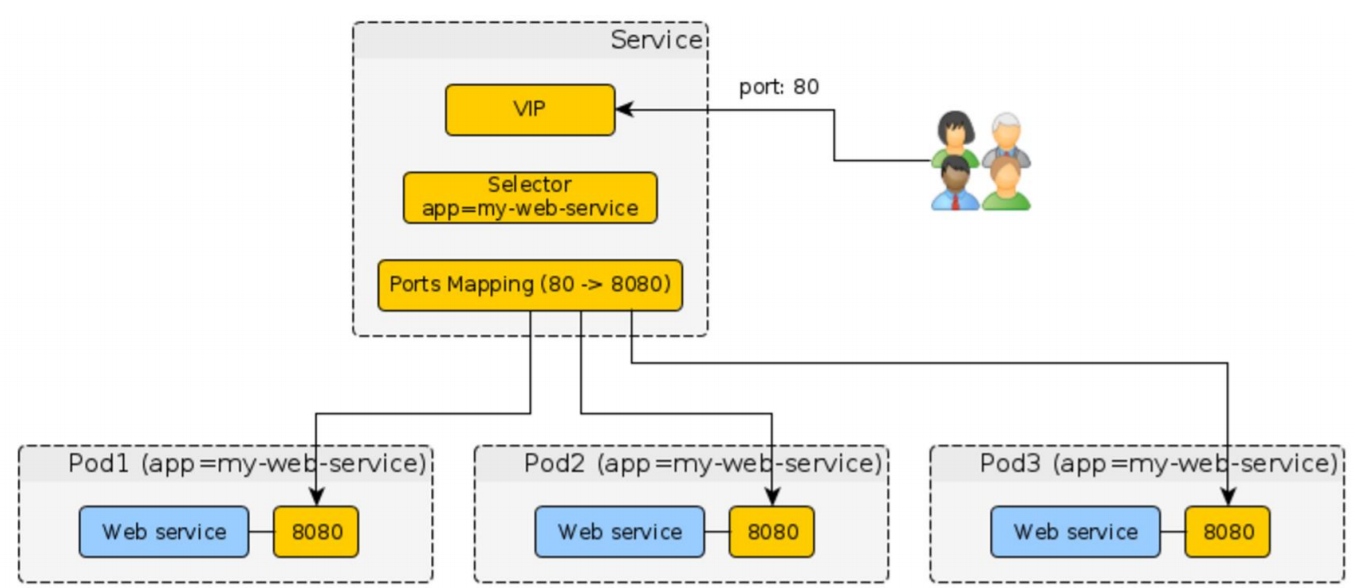

The overall picture is shown in the figure above: users come to the Kubernetes cluster from outside; we receive this traffic and determine from the domain name whom it is for; using the selector we find the matching Pods; and we send the traffic to the corresponding container ports.

Deployment

A Deployment lets you roll out Pods automatically across the entire Kubernetes cluster. The whole picture looks like this.

As developers, we have to write a Deployment, saying how many replicas we need, which template to use, and so on. Then we set all the labels so that the Pods are associated with the Service. Then we write the Service, which stands at the entrance to Kubernetes.

That is all: the user's path is defined.

The only thing Kubernetes itself lacks, and that we had to add ourselves in order to roll out a specific version of the application, is generating Deployment files that reference a specific application version and a specific image version. This is needed to embed everything into the continuous deployment pipeline. I think we are not the only ones who have done this, because Kubernetes does not yet provide such a capability.

The Deployment example above contains:

The Service looks like this.

We say that the external port is 8080 and that the internal one is passed in through the template. And then there is the selector.

That is the whole Service.

Next we need to specify the parameters used to generate the files for Kubernetes with these template engines.

We specify which Kubernetes cluster to use; the application port, 5000;

After all this, we write another build step for GitLab CI.

In it we say that we have this config file, start a container from the image on the Docker hub, and call the k8s-handle utility in it.

This is enough to deploy any application on Kubernetes, not just an ASP.NET Core one. This is how it works at 2GIS, and it causes no particular problems.

By the way, k8s-handle is also open source.

Now we need to make sure that the application we have built actually holds the load, is fault tolerant, and responds within the required time.

We are developers and do not know much about testing, but 2GIS has a separate load testing team. We came to them and said:

The testers wrote tests for us in Scala. They use Gatling for load testing.

Everything is simple here. The test declares some asserts: we want the number of requests per second to be above or below a given value, we want no failed requests, and we want the response time to stay within a given limit.

After that, we say how to conduct the load test:

Finally, we specify that there is a test with such and such steps, over HTTP, with a run time of 180 seconds in our case, and such and such asserts.

This is one example of a load test.

After that, they wrote a GitLab CI template for us.

Here is the code for configuring the tool, but the tool itself (

We ran the test in 2 variants:

In the first iteration our results were not very good, not what we expected. So we turned to performance.

The first thing that comes to mind for increasing the number of requests we can handle and reducing response time is caching. If there are resources we can keep in a cache, why fetch them again each time?

For this there is the good old ResponseCache attribute from ASP.NET, which has gained additional functionality in ASP.NET Core.

If we simply apply ResponseCache and specify its Duration, we get client-side caching by default. That is, the client stores the data it was given and does not send us further requests.

ASP.NET Core also adds server-side caching. The Response Caching Middleware gives good control over server caching, that is, caching service responses directly in process memory. We can also specify how the cached responses are varied. In our case, with versioning, it makes sense to cache a separate response for each API version.
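A minimal sketch of both kinds of caching, assuming the response caching middleware package is available. The controller, the 60-second duration, and varying by the `api-version` query key are illustrative assumptions, not the values used in the talk.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.DependencyInjection;

public static class CachingSetup
{
    // Call from Startup.ConfigureServices: registers the server-side cache.
    public static void AddCaching(IServiceCollection services) => services.AddResponseCaching();

    // Call from Startup.Configure, before MVC, so cached responses are served early.
    public static void UseCaching(IApplicationBuilder app) => app.UseResponseCaching();
}

[Route("api/medias")]
public class CachedMediasController : Controller
{
    // Duration sets Cache-Control: max-age for clients; with the middleware
    // enabled the response is also cached on the server, and VaryByQueryKeys
    // keeps a separate cached entry per api-version value.
    [HttpGet("{id}")]
    [ResponseCache(Duration = 60, VaryByQueryKeys = new[] { "api-version" })]
    public IActionResult Get(long id)
    {
        return Ok(new { Id = id });
    }
}
```

Note that `VaryByQueryKeys` only takes effect when the Response Caching Middleware is in the pipeline; without it, the attribute only controls the caching headers sent to the client.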

After we enabled both server-side and client-side caching and ran the test, the results were skewed: suddenly everything came back very quickly. So on the load testing side we turned client caching off, to keep things closer to real conditions, where we have many different clients.

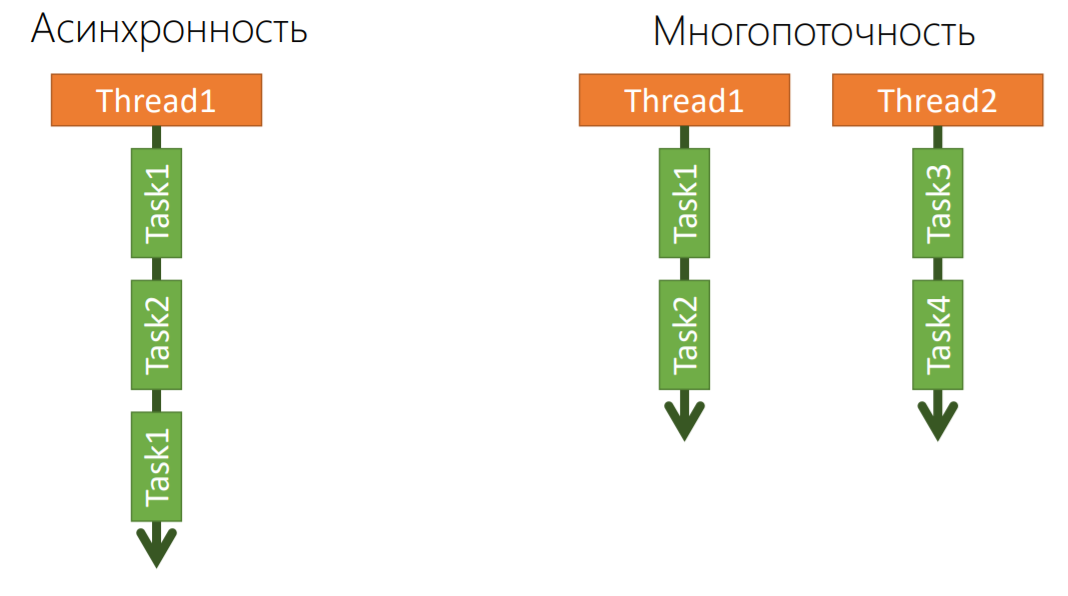

The second thing that comes to mind when talking about performance is working with threads. We have .NET, where everything is fine as far as threads go, so why not use it?

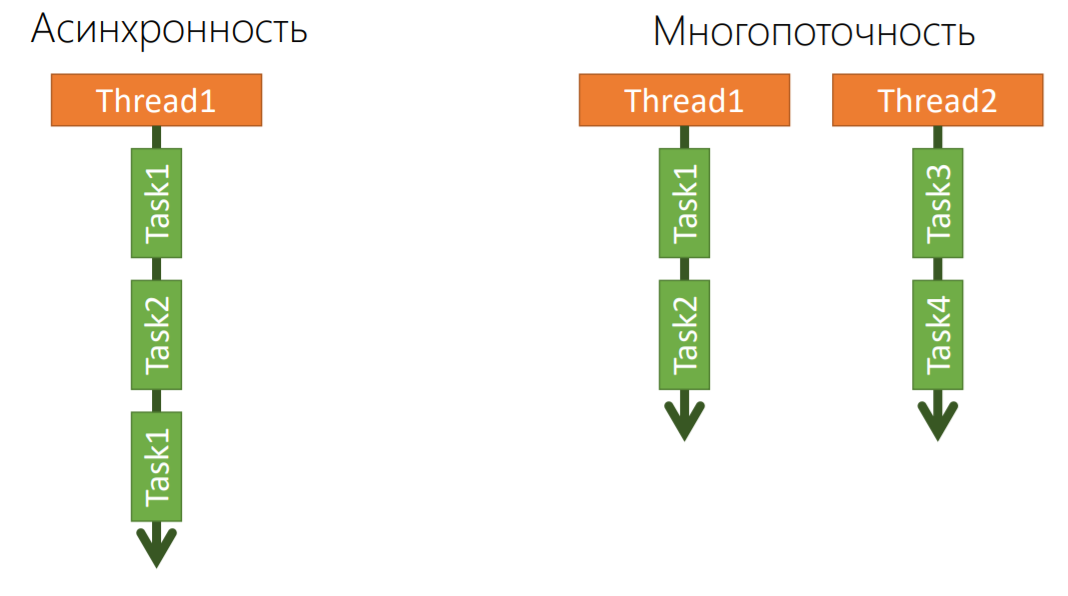

There are 2 things here, asynchrony and multithreading, and they are not the same.

Asynchrony is when there is one thread, one OS resource, on which we can perform a series of tasks in turn. If Task1 has sent a request to the database and is waiting, we can return the thread to the pool so that it can process the next client request (Task2). If that one is also waiting, we can switch the thread's context and continue Task1, provided the database response has arrived, and so on.

That is, we can run several asynchronous tasks on one physical OS resource. async/await in C# is exactly about asynchrony.

Multithreading is when there are many threads, many OS resources, and nothing prevents us from running some processes in parallel; nor does anything prevent those parallel processes from being asynchronous internally.

So async/await is about not only asynchrony but also multithreading; you just need to use it correctly.

Here is a simple case where .NET multithreading can be used right out of the box.

Suppose a service returns data as a collection, and for each item we then have to call other services in a loop to get additional data.

This is an example straight from our lives. We have video ads that can exist in several formats, and we need to find out the resolution, bitrate, and so on for each of those formats.

Our first request to the API is: “Please tell us which media files you have.” It replies with a collection. Then, for each item, we request detailed information.

When developing this we did not think much about performance. Our load tests showed that our service's response time was about a second, which is not very good.

So we rewrote it into a correct version.

Unfortunately, it is not always possible to write correct, efficient multithreaded code without deadlocks on the first try.

Here we do almost the same thing, but we now launch the API requests in parallel, and asynchronously as well.

The output is a collection of Tasks, and we start one more that waits for all of them to complete. The detail requests are thereby sped up by a factor equal to the number of video files.

If, say, each video ad has 3 file resolutions, the sequential version makes 4 requests one after another: 1 to get all the metadata and then another 3 (or 4, or 5) for the details. In the parallel version the detail requests run at the same time, so together they take about as long as a single request, and we get a serious performance gain.
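The difference between the two versions can be sketched as follows. `FakeMediaApi` is a hypothetical stand-in for the partner API, with `Task.Delay` simulating network latency; the real client, its method names, and the data shape are not given in the talk.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public class MediaInfo
{
    public MediaInfo(string fileId, int bitrate) { FileId = fileId; Bitrate = bitrate; }
    public string FileId { get; }
    public int Bitrate { get; }
}

// Hypothetical API client; each call takes about 100 ms.
public class FakeMediaApi
{
    public async Task<IReadOnlyList<string>> GetFileIdsAsync(long adId)
    {
        await Task.Delay(100);
        return new[] { $"{adId}-360p", $"{adId}-720p", $"{adId}-1080p" };
    }

    public async Task<MediaInfo> GetFileInfoAsync(string fileId)
    {
        await Task.Delay(100);
        return new MediaInfo(fileId, bitrate: 1000);
    }
}

public static class MediaLoader
{
    // First version: each detail request starts only after the previous one has finished.
    public static async Task<List<MediaInfo>> LoadSequentiallyAsync(FakeMediaApi api, long adId)
    {
        var result = new List<MediaInfo>();
        foreach (var fileId in await api.GetFileIdsAsync(adId))
        {
            result.Add(await api.GetFileInfoAsync(fileId));
        }
        return result;
    }

    // Rewritten version: start all detail requests, then await them together.
    public static async Task<MediaInfo[]> LoadInParallelAsync(FakeMediaApi api, long adId)
    {
        var fileIds = await api.GetFileIdsAsync(adId);
        var tasks = fileIds.Select(fileId => api.GetFileInfoAsync(fileId)).ToList();
        return await Task.WhenAll(tasks);   // results keep the order of the tasks
    }
}
```

With three files, the sequential version waits for four simulated calls in a row, while the parallel one waits for the metadata call plus roughly one detail call. Awaiting `Task.WhenAll` rather than blocking on `.Result` or `.Wait()` also avoids one common source of the deadlocks mentioned above.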

After we implemented this, load testing showed different results.

We realized that we have certain memory and CPU limits, dictated on the one hand by the .NET runtime and on the other by the code we wrote. These are specific figures from production:

Based on our experience, we can say that .NET Core can now be used in production. We had no freezes, no obscure issues with the garbage collector, no problems with thread orchestration, and so on. Everything we are used to in .NET has the same quality in ASP.NET Core today, and judging by our experience you should not be afraid to use it.

We had no problems related to Linux. If problems do arise, they are most likely your own. We did have deadlocks in our use of threads, but those were caused by the programmers, not by Linux.

As I have already shown, Docker and Kubernetes greatly simplify life, both for developing .NET applications and for application development in general, and they make it possible to use different technology stacks.

The last thing I would like to say is that applications need to be optimized. Everything works out of the box, but you still have to think in order to avoid surprising effects.

Contacts:

Code and presentation here .

E-mail: denis@ivanovdenis.ru

About service

Content

- Briefly about the service

- On-premise platform

- .NET Core, ASP.NET Core and basic features

- Build

- Deploy

- Stress Testing

- Ways to improve performance, including:

Requirements for video advertising service:

- 99.99% Worldwide Availability

- 200ms response time

Why Linux

- Gitlab ci

In my opinion, this is a good solution that helps launch the processes of continuous integration and continuous deployment and store the entire infrastructure as code. There is a YAML file in which you can describe all the steps of the assembly.

- CI starting kit based on make

The company has a CI starting kit, which, by the way, is open source. Colleagues inside the company have written many make-based scripts that simply make routine tasks easier.

- Docker Hub & Docker images

We can use Docker to the full, since this is Linux. Docker does exist on Windows too, but the technology is quite new, and so far there are few examples of production applications.

- Components on any technology stack

In addition, on Linux you can build your applications on any technology stack, with a microservice approach, and so on. We can write some components in .NET and others on other platforms. We used this advantage to load test our application.

- Kubernetes

Kubernetes is also available to us simply because we are on Linux.

So the following configuration took shape: ASP.NET Core on Linux. We began to study what Microsoft and the community offer for it. By the beginning of last year everything was already in good shape: .NET Core had been released in version 1, and the libraries we needed were there too. So we got started.

The Twelve-Factor App

We went to our colleagues who run the application hosting platform and asked what requirements an application must meet so that it is easy to operate later. They said that it should at least be 12-factor. I will tell you about it in more detail.

There is a set of 12 rules. It is believed that if you follow them, your application will be good and isolated, and it can be run with all the tooling around Docker and so on.

The Twelve-Factor App:

- One application - one repository;

- Dependencies - along with the application;

- Configuration through the environment;

- Services used as resources;

- The build, image creation and execution phases are separated;

- Services are separate stateless processes;

- Port binding;

- Scaling through processes;

- Processes start and stop quickly;

- Environments are as similar as possible;

- Logging to stdout;

- Administrative processes.

I would like to highlight some of them.

Dependencies - with the application. This means that the application should not require any prior setup of the environment we deploy it to. For example, it should not need a particular version of .NET to be installed, as we are used to on Windows. We have to ship the application, the runtime, and the libraries we use together, so that we can simply copy it and run it.

Configuration through the environment. We all use config files to configure the application at runtime. We would like to keep some preset values in these config files and pass additional parameters of the environment itself through environment variables, so that the application runs in a certain mode: a dev, staging, or production environment.

But neither the application code nor the config files should change between environments. Classic .NET on Windows has config transformations (XDT transformations); they are no longer needed here, and everything becomes much simpler.
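A minimal sketch of this idea in C#, assuming a hypothetical helper class `EnvConfig` that is not part of the talk: a preset default plays the role of the value from the config file, and an environment variable overrides it when it is set. `ASPNETCORE_ENVIRONMENT` is the variable that ASP.NET Core itself reads, and the fallback to `Production` mirrors the framework default.

```csharp
using System;

// Hypothetical helper for illustration; the name EnvConfig is an assumption.
public static class EnvConfig
{
    // Returns the environment variable if it is set and non-empty,
    // otherwise the preset default (the value a config file would carry).
    public static string Get(string name, string defaultValue)
    {
        var value = Environment.GetEnvironmentVariable(name);
        return string.IsNullOrEmpty(value) ? defaultValue : value;
    }

    // The same binary runs everywhere; only the variable differs per environment.
    public static string EnvironmentName() => Get("ASPNETCORE_ENVIRONMENT", "Production");
}
```

The application code stays the same in dev, staging, and production; only the variables passed to the process change.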

Another important feature that 12-factor introduces: we must be able to stop processes and start new ones quickly. If the application is running in some environment and suddenly something happens to it, we should not wait. It is better for the environment itself to kill our application and start it again. In particular, the environment itself may be reconfigured, and this should not affect the application.

Logging to stdout is another important thing. All the logs that our application produces should go to the console, and then the platform the application runs on decides what to do with them: put them in a file, send them to ELK (Elasticsearch), or simply show them in the console.

.NET Core

Microsoft has long had the good old .NET on Windows, with WPF, Windows Forms, and ASP.NET, and underneath them the Base Class Library, the runtime, and so on.

On the other hand, there has always been an alternative approach: take Mono and run code written in C# on other platforms. Microsoft then made another stack called .NET Core, where they implemented the ability to write applications that are cross-platform from the start.

They have done quite a lot to create a common infrastructure: the compilers, the languages, and the runtime are all cross-platform.

On top of .NET Core there is the UWP block, which is a separate world. It allows you to run .NET applications on different platforms, from IoT devices to the desktop. For example, I have seen people running .NET Core applications on a Raspberry Pi.

There used to be several base libraries: Base Class Library, Core Library, and Mono Class Library. Living with that was pretty hard. Then the Portable Class Library appeared, and so on. So Microsoft moved toward unifying the APIs that are cross-platform and work on all these stacks, and made the .NET Standard Library.

Currently this is .NET Standard version 2.0, in which practically everything that was in the old classic .NET works cross-platform.

We will talk about .NET Core and the part of it that concerns ASP.NET Core.

.NET Core Self-contained deployment

What does .NET Core give us in terms of 12-factor? In fact, 12-factor is implemented here quite well.

There is complete dependency control, i.e. we can build the application and fully control its dependencies.

We can configure a .NET application so that the target platform is specified explicitly during the build (win10-x64 / ubuntu.16.04-x64 / osx.10.12-x64), and the final artifact folder then contains everything the application needs, the runtime and the libraries included, built for that specific platform. All we have to do is choose it, be it Windows, Linux, or OSX.

As I said before, we need to select a framework (currently netstandard1.6) and include 2 libraries:

- Microsoft.NETCore.Runtime.CoreCLR in order to execute application code.

- Microsoft.NETCore.DotNetHostPolicy in order to run the application on the target system.

How does ASP.NET Core work?

The whole ideology of ASP.NET Core is built on middleware. There are a number of layers. When a request comes into the application, the first middleware stands in its path, executes its logic, and passes the request on to the next one. That one executes and passes it on in turn, and so on until the pipeline ends. Then the response travels back to the user.

In practical terms:

- At the first stage, we can insert the Exception handler so that all exceptions are fully tracked, logged, and so on.

- The second middleware can be a security module.

- The third may be the MVC framework, which handles routing and returns either data (in the case of REST services) or a page.

This is the simplest example of middleware; we wrote it in order to host the application in Kubernetes. Let's take a closer look at it.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.Net.Http.Headers;

public sealed class HealthCheckMiddleware
{
    private const string Path = "/healthcheck";
    private readonly RequestDelegate _next;

    public HealthCheckMiddleware(RequestDelegate next)
    {
        _next = next;
    }

    public async Task Invoke(HttpContext context)
    {
        if (!context.Request.Path.Equals(Path, StringComparison.OrdinalIgnoreCase))
        {
            // Not our path: pass the request on to the next middleware.
            await _next(context);
        }
        else
        {
            // Answer the probe directly; the pipeline stops here.
            context.Response.ContentType = "text/plain";
            context.Response.StatusCode = 200;
            context.Response.Headers.Add(HeaderNames.Connection, "close");
            await context.Response.WriteAsync("OK");
        }
    }
}
```

The constructor takes RequestDelegate next. This is the next middleware in the pipeline, injected through the constructor so that we can pass the request on to it. This particular middleware is responsible for answering every request that comes to /healthcheck.

If the request came to a different path, we hand control to the next middleware, that is, forward the request. If it did come to this path, we answer 200, meaning everything is fine and the application works, and write OK to the response. The request goes no further; this is where it ends.

This is a very fast thing. In our tests, all this code works literally in 2-3 ms.
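The layering described above can also be sketched without ASP.NET Core at all. The following is only an illustration of the principle: `DemoContext`, `DemoDelegate`, and `DemoPipeline` are hypothetical stand-ins for `HttpContext`, `RequestDelegate`, and the framework's pipeline builder, and they are not how the framework is actually implemented.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-in for HttpContext, for illustration only.
public sealed class DemoContext
{
    public string Path { get; set; } = "/";
    public int StatusCode { get; set; } = 404;
    public List<string> Trace { get; } = new List<string>();
}

// Hypothetical stand-in for RequestDelegate.
public delegate Task DemoDelegate(DemoContext context);

public static class DemoPipeline
{
    // Composes middleware so that the first element of the list runs first.
    public static DemoDelegate Build(IReadOnlyList<Func<DemoDelegate, DemoDelegate>> middleware)
    {
        // Terminal step: nothing handled the request, so the default 404 stays.
        DemoDelegate app = context => Task.CompletedTask;
        for (var i = middleware.Count - 1; i >= 0; i--)
        {
            app = middleware[i](app);
        }
        return app;
    }

    // Runs logic before and after the rest of the pipeline.
    public static DemoDelegate Logging(DemoDelegate next) => async context =>
    {
        context.Trace.Add("log:before");
        await next(context);
        context.Trace.Add("log:after");
    };

    // Short-circuits on /healthcheck, otherwise forwards to the next layer.
    public static DemoDelegate HealthCheck(DemoDelegate next) => async context =>
    {
        if (string.Equals(context.Path, "/healthcheck", StringComparison.OrdinalIgnoreCase))
        {
            context.StatusCode = 200;
            context.Trace.Add("healthcheck:handled");
            return;
        }
        await next(context);
    };
}
```

With a pipeline built from `Logging` followed by `HealthCheck`, a request to /healthcheck is answered by the second layer and never reaches the terminal step, while the first layer still gets to run its code on the way back.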

Basic features of REST services

Here are the basic features of the REST services that are usually needed when we make such applications:

- Logging is needed by everyone, and it would be great for it to be structured.

- API versioning (SemVer, DateTime) - it would be nice if it was right out of the box.

- It is also great to have a formal description of the API (Swagger).

Structured logging

If you visualize it, it looks like this.

There is a console with multi-colored lines. If you look more closely, each message in the log has a date, a level (error, warning, info), and so on. But in addition there are parameters highlighted in different colors.

Besides the log text, we can have key/value pairs that can be indexed explicitly. Say we want to log everything our application writes while handling a specific request. We can add the request id to each log entry as a key/value pair, and the log storage can then index the entries by this request id. In the end, simply by filtering the logs by this request id, we find all the messages that the request generated. This is what structured logging gives us.
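To make the idea concrete, here is a rough sketch in C# of a log event rendered as one JSON line with key/value pairs next to the message. The class name `StructuredLogSketch` and the field names (`timestamp`, `level`, `message`) are illustrative assumptions, not the output format of any particular logging library.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical sketch; real logging libraries do much more than this.
public static class StructuredLogSketch
{
    // Renders one log event as a single JSON line:
    // fixed fields plus arbitrary key/value pairs that a log store can index.
    public static string Render(DateTimeOffset timestamp, string level, string message,
        IReadOnlyDictionary<string, object> properties)
    {
        var logEvent = new Dictionary<string, object>
        {
            ["timestamp"] = timestamp.ToString("O"),
            ["level"] = level,
            ["message"] = message
        };
        foreach (var pair in properties)
        {
            logEvent[pair.Key] = pair.Value;
        }
        return JsonSerializer.Serialize(logEvent);
    }

    // 12-factor style: write to stdout and let the platform route the log.
    public static void Write(string level, string message,
        IReadOnlyDictionary<string, object> properties)
        => Console.WriteLine(Render(DateTimeOffset.UtcNow, level, message, properties));
}
```

Every entry written while handling one request would carry the same `RequestId` pair, so filtering by that key returns the whole story of the request.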

For logging, ASP.NET Core can use the wonderful Serilog, log4net, and NLog libraries. We settled on Serilog, because it has everything out of the box, including the ability to output logs in any format, not only to a plain or colored console but also, if you need it, straight to Elastic or to a file.

appsettings.json

```json
{
  "Serilog": {
    "MinimumLevel": "Debug",
    "WriteTo": [
      {
        "Name": "Console",
        "Args": {
          "formatter": "Serilog.Formatting.Compact.RenderedCompactJsonFormatter, Serilog.Formatting.Compact"
        }
      }
    ],
    "Enrich": [ "FromLogContext", "WithThreadId" ]
  }
}
```

This is how it is configured. We set the logging level. Then we say that we want to write logs to the console, and in what form. When the application runs on staging or in production, we would like the logs to be output as JSON, so that the environment can pick them up and index them. Again, all this comes out of the box, and the logs can be enriched declaratively with additional parameters such as ThreadId, RequestId, and so on.

API Versioning

We would like the API of our service to support versioning out of the box. When we work with public clients and do not know how many there are or what they are, versioning is a very useful thing.

In our case it was especially important, because we have different clients. The life cycle of a specific version of the 2GIS mobile application can be six months or even more. That is, users may not update the application on their device for over six months.

Nevertheless, when this particular version of the application comes to the backend, we have to return data under exactly the contract expected by this device and this version of the application. If over time (and this usually happens) we change something in the API, whether we add data, or worse, remove data from the response or change the interaction contract altogether, we would not want that to break the old application.

There is a wonderful library from Microsoft, ASP.NET API Versioning. It is fully open source, follows the guidelines that Microsoft has published for its own services, and lets you version the API in various ways, including SemVer or dates, like Netflix does.

There are also several options for passing the API version: via the query string, the URL path, or a header. All of them are supported out of the box.

Suppose we have such a controller.

```csharp
[ApiVersion("1.0")]
[Route("api/medias")]                        // /api/medias
[Route("api/{version:apiVersion}/medias")]   // /api/1.0/medias
public sealed class MediasController : Controller
{
    // /api/medias/id?api-version=1.0 or /api/1.0/medias/id
    [HttpGet("{id}")]
    public async Task<IActionResult> Get(long id) { ... }
}
```

All we have to do is, first, indicate that the API version the controller provides is 1.0. Then we need to declare the routes so that they include information about the API version.

In this configuration, there are two ways to include the API version in the request — via the URL path, or via the query string. Clients can choose a convenient way for them, but all requests will come to the same endpoint.

If over time we have a second version of the API, we need to do a little - come to the same controller and say that we have a second version of the same API.

```csharp
[ApiVersion("1.0")]
[ApiVersion("2.0")]
[Route("api/medias")]
[Route("api/{version:apiVersion}/medias")]
public sealed class MediasController : GatewayController
{
    // /api/medias/id?api-version=1.0 or /api/1.0/medias/id
    [HttpGet("{id}")]
    public async Task<IActionResult> Get(long id) { ... }

    // /api/medias/id?api-version=2.0 or /api/2.0/medias/id
    [MapToApiVersion("2.0")]
    [HttpGet("{id}")]
    public async Task<IActionResult> GetV2(long id) { ... }
}
```

In fact, all we need to do is leave the existing method as it was, without changing anything in it. It keeps serving clients that knew about this version of the API at the time.

We can add a new controller method and say that all requests to API 2.0 are routed to it. This way we do not break old clients, and we add functionality for new ones.

It is not necessary to do this in one controller. We can create a new controller and say that it is the controller for API version 2.0 (the [MapToApiVersion("2.0")] line). In general there are many options, and everything is done very simply.

Swagger

I also want to mention the Swashbuckle.AspNetCore library, which lets you enable Swagger.

Many have heard about Swagger, so the only thing I would like to say is that by plugging Swashbuckle.AspNetCore into ASP.NET Core we again get this functionality out of the box, and it gets along well with API versioning.

```csharp
services.AddSwaggerGen(
    x =>
    {
        IApiVersionDescriptionProvider provider; // how it is obtained is omitted in the talk
        foreach (var description in provider.ApiVersionDescriptions)
        {
            x.SwaggerDoc(description.GroupName, new Info { ... });
        }
    });

...

app.UseSwagger();
app.UseSwaggerUI(
    c =>
    {
        IApiVersionDescriptionProvider provider; // how it is obtained is omitted in the talk
        foreach (var description in provider.ApiVersionDescriptions)
        {
            c.SwaggerEndpoint(
                $"/swagger/{description.GroupName}/swagger.json",
                description.GroupName.ToUpperInvariant());
        }
    });
```

That is, we configure the application by saying that we have an IApiVersionDescriptionProvider, and simply by running a foreach over ApiVersionDescriptions we add the Swagger documents, the formal descriptions of our APIs.

After that we add SwaggerUI and register a SwaggerEndpoint for each JSON document, again one per API version. As a result Swagger knows about our API versions: there is a switch, and you can see which methods each API version has. All of this is in the demo, you can take a look.

Build

Let's move on. We have written an application with all the necessary functionality, and even some business logic. How do we build all this so that we can deploy it afterwards?

In fact, everything is quite simple. I said that we use GitLab CI. Consider one fragment of the GitLab CI file, a single build step.

```yaml
build:backend-conf-demo:
  image: $REGISTRY/microsoft/aspnetcore-build:1.1.2
  stage: build:app
  script:
    - dotnet restore --runtime ubuntu.16.04-x64
    - dotnet test Demo.Tests/Demo.Tests.csproj --configuration Release
    - dotnet publish Demo --configuration Release --runtime ubuntu.16.04-x64 --output publish/backend-conf
  tags: [ 2gis, docker ]
  artifacts:
    paths:
      - publish/backend-conf/
```

This is the build step, in which we build the application. It does not require much: a base image provided by Microsoft. The image has everything you need: all the tools, the .NET command line, .NET itself in a specific version, the runtime, and so on. The image is quite large, so it should never be dragged into production. It should be used only to build the application.

This is exactly the 12-factor rule that the build and run stages of an application must be separated.

We take this image and say: “Please restore all the dependencies of this application for exactly the platform we want to build for!”

Next, of course, we want to run the unit tests before building the application. If the unit tests fail, there is no point in building anything, because the application does not work. If everything is fine, we call dotnet publish and specify exactly the same runtime we are interested in. We also say where the resulting artifacts should go. In this example, the publish/backend-conf folder will contain everything related to the application and its dependencies.

After we have built the application, we need to build the Docker image, and we build an image for every change, since we have the CI_TAG at the GitLab CI level. In effect this is a step toward continuous deployment.

```yaml
build:backend-conf-demo-image:
  stage: build:app
  script:
    - IMAGE=my-namespace/backend-conf TAG=$CI_TAG DOCKER_FILE=publish/backend-conf/Dockerfile DOCKER_BUILD_CONTEXT=publish/backend-conf make docker-build
    - IMAGE=my-namespace/backend-conf TAG=$CI_TAG make docker-push
  tags: [ docker-engine, io ]
  dependencies:
    - build:app
```

Next we set the remaining parameters, take the artifacts produced in the previous step, and call docker-build. Once docker-build has finished, we have the image locally and can push it to a Docker hub, local or global, anywhere.

So we have an application built for the OS we need, and an image of this application on the Docker hub.

Deploy

All we need to do next is to deploy it, and it is time to talk about Kubernetes.

Kubernetes

This is a container orchestration tool that spares you from worrying too much about how to launch containers, bring them up, watch their logs, and so on. Our company has as many as four Kubernetes clusters deployed that we can use.

There are basic concepts in Kubernetes.

Pod

This is a logical union of containers. There may be one container, maybe several, and it is assumed that all containers that lie in the Pod are rigidly interconnected and use some kind of shared resources.

Kubernetes out of the box checks our application: liveness check, readiness check, log fetching, and so on. All these issues that concern container management, Kubernetes solves for us.

Service

The second thing that is important to know when working with Kubernetes is the Service. It receives external traffic coming into Kubernetes and says where this traffic should be routed inside Kubernetes: to which Pods, to which containers.

For this, it needs 2 things:

- Selector. The label set on a Pod is what lets the Service find all the Pods that belong to this application;

- Ports mapping makes it possible to route traffic to different container ports.

The general picture is in the figure above: users come to the Kubernetes cluster from outside; we receive this traffic and work out from the domain name who it is meant for; with the selector we find the matching Pods; and we send the traffic to the corresponding ports in the containers.
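The selector logic itself is simple: a Pod matches when it carries every key/value pair listed in the selector. A small C# sketch of that rule follows; `PodSketch` and `SelectorSketch` are hypothetical names used only for illustration and have nothing to do with the Kubernetes code base.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of a Pod: just a name and its labels.
public sealed class PodSketch
{
    public PodSketch(string name, IReadOnlyDictionary<string, string> labels)
    {
        Name = name;
        Labels = labels;
    }

    public string Name { get; }
    public IReadOnlyDictionary<string, string> Labels { get; }
}

public static class SelectorSketch
{
    // A pod matches when it carries every key/value pair of the selector.
    public static bool Matches(PodSketch pod, IReadOnlyDictionary<string, string> selector) =>
        selector.All(pair => pod.Labels.TryGetValue(pair.Key, out var value) && value == pair.Value);

    // Returns the names of all pods that traffic for this selector may go to.
    public static List<string> Select(IEnumerable<PodSketch> pods,
        IReadOnlyDictionary<string, string> selector) =>
        pods.Where(pod => Matches(pod, selector)).Select(pod => pod.Name).ToList();
}
```

Extra labels on a Pod do not prevent a match; only the pairs named in the selector are compared.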

Deployment

A Deployment lets you roll Pods out automatically across the entire Kubernetes cluster. The whole picture looks like this.

As developers, we have to write a Deployment and specify how many replicas we need, which template to use, and so on. Then we set the labels that tie the Pods to the Service. Then we write the Service that stands at the entrance to Kubernetes.

That is actually all: the user's path is defined.

The only thing that Kubernetes itself does not have, and that we had to add on our own in order to roll out a specific version of the application, is generating Deployment files that name a specific version of our application and a specific version of the image. This is necessary to fit all of this into the continuous deployment pipeline. I think we are not the only ones who did this, because so far Kubernetes does not provide such a capability.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: {{ app_name }}
spec:
  replicas: {{ replicas_count }}
  template:
    metadata:
      labels:
        app: {{ app_name }}
    spec:
      containers:
      - name: backend-conf
        image: {{ image_path }}:{{ image_version }}
        ports:
        - containerPort: {{ app_port }}
        readinessProbe:
          httpGet: { path: '{{ app_probe_path }}', port: {{ app_port }} }
          initialDelaySeconds: 10
          periodSeconds: 10
        env:
        - name: ASPNETCORE_ENVIRONMENT
          value: {{ env }}
```

The Deployment example above has:

- The label I spoke about, which we can set from outside through the template engine.

- An indication of where the image of our application is and which version to take (the CI_TAG).

- The port on which to reach our application in this container.

- A readiness check, so that Kubernetes knows whether our application is working and whether all is well with it.

- Environment variables.

The service looks like this.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: {{ app_name }}
  annotations:
    router.deis.io/domains: "{{ app_name }}"
    router.deis.io/ssl.enforce: "{{ ssl_enforce | default('False') }}"
spec:
  ports:
  - name: http
    port: 80
    targetPort: {{ app_port }}
  selector:
    app: {{ app_name }}
```

Here we say that the external port is 80 and that the internal one is passed in through the template. And then there is the selector.

That's the whole service.

Next we need to specify the parameters used to generate the Kubernetes files from these templates.

```yaml
common:
  replicas_count: 1
  max_unavailable: 0
  k8s_master_uri: https://master.staging.dc-nsk1.hw:6443
  k8s_token: "{{ env='K8S_TOKEN_STAGE' }}"
  k8s_ca_base64: "{{ env='K8S_CA' }}"
  k8s_namespace: my-namespace
  ssl_enforce: true
  app_port: 5000
  app_probe_path: /healthcheck
  image_version: "{{ env='CI_TAG' }}"
  image_path: docker-hub.2gis.ru/my-namespace/backend-conf
  env: Stage

backend-conf-demo:
  app_name: "backend-conf-demo"
  app_limits_cpu: 500m
  app_requests_cpu: 100m
  app_limits_memory: 800Mi
  app_requests_memory: 300Mi
  kubectl:
  - template: deployment.yaml.j2
  - template: service-stage.yaml.j2
```

We specify which Kubernetes cluster to use; the application port, 5000; app_probe_path: /healthcheck (the same middleware); the image version, taken from an environment variable; where the images are stored; and the environment env, for example Stage. For production there will be a different value. Then we set the resources for the application and list the templates I showed above.

After we have done all this, we write one more build step for GitLab CI.

```yaml
deploy:backend-conf-demo-stage:
  stage: deploy:stage
  when: manual
  image: $REGISTRY/2gis-io/k8s-handle:latest
  script:
    - export ENVIRONMENT=Stage
    - k8s-handle deploy --config config-stage.yaml --section backend-conf --sync-mode True
  only:
    - tags
  tags: [ 2gis, docker ]
```

In effect we say that we have this config file, start a container from the image on the Docker hub, and call the k8s-handle utility in it.

This is enough to deploy any application on Kubernetes, not just an ASP.NET Core one. This is how it works at 2GIS, and it causes no particular problems.

By the way, k8s-handle is also an open source thing.

Stress Testing

Now we need to make sure that the application we have built really holds the load, is fault tolerant, and responds within the required time.

We are developers and do not know much about testing, but 2GIS has a separate load testing team. We came to them and said:

- Guys, we made an application. We have such and such requirements. We tested it locally, all is well. Let's load it!

- Yes, no problem! Tomorrow we will come and do everything!

- How do you do it at all?

- We have our own tools. What are you deploying the application to?

- On Kubernetes.

- Wonderful! So everything will be cool!

When they came to us, they asked what we wanted to test in the first place. We answered that the most critical thing for us is the public endpoint, and that we have the following load loop:

- There are products that generate load.

- There is the service.

- There is a provider, the API that lies under our service.

Testers wrote us tests on Scala. They use Gatling to perform stress tests.

Everything is simple here. There are some asserts: we want the number of requests per second to be around a certain value, we want no failed requests, and we want the response time to stay within a certain limit.

After that, we say how to conduct a stress test:

- Raise the load from 1 to 20 users, for example, within 30 seconds.

- For another two minutes, keep a constant load of 20 users.

At the end, you need to specify that there is such a test, with such and such steps, HTTP, a runtime of 180 seconds in our case, and such and such asserts.

This is one example of a load test.

After that, they wrote us a template for GitLab CI.

```yaml
.perf:template: &perf_template
  stage: test:perf
  environment: perf
  only:
    - master
    - /^perf.*$/
  variables:
    PERF_TEST_PATH: "tests/perf"
    PERF_ARTIFACTS: "target/gatling"
    PERF_GRAPHITE_HOST: "graphite-exporter.perf.os-n3.hw"
    PERF_GRAPHITE_ROOT_PATH_PREFIX: "gatling.service-prefix"
  image: $REGISTRY/perf/tools:1
  artifacts:
    name: perf-reports
    when: always
    expire_in: 7 day
    paths:
      - ${PERF_TEST_PATH}/${PERF_ARTIFACTS}/*
  tags: [perf-n3-1]
```

Here is the configuration of the tool, while the tool itself lives in the image (image: $REGISTRY/perf/tools:1).

```yaml
perf:run-tests:
  <<: *perf_template
  script:
    - export PERF_GRAPHITE_ROOT_PATH_PREFIX PERF_GRAPHITE_HOST
    - export PERF_APP_HOST=http://${APP_PERF}.web-staging.2gis.ru
    - cd ${PERF_TEST_PATH}
    - ./run_test.sh --capacity
    - ./run_test.sh --resp_time
  after_script:
    - perfberry-cli logs upload --dir ${PERF_TEST_PATH}/${PERF_ARTIFACTS} --env ${APP_PERF}.web-staging.2gis.ru gatling ${PERFBERRY_PROJECT_ID}
```

We ran the test in 2 variants:

- Capacity test, when we sent requests one at a time: waited for a response and only then sent the next request. This way we measured the capacity of our application, that is, how many requests per second it can handle.

- The actual load test, when we did not wait for responses but sent the requests in parallel.

In the first iteration our results were not very good, not the ones we expected. So we turned to performance.

Performance

Caching

The first thing that comes to mind to increase the number of requests we can handle and to reduce the response time is caching. If we have resources that we can keep in a cache, why fetch them again each time?

```csharp
[AllowAnonymous]
[HttpGet("{id}")]
[ResponseCache(
    VaryByQueryKeys = new[] { "api-version" },
    Duration = 3600)]
public async Task<IActionResult> Get(long id) { ... }
```

For this there is the good old ResponseCache attribute from ASP.NET, but in ASP.NET Core it has gained additional functionality.

If we simply set ResponseCache and specify its Duration, by default we get caching on the client. That is, the client stores the data it was given and does not send us further requests.

But ASP.NET Core also adds server-side caching. There is Response Caching Middleware, which lets you manage server-side caching quite well, that is, cache the service's responses directly in process memory. And we can specify how the responses should vary. In our case, with versioning in place, it makes sense to return a separate response for each version of the API.

After we turned on both server-side and client-side caching and started our test, the results looked off, because responses began to arrive suspiciously fast. So in the load tests we turned client caching off, to make everything more or less similar to real conditions, where we have many different clients.
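The effect of server-side caching that varies by API version can be sketched in a few lines of plain C#. This is only a toy model under stated assumptions: `ResponseCacheSketch` is a hypothetical class, the cache key is simply the pair of resource id and API version, and the real Response Caching Middleware works on HTTP responses and headers rather than on strings.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical in-memory response cache, keyed by resource id and API version.
public sealed class ResponseCacheSketch
{
    private readonly ConcurrentDictionary<(long Id, string ApiVersion), (string Body, DateTimeOffset ExpiresAt)> _entries
        = new ConcurrentDictionary<(long Id, string ApiVersion), (string Body, DateTimeOffset ExpiresAt)>();
    private readonly TimeSpan _duration;
    private readonly Func<DateTimeOffset> _clock;

    // The clock is injected so that expiry can be demonstrated without waiting.
    public ResponseCacheSketch(TimeSpan duration, Func<DateTimeOffset> clock)
    {
        _duration = duration;
        _clock = clock;
    }

    // Returns the cached body while it is fresh;
    // otherwise calls the producer and stores the result.
    public string GetOrAdd(long id, string apiVersion, Func<string> produce)
    {
        var now = _clock();
        var key = (id, apiVersion);
        if (_entries.TryGetValue(key, out var entry) && entry.ExpiresAt > now)
        {
            return entry.Body;
        }

        var body = produce();
        _entries[key] = (body, now + _duration);
        return body;
    }
}
```

Within the duration, a second request for the same id and version never reaches the producer, which is why the cached numbers in a load test look so different from the uncached ones. A request for the same id under another API version gets its own entry.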

Asynchrony and multithreading

The second thing that comes to mind when we talk about performance is working with threads. We have .NET, where everything is fine as far as threads go, so why not use it.

There are 2 things here, asynchrony and multithreading, and they are not the same thing.

Asynchrony is when there is one thread, one OS resource, on which we can run a series of tasks in turn. If Task1 has sent a request to the database and is waiting, we can return the thread to the pool so that it can process the next client request (Task2). If that one is waiting too, we can switch context and continue Task1, provided the response from the database has arrived, and so on.

That is, we can run several asynchronous tasks on one physical OS resource. async and await in C# are exactly about asynchrony.

Multithreading is when there are many threads, many OS resources, and nothing prevents us from running some processes in parallel. But nobody says that these parallel processes cannot be asynchronous inside.

So async/await is not only about asynchrony but also about multithreading, but you need to use it correctly.

Below is a simple example where you can use .Net multithreading right out of the box.

```csharp
var data = await _remoteService.IOBoundOperationAsync(timeoutInSec: 1);
var result = new List<string>(data.Count);
foreach (var item in data)
{
    // Each detail request waits for the previous one to finish.
    var detailed = await _remoteService.IOBoundOperationAsync(timeoutInSec: 5);
    result.Add(string.Join(", ", detailed));
}
```

Suppose we have a service that returns data as a collection, and for each item we then need to call other services in a loop to get additional data.

This is an example straight from our lives. We have video advertising, and it can come in several formats; we need to find out what resolutions, bitrates, and so on all of these formats have.

Our first request to the API: “Tell us, please, what media files do you have on your side?” It replies with a collection of data. Then for each item we request the detailed information.

While developing all this, we certainly did not think much about performance. Our load tests showed that the response time of our service was about a second, which is not very good.

So we rewrote it. This is the correct version.

```csharp
var data = await _remoteService.IOBoundOperationAsync(timeoutInSec: 1);
var result = new string[data.Count];
var tasks = data.Select(
    async (item, index) =>
    {
        // All detail requests are started without waiting for each other.
        var detailed = await _remoteService.IOBoundOperationAsync(timeoutInSec: 5);
        result[index] = string.Join(", ", detailed);
    });
await Task.WhenAll(tasks);
```

Unfortunately, it is not always possible to write correct, efficient multithreaded code without deadlocks on the first try.

Here we do almost the same thing, but we launch the requests to the API in parallel, and asynchronously as well.

We get a collection of tasks and start one more task that waits for all of them to complete. The detail requests thus speed up by about as many times as we have video files.

Say each video ad has 3 file resolutions. We need to make 1 request to get all the metadata, and then another 3, 4 or 5 requests. Because those are executed in parallel, together they take about as long as a single one, and we get a serious performance gain.
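The difference between the two versions can be reproduced in a self-contained sketch. Everything here is an assumption made for illustration: `ParallelFetchSketch` and `FetchDetailsAsync` are hypothetical, and `Task.Delay` stands in for the remote call. With one metadata call of latency t0 and n detail calls of latency t1, the sequential version takes roughly t0 + n·t1, while the parallel one takes roughly t0 + t1.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class ParallelFetchSketch
{
    // Hypothetical stand-in for a remote call: waits, then returns a value.
    public static async Task<string> FetchDetailsAsync(string format, TimeSpan latency)
    {
        await Task.Delay(latency);
        return format + ":details";
    }

    // One request after another: total time is roughly the sum of the latencies.
    public static async Task<List<string>> SequentialAsync(
        IReadOnlyList<string> formats, TimeSpan latency)
    {
        var result = new List<string>(formats.Count);
        foreach (var format in formats)
        {
            result.Add(await FetchDetailsAsync(format, latency));
        }
        return result;
    }

    // All requests started at once: total time is roughly one latency.
    public static async Task<string[]> ParallelAsync(
        IReadOnlyList<string> formats, TimeSpan latency)
    {
        var tasks = formats.Select(format => FetchDetailsAsync(format, latency));
        // Task.WhenAll returns the results in the same order as the input tasks.
        return await Task.WhenAll(tasks);
    }
}
```

Both methods return the same data in the same order; only the waiting is arranged differently. Returning the results from `Task.WhenAll` also avoids writing into a shared array from inside the lambdas.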

Testing

After we implemented this, load testing showed different results.

We realized that we have certain limits on memory and CPU, dictated on the one hand by the .NET runtime and on the other by the code we wrote. These are actual figures from production:

- 384 MB and 1.5 CPU: this is how much our application can consume at its peak.

- The synchronous (capacity) test showed ~24 RPS (without cache).

- With server caching, ~400 RPS, i.e., the response time is about 2.5 ms.

- The asynchronous (load) test passed.
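The 2.5 ms figure follows directly from the throughput if we assume that requests are served one after another, as in a synchronous test. A small sketch of that arithmetic (the `Throughput` class and its method are illustrative names, not part of the service):

```csharp
using System;

public static class Throughput
{
    // Average time per request in milliseconds, assuming requests
    // are processed strictly one after another.
    public static double MillisecondsPerRequest(double requestsPerSecond)
        => 1000.0 / requestsPerSecond;

    public static void Main()
    {
        // 1000 ms / 400 requests = 2.5 ms per request (with cache).
        Console.WriteLine(MillisecondsPerRequest(400));
        // 1000 ms / 24 requests is roughly 42 ms per request (without cache).
        Console.WriteLine(MillisecondsPerRequest(24));
    }
}
```

Under the same assumption, the uncached ~24 RPS corresponds to roughly 42 ms per request.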

Instead of conclusion

Based on our experience, we can say that .NET Core can already be used in production. We had no freezes, no puzzling garbage collector behavior, no problems with thread orchestration, and so on. Everything you are used to in .NET has the same quality in ASP.NET Core, and judging by our experience, you should not be afraid to use it.

We had no problems related to Linux. If problems do appear, they are most likely your own. We did run into deadlocks when working with threads, but they were caused by the programmers, not by Linux.

As I have already shown, Docker and Kubernetes greatly simplify life, both for developing .NET applications and for application development in general, including when different technology stacks are used.

The last thing I would like to say is that you still need to optimize your applications. Everything works out of the box, but you still have to think in order to avoid interesting side effects.

Contacts:

Code and presentation here.

E-mail: denis@ivanovdenis.ru

Source: https://habr.com/ru/post/352168/