Installing Facebook's image recognition packages: all the pitfalls in one place

Recently, Facebook released its open-source image recognition project. Naturally, I immediately wanted to get my hands on it, see how it works and what can be done with it. We decided to work through the installation and check empirically whether it is really as easy to use as the developers' instructions claim.

This is not the easiest project, so a question arises: why bother when there are ready-made frameworks such as Keras, TensorFlow and Caffe that, as they say, just work out of the box? The answer is simple: we need a flexible, extensible tool that plays well with Python. Suppose we have learned to tell a whale from a seagull; what does that give us? iFunny takes building a fun app seriously and wants to surprise users with new features, so why not explore such a rich area and put it to use?

After reading this analysis, you will be one step closer to enlightenment. Ready? Then grab a pen, paper and proceed!

And yes, you can treat this article as a step-by-step installation manual, but you can also come back to it when an error pops up and Google either looks the other way or returns utter nonsense.

Getting started

Before we get to the first git clone of the project, let's stop at the hardware and the environment.

Everything looks transparent and tidy. But. Let's start with the hardware. The project is very, very demanding, especially on memory, swap and the video card. The initial build was done on an AWS instance with this configuration:

- OS: Linux Redhat 2017.09 (EC2) → Ubuntu 16.04 LTS

- CPU: 16 x Intel® Xeon® CPU E5-2686 v4 @ 2.30GHz;

- HDD: 1 TB;

- Swap: 16 GB → 128 GB (!!!);

- RAM: 122 GB (7 x 16 GB, 1 x 10 GB);

- Graphics adapter: GRID K520 → Tesla M60.

It is better to start with Ubuntu LTS right away, because it either already ships recent versions of all the basic compilers or makes upgrading them easy. During the build and launch it will become clear why we jumped so drastically from one system to another, changed the video card and bumped up the swap.

If you want to build all this out of curiosity, to see how it works and to play with it, get at least a video card that supports CUDA. Without one, trying to start is pointless. To find out what a given graphics card is capable of, see the list of CUDA GPUs on the wiki.

The GRID K520 has CUDA capability 3.0+. You can already work with it, but not as fast as we would like.

Shall we go? Or wait a little longer...

Installing the Nvidia packages

Now for the environment itself. First, make sure that g++ is at least version 4.9; otherwise we may hit a fiasco at the very end. Take the newest g++ straight from the repository. Next, install fresh drivers from Nvidia.
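Before moving on to the drivers, the g++ requirement can be checked in a script. This is a minimal sketch, not part of the original instructions: the helper name `version_ge` is made up for illustration, and it assumes GNU `sort` with `-V` support and that `g++ -dumpversion` prints the compiler version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds when version $1 is >= version $2.
# Relies on GNU sort's -V (version sort).
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Report whether the installed g++ meets the 4.9 minimum from the text.
if command -v g++ >/dev/null 2>&1; then
    gxx_version="$(g++ -dumpversion)"
    if version_ge "$gxx_version" "4.9"; then
        echo "g++ $gxx_version is new enough"
    else
        echo "g++ $gxx_version is older than 4.9, upgrade it first"
    fi
else
    echo "g++ not found"
fi
```

Version sort is used instead of a plain string comparison so that 4.10 is treated as newer than 4.9.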

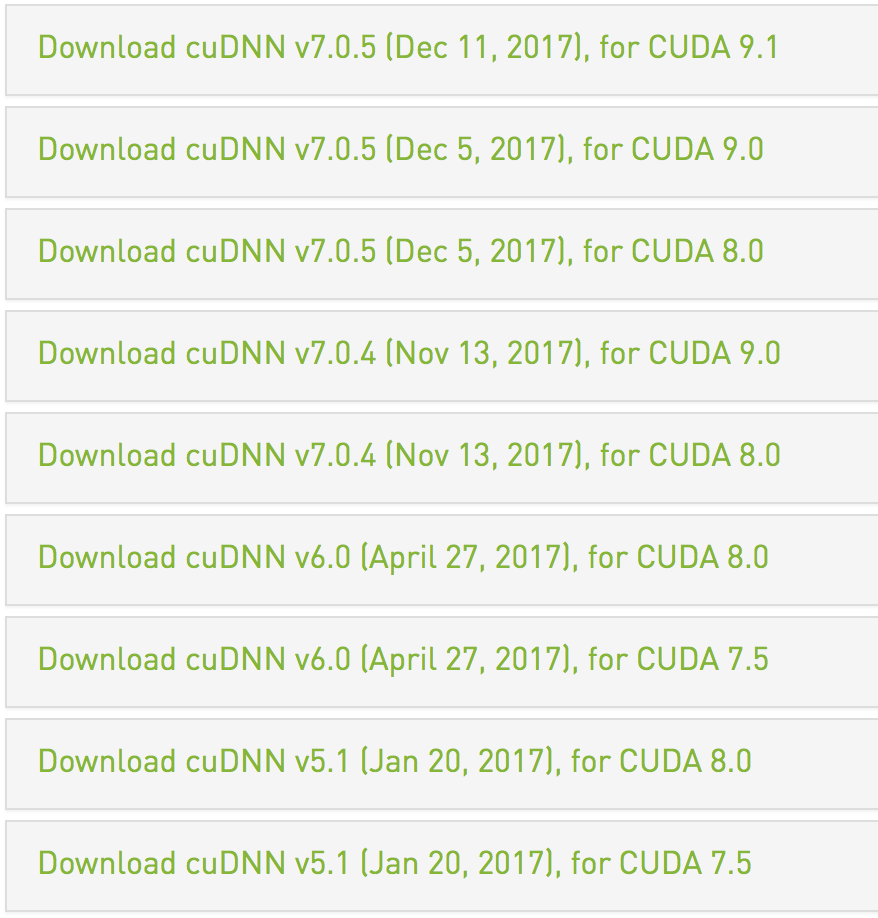

    sudo yum install nvidia
    sudo yum install cuda
    nvcc --version    # prints the installed CUDA version

Register at http://developers.nvidia.com and download the cuDNN library for your version of CUDA.

    cd ~/
    tar -xzvf cudnn-$VERSION-linux-x64-v7.tgz

For Ubuntu 16.04, everything looks simpler:

    sudo apt-get install nvidia-384*
    sudo apt-get install nvidia-cuda-dev
    sudo apt-get install nvidia-cuda-toolkit

From here you can go two ways. The official manual requires copying the files to several different places. You can follow the manual and copy as indicated, or you can take a shortcut: copy the libraries from cuda/include to /usr/local/cuda/lib64.

Installing Lua and LuaRocks

Moving on. We need Lua and LuaRocks (yet another package manager, as if we did not have enough of them). The steps are the same for both OSes:

    curl -R -O http://www.lua.org/ftp/lua-5.3.4.tar.gz
    tar zxf lua-5.3.4.tar.gz
    cd lua-5.3.4
    make linux test
    cd ~/
    wget luarocks-2.4.3.tar.gz
    tar -xvf luarocks-2.4.3.tar.gz
    cd luarocks-2.4.3
    ./configure
    make build
    sudo make install
    cd ~/

Install Torch7 and its dependencies

We already have the LuaRocks dependency manager. Now the most interesting part: some of the dependencies have to be installed through LuaRocks (installed any other way, not everything will work, or it will not take off at all). In general, installing these dependencies should not cause problems, but there are many, many small pitfalls (where would we be without them).

Torch (this should install Torch7).

    git clone https://github.com/torch/distro.git ~/torch --recursive
    cd ~/torch; bash install-deps;
    # a plain ./install.sh builds with LUAJIT21
    TORCH_LUA_VERSION=LUA52 ./install.sh    # build with lua52 instead
    source ~/.bashrc
    th    # start torch

In theory, nothing will stop you at this point except the error about 'half'. It is solved as follows: first run this command in the console:

    export TORCH_NVCC_FLAGS="-D__CUDA_NO_HALF_OPERATORS__"

Then run the installation again.

COCO API: it can only be built from source, but at least it installs without problems.

    git clone https://github.com/cocodataset/cocoapi.git
    cd cocoapi
    luarocks make LuaAPI/rocks/coco-scm-1.rockspec

image: no problems. Install it through luarocks:

    luarocks install image

tds: all of a sudden this is not a package of that name, and you need to install the Lua FFI instead:

    luarocks install --server=http://luarocks.org/dev luaffi

cjson: in theory there are no problems, except that the package is called json.

    luarocks install json

nnx: and here is an ambush. Already happy with the Torch framework? Then clone the repository into the torch root and REBUILD it entirely. Note that nnx cannot be built straight away: the COCO API, image, tds and cjson packages are listed among its dependencies.

optim: no problems found, install it directly:

    luarocks install optim

inn: same as above.

    luarocks install inn

cutorch, cunn, cudnn: install them in exactly this order.

    luarocks install cutorch
    luarocks install cunn
    luarocks install cudnn
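The fixed order can be enforced with a small loop that stops at the first failure, so that a broken cutorch build is not buried under later output. A minimal sketch: the function name `install_in_order` and the dry-run use of `echo` are illustrative assumptions; only `luarocks install` itself comes from the steps above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run "<installer> <rock>" for each rock, in the given
# order, and stop at the first one that fails.
install_in_order() {
    local installer="$1"
    shift
    local rock
    for rock in "$@"; do
        # $installer is left unquoted on purpose so that it splits into
        # a command and its arguments.
        if ! $installer "$rock"; then
            echo "failed on: $rock" >&2
            return 1
        fi
    done
}

# Dry run: echo stands in for the real installer, so this only prints the
# commands in the order they would be executed.
install_in_order "echo luarocks install" cutorch cunn cudnn
```

For a real run, pass "luarocks install" as the first argument instead of the echo variant.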

It seems everything is installed. Yet the feeling remains that something has been forgotten... something important... And so it is. Who is going to clone the project itself?

    cd ~/
    git clone https://github.com/facebookresearch/deepmask.git
    cd deepmask

We set up models for training

Cloned? Well done. Now comes the longest part: downloading the training sets and networks. There are two ways to go. The first is to download ready-made models and, without training the network, start checking your own picture right away (in that case you can safely skip the training step and go straight to installing the remaining dependencies).

    mkdir -p ~/deepmask/pretrained/deepmask; cd ~/deepmask/pretrained/deepmask
    wget https://s3.amazonaws.com/deepmask/models/deepmask/model.t7
    mkdir -p ~/deepmask/pretrained/sharpmask; cd ~/deepmask/pretrained/sharpmask
    wget https://s3.amazonaws.com/deepmask/models/sharpmask/model.t7
    cd ~/deepmask/
    th computeProposals.lua pretrained/deepmask    # run DeepMask
    th computeProposals.lua pretrained/sharpmask    # run SharpMask
    th computeProposals.lua pretrained/sharpmask -img /path/to/image.jpg

As already mentioned, the downloaded models are enough for a quick start. They spare you several days of training, but they are not enough for anything more serious. This is where the second path begins, so we continue.
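The second path pulls in roughly 20 GB of archives (the sizes appear next to the download commands), so it may be worth checking free disk space first. A minimal sketch, assuming POSIX `df`; the function names and the 40 GB threshold (room for the archives plus their unpacked contents) are my assumptions, not figures from the project.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print free space, in kilobytes, on the filesystem
# that holds directory $1 (column 4 of POSIX "df -Pk" output).
free_kb() {
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Hypothetical helper: succeed when directory $1 has at least $2 GB free.
has_free_gb() {
    local need_kb=$(( $2 * 1024 * 1024 ))
    [ "$(free_kb "$1")" -ge "$need_kb" ]
}

# Assumed threshold: 40 GB for the archives plus their unpacked contents.
target_dir="${HOME:-/}"
if has_free_gb "$target_dir" 40; then
    echo "enough space for the COCO archives under $target_dir"
else
    echo "less than 40 GB free under $target_dir"
fi
```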

    mkdir -p pretrained
    wget https://s3.amazonaws.com/deepmask/models/resnet-50.t7    # 250 (unit not preserved)
    mkdir -p ~/deepmask/data; cd ~/deepmask/data
    wget http://msvocds.blob.core.windows.net/annotations-1-0-3/instances_train-val2014.zip    # ~158 MB
    wget http://msvocds.blob.core.windows.net/coco2014/train2014.zip    # ~13 GB, >85k
    wget http://msvocds.blob.core.windows.net/coco2014/val2014.zip    # ~6.2 GB, >40k

Solving CUDA errors

Now we have the sets needed for training the neural network and for validating it. Or so it seems.

    th train.lua --help
    ....
    THCudaCheck FAIL file=/home/ubuntu/torch/extra/cutorch/lib/THC/THCGeneral.c line=70 error=30 : unknown error
    /home/ubuntu/torch/install/bin/lua: /home/ubuntu/torch/install/share/lua/5.2/trepl/init.lua:389: cuda runtime error (30) : unknown error at /home/ubuntu/torch/extra/cutorch/lib/THC/THCGeneral.c:70

Let's look at the trace. Hmm... It looks very much as if some dependencies are missing: cunn and cudnn were installed crookedly. Okay, reinstall them. But they are not the cause. Time for an investigation! We check further, try to install cutorch again and... bingo! The cwrap module was not found.

So we install it; what else is left to do.

    luarocks install cwrap
    luarocks install cutorch

And again we get the error that the cwrap module is not installed. WTF? I will not delve into the mechanics of LuaRocks, but the cwrap package cannot be installed just like that. Digging through the help, we find the following: "if the package is installed but in fact is not there, use the --local directive". Schrödinger's package: it seems to be there, yet it is not. Okay.

    luarocks install --local cwrap

And the same pitfall again. This is no longer funny. I categorically do not recommend installing all this under sudo. Why would a math package need superuser rights? Here are the steps that install this package from its rockspec file. Check the LUA_PATH and LUA_CPATH paths and run the following commands:

    cd ~/torch
    git clone https://github.com/torch/cwrap.git
    cd cwrap
    cmake .
    cd ..
    TORCH_LUA_VERSION=LUA52 ./install.sh
    cd cwrap
    luarocks make rocks/cwrap-scm-1.rockspec
    luarocks install cutorch
    luarocks install inn
    luarocks install cunn
    luarocks install cudnn

Training the neural network (skip this step if you downloaded the models)

Done. It is time to start training the neural network. The next steps may take several days! Look carefully at the options you pass to training and validation!

    cd ~/deepmask
    th train.lua --help

```bash
-nthreads     # number of data-loading threads; default 2 (16 CPUs on this instance)
-batch        # training batch size; default 32
-maxload      # max number of training batches per epoch; default 4000
-testmaxload  # max number of test batches; default 500
-maxepoch     # max number of training epochs; default 300
```

So let's get started. Since we got such a powerful instance, what stops us from loading all the processors to the maximum?

    th train.lua -nthreads 16 -batch 500

Oops... Yet Nvidia installed its libraries, and nvcc --version reports the correct info. The catch is that the libraries live in /home/ubuntu/cuda/lib64/libcudnn.so.5. So at the very end of the .bashrc file we add this directive:

    export CUDNN_PATH="/home/ubuntu/cuda/lib64/libcudnn.so.5"

In theory, happiness awaits us, but no. There is another pitfall to step on, and a serious one.
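A wrong CUDNN_PATH only shows up once training starts, so it may help to confirm that the file exists before exporting it. A minimal sketch; the helper name `export_cudnn_path` is made up, and the path is the one from this article, which will differ on other machines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: export CUDNN_PATH only if the library file exists.
export_cudnn_path() {
    local lib="$1"
    if [ -f "$lib" ]; then
        export CUDNN_PATH="$lib"
        echo "CUDNN_PATH set to $lib"
    else
        echo "no cuDNN library at $lib" >&2
        return 1
    fi
}

# Path taken from the article; adjust it to wherever cuDNN was unpacked.
export_cudnn_path "/home/ubuntu/cuda/lib64/libcudnn.so.5" \
    || echo "CUDNN_PATH left unchanged"
```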

If you avoided the pitfall, you will see output similar to the screenshot.

Otherwise you will get one of the error messages. The errors and their fixes are described in detail below.

    th train.lua -nthreads 16 -batch 500
    Found Environment variable CUDNN_PATH = /home/ubuntu/cuda/lib64/libcudnn.so.5
    -- ignore option dm
    -- ignore option reload
    -- ignore option datadir
    nthreads 16 2
    -- ignore option rundir
    -- ignore option gpu
    batch 500 32
    | running in directory /home/ubuntu/deepmask/exps/deepmask/exp,batch=500,nthreads=16
    | number of paramaters trunk: 15198016
    | number of paramaters mask branch: 1608768
    | number of paramaters score branch: 526337
    | number of paramaters total: 17333121
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    /home/ubuntu/torch/install/bin/lua: ...e/ubuntu/torch/install/share/lua/5.2/threads/threads.lua:183: [thread 16 callback] /home/ubuntu/torch/install/share/lua/5.2/coco/CocoApi.lua:142: Expected value but found T_END at character 1

If you have run into this wonderful error, you just need to take a series of equally wonderful steps:

    cd ~
    rm -rf torch/
    git clone https://github.com/torch/distro.git ~/torch --recursive
    cd ~/torch; bash install-deps;
    TORCH_LUA_VERSION=LUA52 ./install.sh
    git checkout 5961f52a65fe33efa675f71e5c19ad8de56e8dad
    ./clean.sh
    bash install-deps
    TORCH_LUA_VERSION=LUA52 ./install.sh
    luarocks install cudnn
    luarocks install cutorch
    luarocks install cunn
    luarocks install inn
    luarocks install tds
    luarocks install optim
    luarocks install nnx
    luarocks install image
    cd ~/coco/
    luarocks make LuaAPI/rocks/coco-scm-1.rockspec

Rebuilt. Reinstalled. Run again with the same options and see:

    th train.lua -nthreads 16 -batch 500
    -- ignore option rundir
    -- ignore option dm
    -- ignore option reload
    -- ignore option gpu
    -- ignore option datadir
    nthreads 1 2
    | running in directory /Users/ryan/mess/2016/34/deepmask/exps/deepmask/exp,nthreads=1
    | number of paramaters trunk: 15198016
    | number of paramaters mask branch: 1608768
    | number of paramaters score branch: 526337
    | number of paramaters total: 17333121
    convert: data//annotations/instances_train2014.json --> .t7 [please be patient]
    FATAL THREAD PANIC: (write) not enough memory

Not enough memory?! Workaround: build the annotation .t7 files by hand, once, in an interactive th session.

```bash
cd ~/deepmask/
th
coco = require 'coco'
coco.CocoApi("data/annotations/instances_train2014.json")
# wait for the output:
#convert: data/annotations/instances_train2014.json --> .t7 [please be patient]
#converting: annotations
#converting: categories
#converting: images
#convert: building indices
#convert: complete [57.22 s]
#CocoApi
coco.CocoApi("data/annotations/instances_val2014.json")
# wait for the output:
#convert: data/annotations/instances_val2014.json --> .t7 [please be patient]
#converting: annotations
#converting: categories
#converting: images
#convert: building indices
#convert: complete [26.07 s]
#CocoApi
```

Training the neural network

Now the preparations are complete. The models are there, the networks are there, and so are the images for training and validation. Run it again, and let's try to load the machine as much as possible.

    th train.lua -batch 500 -nthreads 16

In parallel, we watch htop (not the best idea, since it does not show consumed memory broken down by type).

To put it mildly, it gets hot. A reminder: 16 physical processors, each with 8 cores, with virtualization. And then there is memory. It is no surprise that after a few minutes the terminal printed "Killed"!
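A bare "Killed" is typically the kernel's out-of-memory killer at work. Since htop does not break memory down the way we need, the relevant numbers can be read straight from /proc/meminfo. A minimal sketch, assuming a Linux kernel that exposes MemAvailable (3.14 or newer); the function name `meminfo_gb` is made up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print field $1 of a meminfo-style file ($2, default
# /proc/meminfo), converted from kB to whole GB (rounded down).
meminfo_gb() {
    local field="$1"
    local file="${2:-/proc/meminfo}"
    awk -v key="$field:" '$1 == key { printf "%d\n", $2 / 1024 / 1024 }' "$file"
}

if [ -r /proc/meminfo ]; then
    echo "RAM available: $(meminfo_gb MemAvailable) GB"
    echo "Swap free:     $(meminfo_gb SwapFree) GB"
fi
```

Running this in a second terminal before and during training shows whether RAM or swap is the resource that runs out first.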

We try again with "easier" conditions:

    th train.lua -maxepoch 2 -nthreads 4
    Found Environment variable CUDNN_PATH = /home/ubuntu/cuda/lib64/libcudnn.so.5
    maxepoch 2 300
    -- ignore option datadir
    -- ignore option gpu
    -- ignore option dm
    nthreads 4 2
    -- ignore option rundir
    -- ignore option reload
    | running in directory /home/ubuntu/deepmask/exps/deepmask/exp,maxepoch=2,nthreads=4
    | number of paramaters trunk: 15198016
    | number of paramaters mask branch: 1608768
    | number of paramaters score branch: 526337
    | number of paramaters total: 17333121
    | start training

Again. The 154 GB of memory in use is all of the RAM, part of the swap and part of the virtual memory. Stop. Stopping with kill or Ctrl+C is not critical at all. When training is restarted, all old models are overwritten.

Even on the Amazon machine you can safely leave the script running and go about your business for an hour and a half or two.

By the way, an important note: to keep an eye on progress, training results and so on when working over ssh, use screen. Did the connection suddenly drop? No problem. Reconnect to the machine, run screen -r, and we are back where we left off.
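A minimal sketch of that workflow. The session name deepmask and the wrapper function `start_detached` are my own choices; `-dmS` (start detached with a name), `-r` (reattach) and `-ls` (list sessions) are standard screen options.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: start a command in a detached, named screen session.
# $1 is the session name, the remaining arguments are the command to run.
start_detached() {
    local name="$1"
    shift
    if ! command -v screen >/dev/null 2>&1; then
        echo "screen is not installed" >&2
        return 127
    fi
    screen -dmS "$name" "$@"
}

# Usage (not executed here):
#   cd ~/deepmask
#   start_detached deepmask th train.lua -maxepoch 2 -nthreads 4
#   screen -ls            # list sessions
#   screen -r deepmask    # reattach after the ssh connection drops
```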

The -nthreads 4 value was found by trial: restarting and timing the training. With a higher load, competition for RAM begins, and we have very, very many parameters (about 17 million).

The training set is 85K images. By the way, on every even epoch the script validates the neural network.

    th train.lua -nthreads 16 -maxepoch 12
    Found Environment variable CUDNN_PATH = /home/ubuntu/cuda/lib64/libcudnn.so.5
    -- ignore option reload
    -- ignore option dm
    maxepoch 12 300
    -- ignore option gpu
    nthreads 16 2
    -- ignore option datadir
    -- ignore option rundir
    | running in directory /home/ubuntu/deepmask/exps/deepmask/exp,maxepoch=12,nthreads=16
    | number of paramaters trunk: 15198016
    | number of paramaters mask branch: 1608768
    | number of paramaters score branch: 526337
    | number of paramaters total: 17333121
    | start training
    [train] | epoch 00001 | s/batch 0.67 | loss: 0.31743
    [train] | epoch 00002 | s/batch 0.67 | loss: 0.18296
    [test] | epoch 00002 | IoU: mean 054.60 median 061.36 suc@.5 062.63 suc@.7 036.53 | acc 092.91 | bestmodel *
    [train] | epoch 00003 | s/batch 0.67 | loss: 0.16256
    [train] | epoch 00004 | s/batch 0.67 | loss: 0.15217
    [test] | epoch 00004 | IoU: mean 059.10 median 065.86 suc@.5 068.97 suc@.7 043.31 | acc 093.93 | bestmodel *
    [train] | epoch 00005 | s/batch 0.67 | loss: 0.14583
    [train] | epoch 00006 | s/batch 0.67 | loss: 0.14183
    [test] | epoch 00006 | IoU: mean 056.79 median 064.92 suc@.5 065.88 suc@.7 042.34 | acc 094.27 | bestmodel x
    [train] | epoch 00007 | s/batch 0.67 | loss: 0.13739
    [train] | epoch 00008 | s/batch 0.67 | loss: 0.13489
    [test] | epoch 00008 | IoU: mean 059.53 median 067.17 suc@.5 069.44 suc@.7 045.33 | acc 094.69 | bestmodel *
    [train] | epoch 00009 | s/batch 0.67 | loss: 0.13417
    [train] | epoch 00010 | s/batch 0.67 | loss: 0.13290
    [test] | epoch 00010 | IoU: mean 061.80 median 069.41 suc@.5 072.71 suc@.7 048.92 | acc 094.67 | bestmodel *
    [train] | epoch 00011 | s/batch 0.67 | loss: 0.13070
    [train] | epoch 00012 | s/batch 0.67 | loss: 0.12711
    [test] | epoch 00012 | IoU: mean 060.16 median 067.43 suc@.5 070.71 suc@.7 046.34 | acc 094.85 | bestmodel x

Training is done and we can proceed to the next stage. From the resulting DeepMask models we need to generate SharpMask.

    th train.lua -dm ~/deepmask/exps/deepmask/exp\,maxepoch\=2\,nthreads\=4/

If training was started with other parameters, the model folder will have a different name, but the base path to it is the same. While DeepMask is training or SharpMask is being built, we continue the installation.
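Because the experiment folder name is derived from the training options, it can be easier to look the folder up than to type it. A minimal sketch: the function name `list_model_dirs` is made up, and it assumes (as the paths in this article suggest) that each finished experiment folder contains a model.t7 file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every directory under $1 that holds a model.t7.
list_model_dirs() {
    find "$1" -type f -name model.t7 -exec dirname {} \; | sort
}

# Show DeepMask experiment folders, if any exist yet.
if [ -d "${HOME:-/nonexistent}/deepmask/exps/deepmask" ]; then
    list_model_dirs "$HOME/deepmask/exps/deepmask"
fi
```

Passing the result in double quotes, as in th train.lua -dm "$dir", also avoids escaping the commas and equals signs by hand.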

Installing google-glog

There were no problems with it; it really was simple and trouble-free.

    cd ~/
    git clone https://github.com/google/glog.git
    cd glog
    ./autogen.sh
    ./configure
    make
    sudo make install

Multipathnet, fbpython and a whole bunch of dependencies

Now all that is left is to clone the multipathnet project, install its dependencies and start recognition. I seem to have heard somewhere already that everything is "easy and simple".

    cd ~/
    git clone https://github.com/facebookresearch/multipathnet.git
    luarocks install torchnet
    luarocks install class
    luarocks install fbpython

Do not try to install fbpython via Conda, Anaconda or the like. As Facebook puts it in the instructions (translating roughly and with a bit of humor): "this is their own barge, with its own troubles and its own captain".

So, once again someone kept quiet about the dependencies.

    ...
    -- Found Torch7 in /home/ubuntu/torch/install
    -- REQUIRED_ARGS (missing: THPP_INCLUDE_DIR THPP_LIBRARIES)
    -- Looking for pthread.h
    ...

We google in every direction to find out what these variables are and why we still do not have them.

    cd ~/
    git clone https://github.com/facebook/folly.git
    git clone https://github.com/facebook/fbthrift.git
    git clone https://github.com/facebook/thpp
    git clone https://github.com/facebookarchive/fblualib.git

I find it wonderful. Another small zoo. Okay. For those on Ubuntu:

    sudo apt-get install zlib1g-dev binutils-dev libjemalloc-dev libssl-dev
    sudo apt-get install libevent-dev
    sudo apt-get install libsnappy-dev
    sudo apt-get install libboost-all-dev libgoogle-glog-dev libgflags-dev liblz4-dev liblzma-dev libsnappy-dev

For Linux (RHEL), build from source:

    cd ~/
    git clone https://github.com/gflags/gflags.git
    cd gflags
    mkdir build && cd build
    ccmake ..
    # - Press 'c' to configure the build system and 'e' to ignore warnings.
    #   (SHARED_LIB)
    # - Set CMAKE_INSTALL_PREFIX and other CMake variables and options.
    # - Continue pressing 'c' until the option 'g' is available.
    # - Then press 'g' to generate the configuration files for GNU Make.
    cd ..
    cmake -fpic -shared configure .
    make
    sudo make install

    cd ~/
    git clone https://github.com/google/glog.git
    export LDFLAGS='-L/usr/local/lib'
    cd glog
    autoreconf -ivf
    ./configure
    make
    sudo make install

    cd ~/
    git clone https://github.com/google/double-conversion.git
    cd double-conversion
    cmake . -DBUILD_SHARED_LIBS=ON
    # alternative: cmake -fpic -shared
    make
    sudo make install

Then googletest:

    wget https://github.com/google/googletest/archive/release-1.8.0.tar.gz && \
    tar zxf release-1.8.0.tar.gz && \
    rm -f release-1.8.0.tar.gz && \
    cd googletest-release-1.8.0 && \
    cmake configure . && \
    make && \
    sudo make install

    cd ~/folly
    cmake -fpic -shared configure ..
    make    # optionally with -j$(nproc)
    sudo make install

From here on everything is Ubuntu-only, since trying to update the whole environment on RHEL was pointless. At the time of writing it was simply impossible to get any further on RHEL; the cap on maximum package versions for Amazon instances is to blame.

Linux (RHEL) users can continue installing packages on their own, but you will have to hunt down the necessary packages yourself (and if you hit a pitfall along the way, welcome to the comments; I will be happy to help).

Installing fbthrift

NEVER touch build_folly_fbthrift.sh. Otherwise you will have to roll back to the point where nothing exists except the trained model.

    sudo apt-get install flex bison
    git clone https://github.com/no1msd/mstch.git
    cd mstch
    cmake .
    make
    sudo make install
    cd ~/
    git clone https://github.com/facebook/wangle.git
    cd wangle/wangle
    cmake .
    make
    sudo make install
    cd ~/
    git clone https://github.com/facebook/zstd.git
    cd zstd
    make
    sudo make install
    cd ~/
    git clone https://github.com/krb5/krb5.git
    cd krb5/src
    autoreconf -ivf
    ./configure
    make
    sudo make install
    cd ~/fbthrift/build
    cmake -fpic -shared configure .
    make
    sudo make install

And now you think happiness has arrived and you can install thpp. As practice has shown, no. It trips over a thousand bugs in... fbthrift! To begin with:

    cd ~/fbthrift/thrift/compiler/py
    sudo python setup.py install

This installs the missing thrift_compiler. Then it turns out that frontend.so does not exist at all: someone forgot to compile it and put it in the folder. Well, okay (for reference: below is the line that builds frontend.so with g++ ~v5.4).

    cd ~/fbthrift/thrift/compiler/py
    g++ -I /usr/include/python2.7 -I ~/fbthrift -std=c++1y -fpic -shared -o frontend.so compiler.cc -lboost_python -lpython2.7 -L/build/lib -lcompiler_base -lcompiler_ast -lboost_system -lboost_filesystem -lssl -lcrypto
    sudo cp frontend.so /usr/local/lib/python2.7/dist-packages/thrift_py-0.9.0-py2.7.egg/thrift_compiler/

If something is missing, compile it yourself. It feels as though further on we will have to finish writing their code for them. And the premonition did not deceive.

If you catch an error calling methods with the wrong number of parameters

There, now happiness awaits us. Almost. The good Samaritans at Facebook changed the function calls in torch7 but meanwhile forgot to fix the corresponding calls in thpp. How cute.

    cd ~/thpp/thpp/detail/
    nano TensorGeneric.h

The following calls need to be corrected (Captain Obvious hints: the ", 0" part was added after dim):

    static void _max(THTensor* values, THLongTensor* indices, THTensor* t, int dim) {
      return THTensor_(max)(values, indices, t, dim, 0);
    }
    static void _min(THTensor* values, THLongTensor* indices, THTensor* t, int dim) {
      return THTensor_(min)(values, indices, t, dim, 0);
    }
    static void _sum(THTensor* r, THTensor* t, int dim) {
      return THTensor_(sum)(r, t, dim, 0);
    }
    static void _prod(THTensor* r, THTensor* t, int dim) {
      return THTensor_(prod)(r, t, dim, 0);
    }

We build the package again, but that is not all...

    cd ~/thpp/thpp
    ccmake .    # set NO_TEST = ON, then press 'c' and 'g'
    cmake .
    make
    sudo make install

Installing fblualib

Next in line is fblualib. It can be dealt with easily and simply. I repeat: under no circumstances use their scripts with tempting names like install-all.sh. They will wipe out all your hard work, and you will have to start almost from scratch.

    sudo apt-get install \
        libedit-dev \
        libmatio-dev \
        libpython-dev \
        python-numpy

Install these in case they were missed. No wonder, with such "simple" instructions from the developers. Check that numpy is present for ALL python versions.

    cd ~/fblualib/fblualib
    cmake -fpic -shared configure .
    make
    sudo make install

And again a lot of errors. Why? Because someone did not update the function calls in the rocks packages. So we fix the calls by hand. The instructions were simply written for Lua below 5.2, we already have 5.2, and there is no point hunting for the old version. It is easier to fix the function calls.

    cd ~/fblualib/fblualib/python
    luarocks make rockspec/*

This fails at compile time with an "undefined" type error. The fix is simple: in the file with the error, change the type name luaL_reg to luaL_Reg (WHY the name was changed, I do not know!).

There will be two files with the error. The edits go on lines 191 and 341 of the two files named in the error message.
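The rename can also be done with sed instead of an editor. A minimal sketch, assuming GNU sed (for -i and \b); the function name `fix_lual_reg` is made up, and the file paths must be taken from your own compiler error, since they are not listed here. Note that it replaces every occurrence in a file, not only the two lines mentioned above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace the old type name luaL_reg with luaL_Reg in
# the given files, keeping a .bak copy of each original.
fix_lual_reg() {
    local file
    for file in "$@"; do
        sed -i.bak 's/\bluaL_reg\b/luaL_Reg/g' "$file" || return 1
    done
}

# Usage (file names are placeholders for the two files in the error message):
#   fix_lual_reg path/to/first_file.cpp path/to/second_file.cpp
```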

Fixed the call and tried again. Did it compile? You may rejoice.

In principle, after these manipulations you could simply run ./build.sh again from ~/fblualib/fblualib, but that is a matter of taste; doing it by hand is more reliable.

We continue the torment:

    cd ~/coco
    luarocks make LuaAPI/rocks/coco-scm-1.rockspec
    sudo pip install Cython
    cd PythonAPI
    make
    sudo make install

Building SharpMask for the trained neural network

In theory we have already reached the bottom, in the sense that we have reached the end of the installation instructions. The most enjoyable part remains. By the way, the SharpMask build has just finished.

    th train.lua -dm exps/deepmask/exp\,maxepoch\=2\,nthreads\=4/ -maxepoch 2 -nthreads 4
    Found Environment variable CUDNN_PATH = /home/ubuntu/cuda/lib64/libcudnn.so.5
    gSz 160 112
    nthreads 4 2
    -- ignore option gpu
    -- ignore option reload
    maxepoch 2 300
    -- ignore option datadir
    -- ignore option dm
    hfreq 0 0.5
    -- ignore option rundir
    | running in directory /home/ubuntu/deepmask/exps/sharpmask/exp,gSz=160,hfreq=0,maxepoch=2,nthreads=4
    | number of paramaters net h: 1090466
    | number of paramaters net v: 1660050
    | number of paramaters total: 2750516
    | start training
    [train] | epoch 00001 | s/batch 1.95 | loss: 0.16018
    [train] | epoch 00002 | s/batch 1.95 | loss: 0.13267
    [test] | epoch 00002 | IoU: mean 059.74 median 065.92 suc@.5 069.00 suc@.7 043.39 | bestmodel *

Quite good numbers for further work with the models. Add /home//?.lua to LUA_PATH in .bashrc. Then, for multipathnet, set up deepmask/data along with the sharpmask/model.t7 and deepmask/model.t7 models.

    cd ~/deepmask
    mkdir -p data && cd data
    mkdir -p models proposals
    ln -s ~/deepmask/data ~/multipathnet/data
    # link the DeepMask and SharpMask models
    ln -s ~/deepmask/exps/deepmask/exp,maxepoch=2,nthreads=4/model.t7 ~/deepmask/data/models/deepmask.t7
    ln -s ~/deepmask/exps/sharpmask/exp,gSz=160,hfreq=0,maxepoch=2,nthreads=4/model.t7 ~/deepmask/data/models/sharpmask.t7
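ln -s fails if the link already exists and silently creates a dangling link if the source path is mistyped, which is easy to do with these folder names. A minimal sketch of a safer wrapper; the name `link_model` is made up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: link model file $1 to $2, refusing missing sources
# and replacing a stale link at the destination.
link_model() {
    local src="$1" dst="$2"
    if [ ! -f "$src" ]; then
        echo "model not found: $src" >&2
        return 1
    fi
    mkdir -p "$(dirname "$dst")"
    ln -sfn "$src" "$dst"
}

# Usage (paths from the article; the experiment folder name depends on the
# options used for training):
#   link_model ~/deepmask/exps/deepmask/exp,maxepoch=2,nthreads=4/model.t7 \
#              ~/deepmask/data/models/deepmask.t7
```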

fbcoco.lua:

    require 'testCoco.init'
    require 'Tester_FRCNN'

th demo.lua -img data/test6.jpg Found Environment variable CUDNN_PATH = /home/ubuntu/cuda/lib64/libcudnn.so.5nn.Sequential { [input -> (1) -> (2) -> (3) -> (4) -> (5) -> (6) -> output] (1): nn.ParallelTable { input |`-> (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): NoBackprop: nn.Sequential { | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | (1): nn.SpatialConvolution(3 -> 64, 7x7, 2,2, 3,3) without bias | (2): inn.ConstAffine | (3): nn.ReLU | (4): nn.SpatialMaxPooling(3x3, 2,2, 1,1) | (5): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(64 -> 64, 3x3, 1,1, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(64 -> 64, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.Identity | ... -> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | (2): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(64 -> 64, 3x3, 1,1, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(64 -> 64, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.Identity | ... 
-> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | } | } | (2): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(64 -> 128, 3x3, 2,2, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(128 -> 128, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.SpatialConvolution(64 -> 128, 1x1, 2,2) without bias | ... -> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | (2): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(128 -> 128, 3x3, 1,1, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(128 -> 128, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.Identity | ... -> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | } | (3): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(128 -> 256, 3x3, 2,2, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(256 -> 256, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.SpatialConvolution(128 -> 256, 1x1, 2,2) without bias | ... 
-> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | (2): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.ConcatTable { | input | |`-> (1): nn.Sequential { | | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | | (1): nn.SpatialConvolution(256 -> 256, 3x3, 1,1, 1,1) without bias | | (2): inn.ConstAffine | | (3): nn.ReLU | | (4): nn.SpatialConvolution(256 -> 256, 3x3, 1,1, 1,1) without bias | | (5): inn.ConstAffine | | } | `-> (2): nn.Identity | ... -> output | } | (2): nn.CAddTable | (3): nn.ReLU | } | } | } `-> (2): nn.Identity ... -> output } (2): inn.ROIPooling (3): nn.Sequential { [input -> (1) -> (2) -> (3) -> output] (1): nn.Sequential { [input -> (1) -> (2) -> output] (1): nn.Sequential { [input -> (1) -> (2) -> (3) -> output] (1): nn.ConcatTable { input |`-> (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | (1): nn.SpatialConvolution(256 -> 512, 3x3, 2,2, 1,1) without bias | (2): inn.ConstAffine | (3): nn.ReLU | (4): nn.SpatialConvolution(512 -> 512, 3x3, 1,1, 1,1) without bias | (5): inn.ConstAffine | } `-> (2): nn.SpatialConvolution(256 -> 512, 1x1, 2,2) without bias ... -> output } (2): nn.CAddTable (3): nn.ReLU } (2): nn.Sequential { [input -> (1) -> (2) -> (3) -> output] (1): nn.ConcatTable { input |`-> (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> (4) -> (5) -> output] | (1): nn.SpatialConvolution(512 -> 512, 3x3, 1,1, 1,1) without bias | (2): inn.ConstAffine | (3): nn.ReLU | (4): nn.SpatialConvolution(512 -> 512, 3x3, 1,1, 1,1) without bias | (5): inn.ConstAffine | } `-> (2): nn.Identity ... -> output } (2): nn.CAddTable (3): nn.ReLU } } (2): nn.SpatialAveragePooling(7x7, 1,1) (3): nn.View(512) } (4): nn.ConcatTable { input |`-> (1): nn.Linear(512 -> 81) |`-> (2): nn.Linear(512 -> 81) |`-> (3): nn.Linear(512 -> 81) |`-> (4): nn.Linear(512 -> 81) |`-> (5): nn.Linear(512 -> 81) |`-> (6): nn.Linear(512 -> 81) `-> (7): nn.Linear(512 -> 324) ... 
-> output } (5): nn.ModeSwitch { input |`-> (1): nn.ConcatTable { | input | |`-> (1): nn.SelectTable(4) | `-> (2): nn.SelectTable(7) | ... -> output | } `-> (2): nn.Sequential { [input -> (1) -> (2) -> output] (1): nn.ParallelTable { input |`-> (1): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } |`-> (2): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } |`-> (3): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } |`-> (4): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } |`-> (5): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } |`-> (6): nn.Sequential { | [input -> (1) -> (2) -> output] | (1): nn.SoftMax | (2): nn.View(1, -1, 81) | } `-> (7): nn.Identity ... -> output } (2): nn.ConcatTable { input |`-> (1): nn.Sequential { | [input -> (1) -> (2) -> (3) -> output] | (1): nn.NarrowTable | (2): nn.JoinTable | (3): nn.Mean | } `-> (2): nn.SelectTable(7) ... -> output } } ... -> output } (6): nn.ParallelTable { input |`-> (1): nn.Identity `-> (2): nn.BBoxNorm ... -> output } } { 1 : { 1 : CudaTensor - size: 2x81 2 : CudaTensor - size: 2x324 } } { 1 : { 1 : CudaTensor - size: 2x3x224x224 2 : CudaTensor - size: 2x5 } } { 1 : 0.17677669529664 2 : 0.25 3 : 0.35355339059327 4 : 0.5 5 : 0.70710678118655 6 : 1 7 : 1.4142135623731 } 0.66218614578247 17 dog | done

Versions mentioned in the closing notes: CUDA 9.1, cuDNN 7. Packages mentioned: inn, cunn, cudnn, cutorch (LuaRocks).

Source: https://habr.com/ru/post/352068/