Static site optimization: tenfold acceleration

Jonluca De Caro, the author of the article we are publishing in translation today, was once on a trip abroad and wanted to show a friend his personal web page. It is an ordinary static site, but during the demonstration it turned out to be much slower than one would expect.

The site did not use any dynamic mechanisms - there was a bit of animation, it was created using responsive design methods, but the content of the resource almost always remained unchanged. The author of the article says that what he saw, having quickly analyzed the situation, literally horrified him. Events

')

In this article, Jonluca De Caro will talk about how he managed to speed up his static website tenfold.

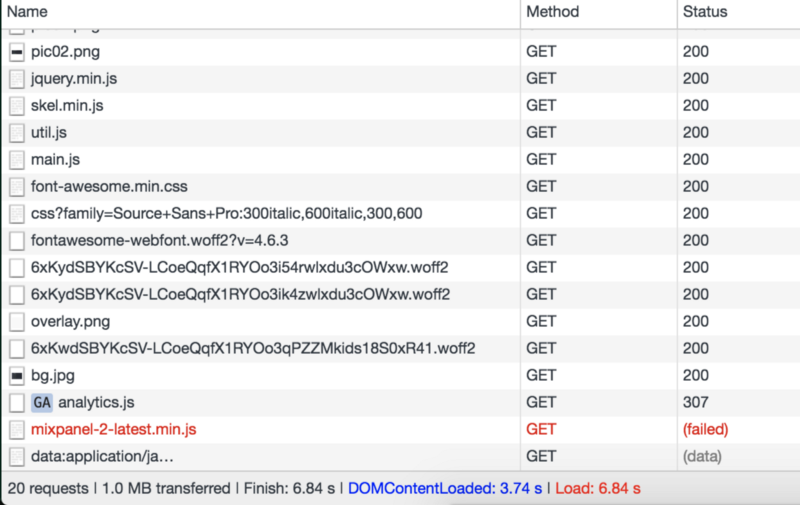

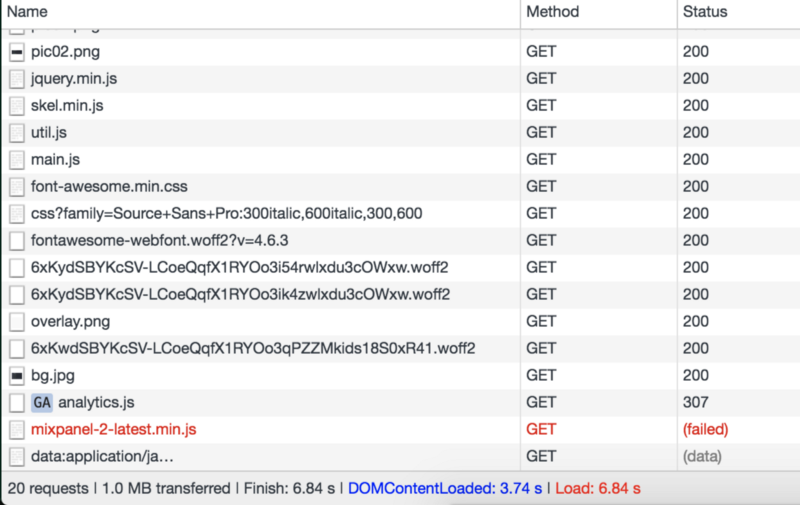

This is how the site looked, in terms of the number of requests needed to generate it, the load time and the amount of downloaded data, when it became clear that it had to be optimized.

Non-optimized site data

When I saw all this, I first took up the optimization of my site. Up to this point, if it was necessary to add a library to the site or something else, I would just take and load what I needed, using the

Then I began to look for people who were confronted with something similar. Unfortunately, publications on the optimization of static sites are quickly becoming obsolete. For example, the recommendations dated 2010-2011 are devoted to the use of libraries, and some of them made assumptions regarding the fact that libraries are not used in the development of the site at all. Analyzing these materials, I also faced the fact that some of them simply repeat the same sets of rules over and over again.

However, I still found two excellent sources of information: the High Performance Browser Networking resource and the Dan Luu publication. Although I didn’t go as far as optimizing as Dan did, by sorting out the contents of the site and its formatting tools, I was able to speed up the loading of my page about ten times. Now,

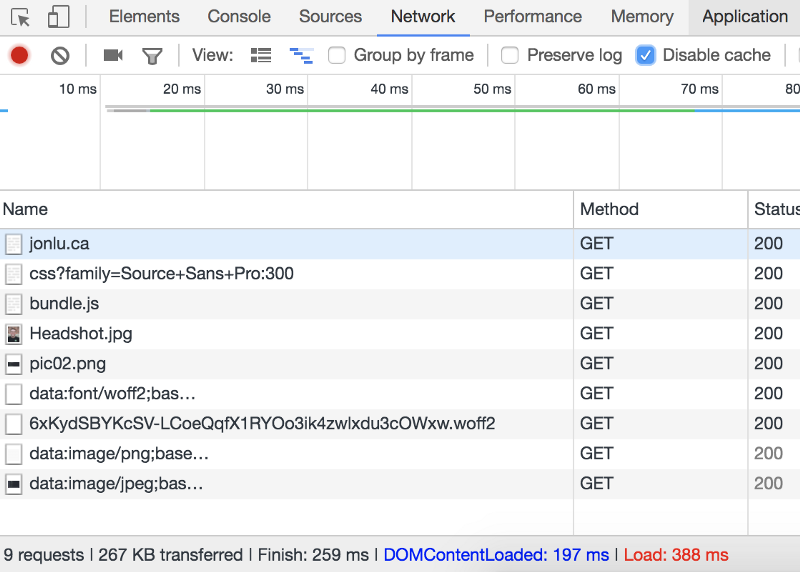

Optimization results

Now let's talk about how to achieve such results.

The first step in the optimization process was profiling the site. I wanted to understand what exactly requires the most time, and how best to parallelize the execution of tasks. I, for profiling the site, used various tools and checked how the site is loaded from different places of the Earth. Here is a list of resources that I used:

Some of these resources made recommendations for improving the site. However, when it takes several dozens of requests to form a static site, you can do a lot of things - from gif-tricks from the 90s to getting rid of unused resources (I downloaded 6 fonts, for example, although I only used of them).

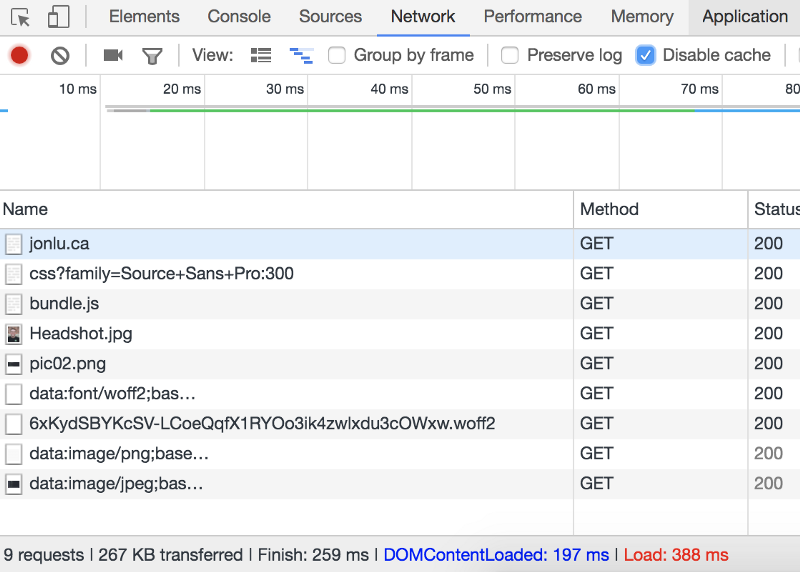

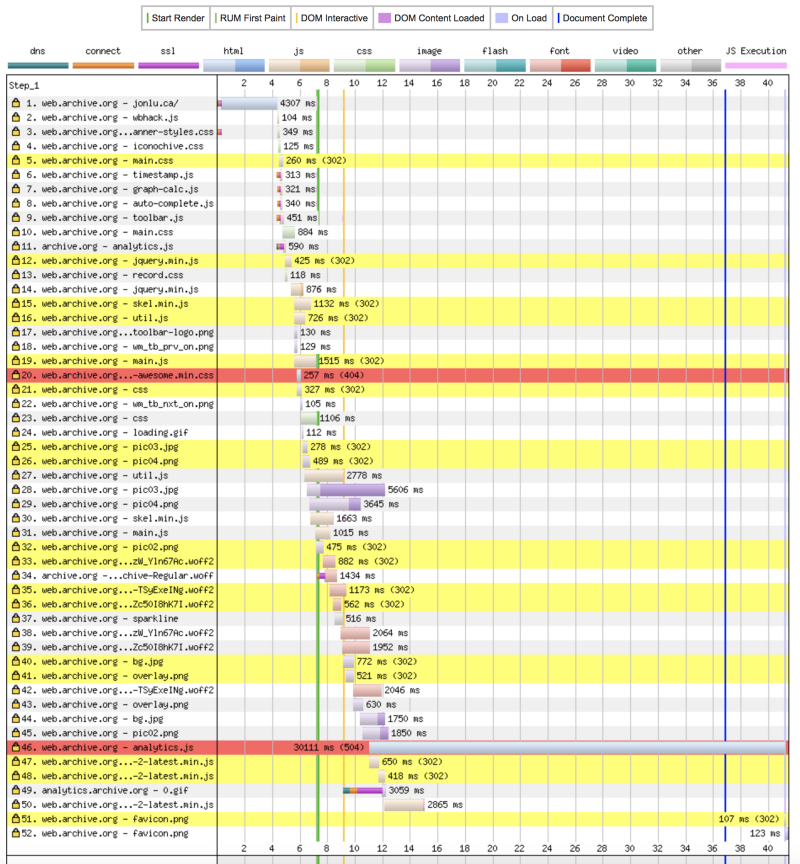

The timeline for a site very similar to mine. I didn’t take a screenshot in time for my site, but what’s shown here looks almost like what I saw several months ago when analyzing my site

I wanted to optimize everything that I can influence - from the content of the site and the speed of the scripts to the settings of the web server (Nginx in my case) and DNS.

The first thing I noticed was that the page performs many requests for loading CSS and JS files (it did not use persistent HTTP connections), which lead to various resources, some of which were handled via HTTPS. All of this meant that by the time the data was loaded, the time it took to go through multiple requests to content delivery networks or to regular servers was added and to receive answers from them. However, some JS files requested loading of other files, which led to the situation of lock cascades shown in the figure above.

In order to combine everything you need into one JS-file, I used the webpack . Every time I made some changes to the content of the page, the webpack automatically minified and collected all the dependencies into one file. Here is the content of my

I experimented with various options, in the end I got one file

Next, I worked with the Font Awesome library and removed everything from there, except for the three icons I used:

The way the site is designed does not allow it to be displayed correctly if the browser processes only HTML and CSS, so I did not try to deal with the blocking download of the

This approach revealed several additional useful properties. So, I no longer had to contact third-party servers or content delivery networks, as a result, when my page was loaded into a browser, the system was exempt from the following tasks:

While the use of CDN and distributed caching may make sense for large-scale web projects, my small static site does not benefit from the use of CDN resources. Rejecting them, at the cost of some efforts on my part, made it possible to save an additional amount of about a hundred milliseconds, which, in my opinion, justifies these efforts.

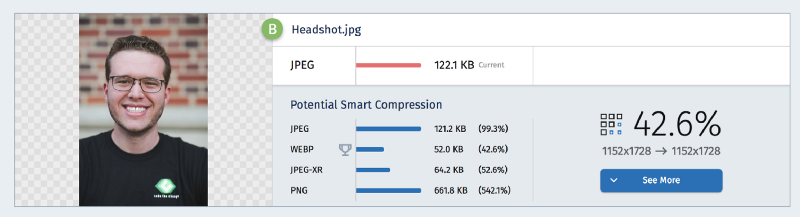

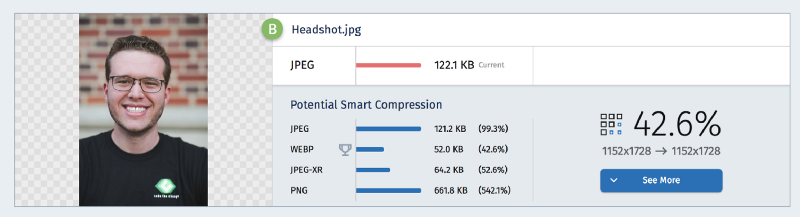

Continuing the analysis of the site, I discovered that my 8-megabyte portrait was loaded onto the page, which was displayed in a size that was 10% in width and height from its original size. This is not just a lack of optimization. Such things demonstrate the developer’s almost complete indifference to the use of the bandwidth of the user's Internet channel.

Photo optimization

I compressed all images used on the site using https://webspeedtest.cloudinary.com/ . There I was advised to use the webp format, but I wanted my page to be compatible with as many browsers as possible, so I settled on the jpeg format. You can customize everything so that webp images are used only in browsers that support this format, but I strove for maximum simplicity and decided that the benefits from the additional level of abstraction associated with images are not worth the effort spent on achieving these benefits.

Starting to optimize the server, I immediately switched to HTTPS. At the very beginning, I used the usual Nginx on port 80, which simply served the files from

So, I started by configuring HTTPS and redirecting all HTTP requests to HTTPS. I received a TLS certificate from Let's Encrypt (this wonderful organization, moreover, recently began to sign wildcard certificates). Here is the updated

By adding the

Please note that using gzip and TLS increases the risk of BREACH attacks on a web resource. In my case, since we are talking about a static site, the risk of such an attack is very low, so I calmly use compression.

What else can be done using only Nginx? The first thing that comes to mind is the use of caching and compression directives.

Previously, my server gave clients plain, uncompressed HTML. Using a single line

In fact, this indicator can be further improved if we use the

In addition, my site changes quite rarely, so I wanted to ensure that its resources would be cached for as long as possible. This would lead to the fact that, visiting my site several times, the user would not need to re-download all the materials (in particular,

This is what server configuration (

Please note that all previous improvements regarding TCP settings, gzip directives and caching are not affected here. If you want to know about all this details - take a look at this material on setting up Nginx.

But the server configuration block from my

And finally, I made a small change in the site, which could significantly improve the situation. On the page there are 5 images that can be seen only by clicking on the thumbnails corresponding to them. These images were loaded while loading the rest of the site content (the reason for this is that the paths to them were in the tags

I wrote a small script to modify the corresponding attribute of each element with the

This script accesses the

I have in mind a few more improvements that can increase page loading speed. Perhaps the most interesting of them are using a service worker to intercept network requests, which will allow the site to work even without an internet connection, and caching data using CDN, which will allow users located far from my server in San Francisco to save time, necessary to appeal to him. These are valuable ideas, but they are not particularly important in my case, since this is a small static site that plays the role of an online resume.

The above optimization allowed us to reduce the load time of my page from 8 seconds to about 350 milliseconds when it was first accessed, and, on subsequent accesses, to an incredible 200 milliseconds. As you can see, it took not so much time and effort to achieve such improvements. And, by the way, for those who are interested in optimizing websites, I recommend reading this .

Dear readers! How do you optimize your sites?

The site did not use any dynamic mechanisms. It had a bit of animation and a responsive design, but its content almost never changed. The author says that what he saw after a quick analysis literally horrified him. The DOMContentLoaded event took about 4 seconds to fire, and the full page load took 6.8 seconds. The load involved 20 requests and transferred about a megabyte of data. And this was a static site. Jonluca then realized that he had considered his site incredibly fast only because he was used to a low-latency gigabit connection, over which he reached a server in San Francisco from Los Angeles. Now he was in Italy, on an 8 Mbit/s connection, and that changed the picture completely.
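A rough back-of-the-envelope check helps show why the connection mattered so much. The sketch below estimates only the pure transfer time for the figures quoted above (about a megabyte over an 8 Mbit/s link). The function name and the assumptions that the link is fully utilized and that a megabyte is 1,000,000 bytes are illustrative, not measurements from the article.

```javascript
// Estimate the pure transfer time of a payload over a link, ignoring latency.
// Assumption: the link is fully utilized for the whole transfer.
function transferSeconds(payloadBytes, linkBitsPerSecond) {
  return (payloadBytes * 8) / linkBitsPerSecond;
}

const payloadBytes = 1000000;   // "about a megabyte", taken as 1,000,000 bytes
const slowLink = 8000000;       // 8 Mbit/s
const gigabitLink = 1000000000; // 1 Gbit/s

console.log(transferSeconds(payloadBytes, slowLink));    // 1
console.log(transferSeconds(payloadBytes, gigabitLink)); // 0.008
```

Under these assumptions, raw bandwidth accounts for roughly one second of the 6.8-second load, which suggests that much of the remaining time went to round trips and blocking requests rather than to bytes on the wire.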

In this article, Jonluca De Caro will talk about how he managed to speed up his static website tenfold.

Overview

This is how the site looked, in terms of the number of requests needed to generate it, the load time and the amount of downloaded data, when it became clear that it had to be optimized.

Non-optimized site data

When I saw all this, I set about optimizing my site for the first time. Until then, whenever I needed to add a library or anything else to the site, I simply loaded it with a src="…" construct. I paid no attention to performance and did not think about anything that could affect it, including caching, inlining code, or lazy loading.

Then I started looking for people who had run into something similar. Unfortunately, publications on optimizing static sites go out of date quickly. For example, recommendations from 2010-2011 either discuss the use of libraries or assume that no libraries are used at all. Going through these materials, I also found that some of them simply repeat the same sets of rules over and over.

However, I did find two excellent sources of information: High Performance Browser Networking and a publication by Dan Luu. Although I did not go as far as Dan did in stripping down the site's content and formatting, I managed to speed up the loading of my page about tenfold. Now the DOMContentLoaded event fires in about a fifth of a second, and the full page load takes 388 ms (these figures are not perfectly accurate, since they are affected by the lazy loading discussed below).

Optimization results

Now let's talk about how to achieve such results.

Site analysis

The first step in the optimization process was profiling the site. I wanted to understand what exactly took the most time and how best to parallelize the work. I profiled the site with various tools and checked how it loads from different locations around the world. Here is the list of resources I used:

- https://tools.pingdom.com

- http://www.webpagetest.org/

- https://tools.keycdn.com/speed

- https://developers.google.com/web/tools/lighthouse/

- https://developers.google.com/speed/pagespeed/insights/

- https://webspeedtest.cloudinary.com/

Some of these resources offered recommendations for improving the site. However, when a static site needs several dozen requests to render, there is a lot you can do, from the GIF tricks of the 90s to getting rid of unused resources (for example, I was loading 6 fonts although I used only some of them).

The timeline for a site very similar to mine. I didn’t take a screenshot in time for my site, but what’s shown here looks almost like what I saw several months ago when analyzing my site

I wanted to optimize everything that I can influence - from the content of the site and the speed of the scripts to the settings of the web server (Nginx in my case) and DNS.

Optimization

▍Minification and bundling of resources

The first thing I noticed was that the page made many requests for CSS and JS files (without persistent HTTP connections). These requests went to a variety of hosts, some of them over HTTPS. As a result, the load time included the time spent on multiple round trips to content delivery networks or ordinary servers and on waiting for their responses. In addition, some JS files requested other files, which led to the blocking cascade shown in the figure above.
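The effect of such a cascade can be sketched with a small model in which a file can be requested only after the file that references it has finished loading. The file names and timings below are hypothetical and chosen only to illustrate the idea. They are not taken from the author's site.

```javascript
// Compute when each resource finishes if it can only be requested after the
// resource that references it has finished loading.
function finishTimes(resources) {
  const byName = new Map(resources.map((r) => [r.name, r]));
  const done = new Map();

  function finish(name) {
    if (done.has(name)) return done.get(name);
    const r = byName.get(name);
    // A resource starts when its parent (if any) has finished.
    const start = r.requestedBy ? finish(r.requestedBy) : 0;
    const end = start + r.fetchMs;
    done.set(name, end);
    return end;
  }

  resources.forEach((r) => finish(r.name));
  return done;
}

// Hypothetical chain: each script is discovered only after the previous one loads.
const cascade = [
  { name: "index.html", requestedBy: null, fetchMs: 200 },
  { name: "app.js", requestedBy: "index.html", fetchMs: 200 },
  { name: "plugin.js", requestedBy: "app.js", fetchMs: 200 },
  { name: "plugin-data.js", requestedBy: "plugin.js", fetchMs: 200 },
];

// Hypothetical bundled version: one larger file, requested directly by the page.
const bundled = [
  { name: "index.html", requestedBy: null, fetchMs: 200 },
  { name: "bundle.js", requestedBy: "index.html", fetchMs: 300 },
];

const last = (times) => Math.max(...times.values());
console.log(last(finishTimes(cascade))); // 800
console.log(last(finishTimes(bundled))); // 500
```

Even though the bundle takes longer to fetch than any single file in this model, removing the chain of dependent requests shortens the total time.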

To combine everything needed into a single JS file, I used webpack. Every time I changed the content of the page, webpack automatically minified all the dependencies and bundled them into one file. Here is the content of my webpack.config.js file:

```javascript
const UglifyJsPlugin = require('uglifyjs-webpack-plugin');
const ZopfliPlugin = require("zopfli-webpack-plugin");

module.exports = {
  entry: './js/app.js',
  mode: 'production',
  output: {
    path: __dirname + '/dist',
    filename: 'bundle.js'
  },
  module: {
    rules: [{
      // Pull CSS into the bundle and inject it into the page at runtime
      test: /\.css$/,
      loaders: ['style-loader', 'css-loader']
    }, {
      // Inline fonts and images into the bundle as data URLs
      test: /(fonts|images)/,
      loaders: ['url-loader']
    }]
  },
  plugins: [
    // Minify the JavaScript
    new UglifyJsPlugin({
      test: /\.js($|\?)/i
    }),
    // Write precompressed .gz copies of JS and HTML assets larger than 10240 bytes
    new ZopfliPlugin({
      asset: "[path].gz[query]",
      algorithm: "zopfli",
      test: /\.(js|html)$/,
      threshold: 10240,
      minRatio: 0.8
    })
  ]
};
```

I experimented with various options and ended up with a single bundle.js file, which is loaded in a blocking way in the <head> section of my site. The file was 829 KB in size and included everything except images (fonts, CSS, all libraries and dependencies, and my own JS code). Most of that, 724 of the 829 KB, was the Font Awesome fonts.

Next, I went through the Font Awesome library and removed everything except the three icons I used: fa-github, fa-envelope, and fa-code. To extract only the icons I needed, I used the fontello service. As a result, I managed to reduce the size of the downloaded file to just 94 KB.

The way the site is built, it cannot be displayed correctly if the browser processes only HTML and CSS, so I did not try to do anything about the blocking load of bundle.js. The load time was approximately 118 ms, more than an order of magnitude better than before.

This approach had several additional benefits. I no longer had to contact third-party servers or content delivery networks, so when my page loads in a browser, the following tasks are no longer needed:

- Perform DNS queries to third-party resources.

- Perform HTTP connection setup procedures.

- Perform a full load of required data.

While using a CDN and distributed caching may make sense for large-scale web projects, my small static site gains nothing from CDN resources. Dropping them took some effort on my part but saved roughly another hundred milliseconds, which, in my opinion, justifies the effort.
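To see where a saving of this order could come from, here is a minimal sketch of the cost of contacting one extra origin before the first byte of a response arrives. The round-trip counts are assumptions (one for the TCP handshake, two for a full TLS 1.2 handshake, one for the HTTP request itself), and the timings are hypothetical rather than measured on the author's site.

```javascript
// Rough cost of contacting one additional origin before the first byte of a
// response can arrive. Assumptions: 1 round trip for the TCP handshake,
// 2 for a full TLS 1.2 handshake, and 1 for the HTTP request and response.
function newOriginOverheadMs({ dnsMs, rttMs, tls }) {
  const tcpRoundTrips = 1;
  const tlsRoundTrips = tls ? 2 : 0;
  const requestRoundTrips = 1;
  return dnsMs + (tcpRoundTrips + tlsRoundTrips + requestRoundTrips) * rttMs;
}

// Hypothetical numbers: 20 ms DNS lookup, 30 ms round trip to a nearby CDN edge.
console.log(newOriginOverheadMs({ dnsMs: 20, rttMs: 30, tls: true }));  // 140
console.log(newOriginOverheadMs({ dnsMs: 20, rttMs: 30, tls: false })); // 80
```

With TLS 1.3 or resumed sessions the handshake takes fewer round trips, so real figures will vary. The point is only that each extra origin adds a fixed latency cost regardless of file size.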

▍Image compression

Continuing to analyze the site, I discovered that the page was loading my 8-megabyte portrait and displaying it at 10% of its original width and height. This is not just a lack of optimization. Things like this show a developer's almost complete indifference to the user's bandwidth.

Photo optimization
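The waste in shipping the oversized portrait described above can be estimated with simple arithmetic: an image shown at 10% of its width and height needs only 1% of the original pixels. The sketch below makes that calculation explicit. The assumption that file size scales linearly with pixel count is a simplification, since real JPEG sizes depend on content and quality settings.

```javascript
// Fraction of the original pixels needed when an image is displayed at a
// given fraction of its original width and height.
function pixelFraction(scale) {
  return scale * scale;
}

// Very rough size estimate, assuming file size scales with pixel count.
function estimatedBytes(originalBytes, scale) {
  return Math.round(originalBytes * pixelFraction(scale));
}

const originalBytes = 8 * 1000 * 1000; // the 8-megabyte portrait
console.log(estimatedBytes(originalBytes, 0.1)); // 80000
```

In other words, under this assumption a correctly sized image would be on the order of 80 KB instead of 8 MB, before any additional compression.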

I compressed all images used on the site using https://webspeedtest.cloudinary.com/ . There I was advised to use the webp format, but I wanted my page to be compatible with as many browsers as possible, so I settled on the jpeg format. You can customize everything so that webp images are used only in browsers that support this format, but I strove for maximum simplicity and decided that the benefits from the additional level of abstraction associated with images are not worth the effort spent on achieving these benefits.
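For readers who do want to serve webp only to browsers that support it, one possible approach is to choose the file on the server based on the request's Accept header. The author did not do this. The function below is a hypothetical sketch, and the headshot base name is only an example.

```javascript
// Pick an image variant based on the request's Accept header.
// Browsers that support WebP list "image/webp" in Accept for image requests.
function pickImageVariant(acceptHeader, baseName) {
  const types = (acceptHeader || "")
    .split(",")
    .map((part) => part.split(";")[0].trim().toLowerCase());
  const extension = types.includes("image/webp") ? "webp" : "jpg";
  return `${baseName}.${extension}`;
}

console.log(pickImageVariant("image/avif,image/webp,image/*,*/*;q=0.8", "headshot")); // headshot.webp
console.log(pickImageVariant("image/png,image/*;q=0.8,*/*;q=0.5", "headshot"));       // headshot.jpg
```

This is the extra layer of abstraction the author chose to avoid: it requires keeping two copies of every image and making sure caches vary on the Accept header.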

▍Improving the web server: HTTP/2, TLS and more

When I started optimizing the server, I immediately switched to HTTPS. At the very beginning I was running plain Nginx on port 80, which simply served the files from /var/www/html. Here is my original nginx.conf file:

```nginx
server {
    listen 80;
    server_name jonlu.ca www.jonlu.ca;

    root /var/www/html;
    index index.html index.htm;

    # Block access to the Git repository
    location ~ /.git/ {
        deny all;
    }

    location ~ / {
        allow all;
    }
}
```

So I started by configuring HTTPS and redirecting all HTTP requests to HTTPS. I obtained a TLS certificate from Let's Encrypt (a wonderful organization that, moreover, recently began issuing wildcard certificates). Here is the updated nginx.conf:

```nginx
server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name jonlu.ca www.jonlu.ca;

    root /var/www/html;
    index index.html index.htm;

    location ~ /.git {
        deny all;
    }

    location / {
        allow all;
    }

    ssl_certificate /etc/letsencrypt/live/jonlu.ca/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/jonlu.ca/privkey.pem; # managed by Certbot
}
```

With the http2 parameter added, Nginx can take advantage of the most useful features of HTTP/2. Note that, in practice, using HTTP/2 (which grew out of SPDY) requires HTTPS, since browsers support it only over TLS. Details about this can be found here. In addition, constructs like http2_push images/Headshot.jpg; let you use HTTP/2 server push.

Please note that using gzip together with TLS increases the risk of BREACH attacks on a web resource. In my case, since this is a static site, the risk of such an attack is very low, so I use compression without worrying.

▍Using caching and compression directives

What else can be done using only Nginx? The first thing that comes to mind is the use of caching and compression directives.
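To get a feel for what compression does to text assets, here is a small sketch using Node's built-in zlib module. The HTML content is made up for the demonstration, and the compression level of 6 is chosen to match the gzip_comp_level value in the author's configuration. The actual ratio depends heavily on the content, so no particular figure should be expected.

```javascript
const zlib = require("zlib");

// Build a repetitive HTML document, similar in spirit to real markup
// (the content is made up for this demonstration).
const html =
  "<!doctype html><html><body>" +
  '<div class="item"><a href="#">Link</a></div>'.repeat(300) +
  "</body></html>";

const original = Buffer.from(html, "utf8");
const compressed = zlib.gzipSync(original, { level: 6 });

// Print the sizes before and after compression.
console.log(original.length, compressed.length);

// Decompressing restores the original bytes exactly.
const restored = zlib.gunzipSync(compressed);
console.log(restored.equals(original)); // true
```

Markup compresses well because it is highly repetitive, which is why enabling compression for HTML, CSS, and JS is usually one of the cheapest improvements available.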

Previously, my server sent clients plain, uncompressed HTML. With a single line, gzip on;, I was able to reduce the size of the transmitted data from 16000 bytes to 8000, a 50% reduction.

In fact, this figure can be improved further with the Nginx gzip_static on; directive, which makes the server look for precompressed versions of files. This fits the webpack configuration above: ZopfliPlugin can be used to precompress all files at build time. This saves computing resources and allows maximum compression without sacrificing speed.

In addition, my site changes quite rarely, so I wanted its resources to be cached for as long as possible. That way, a user who visits my site several times does not need to re-download everything (in particular, bundle.js).

Here is the server configuration (nginx.conf) I eventually arrived at:

```nginx
worker_processes auto;
pid /run/nginx.pid;
worker_rlimit_nofile 30000;

events {
    worker_connections 65535;
    multi_accept on;
    use epoll;
}

http {
    ##
    # Basic Settings
    ##

    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;

    # Turn off server tokens specifying nginx version
    server_tokens off;

    open_file_cache max=200000 inactive=20s;
    open_file_cache_valid 30s;
    open_file_cache_min_uses 2;
    open_file_cache_errors on;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    add_header Referrer-Policy "no-referrer";

    ##
    # SSL Settings
    ##

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
    ssl_prefer_server_ciphers on;
    ssl_dhparam /location/to/dhparam.pem;

    ssl_ciphers 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA';

    ssl_session_timeout 1d;
    ssl_session_cache shared:SSL:50m;
    ssl_stapling on;
    ssl_stapling_verify on;
    add_header Strict-Transport-Security 'max-age=31536000; includeSubDomains; preload';

    ssl_certificate /location/to/fullchain.pem;
    ssl_certificate_key /location/to/privkey.pem;

    ##
    # Logging Settings
    ##

    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;

    ##
    # Gzip Settings
    ##

    gzip on;
    gzip_disable "msie6";

    gzip_vary on;
    gzip_proxied any;
    gzip_comp_level 6;
    gzip_buffers 16 8k;
    gzip_http_version 1.1;
    gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript application/vnd.ms-fontobject application/x-font-ttf font/opentype image/svg+xml image/x-icon;
    gzip_min_length 256;

    ##
    # Virtual Host Configs
    ##

    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;
}
```

Please note that the TCP settings, gzip directives, and file-caching options in this configuration are not discussed in detail here. If you want to know more about them, take a look at this material on setting up Nginx.

And here is the server configuration block from my nginx.conf:

```nginx
server {
    listen 443 ssl http2;
    server_name jonlu.ca www.jonlu.ca;

    root /var/www/html;
    index index.html index.htm;

    location ~ /.git/ {
        deny all;
    }

    location ~* /(images|js|css|fonts|assets|dist) {
        gzip_static on; # Tells nginx to look for compressed versions of all requested files first
        expires 15d; # 15 day expiration for all static assets
    }
}
```

▍Lazy loading

Finally, I made one small change to the site that noticeably improved the situation. The page has 5 images that are visible only after clicking the corresponding thumbnails. These images were being loaded along with the rest of the site's content, because their paths were in <img align="center" src="…"> tags.

I wrote a small script that modifies the corresponding attribute of each element with the lazyload class. As a result, these images are now loaded only when the corresponding thumbnails are clicked. Here is the lazyload.js file:

```javascript
$(document).ready(function() {
    $("#about").click(function() {
        $('#about > .lazyload').each(function() {
            // Set the img src from its data-src attribute
            $(this).attr('src', $(this).attr('data-src'));
        });
    });

    $("#articles").click(function() {
        $('#articles > .lazyload').each(function() {
            // Set the img src from its data-src attribute
            $(this).attr('src', $(this).attr('data-src'));
        });
    });
});
```

This script goes through the <img> elements and sets <img src="…"> based on <img data-src="…">, which loads the images when they are needed rather than while the main site content is loading.

Further improvements

I have a few more improvements in mind that could speed up page loading. Perhaps the most interesting are using a service worker to intercept network requests, which would let the site work even without an internet connection, and caching content on a CDN, which would save users far from my server in San Francisco the time needed to reach it. These are valuable ideas, but they are not particularly important in my case, since this is a small static site that serves as an online resume.

Results

These optimizations reduced the load time of my page from 8 seconds to about 350 milliseconds on the first visit and to an incredible 200 milliseconds on subsequent visits. As you can see, achieving these improvements did not take much time or effort. And, by the way, for those interested in optimizing websites, I recommend reading this.

Dear readers! How do you optimize your sites?

Source: https://habr.com/ru/post/352030/
