How to stay at the top when search algorithms change (a guide for novice SEOs)

After each update to a search engine's algorithm, some SEO specialists say that it was a failure. There are over 200 site ranking factors, so it is reasonable to assume that there are a great many possible updates. In this article, we describe how search algorithms work, why they change constantly, and how to react to the changes.

They are based on formulas that determine the position of a site in search results for a specific query. They are aimed at selecting the web pages that most closely match the phrase entered by the user. However, they try not to take into account irrelevant sites and those that use different types of spam.

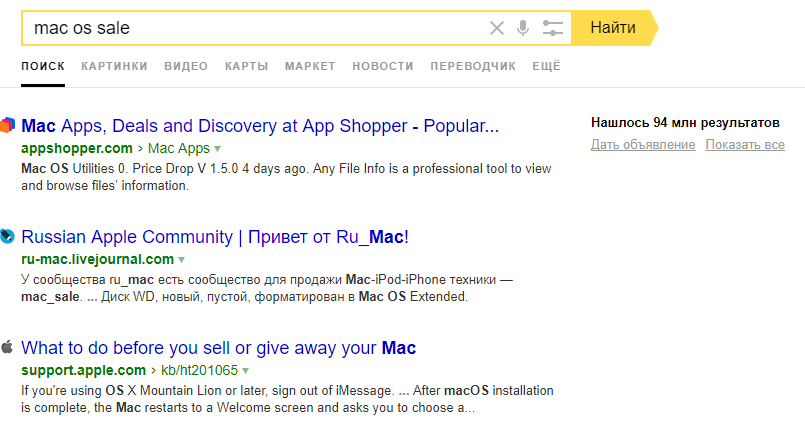

Search engines look at the texts of the site, in particular, the presence of keywords in them. Content is one of the most important factors when deciding on a site’s ranking. Yandex and Google have different algorithms, and therefore the search results for the same phrase may differ. Yet the basic ranking rules laid down in the algorithm are the same. For example, they both follow the originality of the content.

Initially, when few sites were registered on the Internet, search engines took very few parameters into account: headers, keywords, text size, etc. But soon the owners of web resources began to actively use unfair promotion methods. This made the search engines develop algorithms in the direction of spam tracking. As a result, now it is often spammers with their new and new methods that dictate the development trends of search algorithms.

')

The main difficulty in SEO-site optimization is that Yandex and Google do not talk about the principles of their work. Thus, we have only a rough idea of the parameters that they take into account. This information is based on the findings of SEO-specialists who are constantly trying to determine what affects the ranking of sites.

For example, it became known that search engines determine the most visited pages of a site and calculate the time that people spend on them. Systems assume that if a user is on a page for a long time, then the published information is useful for him.

Google’s algorithms were the first to take into account the number of links to other resources leading to a specific site. Innovation allowed to use the natural development of the Internet to determine the quality of sites and their relevance. This was one of the reasons why Google became the most popular search engine in the world.

Then he began to take into account the relevance of information and its relation to a specific location. Since 2001, the system has learned to distinguish information sites from selling. Then she began to attach greater importance to links that are posted on higher-quality and popular resources.

In 2003, Google began to pay attention to too frequent use of key phrases in texts. This innovation has significantly complicated the work of SEO-optimizers and forced them to look for new methods of promotion.

Soon, Google began to take into account the quality of the ranked sites.

Yandex talks about how its algorithms work more than Google does. Since 2007, he began to publish information about the changes in his blog.

For example, in 2008, the company announced that the search engine began to understand the abbreviations and learned to translate the words in the query. At the same time, Yandex began working with foreign resources, as a result of which Russian-language sites became more difficult to occupy high positions on requests with foreign words.

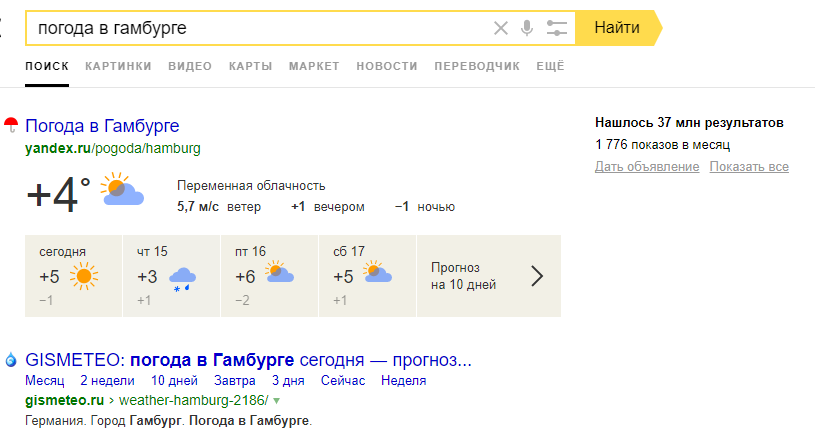

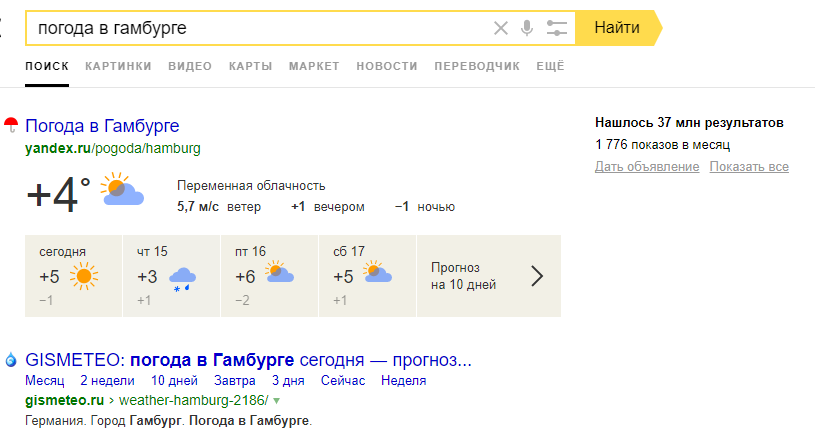

In the same year, users began to receive answers to some queries directly on the results pages. For example, to find out the weather, it is not necessary to go to the site.

Yandex drew attention to the originality of the texts.

Further, the search engine began to better process requests with negative words, to understand when the user made a grammatical error. In addition, SEO experts concluded that Yandex began to give preference to older sites, raising their rating.

In most cases, when we talk about updates, we mean changes in the main search engine algorithm. It consists of traditional ranking factors, as well as algorithms designed to track and remove spam.

Therefore, when there is a change in the algorithm, and this happens almost daily, the search engine can make many adjustments at once. And they can seriously affect the ranking of your site on the page issuance.

Google updates are designed to help users. However, this does not mean that they help site owners.

There are two types of updates. Some of them concern the UX, that is, the usability of the site: advertising, pop-up windows, download speed, etc. These include, for example, updates about catching spam and evaluating the usability of the mobile version of the site.

Other updates are necessary for evaluating site content: how valuable it is to the user. Low-quality content has the following characteristics:

We can say that often updates concern the quality of the content, because problems with the perception of information are closely related to its content.

Requirements for sites are quite extensive, which causes dissatisfaction with SEO-optimizers. Some believe that Google defends the power of its brand and is biased against small sites.

To begin with, determine if they contribute to raising or lowering your rating. If the rating fell, it could not happen just like this: it means something is done wrong.

Many algorithms are aimed at tracking spam. Yet it is possible that the site loses its position, while not doing anything wrong. Then the reason usually lies in changing the criteria for selecting sites for TOP. In other words, instead of downgrading the site for any errors, the algorithm can promote other resources that do something better.

Therefore, you need to constantly analyze why websites bypass you. For example, if a user enters an information request and your site is commercial, then the page with informational content will be higher than your site.

Here are some reasons why competitors may overtake you:

In general, do not worry if your site is still on the first page of search results. But if he was on the second or farther away, this indicates the presence of serious problems. Sometimes the reason is the SEO changes you made recently.

In other cases, a downgrade may be associated with the introduction of a new ranking factor. It is important to know the difference between innovations that contribute to the promotion of other pages, and innovations that lead to the loss of the position of your site. Perhaps your SEO promotion strategy needs to be updated.

Are the search algorithms wrong?

Yes, sometimes the algorithm goes wrong. But this happens very rarely.

Algorithm errors are usually visible in long search queries. Study their results to better understand the innovations in the algorithm. An error can be considered cases where the results page does not clearly correspond to the query.

Of course, first you will come up with the most obvious explanation, but it is not always true. If you decide that the search engine prefers certain brands or the problem lies solely in the UX site, then dig a little deeper. You might find a better explanation.

It is not so easy to understand the principles of the algorithms, and to track their changes, you must be constantly included in the information field. However, not every company has an SEO specialist, often the task of promotion in search engines is given to freelancing. Then you need to adjust SEO in such a way that the position of the site remains consistently high regardless of the innovations of search engines.

Here are some ways to do this.

1. Properly use promotion with the help of links.

The main purpose of Yandex and Google innovations is to protect against spam. For example, before SEO-algorithms raised higher sites that were most links to other web pages. As a result, this kind of spam has appeared, like commenting blogs. Fake posts usually start with a ridiculous cliche greeting like “What a wonderful and informative blog you have” and end with a link to a page whose content does not correspond to this blog.

As a result, search engines began to actively introduce ranking factors, allowing to take into account this type of spam. Now links to your site are still important, but obviously unnatural comments can damage your SEO promotion.

2. Interact with the audience.

Communicating with potential buyers will not only help your SEO, but also directly increase sales. Interaction means advertising, PR, SMM and other ways to “hook” users on the Internet.

The natural dissemination of information about your brand leads to an increase in links and links to your website. Thus, using different promotion methods you win twice: in terms of SEO and direct sales.

3. Clearly define your SEO goals.

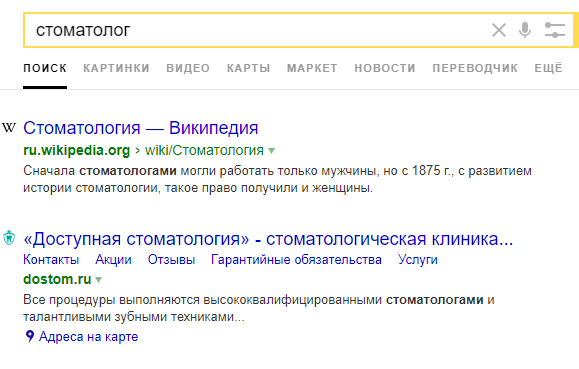

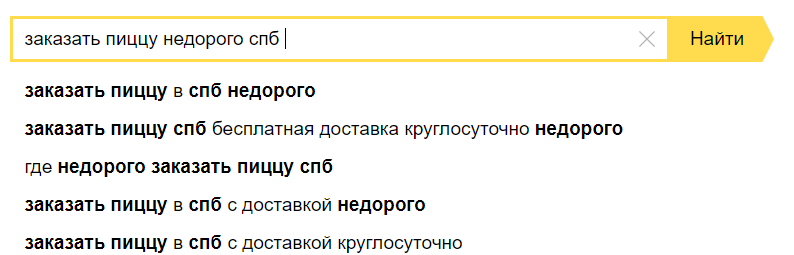

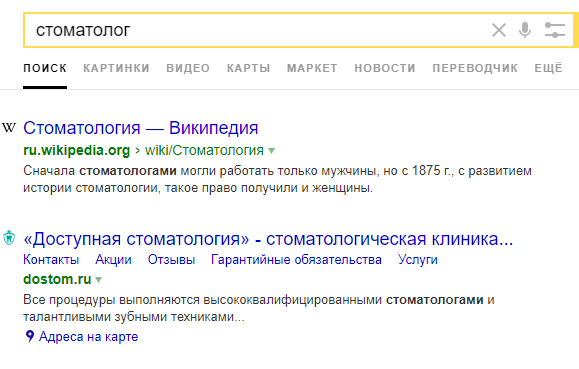

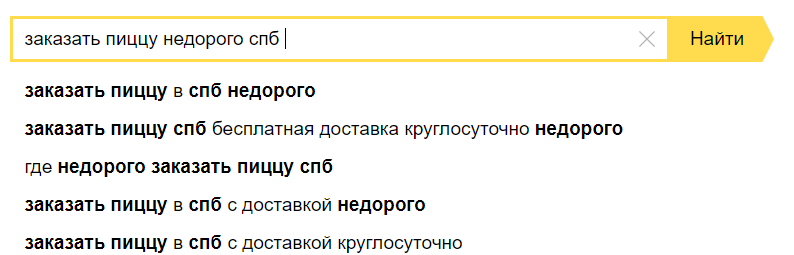

Often, representatives of companies believe that promotion in search engines is necessary in order to occupy high positions on single-word requests, such as “lawyer”, “dentistry”, “sushi”. However, it is not. Users are increasingly introducing long search phrases, for example: “inexpensive coffee shop in Samara”. In addition, search engines give hints that can make the final query even longer.

It is important to occupy high positions precisely for such narrow low- and mid-frequency queries.

The basic principle of working with SEO, which will not be affected by changes in algorithms, is to focus not on increasing the number of clicks to the site, but on attracting real customers. That is why the development of SEO should not be reduced to just buttons and texts written specifically for algorithms. Of course, this cannot be done without, but such elements are meaningless if they do not lead buyers.

SEO-optimizers should not only select key phrases for each individual page of the site and take into account the prompts that search engine users offer, but also understand the competitive advantages of the company, which must be used when designing and filling the site.

One of the most popular mistakes SEO specialists make is to select keywords manually, without using special programs. As a result, they miss some valuable requests and, at the same time, leave inefficient ones. This, of course, still improves the position of the site, but only slightly.

The use of automated services can increase the effectiveness and speed of collecting the semantic core. With their help, the formation process will not take more than two minutes. The optimizer will just have to make sure that all queries are suitable for the company's goals.

Such programs include, for example, SEMrush and Key Collector. These services are paid - access to them is provided for a period of one month.

The second common mistake is inconvenient for the user site structure. For example, there may be an insufficient number of filters, tags and subcategories. This affects the usability of the search by product. When the process is too complicated for the user, your potential client will most likely go to another site.

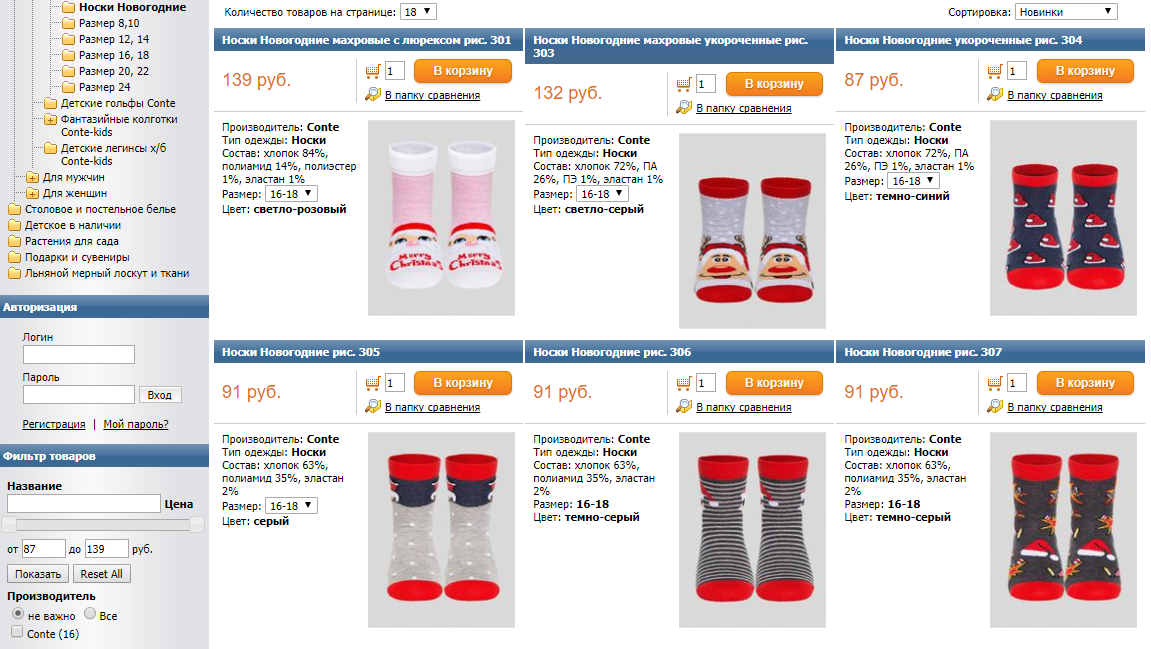

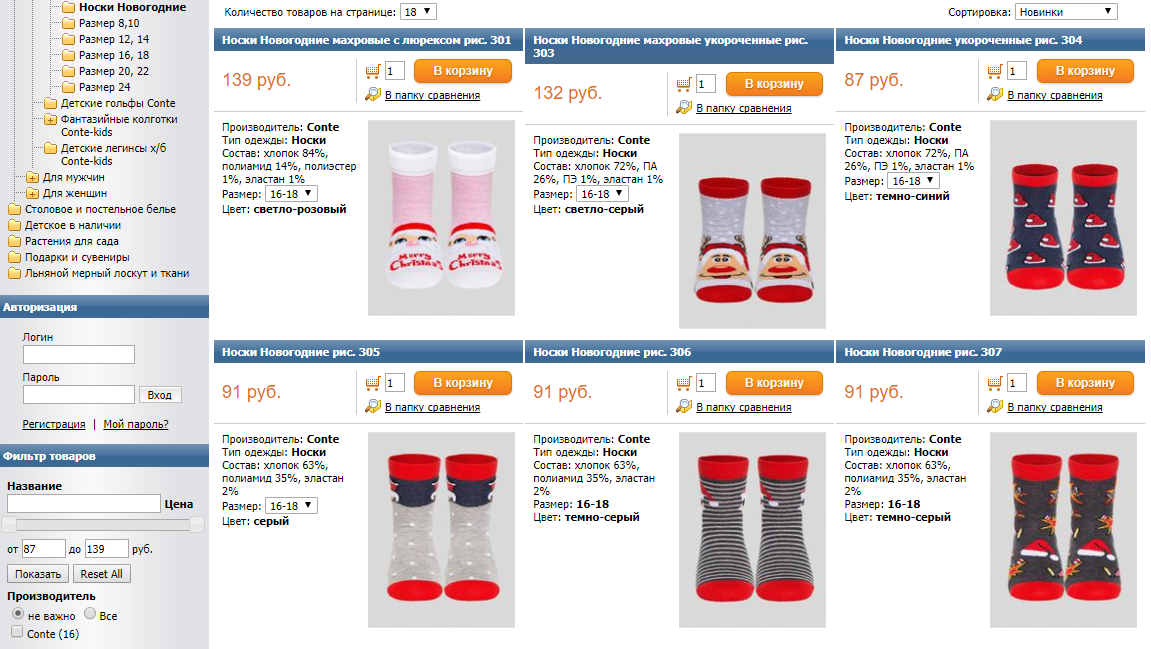

So, if two hundred pairs of socks are laid out in your online store, then you should not add all of them to one category “Socks” without the ability to filter. Do not force users to scroll through your entire range for a long time. It is much more convenient for them to have a separate page for highly specialized queries. For example, the search phrase “Christmas socks size 36” should correspond to a page with several matching pairs.

Many sites already use this technique, and users are used to finding the most detailed and convenient answer to their request. Now people quickly understand when the structure of the site is poorly thought out.

Although it seems that search engines, changing their algorithms every day, try to do everything to complicate the lives of SEO specialists, this is not so. The changes, for the most part, are due to the fight against spam, and therefore help sites promoted using honest methods. Innovations are also aimed at improving the ranking of high-quality sites, which is an excellent reason for you to become better.

Regardless of changes in the basis of algorithms, the same principles always lie. Therefore, setting quality SEO once, you can almost forget about the constant innovations.

What are search algorithms?

Search algorithms are based on formulas that determine a site's position in the search results for a specific query. Their goal is to select the web pages that most closely match the phrase the user entered, while leaving out irrelevant sites and sites that rely on spam of various kinds.

Search engines analyze a site's texts, in particular the presence of keywords in them. Content is one of the most important factors in deciding a site’s ranking. Yandex and Google use different algorithms, so the search results for the same phrase may differ. Yet the basic ranking rules built into the algorithms are the same. For example, both check the originality of the content.

In the early days, when there were few sites on the Internet, search engines took very few parameters into account: headings, keywords, text length, and so on. But site owners soon began to actively use unfair promotion methods, which pushed the search engines to develop their algorithms toward spam detection. As a result, it is now often the spammers, with their ever-changing methods, who dictate the direction in which search algorithms develop.

The main difficulty in search engine optimization is that Yandex and Google do not disclose the principles behind their algorithms. We therefore have only a rough idea of the parameters they take into account. This information comes from the findings of SEO specialists, who are constantly trying to determine what affects site ranking.

For example, it has become known that search engines identify the most visited pages of a site and measure the time people spend on them. The assumption is that if a user stays on a page for a long time, the information published there is useful to them.
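To get a feel for the signal described above, you can compute the same two numbers from your own analytics export. The sketch below is only an illustration: the `page_stats` function and the `(url, seconds)` record layout are assumptions made for this example, not the way any search engine actually measures visits.

```python
from collections import defaultdict

def page_stats(visits):
    """Aggregate (url, seconds_on_page) records.

    Returns {url: (visit_count, average_seconds)}.
    The record layout is an assumption made for this example.
    """
    totals = defaultdict(lambda: [0, 0.0])  # url -> [visit count, total seconds]
    for url, seconds in visits:
        totals[url][0] += 1
        totals[url][1] += seconds
    return {url: (count, total / count) for url, (count, total) in totals.items()}

# Made-up sample data: two visits to one page, one to another.
visits = [("/socks", 10), ("/socks", 30), ("/contacts", 5)]
stats = page_stats(visits)
```

Sorting `stats` by visit count or by average time shows which pages hold visitors' attention and which ones they leave quickly.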

Comparison of Google and Yandex algorithms

Google’s algorithms were the first to take into account the number of links from other resources that lead to a given site. This innovation made it possible to use the natural growth of the Internet to judge the quality and relevance of sites. It was one of the reasons Google became the most popular search engine in the world.

Google then began to take into account the relevance of information and its relation to a specific location. Since 2001, the system has been able to distinguish informational sites from commercial ones. Later it began to give more weight to links placed on higher-quality, more popular resources.

In 2003, Google began to pay attention to overly frequent use of key phrases in texts. This change significantly complicated the work of SEO specialists and forced them to look for new promotion methods.
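How often a key phrase occurs in a text is easy to estimate yourself. The following is a minimal sketch, assuming a simple word-based definition of density (the share of all words in the text that belong to occurrences of the phrase). The `keyword_density` function is hypothetical, and neither Google nor Yandex publishes a formula or threshold of this kind.

```python
import re

def keyword_density(text: str, phrase: str) -> float:
    """Share of the words in `text` taken up by occurrences of `phrase` (0.0 to 1.0)."""
    words = re.findall(r"\w+", text.lower())
    target = re.findall(r"\w+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the phrase occurs as a run of consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)

sample = "Buy socks online. Our socks are the best socks. Socks, socks, socks!"
density = keyword_density(sample, "socks")  # 6 of the 12 words, so 0.5
```

A value this high is a sign that the text was written for an algorithm rather than for a reader.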

Soon afterwards, Google began to take into account the quality of the sites it ranks.

Yandex says more about how its algorithms work than Google does. Since 2007, it has published information about changes on its blog.

For example, in 2008 the company announced that the search engine had begun to understand abbreviations and had learned to translate words in a query. At the same time, Yandex began working with foreign resources, which made it harder for Russian-language sites to rank highly for queries containing foreign words.

In the same year, users began to receive answers to some queries directly on the results page. For example, to check the weather, there is no need to go to a separate site.

Yandex also began to pay attention to the originality of texts.

Later, the search engine learned to handle queries with negative words better and to recognize when the user has made a grammatical error. In addition, SEO experts concluded that Yandex began to favor older sites by ranking them higher.

Types of changes in algorithms

In most cases, when we talk about updates, we mean changes to a search engine's main algorithm. It consists of traditional ranking factors as well as algorithms designed to detect and remove spam.

Therefore, when the algorithm changes, and this happens almost daily, the search engine can make many adjustments at once. They can seriously affect your site's position on the results page.

Google updates are designed to help users. However, this does not mean that they help site owners.

There are two types of updates. Some concern UX, that is, the usability of the site: advertising, pop-up windows, loading speed, and so on. These include, for example, updates that target spam and updates that evaluate the usability of the mobile version of a site.

Other updates are needed to evaluate site content, that is, how valuable it is to the user. Low-quality content has the following characteristics:

- The same text is repeated several times on the page.

- It does not help the user find an answer to the query.

- It pushes the visitor to buy goods by deceptive means.

- It often contains false information.

- It is not authentic.

- It has no clear structure: no division into sections and paragraphs.

- It does not match the interests of the site's target audience.

- It has low uniqueness.
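Two items on this list, repeated text and low uniqueness, can be checked mechanically before publishing. The sketch below assumes a word-shingle comparison, a common technique for spotting borrowed text. It does not reproduce how search engines measure uniqueness, and the function names are made up for the example.

```python
import re

def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """All runs of `size` consecutive words in the text, lowercased."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def uniqueness(text: str, reference: str, size: int = 3) -> float:
    """Fraction of the text's shingles that do not occur in the reference text."""
    own = shingles(text, size)
    if not own:
        return 1.0  # too short to compare, treated as unique
    return len(own - shingles(reference, size)) / len(own)

def repeated_sentences(text: str) -> list[str]:
    """Normalized sentences that occur more than once, in order of first repeat."""
    seen, repeats = set(), []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        key = " ".join(re.findall(r"\w+", sentence.lower()))
        if not key:
            continue
        if key in seen and key not in repeats:
            repeats.append(key)
        seen.add(key)
    return repeats
```

For example, `repeated_sentences("Buy now. Great price! Buy now.")` returns `["buy now"]`, and `uniqueness` returns 0.0 for a text copied word for word from the reference.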

In practice, updates most often concern content quality, because problems with how information is perceived are closely tied to the content itself.

The requirements for sites are quite extensive, which causes dissatisfaction among SEO specialists. Some believe that Google protects the strength of its own brand and is biased against small sites.

How should you respond to search engine updates?

To begin with, determine whether the updates raise or lower your ranking. If your ranking has fallen, this does not happen for no reason: as a rule, it means something is being done wrong.

Many algorithms are aimed at detecting spam. Yet a site can lose its position without doing anything wrong. In that case, the reason usually lies in a change in the criteria for selecting sites for the top results. In other words, instead of demoting your site for errors, the algorithm may promote other resources that do something better.

Therefore, you need to constantly analyze why other websites overtake you. For example, if a user enters an informational query and your site is commercial, a page with informational content will rank above yours.

Here are some reasons why competitors may overtake you:

- A more accurate match to the user's query.

- A large number of links to the page from other resources.

- A recent update to the page that made it more relevant and valuable.

In general, there is no need to worry if your site is still on the first page of search results. But if it has dropped to the second page or further, this points to serious problems. Sometimes the cause is an SEO change you made recently.

In other cases, a drop may be linked to the introduction of a new ranking factor. It is important to distinguish innovations that promote other pages from innovations that cause your own site to lose its position. Your SEO strategy may need to be updated.

Do search algorithms make mistakes?

Yes, sometimes the algorithm gets it wrong, but this happens very rarely.

Algorithm errors usually show up in long search queries. Study their results to better understand what has changed in the algorithm. A case where the results page clearly does not match the query can be considered an error.

Of course, the most obvious explanation will come to mind first, but it is not always the right one. If you have decided that the search engine favors certain brands, or that the problem lies solely in the site's UX, dig a little deeper. You may find a better explanation.

How to avoid depending on algorithm changes

It is not easy to understand how the algorithms work, and to track their changes you have to follow the news constantly. However, not every company has an SEO specialist, and search engine promotion is often handed to freelancers. In that case, you need to set up SEO so that the site's position stays consistently high regardless of search engine innovations.

Here are some ways to do this.

1. Use link-based promotion properly.

The main purpose of Yandex and Google innovations is to protect against spam. For example, search algorithms used to rank higher the sites that had the most links from other web pages. This gave rise to a kind of spam known as blog comment spam. Fake comments usually start with a ridiculous stock greeting like “What a wonderful and informative blog you have” and end with a link to a page whose content has nothing to do with the blog.

In response, search engines began to actively introduce ranking factors that account for this type of spam. Links to your site are still important, but obviously unnatural comments can damage your SEO.

2. Interact with the audience.

Communicating with potential buyers will not only help your SEO but also directly increase sales. Interaction means advertising, PR, SMM, and other ways to “hook” users on the Internet.

The natural spread of information about your brand leads to more mentions of and links to your website. Thus, by using different promotion methods you win twice: in SEO and in direct sales.

3. Clearly define your SEO goals.

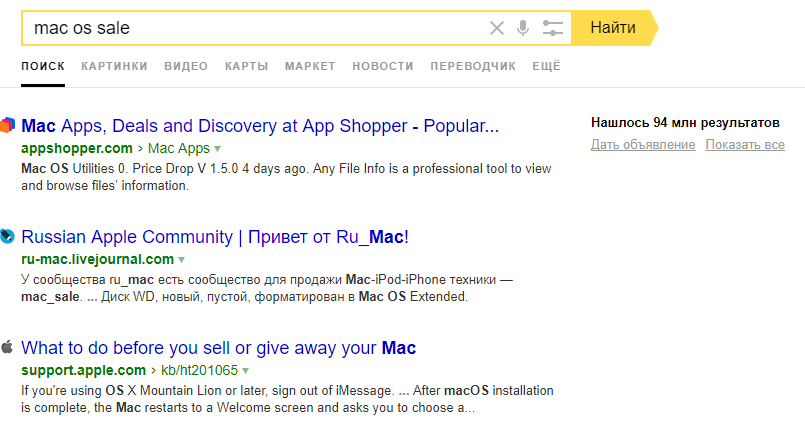

Company representatives often believe that search engine promotion is needed in order to rank highly for single-word queries such as “lawyer”, “dentistry”, or “sushi”. This is not the case. Users increasingly enter long search phrases, for example “inexpensive coffee shop in Samara”. In addition, search engines offer suggestions that can make the final query even longer.

It is important to rank highly precisely for such narrow low- and mid-frequency queries.
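Selecting such queries from a keyword export can be sketched in a few lines. Everything specific here is an assumption made for illustration: the `pick_long_tail` function, the threshold of 1000 searches per month, the minimum of three words, and the sample frequencies, which are invented.

```python
def pick_long_tail(queries: dict[str, int],
                   max_monthly: int = 1000,
                   min_words: int = 3) -> list[str]:
    """Keep multi-word queries at or below a frequency cap, most frequent first.

    `queries` maps a search phrase to its monthly search count.
    The default thresholds are illustrative, not an industry standard.
    """
    picked = [
        phrase for phrase, freq in queries.items()
        if freq <= max_monthly and len(phrase.split()) >= min_words
    ]
    return sorted(picked, key=lambda phrase: queries[phrase], reverse=True)

# Invented frequencies, used only to show the shape of the data.
queries = {
    "sushi": 500000,
    "coffee shop": 40000,
    "inexpensive coffee shop in Samara": 320,
    "coffee shop near the station": 90,
}
long_tail = pick_long_tail(queries)
# ['inexpensive coffee shop in Samara', 'coffee shop near the station']
```

The cut-offs depend on the niche and the region, so treat them as parameters to tune rather than fixed values.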

Common SEO mistakes

The basic principle of SEO work, one that algorithm changes will not affect, is to focus not on increasing the number of clicks to the site but on attracting real customers. That is why SEO should not be reduced to buttons and texts written specifically for algorithms. Of course, you cannot do without them, but such elements are meaningless if they do not bring in buyers.

SEO specialists should not only select key phrases for each page of the site and take into account the suggestions that search engines offer users, but also understand the company's competitive advantages, which must be used when designing the site and filling it with content.

One of the most common mistakes SEO specialists make is selecting keywords manually, without specialized tools. As a result, they miss some valuable queries and, at the same time, keep ineffective ones. This still improves the site's position, of course, but only slightly.

Automated services can make collecting the semantic core faster and more effective. With their help, building it takes no more than two minutes. The specialist then only has to make sure that all the queries fit the company's goals.

Such tools include, for example, SEMrush and Key Collector. These services are paid: access to them is provided for a period of one month.

The second common mistake is a site structure that is inconvenient for the user. For example, there may be too few filters, tags, and subcategories, which makes product search less usable. When the process is too complicated, your potential customer will most likely go to another site.

So, if your online store offers two hundred pairs of socks, you should not put them all into a single “Socks” category with no way to filter. Do not force users to scroll through your entire range. It is much more convenient for them to have a separate page for each highly specific query. For example, the search phrase “Christmas socks size 36” should lead to a page with several matching pairs.
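The idea of a dedicated page per narrow query comes down to filtering the catalog by attributes. Here is a minimal sketch under assumed names: the `Sock` record, its `theme` and `size` fields, and the `filter_products` helper are all hypothetical, and a real store would run the same filter as a database query.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sock:
    name: str
    theme: str
    size: int

def filter_products(products, **criteria):
    """Return the products whose attributes equal every given criterion."""
    return [
        product for product in products
        if all(getattr(product, field) == value for field, value in criteria.items())
    ]

# Invented catalog entries for the example.
catalog = [
    Sock("Reindeer", theme="christmas", size=36),
    Sock("Snowflake", theme="christmas", size=42),
    Sock("Plain black", theme="classic", size=36),
]

# The landing page for "Christmas socks size 36" shows only the matching pairs.
landing_page = filter_products(catalog, theme="christmas", size=36)
```

Each useful combination of filters can then get its own URL, title, and description, so that the page matches the narrow query it was built for.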

Many sites already use this technique, and users have become used to finding the most detailed and convenient answer to their query. People now notice quickly when a site's structure is poorly thought out.

Conclusions

Although it may seem that search engines, by changing their algorithms every day, are doing everything they can to complicate the lives of SEO specialists, this is not so. For the most part, the changes are driven by the fight against spam and therefore help sites that are promoted by honest methods. Innovations also aim to improve the ranking of high-quality sites, which is an excellent reason for you to get better.

Whatever the changes, the algorithms always rest on the same principles. Therefore, once you have set up quality SEO, you can almost forget about the constant innovations.

Source: https://habr.com/ru/post/351930/