Getting a Feel for Neural Networks, or a Neural Network Constructor

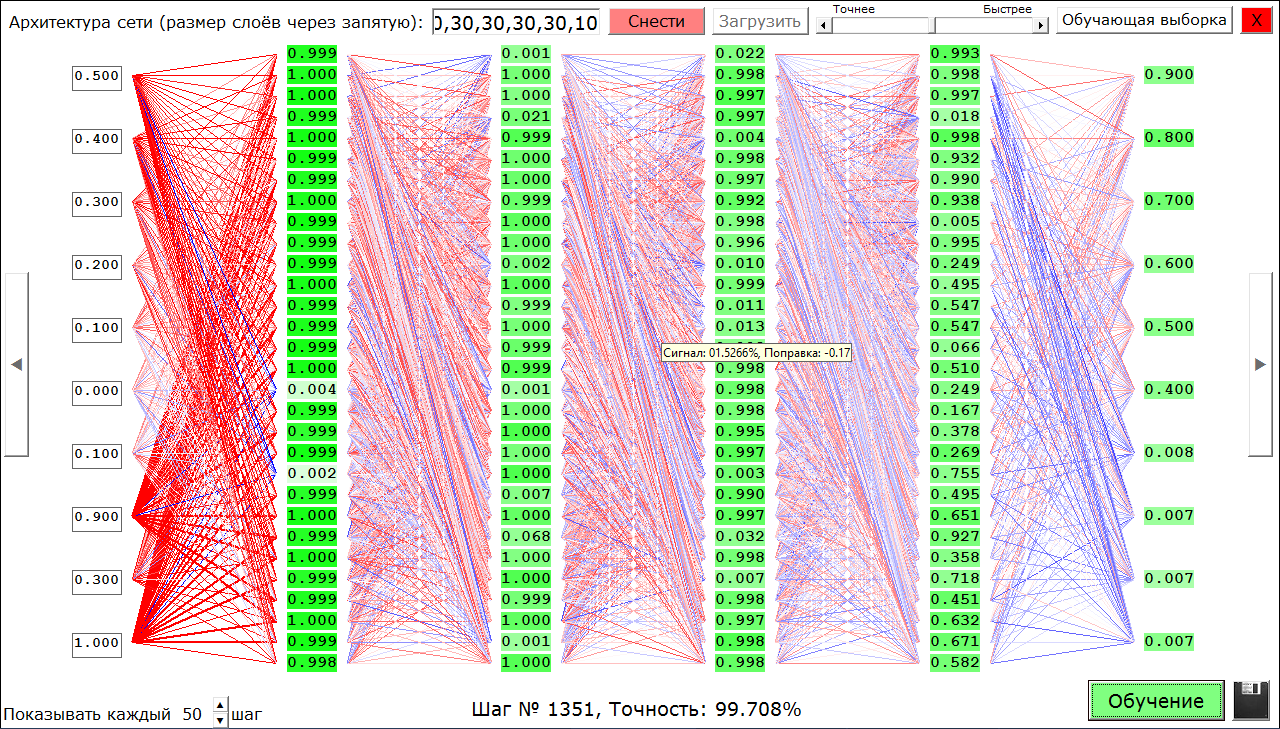

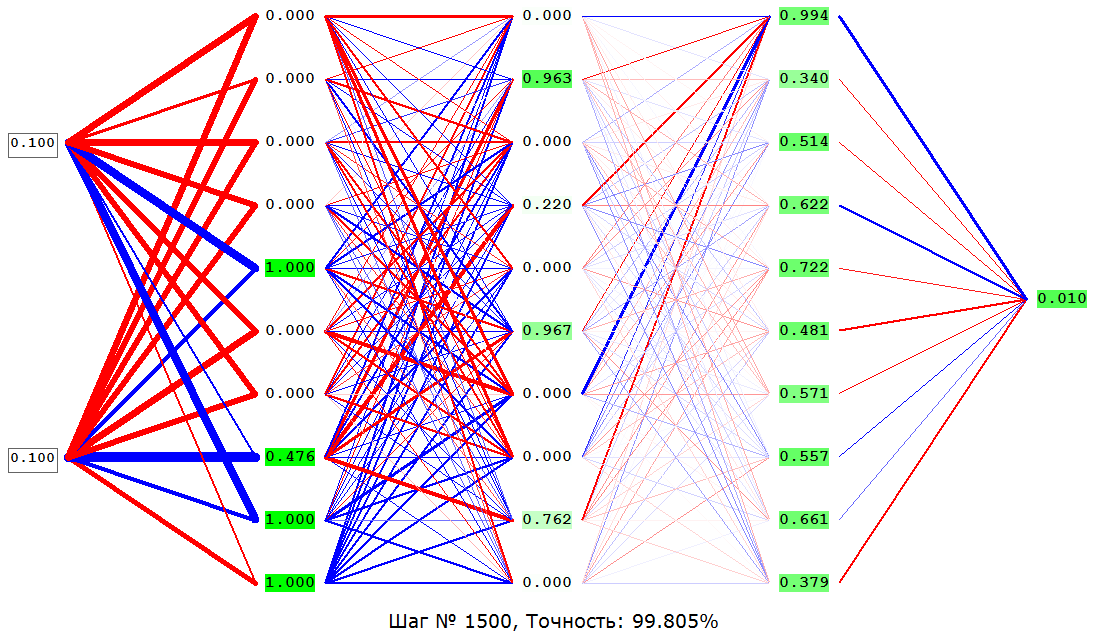

I have been interested in neural networks for a long time, but only as a spectator: I followed the new possibilities they offer compared with conventional programming, but never dug into either the theory or the practice. Then suddenly (after the sensational news about AlphaZero) I wanted to make a neural network of my own. After watching a few lessons on the topic on YouTube, I got a basic grasp of the theory and moved on to practice. In the end, I came up with something even better than my own neural network: a constructor of neural networks that also serves as a visual aid for them (that is, you can see what is happening inside the network). The screenshots above show what it looks like.

Now in more detail. With this constructor you can create a feedforward neural network with up to 8 hidden layers (plus an input layer and an output layer, 10 layers in total; usually 4 layers are more than enough) and up to 30 neurons in each layer. The limits exist because everything is displayed on the screen at once; if there are requests in the comments, I will release a version without the limits and without visualization. The activation function of all neurons is a sigmoid based on the logistic function. The resulting networks can be trained on given examples by backpropagation of error with gradient descent.

Most importantly, you can look at each neuron for each individual example: what value it passes on and what its bias is (neurons with a negative bias are white, those with a positive bias are bright green). Connections between neurons are colored according to their weight (red for positive, blue for negative) and differ in thickness: the larger the absolute value of the weight, the thicker the line. If you hover the mouse over a neuron, you can also see what signal arrives at it and the exact value of its bias. This is useful for understanding how a particular network works or for showing students how feedforward networks work. Best of all, you can save your network to a file and share it with the world.
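To make the terms above concrete, here is a minimal Python sketch of a feedforward network with logistic sigmoid neurons and one backpropagation step. It is not the constructor's actual code: the function names (`build`, `forward`, `train_step`), the list-based layout of weights, the random initialization range, and the squared-error measure are all assumptions chosen for illustration.

```python
import math
import random

def sigmoid(x):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def build(sizes, rng):
    """Create random layers for sizes such as [2, 3, 1].

    Each layer is a (weights, biases) pair; weights[j][i] connects
    neuron i of the previous layer to neuron j of this layer.
    """
    return [
        ([[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)],
         [rng.uniform(-1, 1) for _ in range(n_out)])
        for n_in, n_out in zip(sizes, sizes[1:])
    ]

def forward(layers, inputs):
    """Return the activations of every layer, the input layer included."""
    activations = [list(inputs)]
    for weights, biases in layers:
        prev = activations[-1]
        activations.append([
            sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
            for row, b in zip(weights, biases)
        ])
    return activations

def train_step(layers, inputs, targets, rate):
    """One gradient-descent step on the squared error of a single example.

    Returns the error measured before the weights were changed.
    """
    acts = forward(layers, inputs)
    out = acts[-1]
    # delta = dE/d(net input); for the sigmoid, d(out)/d(net) = out * (1 - out)
    deltas = [(o - t) * o * (1 - o) for o, t in zip(out, targets)]
    for k in range(len(layers) - 1, -1, -1):
        weights, biases = layers[k]
        prev = acts[k]
        # Deltas for the previous layer use the weights before they are updated.
        prev_deltas = [
            sum(weights[j][i] * deltas[j] for j in range(len(deltas)))
            * prev[i] * (1 - prev[i])
            for i in range(len(prev))
        ]
        for j, d in enumerate(deltas):
            for i, a in enumerate(prev):
                weights[j][i] -= rate * d * a
            biases[j] -= rate * d
        deltas = prev_deltas
    return 0.5 * sum((o - t) ** 2 for o, t in zip(out, targets))

rng = random.Random(0)  # fixed seed so that runs are repeatable
net = build([2, 3, 1], rng)
for _ in range(200):
    error = train_step(net, [1.0, 0.0], [1.0], rate=0.5)
```

The values returned by `forward` for each layer correspond to what the visualization shows per neuron, and the signs and magnitudes in `weights` correspond to the colors and thicknesses of the connections.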


Below are instructions for using the program and for embedding the networks you create into your own projects, followed by an analysis of several bundled networks.

How to use the constructor

To get started, download the archive from here.

Unpack it to the root of the D:\ drive.

Run NeuroNet.exe.

You can try to “Load” any network, look at it, click “Training”, watch its accuracy, and use the left and right arrows (at the sides) to browse the different input (left column of neurons) and output (right column) examples. Then click “Stop” and try entering your own input data. Any values from 0 to 1 are allowed; keep this in mind when creating your networks and normalize the input and output data.
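Normalization to the 0 to 1 range can be as simple as a linear rescaling between a known minimum and maximum. A small Python sketch, assuming you know the range of your raw data in advance (the function names and the example range are hypothetical):

```python
def normalize(value, lo, hi):
    """Linearly map a value from the range [lo, hi] to [0, 1]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return (value - lo) / (hi - lo)

def denormalize(value, lo, hi):
    """Map a network output from [0, 1] back to the range [lo, hi]."""
    return lo + value * (hi - lo)

# Example: raw values known to lie between 0 and 100.
inputs = [normalize(v, 0, 100) for v in (25, 50, 100)]
```

The same pair of functions works for outputs: normalize the target values before training and denormalize what the network returns.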

Now, how to build your own network. First, define the network architecture (the number of neurons in each layer, separated by commas) and click “Build” (or “Demolish” first and then “Build” if another network is displayed on the screen). Click “Training set”, then “Delete all”, and enter your training examples following the instructions on the screen. Instead of typing values, you can supply small square images (at most 5x5 pixels) as input and output by clicking “in” and “out” respectively; the normalized brightness of their pixels is used, and color is ignored. Click “Add an example” and repeat the procedure as many times as needed. Click “Finish”, then “Training”, and once the accuracy is satisfactory (usually 98%), click “Stop” and then the floppy-disk icon (save), give the network a name, and be happy that you have created a neural network yourself. Additionally, you can set the learning rate with the “More Accurate / Faster” slider and have the visualization updated not every 50th step but every 10th or 300th, as you like.
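As an illustration of the architecture string, the sketch below parses a comma-separated list of layer sizes and checks it against the limits mentioned earlier (at most 8 hidden layers, at most 30 neurons per layer). The function name and the exact validation rules are assumptions; the program itself may accept or reject input differently.

```python
MAX_HIDDEN_LAYERS = 8   # limit stated for the visual constructor
MAX_NEURONS = 30        # per-layer limit stated for the visual constructor

def parse_architecture(text):
    """Turn a string such as "2,3,1" into a list of layer sizes."""
    sizes = [int(part) for part in text.split(",")]
    if len(sizes) < 2:
        raise ValueError("need at least an input layer and an output layer")
    if len(sizes) - 2 > MAX_HIDDEN_LAYERS:
        raise ValueError("too many hidden layers")
    if any(not 1 <= n <= MAX_NEURONS for n in sizes):
        raise ValueError("each layer must have between 1 and 30 neurons")
    return sizes

sizes = parse_architecture("2,3,1")  # 2 inputs, one hidden layer of 3, 1 output
```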

Integrating the created networks into your projects

To use these neural networks in your own projects, I created a separate application, doNet.exe, which must be run with parameters: “D:\NeuroNet\doNet.exe < > < >”. Wait for the application to finish, then read the output from D:\NeuroNet\temp.txt.

For example, the 4-5.exe application is built on the 4-5 network (more about this and other networks below). This application describes in detail how to run doNet.exe properly.
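From Python, that sequence (run the program, wait for it to exit, read the result file) could look roughly like the sketch below. The helper name `run_and_read` is hypothetical, the arguments are left to the caller because the parameter list is documented in 4-5.exe rather than here, and the file content is returned as raw text because the format of temp.txt is not specified in this article.

```python
import subprocess
from pathlib import Path

def run_and_read(exe_path, args, output_path):
    """Run an external program, wait for it to exit, return its output file as text."""
    # subprocess.run blocks until the process finishes; check=True raises
    # CalledProcessError if the exit code is non-zero.
    subprocess.run([str(exe_path), *args], check=True)
    return Path(output_path).read_text()

# Hypothetical usage (arg1 and arg2 are placeholders, not documented parameters):
# text = run_and_read(r"D:\NeuroNet\doNet.exe", [arg1, arg2], r"D:\NeuroNet\temp.txt")
```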

Analysis of the bundled networks

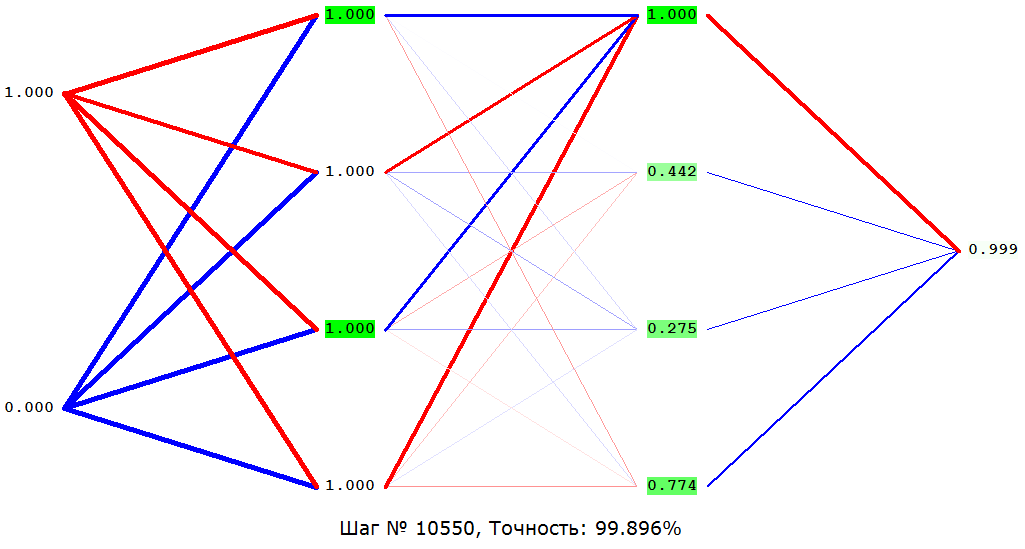

Let's start with a classic: "XOR (Half-rated)". This task, addition modulo 2, was cited in 1969, among others, as an example of the limitations of neural networks (namely, single-layer perceptrons). There are two inputs (each either 0 or 1), and the task is to answer 1 if the input values differ and 0 if they are the same.
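To see why a hidden layer is enough for XOR, here is a Python sketch of a 2-2-1 sigmoid network with hand-picked weights. These weights are an assumption made for illustration and are not the ones stored in the bundled network: one hidden neuron approximates OR, the other approximates NAND, and the output neuron approximates AND of the two.

```python
import math

def sigmoid(x):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def xor_net(x1, x2):
    """2-2-1 network with fixed weights; the output is close to x1 XOR x2."""
    h_or = sigmoid(20 * x1 + 20 * x2 - 10)     # near 1 unless both inputs are 0
    h_nand = sigmoid(-20 * x1 - 20 * x2 + 30)  # near 1 unless both inputs are 1
    return sigmoid(20 * h_or + 20 * h_nand - 30)  # near 1 only if both are near 1

for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(xor_net(a, b)))
```

A single sigmoid neuron cannot do this on its own, because it can only separate the inputs with one straight line, and no single line puts (0,1) and (1,0) on one side and (0,0) and (1,1) on the other.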

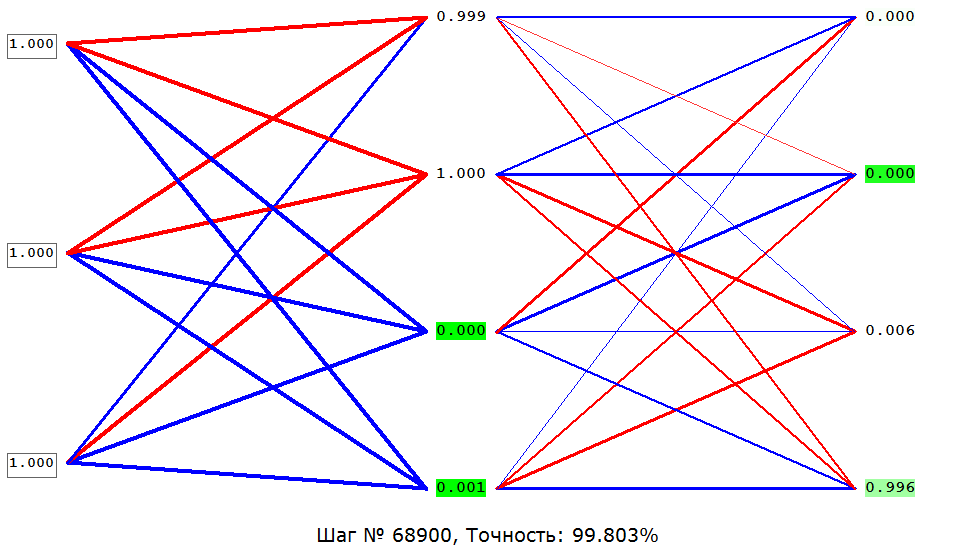

Next is "Quantity-units". There are three inputs (0 or 1 on each), and the task is to count how many ones were supplied. It is implemented as a classification task: four outputs, one for each possible answer (0, 1, 2, or 3 ones). The answer is the one whose output has the maximum value.
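The classification setup can be sketched in Python as follows: the training targets are one-hot vectors of length 4, and the answer is read off as the index of the largest output. The function names are hypothetical, and the bundled network's actual training set may be laid out differently.

```python
from itertools import product

def one_hot(index, size):
    """Vector of zeros with a single 1.0 at the given index."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def build_examples():
    """All 8 input combinations, each paired with a one-hot count of ones."""
    return [(list(bits), one_hot(sum(bits), 4))
            for bits in product((0, 1), repeat=3)]

def decode(outputs):
    """The answer is the index of the output with the maximum value."""
    return max(range(len(outputs)), key=lambda i: outputs[i])

examples = build_examples()
answer = decode([0.1, 0.2, 0.9, 0.3])  # largest value is at index 2
```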

"Multiplication" - Two inputs (real from 0 to 1), the output of their product.

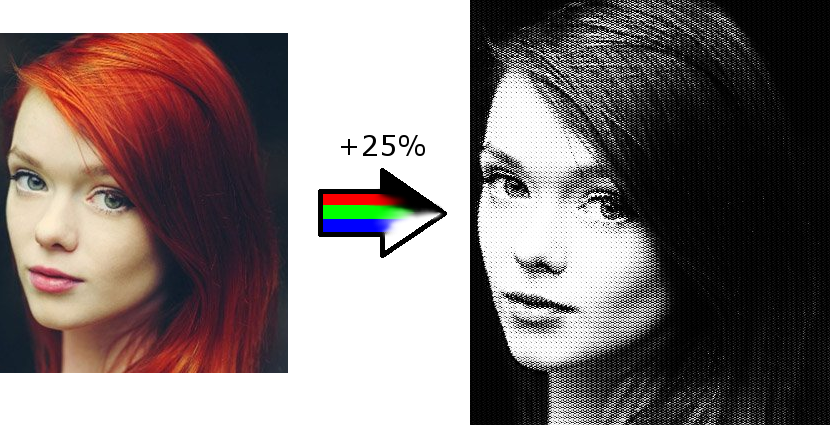

“4-5”: the normalized brightness values of the pixels of a 4x4 image are fed to the input, and the output gives the normalized brightness values of the pixels of a 5x5 image.

The network was conceived as a way to enlarge a large image by 25% with better quality, but what came out instead is an interesting photo filter:

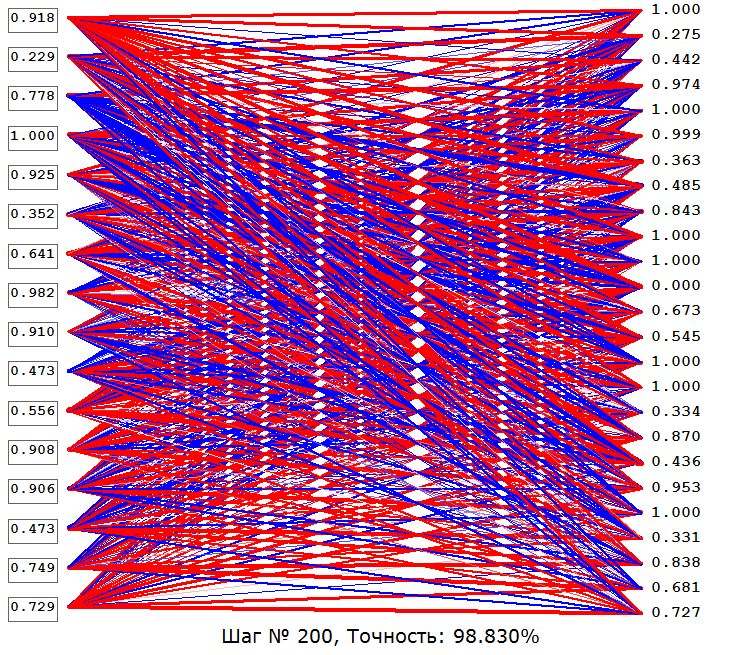

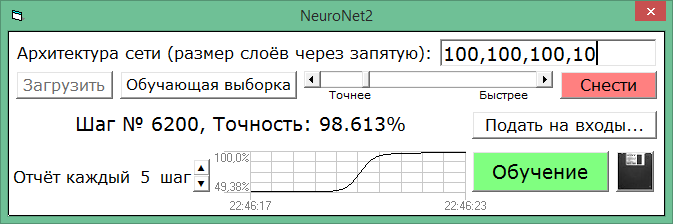
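How 4-5.exe applies the network to a whole photo is not described here. One plausible approach, assumed in this Python sketch, is to cut the image into 4x4 blocks, pass each block through the network, and assemble the 5x5 results. The function name `upscale`, the row-by-row pixel order, and the stand-in `net` callable are all hypothetical.

```python
def upscale(image, net):
    """Enlarge a grayscale image block by block.

    image: list of rows of brightness values in [0, 1]; its height and
           width are assumed to be multiples of 4.
    net:   callable that takes 16 values (a 4x4 block, row by row) and
           returns 25 values (a 5x5 block, row by row).
    """
    height, width = len(image), len(image[0])
    result = [[0.0] * (width // 4 * 5) for _ in range(height // 4 * 5)]
    for by in range(height // 4):
        for bx in range(width // 4):
            block = [image[by * 4 + y][bx * 4 + x]
                     for y in range(4) for x in range(4)]
            out = net(block)
            for y in range(5):
                for x in range(5):
                    result[by * 5 + y][bx * 5 + x] = out[y * 5 + x]
    return result

def mean_net(block):
    """Stand-in for the trained network: fills the 5x5 block with the mean."""
    return [sum(block) / len(block)] * 25
```

With a real trained network in place of `mean_net`, each 4x4 block of the photo would be replaced by whatever 5x5 block the network produces for it.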

UPD: The NeuroNet2.exe application has been added to the archive. It is the same constructor but without visualization (which makes it 2 times faster) and with a higher limit on the number of neurons per layer (up to 1024 instead of 30); in the training set, square pictures up to 32x32 can be supplied as input and output. A training graph has also been added. Neural networks can now be used (and integrated into projects, even on a server) by those who do not know the theory behind them! In semi-automatic mode (after training, enter input values manually and get the result on the screen), they can be used even without programming knowledge!

That's all; I'm waiting for your comments.

P.S. If an error occurs, try registering the comdlg32 files, which are also in the archive, with regsvr32 as administrator.

Source: https://habr.com/ru/post/351922/
