Long awaited: YAML and Ansible support (no cows) in dapp

At the beginning of this year, we decided that our open-source utility for supporting CI/CD processes, dapp version 0.25, had a sufficient feature set, and work began on new features. Version 0.26 introduced YAML syntax, and the Ruby DSL was declared classic (support for it will be dropped entirely). In the next version, 0.27, the main new feature is a builder based on Ansible. It's time to describe these updates in more detail.

Background

We have been developing dapp for more than 2 years and actively use it in the day-to-day maintenance of many projects of various scales. The first versions of the utility were designed to use Chef to build images. Since Ruby was also familiar to almost all of our engineers and developers, we made the logical decision to implement dapp as a Ruby gem. It seemed appropriate to make the Dappfile config a Ruby DSL, all the more so since there was a successful example from a related field: Vagrant.

As the utility developed, it became clear that dapp needed a second specialization: application delivery to Kubernetes. This is how the mode for working with Helm charts appeared; the engineers mastered YAML syntax and Go templates, while the developers started sending patches to Helm. On the one hand, delivery to Kubernetes has become an integral part of dapp; on the other, the de facto standard in the Docker and Kubernetes ecosystem is Go. Our dapp, being written in Ruby, now falls outside the big picture: it is hard for us to reuse Docker code, and users often simply don't want to install Ruby on build machines, because downloading a binary is much easier and more familiar ... As a result, the main development goals of dapp became: a) porting the code base to Go; b) implementing YAML syntax.

In addition, Chef has since stopped suiting us for a number of reasons, both for managing machines and for building images. As it turned out, switching to Ansible solves problems not only for our DevOps engineers: the most frequent question at conferences was about Ansible support in dapp. Thus, the third goal was implementing an Ansible builder.


YAML syntax

I have already given an introduction to the YAML syntax in this article, but now I'll take a closer look at it.

The build configuration can be described in the dappfile.yaml file (or dappfile.yml). The configuration is processed in the following steps:

- dapp reads dappfile.y[a]ml;
- the Go template engine is started and the final YAML is rendered;
- the rendered config is split into YAML documents (on --- followed by a line break);
- dapp verifies that each YAML document contains a dimg or artifact attribute at the top level;
- dapp checks the composition of the remaining attributes;
- if everything is in order, the final config is assembled from the specified dimgs and artifacts.
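The splitting and top-level check from the steps above can be sketched roughly as follows. This is not dapp's code: the function names are hypothetical, the key detection is a naive column-0 scan rather than a real YAML parser, and the "exactly one of dimg/artifact" rule is an assumption made for the illustration.

```python
import re

# Top-level attributes that identify a document, as named in the article.
ALLOWED_TOP_LEVEL = ("dimg", "artifact")


def split_documents(rendered: str) -> list:
    """Split rendered config text on lines that consist solely of '---'."""
    docs = re.split(r"^---[ \t]*$", rendered, flags=re.MULTILINE)
    return [d.strip("\n") for d in docs if d.strip()]


def top_level_keys(doc: str) -> list:
    """Collect keys that start in column 0 (a naive stand-in for a YAML parser)."""
    keys = []
    for line in doc.splitlines():
        match = re.match(r"^([A-Za-z_][\w-]*):", line)
        if match:
            keys.append(match.group(1))
    return keys


def validate(rendered: str) -> list:
    """Return the kind ('dimg' or 'artifact') of every document, or raise."""
    kinds = []
    for number, doc in enumerate(split_documents(rendered), start=1):
        found = [k for k in top_level_keys(doc) if k in ALLOWED_TOP_LEVEL]
        if len(found) != 1:  # assumption: exactly one identifying attribute
            raise ValueError(f"document {number}: expected dimg or artifact, got {found}")
        kinds.append(found[0])
    return kinds


config = """\
artifact: application-assets
from: alpine:latest
---
dimg: ~
from: alpine:latest
shell:
  beforeInstall:
  - apk update
"""

print(validate(config))  # ['artifact', 'dimg']
```

A real implementation would parse each document with a YAML library and then check the remaining attributes as well; the sketch only shows where the document boundaries and the dimg/artifact check fit in.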

The classic Dappfile is a Ruby DSL, which made some programming possible: referring to the ENV dictionary for environment variables, defining dimgs in loops, and defining common build instructions through context inheritance. So as not to take these capabilities away from developers, it was decided to add support for Go templates to dappfile.yml, similar to Helm charts.

However, we dropped context inheritance through nesting and through dimg_groups, since it caused more confusion than convenience. Therefore, dappfile.yml is a linear sequence of YAML documents, each of which describes a dimg or an artifact.

As before, there can be a single dimg, and it can be nameless:

    dimg: ~
    from: alpine:latest
    shell:
      beforeInstall:
      - apk update

Artifacts must have a name, because what is described now is not the export of files from the artifact image but their import (similar to the multi-stage feature of Dockerfile). Therefore, you need to specify which artifact to take the files from:

    artifact: application-assets
    ...
    ---
    dimg: ~
    ...
    import:
    - artifact: application-assets
      add: /app/public/assets
      after: install
    - artifact: application-assets
      add: /vendor
      to: /app/vendor
      after: install

The git, git remote and shell directives moved from the DSL to YAML almost "as is", with two differences: camelCase is used instead of underscores (as in Kubernetes), and instead of repeating a directive you merge the parameters into an array:

    git:
    - add: /
      to: /app
      owner: app
      group: app
      excludePaths:
      - public/assets
      - vendor
      - .helm
      stageDependencies:
        install:
        - package.json
        - Bowerfile
        - Gemfile.lock
        - app/assets/*
    - url: https://github.com/kr/beanstalkd.git
      add: /
      to: /build
    shell:
      beforeInstall:
      - useradd -d /app -u 7000 -s /bin/bash app
      - rm -rf /usr/share/doc/* /usr/share/man/*
      - apt-get update
      - apt-get -y install apt-transport-https git curl gettext-base locales tzdata
      setup:
      - locale-gen en_US.UTF-8

A basic description of all available attributes can be found in the documentation.

docker ENV and LABEL

In dappfile.yml, environment variables and labels can be added as follows:

    docker:
      ENV:
        <key>: <value>
        ...
      LABELS:
        <key>: <value>
        ...

In YAML, it is not possible to repeat ENV or LABELS, as could be done in the Dappfile and in a Dockerfile.

Template engine

Templates can be used to define a common build configuration for different dimg or artifacts. This can be, for example, a simple indication of a common base image using a variable:

    {{ $base_image := "alpine:3.6" }}
    dimg: app
    from: {{ $base_image }}
    ...
    ---
    dimg: worker
    from: {{ $base_image }}
    ...

or something more complicated using defined templates:

    {{ $base_image := "alpine:3.6" }}
    {{- define "base beforeInstall" }}
      - apt: name=php update_cache=yes
      - get_url:
          url: https://getcomposer.org/download/1.5.6/composer.phar
          dest: /usr/local/bin/composer
          mode: 0755
    {{- end}}
    dimg: app
    from: {{ $base_image }}
    ansible:
      beforeInstall:
      {{- include "base beforeInstall" .}}
      - user:
          name: app
          uid: 48
    ...
    ---
    dimg: worker
    from: {{ $base_image }}
    ansible:
      beforeInstall:
      {{- include "base beforeInstall" .}}
    ...

In this example, part of the instructions for the beforeInstall stage is defined as a common part and then included in each dimg.

You can read more about the capabilities of Go templates in the documentation for the text/template module and in the documentation for the sprig module, whose functions complement the standard ones.

Ansible support

The Ansible builder consists of three parts:

- The dappdeps/ansible image, which contains Python 2.7 compiled with its own glibc and other libraries so that it works in any distribution (especially important for Alpine). Ansible is installed there as well.
- Support for describing the build of stages using Ansible in dappfile.yaml.
- A builder in dapp that runs containers for stages. The tasks specified in dappfile.yml are executed in these containers. The builder creates a playbook and generates the command to launch it.

Ansible is developed as a system for managing a large number of remote hosts, so things that matter for a local run may be overlooked by its developers. For example, there is no real-time output from running commands, as there was in Chef: a build may include a long-running command whose output would be useful to watch in real time, but Ansible shows the output only after the command completes. When run via GitLab CI, this can look like a hung build.

The second problem was the stdout callbacks that ship with Ansible. None of them is "moderately informative": there is either overly verbose output with the full result as JSON, or minimalism with the host name, module name and status. Of course, I'm exaggerating, but there really is no callback suitable for building images.

The third thing we ran into was the dependence of some Ansible modules on external utilities (not scary), on Python modules (even less scary), and on binary Python modules (a nightmare!). Again, the authors of Ansible did not anticipate that their creation would be run separately from the system binaries and that, for example, userdel would be located not in /sbin but in some other directory ...

The problem with binary modules is a peculiarity of the apt module. It uses the python-apt module as a shared (.so) library. Another peculiarity of the apt module is that if python-apt fails to load while a task is running, it attempts to install the package containing this module into the system.

To solve the problems above, "live" output was implemented for raw and script tasks, since they can run without Ansiballz. I also had to implement my own stdout callback, add useradd, userdel, usermod, getent and similar utilities to dappdeps/ansible, and copy the python-apt modules.

As a result, the Ansible builder in dapp works with the Ubuntu, Debian, CentOS and Alpine Linux distributions, but not all modules have been tested yet, so dapp keeps a list of modules that are known to be supported. If the configuration uses a module that is not on the list, the build will not start; this is a temporary measure. A list of supported modules can be found here.
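The "build does not start if a module is not on the list" rule could look roughly like the sketch below. The allowlist and the set of non-module task keywords are illustrative assumptions, not dapp's real lists (the actual list of supported modules is the one in the dapp documentation), and the function name is hypothetical.

```python
# Hypothetical allowlist for illustration; the real one is in the dapp docs.
SUPPORTED_MODULES = {
    "raw", "script", "shell", "command",
    "copy", "user", "apt", "get_url", "setup", "set_fact",
}

# Task keys that are not module names (a partial, illustrative set).
TASK_KEYWORDS = {"name", "args", "register", "become_user", "when"}


def unsupported_modules(tasks: list) -> list:
    """Return module names used in the tasks that are not on the allowlist."""
    found = []
    for task in tasks:
        for key in task:
            if key not in TASK_KEYWORDS and key not in SUPPORTED_MODULES:
                found.append(key)
    return found


tasks = [
    {"name": "Create user", "user": {"name": "app", "uid": 7000}},
    {"name": "Add repo", "yum_repository": {"name": "epel"}},
]

rejected = unsupported_modules(tasks)
if rejected:
    # In this sketch the build is refused before any container is started.
    print("build refused, unsupported modules:", rejected)
```

Checking before the stage container is launched keeps the failure cheap: the user learns about an untested module immediately rather than in the middle of a long build.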

The build configuration using Ansible in

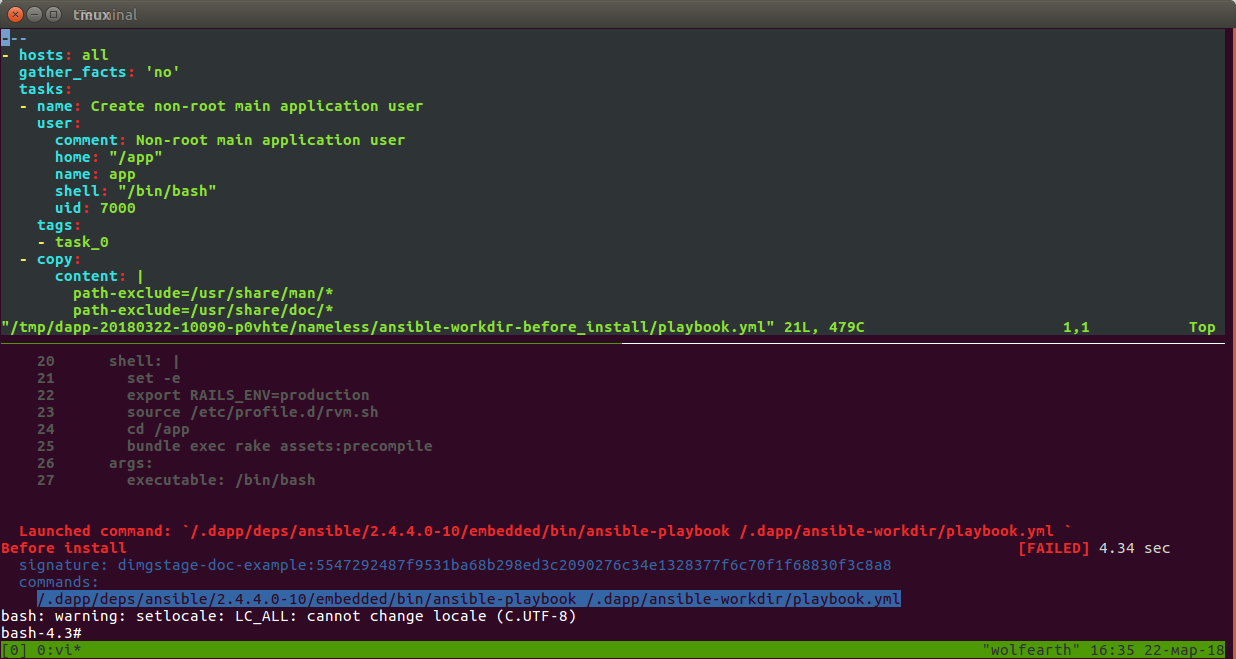

dappfile.yml is similar to the shell configuration. The necessary steps are listed in the ansible key and an array of tasks is defined for each of them - almost as in a regular playbook, only the name of the stage is indicated instead of the tasks attribute: ansible: beforeInstall: - name: "Create non-root main application user" user: name: app comment: "Non-root main application user" uid: 7000 shell: /bin/bash home: /app - name: "Disable docs and man files installation in dpkg" copy: content: | path-exclude=/usr/share/man/* path-exclude=/usr/share/doc/* dest: /etc/dpkg/dpkg.cfg.d/01_nodoc install: - name: "Precompile assets" shell: | set -e export RAILS_ENV=production source /etc/profile.d/rvm.sh cd /app bundle exec rake assets:precompile args: executable: /bin/bash The example is taken from the documentation .

Now the question arises: if dappfile.yml contains only a list of tasks, then where is the rest (the top-level playbook, the inventory), how do you enable become, and where are the talking cows (or how do you turn them off)? It's time to describe how Ansible is run.

The builder is responsible for the launch. It is a fairly simple piece of code that defines the launch parameters of the Docker container for the stage: environment variables, the ansible-playbook command, and the necessary mounts. The builder also creates a directory inside the application's temporary directory and generates several files there:

- hosts: the inventory for Ansible. It contains a single host, localhost, with the path to Python inside the mounted dappdeps/ansible image;
- ansible.cfg: the Ansible configuration. It specifies the local connection type, the path to the inventory, the path to the stdout callback, the paths to temporary directories, and the become settings: all tasks are run as root; if you use become_user, all environment variables are available to the user's process and $HOME is set correctly (sudo -E -H);
- playbook.yml: this file is generated from the list of tasks for the stage being executed. It specifies the hosts: all filter and disables implicit fact gathering with gather_facts: no. The setup and set_fact modules are on the list of supported ones, so you can use them to gather facts explicitly.
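As a rough illustration of the three files listed above, here is a minimal sketch of how they might be generated. It is not the dapp builder: the function name and the /path/to/... values are placeholders, the stdout callback and temporary-directory settings are omitted, the [become] section simply mirrors the values the article gives for ansible.cfg, and the playbook is written as JSON (which YAML parsers generally accept) because the Python standard library has no YAML writer.

```python
import configparser
import json
import tempfile
from pathlib import Path


def write_stage_files(workdir: Path, tasks: list, python_path: str, sudo_path: str) -> None:
    """Write hosts, ansible.cfg and playbook.yml for one stage into workdir."""
    # Inventory: a single localhost entry pointing at the bundled Python.
    (workdir / "hosts").write_text(
        f"localhost ansible_python_interpreter={python_path}\n"
    )

    # Ansible configuration: local connection, inventory path, become settings.
    cfg = configparser.ConfigParser()
    cfg["defaults"] = {
        "inventory": str(workdir / "hosts"),
        "transport": "local",
    }
    cfg["become"] = {  # section name and values as shown in the article
        "become": "yes",
        "become_method": "sudo",
        "become_flags": "-E -H",
        "become_exe": sudo_path,
    }
    with open(workdir / "ansible.cfg", "w") as cfg_file:
        cfg.write(cfg_file)

    # Playbook: one play that wraps the stage's tasks, fact gathering disabled.
    play = [{"hosts": "all", "gather_facts": False, "tasks": tasks}]
    (workdir / "playbook.yml").write_text(json.dumps(play, indent=2) + "\n")


tasks = [
    {
        "name": "Create non-root main application user",
        "user": {"name": "app", "uid": 7000, "home": "/app"},
    }
]

with tempfile.TemporaryDirectory() as tmp:
    write_stage_files(Path(tmp), tasks, "/path/to/python", "/path/to/sudo")
    print(sorted(p.name for p in Path(tmp).iterdir()))
    # ['ansible.cfg', 'hosts', 'playbook.yml']
```

Generating everything per stage keeps dappfile.yml free of Ansible plumbing: the user writes only tasks, and the inventory, connection type and privilege escalation are fixed by the builder.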

The list of tasks for the beforeInstall stage from the earlier example turns into this playbook.yml:

    ---
    - hosts: all
      gather_facts: no
      tasks:
      - name: "Create non-root main application user"
        user:
          name: app
          ...
      - name: "Disable docs and man files installation in dpkg"
        copy:
          content: |
            path-exclude=/usr/share/man/*
            path-exclude=/usr/share/doc/*
          dest: /etc/dpkg/dpkg.cfg.d/01_nodoc

Ansible specifics for building images

Become

The become settings in ansible.cfg are:

    [become]
    become = yes
    become_method = sudo
    become_flags = -E -H
    become_exe = path_to_sudo_inside_dappdeps/ansible_image

Therefore, in a task it is enough to specify only become_user: username to run a script or copy files as that user.

Command modules

Ansible has 4 modules for running commands and scripts: raw, script, shell and command. raw and script are executed without the Ansiballz mechanism, which is slightly faster, and live output is available for them. Using raw, you can run multiline ad-hoc scripts:

    - raw: |
        mvn -B -f pom.xml -s /usr/share/maven/ref/settings-docker.xml dependency:resolve
        mvn -B -s /usr/share/maven/ref/settings-docker.xml package -DskipTests

True, the environment attribute is not supported, but this can be worked around as follows:

    - raw: |
        mvn -B -f pom.xml -s $SETTINGS dependency:resolve
        mvn -B -s $SETTINGS package -DskipTests
      args:
        executable: SETTINGS=/usr/share/maven/ref/settings-docker.xml /bin/ash -e

Files

At this stage, there is no mechanism for passing files from the repository into containers other than the git directive. To add configs, scripts and other small files to the image, you can use the copy module:

    - name: "Disable docs and man files installation in dpkg"
      copy:
        content: |
          path-exclude=/usr/share/man/*
          path-exclude=/usr/share/doc/*
        dest: /etc/dpkg/dpkg.cfg.d/01_nodoc

If the file is large, then to avoid storing it inside dappfile.yml you can use a Go template and the .Files.Get function:

    - name: "Disable docs and man files installation in dpkg"
      copy:
        content: |
    {{.Files.Get ".dappfiles/01_nodoc" | indent 6}}
        dest: /etc/dpkg/dpkg.cfg.d/01_nodoc

In the future, a mechanism for mounting files into the build container will be implemented to make it easier to copy large and binary files, as well as to use include* or import*.

Templates

Go templates in dappfile.yaml have already been covered. Ansible, for its part, supports Jinja2 templates, and the two systems use the same delimiters, so a Jinja call needs to be escaped from the Go template:

    - name: "create temp file for archive"
      tempfile:
        state: directory
      register: tmpdir
    - name: Download archive
      get_url:
        url: https://cdn.example.com/files/archive.tgz
        dest: '{{`{{ tmpdir.path }}`}}/archive.tgz'

Debugging build issues

While a task is running, an error may occur, and the messages on the screen are sometimes not enough to understand it. In this case, you can start by setting the environment variable ANSIBLE_ARGS="-vvv": the output will then contain all the task arguments and all the fields of the results (similar to using the json stdout callback).

If that does not clarify the situation, you can run the build in introspect mode: dapp dimg build --introspect-error. The build will then stop after the error and a shell will be launched in the container. The command that caused the error will be visible, and in a neighboring terminal you can go to the temporary directory and edit playbook.yml.

Go to go

This is our third goal in the development of dapp, but from the user's point of view it changes little apart from simplifying installation. For release 0.26, the dappfile.yaml parser was implemented in Go. Work now continues on porting the main dapp functionality to Go: running build containers, builders, working with Git. So your help with testing, including Ansible modules, would be welcome. We look forward to your issues on GitHub, or join our Telegram group: dapp_ru.

PS

So what about the cows? The cowsay program is not included in dappdeps/ansible, and the stdout callback used does not call the methods where cowsay is enabled. Unfortunately, Ansible in dapp comes without cows (but no one will stop you from creating an issue).

PPS

Read also in our blog:

- "Officially introducing dapp, a DevOps utility for supporting CI/CD";
- "Building projects with dapp. Part 1: Java";
- "Building and deploying applications to Kubernetes with dapp and GitLab CI";
- "Practice with dapp. Part 1: Building simple applications";
- "Practice with dapp. Part 2: Deploying Docker images to Kubernetes with Helm";
- "Building Docker images for CI/CD quickly and conveniently with dapp (overview and video of the talk)".

Source: https://habr.com/ru/post/351838/
