Meet Windows Machine Learning - WinML

Artificial intelligence and machine learning have been two of the hottest trends of recent years. The computations that AI and ML require are usually performed in data centers on specialized high-performance, energy-efficient hardware (for example, servers with TPUs). But evolution is cyclical, and the pendulum is swinging back toward computing on edge devices such as PCs, tablets, and IoT devices. Among other things, this will make devices respond faster to voice commands and make talking to personal assistants more comfortable.

WinML is a new set of APIs that will let developers use the full capabilities of any Windows 10 device to evaluate pre-trained machine learning models loaded into an application in the Open Neural Network Exchange (ONNX) format.

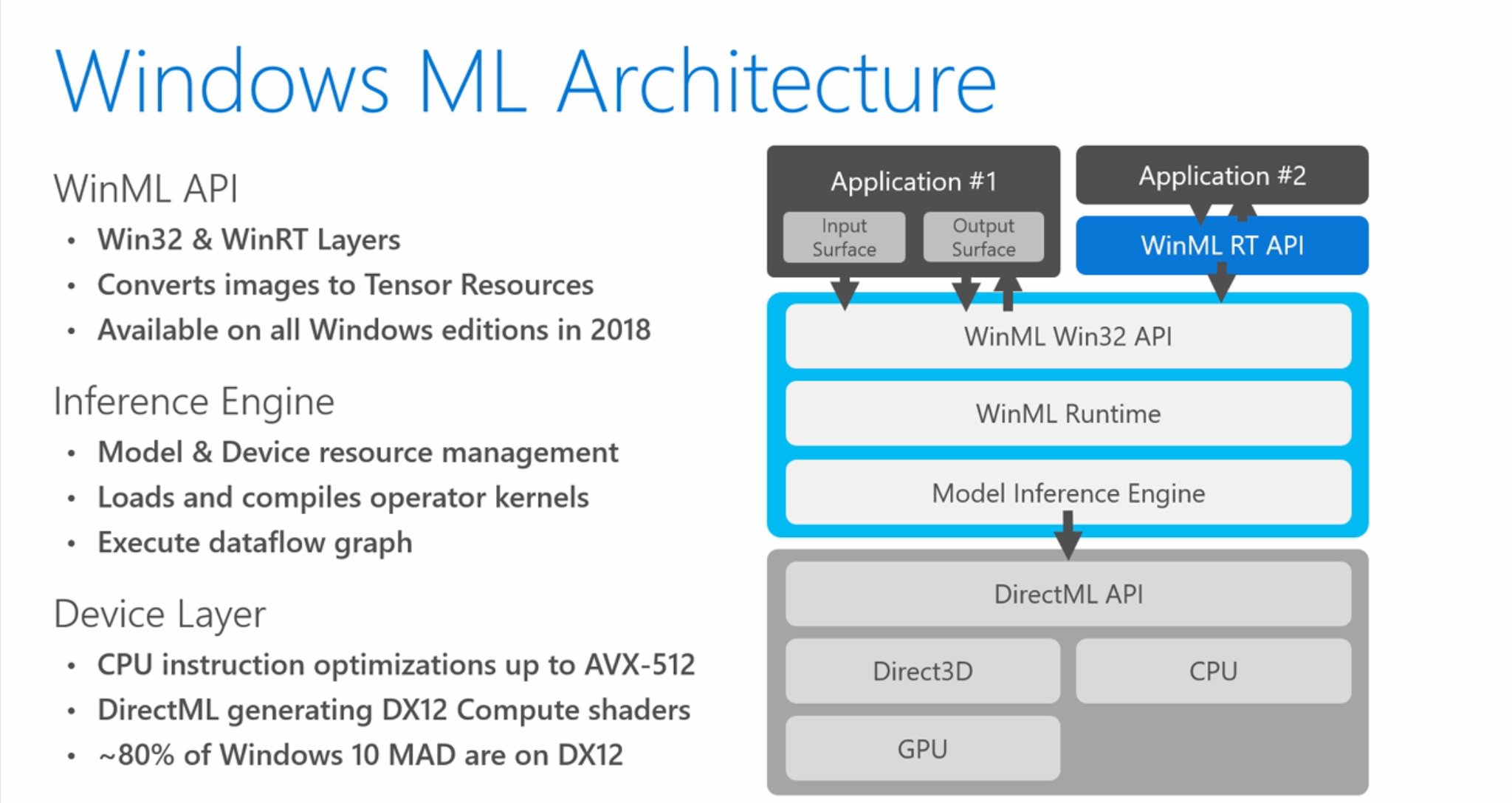

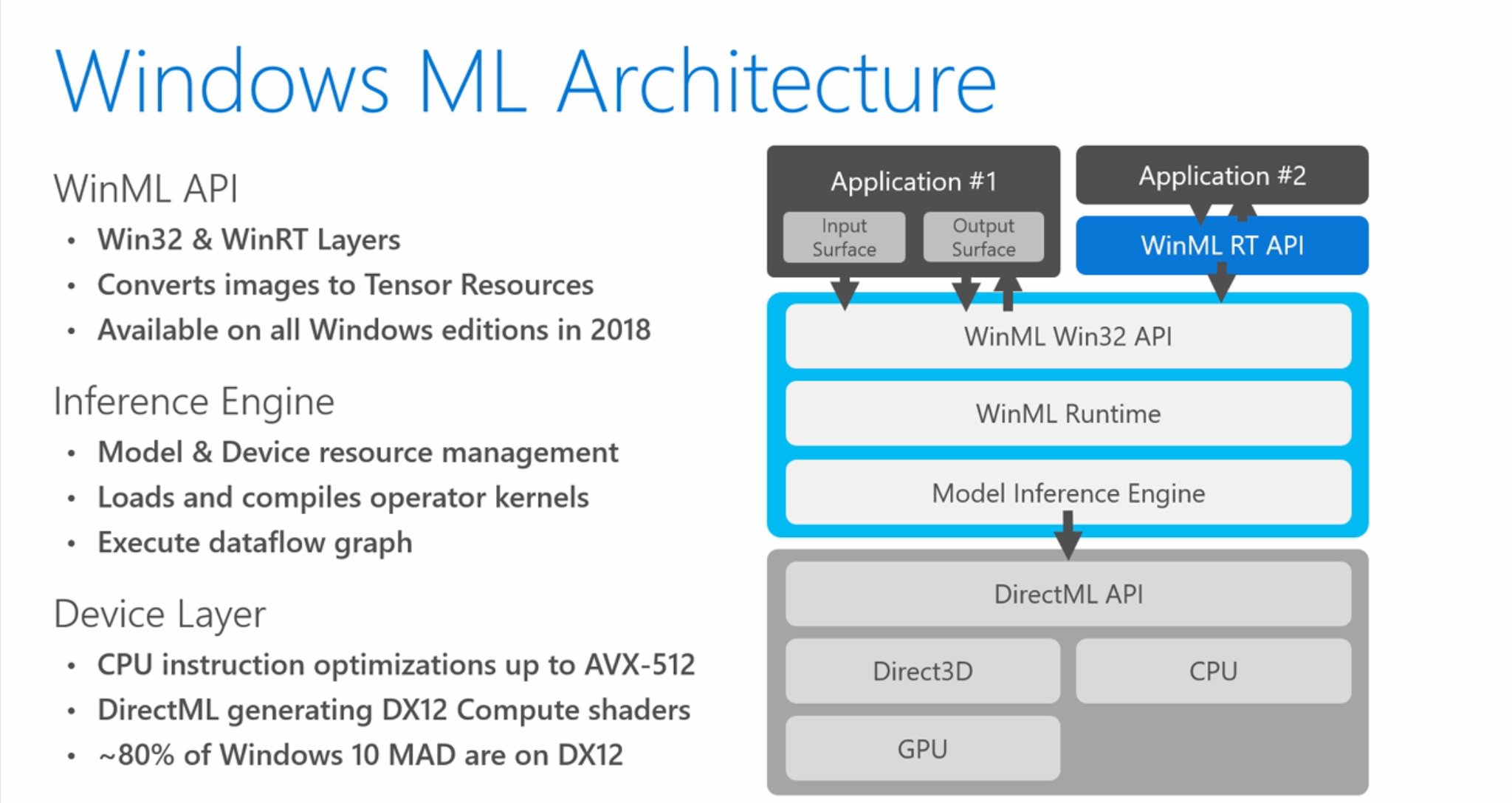

Following its long-standing and win-win tradition (but we remember WinPhone), Microsoft has followed Apple's CoreML for iOS and TensorFlow Lite / Neural Networks API for Android 8.1 by publishing a high-level WinML API for developers that runs on top of DirectML. This layer can use DirectX 12 or access the computing devices (CPU / VPU / GPU) directly.

The point of the technology is to bring trained machine learning models from the cloud or from specialized packages (for example, Anaconda, Microsoft Cognitive Toolkit, TensorFlow) into UWP applications and classic Windows applications (WPF / WinForms / console apps) on Windows 10. A model can also be obtained using Azure Machine Learning Workbench, and in the near future the Azure Custom Vision Service will also support creating ONNX models for Windows. The WinML API will be available in all editions of Windows 10.

This is a solution that Windows developers have been waiting for. Microsoft has built a comfortable ecosystem, while competing ecosystems work to pull users and developers into theirs. There is even a radical opinion that you should forget about Windows when it comes to machine learning. Microsoft is aware of this and, by taking this next step, hopes to break into a new market and win over the skeptics.

At the heart of model exchange between training tools and the applications that use the models is the ONNX project, backed by the technology giants Microsoft, Facebook and Amazon. Developers can convert PyTorch, Caffe2 and other models to the ONNX format and then integrate them into their applications. The developer of the final application does not need to be an experienced machine learning specialist. It is enough to understand that the model is a function: you feed it a prepared data set as input and interpret the response you get back.

At first I took ONNX to be a format for storing neural network models only. The project site says: "ONNX is an open format to represent deep learning models". The name itself, Open Neural Network Exchange, suggests the same. But the published WinML documentation describes an example of exporting a support vector machine (SVM) model to ONNX using the WinMLTools package. An SVM, in its basic form, belongs to the family of linear classifiers and can, in principle, be mapped onto a neural network model. I have not yet looked into which other models can be exported to ONNX. Presumably logistic regression (in effect a single neuron) and even linear regression (a neuron without an activation function) can be exported as well. My first attempt to build the simplest MLP model in CNTK (Microsoft Cognitive Toolkit) and export it to ONNX failed: the hyperparameters I had chosen could not be converted by the current implementation of the format. A compatibility issue. But that was CNTK 2.3.1. A week ago, CNTK 2.5 was released, and the notes for the new versions mention improved ONNX support.
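The remark about regression models being "one neuron" can be made concrete with a minimal sketch. The weights, bias and feature values below are made up for illustration, and nothing here touches ONNX or WinMLTools; it only shows that the two models differ by the activation function.

```python
import math

def neuron(weights, bias, inputs, activation=None):
    """Weighted sum of the inputs plus a bias, optionally passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z) if activation else z

def sigmoid(z):
    """Logistic function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative (made-up) parameters for a model with two input features.
weights, bias = [0.5, -0.25], 0.1
features = [2.0, 4.0]

# No activation: the neuron is a linear regression model.
linear_output = neuron(weights, bias, features)
# Sigmoid activation: the same neuron is a logistic regression model.
logistic_output = neuron(weights, bias, features, sigmoid)

print(linear_output, logistic_output)
```

The only difference between the two calls is the activation argument, which is why both models fit naturally into a format designed for neural networks.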

ML was available on .NET before as well, through alternative libraries such as the open source project Accord.NET. The value of WinML is that it offers a standard that, on the one hand, lets you take models from popular packages and, on the other, run them on a variety of hardware.

In its presentation, Microsoft talked about fast evaluation of loaded models on various platforms thanks to the DirectML layer. For developers, optimizing computation for a zoo of hardware and software platforms is a titanic, sometimes overwhelming job. As Microsoft envisions it, applications will run not only on the desktop but also on Xbox, HoloLens, and even IoT devices.

On the hardware side, specialized cores and modules in CPUs and GPUs from Intel, AMD, Qualcomm and NVIDIA can be used. Have a video card? The model will run on it. No video card, but an Intel CPU? The model will run on 256-bit AVX or even AVX-512 vector instructions. Integrated graphics may be used too. Not enough computing power on an IoT device? Connect an Intel Movidius VPU. All of this is made possible by automatic dynamic compilation of the computational model for the hardware at hand.

The developer does not need to worry about what hardware is available to the application. The WinML engine will dynamically use the hardware and generate the native code needed to get maximum performance from whatever is available on the device. Even more impressive, WinML can run on new laptops and tablets based on the Snapdragon 835, and even on IoT devices. Thus any additional computing power that is available can be used, and if none is available, a CPU alone is sufficient for the calculations.
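The fallback idea described here (use an accelerator if one is present, otherwise the CPU) can be sketched in a few lines. This is only an illustration: the device names and the preference order are assumptions, and WinML's real selection logic lives inside the runtime and is not described in this article.

```python
# Hypothetical device names and preference order, chosen for illustration only.
PREFERENCE = ["GPU", "VPU", "CPU"]

def pick_device(available):
    """Return the most preferred device that is present, falling back to the CPU."""
    for device in PREFERENCE:
        if device in available:
            return device
    return "CPU"  # a CPU is assumed to always be present

print(pick_device({"CPU", "GPU"}))  # a machine with a video card
print(pick_device({"CPU", "VPU"}))  # an IoT board with an attached VPU
print(pick_device({"CPU"}))         # nothing but the processor
```

The point of WinML is that application code never has to contain such a function; the sketch only shows the decision the engine makes on the developer's behalf.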

With the spring update, developers can use the WinML platform across the entire Windows device family, from IoT devices to workstations, servers, and data centers. The WinML preview supports FP32 calculations, and the final version is promised to support FP16. Today this matters little, but with the arrival of Volta GPUs it will make significantly higher performance possible on FP16 tensor cores (for some specially trained models). Microsoft has already demonstrated an 8x speedup.
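Why FP16 needs "specially trained" models becomes clearer when you look at how much precision the format keeps. The sketch below uses only Python's standard `struct` module to store one made-up weight value in 32-bit and 16-bit IEEE 754 formats and read it back; it says nothing about how WinML itself handles either format.

```python
import struct

def round_trip(value, fmt):
    """Pack a Python float into the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

weight = 0.1234567  # an arbitrary example value

as_fp32 = round_trip(weight, "<f")  # 32-bit single precision (4 bytes)
as_fp16 = round_trip(weight, "<e")  # 16-bit half precision (2 bytes)

fp32_error = abs(as_fp32 - weight)
fp16_error = abs(as_fp16 - weight)

print(f"FP32: {as_fp32!r} (error {fp32_error:.2e})")
print(f"FP16: {as_fp16!r} (error {fp16_error:.2e})")
```

Half precision takes half the memory and bandwidth, but its rounding error is several orders of magnitude larger, so a model has to tolerate that loss to benefit from FP16 hardware.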

As a result, developers get a number of advantages:

Low latency, real-time results. Windows can evaluate a model using the PC's own resources, so large local data such as images and video can be analyzed on the fly. Results arrive quickly and without delay.

Reduced operating costs, thanks to moving model evaluation from the cloud to users' devices. Substantial savings can be achieved by reducing or eliminating the cost of transferring data between the cloud analytics service and the client. Running the ML model on the client side lets you process requests locally, while small amounts of new data for further training of the model can be sent to the service.

Flexibility. Developers can choose where to run the calculations: locally or in the cloud. A customer's policy may prohibit sending sensitive data over the network or, on the contrary, may require computing in the cloud to get results faster, which matters for large models and low-power devices.

Getting Started with Windows Machine Learning

To use the technology, you will need to update your software. Starting with Visual Studio Preview 15.7, adding an ONNX file to a UWP project automatically generates the necessary interfaces in the developer's project. Note that this is a preview. In addition, the required version of Windows 10 is currently available only through the Insider Preview program. With earlier versions of Visual Studio, developers can use the MLGen tool to generate the interface and then add it to their projects manually. This feature will soon be available in Visual Studio Tools for AI.

The Windows ML API documentation was published on March 7th, at the same time as Windows Developer Day March 2018. The video is available on the Windows Developer channel on YouTube.

For those who already work with machine learning, the benefits of a standard library are clear. For those who are not yet familiar with the subject, I will briefly describe what ready-made ML models can bring to their applications.

As mentioned above, an ML model is a function. Its input is a vector of fixed length (a parameterized description of an entity) or variable length (sound / spectrogram, video sequence). Its output is a response vector, which can be interpreted as assigning the input vector to a class or classes (classification and clustering problems) with some degree of likelihood (probability), or as computed parameters of the input vector (a regression problem). This is the simplest case; more complex applications are beyond the scope of this article.
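Interpreting the response vector of a classifier can be sketched as follows. The sketch assumes the model returns raw scores that still need to be turned into probabilities with softmax; some models already output probabilities, in which case only the last step applies. The scores and labels are made up.

```python
import math

def softmax(scores):
    """Turn raw model outputs into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def interpret(scores, labels):
    """Return the most likely label together with its probability."""
    probabilities = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]

# Made-up raw outputs of a three-class classifier.
label, probability = interpret([1.0, 3.0, 0.5], ["cat", "dog", "bird"])
print(label, round(probability, 3))
```

From the application's point of view this is all there is to "using a model": prepare the input vector, call the function, and read the answer off the output vector.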

As an example, Microsoft has posted the well-known MNIST sample on GitHub. The model can be plugged into your program to recognize digits drawn with a finger on a touchscreen. However, similar and even richer functionality has long been available in the Handwriting Recognition API.
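Preparing the input for such a model might look like the sketch below. MNIST images are 28x28 grayscale pixels; the flattening and the scaling to the range 0..1 are assumptions made for illustration, since the exact layout and normalization a particular ONNX model expects depend on how it was trained.

```python
WIDTH = HEIGHT = 28  # MNIST images are 28x28 grayscale pixels

def to_input_vector(pixels):
    """Flatten a 2D grid of 0..255 intensities into a list of floats in [0, 1]."""
    if len(pixels) != HEIGHT or any(len(row) != WIDTH for row in pixels):
        raise ValueError("expected a 28x28 grid")
    return [value / 255.0 for row in pixels for value in row]

# A blank canvas with one fully inked pixel, standing in for a finger drawing.
canvas = [[0] * WIDTH for _ in range(HEIGHT)]
canvas[14][14] = 255

vector = to_input_vector(canvas)
print(len(vector), max(vector))
```

The resulting vector is what gets handed to the model; the ten numbers that come back are then interpreted as scores for the digits 0 through 9.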

Let us look at such tasks using game development as an example.

The input vector can be an image or a representation of some (game) situation. It can describe a series of user actions or what the user sees and acts upon. The model can predict the user's actions and build a new environment based on them.

You can tailor the game world to the player, offering more quests and more rewards and quickly adapting to the player's preferences. If the player likes finding treasure and dislikes battles, the model can add more treasure and make the battles easier.

Reinforcement and imitation learning models can contribute to the gaming experience

The input can be a description of the environment for a bot, whose actions are the result of evaluating the model. The output can also be complex graphics, for example rendering emotions or plausible articulation matched to an audio file.

A rather difficult task is computing plausible dynamics for the movement of a character's limbs.

Last year, at SIGGRAPH 2017, an ML model was presented for computing fluid dynamics without direct hydrodynamic simulation, which significantly reduces the amount of computation.

With some imagination, you can use models for procedural texture generation via style transfer (similar to Prisma). Landscapes, architecture and other entities can be created in the same way.

As I see it, a market for trained models (bots) of game characters, similar to the main character of the game Moss, could well emerge, first of all for characters with well-developed skeleton / shell dynamics. Suppose we feed the ML model the input conditions (speed / state, floor / step coordinates, a vector of emotions, actions / goals) and get the next state at the output. From these frames, you can assemble a coherent 3D animation.

You can build a model that classifies a user's actions by the dynamics of mouse movement, the keyboard usage profile, and so on.

What I found unexpected is the use of ML models to improve image quality. A number of articles cite NVIDIA's “smart” filtering, called “super-resolution technology”, as an example.

ML Super Sampling (left) and bilinear upsampling (right)
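For reference, the bilinear upsampling used as the baseline in this comparison can be sketched in plain Python. This is a minimal version for a single-channel image stored as a list of rows, with the corner pixels of the source and the result aligned; it is not the ML approach, which replaces this fixed blending rule with a trained model.

```python
def bilinear_upsample(image, new_height, new_width):
    """Resize a 2D grid of numbers by blending the four nearest source pixels."""
    old_height, old_width = len(image), len(image[0])
    result = []
    for y in range(new_height):
        # Map the output row to a fractional source row (corners stay aligned).
        src_y = y * (old_height - 1) / (new_height - 1) if new_height > 1 else 0.0
        y0 = int(src_y)
        y1 = min(y0 + 1, old_height - 1)
        fy = src_y - y0
        row = []
        for x in range(new_width):
            src_x = x * (old_width - 1) / (new_width - 1) if new_width > 1 else 0.0
            x0 = int(src_x)
            x1 = min(x0 + 1, old_width - 1)
            fx = src_x - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        result.append(row)
    return result

small = [[0.0, 10.0],
         [20.0, 30.0]]
large = bilinear_upsample(small, 3, 3)
for row in large:
    print(row)
```

Every new pixel is just a weighted average of its neighbors, so no detail is added, which is why the result looks blurry next to a learned super-resolution filter.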

The scope of ML models is vast and limited only by developers' imagination. If you liked this article, the following articles will cover building and using ML models with simple examples.

Source: https://habr.com/ru/post/351724/
