ComputerVision and what it eat

With the development of computer power and the emergence of a variety of image processing technologies, the question has increasingly arisen: can we teach the machine to see and recognize images? For example, to distinguish a cat from a dog or even a bloodhound from a basset? There is no need to speak about the accuracy of recognition: our brain is incomparably faster able to understand that it is in front of us, provided that earlier we received enough information about the object. Those. even seeing only a part of a dog, we can safely say that it is a dog. And if you are a dog breeder, then it is easy to determine the breed of a dog. But how to teach the car to distinguish between them? What are the algorithms? Is it possible to deceive the car? (Spoiler: of course you can! In the same way as our brain.) Let's try to comprehend all these questions and, if possible, answer them. So let's get started.

With the development of computer power and the emergence of a variety of image processing technologies, the question has increasingly arisen: can we teach the machine to see and recognize images? For example, to distinguish a cat from a dog or even a bloodhound from a basset? There is no need to speak about the accuracy of recognition: our brain is incomparably faster able to understand that it is in front of us, provided that earlier we received enough information about the object. Those. even seeing only a part of a dog, we can safely say that it is a dog. And if you are a dog breeder, then it is easy to determine the breed of a dog. But how to teach the car to distinguish between them? What are the algorithms? Is it possible to deceive the car? (Spoiler: of course you can! In the same way as our brain.) Let's try to comprehend all these questions and, if possible, answer them. So let's get started.How to teach the car to see?

Machine Vision refers to Machine Learning, which includes semantic analyzers, sound recognizers, and so on. This is a complex subject area, and it requires in-depth knowledge of mathematics (including brrrrr, tensor mathematics). There are many algorithms that are used in pattern recognition: VGG16, VGG32, VGG29, ResNet, DenseNet, Inception (V1, V2, V3, V ... there are hundreds of them!). The most popular are VGG16 and Inception V3 and V4. The easiest, most popular and most suitable for a quick start is VGG16, so we select and pick it. Let's start with the name VGG16 and find out what is sacred in it and how it stands for. And here K.O. prompts that everything is simple: VGG is the Visual Geometry Group c of the Faculty of Engineering Sciences of the Oxford University. Well, where did 16 come from? It is a bit more complicated and directly related to the algorithm. 16 - the number of layers of the neural network, which is used for pattern recognition (finally, an interesting thing is started, otherwise everything is around). And it's time to cut into the very essence of matan. They themselves use from 16 to 19 layers and 3x3 filters at each level of the neural network. Actually, there are several reasons: the greatest performance with just such a number of layers, recognition accuracy (this is quite enough, links to articles, once and twice ). It should be noted that the neural network is used not simple, but an improved convolutional neural network (CNN) and Convolutional Neural Network with Refinement (CNN-R).

And now we will understand the principles of operation of this algorithm, why we need a convolutional neural network and with what parameters it works. And, of course, it is better to do it in practice right away. Assistant in the studio!

')

This wonderful husky will become our assistant, and we will set up experiments on it. She is already glowing with happiness and wants to quickly get into the details.

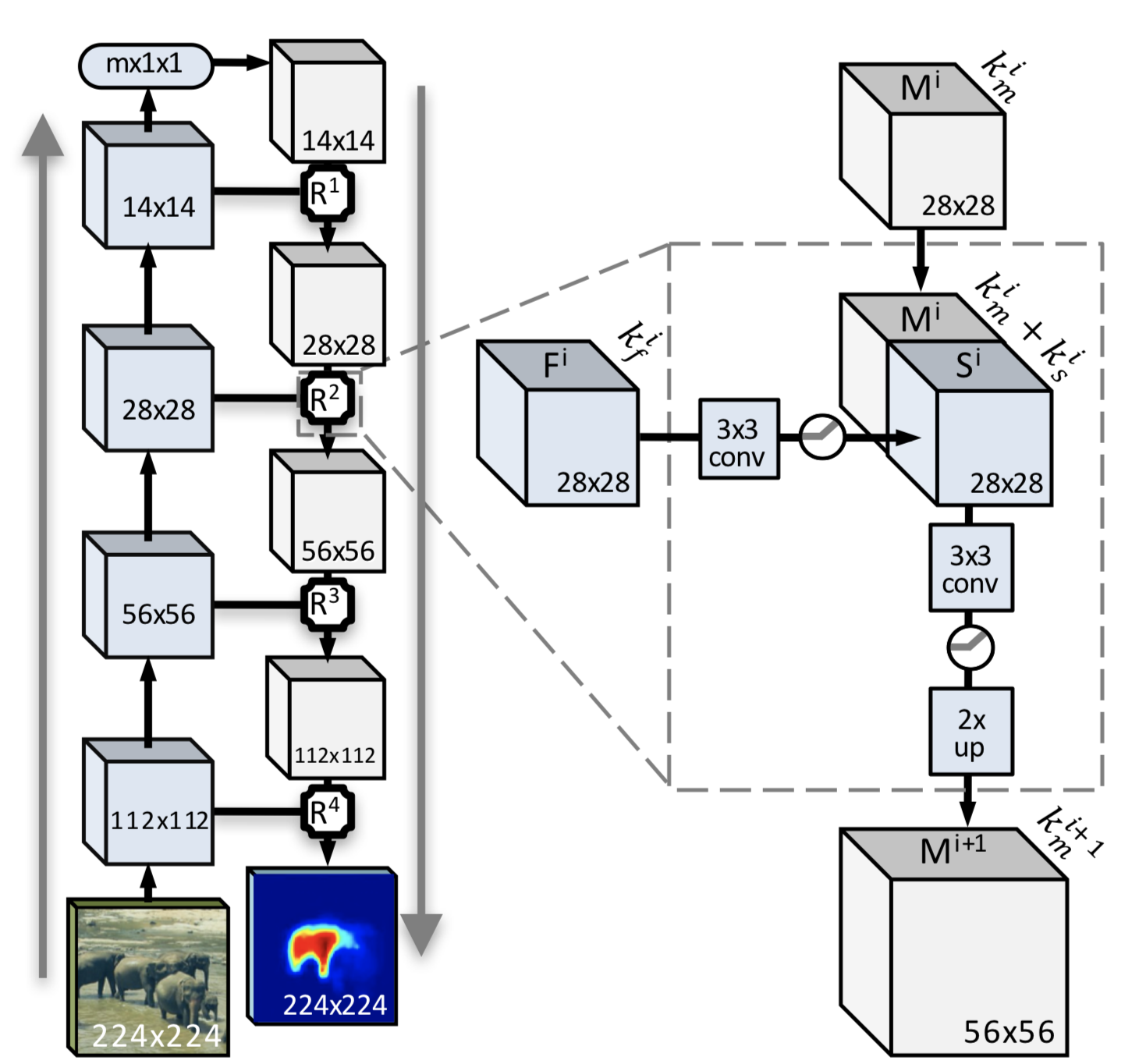

So, in simple words: the convolutional neural network works with two “commands” (I exaggerate a lot, but it will be clearer). The first - select the fragment, the second - try to find the whole object. Why the whole thing? Having available a set of the smallest patterns with contours of whole objects, it is easier for neural networks to further obtain the characteristic features and compare them with the image that is required to be recognized. And here we will help the image of the algorithm in the final form. Who wants to study in detail, with tons of matan - that's pruflink . And we go further.

Explaining the following algorithm is not so difficult. This is the complete algorithm for the work of the convolutional neural network with the enhancement module (this is the one that is surrounded by a dotted line and is present after each step of the convolution).

The first step: we are cutting the image in half (done with the Huskies, but it doesn’t look as impressive as the elephants in the picture). Then in half, then more and more, and so we reach the cockroaches, in the sense of a block 14x14 and less (the minimum size, as already mentioned, 3x3). Between steps - the main magic. Namely - getting the mask of the whole object and the probability of finding the whole object on the sawed piece. After all these manipulations we get a set of pieces and a set of masks for them. What to do with them now? The answer is simple: when reducing the image, we get the degradation of neighboring pixels, which will simplify the construction of the mask in a simplified form and provide an opportunity to increase the probability of distinguishing transitions between objects.

Based on the analysis of reduced images, independent initial descriptions are built and then the result is averaged for all masks. But it should be noted that this option is not the best for the segmentation of the object. The average mask may not always be applicable when there are small differences in color between the objects and the background (this is why this test image was chosen).

The proposed approach is more or less efficient, but for objects that are entirely in the image and contrast with the background, at the same time, the separation of two identical or similar objects (we will not go far beyond the example: a flock of sheep, all have white wool) .

To obtain an adequate pixel mask and the separation of objects it is necessary to bring the algorithm to mind.

And now the time has come to ask: why do we need this “module-improver”, if everything seems to be so good?

At each step, we add not only the construction of the mask, but also the use of all the information obtained from low-level functions together with knowledge of high-level objects that are recognized in the upper layers of the neural network. Along with obtaining independent results, at each step generation and coarse recognition is performed, the result is a semantically complete functional multichannel map into which only positive information from the early layers is integrated.

What is the difference between the classic convolutional neural network and the improved convolutional neural network?

Each step of reducing the original image allows you to get a coded mask generated when passing from the overall image to a smaller one. At the same time, in the improved convolutional neural network, there is a movement from a smaller image to a larger one, with obtaining characteristic functions (points). The result is a mask resulting from the bidirectional merging of functions and characteristic points.

It sounds quite confusing. Let's try to apply to our image.

Effectively. This is called DeepMask - the rough boundaries of the objects in the image. Let's try to figure out why. Let's start with a simple - with the nose. When the image is degraded, the contrast is obvious, therefore the nose is selected as an independent object. The same with the nostrils: at certain levels of the convolution, they became independent objects due to the fact that they were completely on the fragment. In addition, the face of the second dog is circled separately (how can you not see? Why, she is right in front of you!). A piece of hand was recognized as a background. So what to do? With the main part of the background color does not contrast. But the transition "hand-shirt" is highlighted. And the error in the form of a “hand-mesh” spot, and a large spot, an exciting background and a husky's head.

Well, the result is interesting! How can you help the algorithm to cope with the task better? Only if you make fun of our assistant. To begin, let's try to make it in grayscale. Hmm ... The photo is worse than the passport. After that, the technician had nothing to lose, I just turned on one filter, and everything began to turn ... Sepia, and after it solarization, overlaying and subtracting layers, blurring the background with the addition of images - in general, what I saw was used; The main thing is that the objects become more noticeable in the image. As they say, the picture in Photoshop can endure. Mocked, it would be time to see how the image will now be recognized.

The neural network said: "There is no one who can somehow be classified." It is logical, contrasting only the nose and eyes. Not very typical with a small set of training.

Wow, what a horror (sorry, friend)! But here we seriously added contrast to the objects in the image. How? We take and duplicate the image (several times). On one we unscrew the contrast, on the other - the brightness, on the third - we burn the colors (make them unnaturally bright) and then add it all up. And finally, we will try to stuff the long-suffering husky into processing.

I must say that it became better. Not straightforward “wow, how cool,” but better. The number of incomplete objects decreased, the contrast between the objects appeared. Further experiments with preprocessing will give more contrast: object - object, object - background. We get 4 segments instead of 8. From the point of view of processing a large flow of images (150 images per minute) it’s better not to bother with pre-processing at all. She - so, play on the home computer.

Go ahead. SharpMask will not differ much. SharpMask is the construction of a refined object mask. The improvement algorithm is the same.

The main problem of DeepMask is that this model uses a simple network of direct distribution, which successfully creates “rough” masks, but does not perform segmentation with pixel accuracy. We skip the example for this step, as the Huskies are already not so easy to live.

The last step is an attempt to recognize what happened after the mask was clarified.

We run a quickly assembled demo and get the result - as much as 70% that this is a dog. Not bad.

But how did the car realize that it was a dog? So we sawed pieces, got beautiful masks, matrices for them and sets of signs. What's next? And then everything is simple: we have a trained network, which has reference sets of signs, masks, etc., etc., etc. Well, are they, and what's next? Here is our husky with a set of signs, here is a reference spherical dog in a vacuum with a set of signs. Stupid comparison in the forehead can not be done, because the lack of signs in the image, which we recognize, will lead to an error. And what to do? To do this, they came up with such a wonderful parameter as a dropout, or a random network reset. This means the following: both sets are taken and signs are randomly removed from each of them (in other words, there are sets of 10 signs, dropout = 0.1; we discard each sign, then compare). And as a result? And as a result - PROFIT.

I will answer the question why the second dog is not a dog, and the hand is not a man. The test sample was only 1000 images of cats and dogs. She studied in just two steps of evolution.

Instead of conclusions

So, we got a picture and the result that this is a dog (it is obvious to us, but for a neural network it was not very). The training was conducted in just two steps and there was no evolution of the model (which is very important). The image was loaded as it is, without processing. In the future, it is planned to check whether the neural network can recognize dogs by the minimum set of features.

Of the benefits:

- We conducted training on one machine. If necessary, files can be scattered immediately to other machines, and they (the machines) will already be able to do the same.

- High definition accuracy when learning and network evolution (this is soooo long).

- You can evolve under different recognition algorithms and "train" the network.

- Huge database of COCO and VOC images (updated annually).

Of the minuses: dancing with a tambourine with each framework.

PS When conducting experiments, no husky suffered. About how they collected, what they collected, how many rakes, to which places and so on - in the next article of our series “Machine Vision for Housewives”.

PPS And very, very briefly: a silver bullet does not exist, but there is a Faust-cartridge that can be turned with a file. And for a quick start, the following frameworks were used:

Keras

Caffe

Tensorflow

Source: https://habr.com/ru/post/351638/

All Articles