Node.js + face-recognition.js: simple and reliable face recognition with deep learning

Translation of the article Node.js + face-recognition.js: Simple and Robust Face Recognition using Deep Learning.

In this article we will explain how to implement a reliable face recognition system using face-recognition.js. We were looking for a suitable Node.js library that could recognize faces accurately, but found nothing, so I had to write my own!


This npm package is built on the dlib library and provides Node.js bindings for dlib's well-proven recognition tools. Dlib uses deep learning methods and ships with pretrained models that reach a recognition accuracy of 99.38% on the LFW benchmark.

What for?

Recently we have been trying to build a Node.js face recognition application that would highlight and recognize the faces of characters from The Big Bang Theory. At first we planned to build it with OpenCV's recognition tools, as described in the article Node.js + OpenCV for Face Recognition.

However, despite their high speed, the quality of these tools turned out to be insufficient. More precisely, they coped well with frontal faces, but as soon as a person turned slightly away from the camera, recognition quality dropped.

In search of a solution, we came across dlib, a C++ library. We fiddled with its Python API, were impressed with the results and finally decided to use this library together with Node.js! That is how this npm package was born, providing a simplified Node.js API for face recognition.

And what is face-recognition.js?

I wanted to make face-recognition.js a package that:

- allows you to quickly begin to recognize faces through a simple API;

- if necessary, allows for fine tuning;

- is easy to install (ideally, running npm install would be enough).

Although the package is not finished yet, you can already use some of its tools.

Face Detection

To detect faces in an image, you can use either a deep neural network, which is more reliable, or a simple frontal face detector, which is faster but less robust:

Face Recognizer

The recognizer is a deep neural network that uses the models mentioned above to compute unique face descriptors. You can train the recognizer on an array of labeled face images, after which it will be able to label the faces in an input image:

Face Landmarks

With this package you can also detect 5 or 68 landmark points on faces:

Great story, now show how it works!

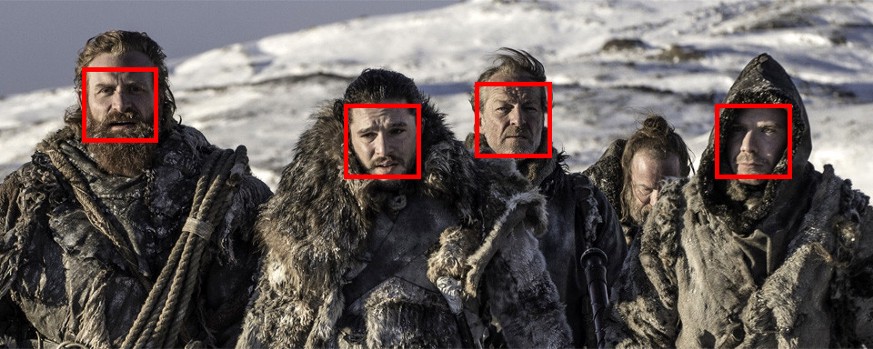

So, solving the problem with OpenCV failed. We were left with a pile of face images of Sheldon, Rajesh, Leonard, Howard and Stuart, each 150 x 150 pixels in size. Using this data, you can easily train the face recognizer to recognize new faces. The code for this example is in the repository.

Data preparation

We collected about 20 faces of each character from different angles:

We take 10 faces of each character for training and use the rest to assess recognition accuracy:

    const path = require('path')
    const fs = require('fs')
    const fr = require('face-recognition')

    const dataPath = path.resolve('./data/faces')
    const classNames = ['sheldon', 'lennard', 'raj', 'howard', 'stuart']

    // load every image whose file name contains the class name
    const allFiles = fs.readdirSync(dataPath)
    const imagesByClass = classNames.map(c =>
      allFiles
        .filter(f => f.includes(c))
        .map(f => path.join(dataPath, f))
        .map(fp => fr.loadImage(fp))
    )

    // first 10 images of each class for training, the rest for testing
    const numTrainingFaces = 10
    const trainDataByClass = imagesByClass.map(imgs => imgs.slice(0, numTrainingFaces))
    const testDataByClass = imagesByClass.map(imgs => imgs.slice(numTrainingFaces))

Each file name contains the character's name, so we can easily map our class names ['sheldon', 'lennard', 'raj', 'howard', 'stuart'] to arrays of images for each class. With fr.loadImage(fp) you can read the image at the given file path.

Detecting faces

The 150 x 150 pixel face images were cropped in advance using opencv4nodejs. But you can also detect faces, then crop, save and label them, like this:

    const image = fr.loadImage('image.png')
    const detector = fr.FaceDetector()
    const targetSize = 150
    const faceImages = detector.detectFaces(image, targetSize)
    faceImages.forEach((img, i) => fr.saveImage(img, `face_${i}.png`))

Recognizer Training

Now you can start training:

    const recognizer = fr.FaceRecognizer()

    trainDataByClass.forEach((faces, label) => {
      const name = classNames[label]
      recognizer.addFaces(faces, name)
    })

This code feeds the faces to the neural network, which outputs a descriptor for each face and stores it under the corresponding class. By passing numJitters as the third argument to addFaces, you can apply rotation, scaling and mirroring, creating different versions of each input face. Increasing the number of jittered versions can improve recognition accuracy, but training then takes longer.

You can also save the state of the recognizer so that you do not have to train it again each time and can simply load it from a file:

Saving:

    const modelState = recognizer.serialize()
    fs.writeFileSync('model.json', JSON.stringify(modelState))

Loading:

    // the leading ./ makes require resolve the file relative to this script
    const modelState = require('./model.json')
    recognizer.load(modelState)

Recognizing new faces

Now, using the test data, we will check the recognition accuracy and log the results:

    // number of wrong classifications per class
    const errors = classNames.map(() => 0)

    testDataByClass.forEach((faces, label) => {
      const name = classNames[label]
      console.log()
      console.log('testing %s', name)
      faces.forEach(face => {
        const prediction = recognizer.predictBest(face)
        console.log('%s (%s)', prediction.className, prediction.distance)

        // count the number of wrong classifications
        if (prediction.className !== name) {
          errors[label] += 1
        }
      })
    })

    // print the result
    const result = classNames.map((className, label) => {
      const numTestFaces = testDataByClass[label].length
      const numCorrect = numTestFaces - errors[label]
      // accuracy in percent, truncated to two decimal places
      const accuracy = Math.trunc((numCorrect / numTestFaces) * 10000) / 100
      return `${className} ( ${accuracy}% ) : ${numCorrect} of ${numTestFaces} faces have been recognized correctly`
    })
    console.log('result:')
    console.log(result)

Currently, recognition works as follows: for the input face, the Euclidean distance from its descriptor vector to each descriptor of a class is computed, and then the mean of all these distances is taken. You may argue that k-means clustering or an SVM classifier would be better suited for this task. Perhaps they will also be implemented in the future, but for now the speed and effectiveness of the Euclidean distance are quite sufficient.
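The mean-distance scheme described above can be sketched in plain JavaScript. This is only an illustration, not the library's actual implementation: the tiny 3-dimensional descriptors, the trainedDescriptors object and the helper functions are all made up for the example (real dlib face descriptors have 128 dimensions).

```javascript
// Hypothetical toy descriptors; real face descriptors are 128-dimensional.
const trainedDescriptors = {
  sheldon: [[0.1, 0.2, 0.3], [0.1, 0.3, 0.3]],
  raj: [[0.9, 0.8, 0.7], [0.8, 0.8, 0.6]]
}

// Euclidean distance between two vectors of equal length.
function euclideanDistance(a, b) {
  return Math.sqrt(a.reduce((sum, ai, i) => sum + (ai - b[i]) ** 2, 0))
}

// For each class: mean distance from the input descriptor
// to all descriptors stored for that class.
function predict(descriptor, descriptorsByClass) {
  return Object.entries(descriptorsByClass).map(([className, descriptors]) => {
    const total = descriptors.reduce(
      (sum, d) => sum + euclideanDistance(descriptor, d),
      0
    )
    return { className, distance: total / descriptors.length }
  })
}

// The class with the smallest mean distance is the best match.
function predictBest(descriptor, descriptorsByClass) {
  return predict(descriptor, descriptorsByClass)
    .reduce((best, p) => (p.distance < best.distance ? p : best))
}

const best = predictBest([0.1, 0.2, 0.3], trainedDescriptors)
console.log(best.className) // sheldon
```

Averaging over all stored descriptors keeps the prediction step trivial, at the cost of scanning every training descriptor for each input face.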

By calling predictBest we get the result with the smallest Euclidean distance, that is, with the greatest similarity. Like this:

    { className: 'sheldon', distance: 0.5 }

If you need the distances to the descriptors of all classes for a given face, you can simply use recognizer.predict(image), which returns an array with the distance for each class:

    [
      { className: 'sheldon', distance: 0.5 },
      { className: 'raj', distance: 0.8 },
      { className: 'howard', distance: 0.7 },
      { className: 'lennard', distance: 0.69 },
      { className: 'stuart', distance: 0.75 }
    ]

Results

If you run the code above, you get the following results.
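Note that the accuracy percentages are truncated, not rounded, to two decimal places. A minimal sketch of that arithmetic, with a hypothetical helper accuracyPercent that uses Math.trunc for the truncation step:

```javascript
// Hypothetical helper mirroring the accuracy expression from the test code:
// scale to hundredths of a percent, drop the fraction, scale back.
function accuracyPercent(numCorrect, numTestFaces) {
  return Math.trunc((numCorrect / numTestFaces) * 10000) / 100
}

console.log(accuracyPercent(10, 11)) // 90.9
console.log(accuracyPercent(15, 17)) // 88.23
console.log(accuracyPercent(7, 8))   // 87.5
```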

We train on 10 faces of each character:

    sheldon ( 90.9% ) : 10 of 11 faces have been recognized correctly
    lennard ( 100% ) : 12 of 12 faces have been recognized correctly
    raj ( 100% ) : 12 of 12 faces have been recognized correctly
    howard ( 100% ) : 12 of 12 faces have been recognized correctly
    stuart ( 100% ) : 3 of 3 faces have been recognized correctly

We train on only 5 faces of each character:

    sheldon ( 100% ) : 16 of 16 faces have been recognized correctly
    lennard ( 88.23% ) : 15 of 17 faces have been recognized correctly
    raj ( 100% ) : 17 of 17 faces have been recognized correctly
    howard ( 100% ) : 17 of 17 faces have been recognized correctly
    stuart ( 87.5% ) : 7 of 8 faces have been recognized correctly

And this is how it looks in the video:

Conclusion

Judging by the results, even a small sample of training data allows for fairly accurate recognition. This is despite the fact that some of the input images are very blurred due to their small size.

Source: https://habr.com/ru/post/351586/
