Evolution of testing strategies - stop being a monkey

In this series of articles, I want to describe our experience building a fully automated testing strategy (with no QA team) for the Nielsen Marketing Cloud web application, which we have been developing over the past few years.

At the Nielsen Marketing Cloud development center, we do no manual testing at all, neither for new functionality nor for regressions. This gives us several advantages:

- manual testing is expensive. An automated test is written once and can be run millions of times

- each developer feels more responsibility, since no one else will check their new features

- release time is reduced: new functionality can be shipped every day without waiting for other people or teams

- confidence in the code

- self-documenting code

In the long run, good test automation yields better quality and fewer regressions in existing functionality.


But then the million-dollar question arises: how do you automate testing effectively?

Part 1 - How not to do it

When most developers hear “automated testing”, they think “automating user behavior” (end-to-end tests using Selenium), and we were no exception.

Of course, we knew about the importance of unit and integration tests, but “Let's just go into the system, press the button and check all parts of the system together” seemed like a rather logical first step in automation.

So the decision was made: let's write a bunch of end-to-end tests with Selenium!

It seemed like a good idea, but the result we eventually arrived at was far less pleasant. Here are the problems we encountered:

1. Unstable tests

At some point, once we had written a fairly large number of end-to-end tests, they began to fail. And the failures were intermittent.

What do developers do when they do not understand why a test failed at random? That's right, they add a sleep.

It starts with a 300 ms sleep in one place, then 1 second in another, then 3 seconds.

At some point, we find something like this in the tests:

sleep(60000) // wait for action to be completed

I think it's obvious enough why adding sleep is a bad idea, right? *

* In case it is not: sleeps slow the tests down, and their duration is fixed, which means that if something goes wrong and an operation takes longer than planned, your tests will fail. In other words, they will be unstable and non-deterministic.
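A common alternative to a fixed sleep, which the article itself does not describe, is to poll for a condition with an upper time limit. The sketch below is a minimal illustration in Python; `wait_until` and `operation_finished` are hypothetical names, and the simulated operation stands in for whatever the real test would be waiting on.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            # Return as soon as the condition holds instead of always waiting the full time.
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Simulated asynchronous operation (an assumption for this example):
# it reports completion on the third check.
calls = {"count": 0}


def operation_finished() -> bool:
    calls["count"] += 1
    return calls["count"] >= 3


wait_until(operation_finished, timeout=2.0, interval=0.01)
```

Unlike `sleep(60000)`, the wait ends as soon as the condition is met, and the timeout only matters when something really goes wrong. It does not remove the underlying difficulty of choosing a reliable condition to wait for.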

2. Tests are slow

Because automated end-to-end tests exercise a real system with a real server and database, running them is a rather slow process.

When something is slow, developers tend to avoid it, so no one runs the tests locally, and the code is pushed to version control in the hope that CI will be green. But it is not. CI is red. And the developer has already gone home. And…

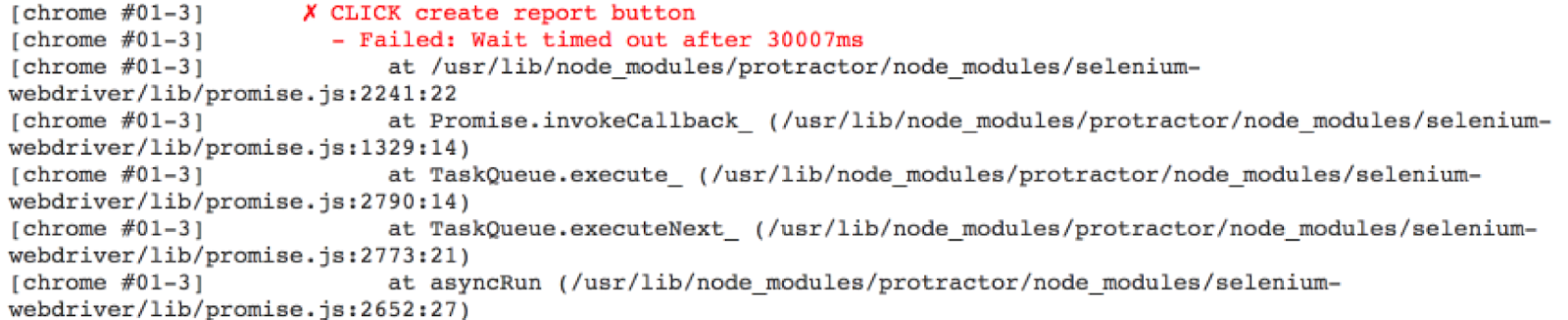

3. Test failures are not informative

One of the biggest problems with our end-to-end tests is that when one of them fails, we cannot tell what the problem is.

And the problem can be ANYWHERE: the environment (remember that ALL parts of the system are part of the equation), configuration, server, frontend, data, or simply an unstable test (not enough sleep).

And the only thing you see is:

In the end, figuring out what really went wrong takes a lot of time and quickly becomes tiresome.

4. Test data

Since we test the entire system with all its layers together, it is sometimes very difficult to simulate or set up a complex scenario. For example, testing how the user interface reacts when the server is unavailable or the data in the database is corrupted is not a trivial task at all, so most developers take the path of least resistance and test only the positive scenarios. And no one knows how the system will behave when problems occur (and they definitely will).
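For contrast, here is a rough sketch of what the "server is unavailable" scenario can look like when the server dependency is replaced by a stub instead of being a real running system. Everything in it is hypothetical: `render_status`, the stub functions and the messages are made up for illustration and are not taken from our application.

```python
from typing import Callable, List


def render_status(fetch_segments: Callable[[], List[str]]) -> str:
    """Hypothetical UI logic: returns the text the user would see."""
    try:
        segments = fetch_segments()
    except ConnectionError:
        # Negative scenario: the server cannot be reached.
        return "Server is unavailable, please try again later"
    return f"{len(segments)} segments loaded"


def unavailable_server() -> List[str]:
    """Stub that behaves like a server refusing connections."""
    raise ConnectionError("connection refused")


def healthy_server() -> List[str]:
    """Stub that behaves like a server returning two items."""
    return ["a", "b"]


print(render_status(unavailable_server))
print(render_status(healthy_server))
```

In an end-to-end test the same check would require actually taking a server down in a shared environment, which is exactly why such scenarios tend to be skipped.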

The unavailable server is just one example. Often you need to check a specific scenario in which a button should be disabled, and building a whole universe for that one scenario takes serious effort. And that is before mentioning that the data has to be cleaned up after the tests, so as not to leave trash behind for the tests that follow.
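The cleanup requirement can be sketched as follows. This is only an illustration under stated assumptions: `fake_db` is a hypothetical in-memory stand-in for a real database, and `temporary_records` is a made-up helper, not part of our test code.

```python
from contextlib import contextmanager
from typing import Dict, Iterator

# Hypothetical in-memory stand-in for the real database.
fake_db: Dict[str, dict] = {}


@contextmanager
def temporary_records(records: Dict[str, dict]) -> Iterator[None]:
    """Insert `records` for the duration of a test and remove them afterwards."""
    fake_db.update(records)
    try:
        yield
    finally:
        # Runs even if the test body raises, so no trash is left for later tests.
        for key in records:
            fake_db.pop(key, None)


# The "button should be disabled" case needs an inactive user to exist.
with temporary_records({"user-1": {"name": "Alice", "active": False}}):
    assert fake_db["user-1"]["active"] is False

# After the block, the record is gone again.
assert "user-1" not in fake_db
```

With a real database spanning several services, the same guarantee is much harder to provide, and a single test that crashes before its cleanup can break the tests that run after it.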

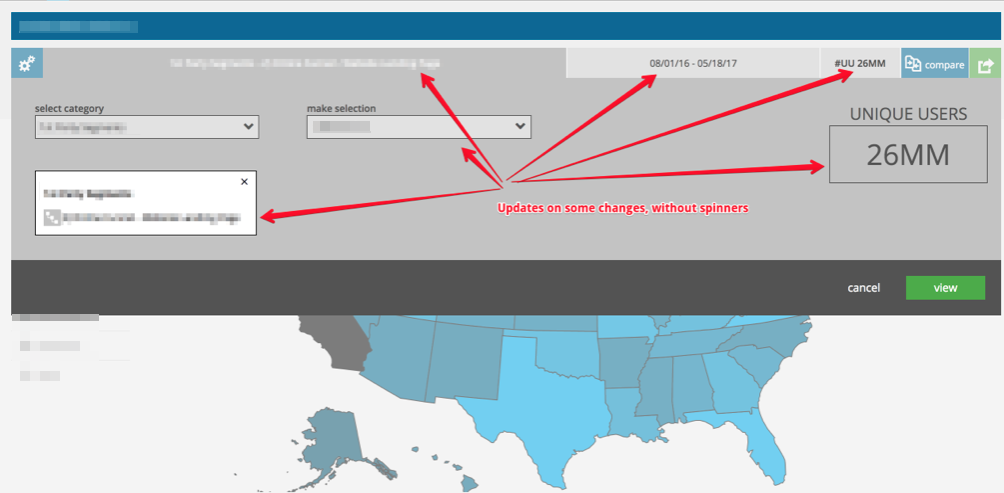

5. Dynamic user interface

In modern single-page applications (SPAs), the user interface is very dynamic: elements appear and disappear, animations hide elements, pop-up windows appear, parts of the screen change asynchronously depending on data in other parts, and so on.

This makes automating scenarios much harder, since in most cases it is very difficult to define a condition that reliably signals that an action completed successfully.

6. Let's build our own framework

At some point, we began to realize that our tests largely repeated one another, since different parts of the user interface use the same elements (search, filtering, tables, etc.).

We didn’t really want to copy-paste test code, so we started building our own test framework with generalized scenarios that could be reused inside other scenarios.

After that, we realized that some elements could be used differently, have different data, etc. So our generalized parts became overgrown with configuration, inheritance, factories and other design patterns.

In the end, our test code became really complicated, and only a few people on the team understood how this magic worked.

Summary

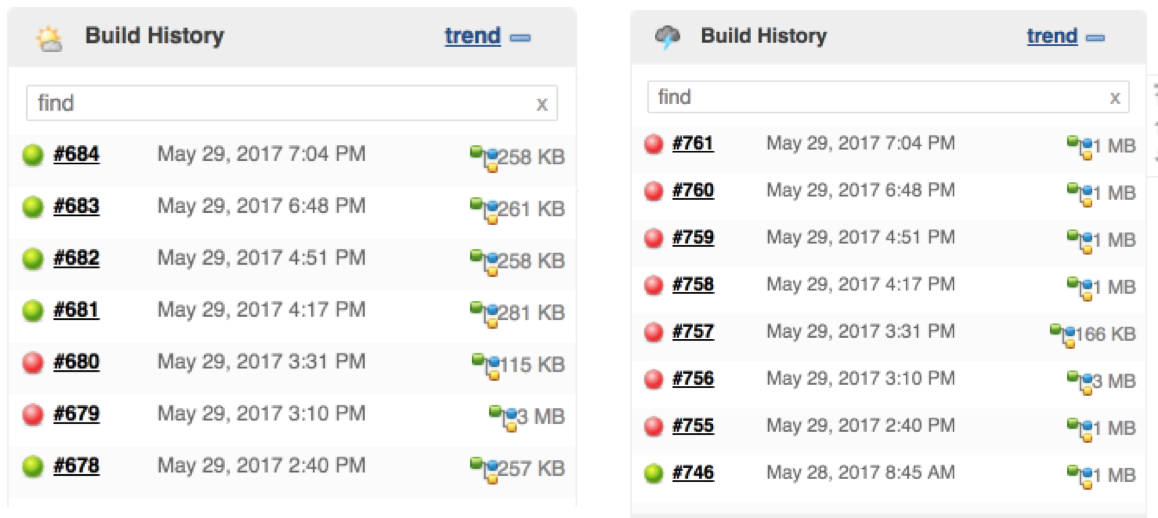

Of course, some of these problems can be partially solved (running tests in parallel, taking screenshots when tests fail, etc.), but in the end this set of end-to-end tests became very problematic, difficult and expensive to maintain.

Moreover, at some point we had so many false failures and stability problems that developers simply stopped taking red tests seriously. And this is even worse than not having any tests at all!

As a result, we ended up with:

- thousands of end to end tests

- 1.5 hours per run (20 minutes when run in parallel)

- failures almost every day, and when the tests are red we have to test manually

- a lot of developer time spent reproducing and fixing test problems

- 30 thousand lines of code in end-to-end tests

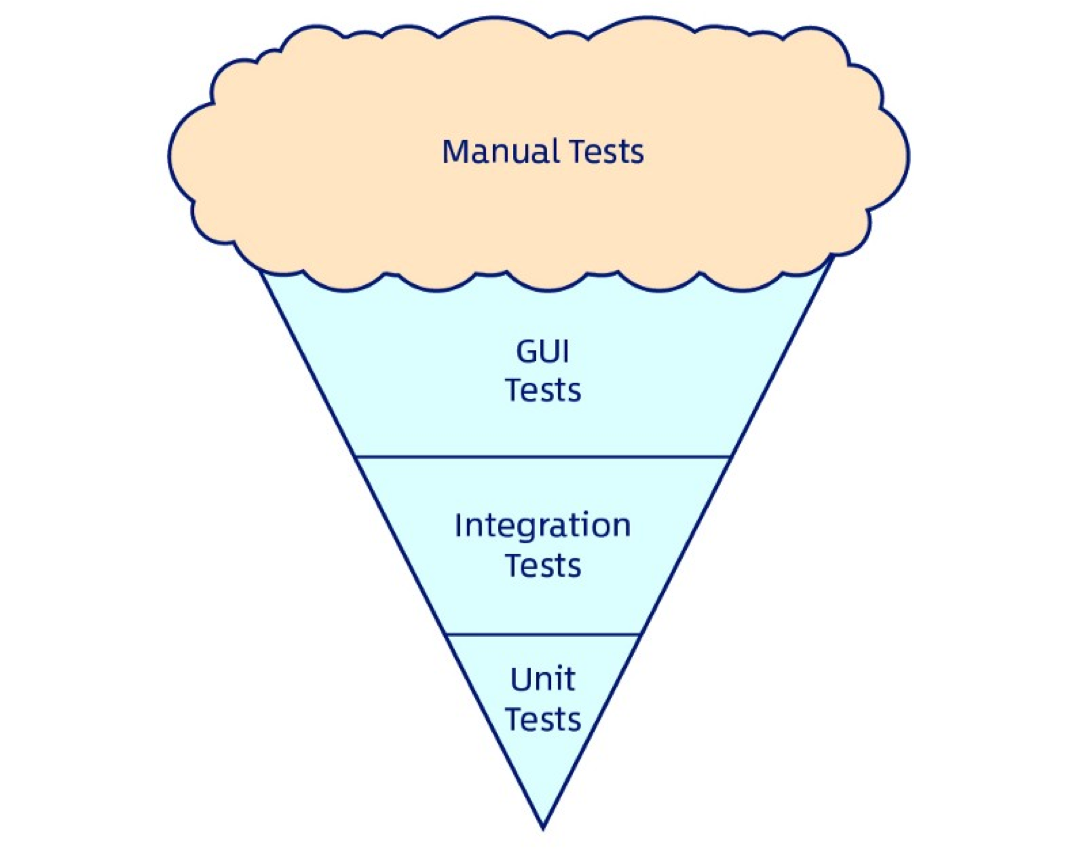

The final result we got is known as the "ice cream cone anti-pattern".

In the end, we decided to significantly reduce the number of our end-to-end tests and use something much cooler instead. But more on that in the next part of our story.

Source: https://habr.com/ru/post/351448/
