Concepts of Automated Testing

Hello, my name is Dmitry Karlovsky and, unfortunately, I don't have time to write a full article, but I really want to share a few ideas. So let me try out a short note about programming on you. Today we will talk about automated testing:

- Why do we write tests?

- What kinds of tests are there?

- How do we write tests?

- How should we write them?

- Why are unit tests bad?

Goals of automated tests

From most important to least:

- Detecting defects as early as possible. Before the user sees them, before deploying to the server, before handing the build over for testing, before committing.

- Localizing problems. A test exercises only part of the code.

- Speeding up development. Running a test is much faster than checking by hand.

- Up-to-date documentation. A test is a simple usage example that is guaranteed to be current.

Orthogonal classifications

- Classification by test object

- Classification by test type

- Classification by type of testing process

Just in case, I stress that we are talking exclusively about automated testing.

Test objects

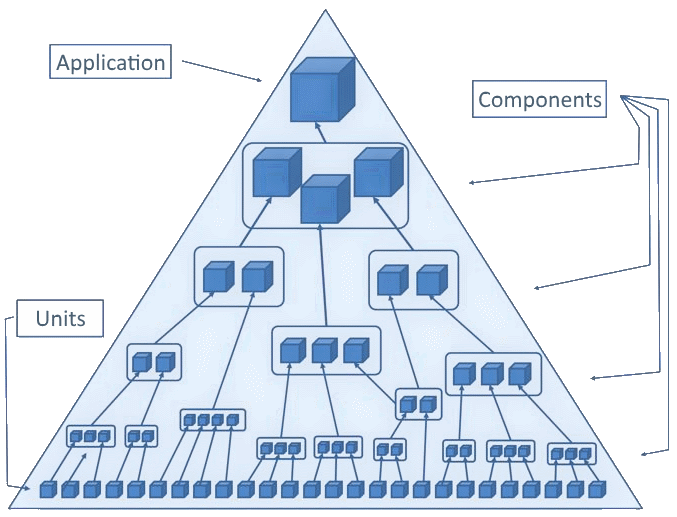

- A module or unit is a minimal piece of code that can be tested independently of the rest of the code. Module testing is also known as unit testing.

- A component is a relatively independent part of the application. It may include other components and modules.

- An application or system is a degenerate case of a component that indirectly includes all other components.

Types of tests

- Functional - checking compliance with functional requirements

- Integration - testing the compatibility of neighboring test objects

- Load - checking performance

Types of testing processes

- Acceptance - checking new or changed functionality.

- Regression - checking for defects in unchanged functionality.

- Smoke - checking the main functionality for obvious defects.

- Full - checking all the functionality.

- Configuration - checking all the functionality on different configurations.

The number of tests

- Tests are code.

- Any code takes time to write.

- Any code takes time to maintain.

- Any code may contain errors.

The more tests, the slower the development.

Completeness of testing

- Tests should check all user scenarios.

- Tests should exercise every branch of logic.

- Tests should test all equivalence classes.

- Tests should check all boundary conditions.

- Tests should check the response to non-standard conditions.

The more complete the tests, the faster the refactoring and testing, and as a result, the delivery of new functionality.
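As a rough illustration of the checklist above, here is a minimal sketch in plain TypeScript. The ageGroup function and the two assertion helpers are hypothetical and exist only for this example; they are not part of the sample application or of $mol. The sketch uses one representative per equivalence class, the values on both sides of each boundary, and a few non-standard inputs.

```typescript
// Hypothetical function under test: classifies an age given in whole years.
function ageGroup( age : number ) : 'minor' | 'adult' {
	if( !isFinite( age ) || Math.floor( age ) !== age || age < 0 ) {
		throw new RangeError( `Invalid age: ${ age }` )
	}
	return age < 18 ? 'minor' : 'adult'
}

// Minimal assertion helpers so the sketch has no dependencies.
function assertEqual< T >( actual : T , expected : T ) {
	if( actual !== expected ) {
		throw new Error( `Expected ${ String( expected ) }, got ${ String( actual ) }` )
	}
}

function assertThrows( task : ()=> unknown ) {
	try {
		task()
	} catch {
		return
	}
	throw new Error( 'Expected an exception' )
}

// One representative per equivalence class
assertEqual( ageGroup( 7 ) , 'minor' )
assertEqual( ageGroup( 40 ) , 'adult' )

// Boundary conditions: the lowest valid value and both sides of the border at 18
assertEqual( ageGroup( 0 ) , 'minor' )
assertEqual( ageGroup( 17 ) , 'minor' )
assertEqual( ageGroup( 18 ) , 'adult' )

// Non-standard conditions: negative, fractional and non-numeric input
assertThrows( ()=> ageGroup( -1 ) )
assertThrows( ()=> ageGroup( 1.5 ) )
assertThrows( ()=> ageGroup( NaN ) )
```

Eight short checks cover every branch of this function, which is the kind of coverage that makes later refactoring cheap.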

Business priorities

- Maximize development speed. The developer should write a minimum of tests, and those tests should run quickly.

- Minimize defects. It is necessary to ensure maximum coverage.

- Minimize development costs. We need to spend a minimum of effort on writing and maintaining the code (including tests).

Testing strategies

Depending on priorities, there are several basic strategies:

- Quality. We write functional tests for all modules. We check their compatibility with integration tests. We add tests for all non-degenerate components. We don't forget integration tests for the components. We top it all off with tests of the entire application. Multi-level exhaustive testing takes a lot of time and resources, but makes it more likely that defects are detected.

- Speed. We use only smoke tests of the application. We know for sure that the main functions work, and we will fix the rest if something comes up. This way we deliver functionality quickly, but spend a lot of resources on polishing it afterwards.

- Cost. We write tests only for the entire application. Critical defects are thus detected in advance, which reduces the cost of support and, as a consequence, gives a relatively high speed of delivering new functionality.

- Quality and speed. We cover all components (including degenerate ones) with tests, which gives maximum coverage with a minimum of tests, and therefore a minimum of defects at high speed, resulting in a relatively low cost.

Sample application

So that my analysis is not completely unfounded, let's create the simplest possible application out of two components. It will contain a name input field and a block with a greeting addressed to that name.

```tree
$my_hello $mol_list
	rows /
		<= Input $mol_string
			value?val <=> name?val \
		<= Output $my_hello_message
			target <= name -

$my_hello_message $mol_view
	sub /
		\Hello, 
		<= target \
```

For those who are not familiar with this notation, here is the equivalent TypeScript code:

```typescript
export class $my_hello extends $mol_list {

	rows() {
		return [ this.Input() , this.Output() ]
	}

	@mem
	Input() {
		return this.$.$mol_string.make({
			value : next => this.name( next ) ,
		})
	}

	@mem
	Output() {
		return this.$.$my_hello_message.make({
			target : ()=> this.name() ,
		})
	}

	@mem
	name( next = '' ) { return next }

}

export class $my_hello_message extends $mol_view {

	sub() {
		return [ 'Hello, ' , this.target() ]
	}

	target() {
		return ''
	}

}
```

@mem is a reactive caching decorator. this.$ is the DI context. Binding happens through property overrides. .make simply creates an instance and overrides the specified properties.
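To make binding through property overrides more tangible, here is a minimal sketch in plain TypeScript. The make function and the Message class below are hypothetical simplifications written for this note. They are not the actual $mol implementation, and they leave out the DI context and the reactive caching.

```typescript
// Hypothetical simplified analogue of .make: create an instance,
// then replace the listed properties on that one instance.
function make< Instance extends object >(
	Class : new()=> Instance ,
	overrides : Partial< Instance > ,
) : Instance {
	const instance = new Class()
	for( const key in overrides ) {
		( instance as any )[ key ] = overrides[ key ]
	}
	return instance
}

// Stand-in for $my_hello_message: the greeting reads its target through a method.
class Message {
	target() { return '' }
	sub() { return [ 'Hello, ' , this.target() ] }
}

// The owner binds the child by overriding target on the created instance.
const message = make( Message , { target : ()=> 'Jin' } )
const greeting = message.sub().join( '' ) // 'Hello, Jin'
```

Because the override is applied to a single instance, other instances of Message keep the default target and still render just 'Hello, '.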

Component Testing

With this approach, we use real dependencies whenever possible.

What should be mocked in any case:

- Interaction with the outside world (http, localStorage, location, etc.)

- Nondeterminism (Math.random, Date.now, etc.)

- Particularly slow things (computing a cryptographic hash, etc.)

- Asynchrony (synchronous tests are easier to understand and debug)
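One common way to replace a nondeterministic dependency is to pass it in as a parameter. The sketch below uses that technique in plain TypeScript; the timeGreeting function and its now parameter are hypothetical and are not part of the sample application.

```typescript
// Hypothetical function under test. The clock is a parameter with a real default,
// so production code calls timeGreeting( userName ) and tests pass a fixed clock.
function timeGreeting( userName : string , now : ()=> Date = ()=> new Date() ) : string {
	const hour = now().getHours()
	const part = hour < 12 ? 'morning' : hour < 18 ? 'afternoon' : 'evening'
	return `Good ${ part }, ${ userName }`
}

// Fixed clocks: 09:00 and 20:00 local time on an arbitrary date.
const atNine = ()=> new Date( 2020 , 0 , 1 , 9 , 0 )
const atTwenty = ()=> new Date( 2020 , 0 , 1 , 20 , 0 )

const morning = timeGreeting( 'Jin' , atNine )   // 'Good morning, Jin'
const evening = timeGreeting( 'Jin' , atTwenty ) // 'Good evening, Jin'
```

With the clock pinned, the result no longer depends on when the test happens to run, and everything else in the function is the real code.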

So, first we write a test for the nested component:

```typescript
// Component tests of $my_hello_message
$mol_test({

	'print greeting to defined target'() {
		const app = new $my_hello_message
		app.target = ()=> 'Jin'
		$mol_assert_equal( app.sub().join( '' ) , 'Hello, Jin' )
	} ,

})
```

And now we add tests for the outer component:

```typescript
// Component tests of $my_hello
$mol_test({

	'contains Input and Output'() {
		const app = new $my_hello

		$mol_assert_like( app.sub() , [
			app.Input() ,
			app.Output() ,
		] )
	} ,

	'print greeting with name from input'() {
		const app = new $my_hello
		$mol_assert_equal( app.Output().sub().join( '' ) , 'Hello, ' )

		app.Input().value( 'Jin' )
		$mol_assert_equal( app.Output().sub().join( '' ) , 'Hello, Jin' )
	} ,

})
```

As you can see, all we need is the component's public interface. Note that we don't care through which property, or how, the value is passed to Output. We check exactly the requirement: that the displayed greeting matches the name entered by the user.

Unit testing

For unit tests, the module must be isolated from the rest of the code. When a module does not interact with other modules, the tests come out the same as the component tests:

```typescript
// Unit tests of $my_hello_message
$mol_test({

	'print greeting to defined target'() {
		const app = new $my_hello_message
		app.target = ()=> 'Jin'
		$mol_assert_equal( app.sub().join( '' ) , 'Hello, Jin' )
	} ,

})
```

If the module needs other modules, they are replaced with stubs, and we check that communication with them happens as expected.

```typescript
// Unit tests of $my_hello
$mol_test({

	'contains Input and Output'() {
		const app = new $my_hello

		const Input = {} as $mol_string
		app.Input = ()=> Input

		const Output = {} as $my_hello_message
		app.Output = ()=> Output

		$mol_assert_like( app.sub() , [
			Input ,
			Output ,
		] )
	} ,

	'Input value binds to name'() {
		const app = new $my_hello
		app.$ = Object.create( $ )

		const Input = {} as $mol_string
		app.$.$mol_string = function(){ return Input } as any

		$mol_assert_equal( app.name() , '' )

		Input.value( 'Jin' )

		$mol_assert_equal( app.name() , 'Jin' )
	} ,

	'Output target binds to name'() {
		const app = new $my_hello
		app.$ = Object.create( $ )

		const Output = {} as $my_hello_message
		app.$.$my_hello_message = function(){ return Output } as any

		$mol_assert_equal( Output.title() , '' )

		app.name( 'Jin' )

		$mol_assert_equal( Output.title() , 'Jin' )
	} ,

})
```

Mocking is not free: it makes tests more complicated. But the saddest part is that after verifying the code against mocks, you cannot be sure that it will all work correctly with the real modules. If you were attentive, you have already noticed that in the last code sample we expect the name to be passed through the title property, while the real component uses target. This leads us to two types of errors:

- Correct module code may fail against mocks.

- Defective module code may pass against mocks.

And finally, it turns out that the tests check not the requirements (as a reminder, a greeting with the substituted name should be displayed) but the implementation (such-and-such method is called internally with such-and-such parameters). This means the tests are fragile.

Fragile tests are tests that break on equivalent implementation changes.

Equivalent changes are implementation changes that do not break the code's compliance with functional requirements.
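The two definitions above can be sketched in plain TypeScript. All names below (Message, HelloWithMessage, HelloInline and the two check functions) are hypothetical stand-ins invented for this illustration, not the $mol classes from the sample application. Both Hello classes satisfy the same requirement, so switching from one to the other is an equivalent change.

```typescript
// Simplified stand-in for the nested greeting component.
class Message {
	constructor( public target : ()=> string ) {}
	text() { return 'Hello, ' + this.target() }
}

// Implementation 1: delegates rendering to a nested Message.
class HelloWithMessage {
	name = ''
	output = new Message( ()=> this.name )
	greeting() { return this.output.text() }
}

// Implementation 2: an equivalent change, the greeting is built inline.
class HelloInline {
	name = ''
	greeting() { return `Hello, ${ this.name }` }
}

// Checks the requirement through the public interface only.
function meetsRequirement( app : { name : string , greeting() : string } ) {
	app.name = 'Jin'
	return app.greeting() === 'Hello, Jin'
}

// Checks how the value travels inside, so it is tied to implementation 1.
function passesWhiteBoxCheck( app : { name : string , output? : Message } ) {
	app.name = 'Jin'
	return app.output !== undefined && app.output.target() === 'Jin'
}

const results = {
	requirementBefore : meetsRequirement( new HelloWithMessage() ) , // true
	requirementAfter : meetsRequirement( new HelloInline() ) , // true
	whiteBoxBefore : passesWhiteBoxCheck( new HelloWithMessage() ) , // true
	whiteBoxAfter : passesWhiteBoxCheck( new HelloInline() ) , // false
}
```

The requirement check survives the change, while the white-box check breaks even though the behavior visible to the user is the same. That is exactly the fragility described above.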

Test Driven Development

The TDD algorithm is quite simple and quite useful:

- We write a test and make sure that it fails, which means the test really tests something and changes in the code are really needed.

- We write code until the test stops failing, which means we have fulfilled all the requirements.

- We refactor the code, making sure that the test does not fail, which means our code still meets the requirements.

If we write fragile tests, they will constantly fail at the refactoring step, requiring investigation and adjustment, which reduces the programmer's productivity.

Integration tests

To cover the cases left over after unit tests, an additional kind of test was invented: integration tests. Here we take several modules and check that they interact correctly:

```typescript
// Integration tests of $my_hello
$mol_test({

	'print greeting with name'() {
		const app = new $my_hello
		$mol_assert_equal( app.Output().sub().join( '' ) , 'Hello, ' )

		app.Input().value( 'Jin' )
		$mol_assert_equal( app.Output().sub().join( '' ) , 'Hello, Jin' )
	} ,

})
```

Yes, we ended up with that same last component test. In other words, one way or another we wrote all the component tests that check the requirements, but additionally hard-coded a specific implementation of the logic into the tests. This is usually redundant.

Statistics

| Criterion | Cascaded component tests | Unit + integration tests |
|---|---|---|
| Lines of code | 17 | 34 + 8 |
| Complexity | Simple | Complex |
| Encapsulation | Black box | White box |
| Fragility | Low | High |
| Coverage | Full | Redundant |
| Velocity | High | Low |
| Duration | Low | High |

Related Links

- Misconceptions about automated testing - a continuation of the automated testing story.

- The problem of duplicated and outdated knowledge in mock objects, or Integration tests are good - here the author says the right things, but calls component tests integration tests.

- Tautological tests - here the author shows the disadvantages of mocking, which is the basis of unit tests.


Source: https://habr.com/ru/post/351430/
