I am a network architect and this worries me

100G with breakout to 4x25G in our data center

A network architect on *aaS projects has to construct a building that keeps evolving. It was planned as a five-story building; once four floors were built, another 21 had to be added; then neighboring houses had to be attached through underground tunnels; and eventually all of it is supposed to become a huge residential complex with a covered courtyard. Meanwhile tenants already live inside, so you cannot shut off their sewage, water supply, or access roads.

Well, yes. On top of that there are the usual problems with network standards, which lag about 10 years behind real requirements. Most often that means reinventing the wheel in some tricky way instead of applying a seemingly obvious solution. But reinvented wheels are everywhere, of course.

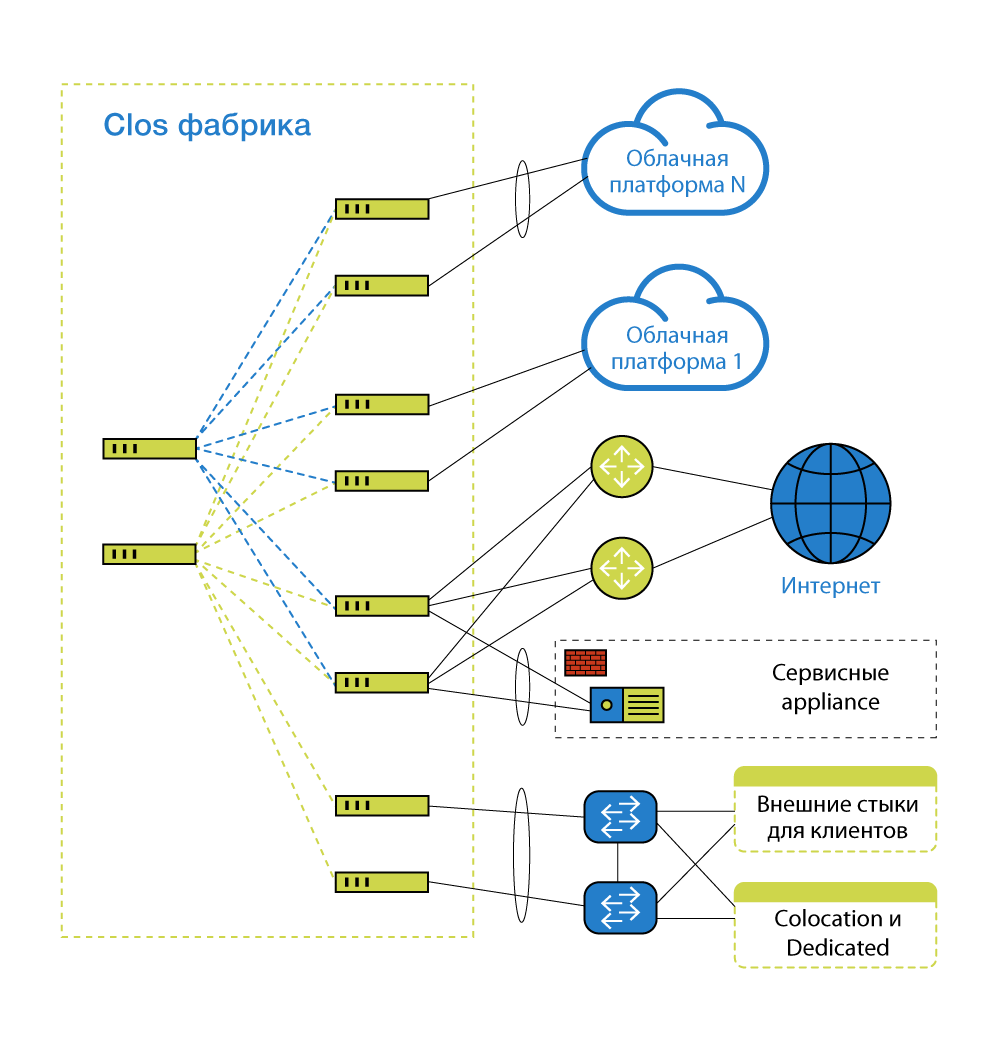

There is a cloud platform with services next to it, and they are all interconnected. The cloud's servers are plugged into the network. The devices that make the network itself work are plugged into the same network. Sometimes cloud customers' equipment is plugged into it as well. Plenty of other things are plugged in too, for example Internet uplinks. In other words, there has to be a network, and someone has to be responsible for it. Since the project is fairly large and full of complex interdependencies (there is an OpenStack part, a VMware part, and other *aaS), just configuring things is not enough. We need someone who will look after all of it and answer for it. Of course there is the operations team, and ours is great. But their main job is to operate.


My job is to know what will happen in a couple of years and what the other architects and product managers want, so that we build a network in which all of their puzzle pieces fit together. I also meet with vendors, contractors, and customers. I listen to everyone and, taking all of that information into account, form a picture of how to make the best use of our resources.

Why do you need a network architect rather than a general systems architect? In fact, it is a systems architect, just one highly specialized in networking technologies; the title only emphasizes the specialization. A network architect translates business tasks into technology and money into processes. He reports his decision “upstairs” in detail, and if the business likes it, everything is fine. After that I write the documentation and hand the system over to operations.

What is special about a network architect? In my opinion, he should:

- have some years of experience with complex, geographically distributed networks: inside enterprises, inside data centers, and between them. It is hard to name the number of years: one person can see, touch, and do more in 2 years than another will in 10;

- know the key network protocols. Which ones are key depends on where the architect works;

- understand how systems and applications work, what role the network plays in that, and how each affects the other;

- be able to work with people at every level, from managers through other architects to technical support, often acting as an interpreter, a “bridge” between opposing goals (for example, cutting staff and not buying hardware, software, or services versus expanding staff and buying spare parts, hardware, software, and services). You need to be able to write, speak, and negotiate without irritating people. It is not enough to know the protocols; you need to be able to explain them.

What does the work look like?

Our network architecture took shape historically. We once built the cloud the way we thought was right for the realities of that time, and that became the foundation. Over time the infrastructure grew and changed, and eventually it no longer satisfied us as internal customers. We began to picture our network a couple of years ahead and to look at what would become a bottleneck and how. Simply put, at some point it became obvious that a number of the network's properties had to change, preferably without raising the cost.

A committee of specialists gathered. The system architects stated their inputs and wishes, were given options and caveats, and together we discussed limitations and future development. I was left to work out the solution. And to see it through.

New technologies had appeared while the infrastructure was growing, so I evaluated them, reviewed our accumulated experience and the various unnecessary overheads, and proposed what should change and how. The system architects gave their view on performance, speed, and scalability, and I took it into account. Operations added their wish list, and I took that into account as well.

One of the requirements set by the system architects was the ability to offer 25 Gbit/s Ethernet ports. Previously everything was built in multiples of 10 Gbit/s: a 40 Gbit/s interface was 4 lanes of 10 Gbit/s, and a 100 Gbit/s interface was 10 lanes of 10 Gbit/s. Now 100 Gbit/s can be built as 4x25 Gbit/s, which simplifies operation and lowers the requirements for structured cabling (SCS). I also wanted to tidy up something in the protocols. Here is the target:
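The lane arithmetic above can be written down as a small sketch. The option labels and function names are hypothetical and exist only for illustration; the lane counts and rates are the ones named in the text.

```python
# Illustrative lane arithmetic; labels and names are hypothetical.
LANE_OPTIONS = {
    "40G as 4x10G": (4, 10),
    "100G as 10x10G": (10, 10),
    "100G as 4x25G": (4, 25),
}


def port_speed_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Aggregate speed of a port built from identical lanes."""
    return lanes * lane_rate_gbps


def lanes_needed(target_gbps: int, lane_rate_gbps: int) -> int:
    """Number of lanes required to reach the target speed (rounded up)."""
    return -(-target_gbps // lane_rate_gbps)


if __name__ == "__main__":
    for name, (lanes, rate) in LANE_OPTIONS.items():
        print(f"{name}: {lanes} lanes -> {port_speed_gbps(lanes, rate)} Gbit/s")
```

Fewer lanes for the same speed means fewer fibers or copper pairs per link, which is where the lower cabling requirements come from.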


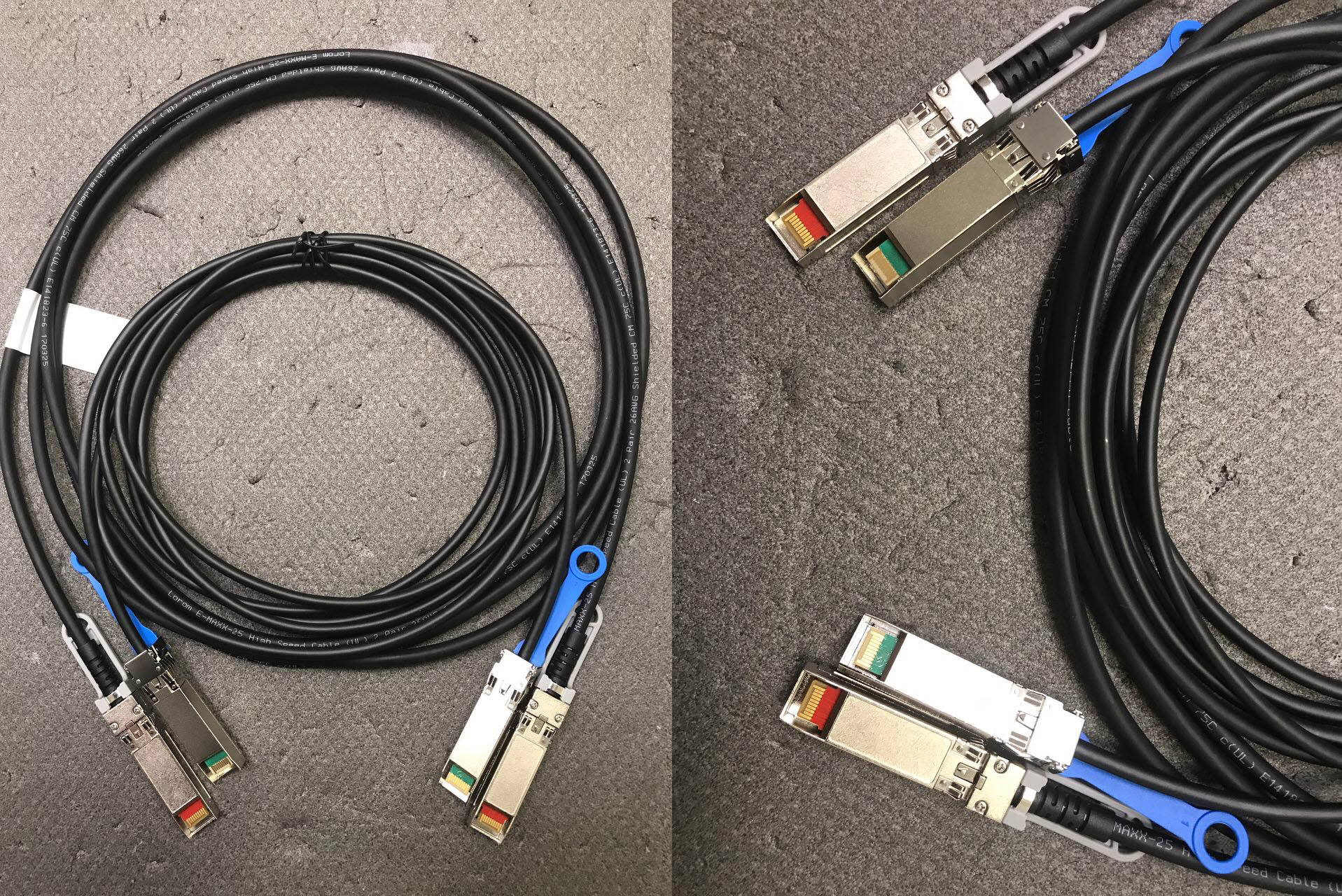

25G Ethernet costs about the same as 10GbE in links, network hardware, and server hardware. Per port the price is almost identical, but the speed increase is very welcome, and customers will definitely need it in the coming years. At the same time we moved the links between switches to 100 Gbit/s. Bandwidth inside a data center is much easier to provide than between data centers: optics and transceivers are cheaper.

10 and 25 Gbit/s DAC cables: you can't tell the difference

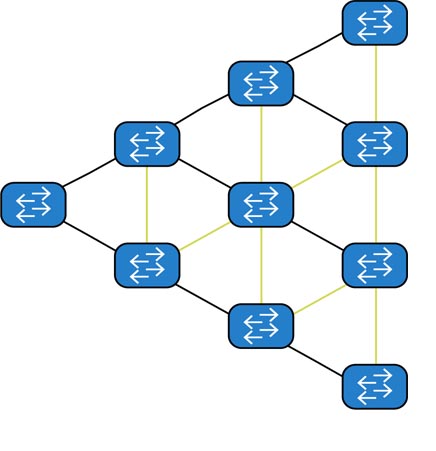

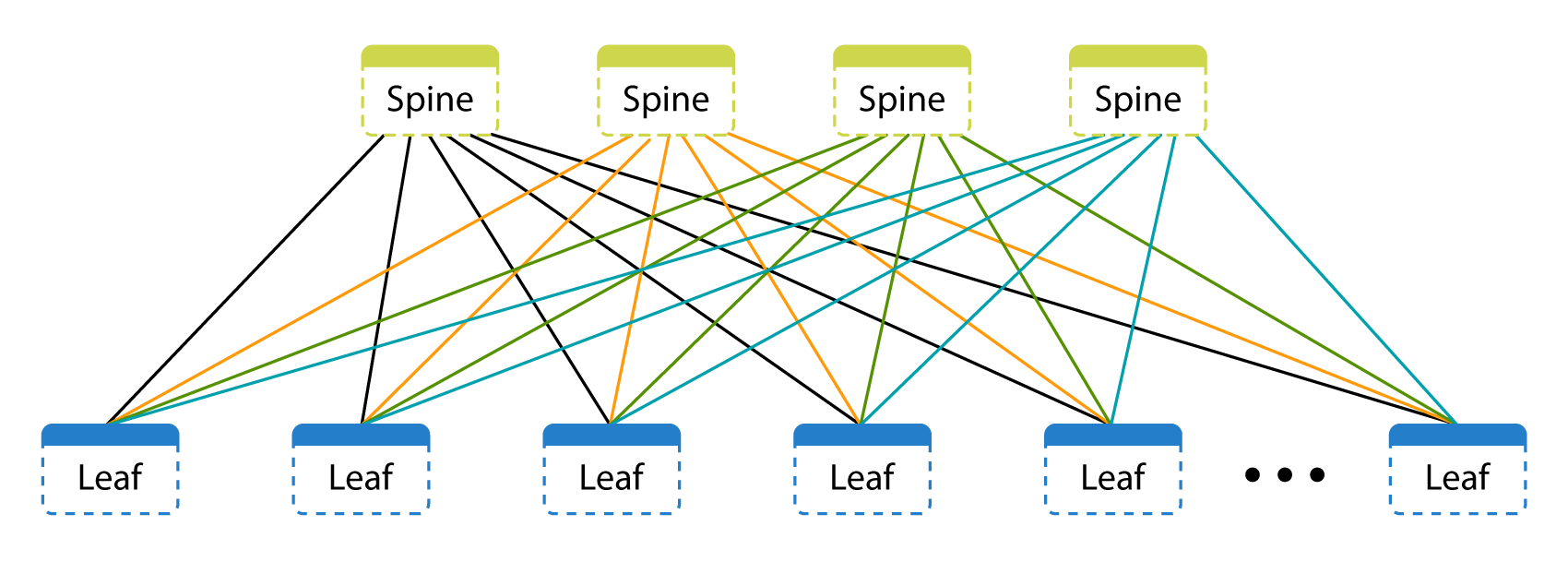

The target architecture is based on the Clos (Leaf & Spine) topology. Here is the old approach of interconnecting switches with Spanning Tree:

And here is the new one:

As you can see, each switch is independent. There is a control plane and a data plane. The control plane is defined by the switch OS and the routing and signaling protocols. The data plane is characterized by what the switch does with data as it passes from port to port.

Historically, to increase port capacity, and mainly to make it possible to build a LAG (link aggregation group, a fault-tolerant bundle of two or more physical links, here spanning different switches), people used either switches built as interface cards in a single chassis or individual switches combined into a virtual chassis. A virtual chassis is convenient: one control plane unites the switches under single management, and the engineer sees individual switches as interface cards in one chassis. But the chassis has drawbacks: because the control plane is so tightly coupled, its failure can take down every switch in the virtual chassis. That does not mean virtual chassis have no place in this world, but for our tasks and processes they are less convenient and less reliable.

The spine level only forwards traffic from one node to another; spines have no client connections.
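To make the Leaf & Spine idea concrete, here is a minimal sketch of a two-tier fabric in which every leaf connects to every spine. The node names, the function names, and the 4-leaf, 2-spine sizing are assumptions for illustration, not our actual topology.

```python
from itertools import product


def build_leaf_spine(num_leaves, num_spines):
    """Return the links of a two-tier fabric: every leaf connects to every spine."""
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    return set(product(leaves, spines))


def equal_cost_paths(links, src_leaf, dst_leaf):
    """List the leaf -> spine -> leaf paths between two different leaves."""
    spines_of_src = {spine for (leaf, spine) in links if leaf == src_leaf}
    spines_of_dst = {spine for (leaf, spine) in links if leaf == dst_leaf}
    shared = spines_of_src & spines_of_dst
    return sorted((src_leaf, spine, dst_leaf) for spine in shared)


# Hypothetical sizing: 4 leaves, 2 spines.
links = build_leaf_spine(num_leaves=4, num_spines=2)
paths = equal_cost_paths(links, "leaf1", "leaf3")

if __name__ == "__main__":
    print(f"{len(links)} links in the fabric")
    for path in paths:
        print(" -> ".join(path))
```

In this model the number of equal-cost paths between two leaves equals the number of spines, and no link has to be blocked to keep the topology loop-free at layer 3.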

Spanning Tree is an old, time-tested protocol whose main purpose is to prevent loops in ring topologies. Where do the loops come from? Look at the picture above. Loops are a consequence of link redundancy. Links that would close a ring are blocked and are used only after a failure, once the tree has been rebuilt. You can tune the STP timers and use additional mechanisms that speed up reconvergence, but the blocked links remain. Rebuilding the tree also costs traffic: for the same rebuild time, a 100 Gbit/s link loses far more data than a 100 Mbit/s link, even if that time is minimal. Per-VLAN Spanning Tree or MSTP regions do not solve our problems either: load varies a lot between our VLANs, so they are hard to distribute across links once and for all, or automatically.
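The point about losses during a tree rebuild is simple arithmetic: data lost is roughly link rate times outage time. The sketch below assumes a fully loaded link and a 1-second rebuild; both values are illustrative assumptions, not measurements.

```python
def bits_lost(link_rate_bps, outage_seconds, utilization=1.0):
    """Rough estimate of bits that cannot be forwarded during an outage."""
    return link_rate_bps * outage_seconds * utilization


# Assumed rebuild time, for illustration only.
OUTAGE_S = 1.0

RATES_BPS = {
    "100 Mbit/s": 100 * 10**6,
    "100 Gbit/s": 100 * 10**9,
}

if __name__ == "__main__":
    for label, rate in RATES_BPS.items():
        megabytes = bits_lost(rate, OUTAGE_S) / 8 / 10**6
        print(f"{label}: about {megabytes:,.1f} MB lost in {OUTAGE_S} s")
```

Whatever the real rebuild time is, the ratio between the two links stays the same: a thousand times more data is at stake on the faster link.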

Using VLANs for segmentation is itself a scaling problem for us: one domain can hold only about 4,000 of them (the 12-bit VLAN ID gives 4,094 usable values). Customers often build hybrid systems, part on their own premises and part in the cloud. In 95% of cases the interconnect between the customer and the cloud infrastructure uses VLANs. A couple at first. Then a couple more. Then one more system moves to the cloud... and one more... Overlapping VLAN IDs at the entry point are not a problem: you can always remap them, that is, change the tag at the junction with the customer. But the number of VLANs and their load on links under STP is a problem.
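Remapping and the size limit can be illustrated together. This is a minimal sketch, not how any particular switch implements it; the class name, the port labels, and the choice to start allocating at VLAN 2 are all hypothetical.

```python
MAX_VLAN_ID = 4094  # 12-bit VLAN ID field; 0 and 4095 are reserved


class VlanRemapper:
    """Maps (customer port, customer VLAN) to a unique internal VLAN ID."""

    def __init__(self):
        self._table = {}
        self._next_free = 2  # hypothetical choice: leave VLAN 1 unused

    def remap(self, port, customer_vlan):
        """Return the internal VLAN for this customer tag, allocating if needed."""
        key = (port, customer_vlan)
        if key not in self._table:
            if self._next_free > MAX_VLAN_ID:
                raise RuntimeError("VLAN space exhausted in this domain")
            self._table[key] = self._next_free
            self._next_free += 1
        return self._table[key]


remapper = VlanRemapper()
# Two customers both use tag 100 on their side; internally they must differ.
vlan_a = remapper.remap("customer-a", 100)
vlan_b = remapper.remap("customer-b", 100)
```

Overlapping customer tags are handled by the table, but every new interconnect still consumes one ID from the same space of about 4,000, which is the scaling problem.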

And there is no real alternative within this approach. You need a big chassis with a lot of ports, so that there is enough for everyone! A pair of them, for redundancy! But we have many different segments that, even within one data center, are physically spread across different rooms (for various reasons, both architectural and “historical”), so physically connecting all hosts to this pair would be difficult, and operating it even more so. Also, if the pair goes down, in the worst case we lose the entire data center: all hosts lose each other. Moreover, different segments need different latency and buffering parameters, which is harder to achieve inside one chassis. And redundant host links to different switches cannot be done without MLAG if we want redundancy with VLANs. So carrying traffic in VLANs alone is not enough for our tasks.

Consider IEEE 802.1aq, also known as SPB, and its “killer”, TRILL. These are open standards, and we all love open standards! But network equipment vendors do not support these protocols well: they do not like them. That immediately narrows the choice to 2-3 suppliers. In a couple of years we might lose support on existing platforms, or not get it on new platforms even from the same vendors. Cisco FabricPath is almost TRILL, but it is a complete vendor lock-in.

Then there is SDN, clever, powerful, and a world of its own! Big Switch, Plexxi, or OpenFlow-based solutions are very elegant and have undeniable advantages. But we saw in them complete vendor lock-in, which is unacceptable for us as a service provider. We rejected them for this project.

So we came to Leaf & Spine on IP with EVPN and VXLAN.

Here I have to digress: there are two ways to build Leaf & Spine, an L2 fabric or an L3 fabric with overlays that carry L2 across the fabric (alas, we need overlays, because we do not have an application of our own that could run over IP alone). If you build an L2 fabric with redundant fabric nodes, you have to use VLANs, which are limited to 4K, and you cannot use separate VLAN domains inside the fabric, so the IDs must be unique. For an Active-Active topology you must also use Multi-Chassis Link Aggregation (MC-LAG) on all nodes. An L3 fabric with overlays gives more flexibility and does not depend on the number or uniqueness of VLANs.

For the overlay you can use whatever the ASICs in the network equipment (and, of course, the switch software) support; today that is MPLS and VXLAN (RFC 7348). VXLAN has to be “signaled”, which can be done with static configuration, multicast, MP-BGP EVPN (Multiprotocol Border Gateway Protocol Ethernet Virtual Private Network), or controllers. We chose EVPN as the more scalable and cheaper option because it is an open industry standard: in theory it will allow equipment and software from different vendors to interoperate (as happened, for example, with “plain” or “basic” BGP). The protocol does not introduce fundamentally new signaling (unlike SPB or TRILL) and places no restrictions on the data plane (no other encapsulation is required: VXLAN or MPLS will do, unlike, for example, Geneve). It also needs no additional features or settings such as multicast.

The EVPN address family carries both layer 2 (MAC) and layer 3 (IP) information and has ARP suppression mechanisms which, together with local MAC/ARP learning, minimize flooding in the network. With EVPN, every network device builds its own view of the topology from data received from its “neighbors”. This is debatable, of course: for some, centralized management and decision-making (for example, SDN-style controllers) is a plus. EVPN also provides multihomed LAG: end hosts “think” they are connected to a single switch.

Since EVPN has not yet existed for even five years, it is unclear how solid its future is. In general, we can change vendors without changing protocols. But it matters to us that part of the standard is still not finalized: it has been in draft for five years. Some vendors have even implemented the published standards in their own way, each to its own extent and for its own scenarios. No vendor has the complete feature set of the new technology in one place; for example, the set of supported route types differs from one to another. And the code has not yet been polished by many years of operation and a large installed base.
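Why a VXLAN overlay does not depend on the number of VLANs becomes clear from its header. The sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348: 8 flag bits, 24 reserved bits, a 24-bit VNI, and 8 reserved bits. The function and constant names are hypothetical; only the layout comes from the RFC.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag from RFC 7348
MAX_VNI = 2**24 - 1          # the VNI is a 24-bit field


def pack_vxlan_header(vni):
    """Build the 8-byte VXLAN header for the given VNI."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError(f"VNI must be in 0..{MAX_VNI}")
    # Word 1: flags in the top byte, then 24 reserved bits.
    # Word 2: VNI in the top 24 bits, then 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header):
    """Extract the VNI from a VXLAN header, checking the valid-VNI flag."""
    word1, word2 = struct.unpack("!II", header[:8])
    if not (word1 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return word2 >> 8


if __name__ == "__main__":
    header = pack_vxlan_header(5000)
    print(header.hex(), unpack_vni(header))
```

A 24-bit VNI gives about 16.7 million segment identifiers instead of roughly 4,000 VLAN IDs, so customer VLANs can be mapped to VNIs at the leaf without competing for one shared ID space.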

Someday the zoo will end

Looking at all our decisions above, you can see that, in principle, one more or less coherent standard will someday settle all of this. The problem is that there are many vendors, each wants its own way, and networking as such develops slowly. Someday we architects may be replaced by a simple script, but that is still very, very far away.

So if you want to dig into undocumented features of hardware and software, learn a lot that is not written in the manuals, and be a free tester for the vendor, become a network architect. To avoid hearing “what idiot configured this?”, you have to play as a team with the business, the architects, the product managers, and operations. For example, if you do not talk to operations, who logically are not needed in the design process, you may never learn about a number of quirks that are visible only when you turn the nuts with your own hands. All in all, the network architect is an architect, a translator, and a person rebuilding a ship while it is afloat. The only difference is that it is his own ship, and if you work together with everyone, it will be cosmically cool.

The text was prepared by Oleg Alekseenko, network architect at Technoserv Cloud.

Source: https://habr.com/ru/post/351226/
