Richard Hamming: Chapter 5. The History of Computers — A Practical Application

“The goal of this course is to prepare you for your technical future.”

Hi, Habr. Remember the awesome article "You and your work" (+219, 2365 bookmarks, 360k readings)? Well, Hamming (yes, the Hamming of the self-checking and self-correcting Hamming codes) has a whole book based on his lectures. We are translating it, because the man has something to say.

This book is not just about IT; it is a book about the thinking style of incredibly cool people. “This is not just a dose of positive thinking; it describes the conditions that increase the chances of doing great work.”

We have already translated 13 (out of 30) chapters.

Thanks for the translation go to Sergey Metlov, who responded to my call in the previous chapter. If you want to help with the translation, write a private message or email magisterludi2016@yandex.ru. (By the way, we have also started translating another cool book: “The Dream Machine: The History of Computer Revolution”.)

Chapter 5. The history of computers - practical application

As you have probably noticed, I use the technical material to tie together a number of stories, so I will begin with the story of how this chapter and the previous two came into being. By the 1950s I had realized that I was afraid to speak in front of a large audience, even though I had taught college classes for many years. Thinking this over, I concluded that I could not afford such a weakness if I wanted to become a great scientist. The duty of a scientist is not only to make discoveries but also to communicate them successfully in the form of:

- books and publications;

- public speaking;

- informal conversations.

A weakness in any one of these skills could seriously drag my career down. My task was to learn to speak in public without fear of the audience. Practice is undoubtedly the main tool, and it should stay in first place whatever other useful techniques you also adopt.

Shortly after I realized this, I was invited to give an evening lecture to a group of engineers. They were IBM customers who were studying some aspects of working with IBM computers. I had taken this course myself earlier, so I knew that the classes usually ran on the weekdays of a single week. For evening entertainment IBM usually arranged a party on the first day, a theater visit on one of the other days, and a lecture on a general computing topic on one of the last evenings. Evidently I was being invited for one of those last evenings.

I accepted the offer at once, because it was exactly the chance to practice public speaking that I needed. I soon decided that my talk should be good enough for me to be invited again, which would give me more opportunities to practice. At first I wanted to lecture on one of my favorite topics, but I soon realized that if I wanted to be asked back, I had to start from what would interest the audience, and that is usually a quite different topic. I did not know exactly what these people would want to hear, which course they were taking, or what their abilities were, so I chose a topic that would interest most of them: “The History of Computing to the Year 2000”. The topic interested me too, and I wondered what exactly I could say about it! Moreover, and this is important, in preparing the talk I would be preparing myself for the future.

In asking “What do they want to hear?” I am speaking not as a politician but as a scientist who must tell the truth as it is. A scientist should not give talks merely to entertain, because the purpose of a lecture is usually to convey scientific information from the lecturer to the audience. This does not mean that the talk should be boring. There is a fine but very definite line between scientific discussion and entertainment, and the scientist should always stay on the right side of it.

My first talk was about hardware, whose construction and operation face, as I noted in Chapter 3, three natural limits: the size of molecules, the speed of light, and the problem of heat dissipation. I added handsome colored VuGraph slides, with overlays showing the limits set by quantum mechanics, including the effect of the uncertainty principle. The talk was a success: the IBM employee who had invited me told me afterwards how much the participants had liked it. I casually mentioned that I had enjoyed it too and would be glad to come to New York again on any evening to give the lecture, provided I was told well in advance. IBM agreed. This was the first of a series of lectures that continued for many years, two or three times a year. I got plenty of practice in public speaking and stopped being so afraid of it. You should always feel some excitement when you speak. Even the best actors and actresses usually have a slight case of stage fright. Your mood is passed on to the audience, so if you are too relaxed, people may get bored or even fall asleep!

These talks gave me the opportunity to keep abreast of the latest news and trends in computing, contributed to my intellectual development, and helped me improve as a speaker. It was not just luck: I did serious work, trying to get to the heart of the matter. At any lecture, wherever it took place, I began to pay attention not only to what was said but also to the style of delivery, trying to judge how effective the talk had been. I preferred to skip lectures that were purely entertaining, but I did learn to tell jokes. An evening lecture usually needs three good jokes: one at the beginning, one in the middle, and one at the very end, so that the listeners remember at least one. All three, however, must be told well. I needed to find my own style of humor, and I practiced by telling jokes to the secretaries.

After several of these lectures, I realized that not only hardware but also software would limit the evolution of computing as it approached the year 2000, as I noted in Chapter 4. Eventually, after quite a while, I began to realize that it is economics that is most likely to drive the evolution of computers. Much, though not all, of what was going to happen had to be economically justified. That is the subject of this chapter.

The development of computing began with simple arithmetic, then went through a great many astronomical applications, and arrived at number crunching (cumbersome computations that take a long time to carry out). However, it is worth remembering the Spanish theologian and philosopher Raymond Lull (1235-1315), also known as Lully, who built a logic machine! It was this machine that Swift made fun of in “Gulliver's Travels” when Gulliver was on the island of Laputa. I have the impression that Laputa corresponds to Mallorca, where Lull lived and flourished.

In the early years of modern computing, say the 1940s and 1950s, “number crunching” was the main driving force, since people who needed serious computation were the only ones with enough money to afford (at that time) to do it on computers. As the cost of computers fell, the set of tasks for which it was profitable to use them expanded to include many that go beyond “number crunching”. We had realized that these too could be done on computers; it simply was not economical enough at the time.

Another significant part of my experience in computing began at Los Alamos, where we solved partial differential equations (atomic bomb behavior) on primitive equipment. Then, at Bell Telephone Laboratories, I at first solved partial differential equations on relay computers; I even solved a partial differential-integral equation! Later, with much better machines, I moved on to ordinary differential equations for computing missile trajectories. Later still I published several papers on how to compute a simple integral. Then I moved on to a paper on function evaluation, and finally published a paper on how numbers combine! Yes, we solved some of the hardest problems on the most primitive equipment; we had to, in order to prove that machines could do things that could not be done without them. Then, and only then, could we turn to the cost-effectiveness of solving problems that until then had been solved only by hand! And for that we had to develop the basic theory of numerical analysis and practical computation suited to machines rather than to hand calculation.

This is typical of many situations. A new thing, whether a device, a method, or anything else, must first prove that it can cope with the hardest tasks before it is admitted into the system to do first routine and later more useful work. Any innovation meets this kind of resistance, so do not lose heart when you see your new idea stupidly rejected. Once you understand the scale of the actual task, you can decide whether it is worth continuing your efforts or whether you should turn to something else rather than waste your energy fighting inertia and stupidity.

In the early years of computing I soon turned to the problem of running many small problems on one large machine. I realized that I was, in effect, engaged in the mass production of an ever-changing product: I had to organize the work so that I could cope with most of the problems that would arise in the coming year without knowing what exactly they would be. Then I realized that computers, in the broad sense of the word, had opened the door to the mass production of a variable product, whatever it is: numbers, words, word processing, furniture making, weaving, or anything else. They let us deal with variety without excessive standardization, and therefore we can develop faster toward a desired future! Today you can see this being applied to computers themselves!

Computers, with little human intervention, design their own chips and more or less automatically assemble themselves from standard parts. You simply specify what you need in the new computer, and the automated system assembles it. Some computer manufacturers today assemble the parts with little or no human intervention.

The strong sense that I was in the mass production of a variable product, with all its advantages and disadvantages, led me to the IBM 650 approach that I described in the previous chapter. Having invested about one person-year of effort over a period of six months, I found that by the end of the year I had more work done than if I had taken on each problem in turn! Creating the software paid for itself within one year! In a field changing as rapidly as computer software, if something does not bring the expected benefit in the near future, it is unlikely ever to pay off.

I have said nothing about my experience outside science and engineering. For example, I solved one rather large business problem for AT&T using a UNIVAC-I in New York, and one day I will tell you the lesson I learned then.

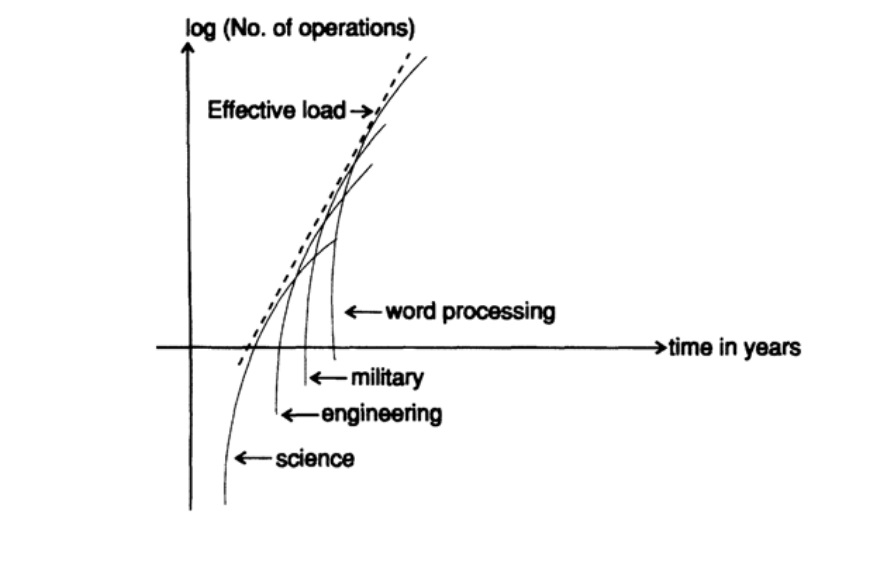

Let me discuss the uses of computers in more quantitative detail. Naturally, since I worked in the research department at Bell Telephone Laboratories, the problems were at first mostly scientific, but we soon met engineering problems as well. First (see Fig. 5.1), following only the growth of purely scientific problems, you get a curve that rises exponentially (note the logarithmic vertical scale), but soon you see the upper part of the S-shaped curve flatten to more moderate rates of growth. After all, given the kind of problems I was solving at Bell Telephone Laboratories and the total number of scientists in the laboratory, there was a limit to what they could propose and how many resources they could consume. As you know, they then began, much more slowly, to propose much larger problems, so scientific computing is still a significant part of the use of computers, though not the main one in most organizations.

Soon engineering problems appeared, and their volume grew along almost the same curve, but they were larger and were added on top of the earlier scientific curve. Then, at least at Bell Telephone Laboratories, I found an even larger segment, military computing, and finally, when we turned to word processing, compile time for high-level languages, and other such work, there was similar growth. Thus, while each kind of workload seemed to approach saturation in its turn, their net effect was to maintain a fairly constant rate of growth.

Figure 5.1
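The argument that several saturating workloads can add up to a steady growth rate can be checked numerically. The sketch below is only an illustration under invented assumptions: each workload follows a logistic (S-shaped) curve, a new workload arrives every `spacing` time units, and each one saturates at `ceiling_ratio` times the level of the previous one. None of these numbers come from Figure 5.1; the function names are hypothetical.

```python
import math

def logistic(t, midpoint, ceiling, rate=1.0):
    """One S-shaped workload: near-exponential growth at first, then saturation."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))

def total_load(t, n_workloads=4, spacing=5.0, ceiling_ratio=10.0):
    """Sum of staggered workloads (scientific, engineering, ...).

    Assumption for illustration only: workload k starts `spacing` time units
    after workload k-1 and saturates at a level `ceiling_ratio` times higher.
    """
    return sum(
        logistic(t, midpoint=k * spacing, ceiling=ceiling_ratio ** k)
        for k in range(n_workloads)
    )

def growth_per_period(t, spacing=5.0):
    """Growth of the total over one period, in decades (log10 units)."""
    return math.log10(total_load(t + spacing, spacing=spacing)
                      / total_load(t, spacing=spacing))

if __name__ == "__main__":
    for t in (0.0, 5.0, 10.0):
        print(f"t={t:4.1f}  total={total_load(t):10.3f}  "
              f"growth={growth_per_period(t):.3f} decades per period")
```

While new workloads keep arriving, the total grows by roughly one decade per period, which is a straight line on a logarithmic vertical scale, even though every individual curve flattens. Once the last workload saturates, the total flattens too, which is why the question of what comes next matters.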

What will come next to continue this straight line on the logarithmic plot and prevent the inevitable flattening of the curves? The next big area, I believe, is pattern recognition. I doubt our ability to cope with the general problem of pattern recognition, because, for one thing, it implies too much. But in areas such as speech recognition, radar image recognition, image analysis and redrawing, workload scheduling in factories and offices, analysis of statistical data, creation of virtual images, and so on, we can easily consume a very large amount of computing power. Computing virtual reality will become a major consumer of computing power, and its obvious economic value ensures that this will happen both in practical fields and in entertainment. In addition, I believe that artificial intelligence, which will one day reach the stage where its use justifies the investment in computing power, will become a new source of problems to be solved.

We started on interactive computing early, and I was introduced to it by a scientist named Jack Kane. At the time he had the wild idea of connecting a small Scientific Data Systems (SDS) 910 computer to the cyclotron at Brookhaven, where we used a lot of time. My vice president asked me whether Jack could do it, and after I had carefully studied the question (and Jack himself), I said that I thought he could. I was then asked, “Will the computer manufacturer be able to support the machine in service?”, since the vice president had no wish to be left with an unsupported machine. This required much more effort from me in non-technical directions, and in the end I made an appointment with the president of SDS at his office in Los Angeles. I received a satisfactory answer and came back feeling confident, but more on that later. So we did it, and I was sure then, as I am sure now, that this cheap little SDS 910 at least doubled the effective productivity of the huge, expensive cyclotron! It was certainly one of the first computers that, during a cyclotron run, collected, reduced, and displayed the data on the screen of a small oscilloscope (which Jack put together and got working in a few days). This let us abort many runs that were not quite right: say the sample was not exactly in the middle of the beam; or there was noise at the edge of the spectrum, so we had to redesign the experiment; or something odd had happened, and we needed more detail to understand what. All of these are reasons to pause the experiment and make changes rather than run it to the end and only then look for the trouble.

This experience led us, at Bell Telephone Laboratories, to start installing small computers in the laboratories, at first just to collect, reduce, and display data, but soon to run the experiments as well. It is often easier to let a computer program shape the driving voltage for an experiment, through a standard digital-to-analog converter, than to build special circuits for the purpose. This greatly widened the range of possible experiments and added practical interest in conducting interactive experiments. Again, we got the machine under one pretext, but its presence ultimately changed both the problem itself and what the computer had originally been used for. When you succeed in using a computer on some problem, you usually find that you are doing an equivalent job, not the same one you started with. Again, you can see how the presence of a computer ultimately changed the very nature of many of the experiments we conducted.
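To make the point about the digital-to-analog converter concrete, here is a minimal sketch of how a program can describe a driving voltage as a list of converter input codes. Everything in it is an assumption for illustration: a 12-bit unipolar converter with a 10 V reference, and the hypothetical helper names `voltage_to_code` and `ramp_codes`. Changing the waveform means changing a few lines of code rather than rebuilding a circuit.

```python
def voltage_to_code(volts, v_ref=10.0, bits=12):
    """Map a voltage in [0, v_ref] to the nearest input code of an ideal DAC.

    Assumed hardware (hypothetical): unipolar converter, `bits` of resolution,
    full scale equal to v_ref. Out-of-range requests are clamped.
    """
    full_scale = (1 << bits) - 1
    clamped = min(max(volts, 0.0), v_ref)
    return round(clamped / v_ref * full_scale)

def ramp_codes(v_start, v_stop, steps, v_ref=10.0, bits=12):
    """Codes for a linear voltage sweep from v_start to v_stop in `steps` samples."""
    if steps < 2:
        raise ValueError("a ramp needs at least two samples")
    step = (v_stop - v_start) / (steps - 1)
    return [
        voltage_to_code(v_start + i * step, v_ref=v_ref, bits=bits)
        for i in range(steps)
    ]

if __name__ == "__main__":
    # A 0 V to 10 V sweep in 11 samples; a real setup would write each code
    # to the converter at a fixed sample interval.
    print(ramp_codes(0.0, 10.0, 11))
```

A different experiment, for example a sweep over a narrower range or with more samples, only needs different arguments, which is the flexibility the paragraph above describes.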

The Boeing Company (in Seattle) later applied a somewhat similar idea: the current state of a proposed aircraft design would be kept on tape. The intention was that everyone would use this tape, so that during the design of any particular aircraft all parts of the vast company would stay in step. It did not work as management had planned. I know this for certain, because I spent two weeks doing highly confidential work for Boeing's top management under the guise of a routine inspection of the computing center for one of the lower-level groups!

The reason the method did not work is fairly simple. If the current state of the design is on tape (nowadays on disk), and you use the data to study, say, the area, shape, and profile of the wing, then when you change your parameters and see an improvement, it may be due to a change someone else made to the common design and not to your own change, which may actually have made things worse! Therefore, in practice, each group made a copy of the current tape for the duration of its optimization study and used it without any updates from other groups. Only when they had finally settled on their design did they enter the changes into the common design, and then, of course, they had to check their new design in combination with the new designs from the other groups. You simply cannot use an ever-changing database for optimization work.
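The confounding effect is easy to reproduce in a few lines. The sketch below is a toy model, not Boeing's system: the design is a dictionary, `design_score` is an invented figure of merit (lower is better), and `lighter_engine` stands in for another group's concurrent edit. It compares a change evaluated against the live shared design with the same change evaluated against a frozen copy.

```python
import copy

def design_score(design):
    """Invented figure of merit for illustration only (lower is better)."""
    return abs(design["wing_area"] - 120.0) + design["engine_mass"]

def lighter_engine(design):
    """Stands in for another group's change to the common design."""
    design["engine_mass"] = 30.0

def delta_on_live_design(shared, new_wing_area, concurrent_change):
    """Evaluate our change while someone else edits the shared design."""
    before = design_score(shared)
    concurrent_change(shared)            # lands between our two measurements
    shared["wing_area"] = new_wing_area
    return design_score(shared) - before

def delta_on_snapshot(shared, new_wing_area):
    """Evaluate our change on a private frozen copy of the design."""
    snapshot = copy.deepcopy(shared)
    before = design_score(snapshot)
    snapshot["wing_area"] = new_wing_area
    return design_score(snapshot) - before

if __name__ == "__main__":
    live = {"wing_area": 100.0, "engine_mass": 40.0}
    frozen_source = {"wing_area": 100.0, "engine_mass": 40.0}
    print("live design:", delta_on_live_design(live, 95.0, lighter_engine))
    print("snapshot:   ", delta_on_snapshot(frozen_source, 95.0))
```

On the live design the wing change appears to improve the score, because the other group's lighter engine is credited to it; on the snapshot the same change is shown to make things worse. The price of the snapshot is the later merge and recheck against everyone else's changes, as described above.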

This brings me to databases. Computers were seen as the salvation in this area, as they still often are in other areas today. Of course, the airlines with their reservation systems are a good example of what can be done with a computer; just think of the mess created by manual data processing, with its many human errors, not to mention the sheer scale of the problem. Airlines now maintain many databases, including weather records. Weather conditions and airport delays are used to compile a flight profile for each flight just before take-off, and the profile can be adjusted in flight, if necessary, as newer information arrives.

Managers in all kinds of companies seem always to think that if only they knew the current state of the company in every detail, they could manage it much better. So, by all means, they must have an up-to-date database of all the company's activities. This has its difficulties, as shown above. But there is another one. Suppose that you and I are both vice presidents of the company, and for the Monday morning meeting we need to prepare identical reports. You pull the data from the program on Friday afternoon, while I, being wiser and knowing that a lot of information arrives from the distant branches over the weekend, wait until Sunday. Obviously, there may be significant differences between our two reports, although we both used the same program to prepare them! In practice this is simply intolerable. Besides, most important reports and decisions should not depend so heavily on whether you made them a minute earlier or a minute later.

How about a scientific database? For example, whose measurements get into it?

Of course, there is a certain prestige in getting yours included, so there will be heated, expensive, irritating conflicts of interest in this area. How will such conflicts be resolved? Only at significant cost! Again, when you do optimization studies, you have the problem described above: was it a change in some physical constant, one you did not know about, that made the new model better than the old one? How will you keep the state of the changes available to all users? It is not enough to require users to study all your changes every time they use the machine; they will not do it, and errors will creep into the calculations. Blaming the users does not fix the errors!

I began by talking mostly about general-purpose computers, but I have gradually moved to discussing their use as special-purpose devices for controlling things, such as the cyclotron and laboratory equipment. One of the main steps in this direction came when someone in the business of making integrated circuits for customers proposed that, instead of creating a special chip for each customer, they produce a four-bit general-purpose computer and then program it for each particular job (the Intel 4004). He replaced a complex manufacturing job with the job of writing software; the chip still had to be made, of course, but now it would be a large batch of identical 4-bit chips. Again, this is the trend I noted earlier: moving from hardware to software to mass-produce a variable product, always using the same general-purpose computer. The 4-bit chip was soon expanded to 8 bits, then to 16 bits, and so on, and now some chips have 64-bit computers on them!

You are usually not aware of how many computers you interact with in a day. Traffic lights, elevators, washing machines, telephones (which now contain many computers, unlike in my youth, when a cheerful operator was always waiting at the other end, eager to hear the number of the person you wanted to talk to), answering machines, and cars with many computers under the hood are all examples of how actively their range of application is expanding. You need only watch and note how universal computers have become in our lives. And of course they will evolve further over time: the same simple general-purpose computer can perform so many specific tasks that a special chip is rarely required.

You see many more special-purpose chips around than are actually needed. One of the main reasons is the great ego satisfaction of having your own special chip rather than one of the common herd. (I am repeating part of Chapter 2.) Before you make this mistake and use a special chip in any equipment, ask yourself a series of questions. Let me repeat them. Do you want to be the only one using your chip? How many of them will you need to keep in stock? Do you really want to depend on one or a few suppliers instead of buying on the open market? Will the total cost not be much higher in the long run?

If you use a general-purpose chip, all its users will help to find the flaws, and the manufacturer will try to correct them. Otherwise you will have to produce your own manuals, diagnostic tools, and so on, and, in addition, the experience that specialists have with other chips will rarely help them with yours. Furthermore, the upgrades to general-purpose chips that you may need are likely to be available at no cost to you, since someone else usually takes care of them. You will inevitably need to upgrade the chips, because you will soon need to do more than the original plan required. It is much easier to meet this new need with a general-purpose chip that has some spare capacity for the inevitable future expansion.

I do not need to give you a list of the ways computers are used in your business. You should know better than I do how quickly their applications are spreading throughout your organization, not only directly in production but far beyond it. You should also be well aware of the ever-increasing rate of change, upgrading, and flexibility of these universal information-processing devices, which enables the whole organization to meet the ever-changing demands of its operating environment. The list of possible uses of computers has only just begun to take shape, and it remains to be extended, perhaps by you. I have nothing against a 10% improvement in the current situation, but I also expect from you innovations that will affect your organization so much that history will remember them for at least a few years.

As you move up the career ladder, you should study the successful applications of computers and those that fail; try to learn to tell them apart; try to understand the situations that lead to success and those that almost guarantee failure. Accept the fact that, as a rule, you ultimately need to solve not the original problem but an equivalent one, and do it in such a way that improvements and changes can easily be made later (if the approach works). And always think about how your technology will be used in combat, because, as a rule, reality will differ from your ideas.

The uses of computers in society are far from exhausted, and there are many other important areas where they can be applied. And they are easier to find than many people think!

I closed each of the two previous chapters with some conclusions about possible limitations, first of hardware and then of software. I should therefore discuss some possible limitations of applications as well. I will do this in the next few chapters under the general title “Artificial Intelligence, AI”.

To be continued...

Book content and translated chapters

- Intro to The Art of Doing Science and Engineering: Learning to Learn (March 28, 1995)

- «Foundations of the Digital (Discrete) Revolution» (March 30, 1995)

- «History of Computers - Hardware» (March 31, 1995)

- «History of Computers - Software» (April 4, 1995)

- «History of Computers - Applications» (April 6, 1995)

- «Artificial Intelligence - Part I» (April 7, 1995)

- «Artificial Intelligence - Part II» (April 11, 1995)

- «Artificial Intelligence III» (April 13, 1995)

- «n-Dimensional Space» (April 14, 1995)

- «Coding Theory - The Representation of Information, Part I» (April 18, 1995)

- «Coding Theory - The Representation of Information, Part II» (April 20, 1995)

- «Error-Correcting Codes» (April 21, 1995)

- «Information Theory» (April 25, 1995)

- «Digital Filters, Part I» (April 27, 1995)

- «Digital Filters, Part II» (April 28, 1995)

- «Digital Filters, Part III» (May 2, 1995)

- «Digital Filters, Part IV» (May 4, 1995)

- «Simulation, Part I» (May 5, 1995)

- «Simulation, Part II» (May 9, 1995)

- «Simulation, Part III» (May 11, 1995)

- «Fiber Optics» (May 12, 1995)

- «Computer Aided Instruction» (May 16, 1995)

- «Mathematics» (May 18, 1995)

- «Quantum Mechanics» (May 19, 1995)

- «Creativity» (May 23, 1995)

- «Experts» (May 25, 1995)

- «Unreliable Data» (May 26, 1995)

- «Systems Engineering» (May 30, 1995)

- «You Get What You Measure» (June 1, 1995)

- «How Do We Know What We Know» (June 2, 1995)

- Hamming, «You and Your Research» (June 6, 1995)

Source: https://habr.com/ru/post/351148/
