How to optimize DevOps using machine learning

DevOps (development and operations), a software development methodology that is popular today, aims at close interaction and integration between development specialists and IT operations specialists. DevOps processes typically generate large amounts of data that can be used to simplify workflows, orchestration, monitoring, troubleshooting, and other tasks. The problem is that there is too much data. Server logs alone can accumulate hundreds of megabytes per week. If monitoring tools are used, megabytes and gigabytes of data are generated in a short period of time.

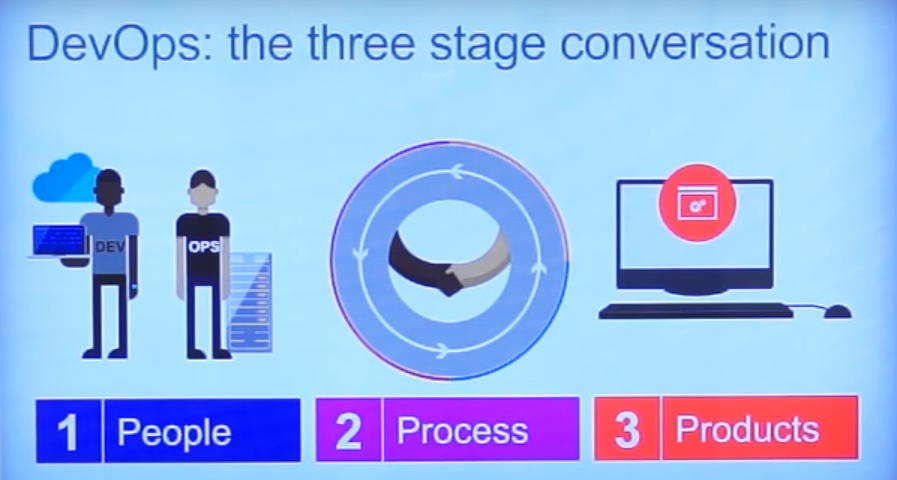

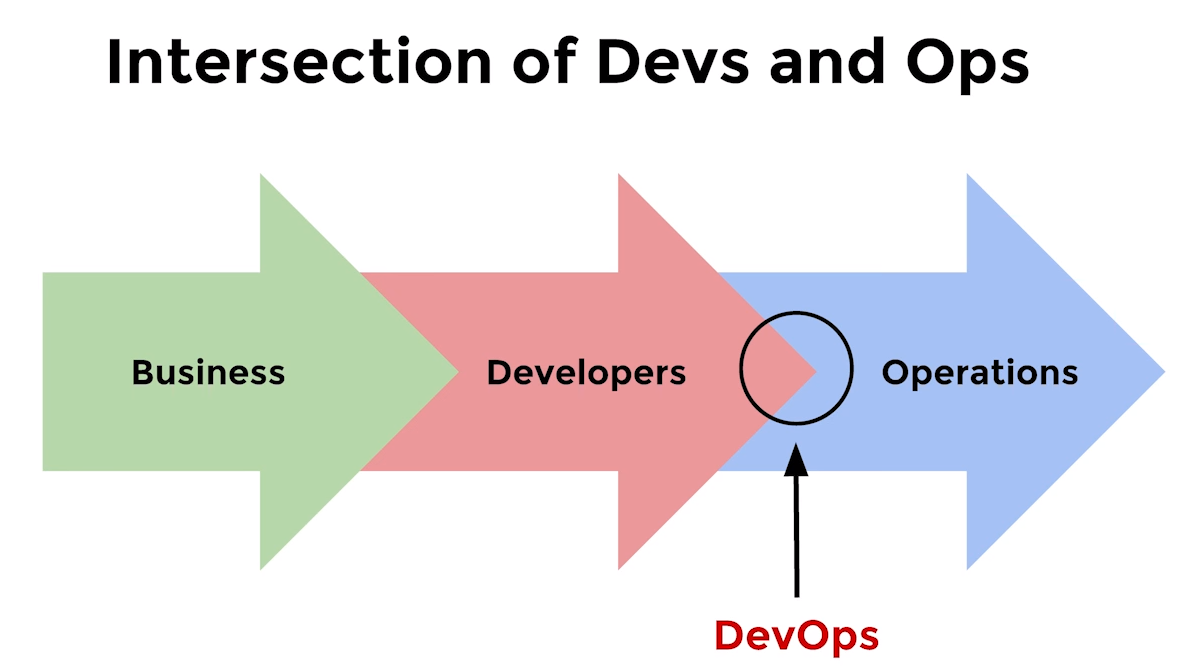

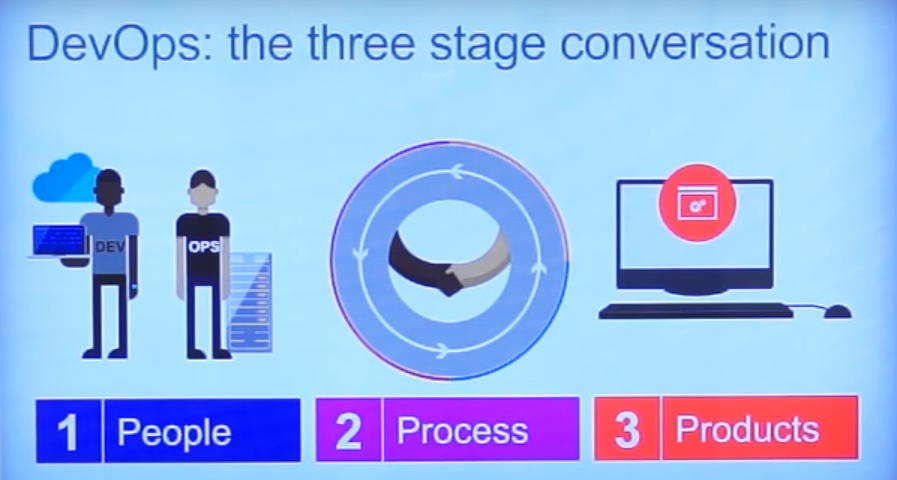

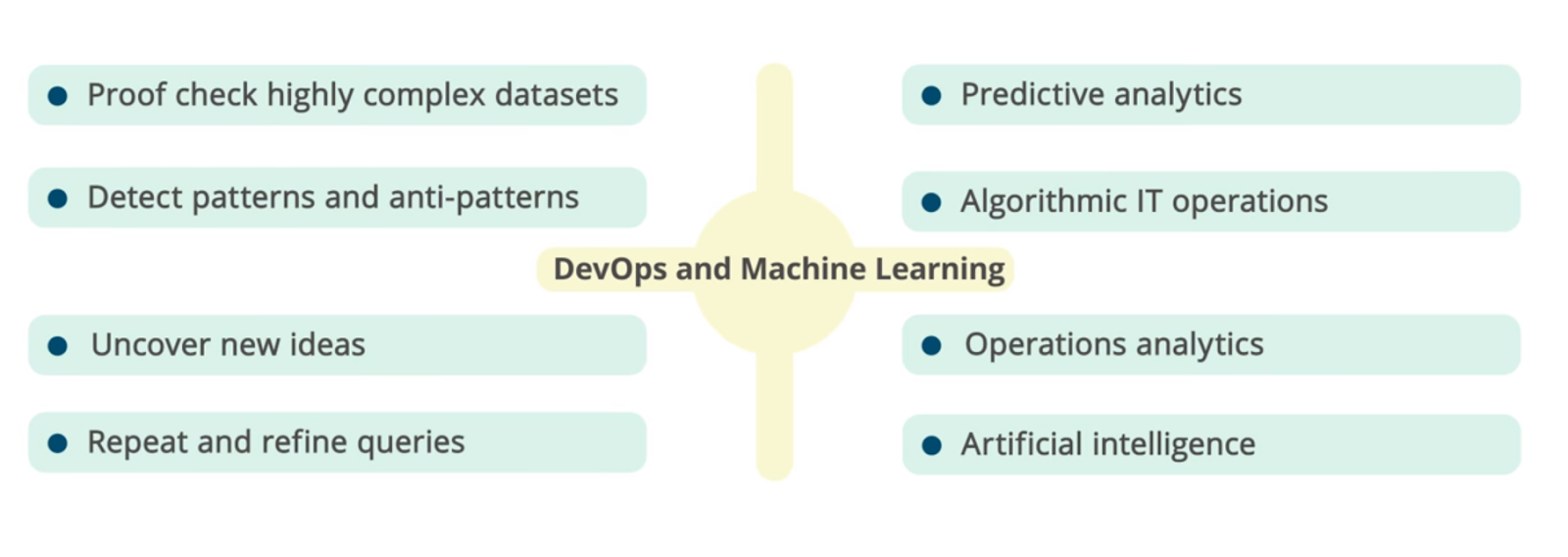

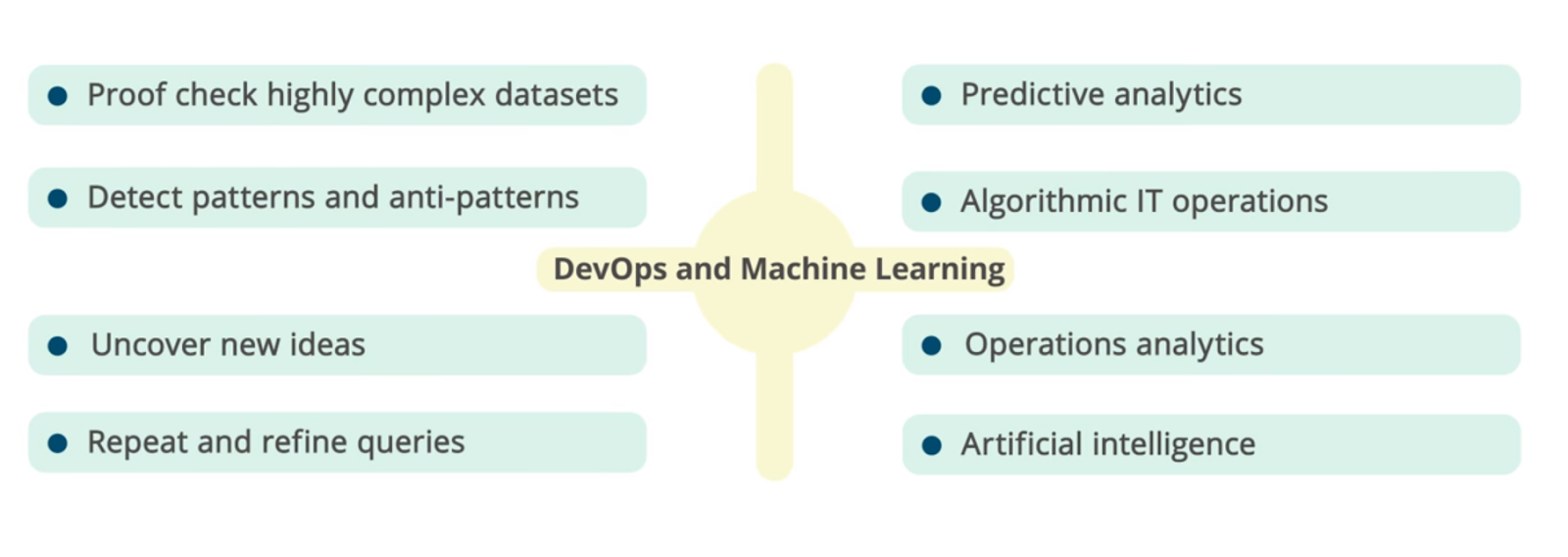

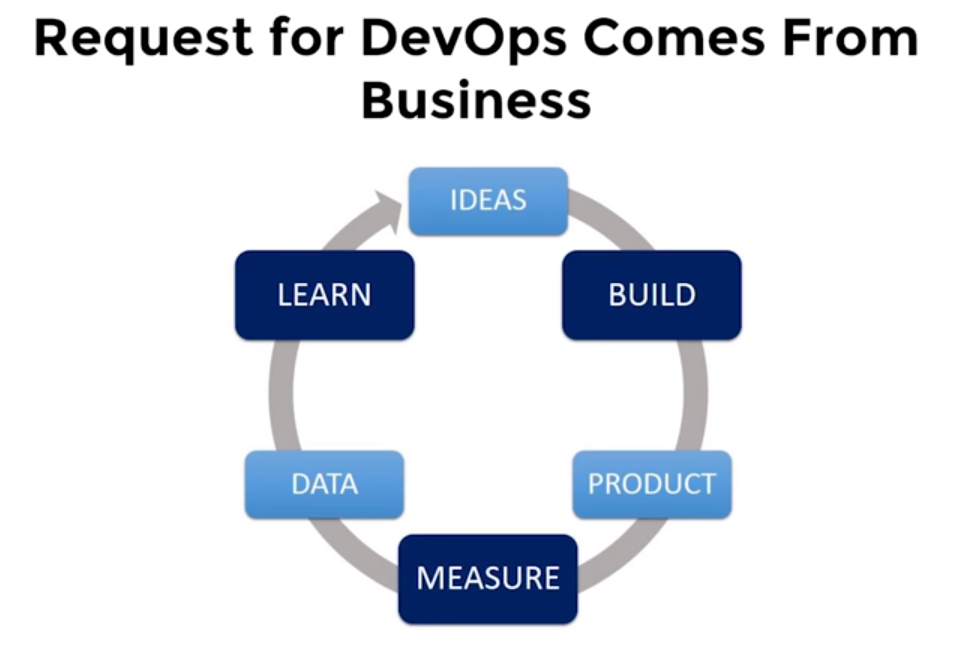

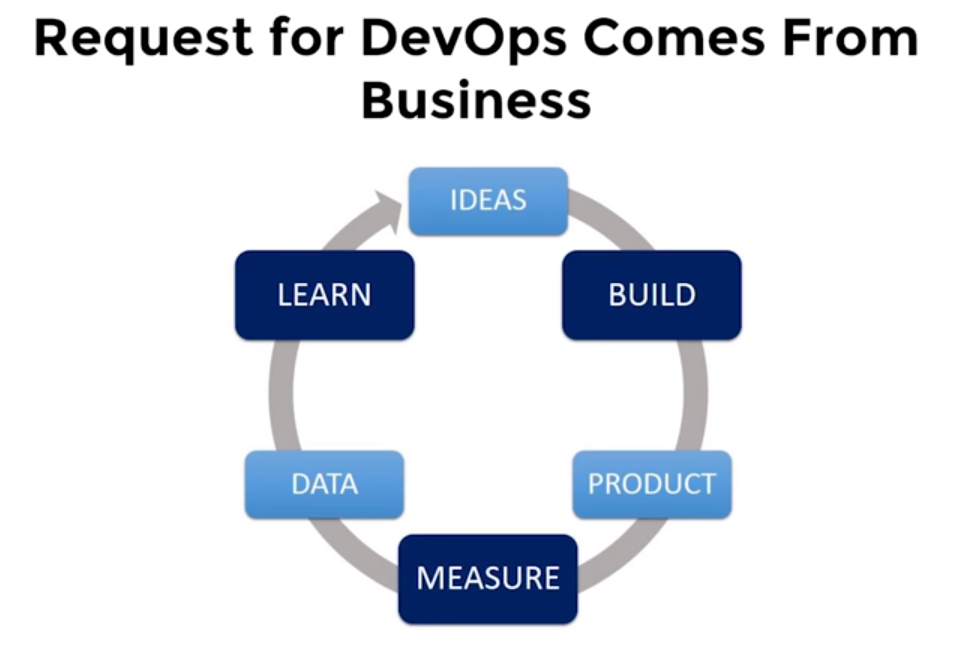

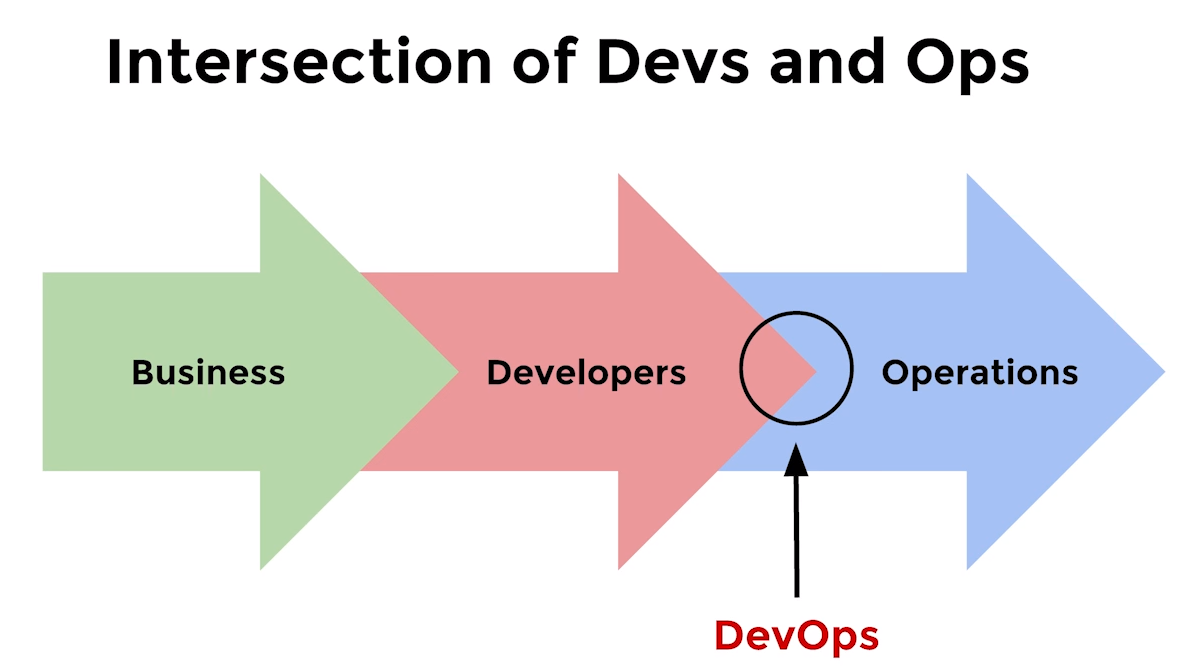

The result is predictable: developers do not examine the data directly but set threshold values instead. In other words, they look for exceptions rather than analyze the data. And even with modern analytical tools, you have to know what to look for.

Most of the data created in DevOps processes relates to application deployment. Application monitoring fills server logs and generates error messages and transaction traces. The only sensible way to analyze this data and draw conclusions in real time is to use machine learning (ML).

Many machine learning systems use neural networks, which process data through multiple layers of algorithms. They are trained on historical data with known results. The application compares the results the algorithm produces with the known results and then adjusts the algorithm's coefficients to bring its output closer to them.

This may take some time, but if the algorithms and network architecture are chosen correctly, the machine learning system will begin to produce results that match the actual ones. In essence, the neural network has “learned”, or modeled, the relationship between data and results. This model can then be used to evaluate future data.
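
The training loop described above can be sketched with the simplest possible model: a single linear unit with two coefficients, fitted by gradient descent. This is an illustrative sketch, not a production neural network; the function name `train_linear`, the learning rate, and the sample data (concurrent requests versus response time) are all assumptions made for the example.

```python
def train_linear(samples, lr=0.05, epochs=5000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            err = (w * x + b) - y      # model output minus the known result
            grad_w += 2 * err * x / n
            grad_b += 2 * err / n
        # Adjust the coefficients to bring the output closer to the known results.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical history: (concurrent requests, response time in ms).
history = [(1, 23.0), (2, 26.0), (3, 29.0), (4, 32.0), (5, 35.0)]
w, b = train_linear(history)

# The fitted model can now estimate a value it has not seen.
predicted = w * 6 + b
print(f"w={w:.2f}, b={b:.2f}, predicted={predicted:.1f} ms")
```

Real networks stack many such units and use a library to compute the gradients, but the cycle of predicting, comparing with the known result, and adjusting is the same.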

ML to help DevOps

Machine analysis and learning algorithms let you monitor information objects (databases, applications, and so on) and build profiles of normal, failure-free system operation. When a deviation (anomaly) occurs, for example when response time increases, the application hangs, or transactions slow down, the system records the event and sends a notification. This lets you build preventive policies against such anomalies.

DevOps is a combination of software development and operations. Its components are processes, people, and products.
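
As a minimal sketch of the idea of a "normal operation profile", the example below summarizes failure-free response times by their mean and standard deviation and flags new measurements that fall far outside that range. The function names, the three-sigma cutoff `k`, and the sample values are assumptions for illustration; commercial tools use considerably richer models.

```python
from statistics import mean, stdev


def build_profile(baseline):
    """Summarize failure-free measurements as (mean, standard deviation)."""
    return mean(baseline), stdev(baseline)


def find_anomalies(values, profile, k=3.0):
    """Return (index, value) pairs more than k standard deviations from the mean."""
    mu, sigma = profile
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) > k * sigma]


# Hypothetical response times (ms) recorded during normal operation.
baseline_ms = [101, 99, 100, 102, 98, 100, 101, 99]
profile = build_profile(baseline_ms)

# New measurements: the third one is a clear deviation from the profile.
incoming_ms = [100, 103, 250, 99]
anomalies = find_anomalies(incoming_ms, profile)
print(anomalies)
```

In practice the flagged entries would feed a notification channel rather than a `print` call.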

How difficult is it to train such a system, and how much time and expert effort does it take? Essentially, no manual training is needed: the system learns on its own from data sets, without programming, and can predict relationships within them. This makes it possible to eliminate the "human factor" and speeds up the system by removing manual processes such as identifying correlations and dependencies in the data.

The system itself builds profiles of the object's normal functioning, and parameterization mechanisms are sufficient for additional adjustment. However, although machine learning is a very powerful tool, it needs to accumulate data. Over time, the number of false positives decreases, and “fine tuning” can reduce it by almost an order of magnitude.

Adjustment mechanisms make the algorithms more accurate and adapt them to specific needs. Accuracy therefore continues to improve over time as statistics accumulate.
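
One way to picture such tuning is to replay labeled history against several alert thresholds and count the mistakes each one makes. The sketch below does this for a handful of made-up anomaly scores; `count_errors`, the scores, and the labels are hypothetical and only illustrate the trade-off between false positives and missed incidents.

```python
def count_errors(scores, labels, threshold):
    """Return (false positives, missed incidents) for one alert threshold."""
    false_positives = sum(
        1 for s, is_incident in zip(scores, labels)
        if s > threshold and not is_incident
    )
    missed = sum(
        1 for s, is_incident in zip(scores, labels)
        if s <= threshold and is_incident
    )
    return false_positives, missed


# Hypothetical anomaly scores and whether each one was a real incident.
scores = [0.5, 1.2, 2.1, 2.8, 3.4, 4.0, 6.5, 7.2]
labels = [False, False, False, False, False, False, True, True]

results = {t: count_errors(scores, labels, t) for t in (2.0, 3.0, 5.0, 7.0)}
for threshold, (fp, missed) in results.items():
    print(f"threshold={threshold}: false positives={fp}, missed={missed}")
```

With this data, raising the threshold from 2.0 to 5.0 removes every false positive without missing an incident, while raising it further starts to hide real problems.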

The synergy of DevOps and machine learning (ML) opens up new opportunities in predictive analytics, IT Operations Analytics (ITOA), Algorithmic IT Operations (AIOps), and other areas.

Algorithmic approaches aim at anomaly detection, clustering, data correlation, and forecasting. They help answer many questions. What is the cause of the problem? How can it be prevented? Is this behavior normal or abnormal? What can be improved in the application? What needs immediate attention? How should the load be balanced? Machine learning can find many uses in DevOps.

| Machine learning application | Explanation |
|---|---|
| Application tracking | Activity data from DevOps tools (for example, Jira, Git, Jenkins, SonarQube, Puppet, Ansible) makes the delivery process transparent. ML can reveal anomalies in this data, such as large volumes of code, long build times, and extended release and code review times, and can identify many “deviations” in software development processes, including inefficient use of resources, frequent task switching, and process slowdowns. |
| Application quality assurance | By analyzing test results, ML can identify new errors and build a library of test patterns from them. This helps ensure that every release is tested thoroughly and improves the quality of delivered applications (QA). |
| Patterns of behavior | Patterns of user behavior can be as unique as fingerprints. Applying ML to the behavior of Dev and Ops users can help identify anomalies that indicate malicious activity: for example, abnormal access patterns to important repositories, users who intentionally or accidentally use known “bad” patterns (such as backdoors), unauthorized code deployment, or theft of intellectual property. |
| Operations management | Analyzing an application in operation is an area where machine learning can really prove itself, because it involves large volumes of data, users, transactions, and so on. DevOps specialists can use ML to analyze user behavior, resource usage, transaction throughput, and similar metrics in order to detect “abnormal” patterns (for example, DDoS attacks or memory leaks). |
| Notification management | A simple and practical use of ML is managing the mass flow of alerts in production systems. Alerts may be related through a common transaction identifier, a common set of servers, or a common subnet, or the relationship may be more complex and require the system to “learn” over time to recognize “known good” and “known bad” alerts. This makes it possible to filter alerts. |
| Troubleshooting and analytics | This is another area where modern machine learning technologies perform well. ML can automatically detect and triage "known problems" and even some unknown ones. For example, ML tools can detect anomalies in “normal” processing and then analyze the logs further to link the problem to a new configuration or deployment. Other automation tools can use ML to alert the operations team, open a ticket (or chat window), and assign it to the appropriate person. Over time, ML can even suggest the best solution. |
| Preventing disruptions during operation | ML lets you go far beyond simple resource planning to prevent failures. It can be used to predict, for example, the best configuration for a desired level of performance, the number of customers who will use a new feature, or infrastructure requirements. ML reveals "early signs" in systems and applications, so developers can start fixing problems or avoid them in advance. |
| Business impact analysis | Understanding how a code release affects business goals is crucial to success in DevOps. By synthesizing and analyzing actual usage metrics, ML systems can detect good and bad patterns and act as an “early warning system” when applications have problems (for example, by reporting an increase in shopping cart abandonment or a longer customer journey). |
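
The simplest case from the notification management row, alerts related through a common transaction identifier, needs no learning at all and can be sketched with plain grouping. The alert fields (`transaction_id`, `host`, `message`) and the function `group_alerts` are hypothetical; a learning system would take over where such a shared key does not exist.

```python
from collections import defaultdict


def group_alerts(alerts, key):
    """Group alert messages by a shared field such as a transaction ID."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert.get(key, "unknown")].append(alert["message"])
    return dict(groups)


# Hypothetical alert stream from several hosts.
alerts = [
    {"transaction_id": "tx-17", "host": "web-1", "message": "HTTP 500"},
    {"transaction_id": "tx-17", "host": "db-1", "message": "query timeout"},
    {"transaction_id": "tx-42", "host": "web-2", "message": "slow response"},
    {"transaction_id": "tx-17", "host": "cache-1", "message": "connection reset"},
]

by_tx = group_alerts(alerts, "transaction_id")
for tx, messages in by_tx.items():
    print(tx, messages)
```

Four raw alerts collapse into two groups, so an operator sees one incident for `tx-17` instead of three separate warnings.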

DevOps and ML: analysis of complex data sets, identification of dependencies and patterns, predictive analytics, operational analytics, artificial intelligence, etc.

Machine learning lets you make use of large data sets and helps you draw informed conclusions. Identifying statistically significant anomalies makes it possible to detect abnormal behavior of infrastructure objects. Machine learning can also reveal not only anomalies in processes but also illegal actions.

Recognizing and grouping records by common templates helps you focus on important data and filter out background information. Analyzing the records that precede and follow an error makes root-cause analysis more efficient, and constant monitoring of applications helps eliminate problems quickly during operation.

Identity and user data, information security data, diagnostic and transactional data, and metrics (applications, hosts, virtual machines, containers, servers), that is, data from the user, application, middleware, virtualization, and infrastructure levels, all have a predictable format and are well suited to machine learning.
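
A rough sketch of grouping records by common templates: replace the variable parts of each log line (here, numbers and dotted numbers such as IP addresses) with a placeholder and count how often each resulting template occurs. The regular expression, the `<*>` placeholder, and the log lines are assumptions for illustration; real log-clustering tools use more elaborate parsing.

```python
import re
from collections import Counter

# Matches standalone numbers and dotted numbers such as IPv4 addresses.
VARIABLE = re.compile(r"\b\d+(?:\.\d+)*\b")


def to_template(line):
    """Replace variable tokens with a placeholder to expose the template."""
    return VARIABLE.sub("<*>", line)


# Hypothetical log records.
log_lines = [
    "Timeout after 30 s connecting to 10.0.0.5",
    "Timeout after 45 s connecting to 10.0.0.9",
    "User 1001 logged in",
    "Timeout after 30 s connecting to 10.0.0.7",
]

templates = Counter(to_template(line) for line in log_lines)
for template, count in templates.most_common():
    print(count, template)
```

Frequent templates can then be treated as background, while rare or new ones are surfaced for attention.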

DevOps enhancement

Whether you buy a commercial application or build your own, there are several ways to use machine learning to improve DevOps.

| Approach | What it means |
|---|---|
| From thresholds to data analytics | Because there is so much data, DevOps teams rarely view and analyze the entire data set. Instead, they set thresholds, that is, conditions that trigger some action. In effect, they discard most of the collected data and focus on deviations. Machine learning applications can do more. They can be trained on all the data, and in production they can inspect the entire data stream and draw conclusions. This makes predictive analytics possible. |
| Search for trends, not errors | It follows that a machine learning system trained on all the data can show more than just the problems identified. By analyzing trends in the data, DevOps specialists can see what will happen over time, that is, make predictions. |
| Analysis and correlation of data sets | Much of the data is time series, and a single variable is easy to trace. But many trends result from the interaction of several factors. For example, the response time may decrease when multiple transactions perform the same action simultaneously. Such trends are almost impossible to detect “with the naked eye” or with traditional analytics, but properly trained applications will pick up these correlations and trends. |
| New development metrics | You are most likely collecting data on delivery speed, bug detection and fix rates, and output from the continuous integration system. The possibilities for exploring combinations of this data are enormous. |
| Historical data context | One of the biggest challenges in DevOps is learning from your mistakes. Even where there is a strategy of constant feedback, it is most likely something like a wiki that describes the problems encountered and what was done to investigate them. A common solution is to restart the server or the application. Machine learning systems can analyze the data and clearly show what happened over the last day, week, month, or year. You can see seasonal or daily trends and get a picture of the application at any point in time. |
| Search for root causes | This capability is important for improving application quality and lets developers fix availability or performance problems. Failures and other problems often go without full investigation because the priority is restoring the application quickly. Sometimes a reboot restores service, but the root cause of the problem is lost. |
| Correlation between monitoring tools | DevOps teams often use several tools to view and process data. Each monitors the health and performance of the application in its own way but cannot relate its data to the data from other tools. Machine learning systems can collect all of these disparate data streams, use them as raw data, and build a more accurate and reliable picture of the application's state. |
| Orchestration efficiency | If metrics exist for the orchestration process, machine learning can be used to determine how efficiently orchestration is performed. Inefficiency can result from incorrect methods or poor orchestration, so studying these characteristics can help with both tool selection and process organization. |
| Prediction of failure at a certain point in time | If you know that monitoring systems show certain readings during a failure, a machine learning application can look for those patterns as precursors of a particular type of failure. If you understand the cause of the failure, you can take action to avoid it. |
| Optimization of a specific metric | Want to increase uptime? Maintain a performance standard? Reduce the time between deployments? An adaptive machine learning system can help. Adaptive systems are systems without a specific answer or result; their goal is to take input data and optimize certain characteristics. For example, airline ticketing systems try to fill airplanes and optimize revenue by changing ticket prices up to three times a day. DevOps processes can be optimized in a similar way. The neural network is trained to maximize (or minimize) a value rather than to reproduce a known result. This lets the system change its parameters during operation and gradually approach the best result. |
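
The "trends, not errors" idea can be sketched with an ordinary least-squares line fitted to a metric's history and extrapolated to a limit. This is a deliberately simple stand-in for a trained model; the function names, the disk-usage series, and the 90% limit are assumptions made for the example.

```python
def fit_trend(values):
    """Least-squares line through equally spaced samples: (slope, intercept)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def steps_until(values, limit):
    """Estimate how many more samples until the trend line reaches `limit`."""
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None  # no upward trend, so no crossing is predicted
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - (len(values) - 1))


# Hypothetical daily disk usage in percent.
disk_used_pct = [60.0, 62.0, 64.0, 66.0, 68.0]
days_left = steps_until(disk_used_pct, 90.0)
print(f"Estimated days until 90% full: {days_left}")
```

A fixed threshold at 90% would stay silent for this series; the trend estimate gives advance notice while there is still time to act.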

The ultimate goal is to measurably improve DevOps practices, from concept through deployment to decommissioning.

Machine learning systems can process a variety of real-time data and provide answers that DevOps teams can use to improve processes and better understand application behavior.

Acceleration of processes

The result is faster DevOps across both software development and operations: cycles that took weeks or months are reduced to days.

The DevOps cycle (idea, creation, product release, measurement, data collection, study) requires acceleration.

The "human factor" significantly affects operations (Ops). The task of analyzing data from different sources, correlating it, and interpreting it falls to a person, who uses different tools to do so. The task is difficult and time-consuming. Automating it not only speeds up the process but also eliminates differing interpretations of the data. That is why applying modern machine learning algorithms to DevOps data is so important.

Machine learning provides a single interface for developers and operations specialists (Dev and Ops).

Correlating user data, transaction data, and other data is the most important tool for detecting anomalies in the behavior of software components. Forecasting is extremely important for managing and monitoring application performance. It lets you take proactive measures, understand what needs attention now and what will happen tomorrow, and work out how to balance the load.
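
As a small illustration of correlating two data streams, the sketch below computes the Pearson correlation coefficient between pairs of metrics. The metric names and values are invented for the example, and a strong coefficient only indicates that two series move together, not that one causes the other.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical samples taken at the same moments.
concurrent_tx = [10, 20, 30, 40, 50]
response_ms = [110, 135, 150, 180, 205]
disk_io_mb = [5, 3, 6, 4, 5]

r_response = pearson(concurrent_tx, response_ms)
r_disk = pearson(concurrent_tx, disk_io_mb)
print(f"transactions vs response time: r={r_response:.2f}")
print(f"transactions vs disk I/O:      r={r_disk:.2f}")
```

Here the response time tracks the transaction count closely while disk I/O does not, which suggests where to look first when response times rise.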

The product delivery cycle should be simple, transparent, and fast; however, Dev and Ops often come into conflict at the “intersection point”.

In addition, a system with machine learning mechanisms removes barriers between developers and operations (Dev and Ops). Application performance diagnostics give developers access to valuable diagnostic data.

The operations and development teams work together and complement each other. Release cycles become shorter, coordination and feedback improve, and testing time is reduced.

Adopting Docker, microservices, cloud technologies, and APIs to deploy applications and ensure high reliability requires new approaches. Smart tools are therefore important, and DevOps tool vendors are integrating smart features into their products to further simplify and speed up software development.

Of course, ML is no substitute for intelligence, experience, creativity, and hard work. But today we already see wide possibilities for its use and even greater potential in the future.

Source: https://habr.com/ru/post/351138/