Low-level code optimization on the Elbrus platform: vector addition uint16_t using intrinsics

In this article we will tell about lower-level optimizations that can be done on Elbrus processors.

In principle, optimization of this level is not a necessary stage of development for Elbrus. For most computational operations requiring high performance, you can and should use functions from the EML library.

')

However, in the current version of EML, we did not find some functions that are interesting to us, so we decided to write them ourselves.

For this we used intrinsiki. Intrinsics are constructs that look like normal functions to a programmer, but their calls are replaced by the in-place compiler with high-performance code. Most often intrinsics are needed when you want to use vector processor extensions that allow you to perform the same operation on a register containing several data elements at once. Even an optimizing compiler cannot always guess that such a construction will speed up your code. In such cases, earlier, if there was no suitable optimized library, it was necessary to use an assembler. But the speed of the assembly code essentially depends on the efficiency of using registers, taking into account ALU delays and other wonderful things. And Elbrus also has a VLIW architecture, which means that if we want to write in assembler, we will have to independently monitor the formation of broad command words. On the other hand, optimizing compilers are created for such subtleties. The transition to intrinsiki allows you to intelligently distribute the work between the person and the program. Code using intrinsiki can be easily transferred between systems that support all involved intrinsiki. That is, in our situation intrinsiki are obviously the best solution.

The Elbrus-4C and Elbrus-8C microprocessors support vector operations with a 64-bit register. Using this register, you can simultaneously process two 32-bit numbers, four 16-bit integers or eight 8-bit integers. The Elbrus microprocessor intrinsic set includes operations for data conversion, initialization of vector elements, arithmetic operations, bitwise logical operations, permutation of vector elements and, it seemed to us, quite similar to the x86 SSE / SSE2 instruction set.

So, we will start optimization. Take a piece of code to add two arrays of type uint16_t with writing the result to the third array (there is no such operation in EML yet):

// 0 // eml_16u *src1 - // eml_16u *src2 - // eml_16u *dst - // len - for (size_t i = 0; i < len; ++i) dst[i] = src1[i] + src2[i]; Now rewrite it using intrinsikov. For simplicity, we assume that the length of the len arrays is divided by 4, and the remaining elements of the arrays are processed separately. Then get something like this:

// 1 // eml_16u *src1 - // eml_16u *src2 - // eml_16u *dst - // len - static const size_t block_size = sizeof(eml_64u) / sizeof(eml_16u); for (size_t i = 0; i < len; i += block_size, src1 += block_size, src2 += block_size, dst += block_size) *(__di*)dst = __builtin_e2k_paddh(*(__di*)src1, *(__di*)src2); Here __di is the 64-bit data type, and __builtin_e2k_paddh is the intrinsic performing 16-bit unsigned addition.

However, this code is not optimal, since to load an unaligned 64-bit number r at address p processor needs to perform the following elementary operations:

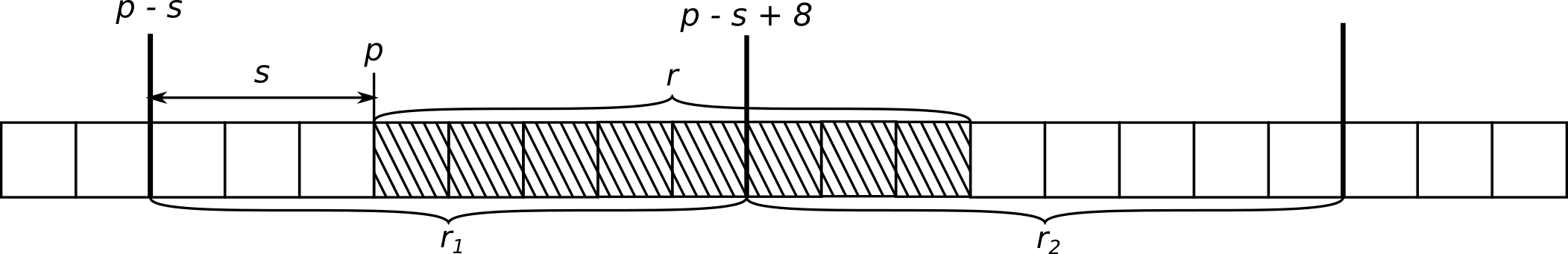

Determine the offset

saddresspfrom the alignment boundary to 64 bits (see Fig. 1). The address of the aligned 64-bit number containing the beginning ofrwill be equal top - s. The address of the next aligned 64-bit number containing the end ofrwill be equal top - s + 8.Load from memory 2 64-bit numbers r 1 , r 2 , containing

r, by aligned addresses.- Find the number

r, knowing r 1 , r 2 ,s.

Fig. 1. Scheme of unaligned loading of 64-bit data from memory.

For further optimization, we write this explicitly using Elbrus macros:

__di s = E2K_BYTES_FROM_ALIGN(p, 8); __di tmp; E2K_PREPARE_ALIGN(s, tmp); const __di *p_aligned = (__di *)E2K_ALIGN_PTR_BACK(p, 8); __di r1 = *p_aligned; __di r2 = *(p_aligned + 1); __di r; E2K_ALIGN_DATA(r1, r2, r, tmp); This code will perform 6 memory hits at each iteration of the loop, as well as the original version with unaligned loading. However, explicit addressing by aligned addresses makes it possible to use a special array swap buffer available in the Elbrus architecture to increase the efficiency of memory access (by the way, this buffer was also used in the code without intrinsics).

You can easily reduce the number of memory hits on each iteration to 3, while maintaining the values loaded on the previous iteration of the loop. In addition, we will use only the aligned record in the result array, processing the initial part of the arrays separately. Thanks to this, both reading and writing to memory will be more efficient.

// 2 // eml_16u *src1 - // eml_16u *src2 - // eml_16u *dst - // len - size_t i = 0; // 64- dst size_t offset = E2K_BYTES_TO_ALIGN(dst, sizeof(eml_64u)) / sizeof(eml_16u); for (; i < offset; ++i) dst[i] = src1[i] + src2[i]; // __di spec0, spec1; __di tmp0, tmp1; __di align1 = E2K_BYTES_FROM_ALIGN(src1 + offset, sizeof(eml_64u)); E2K_PREPARE_ALIGN(align1, spec0); __di align2 = E2K_BYTES_FROM_ALIGN(src2 + offset, sizeof(eml_64u)); E2K_PREPARE_ALIGN(align2, spec1); const __di *v1 = (__di *)E2K_ALIGN_PTR_BACK(src1 + offset, 8); const __di *v2 = (__di *)E2K_ALIGN_PTR_BACK(src2 + offset, 8); __di *v3 = (__di*)(dst + offset); __di d01, d11; __di d00 = *v1; __di d10 = *v2; ++v1; ++v2; static const size_t block_size = sizeof(eml_64u) / sizeof(eml_16u); size_t effective_len = offset + ((len - offset) & ~(block_size - 1)); for (; i < effective_len; i += block_size, ++v1, ++v2, ++v3) { d01 = *v1; d11 = *v2; E2K_ALIGN_DATA(d00, d01, tmp0, spec0); E2K_ALIGN_DATA(d10, d11, tmp1, spec1); *v3 = __builtin_e2k_paddh(tmp0, tmp1); d00 = d01; d10 = d11; } // , It would seem that you can still do?

However, as we remember, on modern Elbrus there are 6 execution channels in which up to 24 instructions can be placed, and they will be executed in 1 clock cycle. Of these instructions, only 6 can be arithmetic for integers, since there is only one vector ALU for each channel (other instructions could relate to real arithmetic, load / write, etc.) In addition, there is one more subtlety: these 6 ALUs are different, and each arithmetic command can be executed only in certain channels. For the unsaturated addition, only channels 0 and 3 are suitable. Therefore, in 1 measure we can perform no more than 2 additions. To tell a careful compiler that these two additions are independent (i.e., the result of the first is not used in the second), turn the loop. This can be done manually or by using the compiler directive:

#pragma unroll(2)

In addition, you can tell the compiler the number of expected iterations of the cycle, for example, a number about 1024 is suitable for a cycle on an image line (this is quite a reasonable estimate of the linear dimensions of recognizable images, and colleagues from the MCST recommended this size; the general idea is that the number should be large enough for the compiler to consider using special cyclic optimizations):

#pragma loop count(1024)

Of course, in obviously short cycles the compiler should be left with the opposite hint (see below).

// 3 // eml_16u *src1 - // eml_16u *src2 - // eml_16u *dst - // len - size_t i = 0; // 64- dst size_t offset = E2K_BYTES_TO_ALIGN(dst, sizeof(eml_64u)) / sizeof(eml_16u); #pragma loop count(3) for (; i < offset; ++i) { dst[i] = src1[i] + src2[i]; } // __di spec1, spec2; __di tmp0, tmp1; __di align1 = E2K_BYTES_FROM_ALIGN(src1 + offset, sizeof(eml_64u)); E2K_PREPARE_ALIGN(align1, spec1); __di align2 = E2K_BYTES_FROM_ALIGN(src2 + offset, sizeof(eml_64u)); E2K_PREPARE_ALIGN(align2, spec2); const __di *v1 = (__di *)E2K_ALIGN_PTR_BACK(src1 + offset, sizeof(eml_64u)); const __di *v2 = (__di *)E2K_ALIGN_PTR_BACK(src2 + offset, sizeof(eml_64u)); __di *v3 = (__di*)(dst + offset); __di d01, d11, d02, d12; __di d00 = *v1; __di d10 = *v2; ++v1; ++v2; size_t effective_len = offset + ((len - offset) & ~0x03); #pragma unroll(2) #pragma loop count(1024) for (; i < effective_len; i += 4, ++v1, ++v2, ++v3) { d01 = *v1; d11 = *v2; E2K_ALIGN_DATA(d00, d01, tmp0, spec0); E2K_ALIGN_DATA(d10, d11, tmp1, spec1); *v3 = __builtin_e2k_paddh(tmp0, tmp1); d00 = d01; d10 = d11; } // , Now we give the results of measurements. To do this, we measured the time of addition of two arrays of length 105. The average time over 105 iterations is given in the table.

| Option | The average time of addition of arrays, µs |

|---|---|

| 0 | 219.0 |

| one | 250.7 |

| 2 | 62.6 |

| 3 | 31.4 |

Our optimization allowed us to speed up the addition 7 times! We see that by setting ourselves the goal of maximizing productivity and spending some time studying the features of Elbrus, one can achieve significant results.

Many thanks to the staff of the MCST, who repeatedly advised us on writing this material!

Source: https://habr.com/ru/post/351134/

All Articles