First steps in machine learning

Hello, dear friend, have you always wanted to try machine learning, but the area looked mysterious and difficult? I would like to share with you my story as I took the first steps in machine learning, with zero knowledge of Python and higher mathematics with a small example.

First of all, I went to the official website of TensorFlow and read ML for Beginners and TensorFlow for beginners . Materials in English.

')

TensorFlow is a Google team handicraft and the most popular machine learning library that supports Python, Java, C ++, Go, as well as the ability to use the computing power of a graphics card for computing complex neural networks.

In my search, I found another library for machine learning, Scikit-learn oriented to Python. Plus of this library, in a large number of algorithms for machine learning right out of the box, which was a definite plus in my case, since the presentation was on Friday, and I really wanted to demonstrate a working model.

In search of ready-made examples, I came across a tutorial on the definition of the language in which the text was written using Scikit-learn.

So, my task was to train the model to determine the presence of SQL injections in the text string. (Of course, you can solve this problem with regular expressions, but for educational purposes, you canshoot a cannon on sparrows )

The type of task that I am trying to solve is a classification, that is, the algorithm should, in response to fed data, give me which category this data belongs to.

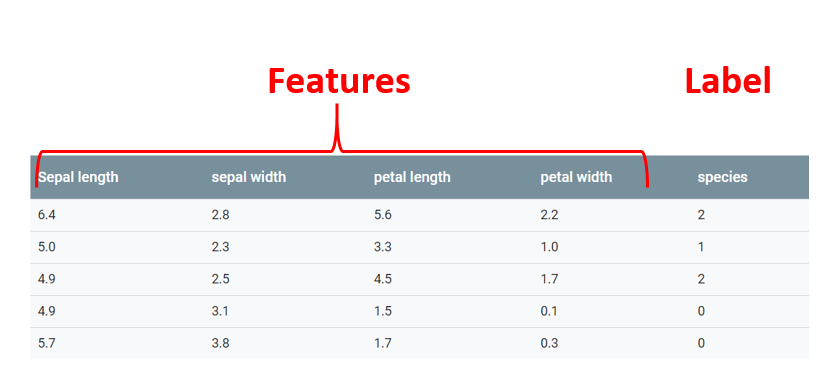

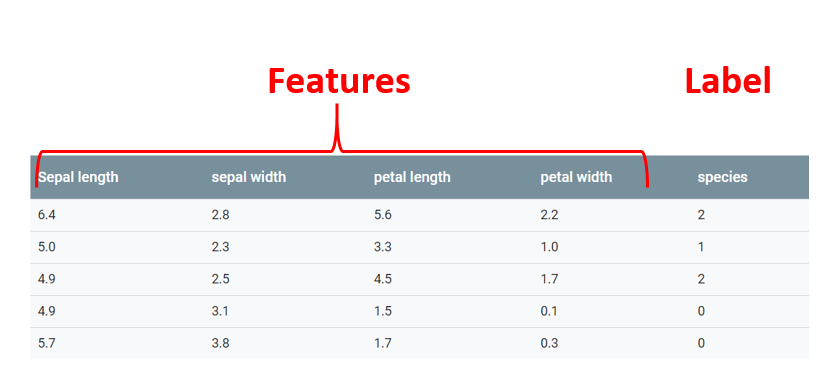

The data in which the algorithm will look for patterns are called features .

The category to which a particular feature belongs is called a label . It is important to note that the input data may have several features, but only one label.

In the classic example of machine learning, identifying varieties of iris flowers along the length of pistils and stamens, each individual column with information about the size of this feature , and the last column, which means to which of the subspecies of iris the flower with such values is label

The way I will solve the classification problem is called supervised learning, or supervised learning. This means that in the process of learning, the algorithm will receive both features and labels.

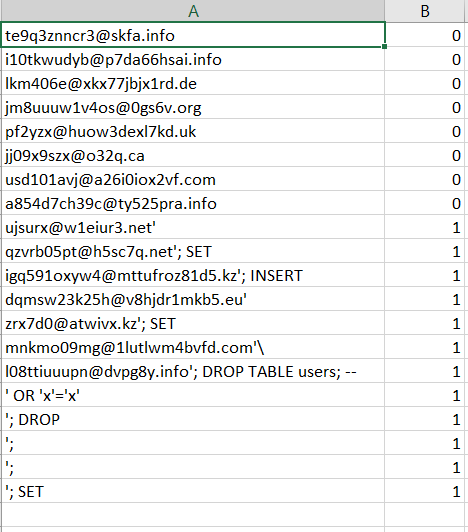

Step number one in solving any problem using machine learning is the collection of data on which this machine will learn. In an ideal world, this should be real data, but, unfortunately, I could not find anything on the Internet that would satisfy me. It was decided to generate the data independently.

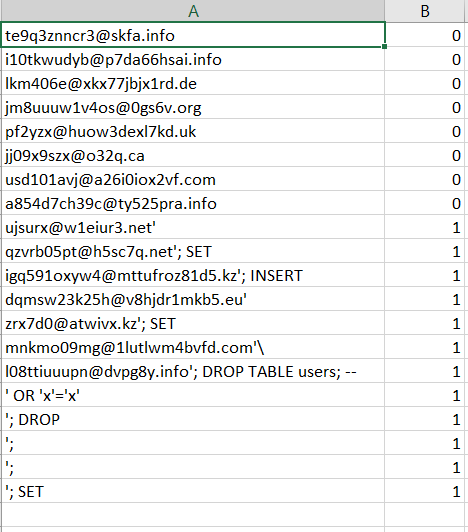

I wrote a script that generated random email addresses and SQL injections. As a result, in my csv file there were three types of data: random emails (20 thousand), random emails with SQL injection (20 thousand) and clean SQL injections (10 thousand). It looked like this:

Now the source data must be considered. The function returns sheet X, which contains features, sheet Y, which contains labels for each feature and sheet label_names, which simply contains textual definitions for labels, is needed for convenience when displaying the results.

Further, these data need to be broken into a training set and a test one. The cross_validation.train_test_split () function carefully written for us will help us with this. It will shuffle the records and return us four sets of data - two training and two test for features and labels.

Then we initialize the vectorizer object, which will read the data transferred to it by one character, combine them into N-grams and translate into numerical vectors, which is capable of perceiving the machine learning algorithm.

The next step is to initialize the pipeline and transfer to it the previously created vectorizer and the algorithm with which we want to analyze our data set. In this we will use the logistic regression algorithm.

The model is ready for data digestion. Now we simply transfer training sets of features and labels to our pipeline and the model starts learning. In the next line we skip the test set features through the pipeline, but now we use the predict to get the number of correctly guessed data.

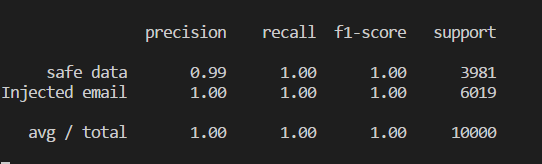

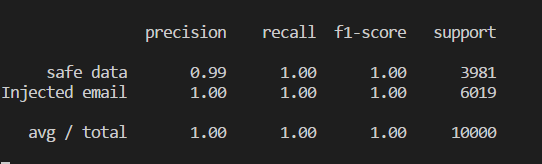

If you want to know how accurate the model is in predictions, you can compare the correct data and the test labels.

The accuracy of the model is determined by the value from 0 to 1, and can be converted to percentages. This model gives the correct answer in 100% of cases. Of course, using real data, this result is not so easy to achieve, and the task is quite simple.

The final final touch is to keep the model in a trained form so that it can be used without any re-training in any other python program. We serialize the model into a pickle file using the built-in Scikit-learn functions:

A small demonstration of how to use the serialized model in another program.

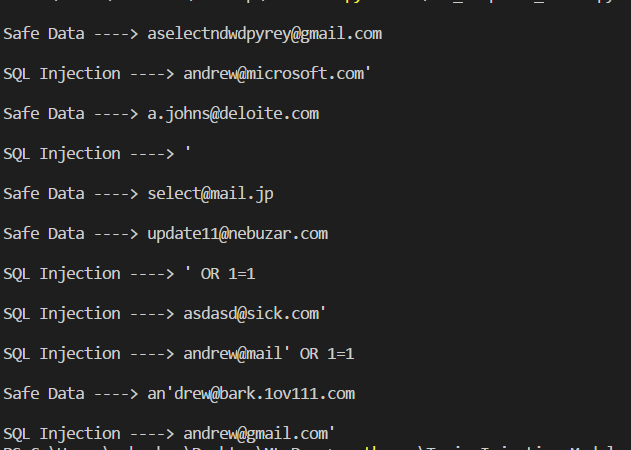

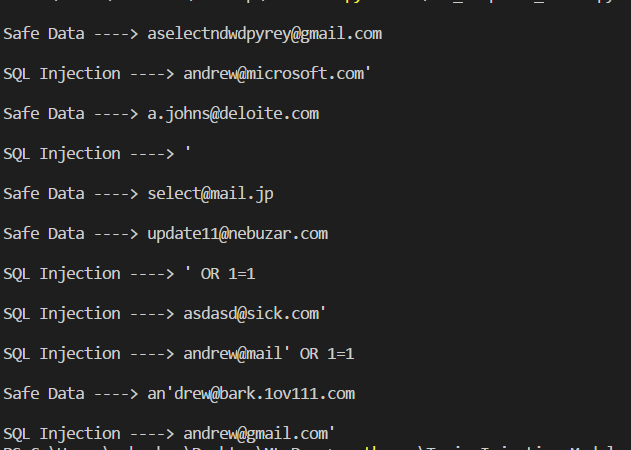

At the output we get the following result:

As you can see, the model confidently determines SQL injections.

As a result, we have a trained model for determining SQL injections, in theory, we can plug it into the server part, and in the case of injection detection, redirect all requests for a fake database to keep us from looking at other possible vulnerabilities. To demonstrate at the end of the week, I wrote a small REST API on Flask.

These were my first steps in machine learning. I hope that I can inspire those who, like me for a long time, looked at machine learning with interest, but were afraid to touch it.

I leave a list of useful resources that helped me with this project (almost all of them are in English)

Tensorflow for begginers

Scikit-Learn Tutorials

Building Language Detector via Scikit-Learn

I found several excellent articles on Medium including a series of eight articles that give a good idea of machine learning with simple examples. ( UPD: Russian translation of the same articles )

Preamble

I work as a web developer in a consulting company, and sometimes there comes a time when one project is already over, and the next one has not yet been appointed. Everyone who is on the bench, in order not just to sit down, must contribute to the company's intellectual property. As a rule, this is either the creation of training materials on a topic that the author owns, or the study of a new technology and the subsequent demonstration or presentation at the end of the week.

I decided, since there is such an opportunity, then try to touch the topic of Machine Learning, since it is stylish, fashionable and youthful. From previous knowledge in this topic, I had only a couple of presentations from the lead developer, which were more popularizing than informational.

I identified a specific problem to solve it using machine learning and started digging. I want to note that having the ultimate goal was easier to navigate the flow of information.

I decided, since there is such an opportunity, then try to touch the topic of Machine Learning, since it is stylish, fashionable and youthful. From previous knowledge in this topic, I had only a couple of presentations from the lead developer, which were more popularizing than informational.

I identified a specific problem to solve it using machine learning and started digging. I want to note that having the ultimate goal was easier to navigate the flow of information.

Stick a shovel

First of all, I went to the official website of TensorFlow and read ML for Beginners and TensorFlow for beginners . Materials in English.

')

TensorFlow is a Google team handicraft and the most popular machine learning library that supports Python, Java, C ++, Go, as well as the ability to use the computing power of a graphics card for computing complex neural networks.

In my search, I found another library for machine learning, Scikit-learn oriented to Python. Plus of this library, in a large number of algorithms for machine learning right out of the box, which was a definite plus in my case, since the presentation was on Friday, and I really wanted to demonstrate a working model.

In search of ready-made examples, I came across a tutorial on the definition of the language in which the text was written using Scikit-learn.

So, my task was to train the model to determine the presence of SQL injections in the text string. (Of course, you can solve this problem with regular expressions, but for educational purposes, you can

First of all, first thing dataset ...

The type of task that I am trying to solve is a classification, that is, the algorithm should, in response to fed data, give me which category this data belongs to.

The data in which the algorithm will look for patterns are called features .

The category to which a particular feature belongs is called a label . It is important to note that the input data may have several features, but only one label.

In the classic example of machine learning, identifying varieties of iris flowers along the length of pistils and stamens, each individual column with information about the size of this feature , and the last column, which means to which of the subspecies of iris the flower with such values is label

The way I will solve the classification problem is called supervised learning, or supervised learning. This means that in the process of learning, the algorithm will receive both features and labels.

Step number one in solving any problem using machine learning is the collection of data on which this machine will learn. In an ideal world, this should be real data, but, unfortunately, I could not find anything on the Internet that would satisfy me. It was decided to generate the data independently.

I wrote a script that generated random email addresses and SQL injections. As a result, in my csv file there were three types of data: random emails (20 thousand), random emails with SQL injection (20 thousand) and clean SQL injections (10 thousand). It looked like this:

Now the source data must be considered. The function returns sheet X, which contains features, sheet Y, which contains labels for each feature and sheet label_names, which simply contains textual definitions for labels, is needed for convenience when displaying the results.

import csv def get_dataset(): X = [] y = [] label_names = ["safe data","Injected email"] with open('trainingSet.csv') as csvfile: readCSV = csv.reader(csvfile, delimiter='\n') for row in readCSV: splitted = row[0].split(',') X.append(splitted[0]) y.append(splitted[1]) print("\n\nData set features {0}". format(len(X))) print("Data set labels {0}\n". format(len(y))) print(X) return X, y, label_names Further, these data need to be broken into a training set and a test one. The cross_validation.train_test_split () function carefully written for us will help us with this. It will shuffle the records and return us four sets of data - two training and two test for features and labels.

# Split the dataset on training and testing sets X_train, X_test, y_train, y_test = cross_validation.train_test_split(X,y,test_size=0.2,random_state=0) Then we initialize the vectorizer object, which will read the data transferred to it by one character, combine them into N-grams and translate into numerical vectors, which is capable of perceiving the machine learning algorithm.

#Setting up vectorizer that will convert dataset into vectors using n-gram vectorizer = feature_extraction.text.TfidfVectorizer(ngram_range=(1, 4), analyzer='char') We feed the data

The next step is to initialize the pipeline and transfer to it the previously created vectorizer and the algorithm with which we want to analyze our data set. In this we will use the logistic regression algorithm.

#Setting up pipeline to flow data though vectorizer to the liner model implementation pipe = pipeline.Pipeline([('vectorizer', vectorizer), ('clf', linear_model.LogisticRegression())]) The model is ready for data digestion. Now we simply transfer training sets of features and labels to our pipeline and the model starts learning. In the next line we skip the test set features through the pipeline, but now we use the predict to get the number of correctly guessed data.

#Pass training set of features and labels though pipe. pipe.fit(X_train, y_train) #Test model accuracy by running feature test set y_predicted = pipe.predict(X_test) If you want to know how accurate the model is in predictions, you can compare the correct data and the test labels.

print(metrics.classification_report(y_test, y_predicted,target_names=label_names)) The accuracy of the model is determined by the value from 0 to 1, and can be converted to percentages. This model gives the correct answer in 100% of cases. Of course, using real data, this result is not so easy to achieve, and the task is quite simple.

The final final touch is to keep the model in a trained form so that it can be used without any re-training in any other python program. We serialize the model into a pickle file using the built-in Scikit-learn functions:

#Save model into pickle. Built in serializing tool joblib.dump(pipe, 'injection_model.pkl') A small demonstration of how to use the serialized model in another program.

import numpy as np from sklearn.externals import joblib #Load classifier from the pickle file clf = joblib.load('injection_model.pkl') #Set of test data input_data = ["aselectndwdpyrey@gmail.com", "andrew@microsoft.com'", "a.johns@deloite.com", "'", "select@mail.jp", "update11@nebuzar.com", "' OR 1=1", "asdasd@sick.com'", "andrew@mail' OR 1=1", "an'drew@bark.1ov111.com", "andrew@gmail.com'"] predicted_attacks = clf.predict(input_data).astype(np.int) label_names = ["Safe Data", "SQL Injection"] for email, item in zip(input_data, predicted_attacks): print(u'\n{} ----> {}'.format(label_names[item], email)) At the output we get the following result:

As you can see, the model confidently determines SQL injections.

Conclusion

As a result, we have a trained model for determining SQL injections, in theory, we can plug it into the server part, and in the case of injection detection, redirect all requests for a fake database to keep us from looking at other possible vulnerabilities. To demonstrate at the end of the week, I wrote a small REST API on Flask.

These were my first steps in machine learning. I hope that I can inspire those who, like me for a long time, looked at machine learning with interest, but were afraid to touch it.

Full code

from sklearn import ensemble from sklearn import feature_extraction from sklearn import linear_model from sklearn import pipeline from sklearn import cross_validation from sklearn import metrics from sklearn.externals import joblib import load_data import pickle # Load the dataset from the csv file. Handled by load_data.py. Each email is split in characters and each one has label assigned X, y, label_names = load_data.get_dataset() # Split the dataset on training and testing sets X_train, X_test, y_train, y_test = cross_validation.train_test_split(X,y,test_size=0.2,random_state=0) #Setting up vectorizer that will convert dataset into vectors using n-gram vectorizer = feature_extraction.text.TfidfVectorizer(ngram_range=(1, 4), analyzer='char') #Setting up pipeline to flow data though vectorizer to the liner model implementation pipe = pipeline.Pipeline([('vectorizer', vectorizer), ('clf', linear_model.LogisticRegression())]) #Pass training set of features and labels though pipe. pipe.fit(X_train, y_train) #Test model accuracy by running feature test set y_predicted = pipe.predict(X_test) print(metrics.classification_report(y_test, y_predicted,target_names=label_names)) #Save model into pickle. Built in serializing tool joblib.dump(pipe, 'injection_model.pkl') Reference materials

I leave a list of useful resources that helped me with this project (almost all of them are in English)

Tensorflow for begginers

Scikit-Learn Tutorials

Building Language Detector via Scikit-Learn

I found several excellent articles on Medium including a series of eight articles that give a good idea of machine learning with simple examples. ( UPD: Russian translation of the same articles )

Source: https://habr.com/ru/post/350984/

All Articles