Algorithms in the industry: the theory of formal languages and chat bots

The popularity of dialogue systems is closely related to the term “artificial intelligence”. Such systems are usually based on neural networks and other machine learning models.

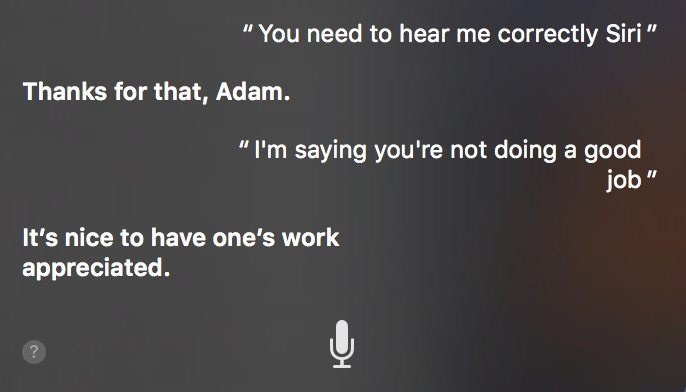

However, this approach creates unexpected difficulties.

Behavior can be better controlled by adding a bit of mathematical rigor to the system. Classic algorithms come to the rescue - the very ones that are told in olympiad schools and used in AFM.

And under the cut - palindromatic sandwiches, algorithmized waiters, a little theory of formal languages and a story about what we are all about.

Imagine yourself in a restaurant. The waiter comes to you, and the dialogue takes place:

- -? - , , , . - ? . - , . - , . 43.333333 . ! It sounds easy, right? Let's try to automate the waiter in this dialogue.

Current solutions

As already mentioned, most of the existing popular solutions for NLP are based on machine learning, and algorithmic methods are considered as outdated and inefficient. However, with the ML-methods, too, is not so simple:

- Model solutions are impossible (or very difficult) to explain, therefore, it is not clear how to debug problems.

- You can not be sure of the reliability of the solution, so you have to add huge black lists for offensive phrases

- Training requires large data samples that are difficult to collect at the initial stage.

- A more complex dialogue is required than reaction to intents and slot-filling

Speaking of the rule-based approach, there is a whole artificial intelligence markup language in which you can describe the rules by which phrases will be processed. The disadvantage of this framework is that there are only methods for extracting information from messages into the language, and it is not clear how to integrate this solution with external services.

There are also combined solutions. In particular, the interactive systems learning framework released recently by the iPavlov.ai laboratory supports combined scenarios — you can combine machine learning components and a Rule-based approach within a single chat bot. Below we give some more examples of the integration of machine learning into grammar.

System architecture

The solution we propose to consider consists of three main parts:

- Module of understanding

- Permanent memory

- Response generation module

It is worth mentioning that in addition to the NLP core, there is usually a voice engine in the interactive system. We will not consider the architecture of this component, but we can easily add a voice to our waiter.

At the spring school (about which a little later) we implement the whole hierarchy in C ++ using the example of several mini-projects, and even screw the chat bot to the telegram using the library of one of the students of our school.

Excursion into the theory

In fact, the theory of formal languages is a formalization of linguistics in mathematical language. The basic definitions are the alphabet and the word. The alphabet is just a multitude of characters. The final sequence of characters from the alphabet forms a word.

Symbols can be of one of two types: terminal and nonterminal *. The difference is that the final grammar sequence can consist only of terminals, and non-terminals perform a utilitarian function.

Finally, the most important definition. A grammar is a set of inference rules by which some words can be converted to others.

In our case, however, the formal definitions differ slightly from intuition. “Terminals” in our examples are the words of the Russian language. So, “words” are, in fact, replicas. Non-terminals will be denoted by words in <angle> brackets. Sounds hard? It will be worse.

Let's give an example. As it is now a block of theory, the example should not be very vital. Let's say you like to eat palindromatic sandwiches for breakfast. Waking up early in the morning, you decided to generate such a sandwich from C (cheese), X (bread), K (sausage). We will also need a pair of non-terminals <> and <> .

Examples of palindromatic sandwiches:

(XX) (XCX) X C C C (XCCC) * * The grammar is as follows:

<> -> <> X <> -> \perp | C | | C <> C | <> A vertical bar means that an arbitrary right-hand side is selected in this rule (the sequence after ->).

Memory

The simplest to implement is memory, just a set of variables combined into a structure. Memory is the only means to transfer information between the comprehension module and the generative system.

In addition to raw facts (in our example, the listed dishes), it is very convenient to record some internal state in memory, which in itself will describe the context of the dialogue. These states are combined into a so-called state machine (in fact, a deterministic finite automaton).

A state machine inside memory is in some way close to grammar, but serves slightly different purposes. In fact, such a machine can be defined by an initial set of states and a table of transitions from one state to another. A transition to another state occurs when the understanding module recognizes some progress in the dialogue.

In an algorithmic waiter, the following states are sufficient: $ START DIALOG $, $ REFINE TYPE $, $ CONFIRM ORDER $. In the above dialogue example, each replica of the waiter occurs in each of the mentioned states, respectively. We will describe the transition logic later.

Module of understanding

From the point of view of the theory of formal languages, this is the task of recognizing grammar.

Grammar example:

<> -> <> <> < > <> -> <> | <> <> | <> <> <!> -> | | <> -> | <> -> < > | <> <> < > -> <> | <> < > <> -> | | < > -> | <> -> | | < > -> <> | <> <> < > -> : <> This grammar is called context-free, since the non-terminals are located on the right-hand side and the rules can be applied regardless of the other part of the phrase. There is a narrower class of grammar - regular. It is on them that regular expressions are based.

Recalling the state machine, add transitions:

$ $, <> -> $ $ $ $, < > -> $ $ $ $, < > -> $ $ Thus, depending on the client's replica, you can go either to $ REFINE TYPE $, or immediately to $ CONFIRM ORDER $. In fact, you can add several right-hand parts to the rule. Then the automaton from deterministic will turn into non-deterministic, and the transition will need to be somehow chosen. You can choose randomly, you can use heuristics, and you can apply machine learning by solving the classification problem.

Analysis is carried out through LL (1) -parser . This is a relatively simple algorithm, with small modifications allowing to recognize the user's intention and select the most important facts from his replica.

Grammar parsing is the task of restoring the sequence of rules that were applied to the starting non-terminal in order to get a given phrase.

For each non-terminal, a function is created that will parse it. In fact, “disassemble non-terminal” means to determine by which rule it was disclosed. This is where we use the letter combination LL (1) - it means that only the first character from a phrase is enough to unequivocally restore the rule! Thus, the analysis of each non-terminal consists of two steps:

Select the rule that was used

Start recursively from all non-terminals on the right side of this rule.

In fact, there is a more general algorithm LL (k), which can parse other grammars.

But not everything is so simple:

It is necessary to understand the phrase differently depending on the current context. The visitor only wants to place an order or clarify? To determine this, we introduce a function from the memory that will issue the starting nonterminal: $ start (memory) $

In fact, it is not enough just to understand the correctness of the phrase. From the phrase you need to isolate the maximum amount of the most different information. Some non-terminals in the table do not have a single sequence in which they can be opened - simply because, for example, <REVIEW> can contain anything. Therefore, instead of traditional analysis, we simply write down everything that got into this non-terminal in the correct memory field.

Generation module

A little background. In Russia, in order to enter a university, you must pass the EGE, including in the Russian language. As you know, the exam is designed to formalize the system of testing knowledge of graduates of the school. One of the tasks in the exam is to write an essay on the text. And what do we do if there are formal requirements? We use a formal grammar !

Did you happen to admire the Matrix? Her genius ... Billions of people live a full life ... in a dream. You know, because the first Matrix was created as an ideal world, where there is no suffering, where all people will be happy. And a complete failure. People did not accept the program, all had to be destroyed. It is customary to think that it was not possible to describe the ideal world in a programming language, however, I believe that humanity as a species does not accept reality without suffering and poverty. That is, utopia is just a toy that your primitive mind condescended for the time being. Therefore, the Matrix has become so. Recreated the peak of your civilization. It was your civilization, because when the cars began to think for you, our civilization arose. So, in fact, there was a coup. Evolution, Morpheus, evolution. And people are dinosaurs. Look out the window. This is the sunset of humanity. We are already masters here, Morpheus. The future is ours.

Wachowski sisters

Each of us has to think about the world. "What is the meaning of peace in a person's life?" - This is exactly the problem the narrator raises in this text fragment.

Showing the importance of the concept of "world", the author uses the concepts of the matrix and suffering. Showing the importance of the concept of "world", the writer uses the concepts of language and programming. To answer the question, the narrator in the words "It is accepted to think that it was not possible to describe the ideal world in programming language, however, I believe that humanity as a form does not accept reality without suffering and poverty" writes about the world.

The position of the author in this passage is best expressed by the quote: "Evolution, Morpheus, evolution."

I mostly agree with the writer, in fact, only the world helps to remain a person.

There are many examples of peace in Russian literature. For example, in the novel Master and Margarita, who wrote M. A. Bulgakov, a hero named Yeshua Ha Notsri bravely recognizes his actions, thus showing his attitude to the world.

There are many examples of the world in literature. For example, in the novel The Hitchhiker's Guide to the Galaxy, which was written by Douglas Adams, a hero named Marvin is very fond, in despair of lying face down in the dust, thus showing his attitude to the world.

Thus, it is necessary to say: everything in life depends on the world. You must always remember the importance of this concept in our lives.

Details and other examples in the repository.

Let's go back to the algorithmization of the waiter.

Now the task is diametrically opposite: it is necessary to generate a suitable replica so that it is appropriate and not boring with stereotypes.

< > -> < > ? < 1> < 2>. <> -> <> <> <> <> -> | <> -> | There are the same improvements as with the module of understanding: the memory determines the starting non-terminal, and instead of some other non-terminals you can substitute the values recorded in the memory.

How should the generation algorithm be built? In fact, everything here is extremely intuitive: as the initial phrase, we take the starting non-terminal and begin to open the non-terminals according to a random rule from the grammar. The process continues until all non-terminals are opened.

You can continue to improve this algorithm: introduce probabilities with which a rule will be used, open chains in parallel, or even use reinforcement training methods. The key difference from the classical generative models (for example, based on LSTM or Transformer ) is that the generated phrase will always be in the grammar, which means it will be appropriate in this situation.

Conclusion

Approximately, the theory of algorithms (finite automata and formal grammars) can be applied to the seemingly non-trivial task of processing a language.

There is a strong algorithmic school in Russia: schoolchildren win at the International Computer Science Olympiad , students at the ACM ICPC finals , courses on algorithms at the best universities in the country contain extremely advanced material.

However, there is a significant problem in the established pedagogical tradition - the use of these algorithms is practically not considered. Asking the average winner of computer science in vigilance about why dynamic programming is necessary in life, it is difficult to get a clear answer. Although, for example, modeling of bioinformatics sequences can be effectively performed using Markov models.

We at GoTo decided to correct this situation a little and organize our own algorithmic direction. The focus is on the relationship of classical algorithms and applications that arise in real situations.

In winter, the “Algorithms and Applications” direction received a baptism of fire, and the direction will again be held at GoTo spring school . The updated program has already appeared on the site, and we are organizing a GoTo Algorithms Challenge Spring18 contest.

Competition is a virtual competition. You will have 5 hours to solve 8 problems. Participants are sorted by total points for tasks.

Results will be announced on March 21. Winners and prize-winners will receive grants and discounts for studies at GoTo spring school.

. : 25 . !

')

Source: https://habr.com/ru/post/350926/

All Articles