FastTrack Training. "Network Basics". "Basics of data centers." Part 2. Eddie Martin. December 2012

About a year ago, I came across a fascinating series of lectures by Eddie Martin. Thanks to his stories, real-life examples and vast teaching experience, the lectures are remarkably easy to follow and help you understand some fairly complex technologies.

We continue the series of 27 articles based on his lectures:


01/02: "Understanding the OSI Model" Part 1 / Part 2

03: "Understanding the Cisco Architecture"

04/05: "The Basics of Switching or Switches" Part 1 / Part 2

06: "Switches from Cisco"

07: "Area of use of network switches, the value of Cisco switches"

08/09: "Basics of a Wireless LAN" Part 1 / Part 2

10: "Products in the field of wireless LAN"

11: "The Value of Cisco Wireless LANs"

12: "Routing Basics"

13: "The structure of routers, routing platforms from Cisco"

14: "The Value of Cisco Routers"

15/16: "The Basics of Data Centers" Part 1 / Part 2

17: "Equipment for data centers"

18: "The Value of Cisco in Data Centers"

19/20/21: "The Basics of Telephony" Part 1 / Part 2 / Part 3

22: "Cisco Collaboration Software"

23: "The Value of Collaboration Products from Cisco"

24: "The Basics of Security"

25: "Cisco Security Software"

26: "The Value of Cisco Security Products"

27: "Understanding Cisco Architectural Games (Review)"

And here is the sixteenth of them.

What do you think of Cisco as a company? Business analysts classify us as a "mid-teens" company, that is, one growing at about 15% a year. Cisco used to grow 40% a year. I remember the National Trade Congress where that growth was announced; it came as a shock to many of those present. Such growth was easy to sustain while we were a small company, but the bigger we get, the harder it becomes. We have captured 50% of the switch and router market, and that segment simply cannot grow as fast as we need. So we have to stimulate growth with new products and create market demand for them.

Let me draw a vertical axis for revenue and a horizontal axis for market share. As the market leader, we are right here at the top.

However, switch sales grow by no more than 12% a year, and we cannot change our position in that segment quickly. So we have to strengthen our position in another area: advanced technologies! I will mark them on the graph as AT. For Cisco, this means billions of dollars.

What belongs here? Wireless LANs (WLAN) bring in 10 to 14% of our annual revenue of 40 billion dollars, but it is hard to sustain growth on them alone. We can grow faster than the norm only when the market itself is growing, and if it is not... Next come security (information security) and then video. All of these support our upper "ball", our market position, but their volume cannot grow indefinitely.

Therefore, we must launch a new "ball": the MDS 9500.

But the ball could not be launched until we had created a new product. To do that, we went into SANs (storage area networks).

We invested 60 to 80 million dollars in them and created a market worth about 400 million dollars in sales. We keep bringing new technologies to market, and today data centers are what helps us maintain our leading position. We come out with a new product almost every month: the Nexus 9000, Nexus 5000, Nexus 2000, or even the Nexus 1000. Some of them are not even switches but software. This is the "ball" that grows our business.

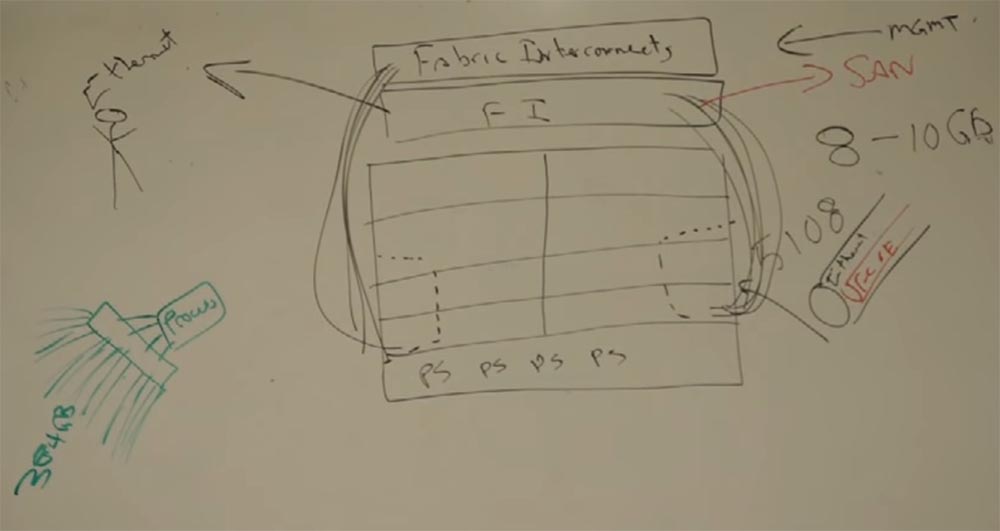

Now we are talking about capturing another market, one that opens up other opportunities for us: a different type of server, the blade server. How does it differ from a standalone or virtualized server? A typical blade chassis has two power supplies (PS) for redundancy, a management module (MGMT) for managing the servers inside, and an Ethernet switch module; Cisco essentially sold Catalyst switch ports to IBM, HP, Dell and everyone else so that they could build this solution. There is also a host bus adapter (HBA) module, and then the server blades themselves, anywhere from 1 to 12 of them. The value of all this is that you get more servers per rack unit occupied in the cabinet than with standalone servers. Each blade contains a processor and RAM, while sharing a common power supply and a common Ethernet module with the others. That is what a chassis is.

Everything was great until virtualization arrived, that is, VMware, which we talked about in a previous lecture. It turned out that blade servers have one major drawback: a shortage of RAM. Suppose each processor has 4 cores and one blade, with a maximum of 96 GB of memory, runs a single application. That is enough for one application. But with virtualization, one application needs 48 GB, another 24 GB and a third 32 GB. That's it, the math doesn't add up: 48 + 24 + 32 = 104 GB, which does not fit into our 96.
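The "math doesn't add up" step can be checked with a tiny sketch. It assumes, exactly as in the example, a blade with a fixed 96 GB ceiling and three virtualized applications needing 48, 24 and 32 GB; the function name `fits_on_blade` is purely illustrative.

```python
# Memory demands of the three virtualized applications from the example, in GB.
VM_MEMORY_GB = [48, 24, 32]

# Maximum memory of one conventional blade in the example, in GB.
BLADE_CAPACITY_GB = 96


def fits_on_blade(demands_gb, capacity_gb):
    """Return (fits, total): whether the combined demand fits, and the total demand."""
    total = sum(demands_gb)
    return total <= capacity_gb, total


fits, total = fits_on_blade(VM_MEMORY_GB, BLADE_CAPACITY_GB)
print(f"total demand {total} GB vs capacity {BLADE_CAPACITY_GB} GB -> fits: {fits}")
# prints: total demand 104 GB vs capacity 96 GB -> fits: False
```

The check ignores hypervisor overhead, which would only make the shortfall worse.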

Cisco said: "This is our opportunity! We will start from scratch and build a blade server designed specifically for virtualization!" And we did: we created the B-Series chassis, which let us enter a market worth 37 billion dollars a year. It is important for us to add this new "ball", UCS. If in a few years we hold a 10% share of that market, it will already be 3.7 billion dollars.

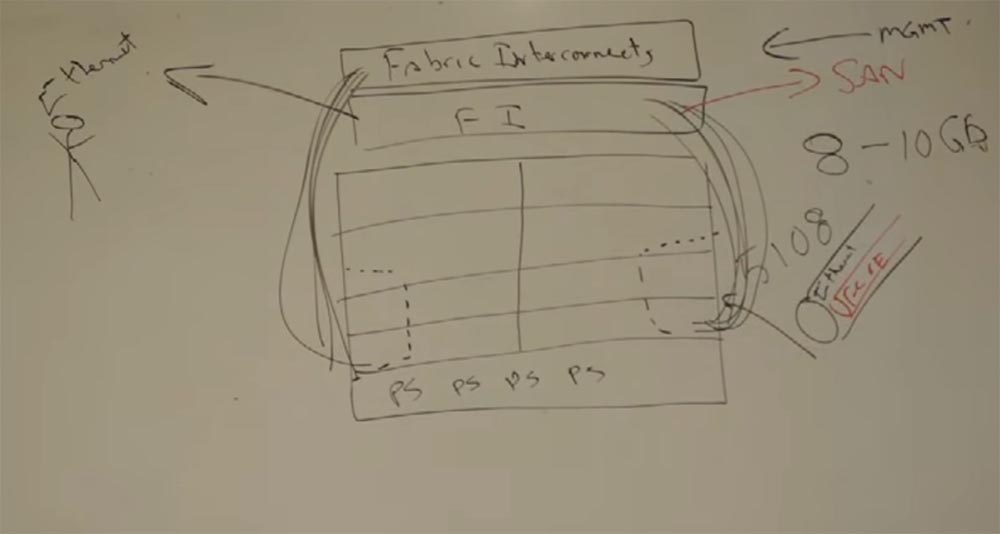

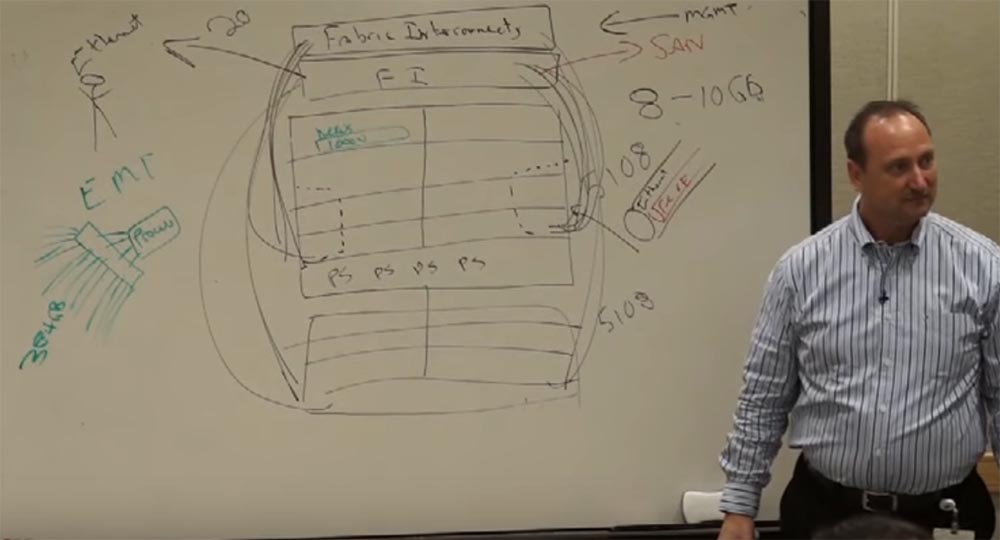

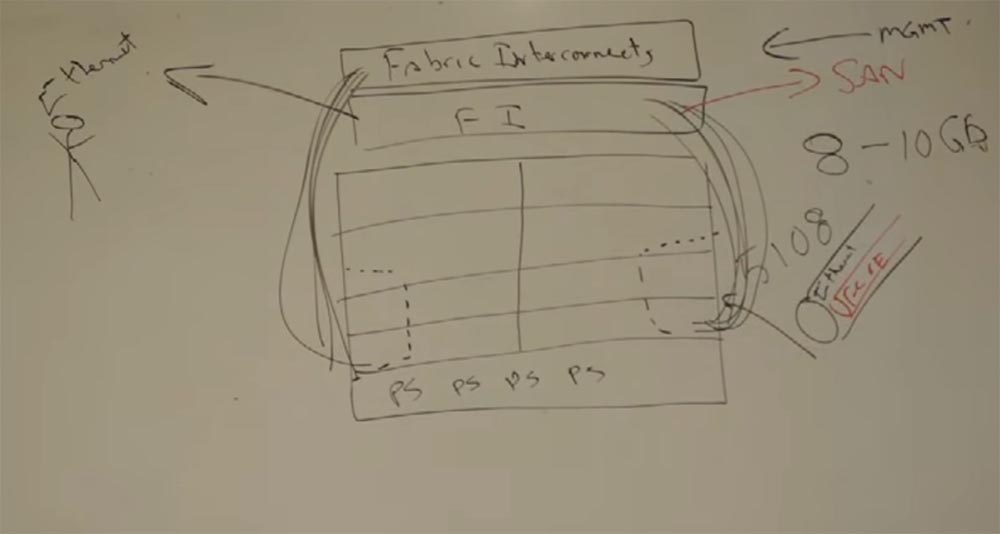

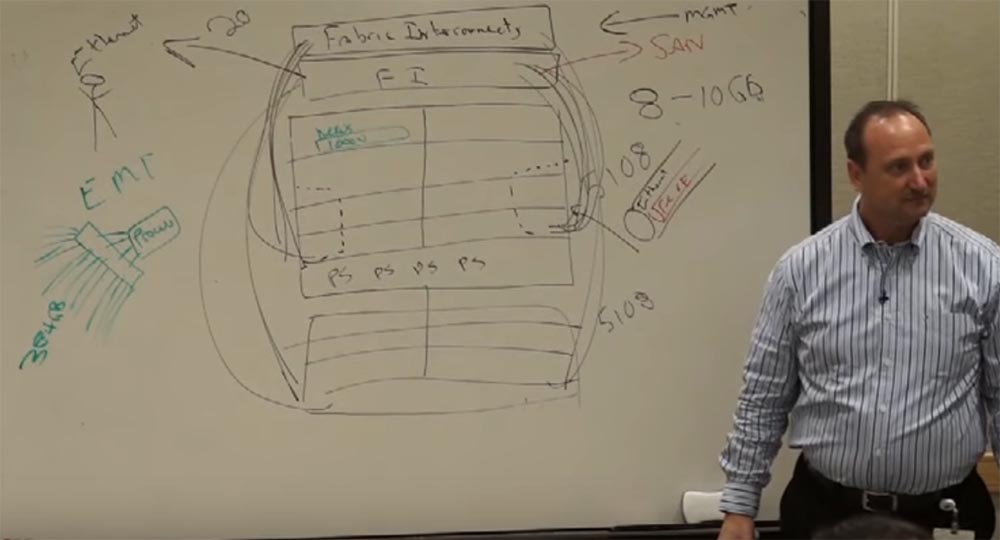

Now let me show you in detail what we built. By the way, far more of it was developed right here on our campus than you might imagine. So, we need a lot of RAM in our blades. Think about it: a typical chassis has one management module for 12 servers. If we can do better than that, so much the better. Cisco entered the market with the 5108, a large metal chassis, essentially an enclosure full of connectors. Inside it we placed 8 servers, which is why the model is called 5108: a maximum of 8 half-width slots. Below them we put several power supplies; I will draw 4. The most important elements we placed outside the chassis: two Fabric Interconnects (FI), to which the chassis connects over fiber. Inside them runs the management platform (MGMT), the system's own management software. Behind the servers are the ports that connect to the FIs: 16 ports of 10 gigabits each, which gives 160 Gbps of connectivity per chassis. Each of these links carried Ethernet, needed for traffic to external users, as well as Fibre Channel over Ethernet (FCoE), which went to the Fabric Interconnects hosting the management platform and from there to the SAN, the storage network. So we used a unified connection: a single cable carried all of it.
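A quick back-of-the-envelope check of the chassis numbers: 16 uplinks of 10 Gbps shared by 8 half-width blades. The even per-blade split is our own illustration, assuming all links are active and traffic is spread evenly; it is not a Cisco specification.

```python
UPLINKS_PER_CHASSIS = 16  # ports toward the Fabric Interconnects, per the lecture
GBPS_PER_UPLINK = 10      # each port runs at 10 Gbps
BLADES_PER_CHASSIS = 8    # half-width slots in the 5108


def chassis_bandwidth_gbps(uplinks, gbps_per_uplink):
    """Aggregate uplink bandwidth of one chassis."""
    return uplinks * gbps_per_uplink


def even_share_per_blade_gbps(total_gbps, blades):
    """Per-blade share, assuming (unrealistically) perfectly even traffic."""
    return total_gbps / blades


total = chassis_bandwidth_gbps(UPLINKS_PER_CHASSIS, GBPS_PER_UPLINK)
per_blade = even_share_per_blade_gbps(total, BLADES_PER_CHASSIS)
print(total, per_blade)  # 160 20.0
```

Because Ethernet and FCoE share these same links, the per-blade figure covers both LAN and storage traffic.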

As I said, the problem with blade servers was the lack of memory when running many workloads at once. Cisco wondered whether a new ASIC (a custom integrated circuit) could solve this problem and approached Intel about it.

How does the processor access memory? Through its DIMM slots. Cisco wondered how to "outsmart" this arrangement. What do I mean by "outsmart"?

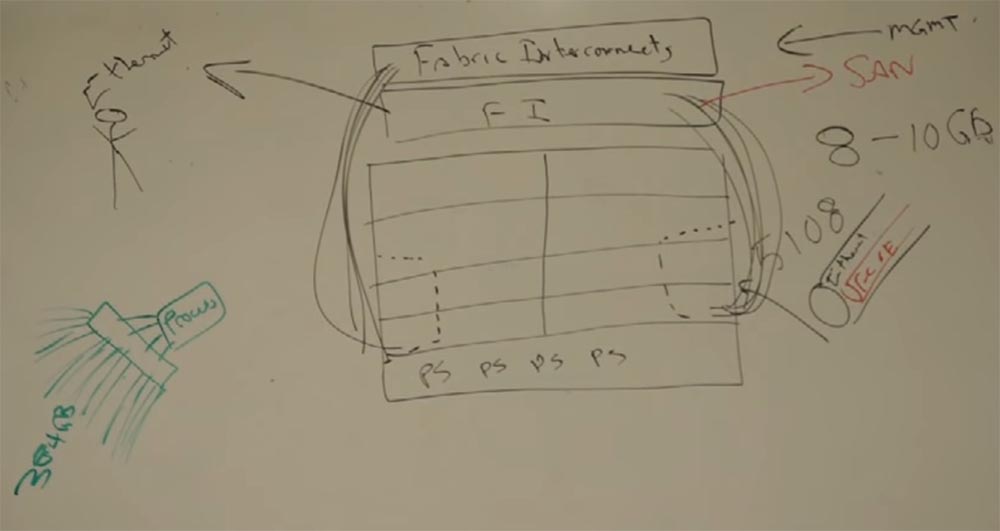

What happens if we put an ASIC between the processor and the memory, so that the processor still sees 4 connections while 16 actually attach to the chip? By integrating this chip we can give the processor 384 GB of memory! That is, instead of 96 GB, the processor can address four times as much. Intel said that was great and that they would support our chips.

This is called EMT (Extended Memory Technology). It lets you virtualize more applications on our blades. It was a short-term win, but customers quickly saw the benefits of this solution and started working with us. At the very least, they started coming to us.
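The EMT gain boils down to a fan-out ratio: the processor sees 4 connections, but 16 attach behind the ASIC, so each visible connection stands for 4 physical ones. The sketch below models only that ratio. It assumes every DIMM has the same size, so capacity scales linearly with the number of DIMMs; the function name is hypothetical, and this is not a description of how the Cisco ASIC works internally.

```python
BASELINE_GB = 96           # memory the processor could address without the ASIC
VISIBLE_CONNECTIONS = 4    # what the processor "sees"
PHYSICAL_CONNECTIONS = 16  # what actually attaches behind the ASIC


def expanded_memory_gb(baseline_gb, visible, physical):
    """Scale baseline capacity by the physical-to-visible fan-out ratio.

    Assumes identical DIMM sizes, so capacity grows linearly with DIMM count.
    """
    if physical % visible != 0:
        raise ValueError("physical connections must be a multiple of visible ones")
    fan_out = physical // visible
    return baseline_gb * fan_out


capacity = expanded_memory_gb(BASELINE_GB, VISIBLE_CONNECTIONS, PHYSICAL_CONNECTIONS)
print(capacity)                  # 384
print(48 + 24 + 32 <= capacity)  # True: the three applications from the earlier example fit
```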

But we had other advantages that I think were even more attractive. Remember I told you that we worked with VMware; we actually owned part of the company and saw their technologies in their labs. If I put many servers in our chassis, I need many network cards and many VLANs, and rather than installing a whole pile of hardware, it is better to use a software solution that can provide QoS and everything else. VMware could have built a full-featured software switch at the hypervisor level to handle the data exchange. But VMware decided it was too difficult for them, and Cisco said: "Give it to us. We are switching specialists; we know how to do it!"

We put software into our servers that lets us create virtual devices inside the chassis, and called it the Nexus 1000V software switch. Then we decided that if the management module can manage one chassis, it can manage several. We placed another chassis with the same contents below the first and connected it to the same MGMT. In this way you can manage twenty chassis at once!

Multiply 20 by 8 and you get 160 servers, versus the 12 servers that fit in a conventional blade chassis. Customers liked this too: fewer management interfaces, simpler management.
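The "fewer management interfaces" point can be made concrete. This sketch uses the lecture's figures (20 chassis of 8 servers under one management platform, versus 12-server conventional chassis) and assumes, as described earlier, that each conventional chassis carries its own management module; the function names are illustrative.

```python
import math

UCS_CHASSIS_PER_DOMAIN = 20      # chassis one management platform controls (lecture figure)
SERVERS_PER_UCS_CHASSIS = 8      # half-width blades in a 5108
SERVERS_PER_LEGACY_CHASSIS = 12  # typical conventional blade chassis from the lecture


def servers_per_domain(chassis, servers_per_chassis):
    """Servers reachable from a single management platform."""
    return chassis * servers_per_chassis


def legacy_management_modules(total_servers, servers_per_chassis):
    """Conventional chassis (each with its own management module) needed for the same servers."""
    return math.ceil(total_servers / servers_per_chassis)


total = servers_per_domain(UCS_CHASSIS_PER_DOMAIN, SERVERS_PER_UCS_CHASSIS)
print(total)                                                         # 160
print(legacy_management_modules(total, SERVERS_PER_LEGACY_CHASSIS))  # 14
```

In other words, one management point replaces roughly fourteen separate ones for the same number of servers.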

Next, Cisco thought: "If you want to lead on the dance floor and be the best dancer, you should not let other dancers take over your space!" Do you know why VMware was among the leaders in building data centers? Because they knew customers' needs better than we did: all their applications, the powerful networks those applications require, and so on. In effect, we had become their contractors, since we were building our devices around their needs.

To remedy the situation, Cisco decided to build the Server Profile into the management platform (MGMT) inside our control device. It lets us manage servers and has 3 distinct sections. The first belongs to the customer's IT (network) team: MAC addresses, IP addresses, VLANs, everything required for network applications to run. That is, everything the customer's IT team needs is in this software. The second covers everything used by the SAN team, and the third covers the customer's applications, that is, the APP team.

So our software covers 62 different characteristics and 3 independent management platforms, all in a single software module called the Server Profile. We can apply this profile to a server wherever it is needed. Customers liked that too!

This means that if a virtual machine is running on a server in one of our chassis, I can move it to any of the 20 chassis whenever and however I want! And who gave us this idea? VMware! They pushed us into it.
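To illustrate why a profile makes servers movable, here is a minimal, hypothetical data model: the identity of a server (network, SAN and application settings, one section per team) lives in a profile object, and a physical blade is just a slot that the profile is applied to. All class names, fields and the WWPN-style SAN identifier are our own assumptions for illustration, not the actual Cisco object model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NetworkSection:
    """Settings owned by the IT/network team (illustrative fields)."""
    mac_address: str
    ip_address: str
    vlans: List[int]


@dataclass
class StorageSection:
    """Settings owned by the SAN team; the WWPN field is a hypothetical example."""
    wwpn: str
    boot_lun: int


@dataclass
class AppSection:
    """Settings owned by the application team (illustrative fields)."""
    name: str
    cpu_cores: int
    memory_gb: int


@dataclass
class ServerProfile:
    """Hypothetical model: one object bundling the three team-owned sections."""
    name: str
    network: NetworkSection
    storage: StorageSection
    app: AppSection


@dataclass
class Blade:
    """A physical slot; its identity comes only from the profile applied to it."""
    chassis: int
    slot: int
    profile: Optional[ServerProfile] = None


def move_profile(profile: ServerProfile, source: Blade, target: Blade) -> None:
    """Detach the profile from one blade and apply it to another.

    MAC, IP and VLANs travel with the profile, so the network sees the
    same server even though the underlying hardware changed.
    """
    if source.profile is not profile:
        raise ValueError("profile is not applied to the source blade")
    if target.profile is not None:
        raise ValueError("target blade already has a profile")
    source.profile = None
    target.profile = profile


# Usage: move a server identity from chassis 1 to chassis 7.
web = ServerProfile(
    name="web-01",
    network=NetworkSection("00:25:b5:00:00:01", "10.10.10.6", [10, 20]),
    storage=StorageSection("20:00:00:25:b5:00:00:01", 0),
    app=AppSection("web", cpu_cores=2, memory_gb=24),
)
a = Blade(chassis=1, slot=3, profile=web)
b = Blade(chassis=7, slot=1)
move_profile(web, a, b)
print(b.profile.network.ip_address)  # 10.10.10.6
print(a.profile)                     # None
```

Keeping each team's settings in its own section mirrors the three-section split described above, so each team could own its part without touching the others.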

Imagine that every day from midnight to 6 am we run an application on a server using 2 processor cores and 24 GB of memory. But later, around 8 am, many users arrive at work and start using the application, server load rises sharply, and it runs short of processor power and memory. What happens then? The management platform moves the application to another server that currently has 8 cores and 64 GB of memory free. Of course, our customers loved it!
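The 8 am decision can be sketched as a simple placement search: find a server with enough free cores and memory for the application's new demand. The first-fit policy, the server names and the daytime demand of 6 cores and 48 GB are hypothetical; the lecture does not describe the actual algorithm the management platform uses.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    name: str
    free_cores: int
    free_memory_gb: int


def pick_target(servers: List[Server], need_cores: int, need_memory_gb: int) -> Optional[Server]:
    """Return the first server with enough free cores and memory, or None (first-fit)."""
    for server in servers:
        if server.free_cores >= need_cores and server.free_memory_gb >= need_memory_gb:
            return server
    return None


# Candidate servers other than the one the application ran on overnight.
pool = [
    Server("blade-2", free_cores=4, free_memory_gb=32),
    Server("blade-3", free_cores=8, free_memory_gb=64),  # the server from the example
]

# Hypothetical daytime demand that no longer fits in 2 cores and 24 GB.
target = pick_target(pool, need_cores=6, need_memory_gb=48)
print(target.name if target else "no capacity")  # blade-3
```

First-fit is the simplest choice; a real scheduler might instead prefer the tightest fit or balance load across chassis.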

What makes Cisco's solution unique is that we built it around VMware: we combined hardware and software in the best possible way and embodied it all in one integrated design. And this solution, called the Management Interface, belongs to our company.

So if some company in town, even one that is not among our regular customers, needs additional computing power for its applications, we can provide it over our network within seconds.

If another company, HP for example, wants to use our facilities but has different connectors, adapters and so on, we can simply reprogram the server profile for their software.

Here is how I explain our advantages to customers: "We offer an innovative approach to hardware and software that frees you from having to keep adding physical server capacity, and we help you save significantly on building out your network."

It is the same situation as at the dawn of IP telephony. Nortel and Avaya had every opportunity to develop it before Cisco got involved, so why didn't they? Because to capture that market, they would have had to drag all their traditional equipment along with them.

How did Cisco take over this market? In 1999 it created software that let the technology be used in a completely new way! They built IP telephony on the old physical architecture, while we built our project for new, virtual solutions. We changed the rules of the game and wiped the slide clean of the old, bulky, hardware-centric architecture. Think about it: which side of a sheet of paper is easier to draw on, the one already covered in text or the blank back? Our innovations did not overlap in any way with those of HP or other market players; we followed our own path.

And we keep going. Don't think we have finished developing EMT: we spent 6 to 8 months on it and are still working on it! Whom would you rather turn to in the market, someone who just squeezes money out of you, or someone who offers you innovation? We enter a market once our innovations have matured.

But Cisco wants to do more! Remember the Nexus? Let's go back to our networks. What does a customer with several data centers do? He wants to join them together to make them easier to use. Suppose one data center, where the applications run, is in Los Angeles, and another, with more equipment, is in Phoenix, Arizona. We want to move the server with IP address 10.10.10.6 from Los Angeles to Phoenix so that it keeps the same address, 10.10.10.6. Why? Because next to it in the first data center is server 10.10.10.7, which talks to the applications on 10.10.10.6 over the internal network, and we do not want to break that connection. How did Cisco solve this problem?

We connected these two servers to a Nexus 2000 series switch, that switch to a 5000 series switch, and that in turn to a 7000 series switch. We created a completely new technology called OTV (Overlay Transport Virtualization), a network overlay technology for data centers. It extends the network so that we can move server 10.10.10.6 to another data center. When that server wants to reach 10.10.10.7, it sends out a broadcast; its traffic is encapsulated and carried across the network to Los Angeles in such a way that 10.10.10.7 thinks its neighbor 10.10.10.6 is still right next to it!

In this way we can join 16 data centers so that they look like one, and all the equipment inside "thinks" it is in a single place rather than in different locations.
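A toy model of the OTV idea: an edge device at each site keeps a table mapping MAC addresses to the site where they live. A frame for a local MAC is delivered locally; a frame for a remote MAC is wrapped, unchanged, inside an IP packet addressed to the remote site's edge device. This is a deliberate simplification that handles unicast only: real OTV learns remote MACs through a control-plane protocol and treats broadcast and unknown traffic specially. The class names, MAC stand-ins and edge IPs (from the documentation ranges) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass(frozen=True)
class Frame:
    """A simplified Layer 2 frame."""
    src_mac: str
    dst_mac: str
    payload: str


@dataclass(frozen=True)
class OverlayPacket:
    """A Layer 2 frame carried inside an IP packet between sites."""
    outer_src_ip: str
    outer_dst_ip: str
    inner: Frame


class EdgeDevice:
    """Toy overlay edge device for one site (hypothetical, not real OTV)."""

    def __init__(self, site: str, edge_ip: str):
        self.site = site
        self.edge_ip = edge_ip
        # MAC address -> edge IP of the site where that MAC lives.
        self.mac_table: Dict[str, str] = {}

    def learn(self, mac: str, edge_ip: str) -> None:
        self.mac_table[mac] = edge_ip

    def forward(self, frame: Frame) -> Tuple[str, Union[Frame, OverlayPacket]]:
        """Deliver locally, or encapsulate toward the remote site's edge device."""
        where = self.mac_table.get(frame.dst_mac)
        if where is None:
            raise LookupError(f"unknown destination {frame.dst_mac}")
        if where == self.edge_ip:
            return ("local", frame)
        return ("overlay", OverlayPacket(self.edge_ip, where, frame))


LA_EDGE, PHX_EDGE = "192.0.2.1", "198.51.100.1"          # documentation addresses
MAC_6, MAC_7 = "00:00:00:00:00:06", "00:00:00:00:00:07"  # stand-ins for 10.10.10.6 / .7

phoenix = EdgeDevice("Phoenix", PHX_EDGE)
phoenix.learn(MAC_6, PHX_EDGE)  # 10.10.10.6 now runs in Phoenix
phoenix.learn(MAC_7, LA_EDGE)   # 10.10.10.7 stayed in Los Angeles

kind, packet = phoenix.forward(Frame(MAC_6, MAC_7, "hello from 10.10.10.6"))
print(kind, packet.outer_dst_ip)      # overlay 192.0.2.1
print(packet.inner.src_mac == MAC_6)  # True
```

At the Los Angeles edge the outer header would be removed and the original frame delivered to 10.10.10.7 untouched, which is why the server still appears to be on the same segment.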

Imagine how much customers like this idea! We simply place storage arrays in different locations and send data between them. When else might customers need this?

Remember Hurricane Sandy, which hit New Jersey. You would have wanted your data center in the middle of the country, away from the coast, with all its IP addresses and so on. And we can move it from New Jersey to wherever you need it. I am not going to wait for the hurricane to destroy my data center: I know the forecast, so I move half of my capacity to a safe place and watch how the situation develops. If it gets worse, I ask you to move the other half as well.

We can use exactly the share of capacity needed to handle a given set of applications; the Server Profile takes care of that. I don't know the details of how it works, that is outside my expertise, so ask your PSS for specifics. As Clint Eastwood said, "A man's got to know his limitations." Cisco spent a lot of money on this technology in a short time, since it developed rapidly, and we brought it to market in 2009. As a result, we have the B-Series chassis and the C-Series servers. Let's look at what the C-Series servers are.

It is a standalone rack server built for virtualization. Can we manage it through the Fabric Interconnect as well? Yes, just as we do with the blade servers. This way we can manage virtual servers on both standalone servers and blades, all from one interface. Let me draw several blade chassis with fiber connections, and several racks of servers connected to each other through the fabric. So we have several server racks managed by a fabric that can handle up to 20 chassis, which gives high server density. The fabric extender used here is the FEX (Fabric Extender) 2200.

I have already shown you a chassis that holds network line cards and supervisors. Our chassis, as before, holds 8 servers. So in the first group of racks we install a FEX 2200 series fabric extender to connect our server blades. Next we built a rack of standalone servers and added a FEX 2200 to it as well. This is called ToR, top-of-rack switching, and there are many of these switches. In effect, they are just like line-card "blades" that need a supervisor.

Next we install another cabinet with servers and a top-of-rack FEX 2200. To provide management for all three cabinets, we put a fourth cabinet next to them containing a Nexus 5500, which acts as the supervisor. We connect the three existing cabinets of servers and switches to it. This is our brain.

The 5500 can manage 16 such cabinets. If we need more, the cabinets, grouped in fours, are connected to a more powerful supervisor, the Nexus 7000 series, which can manage 20 or 24 such groups. This lets a single intelligent device manage a very large number of connections. The 5500 itself connects to the MDS over Fibre Channel (FCP) links, which further expands our capabilities. At the top I draw the Nexus 7000 series switches; we can now run 40 Gbps Ethernet up to them. The 2200 series switches are not intelligent on their own, so they must be controlled by an intelligent device. In this way we can provide our servers with whatever bandwidth they need.
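Using the lecture's figure that one Nexus 5500 can manage 16 FEX-equipped cabinets, a small sketch can estimate how many parent switches a given number of cabinets needs. The one-FEX-per-cabinet assumption and the function name are ours; real limits depend on the model and software release, so check current Cisco documentation before relying on them.

```python
import math

FEX_PER_PARENT = 16  # cabinets one Nexus 5500 manages, per the lecture


def parents_needed(fex_cabinets: int, fex_per_parent: int = FEX_PER_PARENT) -> int:
    """Minimum number of parent switches so every FEX cabinet has a supervisor.

    Assumes one FEX per cabinet; the FEX itself makes no forwarding decisions.
    """
    if fex_cabinets < 0:
        raise ValueError("cabinet count must be non-negative")
    return math.ceil(fex_cabinets / fex_per_parent)


for cabinets in (3, 16, 17, 40):
    print(cabinets, parents_needed(cabinets))
# 3 1
# 16 1
# 17 2
# 40 3
```

Once more than one parent is needed, the lecture's next step applies: the parents themselves are aggregated under a Nexus 7000.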

If you want to build a traditional SAN, you have to install Fibre Channel switches in the second and third cabinets, since that traffic will not run over Ethernet.

Cisco . «» , VCE, Cisco, EMC VMware. EMC - «», , -, . VBlock. NetApp, FlexPod. FlexPod — -, .

, . , . , . . HCS (Hosting Colaboration Solutions) – , , AT&T, IBM, British Telecom.

7000. , : F , M . Nexus , 2200. 2002 , , MDS 9500 , №1 «» . iOS , Linux. , . «» , . , Nexus , BGP, , . Linux . , . — . iOS Linux, Catalyst 6500 4500 Linux.

iOS Linux, . , , 1990-. . - GUI, , , . Linux , , BGP, . Basic COBOL , Nexus – . , 10 Nexus , Catalyst 6500, , Nexus 7000 c , Catalyst 6500. , Linux, , , .

Continued:

FastTrack Training. "Network Basics". "Equipment for data centers." Eddie Martin. December 2012

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr users on a unique analogue of the entry-level servers that we invented for you: The Truth About VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?

We continue the cycle of 27 articles based on his lectures:

')

01/02: “Understanding the OSI Model” Part 1 / Part 2

03: "Understanding the Cisco Architecture"

04/05: “The Basics of Switching or Switches” Part 1 / Part 2

06: "Switches from Cisco"

07: "Area of use of network switches, the value of Cisco switches"

08/09: "Basics of a Wireless LAN" Part 1 / Part 2

10: "Products in the field of wireless LAN"

11: The Value of Cisco Wireless LANs

12: Routing Basics

13: "The structure of routers, routing platforms from Cisco"

14: The Value of Cisco Routers

15/16: “The Basics of Data Centers” Part 1 / Part 2

17: "Equipment for data centers"

18: "The Value of Cisco in Data Centers"

19/20/21: "The Basics of Telephony" Part 1 / Part 2 / Part 3

22: "Cisco Collaboration Software"

23: The Value of Collaboration Products from Cisco

24: "The Basics of Security"

25: "Cisco Security Software"

26: "The Value of Cisco Security Products"

27: "Understanding Cisco Architectural Games (Review)"

And here is the sixteenth of them.

FastTrack Training. "Network Basics". "Basics of data centers." Part 2. Eddie Martin. December 2012

What do you think of Cisco as a company? According to business analytics, our company belongs to mid-teens - enterprises with an increase of about 15%. Previously, Cisco grew annually by 40%. I remember the National Trade Congress, where such growth was announced, and it came as a shock to many of those present. However, it was easy to maintain such growth when we were a small company. But the more we become, the more difficult it is to do. We captured 50% of the switches and routers market, and then this segment of the market cannot grow as fast as we need. So, we must stimulate its growth with new products, shape the market needs for our new products.

I will draw a vertical axis — income, and a horizontal axis — market share. We, as leading market players, are right here at the top.

However, sales of switches do not grow by more than 12% per year. We cannot change our place in the sale sector quite rapidly. So, we must raise our positions in another area, the field of advanced technologies! I will mark them on the graph in terms of AT. This for Cisco means billions of dollars.

What is included here? Wireless networks WLAN, they provide us with 10-12-14% of the annual turnover of 40 billion dollars, but only at the expense of them to ensure growth is difficult. We can grow above the norm only when the market is growing, but if the market is not growing ... Next comes security, or information security, then video. All these things support our upper “ball”, our position in the market, but the volume of their implementations cannot be infinite.

Therefore, we must launch a new "ball". It is MDS 9500.

But until we created a new product, our ball was not launched. To do this, we will create a SAN - Storage Area Networks, a storage area network.

We invested in them 60-80 million dollars, and they created a sales market with a volume of about 400 million dollars. We are launching new technologies to the market, and now data centers are what helps maintain our leading position. We come up with a new product almost every month - the Nexus 9000, Nexus 5000, Nexus 2000, or even the Nexus 1000. And some of them are not even switches, but software. This is the “ball” that increases our business.

We are talking about capturing another market that will create other opportunities for us. These will be other types of servers for networks - BLADE-servers. How do they differ from independent or virtualized servers? A typical blade server chassis has 2 PS power supplies for redundancy, an MGMT server management module for managing servers inside, an Ethernet connection module, Cisco essentially sold IBM, HP and Dell Catalyst switch ports to everyone else so that they can release this solution. There is also a host adapter for the HBA bus and the server blades themselves - there can be from 1 to 12 pieces. The value of all this is that you can get more servers in terms of the number of occupied units in the cabinet than using independent servers. Each of these "blades" contains a processor and RAM memory and has a common power supply and a common Ethernet module. That is, it is a chassis.

And everything was great, until the moment when virtualization appeared. VMware, which we talked about in a previous lecture. It turned out that blade servers have one major drawback. This is a shortage of RAM. Suppose that each processor has 4 cores, and one “blade” processes an application that consumes memory up to 96 GB. It is enough for one application to work. But when virtualization came here, it turned out that one application needs 48 GB, another requires 24 GB, and the third one needs 32 GB. Everything, mathematics does not converge, we do not fit our 96.

Cisco said: “This is where we have the opportunity! We will return to zero and build a “blade” server just for virtualization ”! And we did it, we created the B chassis, which allowed us to enter the market with a turnover of 37 billion dollars. It is important for us to add a new “ball” - USC. If in a few years we have a 10% share of this, then it will already be 3.7 billion dollars.

Now I will show you in detail what we have done. By the way, most of us have developed more in this company campus than you can imagine. So, we need a lot of RAM in our blades. Think about it: we have one control module for 12 servers. If we can do better, it will be good too. Cisco has entered the market with a large metal chassis 5108 - this is such a cabinet with connectors. Inside this chassis, we placed 8 servers, which is why the model is called 5108, a maximum of 8 slots here, half the width in size, and below we put several power supplies - I will draw 4 pieces. And the most important elements we placed outside the chassis - these two blocks of fiber-optic connectors FI, Fabric Interconnect. Inside them is the MGMT management platform, the Management Platform, its own management platform. Behind the servers are ports for connecting to FI, 16 ports of 10 gigabits each. Thus, we have 160 gigabit connectivity in a single chassis. And inside each connector was Ethernet, which was needed for external users, as well as Fiber Channel over Ethernet, which was connected to the MP management platform, from which it could communicate with the SAN, the data storage network. So we used a unified connection, one cable provided all of this.

As I said before, the problem with the blade servers was the lack of memory when processing multi-tasking processes. Cisco wondered if the new ASIC integrated circuit could solve this problem and asked Intel about this issue.

How does the processor use memory? He gets it through the DIMM pinouts. Cisco wondered how to “outwit” this process. What is meant by “outsmart”?

What will happen if there is an ASIC built in between the processor and memory so that the processor can see 4 outputs, and 16 come to the chip? We will provide the processor with 384 GB of memory if we integrate this chip here! That is, instead of 96 GB, the processor will be able to operate four times as much memory! Intel said it’s cool and they’ll start supporting our chips.

This is called EMT (extended memory technology) technology. This allows you to virtualize more applications on our blades. It was a short-term victory, but users quickly realized the benefits of this solution and began working with us. At least we were treated.

But we had other advantages that, I think, were more attractive. Remember, I told you that we collaborated with VMware, we actually owned a part of them, looked at their technologies in their laboratories. And if I put a lot of servers in our chassis, I need a lot of network cards, a lot of VLANs and so that I don’t put a whole bunch of this better apply a software solution that will be able to provide QoS and everything else. So, VMware can create a multi-tasking software switch with all the necessary functions at the hypervisor level that provide the data exchange process. But VMware decided that it was too difficult for them, and then Cisco said: “Give it to us, we are specialists in switches, we know how to do it”!

We have inserted software into our servers that allows us to create virtual devices inside the chassis. We called it the Nexus 1000V software switch. We further decided that if the control module can control one chassis, it can do this with several. We placed another chassis with similar content at the bottom and connected it to MGMT. Thus, you can control the twenty chassis immediately!

Multiply 20 by 8 and get 160 servers against 12 servers that can be in the same blade switch. Customers also liked it - less control interfaces, easier management.

Further, Cisco thought about this question: “If you want to become a leader on the dance floor, the best dancer, then you shouldn’t let other dancers enter this area”! Do you know why VMware were among the leaders in organizing data centers? Because they knew better than us the needs of customers, all their applications, powerful networks necessary for their work, and so on. In fact, we became contractors for them, because we developed our devices for their needs.

To remedy the situation, Cisco decided to host a MGMT, Server Profile, inside our control device, which would allow us to manage servers, but would have 3 specific sections. The first of them contains IT organizations with their MAC addresses, IP addresses, VLAN, all the software that is required for the operation of network applications. That is, everything that is required by an IT group of customers is in this software. The second contains all the applications used by users of the SAN group, and the third contains the applications of the clients, that is, the APP user group.

That is, our software has 62 different characteristics and 3 independent management platforms. And all this is in a single software module called Server Profile, or server profile. We can put this program for servers where it is needed, in any place. And customers liked it too!

This means that if a virtual machine is running in one of our chassis servers, I can move it to any of the 20 chassis whenever I want and in any way! And who prompted us to this idea? VMware! They forced us to do it.

Imagine that every day from 12 am to 6 am we run an application on the server that uses a 2 core processor and 24 GB of memory. But after 6 am, at 8 o'clock, many users come to work, use this application for their needs, server utilization increases dramatically, and it begins to lack processor power and memory. What happens then? The management platform moves this application to another server, where at that time 8 cores and 64 GB of memory are free. Of course, our customers liked it very much!

The uniqueness of the Cisco solution lies in the fact that we developed a project for VMware, that is, we combined hardware and software in the best possible way and embodied it all in one constructive solution. And this solution, called Management Interface, is owned by our company.

Thus, if some company in the city or a company that is not among our regular customers will need additional computing power for their applications, we can provide them with this opportunity through our network within a few seconds.

If another company, for example HP, wants to use our facilities, but it has other connectors, adapters, etc., we can simply reprogram the server profile for their software.

I explain to our clients our advantages: “we offer an innovative approach to equipment and software that saves you from the need to periodically increase the physical capacities of your servers, we help you significantly save on network development”.

We get the same situation that was at the beginning of the development of IP-telephony. The company Nortel Anivia had all the opportunities for its development even before Cisco engaged in this, why did she not do this? Because if she wanted to capture the market, she would have to drag all the traditional equipment there.

How intercepted this market Cisco? In 1999, she created software that allowed them to use their technology in a completely new way! They developed an IP telephony project for the old physical architecture, and we developed a project for new virtual solutions. We changed the rules of the game, erased the slide, which depicted the old bulky architecture, focused on the "iron". Think about which side of a piece of paper is easier to draw - on something that is filled with text, or on the back, blank side of a sheet? Our innovative solutions didn’t intersect in any way with the innovations of HP or other market players; we followed our own path.

And we keep going. Don't think that we have finished developing the EMT technology. We spent 6 to 8 months on it, and we are still working on it! Whom do you turn to in the market: someone who just squeezes money out of you, or someone who offers you innovation? We enter the market when our innovations have matured.

But Cisco wants to do more! Remember the Nexus? Let's go back to our networks. What does a customer with several data centers do? He wants to unite them so they are easier to use. Suppose the data center running the applications is in Los Angeles, and another one with more equipment is in Phoenix, Arizona. We want to move the server with IP address 10.10.10.6 from Los Angeles to Phoenix, and we want it to keep the same address, 10.10.10.6. Why do we need that? Next to this server in the first data center sits server 10.10.10.7. It talks to the applications on 10.10.10.6 over the internal network, and we do not want to break that connection. How did Cisco solve this problem?

We connected these two servers to a 2000 series switch, that switch to a 5000 series switch, and that one to a 7000 series switch. We created a completely new technology called OTV (Overlay Transport Virtualization), a network overlay technology for data centers. It stretches the network between sites, so we can move server 10.10.10.6 to another data center. When that server wants to contact 10.10.10.7, it sends a broadcast. Its data is encapsulated and carried across the network to Los Angeles. As a result, 10.10.10.7 thinks its colleague 10.10.10.6 is still right next to it!
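The trick described here is MAC-in-IP encapsulation. An edge device at each site wraps Layer 2 frames in IP packets addressed to the edge device at the site where the destination MAC lives. The sketch below is a toy model under stated assumptions. `OverlayEdge`, the MAC addresses and the 192.0.2.x edge addresses are all hypothetical. Broadcasts are simply copied to every remote site, whereas real OTV carries them over a multicast control group. Unknown unicast is not flooded.

```python
from dataclasses import dataclass
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass
class Frame:
    src_mac: str
    dst_mac: str
    payload: bytes


class OverlayEdge:
    """Hypothetical edge device extending one Layer 2 segment across sites."""

    def __init__(self, site_ip: str, peers: List[str]):
        self.site_ip = site_ip
        self.peers = peers                       # IPs of the other sites' edge devices
        self.mac_table: Dict[str, str] = {}      # remote MAC -> IP of the edge that owns it

    def learn(self, mac: str, edge_ip: str) -> None:
        self.mac_table[mac] = edge_ip

    def encapsulate(self, frame: Frame) -> List[dict]:
        """Wrap a local frame in one IP 'packet' per remote edge that must receive it."""
        if frame.dst_mac == BROADCAST:
            targets = list(self.peers)           # simplification: copy to every site
        elif frame.dst_mac in self.mac_table:
            targets = [self.mac_table[frame.dst_mac]]
        else:
            targets = []                         # unknown unicast stays local here
        return [{"outer_src": self.site_ip, "outer_dst": t, "inner": frame} for t in targets]


# Usage: 10.10.10.6 now sits in Phoenix, while 10.10.10.7 stays in Los Angeles.
MAC_6, MAC_7 = "02:00:00:00:00:06", "02:00:00:00:00:07"   # made-up MACs
phoenix = OverlayEdge("192.0.2.2", peers=["192.0.2.1"])  # 192.0.2.1 = Los Angeles edge
phoenix.learn(MAC_7, "192.0.2.1")

packets = phoenix.encapsulate(Frame(MAC_6, MAC_7, b"hello"))
print(packets[0]["outer_dst"])
```

The inner frame crosses the network untouched, which is why 10.10.10.7 sees its neighbor as if it were still on the local segment.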

In this way we can combine 16 data centers so that they look like one. All the equipment inside “thinks” it is in one place rather than in several.

Imagine how much customers like this idea! We simply place the storage arrays in different locations and send data between them. In what other situations might customers need this?

Remember Hurricane Sandy, which hit New Jersey. You needed a data center in the middle of the country, away from the coast, with all its IP addresses and so on. We could move it from New Jersey to wherever you needed it. I won't wait until the hurricane destroys my data center. I know the forecast, so I move half of my capacity to a safe place and then watch how the situation develops. If it gets worse, I ask you to move the second half as well.

The Server Profile lets us use exactly the share of capacity needed to handle a given set of applications. I don't know in detail how it works, since I'm not competent in this area, so ask your PSS for details. As Clint Eastwood said: “A man's got to know his limitations.” Cisco spent a lot of money on this technology in a short period, because it developed rapidly, and we brought it to market in 2009. As a result, we have the B-Series chassis and the C-Series servers. Let's look at what the C-Series servers are.

A C-Series server is a standalone server built for virtualization. Again, can we manage it through the fabric interconnect? Yes, just as we did with the blade servers. This way we can manage both virtual servers on standalone servers and blades, all from a single interface. I'll draw several blades with fiber connections: several cabinets of blades connected to each other through the fabric. So we have several server racks managed by a fabric interconnect that can manage up to 20 chassis. That gives us high server density. The FEX 2200 (fabric extender) is used here.

I have already shown you the chassis that holds the line card modules and supervisors. Our chassis also holds 8 servers. So in the first group of racks, we install a FEX 2200 series fabric extender to connect our server blades. Next we build a rack of servers, each of them standalone, and add a FEX 2200 to it as well. This is called ToR, Top of Rack switching. There are many of these. In effect, they are the same kind of “blades” that need a supervisor.
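Taking the lecture's two figures at face value gives a rough ceiling for one management domain. Those figures are up to 20 chassis per fabric interconnect and 8 servers per chassis. The ceiling is a simple multiplication. This sketch assumes every chassis is fully populated with 8 servers. Real limits depend on the hardware model and software release.

```python
CHASSIS_PER_DOMAIN = 20   # figure quoted in the lecture
SERVERS_PER_CHASSIS = 8   # figure quoted in the lecture


def max_servers(chassis: int, servers_per_chassis: int) -> int:
    """Upper bound on blade servers managed from one fabric interconnect."""
    return chassis * servers_per_chassis


print(max_servers(CHASSIS_PER_DOMAIN, SERVERS_PER_CHASSIS))  # 160
```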

Next we install another cabinet with servers and a ToR FEX 2200. To bring all three cabinets under control, we put a fourth cabinet next to them and install a Nexus 5500 in it to act as the supervisor. We connect the three existing cabinets of servers and switches to this supervisor. This is our brain.

It can manage 16 such cabinets. If we need more, we group the cabinets four to a section and connect the whole system to a more powerful supervisor, the 7000 series. It can manage 20 or 24 groups. This lets a single intelligent device manage a large number of connections. The 5500 itself connects to the MDS over Fibre Channel (FCP) links, which extends our capabilities further. Above it I draw the Nexus 7000 series switches, and now we can carry 40 Gb/s Ethernet to them. The 2200 series switches are not intelligent enough on their own, so an intelligent device has to control them. This way we can provide whatever bandwidth our servers need.
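The parent/extender relationship can be modeled as a parent switch that owns a bounded list of fabric extenders. Every configuration change is pushed down from the parent, because the extenders are described as not being intelligent enough on their own. The sketch below rests on assumptions. `ParentSwitch`, `attach` and `configure_all` are hypothetical names, not NX-OS commands. The limit of 16 is the lecture's figure and not a verified platform maximum.

```python
from typing import Dict, List


class ParentSwitch:
    """Hypothetical parent switch (e.g. a 5500) that manages simple FEX units."""

    def __init__(self, name: str, max_fex: int):
        self.name = name
        self.max_fex = max_fex
        self.fexes: List[str] = []

    def attach(self, fex_id: str) -> None:
        """Register a fabric extender, refusing once the parent is full."""
        if len(self.fexes) >= self.max_fex:
            raise ValueError(f"{self.name} is full ({self.max_fex} FEX)")
        self.fexes.append(fex_id)

    def configure_all(self, setting: str) -> Dict[str, str]:
        """A FEX has no control plane of its own, so the parent pushes every change."""
        return {fex: setting for fex in self.fexes}


parent = ParentSwitch("n5500-1", max_fex=16)   # 16 cabinets, as in the lecture
for i in range(16):
    parent.attach(f"fex-{100 + i}")

pushed = parent.configure_all("vlan 10")
print(len(pushed))
```

Once the parent is full, the next step in the lecture's design is to add another level of hierarchy (the 7000 series) rather than a bigger extender.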

If you want to build a traditional SAN, you have to install Fibre Channel switches in the second and third cabinets, because that traffic does not run over Ethernet.

Cisco also works with partners. One example is VCE, a joint venture of Cisco, EMC and VMware, whose product is VBlock. Together with NetApp, Cisco offers FlexPod.

Another example is HCS (Hosted Collaboration Solution), which involves companies such as AT&T, IBM and British Telecom.

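Let's return to the Nexus 7000. It uses two types of line cards, F-series and M-series […]. Nexus […] 2200. In 2002 […] the MDS 9500 […] No. 1 […]. Nexus runs not IOS but a Linux-based operating system […] BGP […]. IOS […] Linux; the Catalyst 6500 and 4500 […] Linux.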

IOS and Linux […] since the 1990s […]. […] GUI […]. Linux […] BGP […]. Basic, COBOL […] Nexus […]. […] 10 Nexus […] Catalyst 6500 […] Nexus 7000 […] Catalyst 6500. […] Linux […].

Continued:

FastTrack Training. "Network Basics". "Equipment for data centers". Eddie Martin. December 2012


Source: https://habr.com/ru/post/350920/
