FastTrack Training. "Network Basics". "Basics of data centers." Part 1. Eddie Martin. December 2012

About a year ago I came across a fascinating series of lectures by Eddie Martin. Thanks to its stories and real-life examples, and to the lecturer's tremendous teaching experience, it is remarkably easy to follow and lets you gain an understanding of quite complex technologies.

We continue the cycle of 27 articles based on his lectures:


01/02: "Understanding the OSI Model" Part 1 / Part 2

03: "Understanding the Cisco Architecture"

04/05: "The Basics of Switching or Switches" Part 1 / Part 2

06: "Switches from Cisco"

07: "Area of use of network switches, the value of Cisco switches"

08/09: "Basics of a Wireless LAN" Part 1 / Part 2

10: "Products in the field of wireless LAN"

11: "The Value of Cisco Wireless LANs"

12: "Routing Basics"

13: "The structure of routers, routing platforms from Cisco"

14: "The Value of Cisco Routers"

15/16: "The Basics of Data Centers" Part 1 / Part 2

17: "Equipment for data centers"

18: "The Value of Cisco in Data Centers"

19/20/21: "The Basics of Telephony" Part 1 / Part 2 / Part 3

22: "Cisco Collaboration Software"

23: "The Value of Collaboration Products from Cisco"

24: "The Basics of Security"

25: "Cisco Security Software"

26: "The Value of Cisco Security Products"

27: "Understanding Cisco Architectural Games (Review)"

And here is the fifteenth of them.

Why does Cisco do data centers? The story of our company and data centers is a really interesting one, and I have memories to prove it. I came from IP telephony, a specialist in voice transmission, "the telephony guy", as my team called me, when we started working on what I personally call the data center.

It happened in 2002. Our team met; Cisco had just announced the acquisition of Andiamo, and we were going to start selling Fibre Channel switches, which use a completely different protocol than Ethernet. There were only 12 of us, software engineers. They were discussing all this and I, frankly, was not listening very closely, chatting with a colleague, when one of them said that he did not know anything about this fiber. I turned around and said that the most important thing to know is that in Fibre Channel the word is spelled "FIBRE", not "FIBER". Our senior engineer immediately replied: "Oh, you apparently know a lot about this, you are a real specialist!" I realized that I should have kept my mouth shut and not tried to be clever, because I spent the next few months figuring out what we had gotten into and how to get out of it. I had to become something of a specialist in this, before we had a ready-made model and all those other things, and be one of those who passed this knowledge on to my group.

What did I do? The first thing I had to do was learn about the competitors. I went to Denver, Colorado, to take part in McDATA training classes. That company was the number one provider of what they called large director-class switches. When I called them and said that I was from Cisco, they replied that they had no classes I could attend, probably because we had already announced our plans and the company was not very happy about it. I reported this to my boss, who said: "I don't want to hear it. Use all your creativity to get in there!" I called McDATA again and said that I was a consultant and could not advise clients on something I did not understand. They answered: "Well, in that case, we have a class just for you!" So I had to remove every Cisco marking from myself and go.

I learned a lot of interesting things there. When I called one of my colleagues who worked with fiber-optic communication and he asked what I could say about it all now, I told him that here, in 2002, I felt as if I had gone back to 1995. These switches had only a small range of options. The connection was very reliable and, by the standards of the time, fast, but there were no services inside any of these boxes! I took a week-long course, and we disassembled and reassembled a 6140 series switch. I really enjoyed the training and flew back on Saturday. When I came to work on Sunday and saw our new MDS 9500 series switch, I realized that with equipment like this we would simply crush our competitors in this market!

At that time we were completely different people and had completely different discussions. Optical fiber had only just entered the market; we had implemented many new virtualization projects and implemented VLANs. Just then, Cisco acquired VSAN technology (virtual storage area networks), which nobody had created before. But how could we explain all this? Yes, we could say that we had implemented VSANs. But people asked us: "What is a VSAN like? What is a VLAN?" The people we spoke to did not even know what Cisco did in networking. Most of them thought of Cisco as a company that delivers networking products in much the same way that other companies deliver groceries from stores. They did not know our capabilities or who we were. So what did we do? We started hiring people around the world from companies such as EMC who knew all those other things, and I worked with them and with other teams, teaching them what we knew. And I learned an enormous amount about data centers as our future. It turns out that if you take the total IT budget, 60% of it is spent in the data center! Cisco could not even imagine such a thing! Our company was growing by 45-50% a year, yet in data centers we could "catch only half of the fish". So we decided to invest in this promising new market, that is, to take over the "fishing grounds" and bring valuable products to them.

Cisco entered the data center market just before VMware's virtual machine technology took off, so we began to introduce products that use this technology in building data centers. It was the best possible time and the best opportunity to use virtualization. But I have to say that we had not acted too wisely, investing huge sums in building servers for clients over the previous 15 years. But let's get to our topic. Let's start with applications: in terms of importance, applications sit at the top of the requirements for network equipment. If you are a vice president and you need to deliver 12% growth for your company without hiring additional staff, you need more productive and more capable applications. Today you can buy a ready-made software product built on a platform such as salesforce.com, but what if you need something unique for your internal needs? You have to be prepared to invest a lot of money in developing exactly what your business needs, but then you own it.

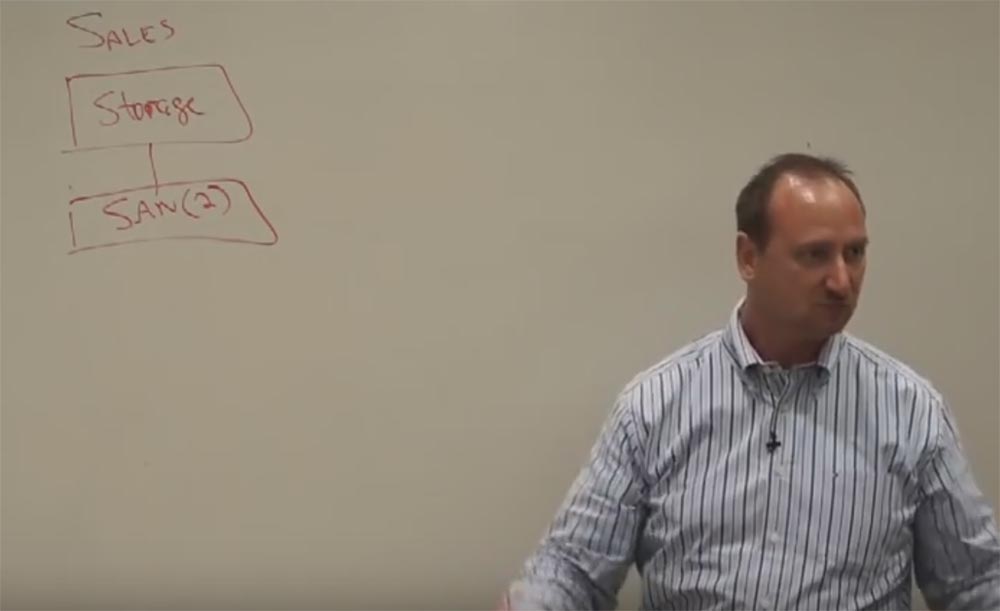

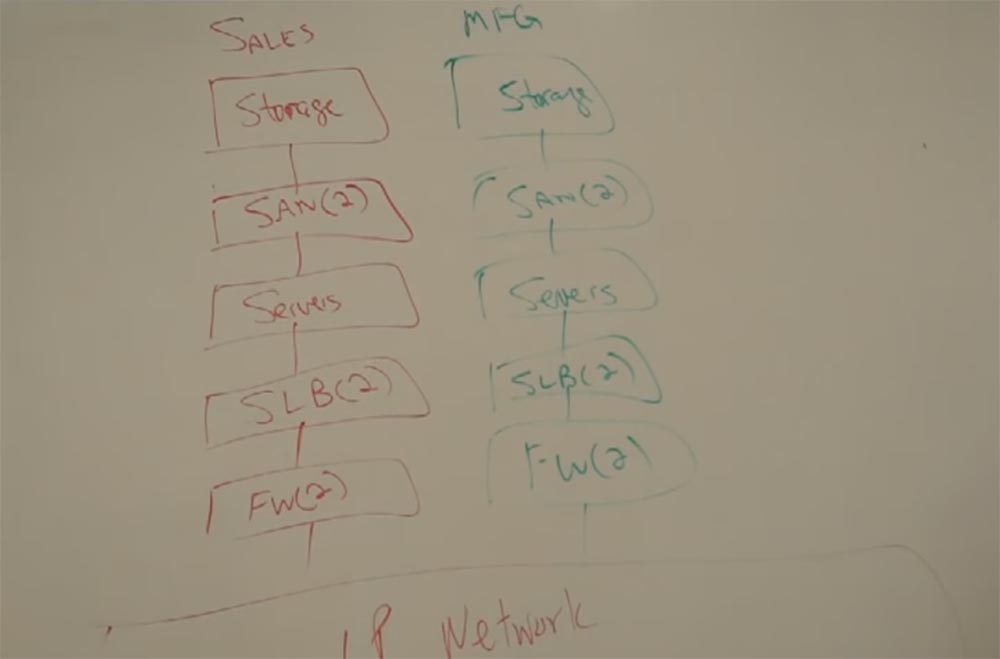

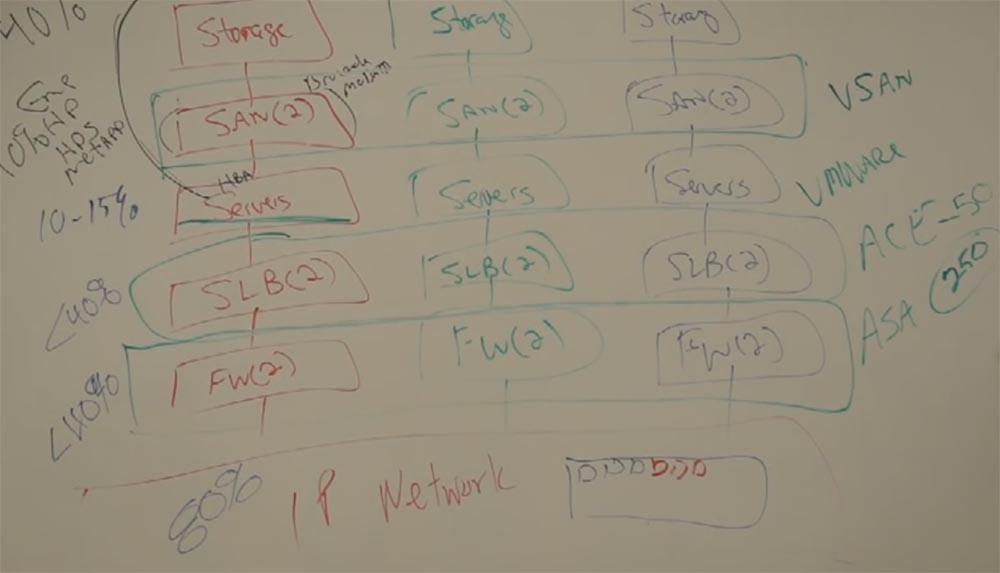

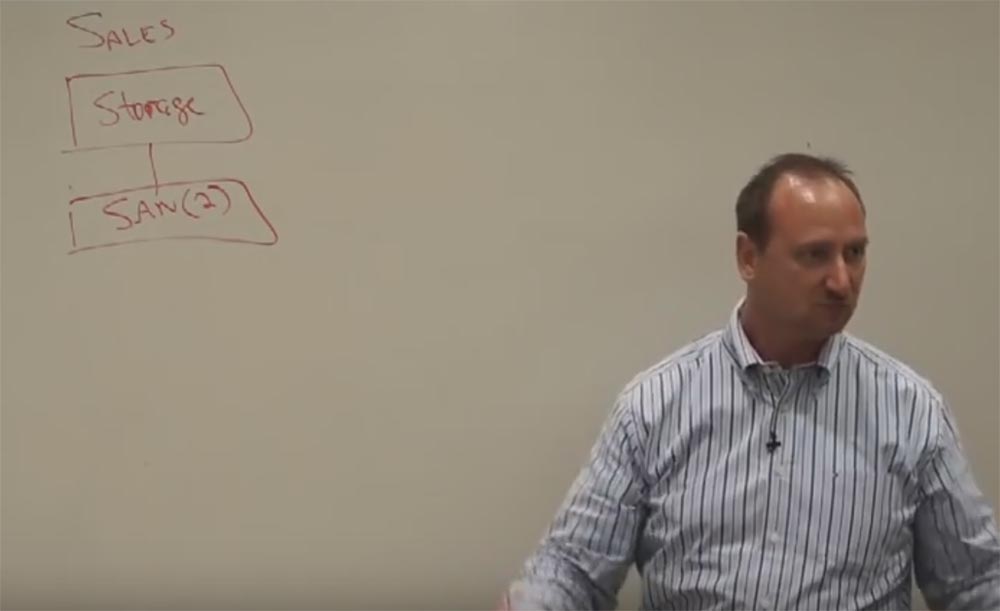

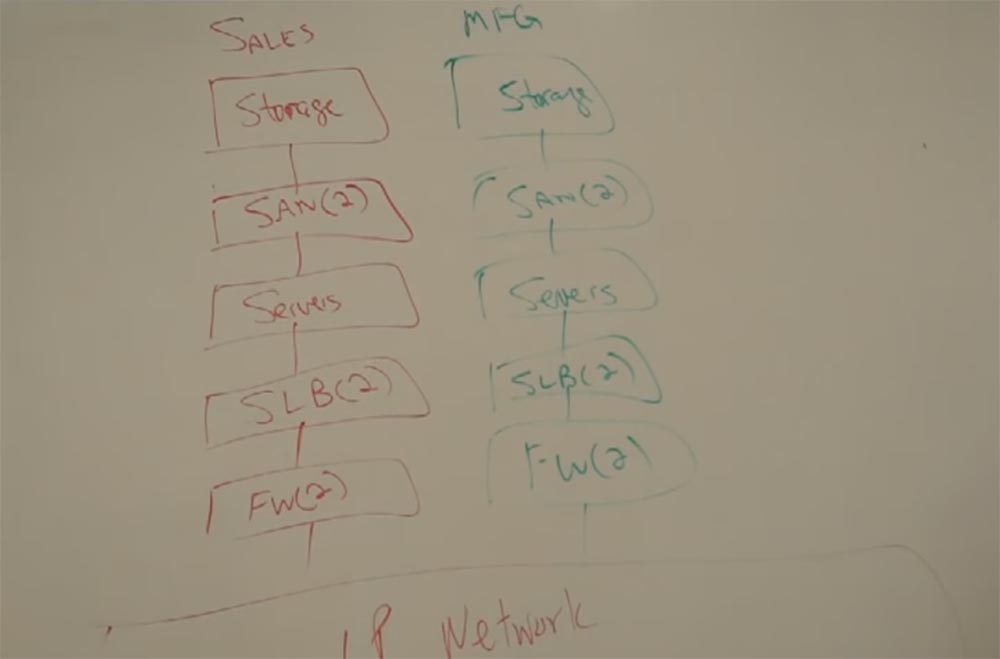

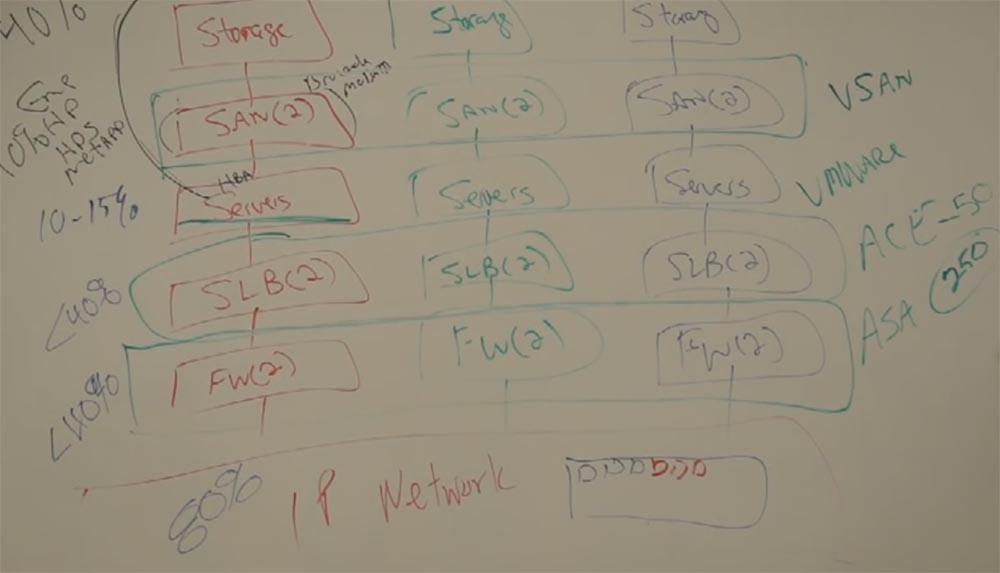

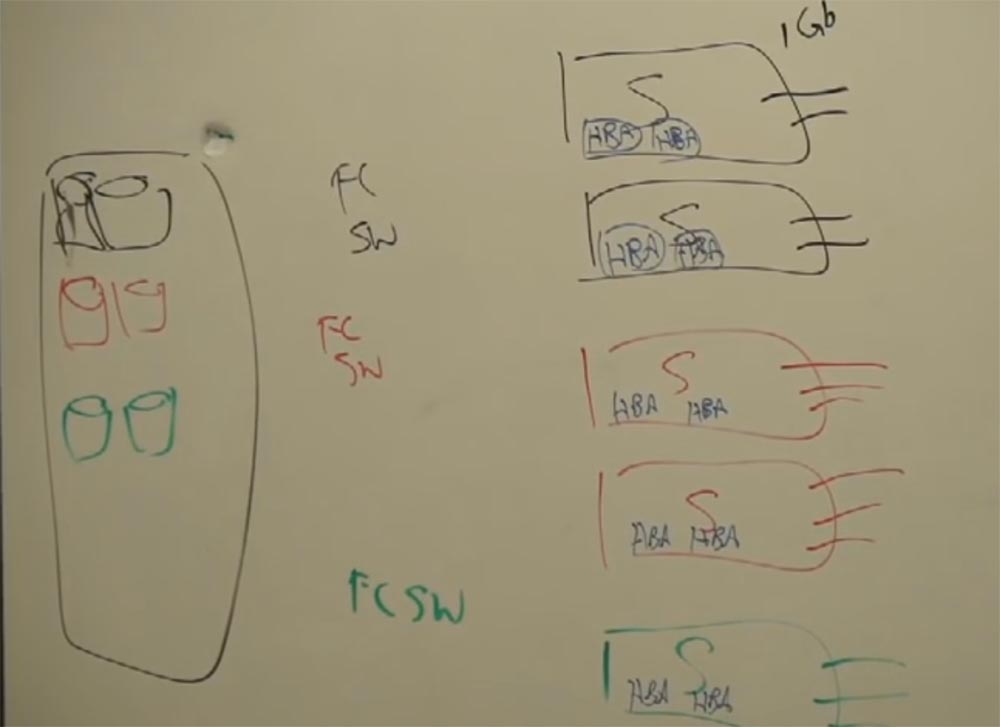

Let it be an application for the sales department. The first thing it needs is a database that will hold the data for all accounts. I will need to define the requirements for the storage that will store and process all this data, create backups, and so on. For this I need a network: 2 switches that connect me to the SAN storage. Then I will need servers, which may run Windows, Linux, or Unix, depending on what other software the application requires.

And if I have servers, then I also need 2 server load balancers (SLB) and 2 firewalls for protection. Then I have to make it all available over an IP network so that everyone can use the application. You need all of this, and all of it costs money.

Now let's talk about manufacturing. We are going to sell more widgets, which means we need to produce more widgets. But we are not going to build more production capacity for this. We need to make current production smarter, to optimize it. So again we turn to applications, perhaps ones that help ensure timely delivery, or something else. But it will be essentially the same kind of infrastructure next to the first one, also connected to our IP network.

Now customer service. Maybe we want to deploy some applications that will help us serve customers. We will deploy a third infrastructure in the same way and connect it to our IP network.

Why do we build them separately? Control, solving one particular problem, different sources of funding, right? And I can build them with equipment from different manufacturers, because, again, whoever decides on the infrastructure for an application can decide what it runs on. Different types of servers and everything else may be required. Everything is funded differently, and each one may have different technical problems.

The SAN uses the Fibre Channel protocol, which runs here in the host bus adapters. This solution is sold as a whole, and it was sold by many manufacturers: EMC, HP, HDS, NetApp. It is certified as a whole and uses Brocade or McDATA switches, which amount to no more than a "tax": when the whole solution may cost $3 million, no one worries about the cost of two switches. The SAN buyer paid a "tax" for using third-party switches. These switches were used because they provided a reasonably fast connection between servers and storage, but there was no intelligence in the storage network. And that is what EMC, HP, HDS, and NetApp cared about, since 80% of their income came from developing applications for existing hardware solutions, which for them were only something to sell.

And Cisco decided to take part too. But the fact is that Fibre Channel is a "hard" protocol, unforgiving, guaranteeing very fast transport of a huge amount of information to the right place; a very cool protocol, but not at all like Ethernet. When you connect to a Fibre Channel switch, a three-step login process takes place: FLOGI (fabric login), PLOGI (port login), and PRLI (process login). You are logging in to a Fibre Channel "fabric". And if I need to introduce a new server to the SAN, to connect it, everything stops: no one can use the network while the fabric login is in progress. What happens to the applications? They stop working. So if I deploy such an infrastructure, I want to be sure that no additional servers will be added to it. I want to keep these infrastructures independent. That is why for a small solution people bought small Brocade switches, and for larger ones McDATA. That's all. They did not give it much thought. 80% of McDATA's business was concentrated here; they offered special prices and were effectively owned by EMC.
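The three-step login order described above can be sketched as a tiny state machine. This is only an illustration under simplifying assumptions: the class and state names (`ToyNodePort`, `LoginState`) are hypothetical, and a real Fibre Channel stack exchanges frames with the switch and the target rather than flipping a local state.

```python
from enum import Enum


class LoginState(Enum):
    DISCONNECTED = 0
    FABRIC_LOGGED_IN = 1   # reached after FLOGI
    PORT_LOGGED_IN = 2     # reached after PLOGI
    PROCESS_LOGGED_IN = 3  # reached after PRLI


# Each step is valid from exactly one preceding state (hypothetical toy model).
_TRANSITIONS = {
    "FLOGI": (LoginState.DISCONNECTED, LoginState.FABRIC_LOGGED_IN),
    "PLOGI": (LoginState.FABRIC_LOGGED_IN, LoginState.PORT_LOGGED_IN),
    "PRLI": (LoginState.PORT_LOGGED_IN, LoginState.PROCESS_LOGGED_IN),
}


class ToyNodePort:
    """Toy model of a server port joining a Fibre Channel fabric."""

    def __init__(self) -> None:
        self.state = LoginState.DISCONNECTED

    def send(self, step: str) -> LoginState:
        required, result = _TRANSITIONS[step]
        if self.state is not required:
            raise RuntimeError(f"{step} is not allowed in state {self.state.name}")
        self.state = result
        return self.state


port = ToyNodePort()
for step in ("FLOGI", "PLOGI", "PRLI"):
    port.send(step)
print(port.state.name)  # PROCESS_LOGGED_IN
```

Trying the steps out of order, for example `ToyNodePort().send("PLOGI")`, raises an error, which mirrors the strict sequencing the lecture attributes to the protocol.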

That is why Cisco decided to take part: we thought we could change this. Cisco began to "rock the boat" by developing intelligent traffic management technology. Everyone, a lot of people, built these same-type infrastructures. People bought storage for each separate task. Just think: I buy 20 TB of storage here, 10 TB here, and 8 TB here. And what is my utilization of this storage? 40%! Imagine going to the CFO and saying: we want to spend another half a million dollars on more storage because we need more space, although we only use 40% of what we have. Don't tell him that, don't! It is like buying a gallon of milk (3.78 liters), drinking 2 large glasses, and saying, with more than half the bottle left, that we need to buy more. We do not want to do this, but this is what people do, and it is inefficient. The ports on SAN switches are less than 40% utilized. We buy them, but we do not use them.
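To put numbers on the milk example, here is a small calculation using the figures from the lecture (three arrays of 20, 10, and 8 TB, about 40% utilized overall). The function name `storage_summary` is made up for this sketch.

```python
def storage_summary(array_sizes_tb, utilization):
    """Return (purchased, used, idle) capacity in TB."""
    purchased = sum(array_sizes_tb)
    used = purchased * utilization
    return purchased, used, purchased - used


# Figures from the lecture: three separate arrays, about 40% utilized overall.
purchased, used, idle = storage_summary([20, 10, 8], 0.40)
print(f"{purchased} TB purchased, {used:.1f} TB used, {idle:.1f} TB idle")
# 38 TB purchased, 15.2 TB used, 22.8 TB idle
```

Under these assumptions almost 23 TB sits idle while the request for another half a million dollars of storage goes to the CFO.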

And the worst thing that could happen in this situation was the arrival of multi-core processors in servers. Every 3 years people bought new servers to replace the old ones; the new servers had a much more powerful processor at the same price. It turned out that your applications did not need that much power, the old processors were fast enough for them, yet you bought new multi-core servers that you could not load to full capacity. What happened to the server then? Its utilization fell, its efficiency fell. We got down to 10-15% on some servers, because we deployed only 1 application per server. SLBs were used at 40% of capacity, firewalls at 40%, and so on. But we still needed several servers to provide reliability through redundancy and to be able to scale.

Meanwhile the LAN switches were more than 80% utilized, because we had virtualized them and used them for several applications. I have one application and it needs a port, so I create a VLAN for it; then I get a second application that also needs a port, and I create a second VLAN. The advantage of this technology is that I can assign as many VLANs as I need to one port, so I do not have to buy a new switch every time I need a new VLAN. We virtualized this many years ago, in the mid-90s.

Virtualization had happened here. We "pushed" this technology into the data center segment and called it "switch consolidation through virtualization". We brought the MDS 9500 switches to market and told our customers to stop buying all those "small fry" switches serving one port. We offered them one large device that could create virtual networks of any scale, and called it VSAN technology. In the figure, I combine the three SAN switches into one VSAN switch.
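The consolidation idea can be illustrated with a toy model: one physical switch whose ports are assigned to separate VSANs, so that the sales, manufacturing, and customer service fabrics stay isolated from one another. The class `ToyVsanSwitch` and the VSAN numbers 10, 20, and 30 are assumptions made for this sketch, not Cisco's implementation or configuration syntax.

```python
from collections import defaultdict


class ToyVsanSwitch:
    """One physical switch carved into isolated virtual SANs (toy model)."""

    def __init__(self, port_count):
        self.port_count = port_count
        self.port_vsan = {}  # port number -> VSAN id

    def assign(self, port, vsan_id):
        if not 1 <= port <= self.port_count:
            raise ValueError(f"port {port} does not exist on this switch")
        self.port_vsan[port] = vsan_id

    def can_talk(self, port_a, port_b):
        # Two ports see each other only if both are assigned to the same VSAN.
        vsan_a = self.port_vsan.get(port_a)
        return vsan_a is not None and vsan_a == self.port_vsan.get(port_b)

    def members(self):
        groups = defaultdict(list)
        for port, vsan_id in sorted(self.port_vsan.items()):
            groups[vsan_id].append(port)
        return dict(groups)


switch = ToyVsanSwitch(port_count=16)
for port, vsan_id in [(1, 10), (2, 10), (3, 20), (4, 20), (5, 30), (6, 30)]:
    switch.assign(port, vsan_id)  # 10 = sales, 20 = manufacturing, 30 = service

print(switch.members())        # {10: [1, 2], 20: [3, 4], 30: [5, 6]}
print(switch.can_talk(1, 2))   # True
print(switch.can_talk(1, 3))   # False
```

The remaining ports stay free for the next application, which is the point of buying one large device instead of a pair of small switches per project.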

Our biggest problem was not technical; it was how to explain these technologies to customers. The first sales of our switches began only in December 2002. I even ran a two-day class on storage to explain the benefits of virtualization. And then VMware began to gain popularity; some of us may remember this. Those people were smart and fearless enough, maybe they were even drunk the first time they did it, to think that a server could be virtualized. Instead of running one application on one server, we load a program called a hypervisor onto it and run many applications on one server, independently of each other. One physical server could simultaneously run Microsoft applications, Linux applications, and software from other vendors. Previously this would have required a separate server for Microsoft, a separate server for Linux, and so on. Then people remembered multi-core processors and said: "Oh, now we will need them!" VMware technology began to be used for server virtualization.
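A rough sketch of why this matters for utilization: if each application loads a dedicated server to only 10-15%, as mentioned earlier, a hypervisor lets us pack several of them onto one host. The first-fit packing below and the 80% target per host are assumptions chosen for illustration; real placement also has to consider memory, I/O, and redundancy.

```python
def pack_first_fit(app_loads_pct, host_capacity_pct=80):
    """Place each application on the first host that still has room."""
    hosts = []  # each host is a list of application loads in percent
    for load in app_loads_pct:
        if load > host_capacity_pct:
            raise ValueError("application does not fit on a single host")
        for host in hosts:
            if sum(host) + load <= host_capacity_pct:
                host.append(load)
                break
        else:
            hosts.append([load])  # no existing host had room: start a new one
    return hosts


# Eight applications, each of which used to occupy its own server at 10-15%.
loads = [10, 15, 12, 10, 15, 13, 10, 15]
hosts = pack_first_fit(loads)
print(len(loads), "dedicated servers ->", len(hosts), "virtualized hosts")
# 8 dedicated servers -> 2 virtualized hosts
```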

Now consider the SLB devices, which Cisco decided to replace with the ACE module. This module, which by the way is quite expensive, became part of the Catalyst 6500 switch and distributed load evenly across the servers. ACE, the Application Control Engine, provided load balancing. In 1 switch we placed 50 independent virtual ACEs, 50 independent load balancers on one "blade", and customers no longer needed to buy several physical SLBs. We replaced the firewalls with one ASA module, which could hold up to 250 virtual firewalls.

Each virtual firewall followed the rules you set for a particular application, so you could protect 250 applications with different policies. And the storage manufacturers began to replace separate storage with one common virtual space, VD Space.

Virtualization of this kind is the world's greatest invention. We eliminated the situation in which 60% of the IT budget was wasted on unnecessary things. I repeat: we started by consolidating physical devices using virtual machines, in order to then virtualize the entire process of building data centers. As you know, EMC acquired VMware and set it up as a separate company, and Cisco had to buy into it at the IPO for $150 million. You know, I took part in the IPO too, but I did not invest $150 million like Cisco. I invested only a few thousand dollars, buying a few VMware shares. And of course, if I had come to them and said, "I am Eddie, I want to know all your secrets," they would have said: "Turn around and get out of here, and don't let the door hit you on the way out." That is what they would have said. But not to Cisco. Cisco came and said: "We have invested $150 million, we own a stake, and we want to know everything that is happening behind the scenes." And they showed them. So Cisco has the advantage of seeing where things are going.

Cisco has acted as a "donor" for many IT companies. There have always been people in the company's management who thought ahead and expanded our sphere of influence through such investments. This $150 million was not a critical amount for Cisco, but it was still a significant investment and amounted to approximately 4 to 6% of the capitalization.

Virtualization technologies were introduced from 2000 to 2003. This was the first wave of data center building.

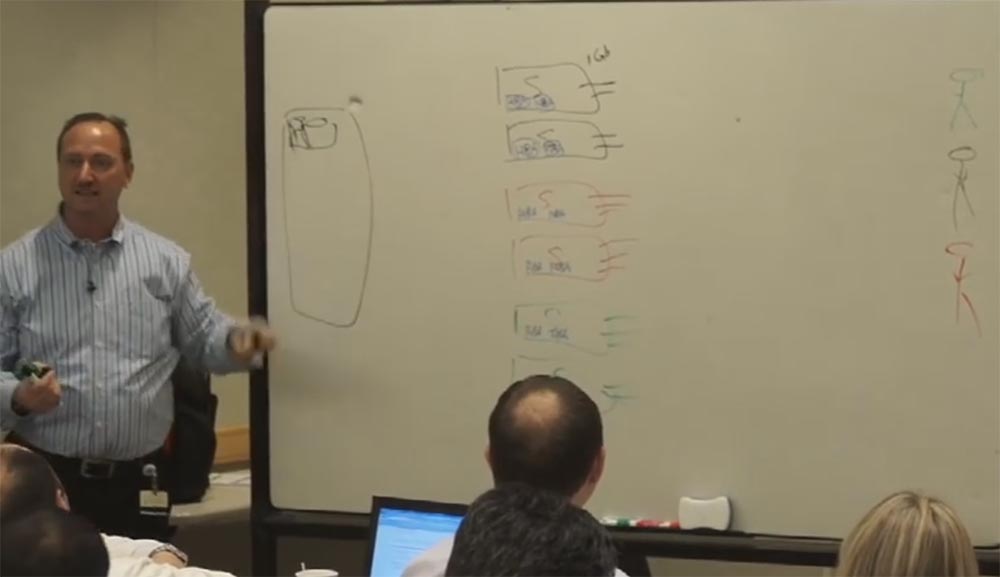

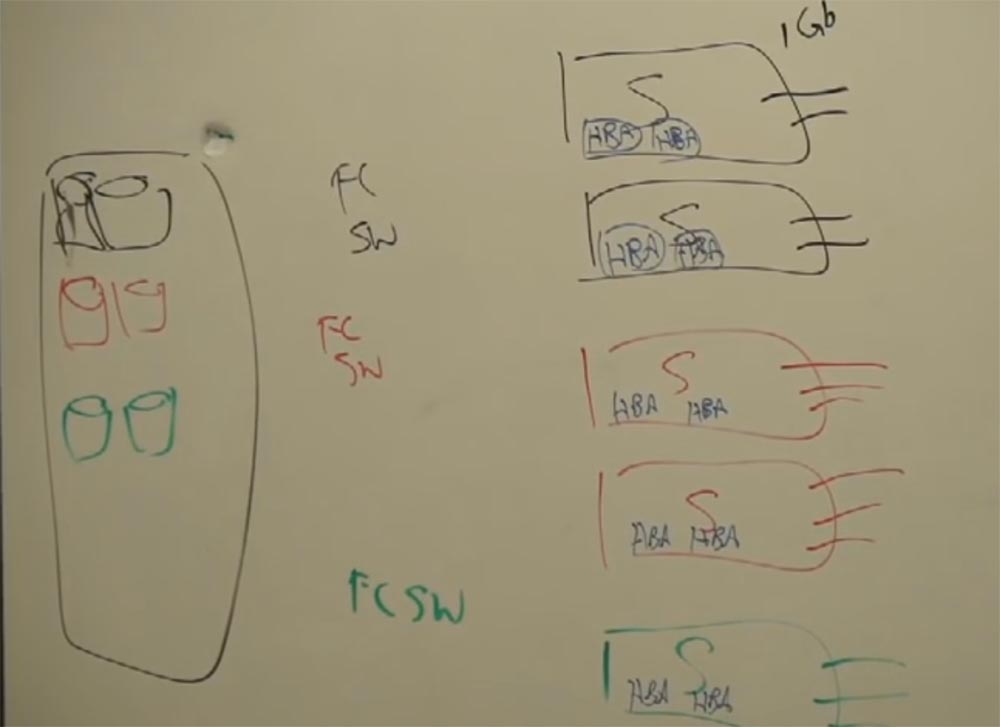

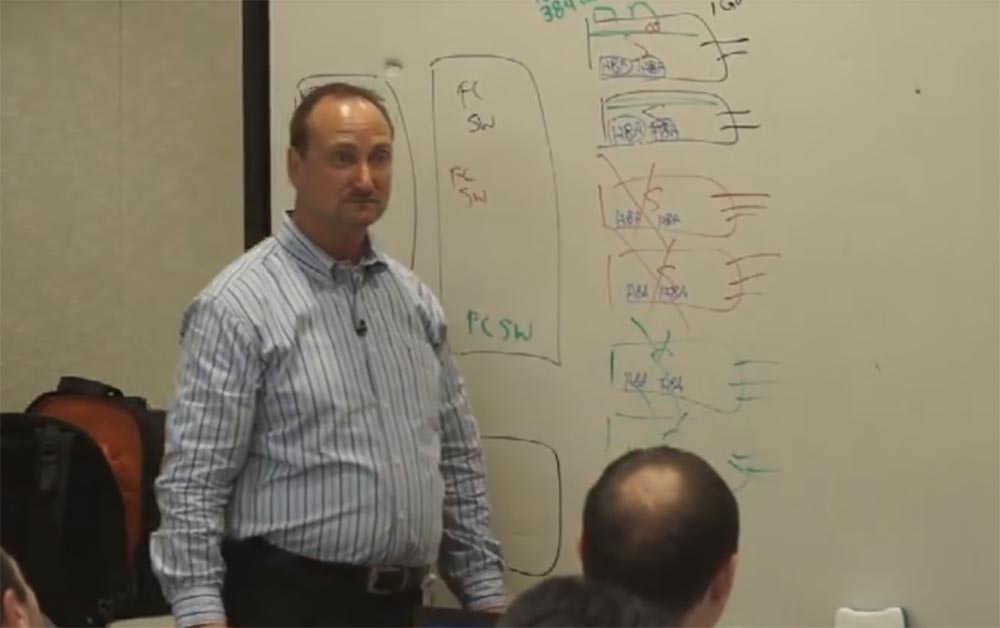

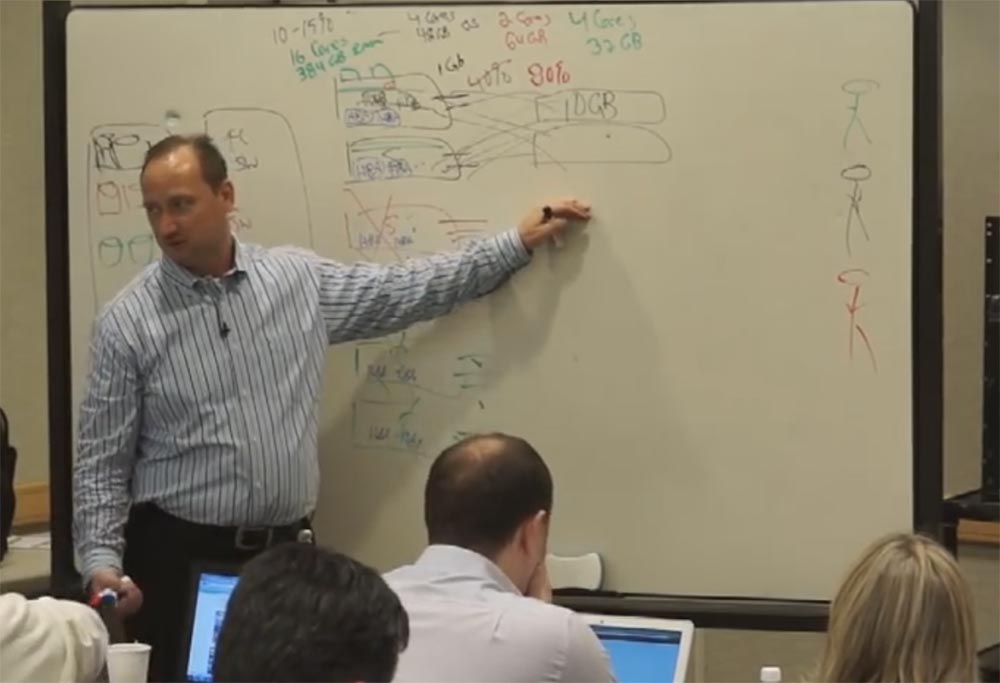

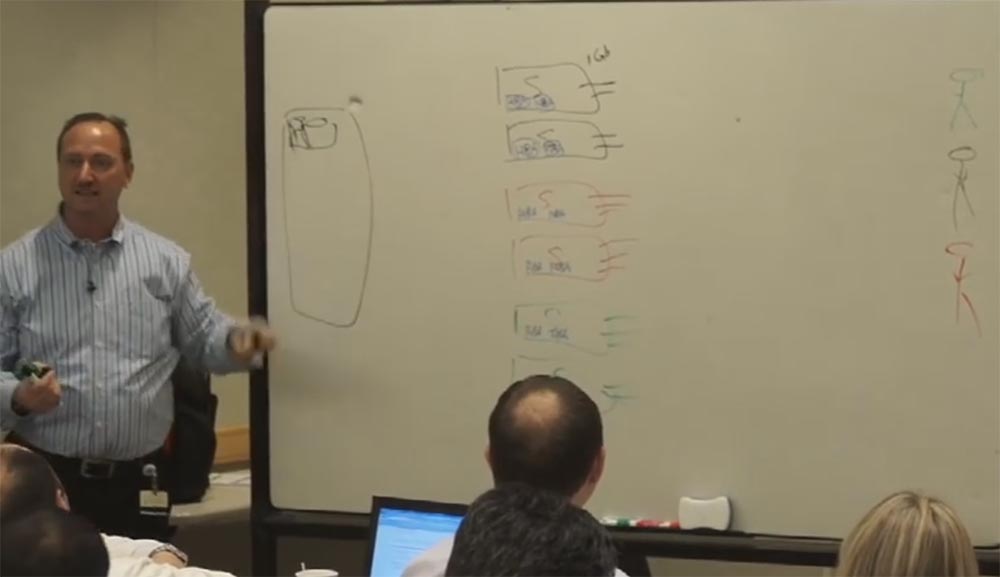

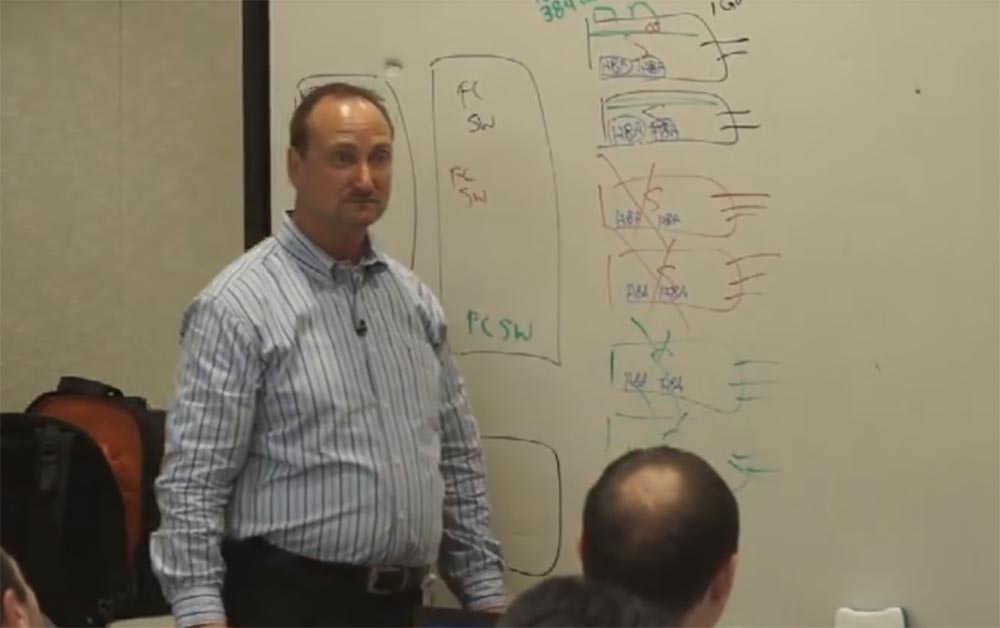

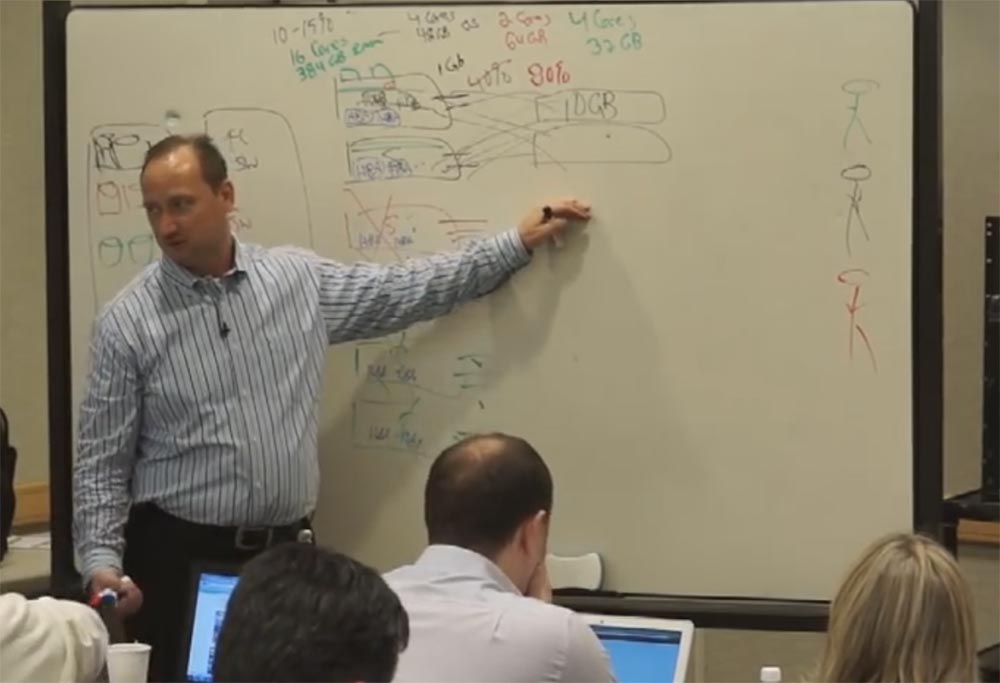

It's time to talk about server virtualization in detail. I will tell you about the things that matter to me personally as a specialist. Suppose we have three groups, each with 2 multi-tasking servers that run multiple applications. There may be more servers, but I do not have enough room on the board to draw them. On the right side of the board we draw three users in different colors, each using the applications of the matching color: black, green, or red. These can be Internet service providers, companies, or other customers using the network. Between these people and the servers sit all the necessary devices: load balancers (SLB), firewalls, and so on. Each server has network cards that provide 1 Gbps each, and one server may have 4, 6, or even 8 of them.

On the left side of the servers is the network storage space (SAN). , -, HBA, Host Bus Adapter. , 1500 2000 $ . What are they doing? , . - .

, , ? «» , HBA! . , , HBA. SCSI, . , FCSW. , .

.

, , ? ? ! - , . .

EMC , ? SRDF, « ». , , . , -. EMC , .

, - HBA «» . Cisco , FCS MDS 9500. , VSANs. , 2 VSAN, . , .

, . , , «» , 10-15% . , , . «» . VMware , .

2 8 , 16 , 384 . 4 16 48 , 12 . , . 4 16 . «», . .

, , 2 64 , . ? , « » 2 , , 2 64 .

. « » – «» , «» ! .

, «». 4 32 . , , 4 32 , «» , ! «» .

, – ! , , . «», VMware, , !

Wallmart, 30000 , , , , — -. , 3 . ? ! , ? ! 60% «», . ? Thousands!

. 2000- , . , «» , VMware.

, , , : «, , »! Cisco . , .

– , IT-, . , . « », .

- , Compact 3000, 600 . , , ! , 2 , , . , . - . - .

, «» . : « , 2 , -»! - , ! ! . - . , . Cisco . , Cisco.

. , «» 6-8 /, «» . «» , . , 8 (6-8 , 2 ) 40%, 80%! « »? . , . , Cisco. , Ethernet? CISCO. 1 Gbps Ethernet? 10 Gbps Ethernet. , , . ? , , 10 / , 20 / . , 10 / , ? , .

? Catalist 6500 ? , . ? , 10 / . Nexus. !

Nexus «» , Catalist 6500, . 10 / . Nexus 5000, 7000, «» .

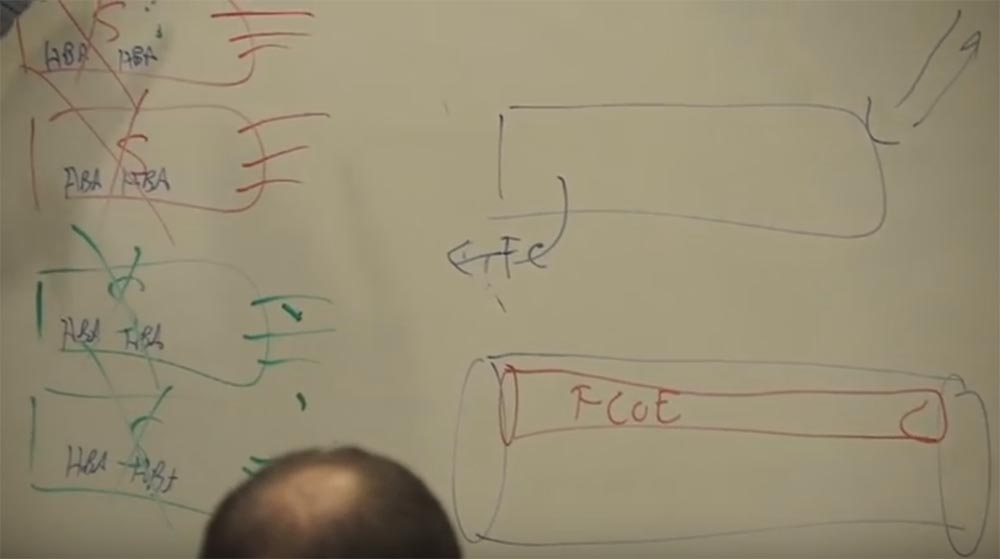

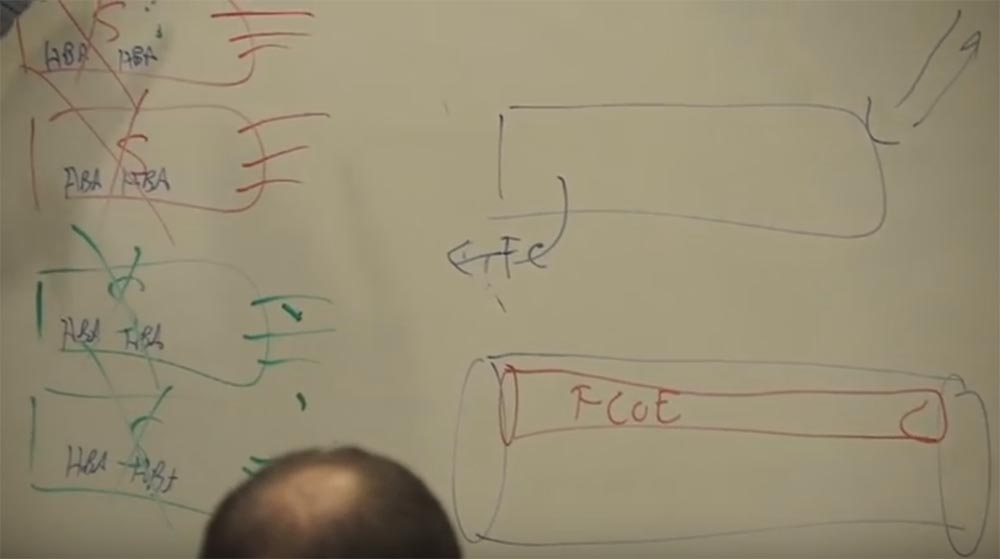

, Ethernet CISCO, 10 / Ehternet-, , FCSW, ? ? - , IBM SNA, . , . Cisco : , . .

Cisco «», , – Ethernet. , . , , 10 Ethernet. , FCOE, Fibre Channel over Ethernet, . . , 12 , 2.

, . , , 2009 . Ethernet – -. , -.

, Cisco , . ? , . , . MDS 9500, , , Nexus Fibre Channel over Ethernet. . ? , Nexus . Cisco, . , , …

Continued:

FastTrack Training. "Network Basics". "Basics of data centers." Part 2. Eddie Martin. December 2012


We continue the cycle of 27 articles based on his lectures:

')

01/02: “Understanding the OSI Model” Part 1 / Part 2

03: "Understanding the Cisco Architecture"

04/05: “The Basics of Switching or Switches” Part 1 / Part 2

06: "Switches from Cisco"

07: "Area of use of network switches, the value of Cisco switches"

08/09: "Basics of a Wireless LAN" Part 1 / Part 2

10: "Products in the field of wireless LAN"

11: The Value of Cisco Wireless LANs

12: Routing Basics

13: "The structure of routers, routing platforms from Cisco"

14: The Value of Cisco Routers

15/16: “The Basics of Data Centers” Part 1 / Part 2

17: "Equipment for data centers"

18: "The Value of Cisco in Data Centers"

19/20/21: "The Basics of Telephony" Part 1 / Part 2 / Part 3

22: "Cisco Collaboration Software"

23: The Value of Collaboration Products from Cisco

24: "The Basics of Security"

25: "Cisco Security Software"

26: "The Value of Cisco Security Products"

27: "Understanding Cisco Architectural Games (Review)"

And here is the fifteenth of them.

FastTrack Training. "Network Basics". "Basics of data centers." Part 1. Eddie Martin. December 2012

Why do Cisco data centers? Our company and data centers are a really interesting story! And I have memories that can confirm this. I was a guy from IP-telephony, a specialist in the field of voice transmission, "a guy is a telephonist", as I was called in my team, when we started working on what I personally call a data center.

It happened in 2002, our team met, Cisco just announced the acquisition of Andiamo, and we were going to start selling fiber-optic switches that use a completely different protocol than Ethernet. We were only 12 software engineers, they discussed it all and I, frankly, didn’t listen to them very much, communicating with a colleague, and one of them said that he didn’t know anything about this fiber. I turned around and said that the most important thing to know about this is that the fiber optic cable is written “FIBER”, not “FIBER”. Our senior engineer immediately responded to my words, who said: “Oh, you apparently know a lot about this, you are a real specialist”! I realized that it was better for me to keep my mouth shut and not to be clever, since the next few months I figured out what we got into and how to get out of it. I had to become a small specialist in this, before we have a ready-made model and all these other things, one of those who pass this knowledge to my group.

What I've done. The first thing I had to do was learn about competitors. I went to Denver, Colorado, where I was going to take part in McDATA workshops. This company was the number 1 provider to use, as they said, large director-class switches. When I called them and said that I was from Cisco, they replied that they did not conduct master classes in which I could take part. Probably, since we already announced our plans and this company was not very happy about it. I reported this to my boss, to which he said: “I don’t want to know anything, show all your creativity to get there”! I called McDATA again and said that I was a consultant, but I cannot advise clients on what I do not understand. They answered me: “Well, in that case, we have a master class especially for you”! So, I had to remove all Cisco markings from myself and go there.

I learned a lot of interesting things there. When I called one of my colleagues who was engaged in fiber-optic communication, and he asked what I can say about it all now, I told him that now, in 2002, I feel as if I returned in 1995. These switches had only a small range of options, but it was a very reliable and high-speed connection, by the standards of the time, but within all these boxes there were no services! I took a week-long course, and we disassembled and assembled the 6140 series switch. I really enjoyed the training, and I flew back on Saturday. When I came to work on Sunday and saw our new switch of the MDS 9500 series, I realized that with such equipment we would just kill the entire market of our competitors!

At that time we were completely different people, we had completely different discussions, optical fiber only entered the market, we implemented many new virtualization projects, implemented VLANs. Just then, Cisco acquired the VSANs technology (virtual storage technology) that nobody has created until then. But how could we explain all this? Yes, we could say that we implemented VSANs. But they asked us: “What is VSANs like? What kind of VLAN? The people we spoke to did not even know what Cisco was doing in terms of networking. Most of them believed that Cisco is a company that delivers products for building networks on the same principle as companies involved in the delivery of food from stores. They did not know about our capabilities, they did not know about who we are. And what have we done? We started hiring people from various EMS companies around the world who dealt with all the other things, and I worked with them and other teams, teaching them our wisdom. And I learned terribly much about data centers, as about our future, it turns out, if we take the total IT budget, then 60% of this budget is spent in data centers! Cisco could not even imagine such a thing! Our company expanded by 45-50% per year, but we could “catch only half of the fish” in the organization of data centers, so we decided to invest in this promising new direction of the market, that is, to seize “fish places” and bring valuable development to of them.

Cisco entered the data center market just before creating VMware virtual machine technology, so we began to introduce products to use this technology when creating information processing centers. It was the most successful time and the most suitable opportunity to use virtualization. But I want to say that we did not act too wisely, investing huge sums in the creation of servers for clients over the past 15 years. But let's go directly to our topic. Let's start with applications - in terms of their importance, applications are located at the top of the requirements for network equipment. If you are a vice president and you need to ensure a 12% growth of your company without attracting additional staff, you need to use more productive and functional applications. Today, you can buy a ready-made software product based on the self-development platform for salesforce.com, but what if you need to create something unique for your internal needs? You have to be prepared for the fact that it will be necessary to invest a lot of money in developing what exactly your business project needs, but you can then own it.

Let it be an application for the sales department. And the first thing that should be there is a database, which will contain data for all accounts. And I will need to determine the requirement for the storage that will store and process all this data, be able to create backup copies, etc. And for this, I need a network, 2 switches that connect me to the SAN storage. Then I will need servers that can be with Windows, Linux or Unix, depending on other software required for the applications to work.

And if I have servers, then I still need two load balancing devices for the SLB servers and 2 firewalls for protection. And then I have to make it all available via an IP network, where everyone can use this application. All this is necessary for you, and all this costs money.

Now let's talk about the production. We are going to sell more widgets, which means we need to produce more widgets. But we are not going to build more production capacity for this. We need to make current production more intelligent, optimize it. And again we turn to applications, perhaps to those that will help ensure timely delivery or any other. But it will be essentially a similar infrastructure next to ours, which will be included in our IP network.

Customer service, let's talk about it now. Maybe we want to deploy some applications that will help us serve them. And we will deploy the third infrastructure in the same way and connect it to our IP network.

Why do we make them separate? Control, the solution of a particular problem, various sources of funding, right? And I can make them with the help of equipment from different manufacturers. Because again, the one who decides on the infrastructure for the operation of an application can decide on what it will work. Different types of servers and everything else may be required. Everything is funded differently. And different technical problems can be here.

The SAN uses the Fiber Channel Adapter Protocol for operation, which works here in host bus adapters. This solution is sold in a holistic manner. And it was sold to many manufacturers - EMC, HP, HDS, NetApp. It eventually passed the certification, it uses Brocade or McDATA switches, which cost no more than the "tax". Since the cost of such a decision may be $ 3 million. No one worries about the cost of two switches. SAN paid a “tax” for using third-party switches. And the use of these switches was due to the fact that they provided a fairly quick connection of servers and storage. But there was no intelligence in the vault. And because they are interested in EMC, HP, HDS, netApp, since 80% of their income is the development of applications for existing hardware solutions that are for them only for sale.

And Cisco also decided to participate. But the fact is that the fiber-optic protocol is a “hard” protocol, unforgiving, guaranteeing a very fast transport of a huge amount of information to the right place, a very cool protocol, but not at all like Ethernet. When you connect to a fiber switch, a three-step connection process occurs, called: FLOGI (fabric login), PLOGI (port login), and PRLI (process login). You are connecting to a "factory" fiber network. And if I need to “submit” a new server to the SAN storage for everyone else, connect it, all processes stop, no one can use the network while the F-login process is in progress. What happens to the applications? They stop their work. Therefore, if I deploy such an infrastructure, I must be sure that no additional servers will be added to it. I want to keep them independent. That is why, if the solution is small - they bought small Brocade switches, and for larger ones - McData. That's all. They didn't bother much. 80% of McData’s business was concentrated here, they provided special prices and were actually owned by EMC.

That's why Cisco decided to participate, as we thought we could change that. Cisco began to "rock the boat", developing the technology of intelligent traffic management process. Since everyone built these infrastructures of the same type, a lot of people. People bought storage for a variety of tasks, just think, I buy storage for 20 TB here, 10 TB here and 8 TB here. And what is my recycling of these stores? 40%! Imagine you come to CFO and say, we want to spend another half a million dollars and buy more storage, as we need more space, but we only recycle the current by 40%. Do not tell him this, do not say! It's all the same to buy a gallon of milk (3.78 liters), drink 2 large glasses and say when more than half the bottle is left, that we need to buy more. We do not want to do this, but this is how these people do, it is ineffective. Ports on SAN switches are less than 40% full. We buy them, but we do not use them.

And the worst thing that could happen in this case was the appearance of multicore processors in the servers. Every 3 years, people bought new servers, updating old ones, but the new servers had a much more powerful processor, and the price remained the same. It turned out that your applications do not require such power to work, they have enough performance of old processors, but you bought new servers with multi-core processors that you were not able to load at full capacity. What happened in this case with the server? Its utilization rate fell, its effectiveness fell. We reached values of 10-15% on some servers, because we deployed only 1 application on the server. SLBs were used at 40% power, firewalls at 40% power, and so on. But we needed to have several servers to ensure reliability at the expense of redundancy, as well as to be able to scale.

Meanwhile, the LAN switches were more than 80% utilized, because we had virtualized them and used them for several applications. I have one application and it needs a port, so I create a VLAN for it; then I get a second application that also needs a port, and I create a second VLAN. The advantage of this technology is that I can assign as many VLANs as I want to one port. So I do not need to buy a new switch every time I need a new VLAN. We virtualized this many years ago, in the mid-90s.

Virtualization happened here. We "pushed" this technology into the data center segment and called it "switch consolidation through virtualization". We brought the MDS 9500 switches to market and told our customers to stop buying all this "small stuff" serving one port. We offered them one large device that could create virtual networks of any scale, and called the technology VSAN. In the drawing I will combine the three SAN switches into one VSAN switch.
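The idea of one chassis carrying several isolated fabrics can be sketched in a few lines of Python. This is only an illustration of the concept as described above: the `ConsolidatedSwitch` class, the port numbers and the VSAN IDs 10, 20 and 30 are hypothetical and do not reflect how an MDS switch is actually configured.

```python
class ConsolidatedSwitch:
    """Hypothetical model: one chassis whose ports are split into VSANs."""

    def __init__(self, port_count):
        # None means the port is not assigned to any VSAN yet.
        self.port_vsan = {port: None for port in range(1, port_count + 1)}

    def assign(self, port, vsan_id):
        if port not in self.port_vsan:
            raise ValueError(f"no such port: {port}")
        self.port_vsan[port] = vsan_id

    def can_talk(self, port_a, port_b):
        # Ports see each other only inside the same VSAN.
        vsan_a = self.port_vsan[port_a]
        return vsan_a is not None and vsan_a == self.port_vsan[port_b]

    def ports_in_use(self):
        return sum(1 for vsan in self.port_vsan.values() if vsan is not None)


# Three formerly separate SAN islands share one 8-port chassis.
switch = ConsolidatedSwitch(port_count=8)
for port, vsan in [(1, 10), (2, 10), (3, 20), (4, 20), (5, 30), (6, 30)]:
    switch.assign(port, vsan)
```

Ports 1 and 2 can reach each other, ports 1 and 3 cannot, and the spare ports 7 and 8 remain available to whichever VSAN grows next instead of being stranded in a separate small switch.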

Our biggest problem was not technical; it was how to explain these technologies to customers. The first sales of our switches began only in December 2002. I even ran a two-day master class on storage to explain the benefits of virtualization. And then VMware began to gain popularity; some of you may remember this. They were smart and fearless enough, maybe they were even drunk when they did it for the first time, to think that it was possible to virtualize the server. Instead of running one application on one server, we can load onto it a program called a hypervisor and run many applications on one server independently of each other. One physical server could simultaneously run Microsoft applications, Linux applications and programs from other vendors. Previously this would have required a separate server for Microsoft, a separate server for Linux, and so on. Then people remembered multi-core processors and said: "Oh, now we will need them!" VMware technology began to be used for server virtualization.
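A rough sizing sketch shows why this mattered for servers running at 10-15%. It is written in Python under loud assumptions that are mine, not the lecture's: application loads simply add up, all servers are identical, the hypervisor costs nothing, and 80% is a reasonable target utilization. Redundancy, which the lecture says still requires several servers, is ignored here.

```python
import math


def hosts_needed(app_loads, target_utilization=0.8):
    """Estimate how many virtualized hosts can carry the given apps.

    app_loads: fraction of one server each application uses (0.12 = 12%).
    target_utilization: assumed ceiling for a consolidated host.
    """
    total_load = sum(app_loads)
    return max(1, math.ceil(total_load / target_utilization))


# Ten applications, each on its own server at about 12% utilization.
before = 10
after = hosts_needed([0.12] * 10)
print(f"{before} physical servers before, {after} hosts after consolidation")
```

With these numbers the ten lightly loaded servers fit on two hosts; a real design would add spare hosts for failover.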

Now consider the SLB devices, which Cisco decided to replace with the ACE module. This module, which by the way is quite expensive, became part of the Catalyst 6500 switch and distributed load evenly across the servers. ACE stands for Application Control Engine, and it provided load balancing. In 1 switch we placed 50 independent virtual ACEs, 50 independent load balancers on one "blade", and customers no longer needed to buy several physical SLBs. We replaced the firewalls with one ASA module, which contained up to 250 virtual firewalls.

Each virtual firewall followed the rules you set for a particular application, so you could protect 250 programs, each with its own conditions. And the storage manufacturers began to replace separate storage with one common virtual space, VD Space.

Such virtualization is the world's greatest invention. We eliminated the situation where 60% of the IT budget was wasted on unnecessary things. I repeat: we started by consolidating physical devices onto virtual ones, in order to then virtualize the entire process of building data centers. As you know, EMC acquired VMware and set it up as a separate company, and Cisco had to buy in, investing $150 million at the IPO. You know, I also took part in the IPO, but I did not invest $150 million like Cisco. I invested only a few thousand dollars, buying a few shares of VMware. And of course, if I had come to them and said: "I am Eddie, I want to know all your secrets", they would just have said: "Turn around and get out of here, and try not to let the door hit you on the way out." That is what they would have said. But not to Cisco. Cisco came and said: "We have invested $150 million, we own a stake and we want to know everything that is happening behind the scenes." And they were shown. And so Cisco has the advantage of seeing where things are going.

Cisco has acted as a "donor" for many IT companies. There have always been people in the company's management who thought ahead and expanded our sphere of influence through such investments. This $150 million was not a critical amount for Cisco, but it was still a significant investment for the company and made up approximately 4 to 6% of capitalization.

The introduction of virtual technologies was carried out from 2000 to 2003. This was the first wave of data center creation.

It's time to talk in detail about server virtualization. I will tell you about the things that matter to me personally as a specialist. Suppose we have three groups, each with 2 multi-tasking servers that handle multiple applications. There may be more such servers, but I do not have enough room on the board to draw them. On the right side of the board we will draw three users in different colors, each using the applications of the matching color: black, green or red. These can be Internet service providers, companies, or other customers using the network. Between these people and the servers sit all the necessary devices: load balancers (SLBs), firewalls, and so on. Each server is equipped with a network card that provides 1 Gbps, and there can be 4, 6 or even 8 cards in one server.

On the left side of the servers is the network storage space (SAN). , -, HBA, Host Bus Adapter. , 1500 2000 $ . What are they doing? , . - .

, , ? «» , HBA! . , , HBA. SCSI, . , FCSW. , .

.

, , ? ? ! - , . .

EMC , ? SRDF, « ». , , . , -. EMC , .

, - HBA «» . Cisco , FCS MDS 9500. , VSANs. , 2 VSAN, . , .

, . , , «» , 10-15% . , , . «» . VMware , .

2 8 , 16 , 384 . 4 16 48 , 12 . , . 4 16 . «», . .

, , 2 64 , . ? , « » 2 , , 2 64 .

. « » – «» , «» ! .

, «». 4 32 . , , 4 32 , «» , ! «» .

, – ! , , . «», VMware, , !

Wallmart, 30000 , , , , — -. , 3 . ? ! , ? ! 60% «», . ? Thousands!

. 2000- , . , «» , VMware.

, , , : «, , »! Cisco . , .

– , IT-, . , . « », .

- , Compact 3000, 600 . , , ! , 2 , , . , . - . - .

, «» . : « , 2 , -»! - , ! ! . - . , . Cisco . , Cisco.

. , «» 6-8 /, «» . «» , . , 8 (6-8 , 2 ) 40%, 80%! « »? . , . , Cisco. , Ethernet? CISCO. 1 Gbps Ethernet? 10 Gbps Ethernet. , , . ? , , 10 / , 20 / . , 10 / , ? , .

? Catalist 6500 ? , . ? , 10 / . Nexus. !

Nexus «» , Catalist 6500, . 10 / . Nexus 5000, 7000, «» .

, Ethernet CISCO, 10 / Ehternet-, , FCSW, ? ? - , IBM SNA, . , . Cisco : , . .

Cisco «», , – Ethernet. , . , , 10 Ethernet. , FCOE, Fibre Channel over Ethernet, . . , 12 , 2.

, . , , 2009 . Ethernet – -. , -.

, Cisco , . ? , . , . MDS 9500, , , Nexus Fibre Channel over Ethernet. . ? , Nexus . Cisco, . , , …

Continued:

FastTrack Training. "Network Basics". "Basics of data centers." Part 2. Eddie Martin. December 2012


Source: https://habr.com/ru/post/350854/
