NVDIMM nonvolatile memory for cache protection in RAIDIX 4.6

In this article we take a closer look at nonvolatile memory (NVDIMM) support in RAIDIX 4.6. The new version has already been adopted by our key partners: for example, RAIDIX 4.6 management software is used in Trinity FlexApp storage systems from Trinity.

Persistent memory and NVDIMM standard

The new RAIDIX version works with persistent memory (PMEM), which combines the advantages of traditional storage devices with the high throughput of DRAM. This type of memory is byte-addressable (load/store) and, unlike traditional block devices, operates at DRAM speed with correspondingly low latency. If the server loses power, the entire contents of the memory remain intact and are available again after reboot. Currently this type of memory is available in the form of NVDIMM modules (Non-Volatile Dual Inline Memory Module).
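To make the difference between byte addressing and block access concrete, here is a small back-of-the-envelope sketch. It assumes a 4 KiB block size for the block device and a 64-byte CPU cache line as the unit in which stores to PMEM actually reach the media; both sizes are illustrative assumptions, not figures from RAIDIX.

```python
def bytes_rewritten(offset: int, length: int, granularity: int) -> int:
    """Bytes physically rewritten when `length` bytes at `offset` change,
    if the medium is written in fixed units of `granularity` bytes."""
    if length <= 0:
        return 0
    first_unit = offset // granularity
    last_unit = (offset + length - 1) // granularity
    return (last_unit - first_unit + 1) * granularity


BLOCK_SIZE = 4096  # assumed block size of a traditional block device
CACHE_LINE = 64    # assumed CPU cache-line size; PMEM stores land per line

# Update an 8-byte counter that happens to straddle a 4 KiB boundary.
print(bytes_rewritten(4092, 8, BLOCK_SIZE))  # 8192
print(bytes_rewritten(4092, 8, CACHE_LINE))  # 128
```

A block device must read, modify and rewrite both 4 KiB blocks, while a load/store path only touches the two cache lines involved.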

NVDIMM modules are an example of combining two technologies: RAM and non-volatile memory. The standard itself is not new: it was approved a couple of years ago, and many companies have already presented memory modules with a “battery”. However, thanks to the rapid development of flash chips, modern NVDIMMs have begun to include a sufficient amount of NAND memory. Now NVDIMM not only preserves data integrity during an emergency power outage, but can also cache all data passing through RAM on the fly.


There are several types of NVDIMM: NVDIMM-N, NVDIMM-F and NVDIMM-P. An NVDIMM-N module contains both SDRAM chips (RAM) and flash memory chips that back up the RAM contents in case of a failure.

If NVDIMM-N is RAM with extended functionality, then NVDIMM-F is a kind of storage device: “F” modules have no RAM cells and contain only flash memory chips. NVDIMM-P combines the functions of NVDIMM-F and NVDIMM-N in a single module, giving access to both DRAM and NAND at the same level. All three configurations can significantly increase performance for big data, HPC and similar workloads.

In 2017 there was a breakthrough of sorts for NVDIMM in the server segment: Micron introduced new 32 GB modules. They run at DDR4-2933 with CL21 latency, which is faster than most other DDR4 memory for server applications. 8 GB and 16 GB modules had been released earlier.
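To put the CL21 figure into absolute terms, the sketch below converts a CAS latency in clock cycles to nanoseconds. It uses the standard DDR relation: the I/O clock runs at half the data rate, so one cycle lasts 2000 / (data rate in MT/s) nanoseconds.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR transfers data twice per clock, so the clock is data_rate / 2 MHz
    and one clock cycle lasts 2000 / data_rate nanoseconds.
    """
    return cl_cycles * 2000 / data_rate_mts


print(round(cas_latency_ns(21, 2933), 2))  # 14.32
```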

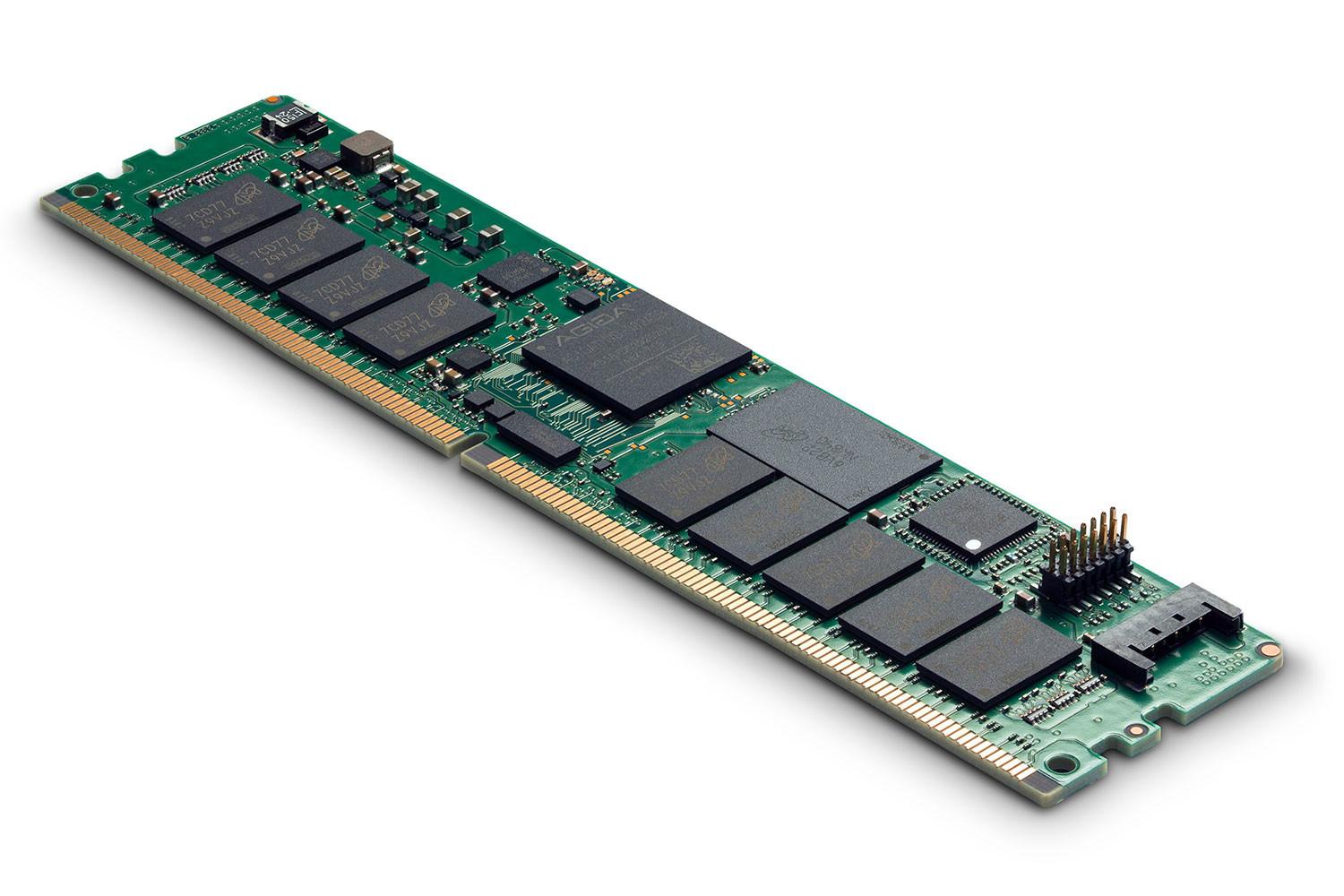

Fig. 1 Micron 32GB DDR4 NVDIMM-N module

Micron's NVDIMM-N is 32 GB of ECC DRAM that is fully accessible to the host; the NAND flash is used only for data backup.

Accessing NVDIMM

There are two main ways to access memory on NVDIMM:

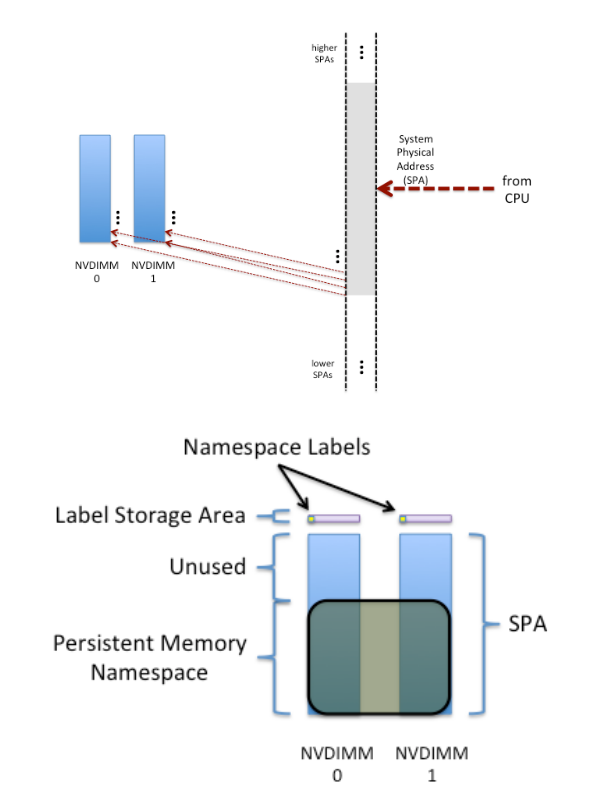

1. Direct access with PMEM

Direct access to modules using PMEM without consolidating into a single space is used in RAIDIX 4.6. In this case, the physical address space of the NVDIMM (DPA) is aligned with the physical address space of the SPA system. If there are several NVDIMM bars in the system, the memory controller can display them at its discretion. For example:

Fig. 2 Direct Access Scheme with PMEM

Accordingly, access to the modules will be carried out as a single entity. This is not always the preferred option. In some cases, it is necessary to access each strip separately, for example, to assemble a RAID from them. For such tasks, there is a second mode.
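One common way for a memory controller to present several modules as one range is interleaving. The article does not say which mapping is used, so the sketch below simply assumes round-robin interleaving with a hypothetical 4 KiB granularity and translates an offset inside the interleaved SPA range into a (module, DPA) pair.

```python
from typing import Tuple


def spa_offset_to_dimm(offset: int, num_dimms: int,
                       granularity: int = 4096) -> Tuple[int, int]:
    """Translate an offset inside an interleaved PMEM region into
    (DIMM index, DPA offset on that DIMM).

    Assumes plain round-robin interleaving: consecutive `granularity`-byte
    chunks go to DIMM 0, 1, ..., num_dimms - 1 and then wrap around.
    """
    chunk, within_chunk = divmod(offset, granularity)
    dimm = chunk % num_dimms
    dpa = (chunk // num_dimms) * granularity + within_chunk
    return dimm, dpa


# Two modules interleaved at 4 KiB: adjacent 4 KiB chunks of the
# system address range alternate between the modules.
for off in (0, 4096, 8192, 12388):
    print(off, spa_offset_to_dimm(off, num_dimms=2))
# 0 (0, 0)
# 4096 (1, 0)
# 8192 (0, 4096)
# 12388 (1, 4196)
```

Because a single contiguous write can land on several modules, this layout suits a "single entity" view but not building a RAID out of individual modules.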

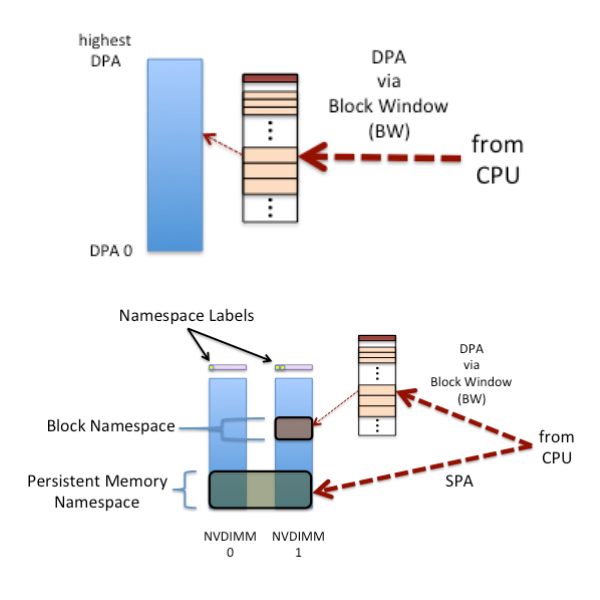

2. Access using BLK apertures

Access is carried out to each bar separately using the so-called “windows” of access:

Fig. 3 Access scheme using BLK apertures
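The sketch below is a deliberately simplified toy model of aperture access, not the register interface defined by ACPI/NFIT: the host never maps a module's media directly, it selects a target DPA through a (modeled) control register and moves at most one window's worth of data at a time. The class and function names and the 4 KiB window size are hypothetical.

```python
class BlkWindow:
    """Toy model of aperture-based (BLK) access to a single NVDIMM."""

    def __init__(self, media_size: int, window_size: int = 4096):
        self._media = bytearray(media_size)  # module media, not host-mapped
        self.window_size = window_size
        self._dpa = 0  # DPA selected by the modeled control register

    def select(self, dpa: int) -> None:
        """Program the control register with the target DPA."""
        if not 0 <= dpa < len(self._media):
            raise ValueError("DPA outside the module")
        self._dpa = dpa

    def write(self, data: bytes) -> None:
        """Store bytes through the aperture at the selected DPA."""
        if len(data) > self.window_size or self._dpa + len(data) > len(self._media):
            raise ValueError("transfer does not fit the window/module")
        self._media[self._dpa:self._dpa + len(data)] = data

    def read(self, length: int) -> bytes:
        """Load bytes through the aperture from the selected DPA."""
        if length > self.window_size or self._dpa + length > len(self._media):
            raise ValueError("transfer does not fit the window/module")
        return bytes(self._media[self._dpa:self._dpa + length])


def write_block(win: BlkWindow, dpa: int, data: bytes) -> None:
    """Write a buffer of any length one window-sized chunk at a time."""
    for off in range(0, len(data), win.window_size):
        win.select(dpa + off)
        win.write(data[off:off + win.window_size])


def read_block(win: BlkWindow, dpa: int, length: int) -> bytes:
    """Read a buffer of any length one window-sized chunk at a time."""
    out = bytearray()
    for off in range(0, length, win.window_size):
        win.select(dpa + off)
        out += win.read(min(win.window_size, length - off))
    return bytes(out)


# Each module has its own window, so modules stay independent
# (e.g. as separate members of a software RAID).
dimm0, dimm1 = BlkWindow(1 << 20), BlkWindow(1 << 20)
payload = bytes(range(256)) * 40  # 10240 bytes -> three window transfers
write_block(dimm0, 8192, payload)
print(read_block(dimm0, 8192, len(payload)) == payload)  # True
print(read_block(dimm1, 8192, 16) == bytes(16))          # True
```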

Modern NVDIMM modules often support both of these modes simultaneously. As on NVMe devices, namespaces are used for this. In addition, if the NVDIMMs are described in the NFIT (NVDIMM Firmware Interface Table), special headers (labels) are stored at the beginning of each module; they divide the address space into areas with different access modes (BLK or PMEM).

It is extremely important that these areas do not overlap: concurrent access to the same area by different methods will most likely corrupt data. (You can read more about NFIT in the ACPI 6.1 specification.)
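The non-overlap requirement is easy to check mechanically. The sketch below uses a hypothetical, simplified label record (name, mode, start, size); real namespace labels carry more fields and a different on-media format. It sorts the regions by start address and reports every pair whose half-open intervals intersect.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class LabeledRange:
    """A region described by a namespace label (simplified, hypothetical fields)."""
    name: str
    mode: str   # "PMEM" or "BLK"
    start: int  # first DPA byte of the region
    size: int   # length in bytes

    @property
    def end(self) -> int:  # exclusive
        return self.start + self.size


def find_overlaps(ranges: List[LabeledRange]) -> List[Tuple[str, str]]:
    """Return pairs of regions whose [start, end) intervals intersect."""
    ordered = sorted(ranges, key=lambda r: r.start)
    overlaps = []
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.start >= a.end:
                break  # sorted by start: no later region can overlap `a`
            overlaps.append((a.name, b.name))
    return overlaps


labels = [
    LabeledRange("ns0", "PMEM", 0, 16 << 30),
    LabeledRange("ns1", "BLK", 16 << 30, 8 << 30),
    LabeledRange("ns2", "BLK", 20 << 30, 4 << 30),  # collides with ns1
]
print(find_overlaps(labels))  # [('ns1', 'ns2')]
```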

NVDIMM-based write cache protection in RAIDIX 4.6

Space organization

Before version 4.6, every block update by the master controller in RAIDIX was accompanied by a synchronous copy of the block into the RAM of the slave controller. In the event of a power outage, the UPS had to hold a significant amount of energy, enough for the cluster to save all block copies to disk before shutting down completely. When power returned, the batteries needed significant time to recharge before the cluster could be brought back into operation. The total downtime depended both on the cluster's power consumption and on the battery capacity, which in turn depended on maintenance quality and storage conditions.

In single-controller mode, there was a risk of data loss due to emergency reboots and insufficient battery capacity in backup power sources as the solution was scaled vertically.
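A rough model shows why the pre-4.6 scheme tied UPS sizing to the whole cluster. Assuming (as a simplification, not a RAIDIX formula) that the cluster keeps drawing its full power until the volatile cache has been flushed to disk, the required energy is power × (dirty data / flush rate). All numbers below are hypothetical.

```python
def ups_energy_required_j(cluster_power_w: float, dirty_bytes: float,
                          flush_rate_bytes_s: float) -> float:
    """Energy the UPS must deliver so the cluster can flush its volatile cache.

    Simplified model: the whole cluster draws `cluster_power_w` until every
    dirty block has been written to disk at `flush_rate_bytes_s`.
    """
    flush_time_s = dirty_bytes / flush_rate_bytes_s
    return cluster_power_w * flush_time_s


# Hypothetical cluster: 2 kW, 64 GB of dirty cache, disks absorb 2 GB/s.
energy = ups_energy_required_j(2000, 64e9, 2e9)
print(energy)                   # 64000.0 (joules)
print(round(energy / 3600, 1))  # 17.8 (watt-hours)
```

Both a more powerful cluster and a larger cache raise the requirement proportionally, which is why vertical scaling meant re-evaluating the backup power.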

The new functionality in version 4.6 simplifies system maintenance and removes the need for redundant hardware components. How is this implemented technically?

NVDIMM serves as a more reliable place to store our cache than ordinary RAM. To use it, the area must be mapped into the virtual address space so that the mapping covers its entire size.
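A minimal sketch of such a mapping, assuming a file on a DAX-capable PMEM filesystem as the backing object; here a temporary file stands in for it so the example runs anywhere. The whole area is mapped at once, stores are byte-granular, and `flush()` (msync on POSIX) makes them durable. Production PMEM code typically relies on libraries such as PMDK and CPU cache-flush instructions instead; this is only an illustration of the mapping step.

```python
import mmap
import os
import tempfile


def map_and_store(region_size: int, offset: int, data: bytes) -> bytes:
    """Map a whole region, store bytes into it and read them back."""
    # Stand-in for a file on a DAX-mounted PMEM filesystem (hypothetical).
    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, region_size)
        # One mapping covers the entire area: one virtual address range.
        with mmap.mmap(fd, region_size) as region:
            region[offset:offset + len(data)] = data  # byte-granular store
            region.flush()  # msync(): push the stores to the backing media
            return region[offset:offset + len(data)]
    finally:
        os.close(fd)
        os.remove(path)


print(map_and_store(1 << 20, 100, b"hello"))  # b'hello'
```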

Below is an approximate layout of the stored information:

Fig. 4 Layout of data and metadata

First, we divide the entire available space into several namespaces. They store the data and the metadata describing where the data belongs on the RAID. The metadata also carries identifiers that uniquely identify the data and make it possible to recover it after an emergency failure.
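The exact RAIDIX metadata format is not described, so the sketch below invents a hypothetical fixed-size record (magic, sequence number, RAID LBA, data slot, CRC32) to show the general idea: after a crash, recovery scans the metadata area, skips empty or torn records whose checksum does not match, and keeps the newest copy of each block.

```python
import struct
import zlib
from typing import Dict, Optional, Tuple

# Hypothetical record: magic (u32) | sequence (u64) | RAID LBA (u64) | data slot (u32) | CRC32 (u32)
RECORD = struct.Struct("<IQQII")
MAGIC = 0x52444D44  # arbitrary marker chosen for this sketch


def pack_record(seq: int, lba: int, slot: int) -> bytes:
    body = struct.pack("<IQQI", MAGIC, seq, lba, slot)
    return body + struct.pack("<I", zlib.crc32(body))


def unpack_record(raw: bytes) -> Optional[Tuple[int, int, int]]:
    magic, seq, lba, slot, crc = RECORD.unpack(raw)
    if magic != MAGIC or zlib.crc32(raw[:-4]) != crc:
        return None  # empty or torn record: ignore it during recovery
    return seq, lba, slot


def recover(metadata_area: bytes) -> Dict[int, int]:
    """Rebuild the LBA -> data slot map; the highest sequence number wins."""
    newest: Dict[int, Tuple[int, int]] = {}
    for off in range(0, len(metadata_area) - RECORD.size + 1, RECORD.size):
        rec = unpack_record(metadata_area[off:off + RECORD.size])
        if rec is None:
            continue
        seq, lba, slot = rec
        if lba not in newest or seq > newest[lba][0]:
            newest[lba] = (seq, slot)
    return {lba: slot for lba, (_, slot) in newest.items()}


area = bytearray(RECORD.size * 4)  # the fourth record stays zeroed (unused)
area[0:RECORD.size] = pack_record(seq=1, lba=1000, slot=0)
area[RECORD.size:2 * RECORD.size] = pack_record(seq=2, lba=2000, slot=1)
area[2 * RECORD.size:3 * RECORD.size] = pack_record(seq=3, lba=1000, slot=2)
print(recover(bytes(area)))  # {1000: 2, 2000: 1}
```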

What memory to use?

In this article we focus on how RAIDIX 4.6 interacts with Micron's NVDIMM-N persistent memory. As noted above, NVDIMM preserves data integrity even during an emergency power outage. Micron's implementation combines DRAM performance with the stability and reliability of NAND memory, ensuring data integrity and business continuity.

In the event of a failure, the NVDIMM's internal controller moves the data stored in DRAM to the non-volatile memory area. When the system is restored, the controller loads the data from NAND back into DRAM, allowing applications to continue working. An AgigA Tech PowerGEM ultracapacitor can serve as the backup power source for the Micron NVDIMM.
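The save/restore cycle described above can be sketched as a toy state model. This is an illustration only: the class name and the `backup_power_ok` flag are hypothetical, and the real module controller works autonomously in hardware.

```python
class NvdimmNModel:
    """Toy model of the NVDIMM-N save/restore cycle (illustration only)."""

    def __init__(self, size: int):
        self.dram = bytearray(size)  # host-visible, volatile
        self.nand = bytearray(size)  # backup copy, not host-visible
        self.image_valid = False     # does NAND hold a complete saved image?

    def power_loss(self, backup_power_ok: bool = True) -> None:
        # The module's own controller copies DRAM to NAND on backup power.
        if backup_power_ok:
            self.nand[:] = self.dram
        self.image_valid = backup_power_ok
        # Either way, DRAM loses its contents once power is gone.
        self.dram = bytearray(len(self.dram))

    def power_restore(self) -> None:
        # Before the host uses the memory, the saved image is loaded back.
        if self.image_valid:
            self.dram[:] = self.nand
            self.image_valid = False


module = NvdimmNModel(64)
module.dram[0:5] = b"dirty"
module.power_loss()
module.power_restore()
print(bytes(module.dram[0:5]))  # b'dirty'
```

If the backup power source cannot complete the copy (`backup_power_ok=False`), the DRAM contents are lost, which is why the energy reserve matters.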

Fig. 5 NVDIMM module with AgigA PowerGEM ultracapacitor

In Micron's implementation, NVDIMM technology combines volatile and non-volatile memory (NAND flash, DRAM and an autonomous power source in the memory subsystem). Micron's DDR4 NVDIMM-N offers DRAM-speed reads and writes and backs up DRAM data if the power supply is lost.

Below is a little more about how data is transferred without loss.

Data transfer process

In the new version, the system writes data taking into account the state of the supercapacitors (warranted for up to 5 years), which power only the NVDIMM-N modules. Data integrity depends on how accurately the system estimates the energy reserve required to transfer data to non-volatile memory.
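A simple energy check illustrates what such an estimate involves. The usable energy of a capacitor discharging from $V_{max}$ to $V_{min}$ is $\tfrac{1}{2}C(V_{max}^2 - V_{min}^2)$; it must cover the module's power draw for the whole save, plus a margin. The capacitance, voltages, power, save time and 20% margin below are hypothetical values chosen for illustration, not Micron or AgigA specifications.

```python
def usable_energy_j(capacitance_f: float, v_max: float, v_min: float) -> float:
    """Energy released as a capacitor discharges from v_max down to v_min
    (below which the power circuitry is assumed to stop working):
    E = 1/2 * C * (v_max**2 - v_min**2)."""
    return 0.5 * capacitance_f * (v_max ** 2 - v_min ** 2)


def save_is_covered(capacitance_f: float, v_max: float, v_min: float,
                    save_power_w: float, save_time_s: float,
                    margin: float = 1.2) -> bool:
    """True if the capacitor can power a full DRAM-to-NAND save with a margin."""
    required_j = save_power_w * save_time_s * margin
    return usable_energy_j(capacitance_f, v_max, v_min) >= required_j


# Hypothetical: 5 W during a 60 s save, charged to 5.0 V, usable down to 2.5 V.
print(usable_energy_j(50, 5.0, 2.5))         # 468.75
print(save_is_covered(50, 5.0, 2.5, 5, 60))  # True: fresh 50 F capacitor
print(save_is_covered(30, 5.0, 2.5, 5, 60))  # False: aged down to 30 F
```

As the capacitor ages and loses capacitance, the same save may no longer be covered, which is why the capacitor state has to be tracked.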

Persistent memory combines the advantages of traditional storage devices with the high throughput of DRAM. Its distinguishing feature is byte addressing at high speed and with very low latency.

Within one minute of an emergency power outage, the NVDIMM-N modules independently copy data from DRAM to NAND. The transfer is accompanied by a corresponding indication. Once the transfer is complete, the modules can be removed from the failed controller and installed in a working one, just like ordinary DIMM modules. This capability is relevant for all single-controller configurations, from budget disk-based systems to specialized all-flash ones.
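As a back-of-the-envelope check, the one-minute window sets a lower bound on the average DRAM-to-NAND rate a module must sustain. The sketch assumes a full 32 GB module image (the capacity mentioned earlier) has to be saved within 60 seconds.

```python
def min_save_rate_gb_s(dram_gb: float, deadline_s: float) -> float:
    """Lowest average DRAM-to-NAND rate that completes a full save in time."""
    return dram_gb / deadline_s


# A 32 GB module saved within the one-minute window.
print(round(min_save_rate_gb_s(32, 60), 3))  # 0.533 (GB/s)
```

Since each module saves its own DRAM independently, adding modules to a controller should not raise this per-module figure, assuming every module has its own backup power.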

What is the advantage?

In a dual-controller configuration, any NVDIMM-N module can be replaced together with its controller at any convenient time, without interrupting data access. NVDIMM coherence can be ensured not only in software but also in hardware. As a result, there is no need to maintain batteries (BBUs in older RAID controllers, or UPSs). Vertical and horizontal scaling of the solution no longer requires reassessing the risk of data loss!

Features of RAIDIX 4.6-based Trinity FlexApp storage

As part of the Trinity FlexApp storage system, each controller is a regular server with NVDIMM-N modules installed in the memory slots:

Fig. 6 Trinity FlexApp storage components

100 Gbps support

The system lets administrators connect Linux client machines over high-performance 100 Gbps Mellanox ConnectX-4 InfiniBand interfaces. This ensures minimal latency and higher performance in big data, HPC and enterprise environments. The software also brings a number of usability and resource management improvements.

Cluster-in-a-box

RAIDIX-based Trinity FlexApp storage systems support heterogeneous clusters in Active-Active mode. This makes it possible to scale the system vertically without interrupting data access and to quickly replace controllers with newer, more powerful ones, minimizing current and future risks to the development of the system as a whole.

High performance and data protection

The key tasks of the system are secure, consistent and efficient simultaneous access to data for specific user groups and concurrent connections, based on corporate policies and directories. In addition, RAIDIX includes the Silent Data Corruption Protection mechanism, which protects against disk-level data corruption (read interference, resonance caused by vibration and shock).

RAIDIX solutions with NVDIMM-N are already in use both in Russia and abroad, for example in HPC projects for the largest scientific cluster in Japan. Software-defined RAIDIX technology meets the needs of high-performance computing, offering low CPU requirements (up to 25 GB/s per processor core), high fault tolerance (RAID 6 as well as the proprietary RAID 7.3 and N+M levels), scalability and compatibility with Intel Lustre*.

Source: https://habr.com/ru/post/350600/
