Web application caching and performance

Caching improves the performance of web applications by reusing previously stored data, such as responses to network requests or the results of calculations. Thanks to the cache, the next time a client asks for the same data, the server can respond faster. Caching is an effective architectural pattern because most programs access the same data and instructions over and over. The technique is present at every level of a computing system: processors, hard drives, servers, and browsers all have caches.

Nick Karnik, the author of the material, the translation of which we are publishing today, offers to talk about the role of caching in the performance of web applications, having considered caching tools of different levels, starting with the lowest. He pays special attention to where the data can be cached, not how it happens.

')

We believe that understanding the features of caching systems, each of which makes a certain contribution to the speed of application response to external influences, broadens the web developer’s horizons and helps him to create fast and reliable systems.

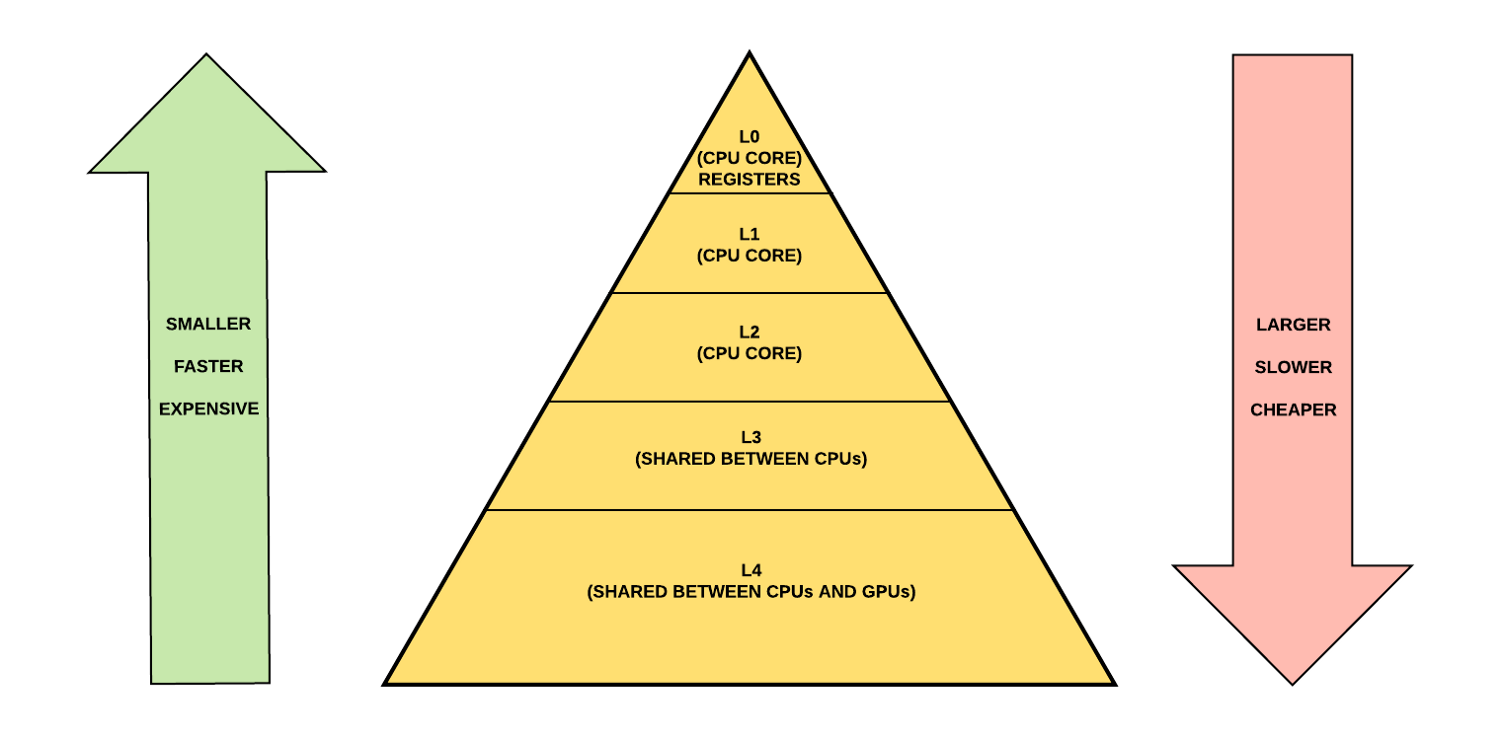

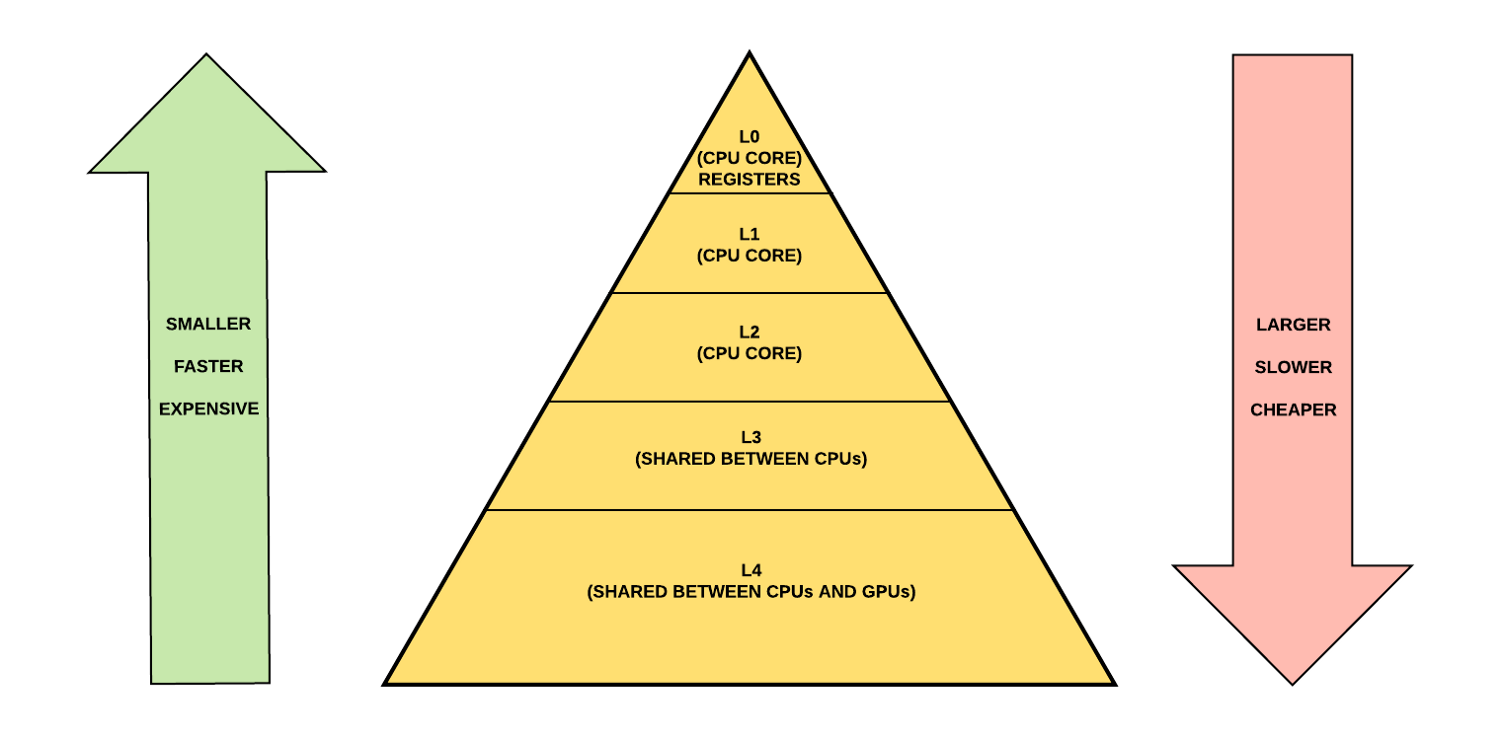

Let's start our talk about caches from the lowest level - from the processor. Processor cache memory is very fast memory that plays the role of a buffer between the processor (CPU) and random access memory (RAM). The cache memory stores data and instructions that are accessed most often, so that the processor can access all of this almost instantly.

Processors have a special memory, represented by processor registers, which is usually a small storage of information that provides extremely high data exchange rates. Registers are the fastest memory with which the processor can operate, which is located as close as possible to the rest of its mechanisms and has a small volume. Sometimes registers are called a zero-level cache (L0 Cache, L is short for Layer).

Processors, in addition, have access to several more levels of cache memory. This is up to four cache levels, which, respectively, are called first, second, third, and fourth level caches (L0 - L4 Cache). The level of the processor registers, in particular, whether it will be a zero or first level cache, is determined by the architecture of the processor and the motherboard. In addition, the system architecture determines where exactly - on the processor, or on the motherboard, the cache memory of different levels is physically located.

Memory structure in some newest CPUs

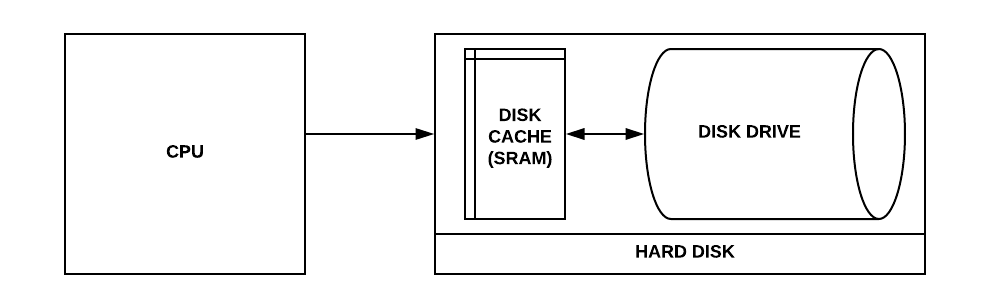

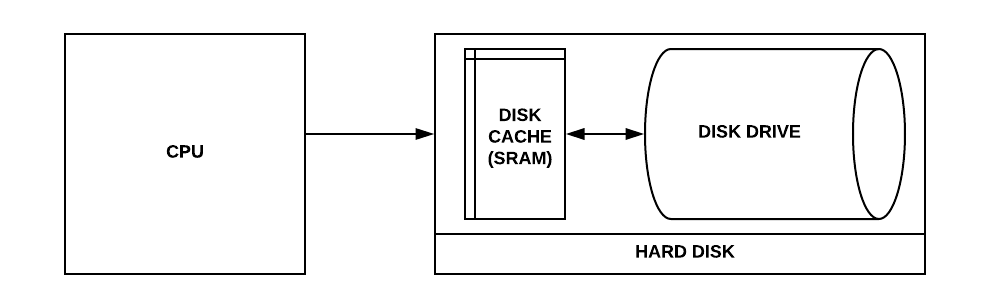

Hard disks (HDD, Hard Disk Drive) used for permanent data storage are, in comparison with RAM, intended for short-term information storage, devices are rather slow. However, it should be noted that the speed of permanent storage of information increases due to the proliferation of solid-state drives (SSD, Solid State Drive).

In long-term information storage systems, disk cache (also called a disk buffer or a cache buffer) is the memory built into the hard disk that acts as a buffer between the processor and the physical hard disk.

Hard disk cache

Disk caches work on the assumption that when something is written to the disk, or something is read from it, there is a chance that in the near future this data will be accessed again.

The difference between temporary storage of data in RAM and permanent storage on a hard disk is manifested in the speed of working with information, in the cost of carriers and in their proximity to the processor.

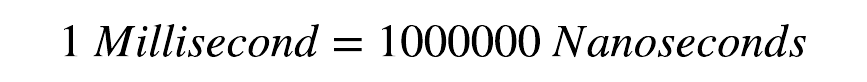

The response time of the RAM is tens of nanoseconds, while the hard disk needs tens of milliseconds. The difference in speed of disks and memory is six orders of magnitude!

One millisecond equals one million nanoseconds

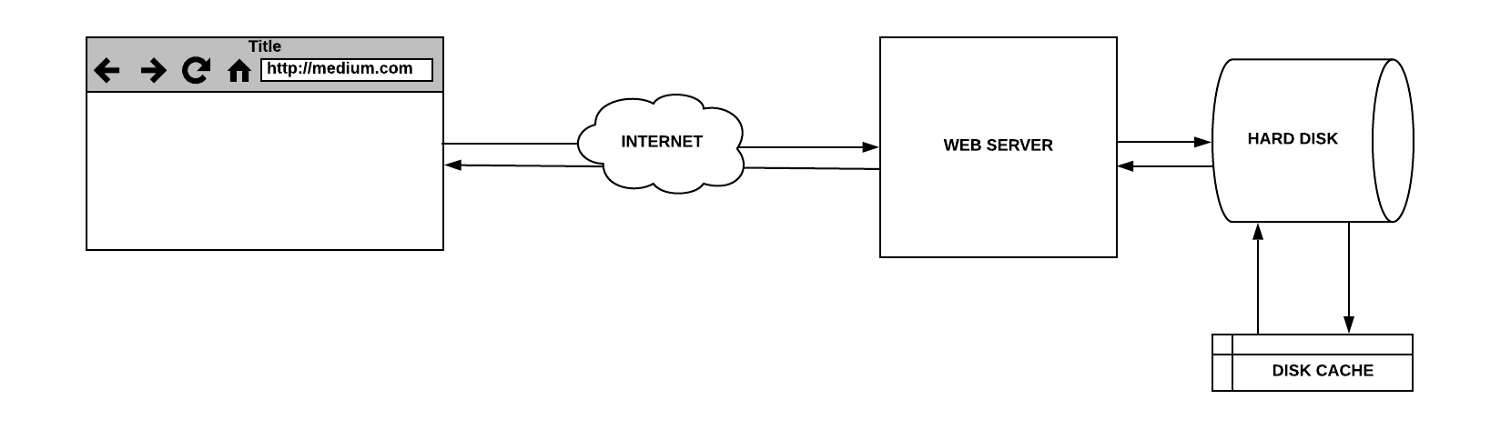

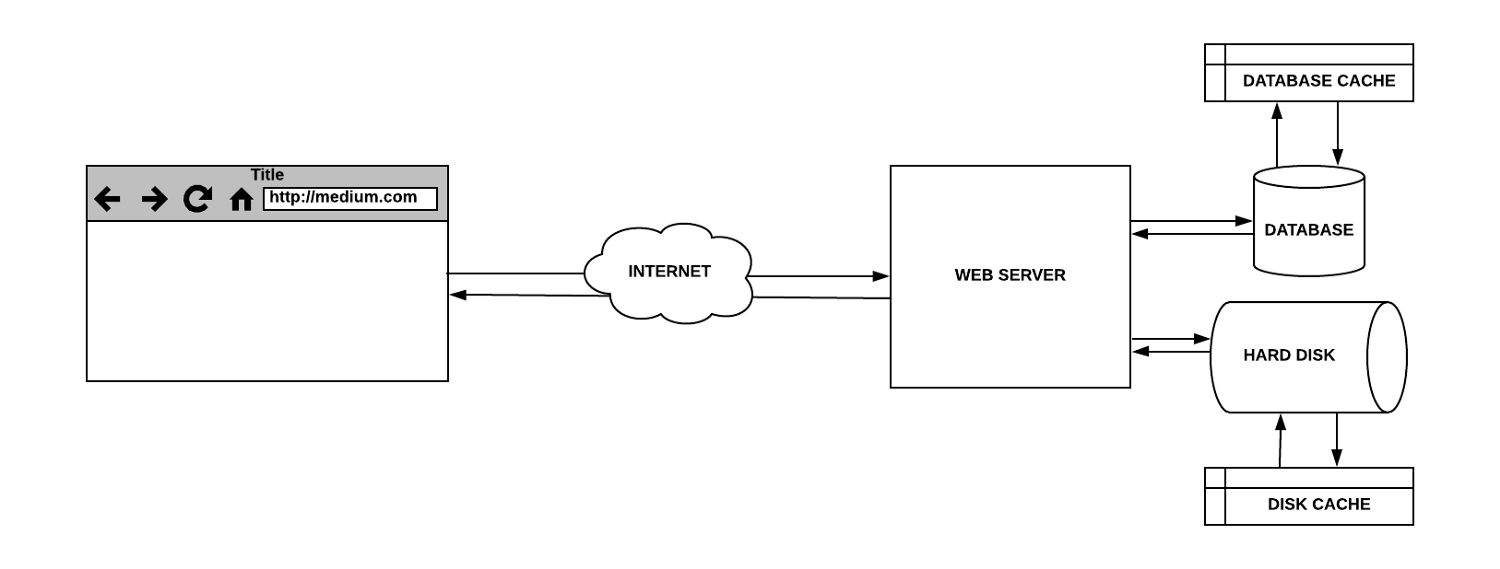

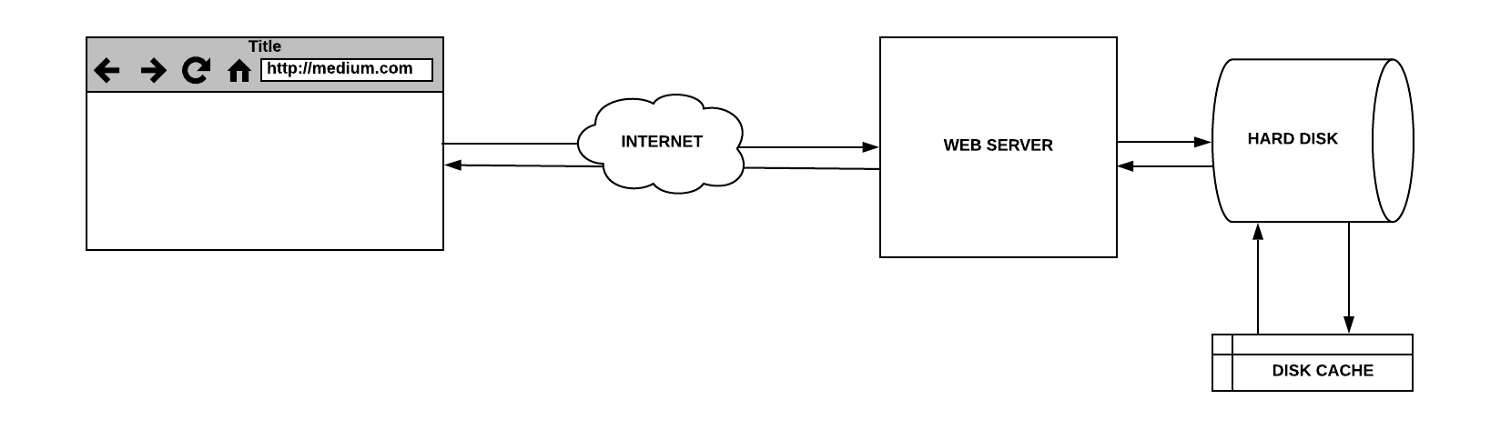

Now that we have discussed the role of caching in the basic mechanisms of computer systems, consider an example illustrating the use of caching concepts in the interaction of a client represented by a web browser and a server that, in response to client requests, sends it some data. At the very beginning we have a simple web server, which, responding to a client request, reads data from the hard disk. At the same time, let's imagine that there are no special caching systems between the client and the server. Here's what it looks like.

Simple web server

When the above system works, when the client accesses the server directly, and the latter, independently processing the request, reads the data from the hard disk and sends it to the client, it does not do without a cache, since the buffer will be used when working with the disk.

At the first request, the hard disk will check the cache, in which, in this case, there will be nothing, which will lead to the so-called “cache miss”. The data is then counted from the disk itself and will fall into its cache, which corresponds to the assumption that this data may be needed again.

With subsequent requests to get the same data, the cache search will be successful, this is the so-called “cache hit”. The data in response to the request will come from the disk buffer until they are overwritten, which, if you re-access the same data, will result in a cache miss.

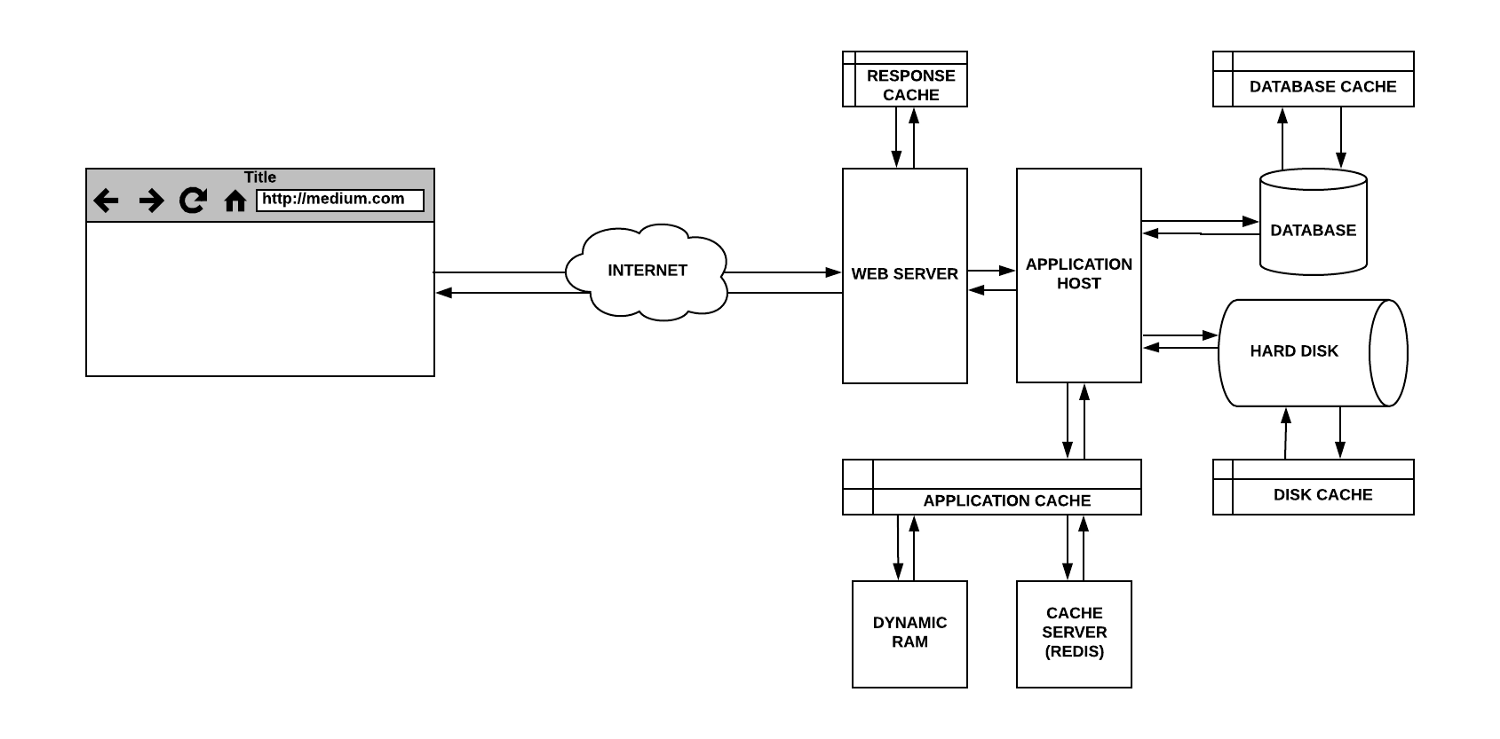

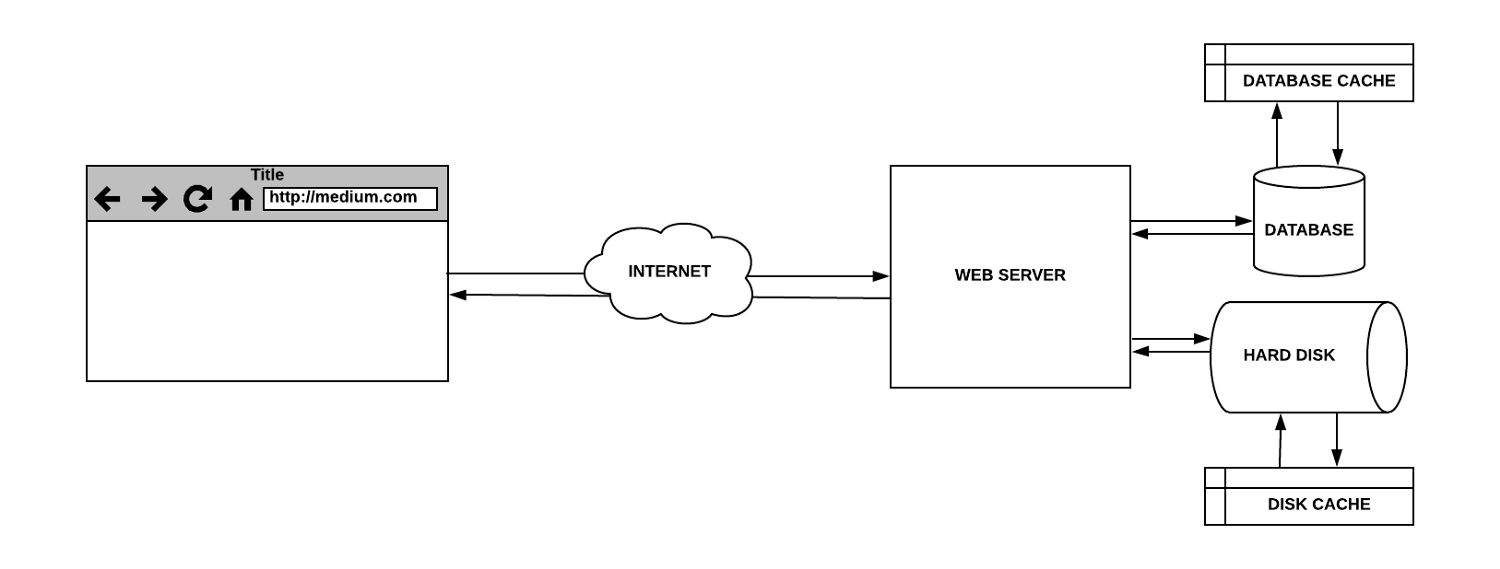

Let's complicate our example, add a database here. Database queries can be slow and require serious system resources, since the database server needs to perform some calculations to form a response. If requests are repeated, caching them with database tools will help reduce the response time. In addition, caching is useful in situations where several computers work with the database by executing the same queries.

Simple web server with database

Most database servers are configured by default for optimal caching options. However, there are many settings that can be modified so that the database subsystem better matches the features of a particular application.

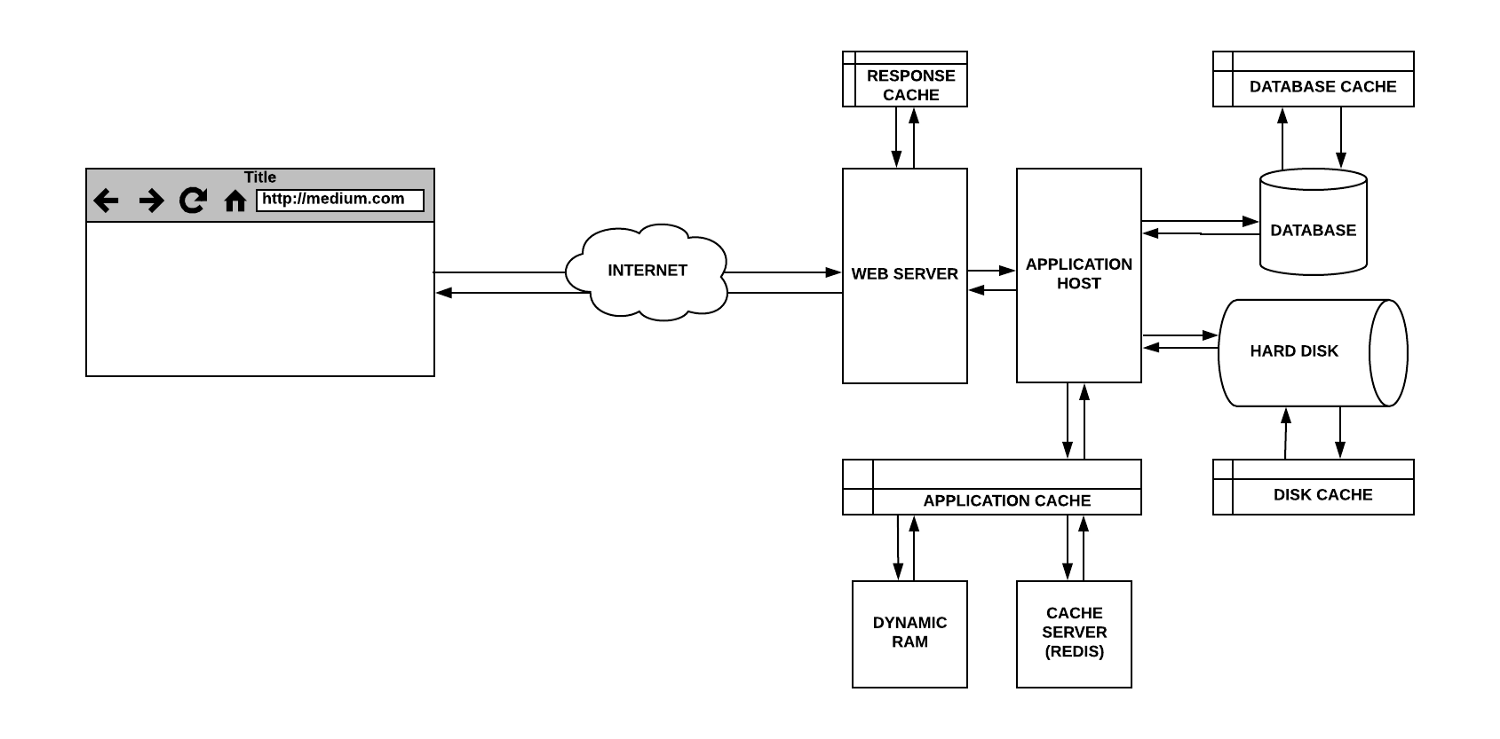

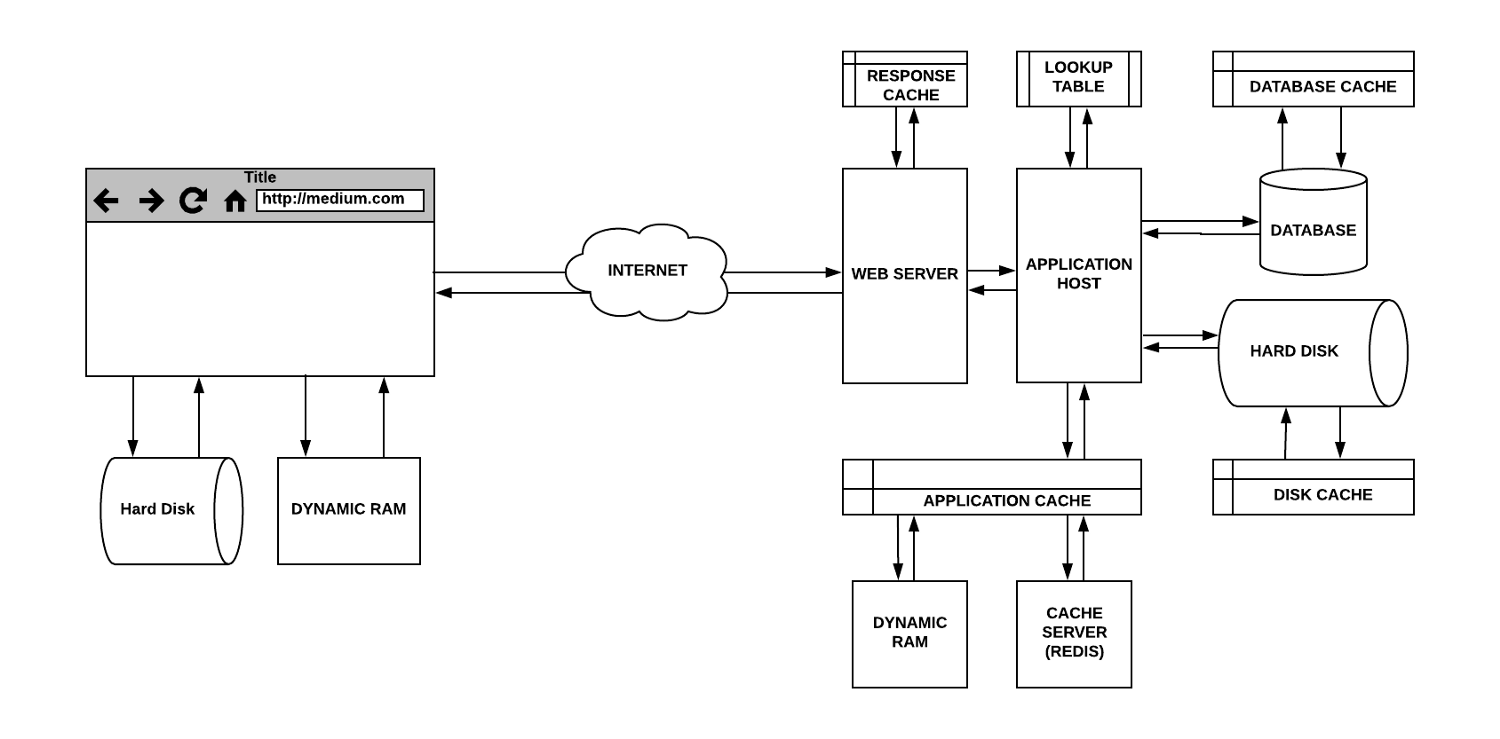

We continue to develop our example. Now the web server, formerly considered as a single entity, is divided into two parts. One of them, the web server itself, is now engaged in interaction with clients and with the server application that is already working with storage systems. The web server can be configured to cache the responses; as a result, it will not have to constantly send similar requests to the server application. Similarly, the main application can cache some parts of its own responses to resource-intensive database requests or to frequent file requests.

Answer Cache and Application Cache

Web server responses are cached in RAM. The application cache can be stored either locally, in memory, or on a special caching server that uses a database, like Redis, which stores data in RAM.

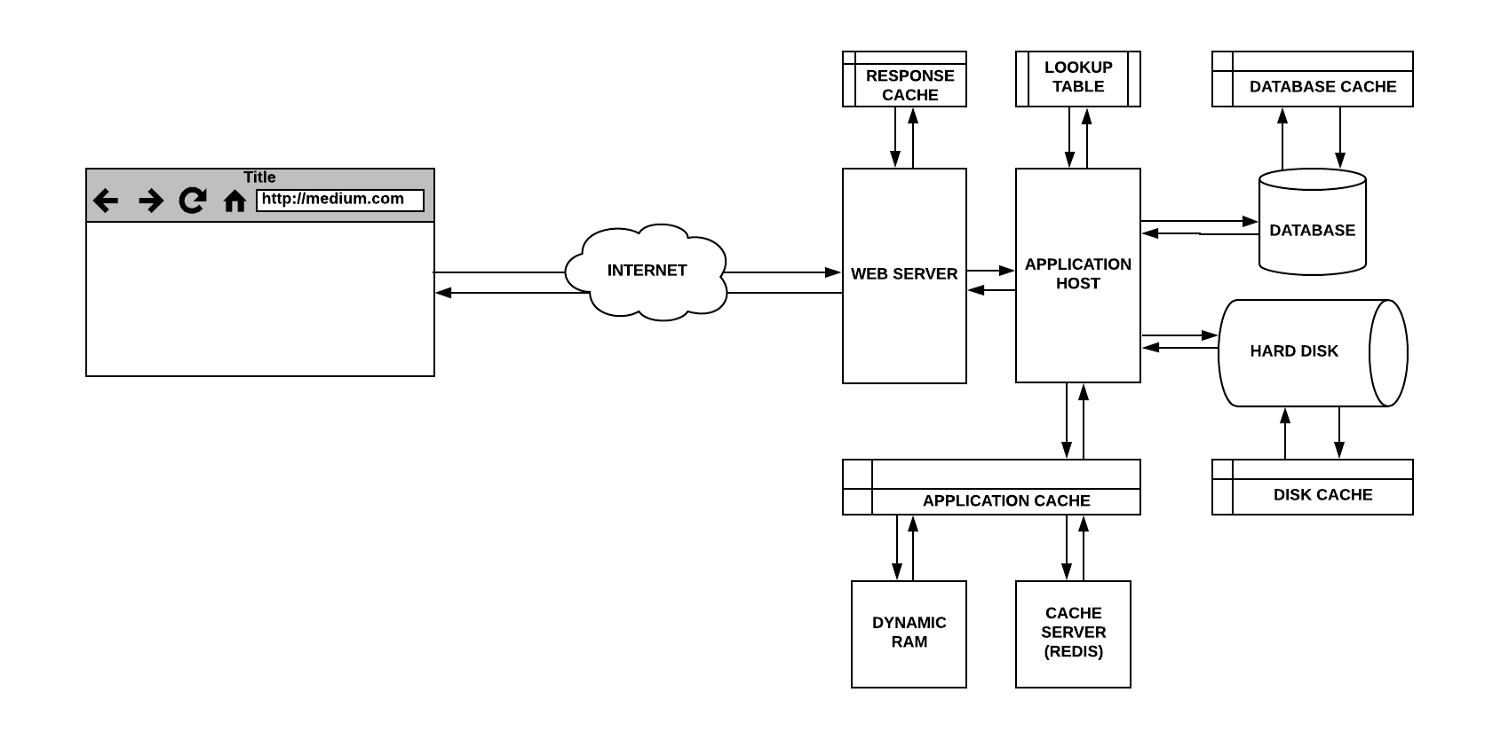

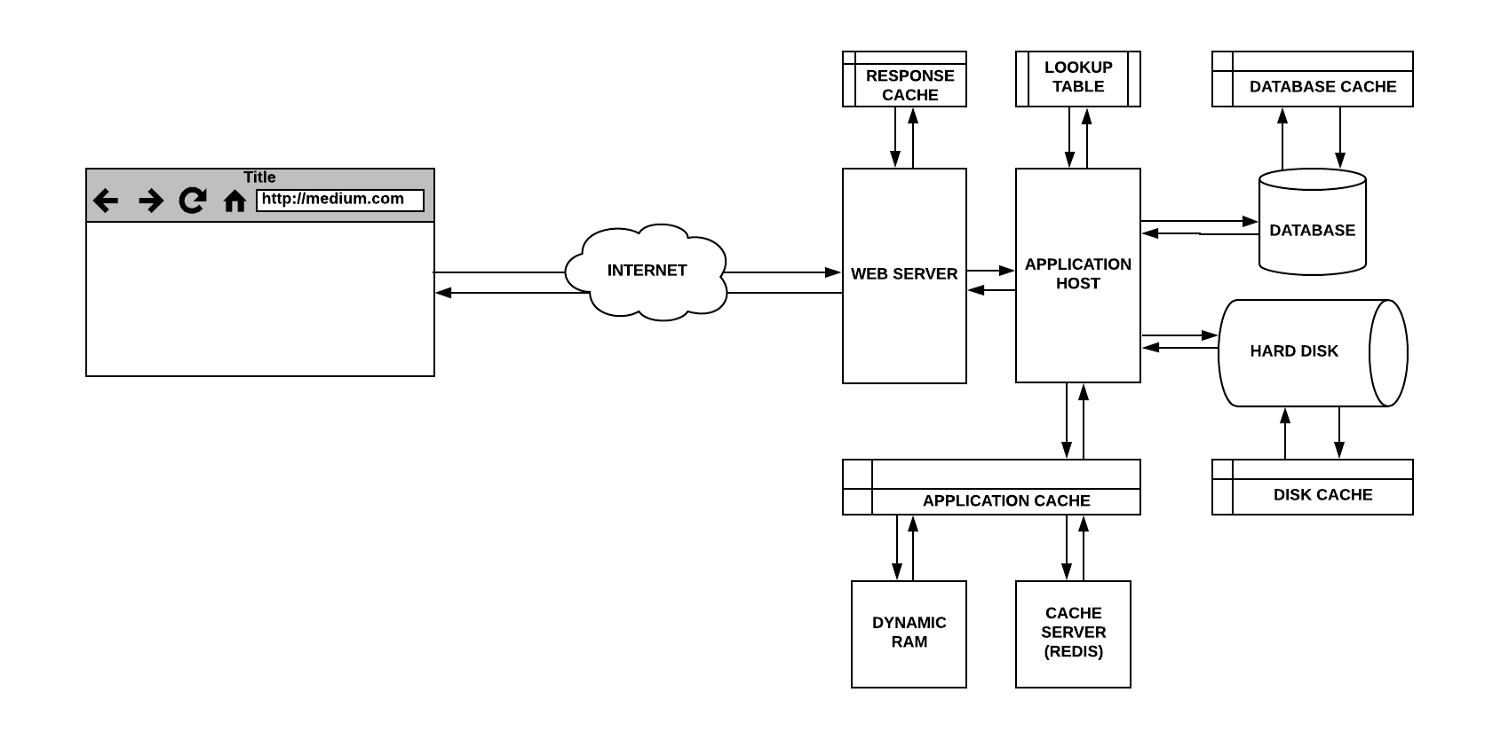

Now let's talk about optimizing the performance of the server application through memoization. This is a kind of caching used to optimize work with resource-intensive functions. This technique allows you to perform a full cycle of calculations for a certain set of input data only once, and with the following calls to a function with the same input data you can immediately output the result found earlier. Memoisation is realized by means of so-called “lookup tables”, which store keys and values. The keys correspond to the input data of the function, and the values correspond to the results that the function returns when passing this input data to it.

Memoization of a function using the lookup table

Memoization is a common technique used to improve program performance. However, it may not be particularly useful when dealing with resource-intensive functions that are rarely called, or with functions that, and without memoization, work fairly quickly.

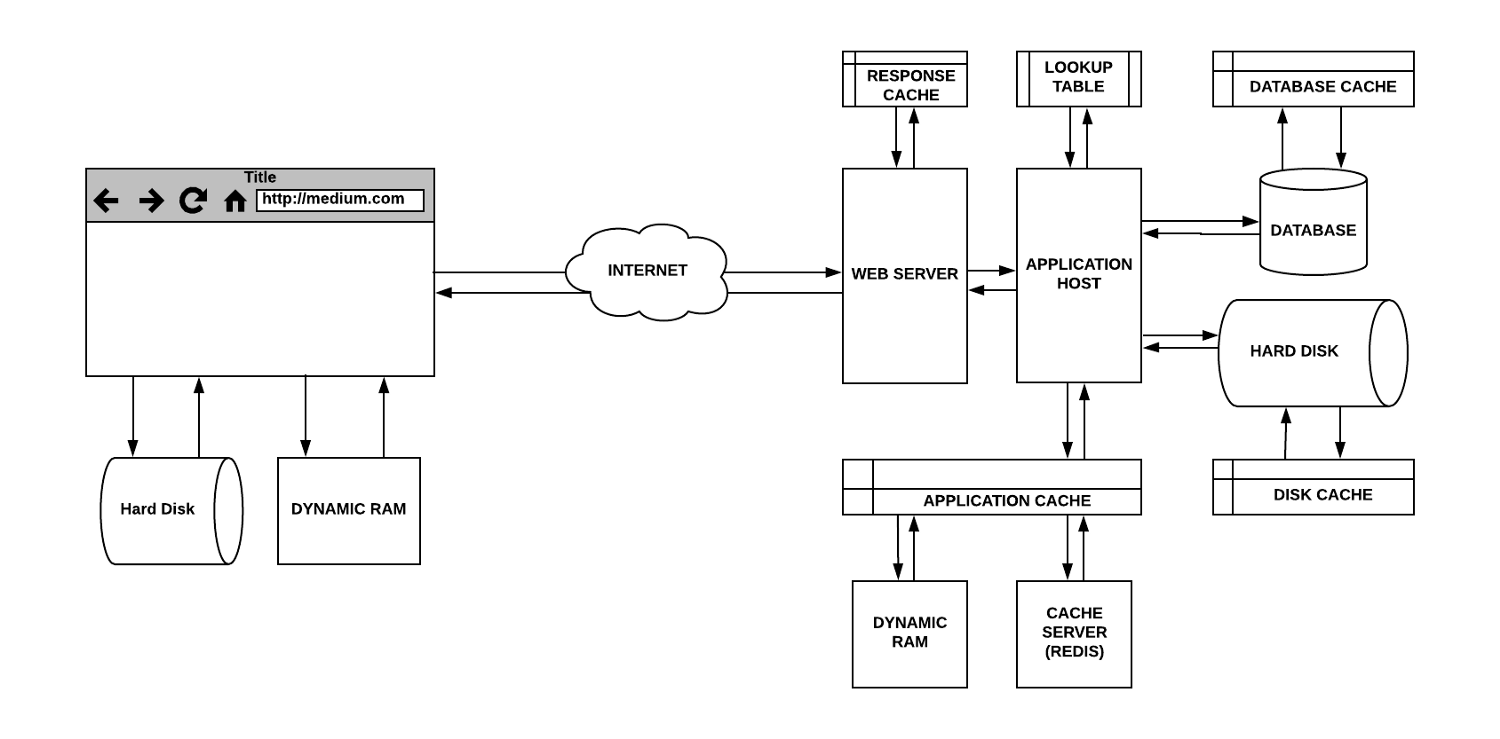

Now let's move to the client side and talk about caching in browsers. Each browser has an implementation of the HTTP cache (also called a web cache), which is designed for temporary storage of materials received from the Internet, such as HTML pages, JavaScript files and images.

This cache is used when the server’s response contains properly configured HTTP headers that indicate to the browser when and for how long it can cache the server’s response.

Before us is a very useful technology, which gives the following benefits to all participants of data exchange:

Browser caching

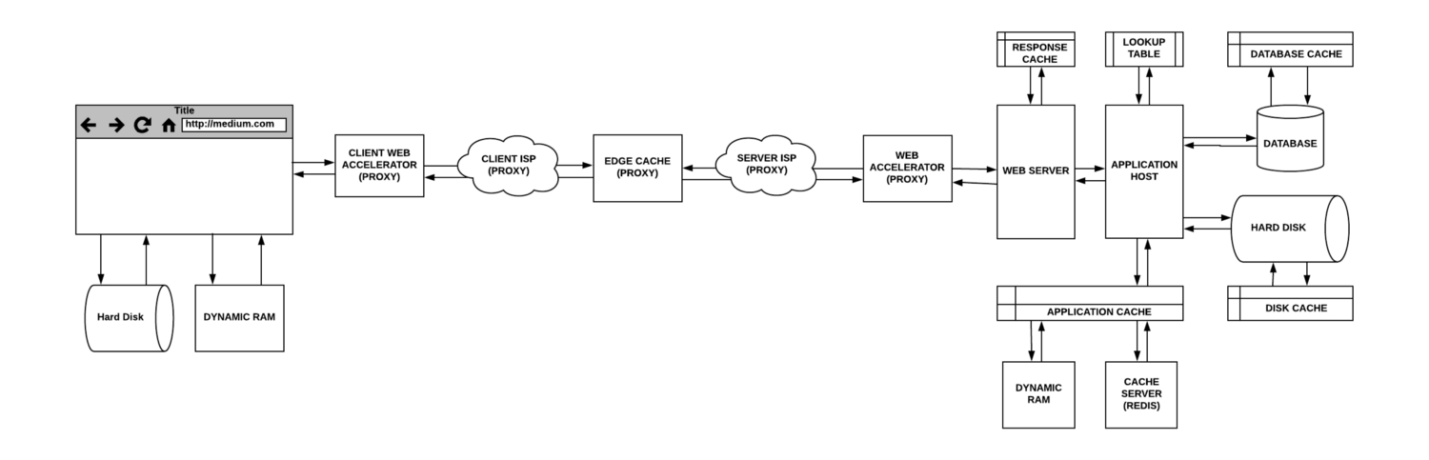

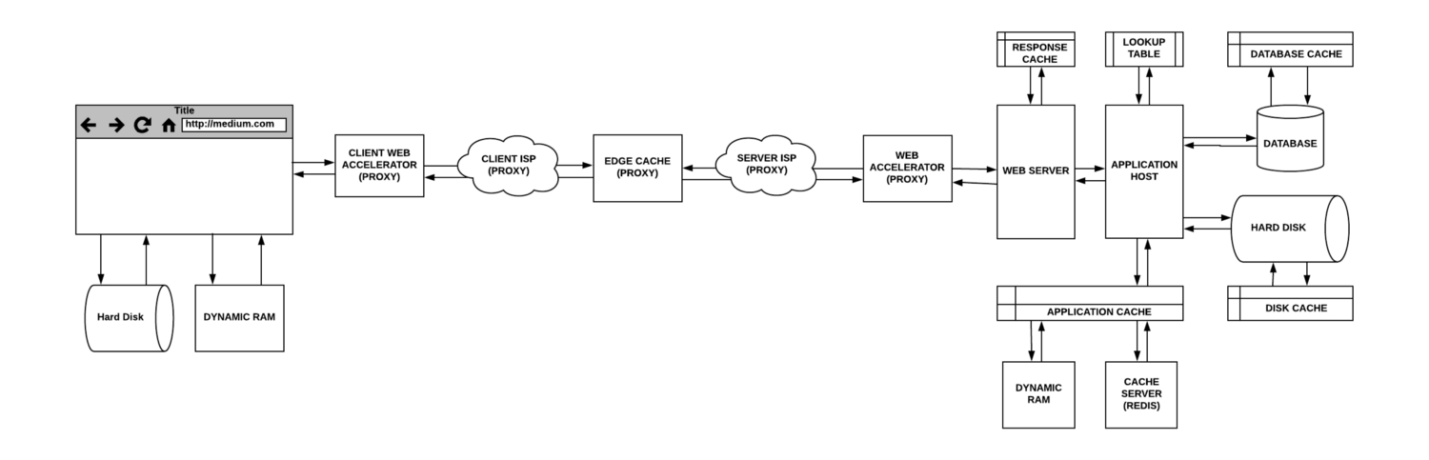

In computer networks, proxy servers can be represented by special hardware or related applications. They play the role of intermediaries between clients and servers storing the data that these clients require. Caching is one of the tasks that they solve. Consider the different types of proxy servers.

A gateway is a proxy server that redirects incoming requests or outgoing responses without modifying them. Such proxy servers are also called tunneling proxy (tunneling proxy), web proxy (web proxy), proxy (proxy), or application level proxy (application level proxy). These proxy servers are commonly shared, for example, by all clients behind the same firewall, which makes them well suited for query caching.

Direct proxy server (forward proxy, often such servers are called simply proxy server) is usually installed on the client side. A web browser that is configured to use a direct proxy server will send outgoing requests to this server. Then these requests will be redirected to the target server located on the Internet. One of the advantages of direct proxies is that they protect customer data (however, if we talk about ensuring anonymity on the Internet, it will be safer to use VPN).

A web accelerator (web accelerator) is a proxy server that reduces the time it takes to access a site. He does this by requesting in advance from the server the documents that clients most likely will need in the near future. Such servers, in addition, can compress documents, speed up encryption operations, reduce the quality and size of images, and so on.

A reverse proxy server (reverse proxy) is usually a server located in the same place as the web server with which it interacts. Reverse proxies are designed to prevent direct access to servers located on private networks. Reverse proxies are used to load balance between multiple internal servers, provide SSL authentication or query caching capabilities. These proxies perform server-side caching; they help the main servers handle a large number of requests.

Reverse proxy servers are located close to the servers. There is a technology in which the caching servers are located as close as possible to the data consumers. This is the so-called edge caching, represented by content delivery networks (CDN, Content Delivery Network). For example, if you visit a popular website and download some static data, it will be cached. Each next user who has requested the same data will receive it, before the expiration of their caching, from the caching server. These servers, determining the relevance of the information, are oriented to the servers that store the original data.

Proxy servers in the client-server communication infrastructure

In this article, we looked at the various levels of data caching that are used in the process of exchanging information between the client and the server. Web applications cannot instantly react to user exposure, which, in particular, is related to actions requiring data exchange with the servers of these applications, with the need to perform some calculations before sending a response. The time it takes to transfer data from the server to the client includes the time it takes to find the necessary data on the disk, network delays, processing request queues, network bandwidth throttling mechanisms, and much more. If we take into account that all this can occur on many computers located between the client and the server, then we can say that all these delays can seriously increase the time required for a request to arrive at the server and the client receives a response.

A properly configured caching system can significantly improve overall server performance. Caches reduce delays that inevitably occur when data is transmitted over a network, help save network traffic, and, as a result, reduce the time required for the browser to display the web page requested from the server.

Dear readers! What caching technologies do you use in your projects?

Nick Karnik, the author of the article we are publishing in translation today, looks at the role caching plays in web application performance, walking through caching mechanisms at different levels, starting with the lowest. He focuses on where data can be cached rather than on how caching is implemented.


We believe that understanding how each caching layer contributes to an application's response time broadens a web developer's horizons and helps them build fast and reliable systems.

Processor cache

Let's start with caches at the lowest level: the processor. Processor cache is very fast memory that acts as a buffer between the processor (CPU) and random access memory (RAM). It stores the most frequently accessed data and instructions so that the processor can reach them almost instantly.

Processors also have a special kind of memory, the processor registers: a small store that provides extremely fast data access. Registers are the fastest memory the processor can work with; they sit as close as possible to its execution units and have very small capacity. Registers are sometimes called a level 0 cache (L0 cache, where L stands for Level).

In addition, processors have access to several more levels of cache memory: up to four levels, called level 1 through level 4 caches (L1 to L4 cache). Whether the registers count as a level 0 or a level 1 cache depends on the architecture of the processor and the motherboard. The system architecture also determines where the cache of each level is physically located: on the processor or on the motherboard.

Memory structure in some of the newest CPUs

Hard disk cache

Hard disk drives (HDDs), which are used for permanent data storage, are rather slow devices compared with RAM, which is intended for short-term storage. That said, permanent storage is getting faster as solid-state drives (SSDs) become more widespread.

In long-term storage systems, the disk cache (also called a disk buffer or cache buffer) is memory built into the hard disk that acts as a buffer between the processor and the physical disk.

Hard disk cache

Disk caches work on the assumption that data that has just been written to the disk or read from it is likely to be accessed again in the near future.

About the speed of hard drives and RAM

Temporary storage in RAM and permanent storage on a hard disk differ in access speed, in the cost of the storage media, and in proximity to the processor.

RAM responds in tens of nanoseconds, while a hard disk needs tens of milliseconds. That is a difference of six orders of magnitude!

One millisecond equals one million nanoseconds
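The arithmetic behind this comparison can be checked in a few lines. The 20 ns and 20 ms figures below are illustrative assumptions chosen from the ranges mentioned above, not measurements.

```python
import math

NS_PER_MS = 1_000_000  # one millisecond is one million nanoseconds

# Assumed sample latencies, picked only to illustrate the ratio
ram_latency_ns = 20                 # "tens of nanoseconds"
disk_latency_ns = 20 * NS_PER_MS    # "tens of milliseconds"

ratio = disk_latency_ns // ram_latency_ns
orders_of_magnitude = round(math.log10(ratio))

print(f"The disk is {ratio:,} times slower ({orders_of_magnitude} orders of magnitude)")
```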

Simple web server

Now that we have discussed the role of caching in the basic mechanisms of computer systems, let's look at an example that applies these ideas to the interaction between a client (a web browser) and a server that sends data in response to the client's requests. We begin with a simple web server that reads data from the hard disk when it answers a request. Let's also assume that there are no dedicated caching systems between the client and the server. Here is what it looks like.

Simple web server

Even in this setup, where the client talks to the server directly and the server handles the request on its own, reading the data from the hard disk and sending it to the client, a cache is still involved, because the disk uses its buffer.

On the first request, the hard disk checks its cache, finds nothing there, and registers a so-called “cache miss”. The data is then read from the disk itself and placed in the cache, on the assumption that it may be needed again.

On subsequent requests for the same data, the cache lookup succeeds; this is a so-called “cache hit”. The data is served from the disk buffer until it is overwritten there, after which a request for the same data results in a cache miss again.
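The hit and miss sequence described above can be sketched with a toy model. The `DiskBuffer` class, its `read_from_platters` callback, and the least-recently-used eviction policy are all assumptions made for illustration; real drive firmware uses its own policies.

```python
from collections import OrderedDict


class DiskBuffer:
    """Toy model of a disk buffer that holds a fixed number of blocks."""

    def __init__(self, capacity, read_from_platters):
        self.capacity = capacity
        self.read_from_platters = read_from_platters  # the slow path
        self.blocks = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return self.blocks[block_id]
        self.misses += 1
        data = self.read_from_platters(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # overwrite the least recently used block
        return data


buffer = DiskBuffer(capacity=2, read_from_platters=lambda block_id: f"data-{block_id}")
buffer.read("a")  # miss: the buffer is empty
buffer.read("a")  # hit: served from the buffer
buffer.read("b")  # miss
buffer.read("c")  # miss: "a" is overwritten to make room
buffer.read("a")  # miss again, because "a" is no longer in the buffer
print(buffer.hits, buffer.misses)
```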

Database caching

Let's make the example more complex by adding a database. Database queries can be slow and resource-intensive, since the database server has to do some computation to build a response. If queries are repeated, caching them on the database side helps reduce response time. Caching is also useful when several machines work with the database and run the same queries.

Simple web server with database

Most database servers come with caching configured sensibly by default. However, many settings can be tuned so that the database subsystem better fits the needs of a particular application.
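As one small illustration of such a setting, the sketch below adjusts the page cache size of an SQLite database through Python's standard `sqlite3` module. SQLite is used here only as an assumption for the sake of a self-contained example; the article does not name a specific database, and other servers expose different options.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# A negative value is interpreted as a size in KiB,
# so this suggests a page cache of roughly 8 MiB.
conn.execute("PRAGMA cache_size = -8000")

cache_size = conn.execute("PRAGMA cache_size").fetchone()[0]
print(cache_size)
```

Whether a larger cache actually helps depends on the workload, so a change like this is worth measuring rather than assuming.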

Web server response caching

Let's extend the example further. The web server, which we have treated as a single entity so far, is now split into two parts. One of them, the web server proper, talks to the clients and to the server application, and the server application in turn works with the storage systems. The web server can be configured to cache responses, so that it does not have to keep sending similar requests to the server application. In the same way, the application can cache parts of its own responses to resource-intensive database queries or to frequent file requests.

Response cache and application cache

Web server responses are cached in RAM. The application cache can be stored either locally, in memory, or on a dedicated caching server running a database such as Redis, which keeps its data in RAM.
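A local, in-memory application cache can be sketched as a dictionary whose entries expire after a fixed time. The `TTLCache` class, the `get_report` helper, and the `report:<id>` key format are hypothetical names introduced for this example; a caching server such as Redis would play the same role across several application processes.

```python
import time


class TTLCache:
    """Minimal in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so that expiry can be tested
        self.store = {}      # key -> (expires_at, value)

    def get(self, key):
        entry = self.store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self.store[key]  # the entry is stale, drop it
            return None
        return value

    def set(self, key, value):
        self.store[key] = (self.clock() + self.ttl, value)


def get_report(cache, user_id, build_report):
    """Return a cached report, or build it and cache it for later requests."""
    key = f"report:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    report = build_report(user_id)  # the expensive path, e.g. a database query
    cache.set(key, report)
    return report
```

Choosing the expiry time is a trade-off: a longer value saves more work but serves older data.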

Memoization of functions

Now let's talk about optimizing the performance of the server application through memoization. This is a form of caching used to speed up resource-intensive functions. With this technique, the full computation for a given set of inputs is performed only once, and subsequent calls with the same inputs immediately return the previously computed result. Memoization is implemented with so-called “lookup tables”, which store keys and values. The keys correspond to the function's inputs, and the values are the results the function returns for those inputs.

Memoization of a function using the lookup table

Memoization is a common technique for improving program performance. However, it may not help much with resource-intensive functions that are rarely called, or with functions that are already fast without it.
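A minimal sketch of memoization with a lookup table might look like this. The `memoize` decorator and the `slow_square` function are illustrative names; the sketch assumes positional, hashable arguments. In practice, Python's standard `functools.lru_cache` provides the same behavior with an optional size limit.

```python
import functools


def memoize(func):
    """Cache results of func in a lookup table keyed by its arguments."""
    lookup = {}  # key: tuple of arguments, value: computed result

    @functools.wraps(func)
    def wrapper(*args):
        if args not in lookup:
            lookup[args] = func(*args)  # compute once
        return lookup[args]             # reuse on later calls

    return wrapper


calls = []  # records each real computation


@memoize
def slow_square(n):
    calls.append(n)  # stands in for an expensive calculation
    return n * n


slow_square(12)
slow_square(12)
print(len(calls))  # the function body ran only once
```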

Browser caching

Now let's move to the client side and talk about caching in browsers. Every browser implements an HTTP cache (also called a web cache) for temporarily storing resources received from the Internet, such as HTML pages, JavaScript files, and images.

This cache is used when the server's response contains properly configured HTTP headers that tell the browser whether and for how long it may cache the response.

This is a very useful technology that benefits everyone involved in the data exchange:

- The user experience improves, because resources from the local cache load very quickly. No round trip from the client to the server and back (RTT, Round Trip Time) is needed, since the request never goes out to the network.

- The load on the server application and on the other server components that process requests goes down.

- Some network capacity is freed up for other Internet users, and money is saved on traffic costs.
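The role of the caching headers can be illustrated with a simplified sketch based on the standard `Cache-Control` header. The helper names `cache_headers` and `is_fresh` are hypothetical, and the freshness check deliberately ignores much of what real browsers do (for example the `Expires` header, revalidation, and heuristic freshness).

```python
def cache_headers(max_age_seconds, public=True):
    """Build a Cache-Control header that allows caching for max_age_seconds."""
    scope = "public" if public else "private"
    return {"Cache-Control": f"{scope}, max-age={max_age_seconds}"}


def is_fresh(headers, age_seconds):
    """Simplified check: may a cached response of this age be reused as is?"""
    directives = [d.strip().lower() for d in headers.get("Cache-Control", "").split(",")]
    if "no-store" in directives or "no-cache" in directives:
        return False  # must not be stored, or must be revalidated first
    for directive in directives:
        if directive.startswith("max-age="):
            try:
                return age_seconds < int(directive[len("max-age="):])
            except ValueError:
                return False  # malformed value, treat as not cacheable
    return False  # no explicit lifetime given


headers = cache_headers(3600)
print(headers)
print(is_fresh(headers, age_seconds=120))
```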

Browser caching

Caching and proxy servers

In computer networks, proxy servers can be dedicated hardware or software applications. They act as intermediaries between clients and the servers that store the data those clients need. Caching is one of the tasks they handle. Let's look at the different types of proxy servers.

▍ Gateways

A gateway is a proxy server that forwards incoming requests or outgoing responses without modifying them. Such proxy servers are also called tunneling proxies, web proxies, proxies, or application-level proxies. They are usually shared, for example, by all clients behind the same firewall, which makes them well suited to caching requests.

▍ Forward proxy servers

A forward proxy server (often called simply a proxy server) is usually installed on the client side. A web browser configured to use a forward proxy sends its outgoing requests to that server, which then forwards them to the target server on the Internet. One advantage of forward proxies is that they protect client data (although for anonymity on the Internet, a VPN is the safer choice).

▍ Web accelerators

A web accelerator is a proxy server that reduces the time it takes to access a site. It does this by requesting from the server, ahead of time, the documents that clients are most likely to need in the near future. Such servers can also compress documents, speed up encryption operations, reduce the quality and size of images, and so on.

▍ Reverse proxy servers

A reverse proxy server is usually located in the same place as the web server it works with. Reverse proxies are designed to prevent direct access to servers on private networks. They are used to balance load across multiple internal servers and to provide SSL authentication or request caching. These proxies perform server-side caching and help the main servers handle a large number of requests.

▍ Edge caching

Reverse proxy servers are located close to the servers. There is also a technique in which caching servers are placed as close as possible to the consumers of the data. This is so-called edge caching, implemented by content delivery networks (CDNs). For example, when you visit a popular website and download some static data, that data gets cached. Every subsequent user who requests the same data receives it from the caching server until the cached copy expires. To determine whether the cached data is still up to date, these servers rely on the servers that store the original data.
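The behavior of an edge cache can be sketched as follows. The `Origin` and `EdgeCache` classes, the ETag-based revalidation, and the fixed expiry time are assumptions made for illustration; they model the general idea rather than the way any particular CDN works.

```python
import hashlib
import time


class Origin:
    """Hypothetical origin server that stores the original data."""

    def __init__(self, files):
        self.files = files        # path -> body
        self.full_responses = 0   # how many times a full body was sent

    def fetch(self, path, if_none_match=None):
        body = self.files[path]
        etag = hashlib.sha256(body.encode()).hexdigest()[:16]
        if if_none_match == etag:
            return 304, etag, None  # the cached copy is still valid
        self.full_responses += 1
        return 200, etag, body


class EdgeCache:
    """Hypothetical caching server placed close to the users."""

    def __init__(self, origin, ttl_seconds, clock=time.monotonic):
        self.origin = origin
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries = {}  # path -> {"etag", "body", "expires_at"}

    def get(self, path):
        now = self.clock()
        entry = self.entries.get(path)
        if entry and now < entry["expires_at"]:
            return entry["body"]  # fresh copy, the origin is not contacted
        known_etag = entry["etag"] if entry else None
        status, etag, body = self.origin.fetch(path, if_none_match=known_etag)
        if status == 304:
            body = entry["body"]  # unchanged at the origin, keep the cached body
        self.entries[path] = {"etag": etag, "body": body, "expires_at": now + self.ttl}
        return body
```

In this sketch the first user pays for the trip to the origin, and later users are served from the edge until the entry expires, at which point a lightweight revalidation request is enough if the data has not changed.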

Proxy servers in the client-server communication infrastructure

Summary

In this article, we looked at the various levels of data caching involved in the exchange of information between a client and a server. Web applications cannot react to user actions instantly, partly because those actions require exchanging data with the application's servers and performing some computation before a response is sent. The time it takes to deliver data from the server to the client includes the time needed to find the data on disk, network latency, request queue processing, network bandwidth throttling, and much more. Considering that all of this can happen on many machines between the client and the server, these delays can seriously increase the time it takes for a request to reach the server and for the client to receive a response.

A properly configured caching system can significantly improve overall server performance. Caches reduce delays that inevitably occur when data is transmitted over a network, help save network traffic, and, as a result, reduce the time required for the browser to display the web page requested from the server.

Dear readers! What caching technologies do you use in your projects?

Source: https://habr.com/ru/post/350310/
