Russian storage systems from the company StoreQuant

Looking for reliable and fast storage? Use StoreQuant Solutions

Data storage systems (DSS) are a key element of any modern IT system, including business-oriented systems. Foreign companies have even introduced a term that describes such systems - Business Critical Systems or Mission Critical Systems.

Indeed, data processing and storage is a critical task: data loss or even a brief storage outage can have serious consequences for the business as a whole.

We will not translate the term Business Critical into Russian, since professionals in this field understand it intuitively; our goal, however, is to make the basics of storage accessible to a wider audience. Therefore, when we say “storage system”, we mean a Business Critical storage system: in our view, a storage system must perform a number of functions and meet a set of requirements, and those are exactly what the term Business Critical implies.

In this article we will talk about our storage architecture, its capabilities, and its advantages over competing solutions from foreign vendors.

Drawing on our own experience and that of many customers who use storage systems, we will then look at typical examples of solving real-world problems with StoreQuant's patented Russian storage technology.

What do customers buy and is there a choice in the market?

Analyzing the market, we formulated a number of requirements that any storage system must meet. Unfortunately, buyers of foreign storage systems in Russia currently have no way to influence product development or to have the manufacturers adapt products to their needs.

Basic requirements for modern storage systems:

- The storage system should work 24x7 and support upgrades without stopping the service.

- The storage system should recover data automatically, without user involvement, when a storage medium fails.

- The storage architecture should not have a single point of failure.

- Storage should adapt to the load from the application without changing the configuration of the applications themselves.

- The storage system should contain self-diagnostic functions at the data access protocol level.

The overwhelming majority of storage failures are caused by software errors or by incompatibilities at the level of data access protocols.

It is in accordance with these principles that we create our products and solve clients' problems.

What is storage and how to build it?

There are now many online courses from storage vendors that teach how to manage storage systems and what functions they offer, but they do not explain the main thing: how it all actually works.

The storage system is a hardware and software appliance. Progress in storage is driven largely by software, which many vendors call microcode. The software implements almost all the important elements of storage, and this is a sound technical approach, as it saves on hardware development. In our view the term Software Defined Storage (SDS) is a misnomer: storage cannot be implemented purely in software, and every SDS product is tied to its hardware and depends on it in some way.

We initially introduced a number of principles that we adhere to when developing storage systems:

- Do NOT use Open Source tools for data management;

- Do NOT rely on the architecture of the hardware platform, except for special critical storage functions;

- Do NOT program at a low level (kernel), except for critical areas.

Many newcomers building storage solutions naively believe that it is enough to download a well-advertised Open Source data management package, put it on an inexpensive server, and any problem is solved. It is not hard to guess that Open Source storage software was created by its authors for entirely different purposes, and it should be used with great care when building a storage system.

A storage system (DSS) works as a single, well-tuned mechanism, and assembling it from the work of various authors is difficult and expensive.

And who will ensure the security and integrity of customer data?

Trusting open source solutions to manage data in a system as complex as storage is very risky.

After analyzing how foreign storage vendors approach this problem, we developed our storage core without using any Open Source components.

We have identified the main elements of storage, which will be discussed in this article.

Why build a storage system?

We will cover many aspects of this issue in the future, but we will formulate the main reasons now:

- To be a leader in storage is to create storage. To be a consumer is to buy someone else's solutions.

- Storage integrates easily into any IT landscape, unlike, for example, a Russian DBMS, which is difficult to adapt to existing applications.

- A wide range of microchips used in data processing and transmission technologies allows you to quickly design new and unique storage solutions.

- Serious data security can be ensured only in the domestic solution.

In future articles we will try to present interesting ways of implementing data processing and storage algorithms, as well as engineering solutions in general.

Is there a future for storage?

Of course, there is a future for storage, since the architecture of modern computers does not allow storing large amounts of data in RAM on an ongoing basis.

Even the emergence of new in-memory DBMSs does not eliminate the need for storage, for the reason above.

That is why we were the first to implement NVMe-based storage systems on the Russian market and presented our solution in the StoreQuant Velocity Scale line. This product is the first Russian NVMe-based storage system that competes with foreign counterparts and integrates easily into any client's SAN infrastructure.

Next, we will describe our architecture, products and solutions.

What architecture do we use in the StoreQuant Velocity Scale?

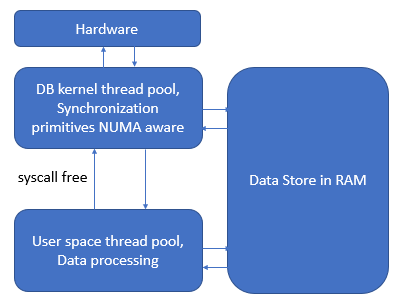

The main element of the StoreQuant storage architecture is an in-memory database engine that ensures data integrity based on the transaction processing mechanism.

Every operation that processes a read or write command in the storage system must comply with ACID (Atomicity, Consistency, Isolation, Durability). The storage DBMS stores block data and can be classified as an object database. The DBMS is also responsible for fault-tolerant data processing in the memory of several controllers. We call the area of RAM that the DBMS uses to store data the Cache.
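
To make the atomicity requirement concrete, here is a minimal Python sketch of an all-or-nothing multi-block write against an in-memory block cache. The class `BlockCache` and its methods are hypothetical names invented for this illustration; they are not part of StoreQuant's software, and the sketch covers atomicity only, not isolation or durability.

```python
class BlockCache:
    """Toy in-memory block store with all-or-nothing multi-block writes."""

    def __init__(self, block_size=8):
        self.block_size = block_size
        self.blocks = {}  # logical block address -> bytes

    def write_transaction(self, writes):
        """Apply {lba: data}: either every block changes or none does."""
        staged = {}
        for lba, data in writes.items():
            if len(data) != self.block_size:
                # Validation fails before any block has been modified.
                raise ValueError(f"block {lba}: expected {self.block_size} bytes")
            staged[lba] = bytes(data)
        # Commit point: all staged blocks are applied in one step.
        self.blocks.update(staged)

    def read(self, lba):
        return self.blocks.get(lba)


cache = BlockCache()
cache.write_transaction({0: b"AAAAAAAA", 1: b"BBBBBBBB"})
try:
    # The second block has the wrong size, so the whole transaction is rejected.
    cache.write_transaction({0: b"CCCCCCCC", 1: b"short"})
except ValueError:
    pass
```

After the failed transaction, block 0 still holds its original contents: the valid half of the rejected write was never applied.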

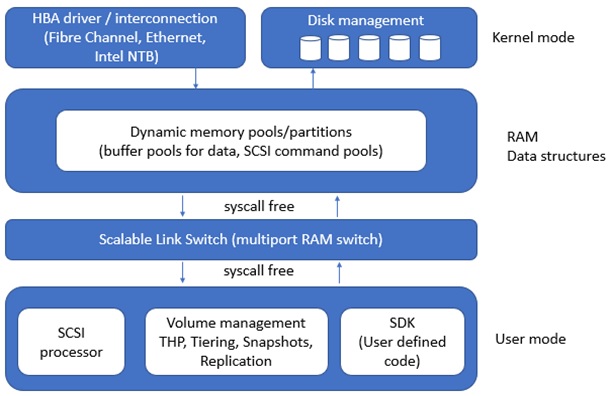

We actively use Cache to speed up I/O operations, i.e. all operations are performed primarily in the RAM of the storage controller. By Cache we mean not just data processing in RAM, but also the algorithms that control the movement of data between RAM and HDD media. The block diagram of the storage software is shown in Figure 1.

Figure 1. Block diagram of the storage software code

By combining data processing in Cache with data synchronization algorithms, we provide synchronous replication of data and keep it available when physical equipment fails. Thanks to ACID, we can be sure that all commands within the storage system are guaranteed to be executed. The block diagram of the DBMS components is shown in Figure 2.

Figure 2. Block diagram of the components of the database

What is the High Performance Scalable Link Switch for?

A special component, the High Performance Scalable Link Switch, code-named "URSUS", makes calls between User space and Kernel space without syscalls (a syscall-free scheme). URSUS abstracts away the details of the hardware platform when working with HBA adapters, RAID groups and external storage controllers. To implement the URSUS SDK we used C++ and our own C++ template library, which provides a variety of primitives and data structures for block storage tasks. To speed up development, we built an SDK that lets us create our own modules for information processing functions such as encoding, deduplication, compression, etc.
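
As an illustration of what such processing modules do, the following Python sketch chains deduplication and compression on the write path. It is not the URSUS SDK (which the article describes as C++); `WritePipeline` and its fields are hypothetical names, and SHA-256 and zlib are stand-ins chosen for this example only.

```python
import hashlib
import zlib


class WritePipeline:
    """Toy write path: deduplicate blocks by content hash, then compress."""

    def __init__(self):
        self.unique_blocks = {}  # content digest -> compressed block
        self.volume_map = {}     # logical block address -> content digest

    def write(self, lba, block):
        digest = hashlib.sha256(block).hexdigest()
        if digest not in self.unique_blocks:
            # Only previously unseen content is compressed and stored.
            self.unique_blocks[digest] = zlib.compress(block)
        self.volume_map[lba] = digest

    def read(self, lba):
        return zlib.decompress(self.unique_blocks[self.volume_map[lba]])


pipeline = WritePipeline()
zeros = b"\x00" * 4096
pipeline.write(0, zeros)
pipeline.write(1, zeros)               # duplicate content, stored once
pipeline.write(2, b"\x01" * 4096)
stored_bytes = sum(len(b) for b in pipeline.unique_blocks.values())
```

Three logical blocks are written, but only two distinct blocks are stored, and each is kept in compressed form.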

How do we update storage microcode in 24x7 mode?

URSUS lets you update storage software modules running in User space online, without stopping I/O operations. In the core of the URSUS layer we do not use the standard operating system mechanisms and access the data in Cache directly, which gives us zero-copy data processing. In contrast to the Linux block layer, where data is copied multiple times between kernel structures, we lose no time on copying while processing commands, which reduces the latency of I/O operations on our storage system.
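
The difference between zero-copy access and an ordinary copy can be shown with Python's built-in `memoryview`. This is only an analogy for the idea, not a model of how URSUS is implemented; the buffer contents are made up.

```python
cache = bytearray(b"0123456789ABCDEF")  # stands in for the controller Cache

view = memoryview(cache)[4:8]  # zero-copy window: shares memory with `cache`
copy = bytes(cache[4:8])       # ordinary slice: allocates a separate buffer

view[0:4] = b"WXYZ"            # write through the view, no data is copied

cache_after = bytes(cache)
```

The write through `view` changes `cache` itself, while `copy` still holds the old bytes: each extra copy is another buffer that has to be filled and kept consistent.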

How are we better than foreign storage vendors?

A development partner that uses storage development kits from foreign vendors gets a whole range of disadvantages:

- The partner is completely dependent on proprietary controllers based on complex and inaccessible processor technologies. The resulting solution further depends entirely on the life cycle of the hardware platform.

- Full dependence on vendor technical support during development and in operation, in particular, and mainly because of the complex FPGA circuits that are used in the systems of foreign vendors.

- No guarantee of protection of your intellectual property, as the development process requires you to disclose the source code of your solution when consulting with the technical support of the foreign vendor.

The advantage of the URSUS SDK lies in the following possibilities, which we guarantee to our partners:

- The URSUS SDK architecture allows you to build a full-fledged storage system with 24x7 operation mode.

- The development team does not need storage development expertise. Skills in Linux and C/C++ programming are enough, and qualified personnel are not hard to find.

- Freedom in choosing a hardware platform for creating your own storage solution.

- The ability to port the SDK code to any architecture, in particular ARM and Elbrus.

- High development speed with the URSUS SDK compared with other solutions, in particular open source solutions such as LIO and SCST. As a result, the partner can quickly enter the market with a ready-made solution.

- Having the source code of the solution simplifies the certification of the solution to various security standards.

In our OS we use only stable versions of the Linux kernel from the Vanilla Kernels archive.

A description of the developer kit can be found on our website.

How does RAID work in the StoreQuant Velocity Scale?

The basic storage element in the StoreQuant storage system is a RAID group.

There are various configurations of the RAID group, which are noted in Table 1.

Table 1 - RAID Configurations

| RAID type | 4 disks | 16 disks | 64 disks | 128 disks | 256 disks |
|---|---|---|---|---|---|
| RAID10 | 2D + 2D | 8D + 8D | 32D + 32D | 64D + 64D | 128D + 128D |
| RAID50 | 3D + 1P | 12D + 4P | 48D + 16P | 96D + 32P | 192D + 64P |
| RAID60 | 2D + 2P | 8D + 8P | 32D + 32P | 64D + 64P | 128D + 128P |

Parity data is distributed round-robin across the disk group, so that the failure of any single disk (for example, in a 3D + 1P group) does not affect the operation of the RAID or the availability of data.
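
Why a 3D + 1P group survives the loss of one disk can be sketched in a few lines of Python. The example assumes conventional XOR parity as used in RAID 5 and a simple modulo rotation of the parity disk; the article states only that parity is distributed round-robin, so both details are assumptions, and the function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def parity_disk(stripe_index, disks=4):
    """Disk that holds parity for a stripe (assumed modulo rotation)."""
    return stripe_index % disks


data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_blocks(data)

# Simulate losing the second data disk and rebuilding it from the survivors.
rebuilt = xor_blocks([data[0], data[2], parity])
rotation = [parity_disk(stripe) for stripe in range(5)]
```

XOR-ing the surviving blocks with the parity block reproduces the lost block, and rotating the parity position keeps any single disk from becoming the parity bottleneck.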

The building block is always a RAID group of 4 disks (the base group), from which more complex RAID configurations on more disks are assembled. For example, a group of 32 disks in a 24D + 8P configuration consists of eight RAID groups in a 3D + 1P configuration. Parity is calculated within each base group of 4 disks, which spreads the load across the disks and increases I/O speed.

We use the Unite and Conquer approach

With the approach we call “Unite and Conquer”, the client adds disks to the storage system at least 4 at a time, configures them as a RAID group, and then decides whether to use that group separately or merge it into an existing RAID group. The disk counts in Table 1 are purely illustrative: groups can be combined starting from the minimal RAID group of 4 drives, up to a maximum of 256 drives in a single RAID group.
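
The way larger configurations follow from the 4-disk base group can be checked with a short Python sketch. The base layouts are taken from the first column of Table 1; the function name `raid_layout` and the requirement that the disk count be a multiple of 4 are assumptions made for this illustration.

```python
# Layout of one 4-disk base group: (data disks, redundancy disks, suffix).
BASE_GROUP = {
    "RAID10": (2, 2, "D"),  # 2 data + 2 mirror
    "RAID50": (3, 1, "P"),  # 3 data + 1 parity
    "RAID60": (2, 2, "P"),  # 2 data + 2 parity
}


def raid_layout(raid_type, disks):
    """Return the layout string for a RAID group built from 4-disk base groups."""
    if disks < 4 or disks > 256 or disks % 4:
        raise ValueError("disk count must be a multiple of 4 between 4 and 256")
    data, redundancy, suffix = BASE_GROUP[raid_type]
    groups = disks // 4
    return f"{data * groups}D + {redundancy * groups}{suffix}"


example = raid_layout("RAID50", 32)
```

For 32 disks in RAID50 this yields eight base groups, matching the 24D + 8P example above.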

We have integrated the NVMe protocol into our software for the solid-state drive models listed in Table 2, so we manage the life cycle of each drive individually: its state, availability, microcode updates, etc.

At the moment we support the NVMe protocol up to version 1.2 inclusive.

Table 2 - Available StoreQuant NVMe drive models

| Drive model | Form factor | Capacity, TB |
|---|---|---|
| StoreQuant DataFusion 500 | 2.5 inch / U.2 | 2 |
| StoreQuant DataFusion 550 | 2.5 inch / U.2 | 3.6 |
| StoreQuant DataFusion 600 | 2.5 inch / U.2 | 4 |
| StoreQuant DataFusion 650 | 2.5 inch / U.2 | 8 |
| StoreQuant DataFusion 700 | 2.5 inch / U.2 | 11 |

Why do I need BackEnd in StoreQuant storage?

The main engineering problem of any storage system is to create a high-performance, fault-tolerant channel for data exchange within the storage system. Any I/O operation generates many operations inside the storage system, which calls for a data transfer bus with minimal latency.

If we talk about I/O operations, then in any storage there is essentially one atomic operation, data modification, which includes prefetch (reading) of data and the subsequent writing of changes to disk. Depending on the amount of data transferred and the type of RAID, writing changed data generates many internal I/O operations for each disk in the RAID group.
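
The internal I/O generated by a small write can be illustrated for a parity RAID group. The Python sketch below assumes conventional XOR parity (the article does not specify the parity algorithm), and the function names are hypothetical: updating one data block requires reading the old data and old parity, then writing the new data and new parity.

```python
def xor(a, b):
    """Byte-wise XOR of two equally sized blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def small_write_parity(old_data, old_parity, new_data):
    """New parity after a single-block update.

    Costs two reads (old data, old parity) and two writes
    (new data, new parity) instead of re-reading the whole stripe.
    """
    return xor(xor(old_parity, old_data), new_data)


stripe = [b"\x01\x01", b"\x02\x02", b"\x04\x04"]   # 3D of a 3D + 1P group
parity = xor(xor(stripe[0], stripe[1]), stripe[2])

new_block = b"\x0f\x0f"
updated_parity = small_write_parity(stripe[1], parity, new_block)

# Cross-check against recomputing parity over the full updated stripe.
full_recompute = xor(xor(stripe[0], new_block), stripe[2])
```

Both paths give the same parity, which is why one logical write turns into four disk operations in this scheme.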

What is the Intel Non-Transparent Bridge?

In our architecture, we actively use Intel Non-Transparent Bridge (NTB) technology, which uses the processor's built-in capabilities to interact with external processor systems over the PCI Express bus.

We aim to bring unique Intel NTB based solutions to the Russian market; we already have adapters that create a network connection between any two Intel systems at a distance of up to 100 meters over multimode optical cable.

It is important that we do not use InfiniBand or Ethernet protocols to synchronize data between storage controllers, which gives us minimal latency and sufficient PCI Express bus bandwidth for our task.

To show what Intel NTB can deliver, here are the quantitative results of our tests (ping-pong synchronization of data in RAM): transferring data over an x16 PCIe link between two storage controllers in 8 KB blocks achieves a throughput of 10,115 MB/s (megabytes per second) with an average delay of 0.81 μs (microseconds).
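
To put the test figures in perspective, the short Python calculation below converts the quoted throughput into blocks per second. It assumes binary units (1 MB = 1024 KB), which the article does not state; with decimal units the result would be about 5% lower.

```python
THROUGHPUT_MB_S = 10_115  # measured throughput quoted above
BLOCK_KB = 8              # block size quoted above

# Assumption: binary units, i.e. 1 MB = 1024 KB.
blocks_per_second = THROUGHPUT_MB_S * 1024 / BLOCK_KB

# Time one 8 KB block occupies the link at the measured throughput.
wire_time_us = 1_000_000 / blocks_per_second

print(f"{blocks_per_second:,.0f} blocks/s, {wire_time_us:.2f} us per block")
```

Under that assumption the link moves roughly 1.29 million 8 KB blocks per second, about 0.77 μs per block, which is of the same order as the quoted average delay.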

Why use Fibre Channel infrastructure?

However, since most customers use Fibre Channel (FC) and own a large amount of FC infrastructure equipment, we also support the FC protocol for the BackEnd bus in the StoreQuant Velocity Scale storage system. Note that the FC implementations provided by most HBA vendors significantly reduce our storage development costs, since we get a ready-made SDK in which the main work is done at the SCSI level rather than the FC level. Our strategy is to support at least two standards for the BackEnd bus in the StoreQuant Velocity Scale storage system.

When choosing a protocol for transferring data for storage, you should consider the RAM bandwidth, which depends on the amount of memory (the number of DIMMs, because the memory is multi-channel), the type of memory (frequency and speed) and the capabilities of the CPU.

Why shouldn't you choose InfiniBand infrastructure for the BackEnd bus?

Testing various Intel based platforms, we find that in practice the average throughput of DDR3 RAM is no more than 20 GB/s (gigabytes per second) on a standard dual-socket machine with Intel Xeon E5 v3, so the upper speed limit is already set by the platform manufacturers.

The most widely used data bus in the Intel architecture is PCI Express. The capabilities of the PCI Express v3.0 bus give us the lower limit of data throughput, which raises the question: which protocol is better to use? Looking at the marketing of companies that offer PCI Express v3.0 based adapters with transmission speeds of 100 Gb/s and higher, we understand that such speeds are hard to achieve, at least on standard Intel based hardware, for the reasons above.

In any case, the situation will improve, as the PCI Express v4.0 standard is now ready and its capabilities have been greatly expanded.

Layering InfiniBand on top of the standard PCI Express protocol adds overhead: it reduces data transfer bandwidth and increases latency.

In addition, development based on the InfiniBand protocol is considerably more expensive than with Fibre Channel, since Fibre Channel already has a logical data transfer level based on the SCSI protocol.
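
The bus limit can be estimated from the published PCI Express 3.0 line rate: 8 GT/s per lane with 128b/130b encoding. The Python sketch below compares that raw figure with a 100 Gb/s adapter; the function name is hypothetical and packet (TLP) overhead is deliberately ignored, so real throughput is lower.

```python
def pcie3_bandwidth_gb_s(lanes):
    """Raw PCIe 3.0 bandwidth per direction in GB/s, before packet overhead.

    8 GT/s per lane with 128b/130b line encoding.
    """
    bits_per_second = 8e9 * lanes * 128 / 130
    return bits_per_second / 8 / 1e9


adapter_gb_s = 100 / 8  # a 100 Gb/s adapter expressed in GB/s

x8 = pcie3_bandwidth_gb_s(8)
x16 = pcie3_bandwidth_gb_s(16)
print(f"x8: {x8:.2f} GB/s, x16: {x16:.2f} GB/s, adapter: {adapter_gb_s} GB/s")
```

An x8 slot (about 7.9 GB/s) cannot carry 12.5 GB/s at all, and an x16 slot (about 15.8 GB/s raw) leaves little headroom once packet overhead is counted; the NTB measurement quoted earlier, 10,115 MB/s on x16, is below the 12.5 GB/s such an adapter advertises.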

What protocol should I choose for the BackEnd storage bus?

We understand that the use of Non-Transparent Bridge will create much more opportunities for customers and significantly reduce the cost of IT infrastructure.

When the choice is between your own data transfer protocol and a ready-made implementation, your own will definitely be cheaper at the sales and deployment stage, so we developed our own protocol that supports packet switching between controllers.

As a result, we received the following benefits:

- We ourselves dictate the development strategy of our protocol and do not depend on the implementation and intricacies of manufacturing adapters (embedded microcode) from a third-party foreign manufacturer.

- We have made a much cheaper version of our protocol, compared to other high-speed standards on the market. Therefore, we are more competitive in terms of providing better deals to our customers.

In upcoming articles we will describe new StoreQuant products based on Intel NTB and possible ways to use them.

How do we scale our resources in StoreQuant storage systems?

Scaling storage as the load grows and complying with Quality of Service policies (hereinafter QoS) are pressing tasks for any client. According to our research, many foreign vendors lack QoS capabilities that reliably protect critical applications when the storage load increases without additional financial investment.

We include in the term QoS the following features, which foreign vendors do not offer:

- The ability to protect Cache for a specific application. The client sets the amount of Cache that is guaranteed to be available to the application.

- The ability to allocate data channel bandwidth at the RAID group level to a specific application for a given volume.

- The ability to balance external data access at the level of I/O ports.

- Access to data based on virtual WWNs for I/O ports.
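
The first feature in the list, a guaranteed amount of Cache per application, can be sketched as simple reservation bookkeeping. The Python class below is a hypothetical illustration with made-up application names and sizes; it does not reflect StoreQuant's actual management interface.

```python
class CacheQoS:
    """Tracks guaranteed Cache reservations per application (sizes in GB)."""

    def __init__(self, total_gb):
        self.total_gb = total_gb
        self.reserved = {}  # application name -> guaranteed GB

    def reserve(self, app, size_gb):
        """Set or change the guarantee for one application."""
        others = sum(v for k, v in self.reserved.items() if k != app)
        if others + size_gb > self.total_gb:
            raise ValueError("reservation exceeds cache size")
        self.reserved[app] = size_gb

    def shared_pool_gb(self):
        """Cache left for applications without a reservation."""
        return self.total_gb - sum(self.reserved.values())


qos = CacheQoS(total_gb=1000)
qos.reserve("billing-db", 256)
qos.reserve("mail", 128)
remaining = qos.shared_pool_gb()
```

A reservation that would push the total above the cache size is rejected, so existing guarantees can never be oversubscribed.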

The basic element of our storage architecture is a controller with two Intel Xeon sockets and a switch for NVMe disks; it can carry up to 1500 GB of RAM, which we actively use as Cache. The controller is 1U or 2U high and is designed for installation in a standard rack. It has from 10 to 24 slots for NVMe disks.

Example: the maximum raw capacity of one 2U controller with 2 TB drives is 48 TB (24 slots × 2 TB).

An example of a ready-made StoreQuant offer, as well as possible product configurations, can be found on our website.

One controller has up to 4 external (FrontEnd) and 2 internal (BackEnd) I/O ports; in particular, we support FC at 16 Gb/s and 32 Gb/s.

Table 3 - StoreQuant Storage Controller Specifications

| StoreQuant storage controller type | I/O ports (BE / FE) | Amount of data (MIN / MAX) | RAM (Cache) |
|---|---|---|---|
| DataFusion SmartNode v1.0 | 2 / 4 | 1.6 TB / 110 TB | 1500 GB |

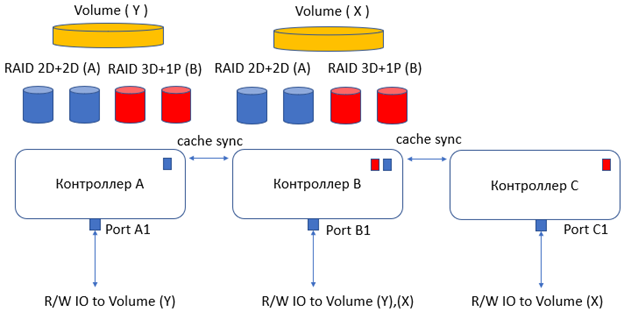

From a data protection standpoint, we always copy data synchronously within a pair of controllers. The data therefore lands in the memory of both controllers, which protects against failure at the equipment level.
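
The idea of synchronous copying within a controller pair can be sketched as follows: a write is acknowledged only after both caches hold the block. The Python code uses hypothetical names, and rolling back the local copy when the partner is unreachable is an assumption of this sketch, not a description of StoreQuant's failure handling.

```python
class ControllerCache:
    """Toy model of one controller's Cache."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.data = {}  # logical block address -> bytes

    def store(self, lba, block):
        if not self.online:
            raise ConnectionError(f"{self.name} is offline")
        self.data[lba] = block


def replicated_write(primary, partner, lba, block):
    """Acknowledge a write only after both caches hold the block."""
    previous = primary.data.get(lba)
    primary.store(lba, block)
    try:
        partner.store(lba, block)
    except ConnectionError:
        # Undo the local copy so the pair never diverges silently.
        if previous is None:
            primary.data.pop(lba, None)
        else:
            primary.data[lba] = previous
        return False
    return True


ctrl_a, ctrl_b = ControllerCache("A"), ControllerCache("B")
acked = replicated_write(ctrl_a, ctrl_b, 7, b"payload")

ctrl_b.online = False
rejected = replicated_write(ctrl_a, ctrl_b, 8, b"other")
```

The first write is acknowledged with identical copies in both caches; the second is not acknowledged, and neither cache retains the block.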

For protection in the event of a Split-brain situation, we use the BackEnd bus, which has several dedicated data lines.

A feature of our architecture is that controllers can be combined at the storage management level, so we provide 1 + 1 protection at the controller level and let the client direct server traffic to dedicated I/O ports and share the load between controllers. A controller therefore always works in a pair with another controller (hereinafter a Base Module) and forms a single unit within the storage system. At the moment we support up to 512 Base Modules in one storage solution. Our maximum technical capabilities with all 512 Base Modules are shown in Table 4.

Table 4 - Technical specifications of StoreQuant storage systems in maximum configuration

| FrontEnd I/O (FC) ports | Cache (maximum) | Maximum amount of data |
|---|---|---|
| 4096 | 1536 TB | 112 640 TB |
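
The figures in Table 4 follow from the per-controller values multiplied by the number of controllers, as the Python check below shows. Port count and maximum data per controller come from Table 3; the cache per controller is taken as 1.5 TB, an assumption chosen because it is the value that reproduces the Table 4 total.

```python
BASE_MODULES = 512
CONTROLLERS_PER_MODULE = 2        # a Base Module is a pair of controllers
FE_PORTS_PER_CONTROLLER = 4       # from Table 3
MAX_DATA_TB_PER_CONTROLLER = 110  # from Table 3
CACHE_TB_PER_CONTROLLER = 1.5     # assumption: reproduces the Table 4 total

controllers = BASE_MODULES * CONTROLLERS_PER_MODULE
fe_ports = controllers * FE_PORTS_PER_CONTROLLER
max_data_tb = controllers * MAX_DATA_TB_PER_CONTROLLER
cache_tb = controllers * CACHE_TB_PER_CONTROLLER

print(controllers, fe_ports, max_data_tb, cache_tb)
```

With 1024 controllers this gives 4096 FrontEnd ports, 112 640 TB of data and 1536 TB of Cache.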

For a storage upgrade, the client can install as few as one controller and add it to the storage system. Figure 3 shows an example of a three-controller storage system in which the client installs an additional controller C, increasing the IOPS (I/O operations per second) of the whole storage system through the additional I/O ports. In this case, controller B forms two pairs (Base Modules), one with controller A and one with controller C, while all data write operations are protected by synchronous copying of data into the Cache of all controllers. Using controller C, the client can provide an alternative path to volume (X) for applications, thereby isolating the application that uses volume (X) from other applications at the I/O port level.

Figure 3. StoreQuant base module storage configuration

Note that volumes and RAID groups are managed online, without stopping the storage service; for example, using the Snapshots mechanism you can move a volume from one RAID group to another across different controllers. We support two types of Snapshots, COW (copy-on-write) and Full, as well as the ability to synchronize Snapshots over time to replicate changed data and reduce the time it takes to create a Snapshot.
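
The copy-on-write principle behind the first Snapshot type can be shown with a minimal Python sketch. `Volume` and its methods are hypothetical names for illustration only: creating a snapshot copies nothing, and an old block is preserved only the first time it is overwritten.

```python
class Volume:
    """Toy volume with copy-on-write snapshots."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)  # logical block address -> bytes
        self.snapshots = []

    def snapshot(self):
        snap = {}  # starts empty: creating a snapshot copies no data
        self.snapshots.append(snap)
        return snap

    def write(self, lba, data):
        for snap in self.snapshots:
            if lba not in snap:
                # First overwrite since the snapshot: preserve the old block.
                snap[lba] = self.blocks.get(lba)
        self.blocks[lba] = data

    def read_snapshot(self, snap, lba):
        # Preserved copy if the block changed, otherwise the live block.
        return snap[lba] if lba in snap else self.blocks.get(lba)


vol = Volume({0: b"old0", 1: b"old1"})
snap = vol.snapshot()
vol.write(0, b"new0")
vol.write(0, b"newer0")  # second overwrite does not touch the snapshot
```

The snapshot still reads the original contents of both blocks, yet it stores only the single block that was actually changed.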

We deliberately do not use JBOD type systems in our architecture, since our controller essentially performs both the functions of a JBOD, i.e. it stores data and provides access to it, and the functions of a controller, i.e. it provides data protection and manages RAID groups.

In our architecture the client manages the fault tolerance of RAID groups directly: the storage lets you select specific drives in any controller and form a RAID group from them. This can save the client considerable money at the next storage upgrade.

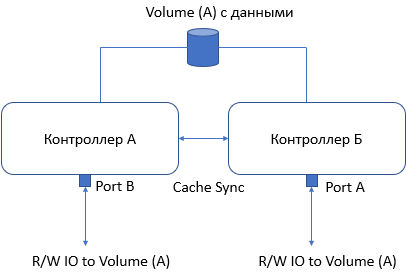

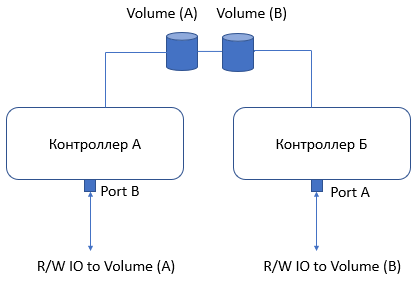

What is the Active-Active configuration in the StoreQuant storage system?

Most storage systems on the Russian market offer access to data (volumes) through active I/O ports on at least two different physical controllers. However, some storage systems on the Russian market interpret the term Active-Active differently and deliberately mislead in their marketing materials. Let's explain what an Active-Active configuration looks like; the best way is to refer to the visual example in Figure 4.

“Cache Sync” allows you to synchronize all data writing operations between controllers using the BackEnd bus.

Figure 4. Active-Active StoreQuant


LUN StoreQuant ?

LUN, .. SCSI. Active-Active , , . , Master, Slave, Slave «» , .. Master , .

StoreQuant, -, . 50/50 (Base Module), .

, StoreQuant :

- 247 8 call-to-repair.

- .

- StoreQuant .


Source: https://habr.com/ru/post/350218/
