Deep learning in the cloud: optical computers will replace the GPU

And soon. The startup Fathom Computing plans to release, within the next two years, an optical device that trains large neural networks faster than a GPU. The developers want to host the optical computer in a cloud data center so that creators of artificial intelligence systems around the world can access it.

Below, we look at Fathom's approach and at who else is working on similar technology.

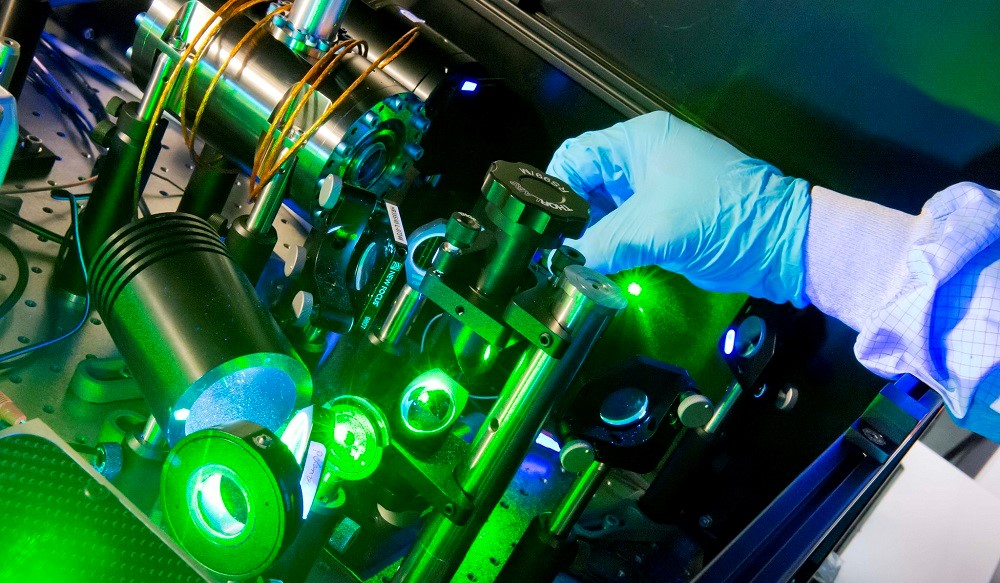

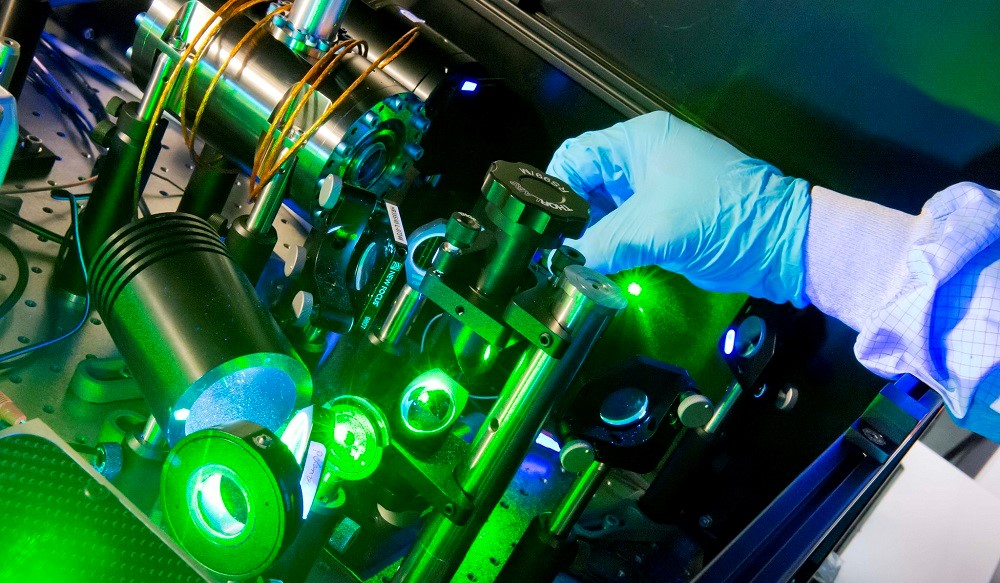

/ photo European Space Agency CC

Nvidia introduced GPU-accelerated computing about a decade ago. The technology is now used in the data centers of research laboratories, IT companies, and IaaS providers that offer vGPUs for high-performance computing.

However, the market increasingly demands faster processing of large volumes of data. About 90% of all information was generated over the past 2-3 years, and according to IBM, humanity generates 2.5 quintillion bytes per day.

The founders of Fathom Computing are convinced that optical computers will help the industry adapt quickly to changing data-processing requirements. The company's prototype performs mathematical operations faster because the encoded values are carried by beams of light: a sequence of lenses and other optical elements (for example, interferometers) changes the parameters of the light, and the system derives the result from those changes.

Wired journalists described the device as "a bunch of lenses, brackets and wires resembling a disassembled telescope." For now, all these components sit in a large black box, but the scientists are working to pack them into a standard server that fits in a data center rack.

The Fathom computer is not a general-purpose processor; it is tailored to certain linear algebra operations. In particular, Fathom is used to train recurrent neural networks with the LSTM architecture and feedforward neural networks.
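
To see why a device limited to linear algebra is still useful for neural networks, here is a minimal Python sketch of one feedforward layer. It is an illustration only, not Fathom's method: the function names and the layer sizes (784, 128, 10) are hypothetical, and which steps an optical device would actually take over is an assumption. The point is that almost all of the arithmetic sits in the matrix-vector product.

```python
import math


def matvec(weights, x):
    """Matrix-vector product: the linear algebra step an accelerator could take over."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def dense_layer(weights, bias, x):
    """One feedforward layer: y = tanh(W x + b)."""
    z = matvec(weights, x)
    return [math.tanh(zi + bi) for zi, bi in zip(z, bias)]


def count_multiply_adds(layer_sizes):
    """Multiply-adds in one forward pass through fully connected layers."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 784 inputs, 128 hidden units, 10 outputs.
sizes = [784, 128, 10]
print("multiply-adds per forward pass:", count_multiply_adds(sizes))
print("activation evaluations:", sum(sizes[1:]))
```

In this toy network the multiply-adds outnumber the activation evaluations by several hundred to one, which is why hardware that only speeds up matrix products can still speed up training as a whole.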

The team is currently testing the computer by teaching it to recognize handwritten digits. So far, the system has achieved a recognition accuracy of 90%. On the project website, the developers have published a visualization of the LSTM data flow generated by their control system.

Fathom Computing says it plans to build, within the next two years, the first production-ready system that trains networks faster than GPU-based computers.

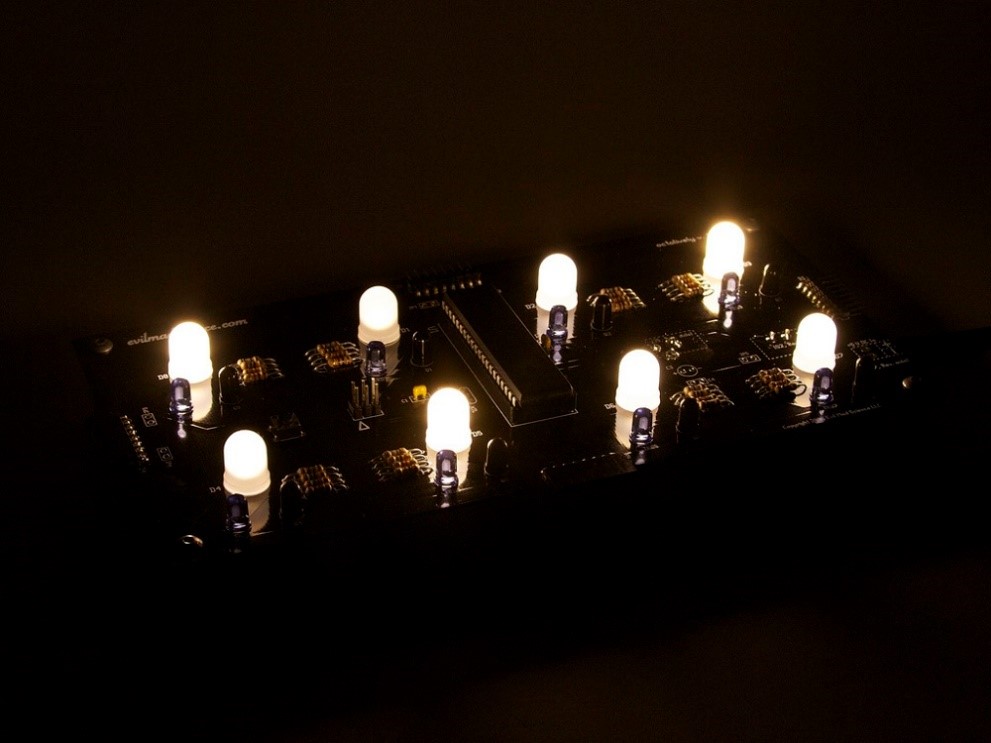

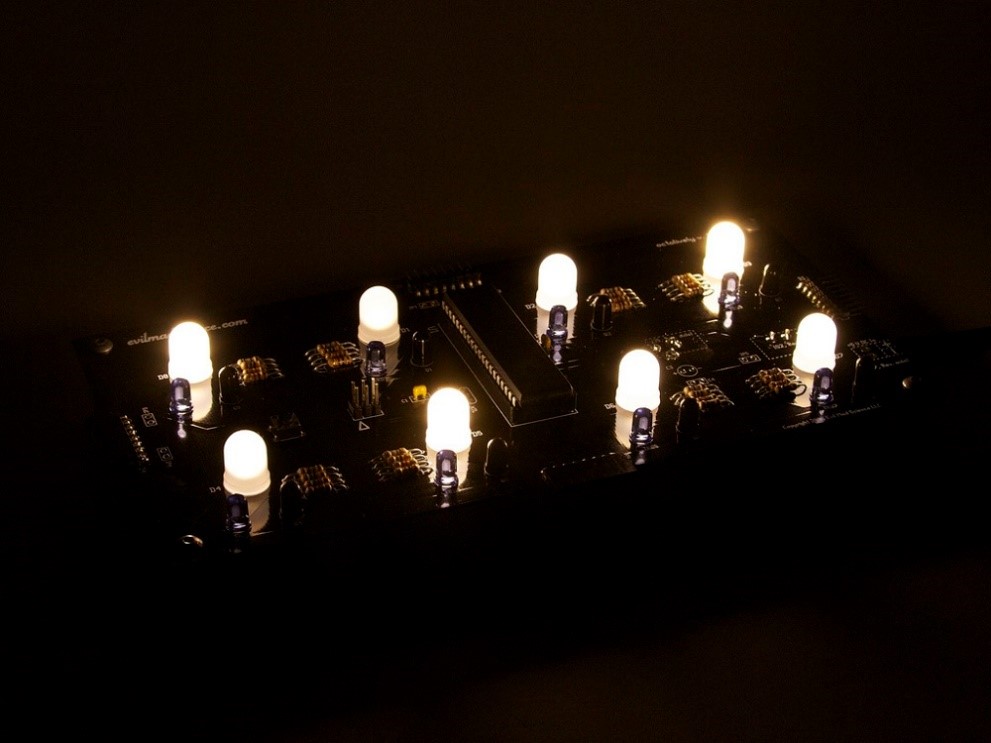

/ photo Windell Oskay CC

Other developments

Fathom is not the only one developing optical chips to accelerate deep learning. The Massachusetts Institute of Technology (MIT) is also working in this area.

MIT scientists have developed a chip that they call a "programmable nanophotonic processor." It performs matrix operations using a set of waveguides whose interconnections are adjusted to suit the task. The chip is based on the Mach-Zehnder interferometer, which changes the properties of the transmitted beams in a way equivalent to matrix multiplication, and on a series of attenuators, which slightly reduce the light intensity. Together, these processes enable the training of an optical neural network.
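
The claim that an interferometer "is equivalent to matrix multiplication" can be made concrete with a small Python sketch. It assumes an idealized, lossless textbook model (an external phase shifter, a 50:50 beamsplitter, an internal phase shifter, and a second 50:50 beamsplitter); this is not a description of the MIT chip's actual layout, and the function names are hypothetical. Light in two waveguides is represented as a vector of two complex amplitudes, and the interferometer acts on it as a 2x2 matrix set by the two phases.

```python
import cmath
import math


def matmul(a, b):
    """Multiply two small matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def mzi(theta, phi):
    """2x2 transfer matrix of an idealized Mach-Zehnder interferometer (assumed model)."""
    s = 1 / math.sqrt(2)
    splitter = [[s, 1j * s], [1j * s, s]]          # 50:50 beamsplitter
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]   # internal phase shifter
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]     # external phase shifter
    return matmul(splitter, matmul(inner, matmul(splitter, outer)))


def propagate(matrix, amplitudes):
    """Send light through an element: a plain matrix-vector product."""
    return [sum(m * a for m, a in zip(row, amplitudes)) for row in matrix]


def attenuate(amplitudes, transmissions):
    """Scale each channel by a factor in [0, 1]; attenuators can only remove light."""
    if any(not 0 <= t <= 1 for t in transmissions):
        raise ValueError("transmission factors must lie in [0, 1]")
    return [t * a for t, a in zip(transmissions, amplitudes)]


def power(amplitudes):
    """Total optical power: sum of squared amplitude magnitudes."""
    return sum(abs(a) ** 2 for a in amplitudes)


# All light enters the first waveguide; the phases set how it is redistributed.
light_in = [1, 0]
light_out = attenuate(propagate(mzi(0.7, 1.3), light_in), [0.9, 0.5])
print("output powers:", [abs(a) ** 2 for a in light_out])
```

In this model, changing `theta` changes how the power is split between the two outputs, while the interferometer itself loses no light; the attenuators supply the scaling that a lossless element cannot. Larger matrices would need many such 2x2 blocks combined, which this sketch does not attempt.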

The startup Lightmatter is also working on a silicon photonic processor for the AI systems market. Its developers use the MIT technology. A prototype with 56 programmable Mach-Zehnder interferometers, implemented on an integrated circuit, was tested last summer.

The system learned to recognize vowel sounds in a set of audio recordings. The experiment achieved a recognition accuracy of 75%. Systems based on conventional hardware reach about 90%, so the scientists will continue to develop and refine the chip's architecture.

Another startup, LightOn, announced in mid-February that it had begun testing a prototype of its optical module, the Optical Processing Unit (OPU), in a data center. The company said it had cut the time required to complete a transfer learning task from 20 minutes on conventional GPUs to 3.5 minutes, a speedup of roughly 5.7 times.

Open beta testing of the system will begin in the spring on the LightOn Cloud platform. The system currently works with PyTorch and is compatible with scikit-learn. Support for other popular ML frameworks, such as TensorFlow, will be added later.

Source: https://habr.com/ru/post/350192/
