Crash Course on Docker: Learn to swim with the big fish

The quick start guide that you are looking for.

If you have followed software trends over the past year, you are probably tired of hearing the word Docker. Chances are you are overwhelmed by the sheer number of developers talking about containers, isolated virtual machines, hypervisors and other DevOps-related voodoo magic. Today we'll sort it all out. It's time to finally understand what containers as a service (CaaS) are and why you need them.

TL;DR

- "Why do I need it?"

  - Review all key terms.

  - Why we need CaaS and Docker.

- Fast start.

  - Install Docker.

  - Create a container.

- Real-world scenario.

  - Create an nginx container to host a static website.

  - Learn how to use build tools to automate Docker commands.

"Why do I need it?"

Not long ago, I asked myself the same question. After being a stubborn holdout for a long time, I finally sat down and embraced the awesomeness of containers. Here is why I think you should give them a try.

Docker?

Docker is software for creating containerized applications. A container is meant to be a small, stateless environment for running a single piece of software.

A container image is a lightweight, stand-alone, executable package of a piece of software that includes everything needed to run it: code, runtime, system tools, system libraries, settings.

- Official Docker website.

In short, a container is a tiny virtual machine with just the bare essentials needed to run the one application you put into it.

Virtual machine?

The name “virtual machine” (VM) speaks for itself: it is a virtual version of a real machine that simulates a machine's hardware inside a larger machine. This lets you run multiple virtual machines on a single large server. Have you ever seen the movie Inception? A virtual machine is a bit like that: a machine inside a machine. The piece of software that makes VMs possible is called a hypervisor.

Hypervisor?

Is your head spinning from all the new terms? Bear with me, there's a reason for all of this. Virtual machines only work because of the hypervisor. It is special software that enables a physical machine to host several different virtual machines. Seen from the outside, the VMs appear to run their own programs and use the host's hardware. In reality, it is the hypervisor that allocates resources to each virtual machine.

Note: If you have ever tried to install virtualization software such as VirtualBox and it failed, the most likely cause is that virtualization is not enabled in your computer's BIOS. This has probably happened to me more times than I can remember. (nervous laughter)

If you're a nerd like me, here’s an amazing post on what a hypervisor is:

Virtualization 101: What is a hypervisor?

Answering your questions...

What is CaaS really for? We have been using virtual machines for a long time, so why did containers suddenly become so popular? Nobody said virtual machines are bad; they are just hard to manage.

DevOps is generally hard, and it usually takes a dedicated person working on it full-time. Virtual machines take up a lot of disk space and RAM, and they need constant configuration. Not to mention the experience required to manage them properly.

Don't do the work twice: automate

With Docker, you can abstract away routine configuration and environment setup and focus on coding instead. With Docker Hub, you can grab prebuilt images and have them up and running in no time.

But the biggest advantage is a homogeneous environment. Instead of installing a long list of dependencies to run an application, you only need to install Docker. Docker is cross-platform, so every developer on the team works in the same environment. The same goes for your development, staging and production servers. That's cool! No more "it works on my machine."

Fast start

Let's start with the installation. Surprisingly, you only need one piece of software installed on your development machine for everything to work fine: Docker. That's all you need.

Docker installation

Fortunately, the installation process is very simple. Here's how to install Docker on Ubuntu.

$ sudo apt-get update
$ sudo apt-get install -y docker.io

That's all it takes. To make sure Docker is running, run one more command.

$ sudo systemctl status docker

The output should look something like this:

● docker.service – Docker Application Container Engine
   Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)
   Active: active (running) since Sun 2018-01-14 12:42:17 CET; 4h 46min ago
     Docs: https://docs.docker.com
 Main PID: 2156 (dockerd)
    Tasks: 26
   Memory: 63.0M
      CPU: 1min 57.541s
   CGroup: /system.slice/docker.service
           ├─2156 /usr/bin/dockerd -H fd://
           └─2204 docker-containerd --config /var/run/docker/containerd/containerd.toml

If the service is stopped, run these two commands to start Docker and make sure it starts at boot.

$ sudo systemctl start docker && sudo systemctl enable docker

With a basic Docker installation, you need to run docker commands with sudo. But if you add your user to the docker group, you can run them without sudo.

$ sudo usermod -aG docker ${USER}
$ su - ${USER}

These commands add your user to the docker group. The su - command starts a new login shell so the new group membership takes effect. To check, run $ id -nG. If docker appears in the list of groups, everything is set up correctly.
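The two checks from this section can be bundled into a small preflight script: is the service running, and is your user in the docker group? Here is a minimal sketch, assuming bash on a systemd-based distribution such as Ubuntu where the unit is named docker. The function names are hypothetical. The script only reports problems and suggests fixes; it changes nothing.

```shell
#!/usr/bin/env bash
# Preflight check after installing Docker (sketch, assumes systemd + bash).
# docker_service_active, in_docker_group and preflight are hypothetical names.

# Succeeds if the "docker" systemd unit is currently running.
docker_service_active() {
  systemctl is-active --quiet docker
}

# Succeeds if the current user belongs to the "docker" group.
# `id -nG` prints group names separated by spaces; we split them one per line
# and require an exact match, so a group like "dockerusers" does not count.
in_docker_group() {
  id -nG | tr ' ' '\n' | grep -qx docker
}

# Prints one status line per check; returns non-zero if any check fails.
preflight() {
  local ok=0
  if docker_service_active; then
    echo "service: running"
  else
    echo "service: stopped (try: sudo systemctl start docker && sudo systemctl enable docker)"
    ok=1
  fi
  if in_docker_group; then
    echo "group: ok, docker works without sudo"
  else
    echo "group: missing (try: sudo usermod -aG docker \$USER, then log in again)"
    ok=1
  fi
  return "$ok"
}
```

Run preflight after installation. Because it returns a non-zero status when either check fails, other scripts can also use it as a gate.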

What about Mac and Windows? Luckily, installation is just as easy: download an installer and run the setup wizard. It doesn't get much easier than that. Look here for the Mac installer and here for Windows.

Container Deployment

Once Docker is installed and running, we can experiment a bit. The first four commands you need to know are:

- create: creates a container from an image;

- ps: lists running containers; add the optional -a flag to list all containers;

- start: starts a created container;

- attach: attaches your terminal's standard input and output to a running container, literally connecting you to it, just like logging into any virtual machine.

Let's start small. Take the Ubuntu image from Docker Hub and create a container from it.

$ docker create -it ubuntu:16.04 bash

The optional -it flags give the container an interactive terminal: -i keeps standard input open and -t allocates a pseudo-terminal. This lets us connect to the container and run the bash command. By specifying ubuntu:16.04, we get the Ubuntu image with the version tag 16.04 from Docker Hub.

After running the create command, make sure the container was created.

$ docker ps -a

The list should look something like this:

CONTAINER ID   IMAGE          COMMAND   CREATED     STATUS    PORTS   NAMES
7643dba89904   ubuntu:16.04   "bash"    X min ago   Created           name

The container is created and ready to run. Starting it is simple: pass its ID to the start command.

$ docker start 7643dba89904

Check again whether the container is running, but this time without the -a flag.

$ docker ps

If it's running, attach to it.

$ docker attach 7643dba89904

The prompt changes. Why? Because you have just entered the container. Now you can run any bash command you are used to in Ubuntu, as if it were an instance running in the cloud. Try it.

$ ls

Everything works fine, even $ ll. A simple Docker container is all you need. This is your own little virtual platform where you can develop, test, or do whatever you want! There's no need for virtual machines or heavyweight software. To prove my point, try installing something in this little container; installing Node, for example, works just fine. To leave the container, type exit. The container will stop, and you can confirm this by listing all containers with $ docker ps -a.
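Copying container IDs out of docker ps -a by hand gets old quickly. Here is a minimal bash sketch of the create and start steps above. It relies on the fact that docker create prints the new container's ID on standard output. start_ubuntu_container is a hypothetical helper name, not a Docker command.

```shell
#!/usr/bin/env bash
# Create and start an Ubuntu 16.04 container, then print its ID (sketch).
# start_ubuntu_container is a hypothetical helper name.
start_ubuntu_container() {
  local cid
  # `docker create` prints the full ID of the container it just created.
  cid=$(docker create -it ubuntu:16.04 bash) || return 1
  # `docker start` echoes the ID back; we discard that and print our own copy.
  docker start "$cid" > /dev/null || return 1
  echo "$cid"
}

# Usage (requires a running Docker daemon):
#   cid=$(start_ubuntu_container)
#   docker ps --filter "id=$cid"   # lists the container if it is running
#   docker attach "$cid"           # Ctrl-p Ctrl-q detaches without stopping it
```

Because the container was created with -it, the default Ctrl-p Ctrl-q sequence lets you leave an attached session without stopping the container. Typing exit, by contrast, stops it.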

Note: By default, processes inside a Docker container run as root, and the ubuntu image doesn't even include the sudo command. Every command you run already has root privileges.

Real-world scenario

Time to get into the real stuff. This is what you will actually use for your projects and production applications.

Stateless containers?

I mentioned above that each container is isolated and stateless. This means that once you remove a container, its contents are permanently deleted.

$ docker rm 7643dba89904

So how do you persist data?

Have you ever heard of volumes? Volumes let you map directories on the host machine to directories inside the container.

$ docker create -it -v "$(pwd)":/var/www ubuntu:latest bash

When creating a new container, add the -v flag to specify which volume to mount. This command binds the current working directory on your computer to the /var/www directory inside the container.

After starting the container with $ docker start <container_id>, you can edit the code on the host machine and see the changes inside the container. Now you can persist data for all kinds of use cases, from storing images to storing database files, and of course for development, where you need live-reload capabilities.
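To see the stateless container and the volume fix side by side, here is a minimal bash sketch. It assumes you run it in a directory you don't mind writing a test file into. It uses docker run --rm, which deletes the container as soon as it exits. The helper name write_through_volume and the file name from-container.txt are hypothetical.

```shell
#!/usr/bin/env bash
# Show that files written to a bind-mounted volume outlive the container (sketch).
# write_through_volume and from-container.txt are hypothetical names.
write_through_volume() {
  local host_dir=${1:-$(pwd)}
  # --rm removes the container on exit; the file lands in host_dir and stays.
  docker run --rm -v "$host_dir":/var/www ubuntu:16.04 \
    bash -c 'echo "hello from the container" > /var/www/from-container.txt'
}

# Usage:
#   write_through_volume
#   cat from-container.txt   # the container is gone, the file is not
```

The process in the container runs as root, so on a Linux host the new file will be owned by root.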

Note: You can combine the create and start steps into one with the run command.

$ docker run -it -d ubuntu:16.04 bash

Note: The only addition is the -d flag, which runs the container detached, in the background.

Why do I talk so much about volumes?

Because we can create a simple nginx web server to host a static website in a couple of easy steps.

Create a new directory and name it whatever you want; I'll call mine myapp for convenience. All you need to do is create a simple index.html file in the myapp directory and paste in the following:

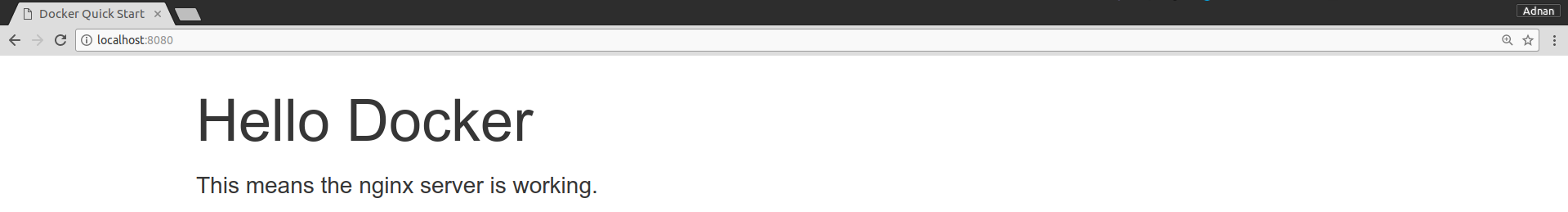

<!-- index.html -->
<html>
  <head>
    <link href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.5/css/bootstrap.min.css" rel="stylesheet" integrity="sha256-MfvZlkHCEqatNoGiOXveE8FIwMzZg4W85qfrfIFBfYc= sha512-dTfge/zgoMYpP7QbHy4gWMEGsbsdZeCXz7irItjcC3sPUFtf0kuFbDz/ixG7ArTxmDjLXDmezHubeNikyKGVyQ==" crossorigin="anonymous">
    <title>Docker Quick Start</title>
  </head>
  <body>
    <div class="container">
      <h1>Hello Docker</h1>
      <p>This means the nginx server is working.</p>
    </div>
  </body>
</html>

We now have a basic web page with a heading. All that's left is to run the nginx container.

$ docker run --name webserver -v "$(pwd)":/usr/share/nginx/html -d -p 8080:80 nginx

We pull the nginx image from Docker Hub to get a ready-to-use nginx setup instantly. The volume configuration is similar to what we did above: we point it at the default directory from which nginx serves HTML files. What's new is the --name option, which names the container webserver, and -p 8080:80, which maps port 80 inside the container to port 8080 on the host machine. Remember to run the command from inside the myapp directory.

Check that the container is running with $ docker ps, then open a browser and go to http://localhost:8080.
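nginx usually comes up within a second or two. In scripts, though, it helps to wait until the port actually answers before opening a browser or running tests against it. Here is a minimal sketch, assuming bash and curl are installed. wait_for_http is a hypothetical helper, and the retry count and one-second delay are arbitrary choices.

```shell
#!/usr/bin/env bash
# Poll a URL until it responds or we run out of attempts (sketch).
# wait_for_http is a hypothetical helper; assumes curl is installed.
wait_for_http() {
  local url=$1 tries=${2:-10} i
  for ((i = 1; i <= tries; i++)); do
    # -f: treat HTTP errors as failures, -s: no progress output, -o: discard the body
    if curl -fs -o /dev/null "$url" 2> /dev/null; then
      echo "up after $i attempt(s)"
      return 0
    fi
    sleep 1
  done
  echo "gave up on $url after $tries attempts" >&2
  return 1
}
```

For example: `docker run --name webserver -v "$(pwd)":/usr/share/nginx/html -d -p 8080:80 nginx && wait_for_http http://localhost:8080`.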

We now have an nginx web server running with just a couple of commands. Edit index.html however you like, reload the page, and you'll see the content change.

Note: You can stop a running container with the stop command.

$ docker stop <container_id>

How can we make life even easier?

There is a saying: if you have to do something twice, automate it. Fortunately, Docker has that covered. Add a Dockerfile next to the index.html file. Its name is simply Dockerfile, with no extension.

# Dockerfile
FROM nginx:alpine
VOLUME /usr/share/nginx/html
EXPOSE 80

A Dockerfile is the build configuration for a Docker image. The emphasis is on images! We specify that we want to use the nginx:alpine image as the base for our image, declare a volume, and expose port 80.

To build an image, we use the build command.

$ docker build . -t webserver:v1

The . specifies the build context, which is the current directory and where Docker looks for the Dockerfile. The -t flag sets the tag for the image, so it will be known as webserver:v1.

This time we didn’t pull a ready-made image from Docker Hub; we built our own. To view all images, use the images command.

$ docker images

Run the image you just built.

$ docker run -v "$(pwd)":/usr/share/nginx/html -d -p 8080:80 webserver:v1

If the webserver container from earlier is still running, stop it first, since both containers need host port 8080. The power of a Dockerfile lies in the customizations you can bake into the image. You can prebuild images to your liking, and if you dislike repetitive tasks, you can go one step further and install docker-compose.
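While you iterate on the Dockerfile, you end up typing the build and run commands together over and over. Here is a minimal bash sketch that chains them, assuming it runs in the myapp directory. build_and_run and the webserver-<tag> container naming scheme are hypothetical choices for this example.

```shell
#!/usr/bin/env bash
# Rebuild the image from ./Dockerfile and (re)start a container from it (sketch).
# Assumes the current directory is myapp (Dockerfile + index.html).
# build_and_run and the "webserver-<tag>" container name are hypothetical.
build_and_run() {
  local tag=${1:-v1}
  local name="webserver-$tag"

  # Build and tag the image, e.g. webserver:v1.
  docker build -t "webserver:$tag" . || return 1

  # Remove an earlier container with the same name; ignore "No such container".
  docker rm -f "$name" > /dev/null 2>&1

  # Same run command as above, plus a predictable container name.
  docker run --name "$name" -v "$(pwd)":/usr/share/nginx/html -d -p 8080:80 "webserver:$tag"
}
```

For example, build_and_run v2 builds webserver:v2 and serves it on port 8080. Only one container can publish host port 8080 at a time, so any other container already using that port still has to be stopped first.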

Docker-compose?

Docker Compose lets you build and run containers with a single command. More importantly, you can define a whole cluster of containers and configure them with docker-compose.

Head over to the install page and install docker-compose on your computer.

Install Docker Compose

Back in the terminal, run $ docker-compose --version to confirm the installation. Now let's compose something.

Next to the Dockerfile, add another file called docker-compose.yml and paste in this snippet.

# docker-compose.yml
version: '2'
services:
  webserver:
    build: .
    ports:
      - "8080:80"
    volumes:
      - .:/usr/share/nginx/html

Be careful with indentation, otherwise docker-compose.yml will not work properly. All that's left is to run it.

$ docker-compose up

Note: Add the -d argument (docker-compose up -d) to run the containers in detached mode. You can then run $ docker-compose ps to see what is currently running, or stop the containers with $ docker-compose stop.

Docker Compose builds the image from the Dockerfile in the current directory (.), maps the ports as we did above, and shares the volumes. See what happened? It's the same thing we did with the build and run commands, except that a single docker-compose up now replaces both.
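An indentation mistake in docker-compose.yml is easier to catch before anything starts. Here is a small bash sketch, assuming docker-compose.yml sits in the current directory. docker-compose config --quiet validates the file without printing it. compose_up is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Validate docker-compose.yml, start the services detached, then list them (sketch).
# Assumes docker-compose.yml is in the current directory; compose_up is hypothetical.
compose_up() {
  # `config --quiet` only validates the file; YAML/indentation errors surface here.
  if ! docker-compose config --quiet; then
    echo "docker-compose.yml is invalid" >&2
    return 1
  fi
  docker-compose up -d && docker-compose ps
}
```

When you are done, docker-compose stop stops the services again.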

Go back to the browser and you'll see that everything works just as before. The only difference is that there's no more tedious typing of commands in the terminal. We replaced them with two configuration files: Dockerfile and docker-compose.yml. You can add both files to your Git repository. Why does that matter? Because they will behave in production exactly as expected. The very same network of containers will be deployed on the production server!

To finish this section, go back to the console and review the list of all containers again.

$ docker ps -a

To remove a container, run the rm command I mentioned above. To delete images, use the rmi command.

$ docker rmi <image_id>

Try not to leave leftover containers lying around; remove them once you no longer need them.
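Removing containers one ID at a time gets tedious once they pile up. Here is a minimal bash sketch that removes every exited container in one go. It relies on docker ps -a -q, which prints only IDs, combined with --filter status=exited. cleanup_exited is a hypothetical name. Docker 1.13 and later also ship a built-in docker container prune that serves a similar purpose.

```shell
#!/usr/bin/env bash
# Remove all containers whose status is "exited" (sketch).
# cleanup_exited is a hypothetical helper name.
cleanup_exited() {
  local ids
  # -a: include stopped containers, -q: print IDs only, one per line.
  ids=$(docker ps -aq --filter status=exited)
  if [ -z "$ids" ]; then
    echo "no exited containers"
    return 0
  fi
  # Word splitting is intentional here: each ID becomes its own argument.
  docker rm $ids
}
```

Images can be removed the same way with docker rmi, once no container uses them anymore.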

Broader perspective?

Docker is not the only technology for creating containers, so I should at least mention the less popular alternatives. Docker is just the most common containerization option, but rkt seems to work great too.

Digging deeper, I have to mention container orchestration. We've only covered the tip of the iceberg. Docker Compose is a tool for creating networks of containers. But once you need to manage large numbers of containers and guarantee maximum uptime, orchestration comes into play.

Managing a large container-based cluster is not a trivial task. As the number of containers grows, we need a way to automate the various DevOps tasks we usually do by hand. Orchestration helps with provisioning hosts, creating or removing containers when you need to scale or replace failed ones, networking containers, and more. This is where tools such as Google's Kubernetes and Docker's own Swarm Mode come to the rescue.

Conclusion

If I haven’t yet convinced you of the tremendous benefits of CaaS and the simplicity of Docker, I strongly recommend moving one of your existing applications into a Docker container and seeing for yourself!

A Docker container is a tiny virtual machine where you can do anything you like, from development, setup and testing to hosting production applications.

The homogeneity Docker provides is like magic for production environments. It simplifies application deployment and server management, because now you know for sure that what works locally will work in the cloud. That's what I call peace of mind. Nobody will ever again have to hear the infamous sentence we have all heard far too many times:

Well, it works on my machine...

Source: A crash course on Docker - Learn to swim with the big fish


Source: https://habr.com/ru/post/350184/
