How to build a strong team of analysts and data engineers? Experience company Wish. Part 2

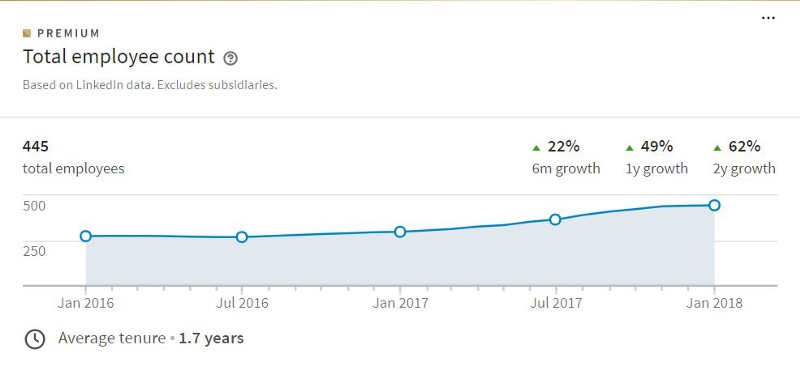

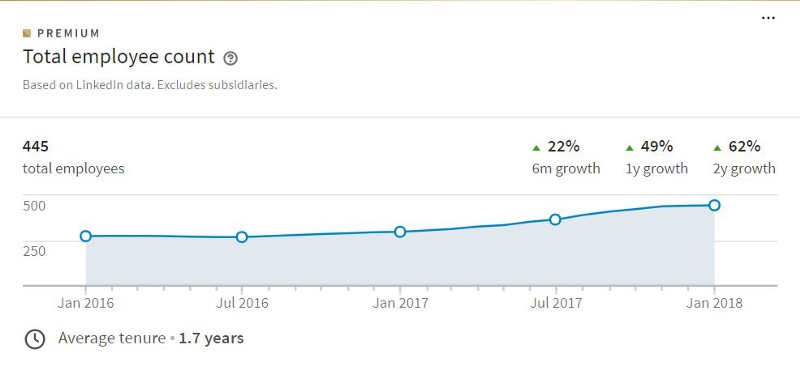

In the first part, we looked at how the data infrastructure was rebuilt in the Wish company in order to increase their analytical capabilities. This time we will pay attention to human resources and talk about how to further scale the company and create an ideal team of engineers and analysts. We will also tell about our approach to hiring the most talented candidates on the market.

Data engineering in a company can develop in two opposite directions. The first path is a classic one, in which case the data engineers mainly build and support the data pipelines, which are then used by analysts. The main disadvantage of this approach is the routine and monotony that can turn any engineer into a gear of a working system, which means it will be harder to retain talented employees in the company.

The second approach is different in that with it, data engineers are engaged in the construction and support of not pipelines of data, but platforms with the help of which anyone in the company can build and use their own pipelines. This allows analysts and data scientists to work end-to-end and fully control their projects.

')

If you want to attract the most talented to the company, then this way is for you, because the best ones want to work on scaling systems and platforms , solving complex technical problems. The productivity of analysts also increases as they no longer depend on anyone.

Of course, there are a couple of drawbacks. Firstly, there will be too many new tables, because it is always easier to create new ones than to try to change and adjust old ones. This will lead to confusion and incompatibility of different metrics and reports. Secondly, the costs and the workload of the infrastructure will increase, since ETL jobs written by analysts are often not optimal.

As a result, neither of the two approaches is imperfect, and the truth lies somewhere in the middle.

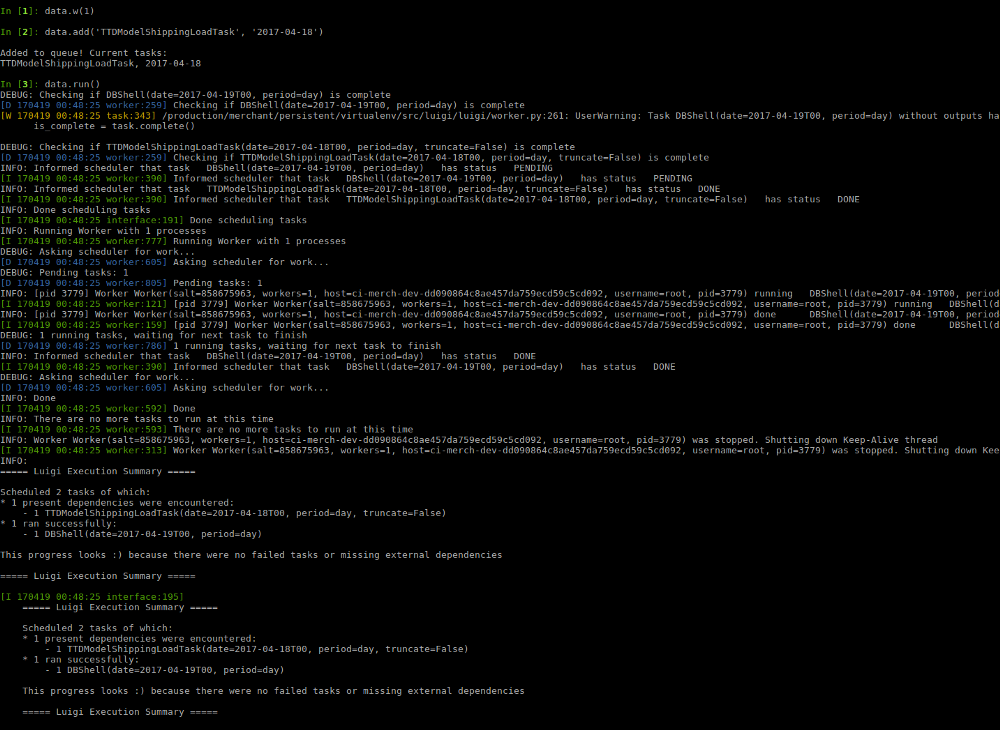

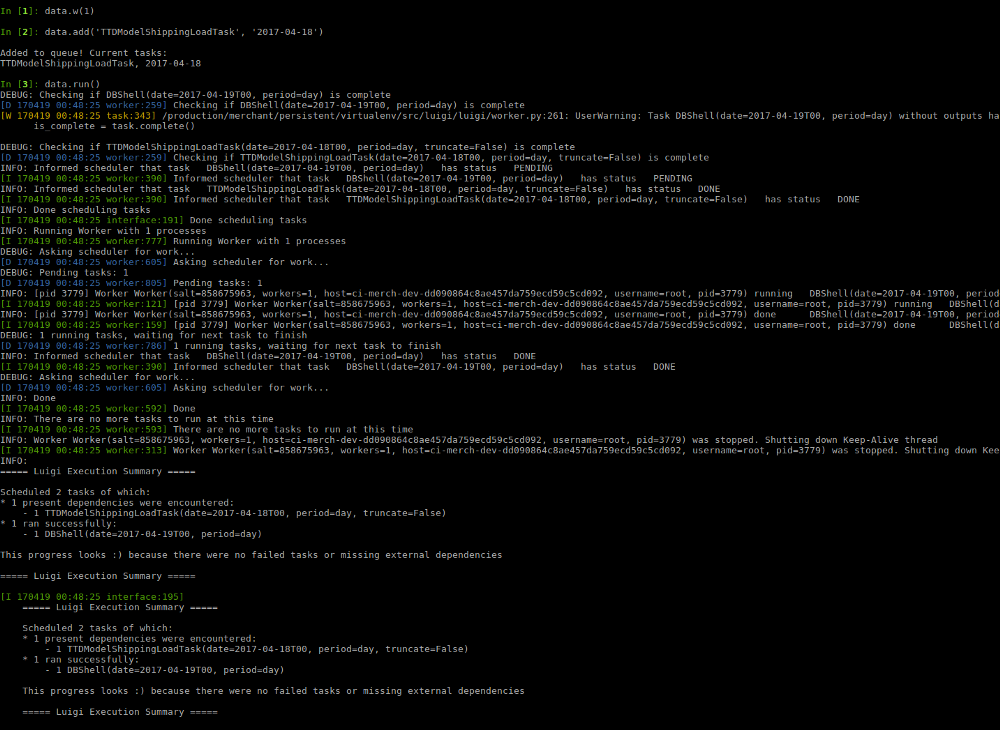

Scaling ETL and Luigi. Our Luigi- based data pipeline , described in the first part, worked fine, however, as soon as we began to build on additional functions, problems began to appear:

We managed to find very simple solutions to these problems:

Thus, a simple pipeline, with which several people work and the one used by more than a hundred, are completely different things and require different approaches.

Scaling data storage. Since we have Redshift and Hive clusters in our company, whose capacities are finite, it is necessary to keep track of queries and tables so that they do not slow down the system and it can continue to grow. For this we have taken several measures. First, we analyze the logs of requests , look for slow ones and, in which case, denormalize them. Secondly, it is a code review to maintain the quality of the code and train analysts. The scripts of our pipelines are regularly uploaded to the Git repository.

Handling system errors. One of the key duties of a data engineer is the error handling of the system, which generates a considerable number of them. At the moment, we have over 1000 active ETL jobs written by data engineers and analysts, some of whom have already left the company, some are interns who have recently left school. Therefore, even 1% of errors per week will mean 10 broken pipelines for this period.

To avoid the negative consequences of these mistakes, we assign “attendants” who are responsible for correcting them, and every week “attendants” change.

We build a team of engineers. In our team, the roles in data engineering are distributed like this:

No matter how good the data infrastructure is, if the people who use it are incompetent. Below we discuss how to create a strong team of analysts.

At the very beginning, analysts were engaged only in extracting data and building reports. It was wrong: analysts are not becoming for this . They always want to control not only the process of building a report, but also the final result - the decision made on its basis . They want to influence the company, not just an API to provide information.

3 key skills for analytics. Of course, analysts have a specialization, and what makes analytics in the field of logistics successful will not necessarily provide him with the same success in marketing. However, there are still 3 skills that each analyst team needs to be effective:

Business intelligence support. The main goal of all BI tools is data democratization, providing everyone with the opportunity to analyze data and make decisions based on them. That is why we launched Looker .

The problem is that not everyone has enough skills to analyze the data qualitatively. Bad data, illogical reports - all this flooded the company. In addition, the number of requests took off, because people could not cope with bad data and, instead of processing it, they demanded new metrics.

Therefore, we deployed a self-analytics system too early. There were so many problems that we had to restrict access and hire more analysts to help other employees process the data.

We build a team of analysts. Only in the case when your analysts are perfect and as if sent from above, you do not need to work out the development strategy of your team. I believe that analysts in a team should be in three main areas: business, technical component and statistics:

And finally, after more than 150 interviews with engineers, analysts and managers at Wish, I gained enough experience to talk about how we approach hiring employees and how to find worthy candidates for the position.

Resume Screening. For recent graduates, the first thing we look at in the resume is grades and the school they graduated from. For more experienced candidates, it is important for us which companies and teams they worked in: organizations with a strong engineering component or data-driven? How important was their role in them?

Interviewing. Strong interviewers should ask flexible questions that can be changed depending on the reaction of the candidate. If it seems that the candidate has rather weak analytical skills, then you need to put pressure on more complex questions, and if he looks uncommunicative, then you can ask him a vague and lengthy question and see how he explains it.

In general, candidates must go through 1-2 phone screenings and 4 face-to-face interviews to get to Wish. Interviews take place quickly, with writing notes, so that the next interviewer can correct his questions.

Interviews should end on a positive note, since this is a very exciting experience for candidates, they may be vulnerable. There is no easier way to destroy the whole motivation of a person than to humiliate him at the interview. Also, conducting the interview in a negative way, the company can quickly earn a bad reputation.

Over the past couple of years we have managed to achieve incredible things. We rebuilt the infrastructure and created a data work team for one of the largest online retailers in the world. And all this was done on the go, during this period the business did not stop for a second. We can say that we changed the tires on a Formula 1 car right while driving. We did it, I hope that thanks to this series of articles, you will succeed!

And if you decide to become a big data analyst or data engineer, but you’ll have to figure it out for yourself for a long time, and you don’t have to ask anyone, then come to the Newprolab programs:

Scaling data engineering

Data engineering in a company can develop in two opposite directions. The first path is a classic one, in which case the data engineers mainly build and support the data pipelines, which are then used by analysts. The main disadvantage of this approach is the routine and monotony that can turn any engineer into a gear of a working system, which means it will be harder to retain talented employees in the company.

The second approach is different in that with it, data engineers are engaged in the construction and support of not pipelines of data, but platforms with the help of which anyone in the company can build and use their own pipelines. This allows analysts and data scientists to work end-to-end and fully control their projects.

')

If you want to attract the most talented to the company, then this way is for you, because the best ones want to work on scaling systems and platforms , solving complex technical problems. The productivity of analysts also increases as they no longer depend on anyone.

Of course, there are a couple of drawbacks. Firstly, there will be too many new tables, because it is always easier to create new ones than to try to change and adjust old ones. This will lead to confusion and incompatibility of different metrics and reports. Secondly, the costs and the workload of the infrastructure will increase, since ETL jobs written by analysts are often not optimal.

As a result, neither of the two approaches is imperfect, and the truth lies somewhere in the middle.

Scaling ETL and Luigi. Our Luigi- based data pipeline , described in the first part, worked fine, however, as soon as we began to build on additional functions, problems began to appear:

- The system was fragile because all the tasks had to be performed within the same queue. A single error or poor request could break the entire pipeline.

- It was difficult to carry out maintenance tasks, the backfill algorithm also failed, it was necessary to manually update / delete tasks in MongoDB.

- Errors generated by queries in Hive could easily overload the cluster, especially when there are hundreds of them due to backfilling caused by a task with a bug inside.

- The monitoring system based on emails has ceased to cope, as the number of people using the pipeline grew and the number of emails per day grew. It became very difficult to sort and track them in order to react to errors in time.

We managed to find very simple solutions to these problems:

- We divided all tasks into 4 main streams: key ones for the whole company, for the data management team, infrastructure management teams and analysts working on individual projects.

- We wrote our own shell for maintenance tasks, for example, for backfill.

- Added circuit breakers that run in case of errors, so that system resources are not spent on bad code over and over again.

- We built our warning and monitoring system in Prometheus + Pagerduty, which allows you to respond to errors and problems on the server.

Thus, a simple pipeline, with which several people work and the one used by more than a hundred, are completely different things and require different approaches.

Scaling data storage. Since we have Redshift and Hive clusters in our company, whose capacities are finite, it is necessary to keep track of queries and tables so that they do not slow down the system and it can continue to grow. For this we have taken several measures. First, we analyze the logs of requests , look for slow ones and, in which case, denormalize them. Secondly, it is a code review to maintain the quality of the code and train analysts. The scripts of our pipelines are regularly uploaded to the Git repository.

Handling system errors. One of the key duties of a data engineer is the error handling of the system, which generates a considerable number of them. At the moment, we have over 1000 active ETL jobs written by data engineers and analysts, some of whom have already left the company, some are interns who have recently left school. Therefore, even 1% of errors per week will mean 10 broken pipelines for this period.

To avoid the negative consequences of these mistakes, we assign “attendants” who are responsible for correcting them, and every week “attendants” change.

We build a team of engineers. In our team, the roles in data engineering are distributed like this:

- Data Infrastructure Engineer. Focused on scaling and reliability of distributed systems. Must have experience in building such systems, be able to explain the choice of various tools.

- Data Platform Engineer. Engaged in building data pipelines. The ideal candidate is a person with a classic background in programming and a desire to build effective systems.

- Analytics Engineer. Focuses on building the main ETL job s and refactoring bad queries and data models. It is enough to own Python + SQL and have developed analytical skills.

Analytics scaling

No matter how good the data infrastructure is, if the people who use it are incompetent. Below we discuss how to create a strong team of analysts.

At the very beginning, analysts were engaged only in extracting data and building reports. It was wrong: analysts are not becoming for this . They always want to control not only the process of building a report, but also the final result - the decision made on its basis . They want to influence the company, not just an API to provide information.

3 key skills for analytics. Of course, analysts have a specialization, and what makes analytics in the field of logistics successful will not necessarily provide him with the same success in marketing. However, there are still 3 skills that each analyst team needs to be effective:

- Understanding the goal. First, analysts must understand why they are performing a particular task. You need to be able to look critically and from different angles at the original question that came from management in order to catch its true value and context.

- Understanding infrastructure and data. Secondly, analysts should understand perfectly how their system works, and how it affects the data. Using data as it is is a disgusting habit, which leads to a distortion of the situation and poor-quality analysis.

- To bring everything to the end. In the course of work it is very easy to forget about the final goal, so analysts should always bring their projects to a logical conclusion. The end result of the analyst’s work is not a dashboard, in which all the available information is aggregated, but recommendations or actions that can have a positive impact on the business.

Business intelligence support. The main goal of all BI tools is data democratization, providing everyone with the opportunity to analyze data and make decisions based on them. That is why we launched Looker .

The problem is that not everyone has enough skills to analyze the data qualitatively. Bad data, illogical reports - all this flooded the company. In addition, the number of requests took off, because people could not cope with bad data and, instead of processing it, they demanded new metrics.

Therefore, we deployed a self-analytics system too early. There were so many problems that we had to restrict access and hire more analysts to help other employees process the data.

We build a team of analysts. Only in the case when your analysts are perfect and as if sent from above, you do not need to work out the development strategy of your team. I believe that analysts in a team should be in three main areas: business, technical component and statistics:

- Report Designer. Most often they come from consulting, finance, or operations. They are able to make profitable business decisions based on quantitative information and are most useful when they are key drivers of change. They need to own SQL, but Python is optional (although it will be a plus).

- Data analysts. Excellent knowledge of Python and SQL, efficiently extract data and, if new tables are required, can build their own ETL pipelines. Finally, they can automate analytics and write non-reusable scripts to speed up work.

- Statistics. Data analysts should not be proficient in statistics, but it’s stupid not to have a person on the team who’s “on you”. Using aggregated metrics, conducting A / B testing on limited data, building predictive models - all this undoubtedly brings great benefits to the business.

Recruiting

And finally, after more than 150 interviews with engineers, analysts and managers at Wish, I gained enough experience to talk about how we approach hiring employees and how to find worthy candidates for the position.

Resume Screening. For recent graduates, the first thing we look at in the resume is grades and the school they graduated from. For more experienced candidates, it is important for us which companies and teams they worked in: organizations with a strong engineering component or data-driven? How important was their role in them?

Interviewing. Strong interviewers should ask flexible questions that can be changed depending on the reaction of the candidate. If it seems that the candidate has rather weak analytical skills, then you need to put pressure on more complex questions, and if he looks uncommunicative, then you can ask him a vague and lengthy question and see how he explains it.

In general, candidates must go through 1-2 phone screenings and 4 face-to-face interviews to get to Wish. Interviews take place quickly, with writing notes, so that the next interviewer can correct his questions.

Interviews should end on a positive note, since this is a very exciting experience for candidates, they may be vulnerable. There is no easier way to destroy the whole motivation of a person than to humiliate him at the interview. Also, conducting the interview in a negative way, the company can quickly earn a bad reputation.

Results

Over the past couple of years we have managed to achieve incredible things. We rebuilt the infrastructure and created a data work team for one of the largest online retailers in the world. And all this was done on the go, during this period the business did not stop for a second. We can say that we changed the tires on a Formula 1 car right while driving. We did it, I hope that thanks to this series of articles, you will succeed!

And if you decide to become a big data analyst or data engineer, but you’ll have to figure it out for yourself for a long time, and you don’t have to ask anyone, then come to the Newprolab programs:

- The program “Big Data Specialist 8.0” starts on March 22

- April 2 launch “Data Engineer 2.0”

Source: https://habr.com/ru/post/349968/

All Articles