Richard Hamming: Chapter 3. The History of Computers - Hardware

“The goal of this course is to prepare you for your technical future.”

Hi, Habr. Remember the awesome article "You and Your Work" (+219, 2265 bookmarks, 353k views)?

So Hamming (yes, yes, the Hamming of the self-checking and self-correcting Hamming codes) has a whole book based on his lectures. Let's translate it, because the man talks sense.

This book is not just about IT; it is a book about how incredibly capable people think. “It is not just a dose of positive thinking; it describes the conditions that increase the chances of doing great work.”


We have already translated 15 (out of 30) chapters.

Thanks for this translation go to urticazoku, who answered my call in the "previous chapter." If you would like to help with the translation, send me a private message or email magisterludi2016@yandex.ru. (By the way, we have also started translating another great book, The Dream Machine: The History of Computer Revolution, and we are translating Marvin Minsky as well.)

Chapter 3. The History of Computers - Hardware

The history of computing probably began with primitive man using pebbles to add. Marshack (of Harvard) found that what had been taken for scratches on bones from cave-man times were in fact carefully drawn lines related to the phases of the moon. Stonehenge, on Salisbury Plain in England, was built in three stages, 1900-1700, 1700-1500, and 1500-1400 BC, and was closely tied to astronomical observation, which indicates considerable astronomical experience. Work in archaeoastronomy has shown that many primitive peoples had substantial knowledge of astronomical events. Structures called observatories survive in China, India, and Mexico, but we do not fully understand how they were used. Our western plains hold many traces of American Indian astronomical observatories.

The suan pan (Chinese abacus) and the abacus are tools more closely related to computing, and the arrival of Arabic numerals from India was a big step forward for pure computation. The bureaucracy strongly resisted the adoption of Arabic numerals (not in their original Arabic form), going as far as declaring them illegal, but in time (the 1400s) their practical and economic advantages won out over the clumsier Roman (and earlier Greek) practice of using letters of the alphabet as numerals.

The next big step was the invention of logarithms by Napier (1550-1617). Then came the slide rule, with logarithmic scales, on which adding two logarithms multiplies two numbers. The slide rule was the next significant step forward, but in analog devices rather than digital ones. I once used a very elaborate slide rule in the shape of a cylinder, 6-8 inches in diameter and 2 feet long, with many scales on the inner and outer cylinders and a magnifying glass for reading the divisions.
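Because $\log(ab) = \log a + \log b$, sliding one logarithmic scale along another adds lengths and therefore multiplies the numbers. The minimal Python sketch below mimics that; the function name is hypothetical, and rounding to three significant figures is an assumption standing in for how finely a person can read a 10-inch scale.

```python
import math

def slide_rule_multiply(a: float, b: float, digits: int = 3) -> float:
    """Multiply two positive numbers the way a slide rule does.

    Adding the logarithms corresponds to laying two scale lengths end to end;
    `digits` (an assumption of this sketch) models the reading precision.
    """
    if a <= 0 or b <= 0:
        raise ValueError("logarithmic scales only cover positive numbers")
    length = math.log10(a) + math.log10(b)   # slide one scale along the other
    product = 10 ** length                    # read the result off the scale
    return float(f"{product:.{digits}g}")     # round to what the eye can read

print(slide_rule_multiply(2.0, 3.0))    # 6.0
print(slide_rule_multiply(3.14, 2.72))  # 8.54
```

Division works the same way, by subtracting one length from the other.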

Slide rules were the standard engineer's tool in the 1930s and 1940s, usually carried in a leather case on the belt as a badge of the group on campus. A standard 10-inch slide rule had a logarithmic scale, scales of squares and cubes, and trigonometric scales. They are no longer made!

Continuing with analog devices, the next important step was the differential analyzer, which used mechanical integrators in analog form. The first successful models were built around 1930 by Vannevar Bush at the Massachusetts Institute of Technology. The RDA No. 2, though still analog and mechanical, had many electronic interconnections. I used it for a while (1947-1948) to compute launch trajectories for the Nike guided missile in the early stages of its design.
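A differential analyzer solved a differential equation by connecting integrators in a loop, so that the output of one became the input of the next. The sketch below imitates that for the simple equation $y'' = -y$ (whose solution with $y(0)=0$, $y'(0)=1$ is $\sin t$), with small numerical time steps standing in for continuously turning wheels; the equation, step count, and function name are illustrative assumptions, not Hamming's Nike problem.

```python
import math

def differential_analyzer(t_end: float, steps: int = 100_000) -> tuple[float, float]:
    """Integrate y'' = -y from y(0) = 0, y'(0) = 1 with two chained "integrators".

    Returns (y, y') at t_end; the exact answer is (sin t_end, cos t_end).
    """
    dt = t_end / steps
    y, dy = 0.0, 1.0
    for _ in range(steps):
        ddy = -y          # the interconnection: feed -y back as the second derivative
        dy += ddy * dt    # first integrator turns y'' into y'
        y += dy * dt      # second integrator turns y' into y (using the updated y')
    return y, dy

y, dy = differential_analyzer(math.pi / 2)
print(round(y, 3), round(dy, 3))  # close to sin(pi/2) = 1 and cos(pi/2) = 0
```

Updating $y'$ before $y$ (the semi-implicit Euler method) keeps the computed solution from slowly spiralling outward; that is a numerical choice of this sketch, not a feature of the mechanical machine.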

During World War II, the military began using electronic analog computers. They used capacitors as integrators instead of wheels and balls (though they could integrate only with respect to time). This was a significant step forward, and I used such a machine at Bell Telephone Laboratories for many years. It was built from parts of old M9 artillery fire control systems. We used M9 parts to build a second computer that could run on its own or together with the first to increase computing power.

Returning to digital computing, Napier also devised "Napier's bones", usually ivory rods marked with numbers that made multiplication easy; they are digital and should not be confused with the analog slide rule.
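Each of Napier's bones lists the multiples of one digit, with every product split into a tens digit and a units digit. To multiply a number by a single digit, you lay the bones for its digits side by side, read the row for that digit, and add along the diagonals. The Python sketch below follows that procedure; the function names are hypothetical, and `napier_multiply` simply adds the shifted single-digit rows as one would on paper.

```python
def napier_row(number: int, k: int) -> int:
    """Multiply a non-negative `number` by a single digit k, as read from Napier's bones."""
    if not 0 <= k <= 9 or number < 0:
        raise ValueError("need a non-negative number and a single digit 0-9")
    # Row k of each bone: the product digit * k split into (tens, units).
    cells = [divmod(int(d) * k, 10) for d in str(number)]
    digits = []
    carry = 0
    # Each diagonal adds the units of one bone to the tens of the bone on its right.
    for i in range(len(cells) - 1, -1, -1):
        tens_from_right = cells[i + 1][0] if i + 1 < len(cells) else 0
        total = cells[i][1] + tens_from_right + carry
        digits.append(total % 10)
        carry = total // 10
    result = cells[0][0] + carry      # the leftmost half-square gives the top digit
    for d in reversed(digits):        # digits were collected right to left
        result = result * 10 + d
    return result

def napier_multiply(a: int, b: int) -> int:
    """Multi-digit multiplier: one bone reading per digit of b, shifted and summed."""
    return sum(napier_row(a, int(d)) * 10 ** i for i, d in enumerate(reversed(str(b))))

print(napier_row(425, 6))        # 2550
print(napier_multiply(425, 36))  # 15300
```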

Modern desk calculators probably grew out of Napier's bones. On December 20, 1623, Schickard wrote to Kepler that a fire in his workshop had destroyed the machine he was building for Kepler. His notes and sketches show that the machine would have performed the four basic operations of arithmetic, if we are generous about what counts as multiplication and division on such a machine. Pascal (1623-1662), born that same year, is often called the inventor of the desk calculator, but his machine could only add and subtract, the only operations his father needed for calculating taxes. Leibniz also worked on calculating machines and added multiplication and division, but his machines were not reliable.

Babbage (1791-1871) is the next great name in the digital field and is often called the father of modern computing. His first design, the difference engine, rested on the simple idea that a polynomial can be evaluated at successive, equally spaced values using only a sequence of additions and subtractions; since most functions can be represented locally by a suitable polynomial, it could serve as a "machine for making tables" (Babbage insisted that the printing be done by the machine, to prevent any human error). The English government financed it, but the project was never completed. The Scheutzes, a Swedish father and son, built several working machines, and Babbage congratulated them on their success. One of them was sold to an observatory in New York and used to compute astronomical tables.
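The idea behind the difference engine is the method of finite differences: for a polynomial of degree $n$, the $n$-th differences of equally spaced values are constant, so once the starting value and its differences are set, every new table entry needs only additions. The Python sketch below uses the polynomial $x^2 + x + 41$ purely as an example; the function names are hypothetical.

```python
def initial_differences(values: list[int], order: int) -> list[int]:
    """Starting settings f(x0), Δf(x0), Δ²f(x0), ... computed from the first few values."""
    settings = [values[0]]
    row = list(values)
    for _ in range(order):
        row = [b - a for a, b in zip(row, row[1:])]
        settings.append(row[0])
    return settings

def difference_engine(settings: list[int], count: int) -> list[int]:
    """Tabulate `count` successive values using only additions."""
    columns = list(settings)
    table = []
    for _ in range(count):
        table.append(columns[0])                 # "print" the current value
        # Add each difference into the column on its left. Going left to right
        # means every addition uses the neighbouring column's old value.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table

def f(x: int) -> int:
    return x * x + x + 41

settings = initial_differences([f(x) for x in range(3)], order=2)  # [41, 2, 2]
print(difference_engine(settings, 6))  # [41, 43, 47, 53, 61, 71]
```

Only the first `order + 1` values have to be computed by hand; everything after that is addition, which is what made the design mechanizable.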

As has often happened in computing, Babbage had not finished the difference engine before he conceived the much more powerful analytical engine, whose design is close to the von Neumann design. He never got a working machine; a group in England (1992) built one from his drawings, and it worked as intended.

The next major practical stage was the Comptometer, which was only an adder; but through repeated addition and shifting, which amounts to multiplication, it was very widely used for many, many years. It was followed by a series of more modern desk calculators: the Millionaire, then the Marchant, Friden, and Monroe. At first they were powered and controlled by hand, but gradually some of the control was built in, mainly through mechanical linkages. From 1937 on they were gradually fitted with electric motors to carry out the more complex operations. By 1944 at least one of them had a built-in square root operation (still done with intricate mechanical linkages). Such desk machines were the basis of the computing groups that operated them. For example, when I went to Bell Telephone Laboratories in 1946, there were four such groups in the Labs, usually six to ten girls each: a small group in the mathematics department, a large one in the network department, one in switching, and one in quality control.
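Multiplying on an adding machine such as the Comptometer meant adding the multiplicand once for each unit of a multiplier digit, then shifting one decimal place and repeating for the next digit. The Python sketch below (a hypothetical function name) shows that this takes only as many additions as the sum of the multiplier's digits, not as many as the multiplier itself.

```python
def shift_and_add_multiply(multiplicand: int, multiplier: int) -> tuple[int, int]:
    """Return (product, number_of_additions) for non-negative integers."""
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative integers only")
    product, additions = 0, 0
    shifted = multiplicand
    while multiplier > 0:
        multiplier, digit = divmod(multiplier, 10)   # take the units digit first
        for _ in range(digit):                       # repeated addition
            product += shifted
            additions += 1
        shifted *= 10                                # shift one decimal place left
    return product, additions

print(shift_and_add_multiply(1234, 567))  # (699678, 18): 7 + 6 + 5 additions
```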

Punched-card computing came about because one far-sighted person saw that the Federal census, which by law must be taken every 10 years, was taking so long that the 1890 census would not be finished before the next one began unless machine methods were used. Hollerith took on the job and built the first punched-card equipment, and for later censuses he built more powerful machines to keep up with both the growing population and the growing number of questions asked. In 1928 IBM began using cards with rectangular holes, so that electric brushes could easily detect the presence or absence of a hole at a given position on a card. Powers, who had left the census group, used cards with round holes, designed to be sensed by mechanical rods, or "fingers."

Around 1935 IBM built the 601, a mechanical punch that multiplied and could include two additions to the product at the same time. It became one of the mainstays of computing. About 1500 of them were leased, and they took on average 2 or 3 seconds per multiplication. These machines, together with some special machines for triple products and division, were used at Los Alamos to compute the designs of the first atomic bombs.

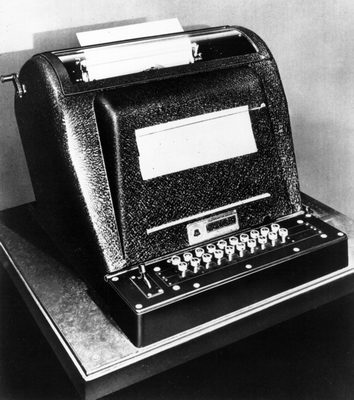

In the electromechanical, that is relay, area, George Stibitz built (1939) a computer that worked with complex numbers and demonstrated it at Dartmouth (1940) while the machine itself stayed in New York, so it was one of the first remote terminals; and since it usually had three input stations in different places in the Laboratories, it was, if you like, a "shared computer."

Konrad Zuse in Germany and Howard Aiken at Harvard, like Stibitz, each produced a series of relay computers of increasing complexity. Stibitz's Model 5 had two computers in the same machine and could split a job between them when needed, as in a multiprocessor system. Of the three, Zuse was probably the best, considering both the difficulties he faced and his later contributions to software.

It is usually said that the era of electronic computers began with ENIAC, built for the US Army in 1946. It had about 18,000 vacuum tubes and was huge, and as originally designed it was wired up much like IBM plugboards, except that the wiring for a particular problem was spread over a whole machine room! As long as it was used for its original purpose, computing ballistic trajectories, this defect was not serious. Eventually, like the later IBM CPC, it was cleverly rearranged by its users to act as if it were programmed from instructions (numbers set on its function tables) rather than from wiring.

Mauchly and Eckert, who built ENIAC, found, like Babbage, that before finishing their first machine they had already conceived a larger, internally programmed machine, the EDVAC. Von Neumann, a consultant on the project, wrote a report, and as a result he is often credited with internal programming, although, as far as I know, he never claimed or denied it. In the summer of 1946 Mauchly and Eckert gave a course, open to all, on how to design and build electronic computers, and many of the participants went off to build their own machines; Wilkes of Cambridge, England, was the first to benefit, with the EDSAC.

At first each machine was one of a kind, although many were copies of the Institute for Advanced Study machine built under von Neumann (and were often finished before it), because work on that machine was delayed. As a result, many of the so-called copies, such as the MANIAC-I (1952) (so named to put an end to the silly names being given to machines), built under the direction of N. C. Metropolis, were completed before the Institute's machine. The MANIAC-I and MANIAC-II (1955) were built at Los Alamos, and the MANIAC-III (1959) at the University of Chicago. The federal government, especially the military, funded most of the early machines and was a great help in getting the computer revolution started.

The first commercial production of electronic computers was again led by Mauchly and Eckert, and since their company was merged into another one, their machines were called UNIVACs; note especially the one for the Census Bureau. IBM came in somewhat later with 18 IBM 701s (20 if you count the secret cryptographic users). I well remember a group of us, after a session on the IBM 701 at a meeting where the 18 proposed machines were discussed, all agreeing that this would saturate the market for years to come! Our mistake was simply that we thought only about the things we were doing then and did not think about entirely new uses for machines. The best experts of the time were completely wrong! And by a lot! And not for the last time!

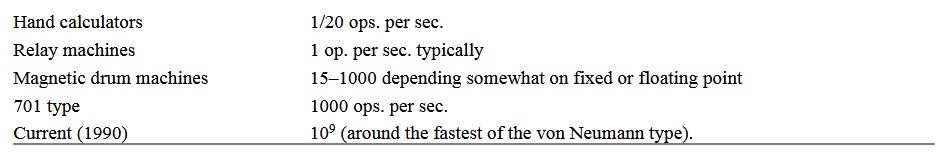

Let's compare:

The changes in speed, and in the corresponding memory size, that I have dealt with should give you an idea of what you will live through in your career. Even for von Neumann-type machines, speeds may well increase by another factor of 100 at most.

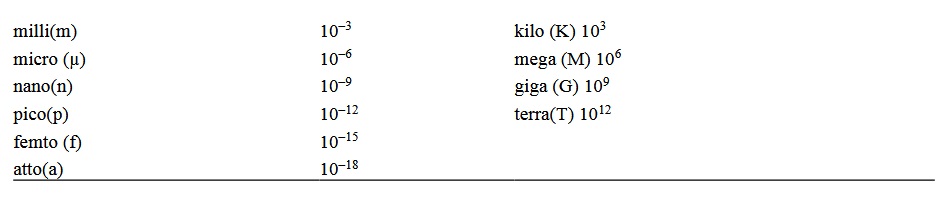

Since such numbers are far beyond human scale, I need to put the speeds described into human terms. First, the notation (standard symbols in parentheses): giga (G) = $10^9$, nano (n) = $10^{-9}$, pico (p) = $10^{-12}$, femto (f) = $10^{-15}$.

Now to human dimensions. A day has $60 \times 60 \times 24 = 86{,}400$ seconds. A year has about $3.15 \times 10^7$ seconds, and 100 years, probably longer than your lifetime, about $3.15 \times 10^9$ seconds. Thus in 3 seconds a machine performing $10^9$ floating-point operations per second (flops) does more operations than there are seconds in your entire life, and almost certainly without error!
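The arithmetic above is easy to check. The short Python sketch below assumes a 365-day year (a 365.25-day year gives about $3.16 \times 10^7$ seconds) and shows that 3 seconds at $10^9$ flops equals the number of seconds in roughly 95 years.

```python
SECONDS_PER_DAY = 60 * 60 * 24                 # 86,400
SECONDS_PER_YEAR = SECONDS_PER_DAY * 365       # about 3.15e7 (365-day year)
SECONDS_PER_CENTURY = 100 * SECONDS_PER_YEAR   # about 3.15e9

FLOPS = 1e9                                    # floating-point operations per second
ops_in_3_seconds = 3 * FLOPS

print(f"{SECONDS_PER_YEAR:.3g} s per year")        # 3.15e+07 s per year
print(f"{SECONDS_PER_CENTURY:.3g} s per century")  # 3.15e+09 s per century
# How many years' worth of seconds equal 3 seconds of machine work?
print(f"{ops_in_3_seconds / SECONDS_PER_YEAR:.0f} years")  # 95 years
```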

Another human-scale example is the speed of light in vacuum, $3 \times 10^{10}$ cm/s (in a wire it is about 7/10 of that). So in a nanosecond light travels 30 cm, about one foot. In a picosecond, of course, it travels about 1/100 of an inch. These are the distances a signal can travel (at best) in a circuit. So at some of the frequencies we now use, parts must be very close together, close in human terms, or much of the potential speed is lost in getting from one part to another. Also, we can no longer use lumped-circuit analysis.

What about natural length scales instead of human ones? Atoms vary in size, typically 1 to 3 angstroms (an angstrom is $10^{-8}$ cm), and in a crystal they are usually spaced about 10 angstroms apart, though there are exceptions. In 1 femtosecond light passes about 300 atoms. So the parts of a very fast computer must be small and close together!
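The distances in the last two paragraphs all follow from distance = speed × time. The Python sketch below uses the text's round numbers ($3 \times 10^{10}$ cm/s, 7/10 of that in a wire, about 10 angstroms between atoms); the constant and function names are just labels chosen for this illustration.

```python
C_VACUUM = 3e10          # speed of light in vacuum, cm/s
WIRE_FACTOR = 0.7        # signal speed in a wire as a fraction of C_VACUUM
CM_PER_INCH = 2.54
ATOM_SPACING = 1e-7      # about 10 angstroms between atoms, in cm (1 angstrom = 1e-8 cm)

def distance_cm(seconds: float, in_wire: bool = False) -> float:
    """Distance a signal covers in `seconds`, in vacuum or in a wire."""
    speed = C_VACUUM * (WIRE_FACTOR if in_wire else 1.0)
    return speed * seconds

print(f"1 ns in vacuum: {distance_cm(1e-9):.0f} cm")                   # 30 cm
print(f"1 ns in a wire: {distance_cm(1e-9, in_wire=True):.0f} cm")     # 21 cm
print(f"1 ps in vacuum: {distance_cm(1e-12) / CM_PER_INCH:.3f} inch")  # 0.012 inch
print(f"1 fs in vacuum: {distance_cm(1e-15) / ATOM_SPACING:.0f} atoms")  # 300 atoms
```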

If you think of a doped transistor, with impurities running at about 1 in a million, you probably cannot imagine a transistor with a single impurity atom; but if you lower the temperature to reduce background noise, you might imagine 1000 impurities. At 1 in a million, that means about $10^9$ atoms, which makes the solid-state device at least about 1000 atoms on a side. With interconnections running at least ten times the size of the device, you realize that a distance of up to 100,000 atoms between some interconnected devices is actually large (3 picoseconds).

Then there is heat dissipation. Although there has been talk of thermodynamically reversible computers, so far it has been only talk and published papers, and heat still matters. The more devices per unit area and the more often they change state, the more heat is generated in a small area, and it must be removed before everything melts. To compensate, we lower the voltage, now down to 2 or 3 volts. Diamond substrates for chips are currently being considered, since diamond conducts heat very well, much better than copper. There is also a reasonable chance of finding a similar crystal structure, perhaps cheaper than diamond, with very good thermal conductivity.

To speed up computers we have moved to 2, 4, and even more arithmetic units in the same computer, and we have also developed pipelining and cache memory. All of these are small steps toward highly parallel computers.

So you can see the writing on the wall for the single-processor machine: we are approaching saturation. Hence the fascination with highly parallel machines. Unfortunately, there is no single common architecture for them but many competing ones, which usually require different strategies to exploit their potential speed and have different strengths and weaknesses. There will probably not be one standard design for parallel computers, so we can expect problems and a scattering of effort across the various promising directions.

From a chart made long ago at Los Alamos (LANL) using data from the fastest computer on the market at the time, we found an equation for the number of operations per second:

and it describes the data fairly well. Here time is measured from 1943. The value extrapolated for 1987 (about 20 years ahead!) was about $3 \times 10^8$, and it was on target. The limiting asymptote is $3.576 \times 10^9$ operations per second for a von Neumann-type computer with a single processor.

In this history of computer growth you see a realization of the standard S-shaped growth curve: a very slow start, a rapid rise, a long stretch of almost linear growth in speed, and then a collision with inevitable saturation.

Back to human dimensions. When digital computers first appeared at Bell Labs, I rented time on them by the hour so often that the head of the mathematics department decided it would be cheaper to hire me as an employee. I tried not to argue with him, because I considered arguments useless; they would only increase his resistance to digital computers. Once the boss says “No!”, it is very hard for him to change his decision, so do not let him say “No!” to your proposal. I found that in my early years I doubled the amount of computation per year every 15 months. A few years later the doubling time settled at about 18 months. The department head kept saying that I could not keep this up forever, and my polite reply was usually: “You are right, of course, but just watch me double the amount of computation every 18-20 months!” The machines let me and my successors keep doubling the amount of computation over the years. All those years we were living on the almost straight part of the S-curve.
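The difference between a 15-month and an 18-month doubling time compounds quickly. The small Python sketch below (a hypothetical helper; the ten-year horizon is chosen only for illustration) computes the growth factor $2^{12y/m}$ for $y$ years and a doubling time of $m$ months.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """How many times larger the yearly amount of computation becomes after `years`."""
    return 2 ** (12 * years / doubling_months)

print(growth_factor(10, 15))         # 256.0: eight doublings in ten years
print(round(growth_factor(10, 18)))  # 102: about 6.7 doublings
```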

Still, to be fair to the department head, it was his remarks that made me realize that what counts is not the number of operations performed but, so to speak, how many micro-Nobel prizes I computed. Hence the motto of the book I published in 1961:

The purpose of computing is insight, not numbers.

A good friend of mine revised it:

The purpose of computing is not yet in sight.

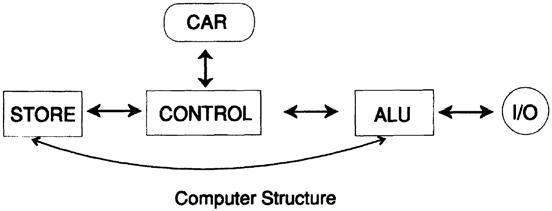

Figure 3.1

Now we need to turn to some details of how computers have been designed for many years. The smallest parts we will consider are two-state devices for storing bits of information and transistor gates that either pass or block a signal. Both are binary devices, and in the current state of knowledge they provide the simplest and fastest computing methods we know.

From such parts we build combinations that can store large arrays of bits; these arrays are often called number registers. A logic gate is simply a combination of such devices, including transistor gates. From these we build an adder, and indeed every larger unit of the computer.
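As an illustration of building an adder out of gates, here is a minimal Python sketch of a ripple-carry adder, one common construction (the text does not say which adder design it has in mind). Each "gate" is a tiny function on single bits, a full adder is wired from those gates, and full adders are chained so that each carry feeds the next bit; the names and the 8-bit width are assumptions of the sketch.

```python
def and_gate(a: int, b: int) -> int:
    return a & b

def or_gate(a: int, b: int) -> int:
    return a | b

def xor_gate(a: int, b: int) -> int:
    return a ^ b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits; return (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out

def ripple_carry_add(x: int, y: int, width: int = 8) -> tuple[int, int]:
    """Add two unsigned `width`-bit numbers; return (sum mod 2**width, final carry)."""
    result, carry = 0, 0
    for i in range(width):                   # least significant bit first
        a, b = (x >> i) & 1, (y >> i) & 1    # pick out bit i of each register
        s, carry = full_adder(a, b, carry)   # the carry ripples to the next stage
        result |= s << i
    return result, carry

print(ripple_carry_add(100, 55))   # (155, 0)
print(ripple_carry_add(200, 100))  # (44, 1): 300 does not fit in 8 bits
```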

Turning to still larger units, we have a machine consisting of (1) a memory, (2) a control unit, and (3) an arithmetic logic unit. The control unit contains a register we will call the Current Address Register (CAR). It holds the address where the next instruction is to be found (Figure 3.1).

Computer cycle:

1. Get the address of the next instruction from the CAR.

2. Go to that address in memory and fetch the instruction.

3. Decode and execute the instruction.

4. Add 1 to the address in the CAR and start again.
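The four-step cycle can be sketched in a few lines of Python. The instruction set here (LOAD, ADD, STORE, and HALT acting on a single accumulator) is hypothetical, chosen only to make the cycle visible; instructions and data share one memory, and the variable `car` plays the role of the Current Address Register.

```python
def run(memory: list, max_cycles: int = 1000) -> list:
    car = 0   # Current Address Register: where the next instruction is
    acc = 0   # accumulator in the arithmetic logic unit
    for _ in range(max_cycles):
        op, addr = memory[car]        # steps 1-2: fetch the instruction at address CAR
        if op == "LOAD":              # step 3: decode and execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {car}")
        car += 1                      # step 4: add 1 to CAR and start again
    raise RuntimeError("no HALT within max_cycles")

# Addresses 0-3 hold the program and 4-6 hold data: memory[6] = memory[4] + memory[5].
program = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
print(run(program)[6])  # 5
```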

We see that the machine does not know where it has been or where it is going; at best it has a myopic view of simply repeating the same cycle endlessly. Below this level, the transistor gates and two-state storage devices know no meaning at all; they simply react to what they are given. They have no global knowledge of what is going on, nor any sense of meaning attached to any bit, whether in storage or in the operation of a transistor.

There are instructions that, depending on some state of the machine, put a new instruction address into the CAR (and in such cases 1 is not added). The machine then starts its cycle again and simply fetches the instruction at whatever address is now in the CAR, which need not be the address following the previous instruction.
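A conditional jump can be added to a toy one-accumulator machine by letting certain instructions overwrite the CAR instead of adding 1 to it. In the self-contained Python sketch below, the instruction names (including JUMP and JUMP_IF_ZERO) are hypothetical; the program sums N + (N-1) + ... + 1 by looping until a counter reaches zero.

```python
def run_with_jumps(memory: list, max_cycles: int = 10_000) -> list:
    car, acc = 0, 0                      # Current Address Register and accumulator
    for _ in range(max_cycles):
        op, addr = memory[car]           # fetch the instruction at address CAR
        if op == "JUMP":                 # unconditional: put addr into CAR
            car = addr
            continue                     # skip the usual "add 1"
        if op == "JUMP_IF_ZERO" and acc == 0:
            car = addr                   # condition met: CAR gets a new address
            continue
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        elif op != "JUMP_IF_ZERO":       # a JUMP_IF_ZERO whose test fails falls through
            raise ValueError(f"unknown instruction {op!r} at address {car}")
        car += 1                         # ordinary case: next address in sequence
    raise RuntimeError("no HALT within max_cycles")

N, SUM, ONE = 10, 11, 12                           # data addresses
program = [
    ("LOAD", N), ("JUMP_IF_ZERO", 9),              # 0-1: leave the loop when N is 0
    ("LOAD", SUM), ("ADD", N), ("STORE", SUM),     # 2-4: SUM += N
    ("LOAD", N), ("SUB", ONE), ("STORE", N),       # 5-7: N -= 1
    ("JUMP", 0),                                   # 8: back to the top
    ("HALT", 0),                                   # 9
    5, 0, 1,                                       # 10-12: N, SUM, ONE
]
print(run_with_jumps(program)[SUM])  # 15
```

Nothing in the machine "knows" it is looping; the only difference from straight-line execution is which address ends up in the CAR.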

I want you to understand that the machine processes bits of information according to other bits, and as far as the machine is concerned there is no meaning in what is happening; it is we who attach meaning to the bits. A machine is a "machine" in the classical sense: it does what it does and nothing else (unless it malfunctions). There are, of course, real-time interrupts and other ways for new bits to get into the machine, but to the machine they are just bits.

But before we leave this topic, recall the words of Democritus (460-336? BC): "Everything is atoms and void." In this he expressed the view of many modern physicists that everything, including you and me, is made of molecules, and that we exist in an energy field. Nothing else! Are we machines? Many people do not want to accept this; they feel they are something more than a mass of molecules blindly colliding with one another, which is how we picture a computer. We will look at this question in Chapters 6-8, on "Artificial Intelligence" (AI).

There are advantages to viewing a computer as a machine, a collection of storage devices and bit-processing units, for example when debugging a program (finding its errors). The thing to keep in mind when debugging is that the machine obeys the instructions and nothing more; it has no "free will" or self-awareness as people do.

How different are we from machines, really? We would all like to think we are different, but are we? This is a sensitive subject, and emotion and religious belief tend to dominate the arguments. We will return to it in Chapters 6-8 on AI, when we have more knowledge with which to discuss it.

To be continued...



Book content and translated chapters


- "Intro to the Art of Doing Science and Engineering: Learning to Learn" (March 28, 1995). Translation: Chapter 1

- "Foundations of the Digital (Discrete) Revolution" (March 30, 1995). Translation: Chapter 2

- "History of Computers - Hardware" (March 31, 1995) (translation in progress)

- "History of Computers - Software" (April 4, 1995). Translation: Chapter 4

- "History of Computers - Applications" (April 6, 1995)

- "Artificial Intelligence - Part I" (April 7, 1995)

- "Artificial Intelligence - Part II" (April 11, 1995)

- "Artificial Intelligence III" (April 13, 1995). Translation: Chapter 8

- "n-Dimensional Space" (April 14, 1995). Translation: Chapter 9

- "Coding Theory - The Representation of Information, Part I" (April 18, 1995)

- "Coding Theory - The Representation of Information, Part II" (April 20, 1995)

- "Error-Correcting Codes" (April 21, 1995)

- "Information Theory" (April 25, 1995)

- "Digital Filters, Part I" (April 27, 1995)

- "Digital Filters, Part II" (April 28, 1995)

- "Digital Filters, Part III" (May 2, 1995)

- "Digital Filters, Part IV" (May 4, 1995)

- "Simulation, Part I" (May 5, 1995)

- "Simulation, Part II" (May 9, 1995)

- "Simulation, Part III" (May 11, 1995)

- "Fiber Optics" (May 12, 1995)

- "Computer Aided Instruction" (May 16, 1995)

- "Mathematics" (May 18, 1995). Translation: Chapter 23

- "Quantum Mechanics" (May 19, 1995). Translation: Chapter 24

- "Creativity" (May 23, 1995). Translation: Chapter 25

- "Experts" (May 25, 1995). Translation: Chapter 26

- "Unreliable Data" (May 26, 1995)

- "Systems Engineering" (May 30, 1995). Translation: Chapter 28

- "You Get What You Measure" (June 1, 1995). Translation: Chapter 29

- "How Do We Know What We Know" (June 2, 1995)

- Hamming, "You and Your Research" (June 6, 1995). Translation: "You and Your Work"


Source: https://habr.com/ru/post/349936/
