From a series of conversations with colleagues, or a grain of experience: DC Edge design

Yesterday I talked with an old friend, who told me about finishing a large data center upgrade project: a network designed from scratch, Leaf/Spine, ToR, new equipment, fault tolerance, everything beautiful and fresh. We have known each other since the days when 40 Gbit/s per slot seemed beyond the limit, and our professional paths really converged while we were studying internal oversubscription, architecture, and traffic-forwarding behavior in the line cards of a well-known manufacturer. So when my friend asked, “Do you know why I'm calling you?”, I answered without thinking: “What, drops again?”

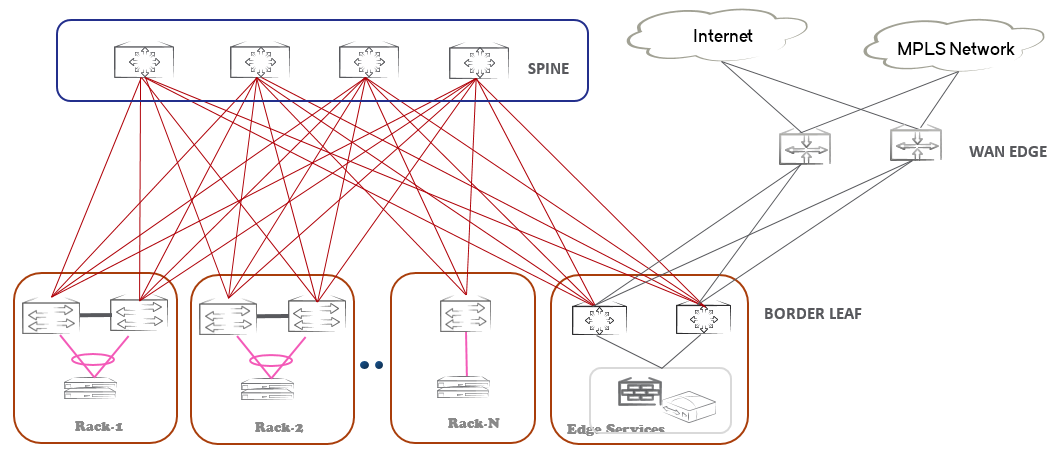

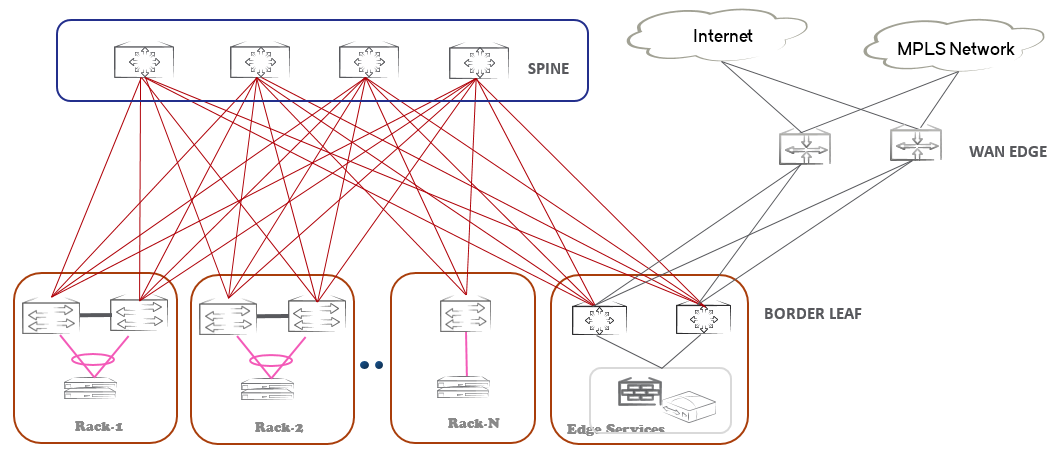

Having received an affirmative answer, I tried to find out what one usually tries to find out in such a situation: the traffic matrix and profile, the switch models, the uplink/downlink capacity ratio, the order of magnitude of the number of active servers, the port types, and so on. I cannot share the details of what I heard publicly, but it is worth saying that the switches my friend deals with are based on Broadcom's Trident-2, which means the problem described below is to some extent specific to one chip manufacturer. This note, however, is less about internal architecture than about the external design as a whole. So, I managed to find out that the complaints concern a pair of Leaf switches that connect the data center to the outside world and to service devices.

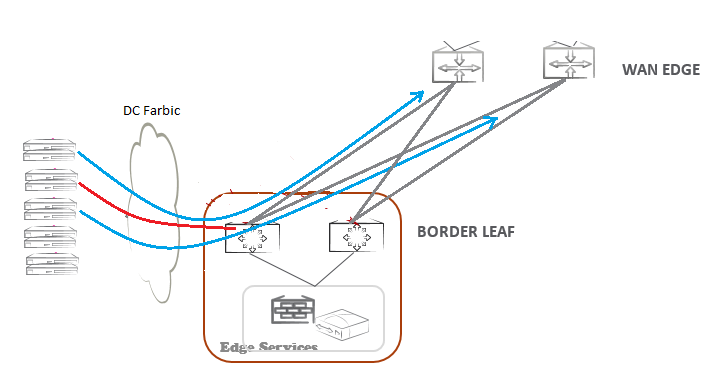

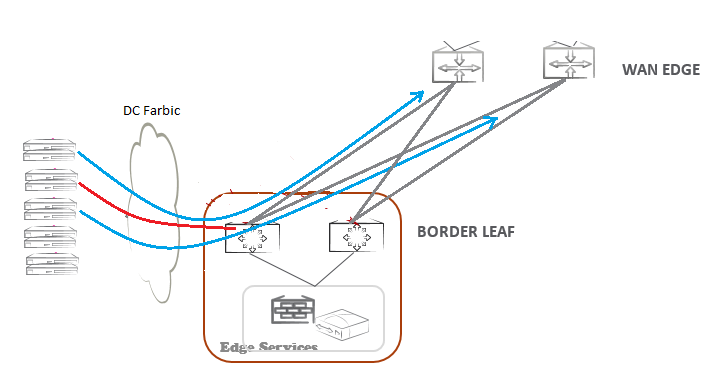

It turned out that on the pair of Border Leaf switches, “wide” LAGs had been built toward the upstream WAN Edge routers. Data center traffic is usually asymmetric between the inbound and outbound directions; we are interested in the Border Leaf to WAN direction, where the drops are observed. On one side of the Border Leaf we have several 40G ports facing the fabric, on the other a LAG of 10G ports with even greater total capacity. At the same time, the average utilization does not reach even half of what is available. I asked my friend once more what type of ports the servers are connected to, and it turned out that all ToRs have 10G access ports.

Then I suggested thinking about this network as good old TDM: each port in the LAG toward the WAN can be viewed as one time slot. Suppose the rest of the fabric is fully non-blocking, i.e. as soon as a server starts sending a flow, one of the time slots is used exclusively for it. This assumption is not far from the truth, since the line rates of the access ports and of the LAG ports toward the WAN coincide, and a server always “throws” data into the network at the line rate of its network card. With TCP, the time slot is occupied for as long as it takes to transmit the number of bytes in the TCP window. No matter how “wide” the LAGs are, the number of servers in the data center clearly exceeds the number of ports in them, so two (in practice more) flows may well end up in buffer memory (we are back from TDM to Ethernet) waiting to be sent out of the same port.
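How likely it is that two flows meet on the same LAG member can be estimated with a back-of-the-envelope model. The sketch below is only an illustration and rests on an assumption that is not in the original conversation: each flow is hashed to a LAG member port uniformly and independently, which turns the question into the classic birthday problem. The function name and the 16-port LAG are hypothetical.

```python
def collision_probability(lag_ports: int, flows: int) -> float:
    """Probability that at least two of `flows` simultaneous flows
    are hashed onto the same LAG member port.

    Assumption (not from the article): every flow picks a member
    port uniformly at random, independently of the other flows.
    """
    if flows > lag_ports:
        # More flows than ports: a shared port is unavoidable.
        return 1.0
    p_all_distinct = 1.0
    for i in range(flows):
        # The (i+1)-th flow must avoid the i ports already taken.
        p_all_distinct *= (lag_ports - i) / lag_ports
    return 1.0 - p_all_distinct


if __name__ == "__main__":
    # Hypothetical 16 x 10G LAG toward the WAN.
    for flows in (2, 4, 8, 16):
        print(flows, round(collision_probability(16, flows), 3))
```

Even with far fewer simultaneous flows than LAG members, the chance that some port receives two line-rate flows at once grows quickly, which is consistent with drops appearing at less than half of the average utilization.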


Is it right to build the data center's interconnect with the WAN at the line rate of the access ports? Such an approach can work if the switch's buffer memory is large enough to absorb incast traffic bursts; with Broadcom chips this trick often fails. The 9-12 MB of on-chip buffer memory shared by the 48 ports of a typical ToR switch can smooth out a burst from two sources lasting no more than 9 MB / 1250 MB/s = 0.0072 s, where 1250 MB is the amount of data a 10G port transmits every second. The number of simultaneous sources is not equal to the number of servers and has to be estimated for each data center from the observed traffic, but in any case it is more than two. Here the ToR on the Broadcom chip was “turned around” from a traffic point of view and forced to do something entirely unnatural for it. Instead of accepting traffic from low-speed ports and sending it out over high-speed ones, which minimizes buffer consumption, the chip is forced to do the opposite.
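The estimate above is easy to reproduce and to extend to more than two sources. This is a minimal sketch under optimistic assumptions of my own: the whole shared buffer is available to the one congested port, every source sends at the full line rate, and 1 MB is taken as 10^6 bytes. The function name is hypothetical.

```python
def absorb_time_s(buffer_mb: float, sources: int, port_gbps: float = 10.0) -> float:
    """Seconds until the buffer overflows when `sources` line-rate
    senders converge on a single egress port of the same speed.

    Assumptions (not from the article): the entire shared buffer is
    usable by the congested port, and 1 MB = 10**6 bytes.
    """
    if sources < 2:
        # A single sender is drained as fast as it arrives.
        return float("inf")
    port_mb_per_s = port_gbps * 1000 / 8        # 10 Gbit/s -> 1250 MB/s
    # The egress port drains one sender's worth; the rest accumulates.
    excess_mb_per_s = (sources - 1) * port_mb_per_s
    return buffer_mb / excess_mb_per_s


if __name__ == "__main__":
    for n in (2, 3, 4, 8):
        print(n, "sources:", absorb_time_s(9, n), "s")
```

With two sources and 9 MB this gives the same 0.0072 s as in the text; every additional simultaneous source shortens the burst the buffer can absorb.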

Let's return to the design and work out solutions to the problem. Offhand, a few come to mind:

- Replace the Broadcom-based Border Leaf switches with deep-buffer switches. Many manufacturers have models built on chips of their own design that are specialized for this kind of application.

- Increase the line rate of the LAG ports toward the WAN so that it exceeds the line rate of the access ports.

- Switch to Flex Ethernet once this technology arrives in the data center.

As you can see, the first two options require a hardware upgrade, which is usually unwelcome in a project that is already running, and the third option is entirely exotic. In addition, each of them needs time to be evaluated before implementation. The problem had to be solved here and now, so I suggested taking the scale-out path. What does a data center have plenty of? ToR switches, of course: there are spares in stock and room to expand. Adding an even number of Border Leaf switches and redistributing the LAG ports across them reduces the number of competing flows per switch several-fold and spreads them over the newly added buffer memory. As a quick fix, in my opinion, it is not bad, but we will still come back to the conversation about the right path, option number one.
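The effect of scaling out can be illustrated with simple arithmetic. The sketch below assumes, purely for illustration, that bursting sources and LAG ports are spread evenly across the Border Leaf switches and that each switch has 12 MB of shared buffer (the upper figure mentioned earlier); the function name and the example counts of 40 sources and 32 LAG ports are hypothetical.

```python
def scale_out(bursting_sources: int, lag_ports: int, border_leaves: int,
              buffer_mb_per_switch: float = 12.0) -> dict:
    """Per-switch load after spreading the same LAG ports and the same
    set of bursting sources evenly over `border_leaves` switches.

    Assumption (not from the article): perfectly even distribution.
    """
    return {
        "sources_per_leaf": bursting_sources / border_leaves,
        "lag_ports_per_leaf": lag_ports / border_leaves,
        "total_buffer_mb": buffer_mb_per_switch * border_leaves,
        "buffer_mb_per_lag_port": buffer_mb_per_switch * border_leaves / lag_ports,
    }


if __name__ == "__main__":
    # Hypothetical numbers: 40 bursting sources, 32 x 10G LAG ports in total.
    for leaves in (2, 4):
        print(leaves, "Border Leafs:", scale_out(40, 32, leaves))
```

Going from two to four Border Leaf switches halves the number of flows competing inside each chip and doubles the buffer available per LAG port, without touching the total WAN capacity.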

Source: https://habr.com/ru/post/349804/
