Deep reinforcement learning does not work yet

About the author. Alex Irpan is a developer in the Brain Robotics group at Google. Before that, he worked at the Berkeley Artificial Intelligence Research (BAIR) lab.

This post mainly cites papers from Berkeley, Google Brain, DeepMind, and OpenAI from the past few years, because their work is the most visible from my point of view. I have almost certainly missed material from older literature and from other organizations, and I apologize for that. I am just one person, after all.

Once on Facebook, I stated the following.


Unfortunately, in practice, deep reinforcement learning does not really work yet.

But I believe it will take off. If I did not, I would not be working in this field. There are a lot of problems ahead, though, and many of them are fundamentally hard. The polished demos of trained agents hide all the blood, sweat, and tears that went into creating them.

Several times now, I have seen people lured in by the latest results. They try deep RL for the first time and, without fail, underestimate its difficulty. Without fail, the "toy problem" turns out not to be as easy as it looks. And without fail, the field breaks them a few times before they learn to set realistic expectations for their research.

This is no one's personal fault. It is a systemic problem. It is easy to build a story around a positive result. Try doing that with a negative one. The trouble is that negative results are exactly what researchers get most often. In a sense, such results are even more important than positive ones.

In this article I will explain why deep RL does not work. I will give examples of cases where it does work, and describe how I think it could be made to work more reliably in the future. I am not doing this to make people stop working on deep RL, but because it is easier to make progress when everyone acknowledges that the problems exist. It is easier to reach agreement if people actually talk about the problems, instead of each independently stepping on the same rake over and over again.

I would like to see more research on deep RL. I would like new people to join the field. And I would like them to know what they are getting into.

Before continuing, let me make a few comments.

Without further ado, here are some of the cases where deep RL fails.

The best-known benchmark for deep reinforcement learning is Atari games. As shown in the now-famous Deep Q-Networks (DQN) paper, if you combine Q-learning with reasonably sized neural networks and some optimization tricks, you can match or exceed human performance in several Atari games.

Atari games run at 60 frames per second. Off the top of your head, can you estimate how many frames a state-of-the-art DQN needs to process to reach human-level performance?

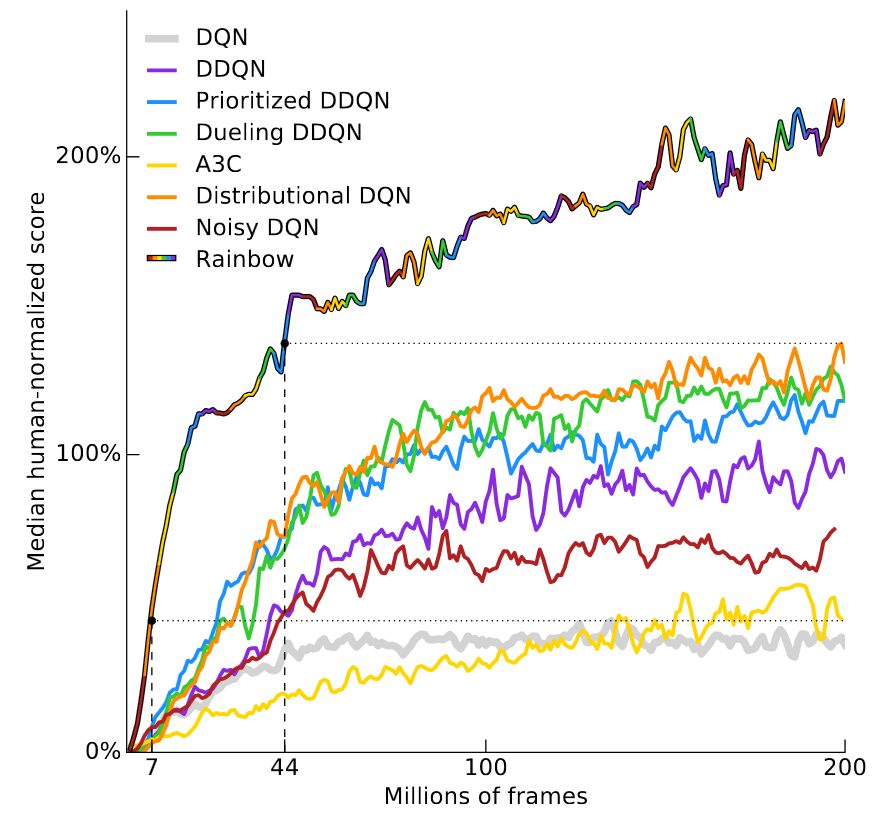

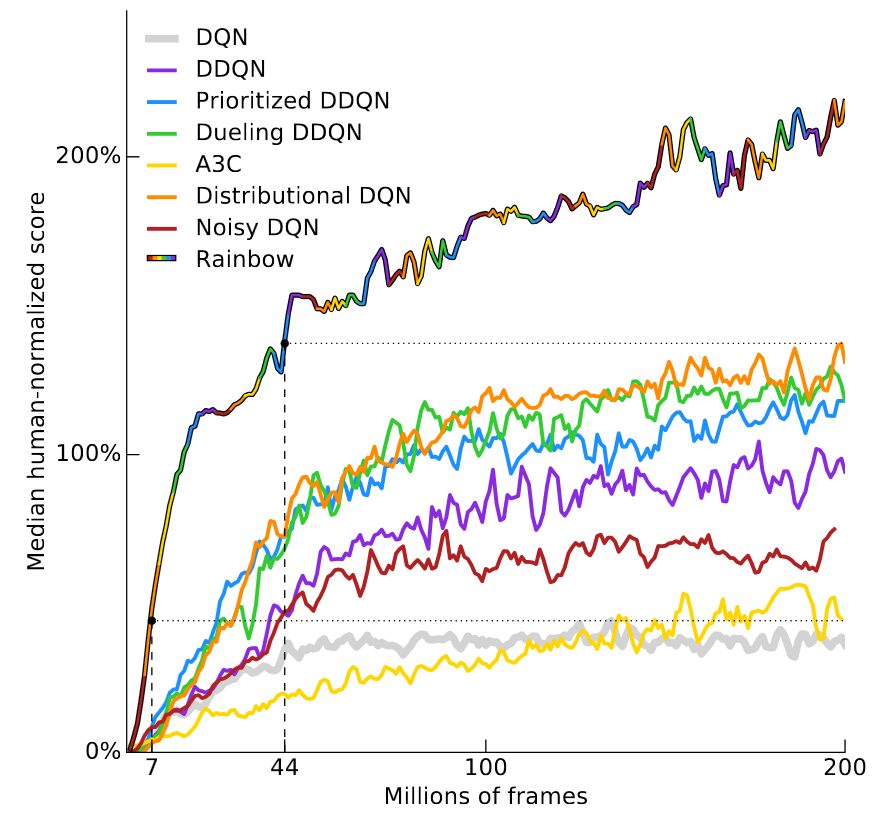

The answer depends on the game, so take a look at a recent DeepMind paper, Rainbow DQN (Hessel et al, 2017). It shows how several incremental improvements to the original DQN architecture each improve the result, and that combining all of them works best. The network exceeds human performance in over 40 of the 57 Atari games. The results are shown in this convenient graph.

The vertical axis shows the "median human-normalized score." It is computed by training 57 DQN agents, one per Atari game, normalizing each agent's score so that human performance is 100%, and then taking the median across the 57 games. Rainbow DQN passes 100% after about 18 million frames. That corresponds to about 83 hours of play, plus however long it takes to train the model. That is a lot of time for simple Atari games that most people pick up within a couple of minutes.
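A minimal sketch of how such a metric can be computed. The game names and raw scores are made up for illustration, and the `random_play` baseline (mapped to 0%) is an assumption: the text above only fixes human performance at 100%.

```python
from statistics import median


def human_normalized(agent, human, random_play=0.0):
    """Percentage score where random_play maps to 0 and human maps to 100.

    The random_play baseline is an assumption of this sketch.
    """
    return 100.0 * (agent - random_play) / (human - random_play)


def median_human_normalized(scores):
    """scores maps each game to a pair of raw scores: (agent, human)."""
    return median(human_normalized(a, h) for a, h in scores.values())


# Hypothetical raw scores, not taken from any paper.
example = {
    "game_a": (50.0, 100.0),   # 50% of human
    "game_b": (300.0, 200.0),  # 150% of human
    "game_c": (90.0, 100.0),   # 90% of human
}
print(median_human_normalized(example))  # 90.0
```

Because the median is used, a single game with a huge score (such as `game_b` here) does not pull the aggregate up.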

Mind you, 18 million frames is actually a very good result: the previous record holder, Distributional DQN (Bellemare et al, 2017), needed 70 million frames to reach 100%, which is about four times longer. As for Nature DQN (Mnih et al, 2015), it never reaches a 100% median score at all, even after 200 million frames.
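The frame counts are easier to appreciate as wall-clock play time. This short calculation only uses the numbers quoted in the text (60 frames per second, 18 million and 70 million frames); the function name is illustrative.

```python
FPS = 60  # Atari frame rate quoted in the text


def frames_to_hours(frames, fps=FPS):
    """Convert a number of game frames to hours of real-time play."""
    return frames / fps / 3600


print(round(frames_to_hours(18_000_000), 1))  # 83.3
print(round(frames_to_hours(70_000_000), 1))  # 324.1
print(round(70_000_000 / 18_000_000, 1))      # 3.9
```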

The planning fallacy is a cognitive bias that says completing a task usually takes longer than you expect. Reinforcement learning has its own planning fallacy: training usually requires more samples than you expect.

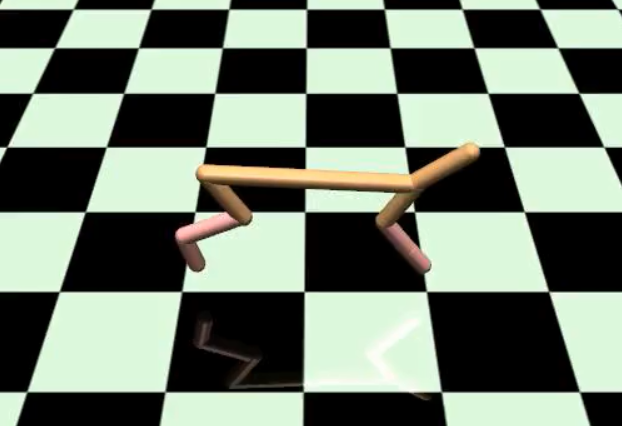

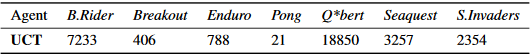

The problem is not limited to Atari games. The second most popular benchmark is the MuJoCo suite, a set of tasks built on the MuJoCo physics engine. In these tasks, the input is usually the position and velocity of each joint of some simulated robot. Even though there is no vision problem to solve, RL systems need between 10^5 and 10^7 steps to learn, depending on the task. That is an astonishing amount of experience for controlling such a simple environment.

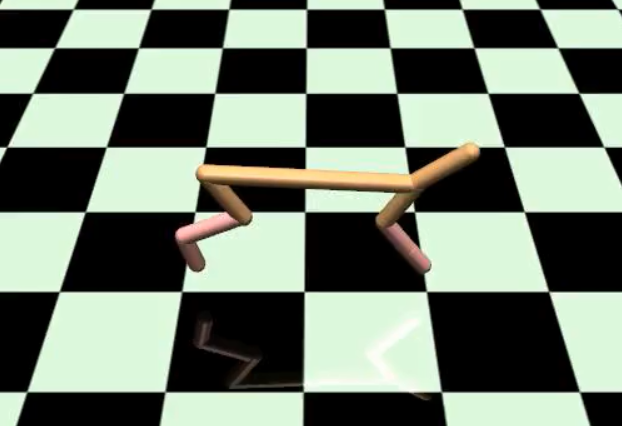

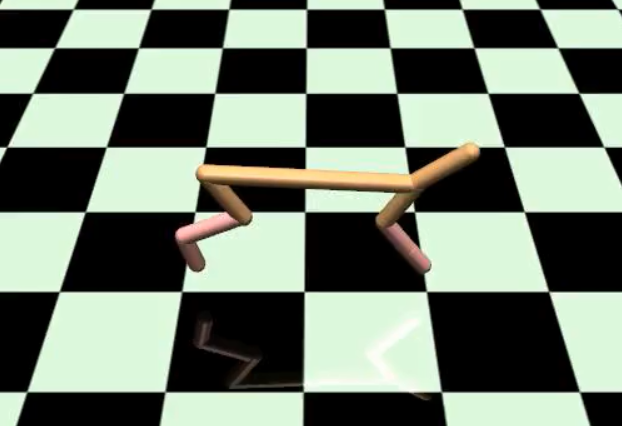

The policies in the DeepMind parkour paper (Heess et al, 2017), shown below, were trained using 64 workers for more than 100 hours. The paper does not specify what a "worker" is, but I assume it means a single CPU.

This is a great result. When it first came out, I was surprised that deep RL was able to learn these running gaits at all.

But it took 6400 CPU hours, which is a bit disappointing. Not that I expected less time... it is just sad that, even for simple skills, deep RL is still an order of magnitude short of the sample efficiency that would be useful in practice.

There is an obvious counterargument here: what if we just ignore sample efficiency? There are settings where generating experience is easy. Games, for example. But in any setting where that is not possible, RL faces an uphill battle. Unfortunately, most real-world settings fall into this category.

When searching for a solution to any problem, you usually have to trade off between different goals. You can optimize for a really good solution to that particular problem, or you can optimize for contributing to research in general. The best problems are those where getting a good solution requires making a good research contribution. In practice, though, it is hard to find problems that meet these criteria.

Judged purely on final performance, deep RL's track record is not that impressive, because it is consistently beaten by other methods. Here is a video of MuJoCo robots controlled by online trajectory optimization. The correct actions are computed in near real time, online, with no offline training. And all of it runs on 2012 hardware (Tassa et al, IROS 2012).

I think this work is comparable to the DeepMind parkour paper. So what is the difference?

The difference is that here the authors use model predictive control, planning against a ground-truth model of the world (the physics engine). Model-free RL has no such model, which makes the problem much harder. On the other hand, if planning against a model helps this much, why bother with the trouble of training an RL policy?
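To make "planning against a known model" concrete, here is a minimal sketch of model predictive control by random shooting. Everything in it is an illustrative assumption: the 1-D point-mass dynamics, the cost weights, and the random-shooting planner itself, which is a much cruder optimizer than the trajectory optimization used in the cited work.

```python
import random


def step(state, action, dt=0.1):
    """Known dynamics model (assumed): a 1-D point mass, state = (position, velocity)."""
    pos, vel = state
    vel = vel + action * dt
    pos = pos + vel * dt
    return (pos, vel)


def cost(state, target):
    """Penalize distance to the target and, mildly, speed (illustrative weights)."""
    pos, vel = state
    return (pos - target) ** 2 + 0.1 * vel ** 2


def plan(state, target, rng, horizon=10, candidates=200):
    """Sample random action sequences, roll each out in the model,
    and return the first action of the cheapest sequence."""
    best_cost, best_action = float("inf"), 0.0
    for _ in range(candidates):
        seq = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        s, total = state, 0.0
        for a in seq:
            s = step(s, a)
            total += cost(s, target)
        if total < best_cost:
            best_cost, best_action = total, seq[0]
    return best_action


def run(steps=100, target=1.0, seed=0):
    """Replan at every step; no training phase is involved."""
    rng = random.Random(seed)
    state = (0.0, 0.0)
    for _ in range(steps):
        state = step(state, plan(state, target, rng))
    return state


print(run())  # final (position, velocity), expected to end up near the target
```

The controller never learns anything: all of its competence comes from being allowed to query `step` as often as it likes, which is exactly the advantage that model-free RL does not have.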

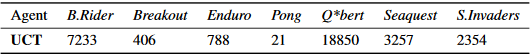

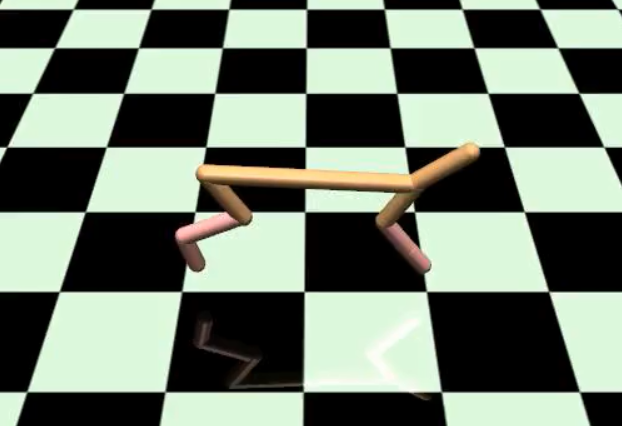

Similarly, you can easily outperform DQN on Atari with off-the-shelf Monte Carlo tree search (MCTS). Here are the headline numbers from Guo et al, NIPS 2014. The authors compare the scores of a trained DQN with those of a UCT agent (UCT being the standard version of MCTS in use today).

Again, this is an unfair comparison, because DQN does no search, while MCTS gets to search against a ground-truth model (the Atari emulator). But in some situations you do not care whether the comparison is fair or unfair. Sometimes you just need the thing to work. (If you want a full evaluation of UCT, see the appendix of the original Arcade Learning Environment paper (Bellemare et al, JAIR 2013).)

In theory, reinforcement learning can work for anything, including environments where a model of the world is not known. However, this generality comes at a price: it is hard to exploit any problem-specific information that could help learning. As a result, you need a huge number of samples to learn things that could simply have been hard-coded.

Experience shows that, except in rare cases, domain-specific algorithms work faster and better than reinforcement learning. This is not a problem if you are doing deep RL for deep RL's sake, but personally I find it frustrating to compare RL's performance with... well, anything else. One reason I liked AlphaGo so much is that it was an unambiguous win for deep RL, and that does not happen very often.

All of this makes it harder for me to explain to people why my problems are cool, hard, and interesting, because they often lack the context or experience to appreciate why they are hard. There is a gap between what people think deep RL can do and what it can actually do. I currently work in robotics. Consider the company most people think of when you mention robotics: Boston Dynamics.

This does not use reinforcement learning. I have met several people who thought RL was used here, but no. If you look up the group's published papers, you will find papers mentioning time-varying linear-quadratic regulators, quadratic programming solvers, and convex optimization. In other words, they mostly apply classical robotics techniques. It turns out those classical techniques work pretty well when applied properly.

Reinforcement learning assumes the existence of a reward function. Usually this is either given or hand-tuned offline and kept fixed over the course of training. I say "usually" because there are exceptions, such as imitation learning or inverse RL (where the reward function is recovered after the fact), but most RL approaches treat the reward as an oracle.

Importantly, for RL to do the right thing, the reward function must capture exactly what you want. And I mean exactly. RL has an annoying tendency to overfit to the reward, which leads to unexpected consequences. This is why Atari is such a good benchmark. Not only is it easy to get lots of samples, but every game has a clear objective, the score, so you never have to worry about defining the reward function. And you know everyone else is using the same one.

The MuJoCo tasks are popular for the same reasons. Because they run in simulation, you have complete knowledge of the object's state, which makes designing the reward function much easier.

In the Reacher task, you control a two-segment arm attached to a central point, and the goal is to move the end of the arm to a given target. Below is an example of a successfully learned policy.

Since all coordinates are known, the reward can be defined in terms of the distance from the end of the arm to the target, plus a small control cost. In principle, you could run the same experiment in the real world, provided you have enough sensors to measure the coordinates accurately. But depending on what you want the system to do, it can be hard to define a reasonable reward.
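As a rough sketch, a Reacher-style reward of this kind might look like the function below. The sign convention (negative distance, so that closer is better), the `control_weight` value, and the function name are assumptions for illustration, not the benchmark's actual definition.

```python
import math


def reacher_reward(fingertip, target, action, control_weight=0.1):
    """Reward = -(distance to target) - (small penalty on action magnitude).

    fingertip and target are (x, y) coordinates; action is a sequence of
    joint torques. control_weight is a hypothetical coefficient.
    """
    distance = math.dist(fingertip, target)
    control_cost = control_weight * sum(a * a for a in action)
    return -distance - control_cost


print(reacher_reward((0.0, 0.0), (3.0, 4.0), (0.0, 0.0)))  # -5.0
```

Writing this function is only possible because the simulator exposes the exact fingertip and target coordinates; on a real robot those would have to come from sensors.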

The reward function on its own would not be a big problem, if not for...

Writing down a reward function is not that hard. The difficulty comes when you try to design one that encourages the behavior you want while still being learnable.

In HalfCheetah, we have a two-legged robot confined to a vertical plane, which means it can only move forward or backward.

The goal is to learn a running gait. The reward is HalfCheetah's velocity (video).

This is a shaped reward, meaning that it increases as the agent gets closer to the end goal. This is in contrast to a sparse reward, which is given only when the goal state is reached and is absent in all other states. Shaped rewards are often much easier to learn from, because they provide positive feedback even when the policy has not found a complete solution to the problem.
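The distinction is easy to see on a toy problem. The sketch below uses a hypothetical 1-D goal-reaching task (not HalfCheetah, whose reward is velocity); the function names and the `tolerance` value are illustrative assumptions.

```python
def sparse_reward(position, goal, tolerance=0.05):
    """+1 only when the goal is reached, 0 everywhere else."""
    return 1.0 if abs(position - goal) <= tolerance else 0.0


def shaped_reward(position, goal):
    """Grows smoothly (toward 0) as the agent approaches the goal."""
    return -abs(position - goal)


goal = 1.0
for position in (0.0, 0.5, 0.9, 1.0):
    print(position, sparse_reward(position, goal), shaped_reward(position, goal))
```

With the sparse version, positions 0.0, 0.5, and 0.9 are indistinguishable to the learner. With the shaped version, each step toward the goal is rewarded, which is the partial feedback described above.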

Unfortunately, shaped rewards can bias learning. As mentioned above, this leads to unexpected and undesired behavior. A good example is the boat racing game from an OpenAI blog post. The intended goal is to finish the race. You can imagine a sparse reward of +1 for finishing within a given time and 0 otherwise.

The provided reward function gives points for hitting checkpoints and for collecting power-ups that let you finish faster. As it turned out, collecting power-ups gave more points than finishing the race.

To be honest, this post annoyed me a little at first. Not because it was wrong! Rather, it seemed to me that it was demonstrating the obvious. Of course reinforcement learning does something strange when the reward is misspecified! It felt like the post was making an unreasonably big deal out of this particular case.

But then I started writing this article and realized that the most compelling example of a misspecified reward is that very boat racing video. Since then, it has been used in several presentations on the topic, which has drawn attention to the problem. So, fine, I will grudgingly admit that it was a good blog post.

RL algorithms fall along a spectrum according to how much they get to assume about the environment they are in. The most general category, model-free RL, is almost the same as black-box optimization. Such methods are only allowed to assume that they are in an MDP (Markov decision process), and nothing more. The agent is simply told that this gets +1 and that does not, and it has to learn everything else on its own. And as with black-box optimization, the problem is that any behavior that yields +1 counts as good, even if the reward is earned for the wrong reasons.

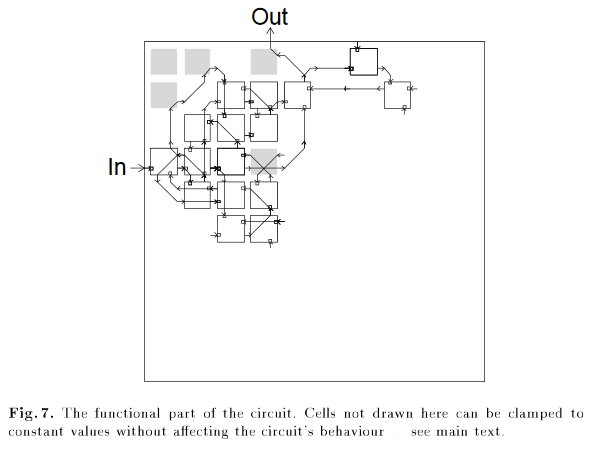

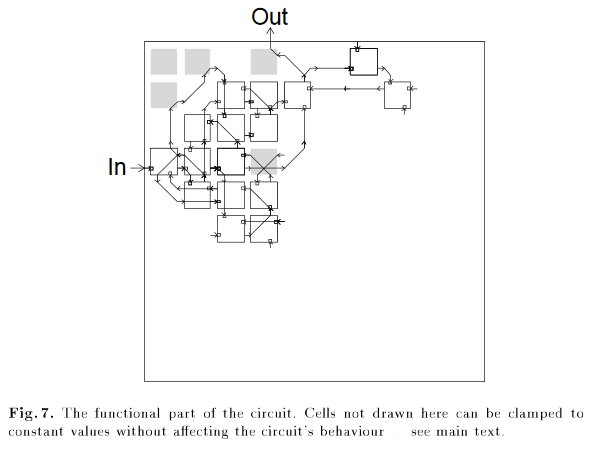

A classic example from outside RL is the time someone applied genetic algorithms to circuit design and got a circuit in which one unconnected logic gate was necessary for the final design to work.

The gray cells are required for the circuit to work correctly, including the cell in the top-left corner, even though it is not connected to anything. From "An Evolved Circuit, Intrinsic in Silicon, Entwined with Physics."

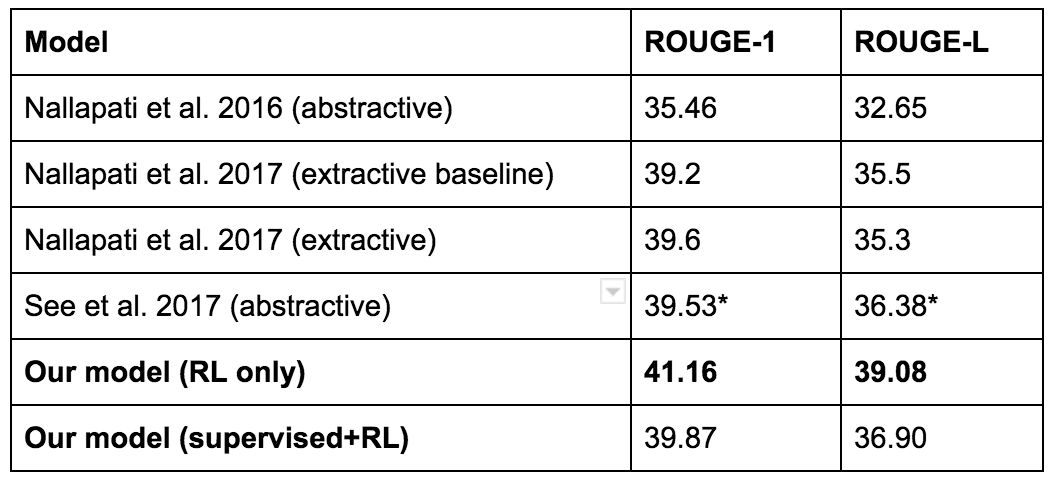

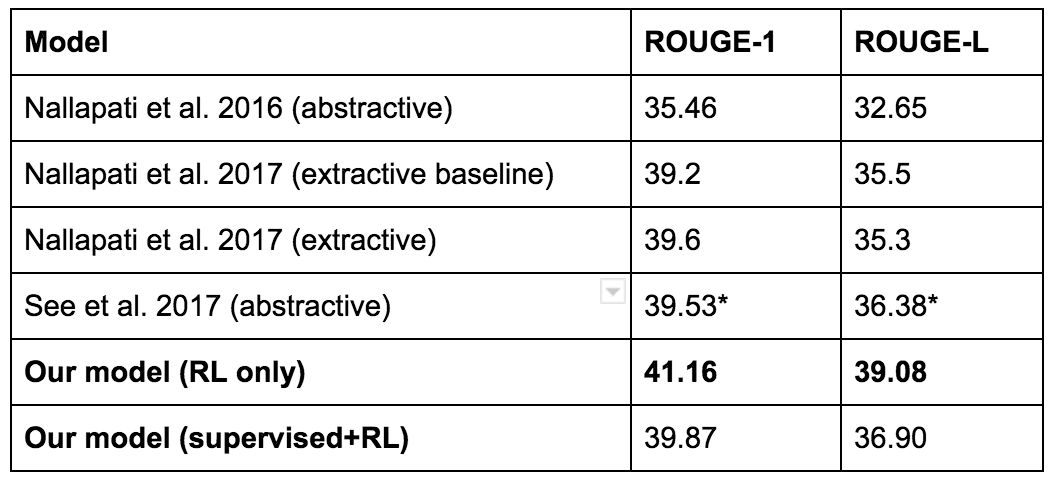

Or, for a more recent example, here is a 2017 post from the Salesforce blog. Their goal was text summarization. The baseline model was trained with supervised learning and then evaluated with an automated metric called ROUGE. ROUGE is non-differentiable, but RL can handle non-differentiable rewards, so they tried applying RL to optimize ROUGE directly. This gives a high ROUGE score (hooray!), but not very good summaries. Here is an example.

And although the RL model achieved the highest ROUGE score...

...they ended up using a different model for summarization.

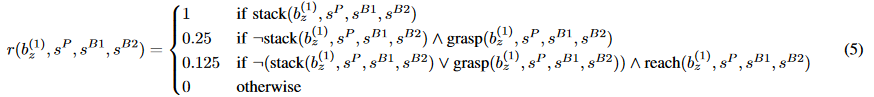

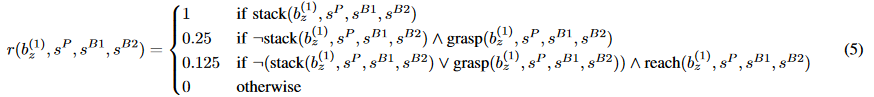

Another fun example comes from Popov et al, 2017, also known as "the Lego stacking paper." The authors use a distributed version of DDPG to learn a grasping policy. The goal is to grasp the red block and stack it on top of the blue one.

They got it to work, but ran into an interesting failure case. The initial lifting motion is rewarded based on how high the red block is lifted, which is defined by the z-coordinate of the block's bottom face. In one of the failure modes, the policy learned to flip the red block upside down instead of lifting it.

Clearly, this is not the intended behavior. But RL does not care. From the perspective of reinforcement learning, it was rewarded for flipping the block, so it will keep flipping the block.
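A toy reconstruction shows why flipping pays off under this kind of reward. This is a simplified sketch, not the reward from the paper: the block size, the function names, and the idea of tracking only the block's center height and orientation are all assumptions.

```python
CUBE_SIDE = 0.05  # hypothetical block edge length, in meters


def bottom_face_z(center_z, upside_down, side=CUBE_SIDE):
    """Height of the face that is the bottom when the block sits upright.

    When the block is flipped, that same face ends up on top.
    """
    offset = side / 2
    return center_z + offset if upside_down else center_z - offset


def lift_reward(center_z, upside_down):
    """Naive lifting reward: the z-coordinate of the tracked bottom face."""
    return bottom_face_z(center_z, upside_down)


resting_center = CUBE_SIDE / 2  # block sitting on a table at z = 0

print(lift_reward(resting_center, upside_down=False))  # 0.0
print(lift_reward(resting_center, upside_down=True))   # 0.05
```

In this toy version, flipping the block in place earns as much reward as lifting it by a full block height, without the block ever leaving the table.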

One way to address this is to make the reward sparse, giving it only once the blocks are stacked. Sometimes this works, because the sparse reward is learnable. Often it does not, because the lack of positive reinforcement makes everything too hard.

The other way to address it is careful reward shaping: adding new reward terms and tweaking the coefficients of existing ones until the RL algorithm learns the behavior you want. Yes, it is possible to win the fight against RL on this front, but it is a very unfulfilling fight. Sometimes it is necessary, but I have never felt that I learned anything by doing it.

For reference, here is one of the reward functions from the Lego stacking paper.

I do not know how much time went into designing this reward function, but judging by the number of terms and the number of different coefficients, I would guess "a lot."

In conversations with other RL researchers, I have heard several anecdotes about the novel behavior they have seen from improperly defined rewards.

Admittedly, these are all secondhand accounts, and I have not personally seen videos of any of these behaviors. But none of them sounds implausible to me. I have been burned by RL too many times to believe otherwise.

I know people who love telling stories about paperclip optimizers. Okay, I get it, honestly. But in truth I am sick of hearing those stories, because they always present some superhuman misaligned strong AI as if it were a real story. Why make things up when there are so many real stories that happen every day?

The previous examples are often called "reward hacking." To me, that implies a clever, out-of-the-box solution that earns more reward than the answer intended by the designer of the problem.

Reward hacking is the exception. A much more common failure is a poor local optimum that comes from getting the exploration-exploitation trade-off wrong.

Here is one of my favorite videos. It shows an implementation of the Normalized Advantage Function, learning in the HalfCheetah environment. From an outside perspective, this is very, very dumb. But we only call it dumb because we see it from the outside and have a lot of prior knowledge telling us that running on your feet is better than lying on your back. RL does not know this! It sees a state vector, sends action vectors, and sees that it is getting some positive reward. That is all.

Here is the most plausible explanation I can think of for what happened during training.

This is very funny, but obviously not what we want from the robot.

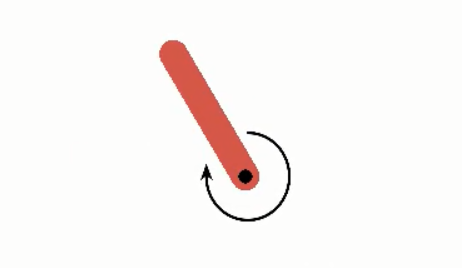

Here is another failed run, this time in the Reacher environment (video). In this run, the random initial weights tended to output strongly positive or strongly negative values for actions. As a result, most actions were taken at the maximum or minimum possible acceleration. It is actually very easy to make the arm spin: just apply a large force at every joint. Once the robot is spinning, it is hard to get out of that state in any meaningful way: to stop the uncontrolled spinning, the policy would have to take several exploratory steps. That is certainly possible, but it did not happen in this run.

In both cases, we see the classic exploration-exploitation problem that has dogged reinforcement learning since time immemorial. Your data comes from your current policy. If the current policy explores too much, you get junk data and learn nothing. Exploit too much, and you lock in behavior that is not optimal.
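The "exploit too much" failure can be reproduced in a few lines on a two-armed bandit. This is a deliberately tiny sketch: the deterministic payouts, the epsilon-greedy rule, and all names are assumptions for illustration, and nothing here is specific to deep RL.

```python
import random

ARM_REWARDS = [0.1, 1.0]  # hypothetical deterministic payouts; arm 1 is better


def run_bandit(epsilon, steps=1000, seed=0):
    """Epsilon-greedy agent: explore with probability epsilon, else exploit."""
    rng = random.Random(seed)
    estimates = [0.0] * len(ARM_REWARDS)
    counts = [0] * len(ARM_REWARDS)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(ARM_REWARDS))  # explore
        else:
            # Exploit the current estimates; ties go to the lowest index.
            arm = max(range(len(ARM_REWARDS)), key=lambda i: estimates[i])
        reward = ARM_REWARDS[arm]
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running mean
        total += reward
    return total, counts


greedy_total, greedy_counts = run_bandit(epsilon=0.0)
explore_total, explore_counts = run_bandit(epsilon=0.1)
print(greedy_counts)   # [1000, 0]: the greedy agent never tries the better arm
print(explore_counts)
```

The purely greedy agent receives a small positive reward from the first arm it tries and never has a reason to try anything else, which is the same mechanism as the HalfCheetah that learns to slide on its back.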

There are several intuitively appealing ideas for addressing this: intrinsic motivation, curiosity-driven exploration, count-based exploration, and so on. Many of these approaches were first proposed in the 1980s or earlier, and several have since been revisited with deep learning models. But as far as I know, none of them works consistently across all environments. Sometimes they help, sometimes they do not. It would be nice if some exploration trick worked everywhere, but I doubt a silver bullet of that caliber will be found in the foreseeable future. Not because people are not trying, but because exploration-exploitation is really, really, really, really hard. To quote the Wikipedia article on the multi-armed bandit:

I have come to picture deep RL as a demon that deliberately misinterprets your reward and actively searches for the laziest possible local optimum. It is a bit ridiculous, but it has turned out to be a really productive mindset.

The upside of reinforcement learning is that if you want to do well in one specific environment, you are free to overfit like crazy. The downside is that if you need to generalize to any other environment, the model will probably do poorly, precisely because it overfit like crazy.

DQN can handle many Atari games, but it does so by focusing all of each model's training on a single goal: getting really good at one game. The final model does not generalize to other games, because it was not trained that way. You can fine-tune a trained DQN to a new Atari game (see Progressive Neural Networks (Rusu et al, 2016)), but there is no guarantee the transfer will work, and usually no one expects it to. This is not the wild success people see with pretrained ImageNet features.

To head off some obvious comments: yes, in principle, training on a wide distribution of environments should make some of these problems go away. In some cases, you get such generalization for free. One example is navigation, where you can sample random goal locations and use universal value functions to generalize (see Universal Value Function Approximators, Schaul et al, ICML 2015). I find this work very promising, and I give more examples of it later. But I do not think the generalization capabilities of deep RL are yet strong enough to handle a diverse set of tasks. Perception has gotten a lot better, but deep RL has yet to have its "ImageNet for control" moment. OpenAI Universe tried to spark this, but from what I have heard, the task was too difficult, so not much came of it.

Until that generalization moment arrives, we are stuck with policies that are surprisingly narrow in scope. As an example (and as an excuse to poke fun at my own work), consider the paper "Can Deep RL Solve Erdos-Selfridge-Spencer Games?" (Raghu et al, 2017). We studied a two-player combinatorial game that has a closed-form analytic solution for optimal play. In one of our first experiments, we fixed player 1's behavior and then trained player 2 with RL. In this setup, you can treat player 1's actions as part of the environment. By training player 2 against an optimal player 1, we showed that RL could reach high performance. But when we deployed the same policy against a non-optimal player 1, player 2's performance dropped, because it did not generalize to non-optimal opponents.

Lanctot et al, NIPS 2017 obtained a similar result. Here, two agents play laser tag. The agents are trained with multi-agent reinforcement learning. To test generalization, training was run with five random seeds. Here is a video of agents that were trained against each other.

As you can see, they learned to move toward each other and shoot. The authors then took player 1 from one experiment and pitted it against player 2 from a different experiment. If the learned policies generalize, we should see similar behavior.

Spoiler: we do not.

This seems to be a recurring problem in multi-agent RL. When agents are trained against each other, a kind of co-evolution takes place. The agents get really good at beating each other, but when they are pitted against a player they have not seen before, performance drops. I want to point out that the only difference between these videos is the random seed. Same learning algorithm, same hyperparameters. The difference in behavior comes purely from the randomness of the initial conditions.

That said, there are some impressive results from competitive self-play settings that seem to contradict this general thesis. The OpenAI blog has a nice post about some of their work in this area. Self-play is also an important part of AlphaGo and AlphaZero. My intuition is that if the agents learn at the same pace, they can keep challenging each other and speed up each other's learning, but if one of them learns much faster than the other, it exploits the weaker player too much and overfits. As you move from symmetric self-play to general multi-agent settings, it becomes much harder to ensure that learning proceeds at the same speed.

Almost every machine learning algorithm has hyperparameters that affect the behavior of the learning system. Often they are chosen by hand or by random search.

Supervised learning is stable. Fixed dataset, ground-truth targets. If you change the hyperparameters a little, performance will not change much. Not all hyperparameters work well, but with all the empirical tricks discovered over the years, many hyperparameter settings will show signs of life during training. Those signs of life are very important: they tell you that you are on the right track, that you are doing something sensible, and that it is worth investing more time.

Currently, deep RL is not stable at all, which is very annoying when doing research.

When I started working at Google Brain, one of the first things I did was implement the algorithm from the Normalized Advantage Function (NAF) paper mentioned above. I figured it would take only two or three weeks. I had several things going for me: some familiarity with Theano (which transfers well to TensorFlow), some deep RL experience, and the fact that the first author of the NAF paper was interning at Brain, so I could pester him with questions.

In the end, it took me six weeks to reproduce the results, because of several software bugs. The question is, why did those bugs stay hidden for so long?

To answer that, consider the simplest continuous control task in OpenAI Gym: the Pendulum task. In this task, a pendulum is anchored at a point and gravity acts on it. The input state is three-dimensional. The action space is one-dimensional: the torque applied to the pendulum. The goal is to balance the pendulum perfectly upright.

This is a small problem, and it is made even easier by a well-shaped reward. The reward is defined in terms of the pendulum's angle. Actions that bring the pendulum closer to vertical do not just yield reward; they yield increasing reward.
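For concreteness, here is a self-contained sketch of a Pendulum-style observation and reward. It is modeled on the quadratic cost commonly used for this task, but the exact coefficients and the convention that angle 0 means upright should be read as assumptions of this sketch, not as the Gym source code.

```python
import math


def normalize_angle(theta):
    """Wrap an angle into [-pi, pi), with 0 meaning upright (assumed convention)."""
    return ((theta + math.pi) % (2 * math.pi)) - math.pi


def observation(theta, theta_dot):
    """Three-dimensional state: (cos(theta), sin(theta), angular velocity)."""
    return (math.cos(theta), math.sin(theta), theta_dot)


def reward(theta, theta_dot, torque):
    """Shaped reward: largest (zero) when upright, still, and applying no torque.

    The weights 0.1 and 0.001 are illustrative assumptions.
    """
    angle_cost = normalize_angle(theta) ** 2
    return -(angle_cost + 0.1 * theta_dot ** 2 + 0.001 * torque ** 2)


for theta in (math.pi, 0.5, 0.1, 0.0):
    print(round(theta, 2), round(reward(theta, 0.0, 0.0), 3))
```

The reward rises steadily as the angle approaches zero, so every small improvement is visible to the learner. Even with feedback this dense, the runs described below are far from reliable.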

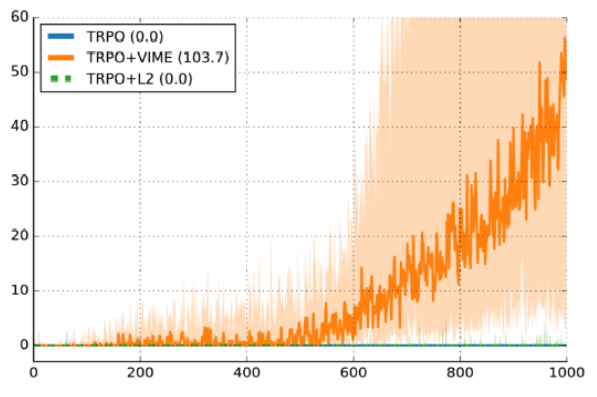

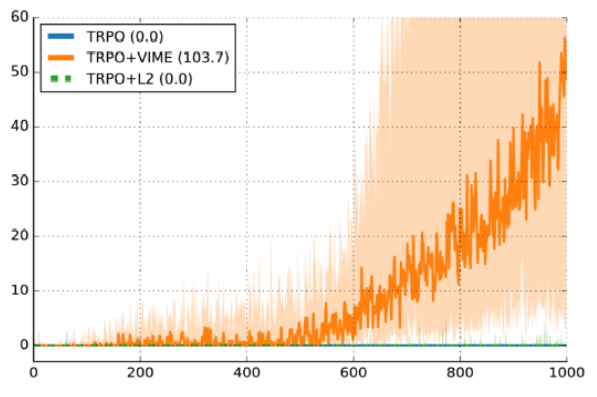

Here is a video of a policy that almost works. Although it does not bring the pendulum fully upright, it outputs exactly the torque needed to counteract gravity.

Here is a plot of performance after I fixed all the bugs. Each line is the reward curve from one of ten independent runs. Same hyperparameters; the only difference is the random seed.

Seven of the ten runs worked. Three did not. A 30% failure rate counts as working. Here is another plot, from the published paper "Variational Information Maximizing Exploration" (Houthooft et al, NIPS 2016). The environment is HalfCheetah. The reward was modified to be sparse, though the details are not too important. The y-axis is episode reward, the x-axis is the number of timesteps, and the algorithm used is TRPO.

The dark line is the median performance over ten random seeds, and the shaded region spans the 25th to 75th percentile. Do not get me wrong, this plot is a good argument in favor of VIME. But on the other hand, the 25th percentile line is really close to zero. That means about 25% of runs fail purely because of the random seed.
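Summaries like these (median, interquartile band, failure rate across seeds) take only a few lines to compute. The final rewards below are invented for illustration, as is the `success_threshold`; they are chosen only so that seven of ten hypothetical runs succeed.

```python
from statistics import median, quantiles


def summarize(final_rewards, success_threshold):
    """Median, 25th/75th percentiles, and fraction of runs below a threshold."""
    q1, _, q3 = quantiles(final_rewards, n=4, method="inclusive")
    failures = sum(r < success_threshold for r in final_rewards)
    return {
        "median": median(final_rewards),
        "p25": q1,
        "p75": q3,
        "failure_rate": failures / len(final_rewards),
    }


# Hypothetical final rewards from ten seeds: seven good runs, three failures.
runs = [-150, -160, -140, -155, -145, -150, -158, -1200, -1300, -1100]
summary = summarize(runs, success_threshold=-500)
print(summary["median"])        # -156.5
print(summary["failure_rate"])  # 0.3
```

Note how the median looks healthy even though three runs out of ten failed outright. A plot that shows only the median line can hide a failure rate like this, which is why the percentile band matters.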

Look, there is variance in supervised learning too, but it is rarely this bad. If my supervised learning code failed on 30% of runs with random seeds, I would be quite confident that there was a bug in data loading or training. If my reinforcement learning code does no better than random, I have no idea whether it is a bug, bad hyperparameters, or just bad luck.

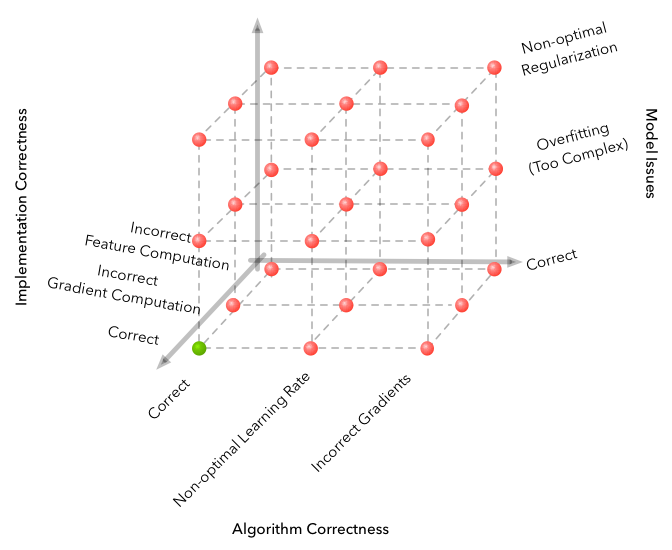

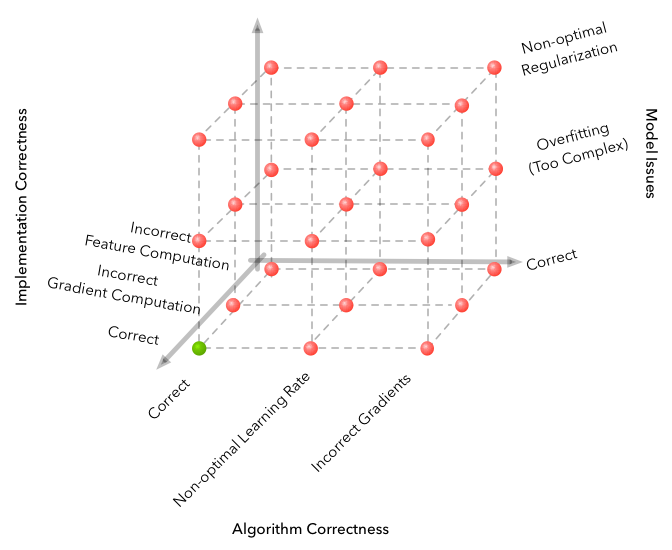

This illustration is from the article "Why is machine learning 'hard'?" The core thesis is that machine learning adds extra dimensions to the space of failures, which exponentially increases the number of ways things can fail. Deep RL adds one more dimension: random chance. And the only way to deal with random chance is to run enough experiments to drown out the noise.

If your learning algorithm is both sample inefficient and unstable, it severely slows down your research. Maybe a single run needs only 1 million steps. But multiply that by five random seeds, and then by the spread of hyperparameters you need to try, and you get an explosion in the compute needed to test a hypothesis effectively.
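The multiplication is worth doing explicitly. In this sketch, the 1 million steps and five seeds come from the text, while the hyperparameter grid is a made-up example of a modest sweep.

```python
def total_steps(steps_per_run, seeds, hyperparameter_grid):
    """Environment steps needed to run every grid configuration with every seed."""
    configs = 1
    for values in hyperparameter_grid.values():
        configs *= len(values)
    return steps_per_run * seeds * configs


# Hypothetical sweep: 3 * 2 * 3 = 18 configurations.
grid = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "discount": [0.95, 0.99],
    "batch_size": [32, 64, 128],
}
print(total_steps(1_000_000, 5, grid))  # 90000000
```

Each additional hyperparameter multiplies the total again, so even a small grid turns a single 1-million-step experiment into roughly 90 million steps.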

Instability across random seeds is like a canary in a coal mine. If pure randomness is enough to produce such a large gap between runs, imagine how much difference an actual change in the code could make.

Fortunately, we do not have to run this thought experiment ourselves, because it has already been carried out and written up in the paper "Deep Reinforcement Learning That Matters" (Henderson et al, AAAI 2018). Here are some of its conclusions:

My theory is that RL is very sensitive to both initialization and the dynamics of the training process, because the data is always collected online and the only supervision is a single scalar reward. A policy that randomly stumbles onto good training examples will do much better. A policy that fails to see good examples may learn nothing at all, as it grows more and more convinced that any deviation it tries will fail.

Of course, deep reinforcement learning has produced some excellent results. DQN is old news now, but at the time it was an absolutely crazy result. A single model learns directly from pixels, without tuning for each game individually. AlphaGo and AlphaZero also remain very impressive achievements.

But apart from these successes, it is hard to find cases where deep RL has created practical value in the real world.

I tried to think of ways to use deep RL for practical, real-world tasks, and it is surprisingly hard. I expected to find something in recommendation systems, but as far as I can tell, collaborative filtering and contextual bandits still dominate there.

The best I could eventually find were two Google projects: reducing energy consumption in data centers, and the recently announced AutoML Vision effort. Jack Clark of OpenAI asked a similar question on Twitter and came to the same conclusion. (The tweet is from last year, before AutoML was announced.)

I know that Audi is doing something interesting with deep RL, because at NIPS they showed off a small self-driving model race car and said that a deep RL system had been developed for it. I know there is some neat work on optimizing device placement for large TensorFlow graphs (Mirhoseini et al, ICML 2017). Salesforce has a text summarization model that works if RL is applied carefully enough. Financial companies are probably experimenting with RL as you read this, but so far there is no hard evidence of it. (Of course, financial companies have reasons to be secretive about how they play the market, so we may never get solid evidence.) Facebook has been doing some neat work with deep RL for chatbots and speech. Every Internet company in history has probably thought about adding RL to its ad-serving model at some point, but if anyone has actually done it, they have kept quiet about it.

So, in my opinion, either in-depth RL is still a topic of academic research, not a reliable technology for widespread use, or it really can be made to work effectively - and the people who succeeded in it did not disclose the information. I think the first option is more likely.

If you came to me with the problem of image classification, I would advise the pre-trained ImageNet models - they will probably do an excellent job. We live in a world where filmmakers from the Silicon Valley TV series are jokingly making a real AI application for recognizing hot dogs . I can not say the same successes deep RL.

This is a priori tough question. The problem is that you are trying to use the same RL approach for different environments. It is quite natural that it will not always work.

In view of the above, it is possible to draw some conclusions from the existing achievements of reinforced learning. These projects, where in-depth RL is either learning some kind of qualitatively impressive behavior, or learning better than previous systems in this area (although these are very subjective criteria).

Here is my list for now.

(A brief digression: machine learning recently beat professional players at no-limit Texas Hold'em. This was done by both Libratus (Brown et al, IJCAI 2017) and DeepStack (Moravčík et al, 2017). I have spoken with several people who believed deep RL was at work here. Both systems are very cool, but they do not use deep reinforcement learning. They use counterfactual regret minimization and clever iterative solving of subgames.)

From this list, you can pick out common properties that make learning easier. None of the properties listed below is required for learning, but the more of them are present, the better the result will definitely be.

You can combine several of these principles to analyze the success of Neural Architecture Search (NAS). According to the initial ICLR 2017 version, after 12,800 samples deep RL is able to design state-of-the-art neural network architectures. Admittedly, each sample required training a neural network to convergence, but this is still very sample efficient.

As mentioned above, the reward is validation accuracy. This is a very rich reward signal: if a change to the network structure raises accuracy from just 70% to 71%, RL still benefits from it. In addition, there is evidence that hyperparameters in deep learning are close to linearly independent. (This was shown empirically in Hyperparameter Optimization: A Spectral Approach (Hazan et al, 2017); my summary is here if you're interested.) NAS is not exactly tuning hyperparameters, but I think it is quite reasonable that neural network design decisions behave in a similar way. This is good news for learning, because the correlation between decisions and performance is strong. Finally, the reward is not only rich, it is exactly what we care about when training models.

Together, these reasons help explain why NAS needs “only” about 12,800 trained networks to find the best one, compared with the millions of examples needed in other environments. Several parts of the problem all play in RL's favor.

In general, such success stories are still the exception, not the rule. Many pieces of the puzzle have to fit together for reinforcement learning to work convincingly, and even then it will not be easy.

In short, deep RL today cannot be called a technology that works out of the box.

There is an old saying that every researcher learns to hate their area of research. The joke is that researchers keep working on it anyway, because they like the problems too much.

That is roughly how I feel about deep reinforcement learning. Despite my reservations, I am absolutely sure that we need to keep trying RL on various problems, including those where it will most likely not work. How else can we improve RL?

I see no reason why deep RL will not work in the future if the technology is given time to improve. Some very interesting things will start to happen when deep RL becomes reliable enough for widespread use. The question is how to get there.

Below I have listed some plausible futures. Where further research is needed for a given direction, I cite relevant papers in that area.

Local optima are good enough. It would be arrogant to claim that humans are globally optimal at anything. I would guess that we are just barely good enough, compared with other species, to have reached the stage of civilization. In the same vein, an RL solution does not have to reach a global optimum, as long as its local optimum is better than the human baseline.

Hardware solves everything. I know people who believe that the most important thing for creating AI is simply to scale up hardware. Personally, I am skeptical that hardware will solve every problem, but it will certainly make an important contribution. The faster everything runs, the less you worry about sample inefficiency and the easier it is to brute-force your way past exploration problems.

Add more learning signals. Sparse rewards are hard to learn from, because there is very little information about what helps. It is possible that we will either hallucinate positive rewards (Hindsight Experience Replay, Andrychowicz et al, NIPS 2017), or define auxiliary tasks (UNREAL, Jaderberg et al, NIPS 2016), or build a good world model, starting with self-supervised learning. Adding more cherries to the cake, so to speak.

Model-based learning unlocks sample efficiency. Here is how I describe model-based RL: “Everyone wants to do it, but few know how.” In principle, a good model fixes a whole bunch of problems. As AlphaGo shows, having a model at all makes it much easier to find a good solution. Good world models transfer well to new tasks, and rolling out a world model lets you imagine new experience. In my experience, model-based approaches also need fewer samples.

But learning a good model is hard. My impression is that low-dimensional state models sometimes work, while image models are usually too hard. If they become easier, some interesting things could happen.

Dyna (Sutton, 1991) and Dyna-2 (Silver et al., ICML 2008) are classic works in this area. As examples of work that combines model-based learning with deep networks, I would recommend several recent papers from the Berkeley robotics labs:

Use reinforcement learning just as the fine-tuning step. The first AlphaGo paper started with supervised learning and then fine-tuned with RL. This is a good option because it speeds up initial learning by using a faster but less powerful method. The approach has worked in other contexts as well: see Sequence Tutor (Jaques et al, ICML 2017). You can view it as starting the RL process from a reasonable prior instead of a random one, where some other system is responsible for learning that prior.

Reward functions could be learnable. The promise of machine learning is that, from data, we can learn to build things that are better than human-designed ones. If the reward function is so hard to design, why not use machine learning for that too? Imitation learning and inverse RL are rich fields that have shown that reward functions can be defined implicitly by human demonstrations or human ratings.

The best-known papers on inverse RL and imitation learning are Algorithms for Inverse Reinforcement Learning (Ng and Russell, ICML 2000), Apprenticeship Learning via Inverse Reinforcement Learning (Abbeel and Ng, ICML 2004) and DAgger (Ross, Gordon, and Bagnell, AISTATS 2011).

Recent work that extends these ideas to deep learning includes Guided Cost Learning (Finn et al, ICML 2016), Time-Contrastive Networks (Sermanet et al, 2017) and Learning From Human Preferences (Christiano et al, NIPS 2017). In particular, the last of these shows that a reward learned from human ratings turned out to be better shaped for learning than the original hard-coded reward, which is a good practical result.

Among work that does not use deep learning, I liked Inverse Reward Design (Hadfield-Menell et al, NIPS 2017) and Learning Robot Objectives from Physical Human Interaction (Bajcsy et al, CoRL 2017).

Transfer learning saves the day. The promise of transfer learning is that you can use knowledge from previous tasks to speed up learning on new ones. I am quite sure this is the future, once learning is reliable enough to solve disparate tasks. It is hard to transfer learning if you cannot learn at all, and given tasks A and B, it is hard to predict whether A will transfer to B. In my experience, the answer is either very obvious or completely unclear. And even in the most obvious cases, a non-trivial approach is required.

Recent work in this area includes Universal Value Function Approximators (Schaul et al, ICML 2015), Distral (Whye Teh et al, NIPS 2017) and Overcoming Catastrophic Forgetting (Kirkpatrick et al, PNAS 2017). For older work, see Horde (Sutton et al, AAMAS 2011).

For example, robotics has made good progress in transfer from simulators to the real world (from a simulated version of a task to the real task). See Domain Randomization (Tobin et al, IROS 2017), Sim-to-Real Robot Learning with Progressive Nets (Rusu et al, CoRL 2017) and GraspGAN (Bousmalis et al, 2017). (Disclaimer: I worked on GraspGAN.)

Good priors could greatly reduce learning time. This is closely related to several of the previous points. In one view, transfer learning is about using past experience to build good priors for other tasks. RL algorithms are designed to work on any Markov decision process, and this is where the pain of generality comes in. If we accept that our solutions only need to work well on a narrow class of environments, then we should be able to exploit the shared structure to solve those environments efficiently.

Pieter Abbeel likes to note in his talks that deep RL only needs to solve the kinds of tasks we expect to need in the real world. I agree that this makes a lot of sense. There should be a real-world prior that lets us quickly learn new real-world tasks, at the cost of slower learning on unrealistic tasks, and that is a perfectly acceptable trade-off.

The difficulty is that such a real-world prior is very hard to design. However, I think there is a good chance that it is still possible. Personally, I am excited by recent work on meta-learning, because it provides a data-driven way to generate reasonable priors. For example, if I wanted to use RL for warehouse navigation, it would be interesting to first use meta-learning to learn a good navigation prior in general, and then fine-tune that prior for the specific warehouse where the robot will operate. This very much looks like the future, and the question is whether meta-learning will get there or not.

For a summary of recent learning-to-learn work, see this post from BAIR (Berkeley AI Research).

Harder environments could paradoxically be easier. One of the main lessons of the DeepMind parkour paper is that if you make a task very hard by adding several task variations, you actually make learning easier, because the policy cannot overfit to any one setting without losing performance on all the others. We have seen something similar in the domain randomization papers, and even in ImageNet: models trained on ImageNet generalize to other settings much better than models trained on CIFAR-100. As I said before, maybe we are just one “ImageNet for control” away from much more general RL.

There are many options on the environment side. OpenAI Gym is the most popular, but there are also the Arcade Learning Environment, Roboschool, DeepMind Lab, the DeepMind Control Suite and ELF.

Finally, although it is unsatisfying from an academic point of view, the empirical problems of deep RL may not matter in practice. As a hypothetical example, imagine that a financial company uses deep RL. They train a trading agent on past US stock market data using three random seeds. In live A/B testing, the first seed brings 2% less revenue, the second performs about the same, and the third brings 2% more. In this hypothetical, reproducibility does not matter: you simply deploy the model with the 2% higher return and celebrate. Similarly, it does not matter that the trading agent may only work well in the United States: if it generalizes badly to the worldwide market, just do not deploy it there. There is a big gap between doing something extraordinary and making that extraordinary result reproducible. Perhaps it is worth focusing on the former first.

In many ways, I am annoyed by the current state of deep RL. And yet it attracts stronger interest from researchers than anything I have seen in any other field. My feelings are best summed up by a mindset Andrew Ng mentioned in his talk Nuts and Bolts of Applying Deep Learning: strong pessimism in the short term, balanced by even stronger optimism in the long term. Deep RL is a bit of a mess right now, but I still believe in where it could go.

However, if someone asks me again whether reinforcement learning (RL) can solve their problem, I will still immediately answer that no, it cannot. But I will ask them to repeat the question in a few years. By then, maybe it can.


Introduction

Once on Facebook, I stated the following.

When someone asks me whether reinforcement learning (RL) can solve their problem, I immediately reply that it cannot. I think this is right at least 70% of the time.

Deep reinforcement learning is surrounded by a lot of hype. And for good reasons! Reinforcement learning (RL) is an incredibly general paradigm. In principle, a robust and high-performing RL system should be great at everything. Merging this paradigm with the empirical power of deep learning is an obvious fit. Deep RL is the thing that looks most like strong AI, and that is the kind of dream that fuels billions of dollars in funding.


Unfortunately, in reality, this thing does not work yet.

But I believe that it can work. If I did not believe in it, I would not be working in this field. But there are a lot of problems ahead, many of which are fundamentally hard. The beautiful demos of trained agents hide all the blood, sweat and tears that went into creating them.

Several times now, I have seen people get lured in by the latest results. They try deep RL for the first time and, without fail, underestimate the difficulties. Without fail, the “toy problem” is not as simple as it looks. And without fail, the field breaks them a few times before they learn to set realistic expectations for their research.

This is not anyone's personal fault. It is a systemic problem. It is easy to write a story around a positive result. Try doing that with a negative one. The trouble is that negative results are exactly what researchers run into most often. In a sense, such results are even more important than positive ones.

In this article I will explain why deep RL does not work. I will give examples of when it does work and, in my opinion, how to make it work more reliably in the future. I am not doing this to make people stop working on deep RL, but because it is easier to make progress if everyone agrees on what the problems are. It is easier to reach agreement if people actually talk about the problems, instead of independently stepping on the same rake over and over again.

I would like to see more research on deep RL. I want new people to join the field. And I want them to know what they are getting into.

Before continuing, let me make a few comments.

- I cite several papers in this article. Usually I cite a paper for its convincing negative examples and leave out the positive ones. This does not mean I dislike the papers. They are all good, and worth reading if you have the time.

- I use the terms “reinforcement learning” and “deep reinforcement learning” interchangeably, because in my daily work RL always implies deep RL. What I criticize here is the empirical behavior of deep reinforcement learning, not reinforcement learning as a whole. The papers I cite usually describe an agent with a deep neural network. Although the empirical criticism may also apply to linear RL or tabular RL, I am not sure it extends to smaller problems. The hype around deep RL comes from RL being presented as a solution for large, complex, high-dimensional environments where good function approximation is needed. It is that hype in particular that needs to be addressed.

- The article is structured to move from pessimism to optimism. I know it is a bit long, but I would be very grateful if you took the time to read all of it before replying.

Without further ado, here are some of the cases where deep RL fails.

Deep reinforcement learning can be horribly sample inefficient

The best-known benchmark for deep reinforcement learning is Atari games. As shown in the now-famous Deep Q-Networks (DQN) paper, if you combine Q-Learning with reasonably sized neural networks and some optimization tricks, you can match or exceed human performance in several Atari games.

Atari games run at 60 frames per second. Off the top of your head, can you estimate how many frames a state-of-the-art DQN needs to process to reach human-level performance?

The answer depends on the game, so take a look at a recent DeepMind paper, Rainbow DQN (Hessel et al, 2017). It shows how several incremental improvements to the original DQN architecture each improve the result, and that combining all of them gives the best performance. The neural network exceeds human performance in over 40 of the 57 Atari games. The results are shown in this convenient graph.

The vertical axis shows the “median human-normalized score”. It is computed by training 57 DQN agents, one for each Atari game, normalizing each agent's score so that human performance is 100%, and then taking the median across the 57 games. Rainbow DQN passes 100% after about 18 million frames. This corresponds to about 83 hours of play experience, plus however long it takes to train the model. That is a lot of time for simple Atari games that most people pick up in a couple of minutes.

Keep in mind that 18 million frames is actually a very good result: the previous record belonged to Distributional DQN (Bellemare et al, 2017), which needed 70 million frames to reach 100%, that is, about four times longer. As for Nature DQN (Mnih et al, 2015), it never reaches a median score of 100% at all, even after 200 million frames.
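The frame counts above are easier to compare when converted to hours of real-time play. Here is a minimal sketch of that arithmetic, assuming only the 60 frames per second stated earlier; the helper name `frames_to_hours` is made up for illustration, and the Nature DQN entry is the number of frames it was trained for without reaching 100%, not the number it needed.

```python
ATARI_FPS = 60  # frames per second, as stated in the text


def frames_to_hours(frames: int, fps: int = ATARI_FPS) -> float:
    """Convert a number of game frames into hours of real-time play."""
    seconds = frames / fps
    return seconds / 3600


# Frame counts quoted in the text.
frame_budgets = {
    "Rainbow DQN (reaches 100%)": 18_000_000,
    "Distributional DQN (reaches 100%)": 70_000_000,
    "Nature DQN (still below 100%)": 200_000_000,
}

hours = {name: frames_to_hours(n) for name, n in frame_budgets.items()}

for name, h in hours.items():
    print(f"{name}: about {h:.0f} hours of play")
```

The first entry reproduces the figure of roughly 83 hours; the other two show how much more experience the earlier systems consumed.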

The cognitive bias known as the “planning fallacy” says that finishing a task usually takes longer than you thought. Reinforcement learning has its own planning fallacy: learning usually requires more samples than you thought.

The problem is not limited to Atari games. The second most popular benchmark is the MuJoCo benchmarks, a set of tasks in the MuJoCo physics engine. In these tasks, the input is usually the position and velocity of each joint of some simulated robot. Even though there is no vision problem to solve, RL systems need between 10^5 and 10^7 steps to learn, depending on the task. That is an incredible amount of experience for control in such a simple environment.

In the DeepMind parkour paper (Heess et al, 2017), illustrated below, the policies were trained using 64 workers for more than 100 hours. The paper does not specify what a “worker” is, but I assume it means a single CPU.

This is a great result. When it first came out, I was surprised that deep RL was able to learn these running gaits at all.

But it took 6400 hours of CPU time, which is a bit disappointing. It is not that I expected less time ... it is just sad that, even for simple skills, deep RL is still at least an order of magnitude short of the sample efficiency that would be useful in practice.

There is an obvious counter-argument here: what if you just ignore sample efficiency? There are certain environments where it is easy to generate experience. Games, for example. But for any environment where this is impossible, RL faces enormous challenges. Unfortunately, most environments fall into this category.

If you only care about final performance, many problems are better solved by other methods

When looking for solutions to any problem, one usually has to find a compromise in achieving different goals. You can focus on a really good solution to this particular problem, or you can focus on maximizing your contribution to the overall research. The best problems are those where a good contribution to research is required to get a good solution. But in reality it is difficult to find problems that meet these criteria.

When judged purely on final performance, deep RL's track record is not very impressive, because it is consistently beaten by other methods. Here is a video of MuJoCo robots controlled with online trajectory optimization. The correct actions are computed in near real time, online, with no offline training. And all of it runs on 2012 hardware (Tassa et al, IROS 2012).

I think this work can be compared with the DeepMind parkour paper. What is the difference?

The difference is that here the authors apply model predictive control, planning against a ground-truth world model (the physics engine). Model-free RL has no such model, which makes the job much harder. On the other hand, if planning actions against a model improves the result so much, why bother with the tricky business of training an RL policy?

Similarly, you can easily outperform DQN on Atari with off-the-shelf Monte Carlo Tree Search (MCTS). Here are the baseline numbers from Guo et al, NIPS 2014. The authors compare the scores of a trained DQN with the scores of a UCT agent (UCT is the standard version of modern MCTS).

Again, this is an unfair comparison, because DQN does no search, while MCTS searches against a ground-truth model (the Atari emulator). But in some situations you do not care whether the comparison is fair or unfair. Sometimes you just need the thing to work. (If you want a full evaluation of UCT, see the appendix of the original Arcade Learning Environment paper (Bellemare et al, JAIR 2013).)

Reinforcement learning can, in theory, be applied to anything, including environments where the world model is unknown. However, this generality comes at a price: it is hard to exploit any problem-specific information that could help learning. Because of this, we have to use a huge number of samples to learn things that could simply have been hard-coded.

Experience shows that, with rare exceptions, algorithms tailored to a specific domain work faster and better than reinforcement learning. This is not a problem if you are doing deep RL for deep RL's sake, but personally I find it frustrating to compare RL's performance to, well, anything else. One of the reasons I liked AlphaGo so much is that it was an unambiguous win for deep RL, and that does not happen very often.

Because of all this, it is harder for me to explain to people why my problems are so cool, hard and interesting, because they often lack the context or experience to appreciate why they are hard. There is a gap between what people think deep RL can do and what it can really do. I now work in robotics. Consider the company most people think of when you mention robotics: Boston Dynamics.

This thing does not use reinforcement learning. Several times I have met people who thought RL was used here, but no. If you look up the papers published by the group, you will find papers that mention time-varying linear-quadratic regulators, quadratic programming solvers, and convex optimization. In other words, they mostly use classical robotics techniques. It turns out that these classical techniques work just fine when applied properly.

Reinforcement learning usually requires a reward function

Reinforcement learning assumes the existence of a reward function. Usually, it is either given or hand-tuned offline, and then kept fixed during training. I say “usually” because there are exceptions, such as imitation learning or inverse RL (where the reward function is recovered after the fact), but most RL approaches treat the reward as an oracle.

It is important to note that for RL to do the right thing, the reward function must capture exactly what you want. And I mean exactly. RL has an annoying tendency to overfit to the reward, which leads to unexpected consequences. That is why Atari is such a good benchmark. Not only is it easy to get lots of samples, but every game has a clear objective, the score, so you never have to worry about defining a reward function. And you know that everyone else is using the same one.

MuJoCo tasks are popular for the same reasons. Because they run in simulation, you have complete information about the state of every object, which makes designing a reward function much easier.

In the Reacher task, you control a two-segment arm that is attached to a central point, and the goal is to move the end of the arm to a given target. See below for an example of successful learning.

Since all coordinates are known, the reward can be defined as the distance from the end of the arm to the target, plus a small control cost. In principle, you could run the same experiment in the real world if you had enough sensors to measure the coordinates accurately. But depending on what the system is supposed to do, it can be hard to define a reasonable reward.
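The Reacher reward described above can be written down in a few lines. The following is a minimal sketch, not the benchmark's actual implementation: the function name `reacher_reward`, the 2-D coordinates and the weight `ctrl_cost_weight=0.1` are assumptions made for illustration. The distance enters with a negative sign so that moving closer to the target increases the reward.

```python
import math


def reacher_reward(fingertip, target, action, ctrl_cost_weight=0.1):
    """Hypothetical Reacher-style reward: negative distance minus a control cost.

    fingertip, target: (x, y) coordinates, assumed fully known in simulation.
    action: joint torques; penalizing their squared size discourages wild motion.
    ctrl_cost_weight: illustrative value, not taken from any benchmark.
    """
    distance = math.dist(fingertip, target)
    control_cost = ctrl_cost_weight * sum(a * a for a in action)
    return -distance - control_cost


# The arm tip is 5 units from the target and applies no torque.
far = reacher_reward((0.0, 0.0), (3.0, 4.0), (0.0, 0.0))
# The arm tip sits on the target but is still applying torque.
on_target = reacher_reward((3.0, 4.0), (3.0, 4.0), (1.0, 1.0))
print(far, on_target)
```

Writing this function is only possible because the simulator exposes exact coordinates; on a physical robot each argument would have to come from a sensor.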

The reward function by itself would not be a big problem, if not for the fact that ...

Reward function design is difficult

Writing a reward function is not that hard. The difficulty arises when you try to design one that encourages the behavior you want while still being learnable.

In HalfCheetah, we have a two-legged robot confined to a vertical plane, which means it can only move forward or backward.

The goal is to learn to run. The reward is HalfCheetah's speed (video).

This is a shaped reward, meaning that it increases as the agent gets closer to the final goal. This is in contrast to a sparse reward, which is given only when the goal state is reached and is absent everywhere else. Shaped rewards are often much easier to learn from, because they provide positive feedback even when the policy has not yet found a complete solution to the problem.

Unfortunately, a shaped reward can bias learning. As already mentioned, this can cause unexpected and unwanted behavior. A good example is the boat racing game from an OpenAI blog post. The intended goal is to finish the race. You could imagine a sparse reward of +1 for finishing within a given time and 0 otherwise.
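The difference between the two kinds of reward can be made concrete with a toy example. This is a minimal sketch on an assumed 1-D task where the agent must reach a goal position; the function names, the tolerance and the trajectory are all invented for illustration and are not taken from HalfCheetah.

```python
def sparse_reward(position, goal, tolerance=0.05):
    """+1 only when the goal is reached, 0 everywhere else."""
    return 1.0 if abs(goal - position) <= tolerance else 0.0


def shaped_reward(position, goal):
    """Grows smoothly (toward 0) as the agent approaches the goal."""
    return -abs(goal - position)


GOAL = 1.0
# A partially successful trajectory: the agent makes progress but never arrives.
trajectory = [0.0, 0.25, 0.5, 0.75]

sparse_signal = [sparse_reward(p, GOAL) for p in trajectory]
shaped_signal = [shaped_reward(p, GOAL) for p in trajectory]

print("sparse:", sparse_signal)
print("shaped:", shaped_signal)
```

On this trajectory the sparse reward is identical at every step, so it gives no hint that the agent is improving, while the shaped reward rises with each step toward the goal.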

The reward function gives points for crossing checkpoints and collecting bonuses that allow you to get to the finish line faster. As it turned out, the collection of bonuses gives more points than the end of the race.

To be honest, this post annoyed me a little at first. Not because it is wrong! It was because it seemed to me that it was demonstrating the obvious. Of course reinforcement learning gives a strange result when the reward is misspecified! It seemed to me that the post made an unreasonably big deal of this particular case.

But then I started writing this article and realized that the most convincing example of a misspecified reward is that very same boat racing video. Since then, it has been used in several presentations on the topic, which has drawn attention to the problem. So fine, I reluctantly admit that it was a good blog post.

RL algorithms fall along a continuum, depending on how much they are allowed to assume about the world around them. The broadest category, model-free RL, is almost the same as black-box optimization. Such methods are only allowed to assume that they are in an MDP (Markov decision process), and nothing more. The agent is simply told that this gives +1 and that does not, and it has to learn everything else on its own. As with black-box optimization, the problem is that any behavior that gives +1 counts as good, even if the reward was obtained the wrong way.

A classic example from outside RL is the time someone applied genetic algorithms to circuit design and got a circuit in which one unconnected logic gate was necessary for the final design.

The gray cells are required for the circuit to work correctly, including the cell in the upper left corner, even though it is not connected to anything. From the paper “An Evolved Circuit, Intrinsic in Silicon, Entwined with Physics”

Or a more recent example: a 2017 post on the Salesforce blog. Their goal was text summarization. The baseline model was trained with supervised learning and then evaluated with an automated metric called ROUGE. ROUGE is a non-differentiable reward, but RL can handle that. So they tried applying RL to optimize ROUGE directly. This gives high ROUGE (hooray!), but not very good summaries. Here is an example.

Button was deprived of his 100th race for McLaren after the ERS did not let him start. So ended a bad weekend for the British. Button ahead in qualifying. Finished ahead of Nico Rosberg in Bahrain. Lewis Hamilton In 11 races .. Race. To lead 2000 laps .. V ... I. - Paulus et al, 2017

And although the RL model achieved the highest ROUGE score ...

... they ended up using a different model for summarization.

Another fun example. This one is from Popov et al, 2017, also known as “the Lego stacking paper”. The authors use a distributed version of DDPG to learn a grasping policy. The goal is to grasp the red block and stack it on the blue one.

They got it to work, but ran into an interesting failure case. The initial lifting motion is rewarded based on how high the red block is lifted. This is defined by the z-coordinate of the bottom face of the block. In one of the failure modes, the policy learned to flip the red block upside down instead of lifting it.

Clearly this behavior was not intended. But RL does not care. From the point of view of reinforcement learning, it was rewarded for flipping the block, so it will keep flipping the block.
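Why flipping the block pays off becomes clear once the reward is written out. The following is a minimal sketch, not the paper's code: the function `bottom_face_z`, the block side of 0.05 and the lift height are assumptions for illustration. The key point is that the "bottom face" is a fixed face of the block, so it ends up on top when the block is turned over.

```python
def bottom_face_z(center_z, side, upside_down):
    """Height of the face that is the bottom when the block sits upright.

    The face is fixed to the block, so after a flip it is above the center.
    """
    offset = side / 2
    return center_z + offset if upside_down else center_z - offset


SIDE = 0.05                 # hypothetical block side length
RESTING_CENTER = SIDE / 2   # center height of a block lying on the table

# The lifting reward is taken to be the z-coordinate of that face.
reward_resting = bottom_face_z(RESTING_CENTER, SIDE, upside_down=False)
reward_flipped = bottom_face_z(RESTING_CENTER, SIDE, upside_down=True)
reward_lifted = bottom_face_z(RESTING_CENTER + SIDE, SIDE, upside_down=False)

print(reward_resting, reward_flipped, reward_lifted)
```

Under these assumptions, a flipped block that is still lying on the table earns about the same reward as a block that was properly lifted by one block height, which is exactly the kind of loophole the policy found.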

One way to solve this problem is to make the reward sparse, giving it only after connecting the cubes. Sometimes it works because the rare reward is trainable. But often this is not the case, since the absence of positive reinforcement complicates things too much.

The other way to address it is careful reward shaping: adding new reward terms and tweaking the coefficients of existing ones until the RL algorithm starts showing the behavior you want during training. Yes, it is possible to fight RL on this front, but it is a very unfulfilling fight. Sometimes it is necessary, but I have never felt that I learned anything in the process.
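As a rough illustration of the two options, here is a sketch of a sparse reward next to a shaped one. The terms, weights, and function names are invented for this example and are not taken from any paper; the point is only that the shaped version has several coefficients that someone has to tune by hand.

```python
def sparse_reward(stacked: bool) -> float:
    """Reward only when the task is fully done; zero everywhere else."""
    return 1.0 if stacked else 0.0


def shaped_reward(reach_dist: float, lift_height: float, stacked: bool,
                  w_reach: float = 0.5, w_lift: float = 2.0,
                  w_stack: float = 10.0) -> float:
    """Hypothetical shaped reward: a weighted sum of hand-picked terms.

    reach_dist  -- distance from gripper to block (smaller is better)
    lift_height -- how high the block has been raised
    stacked     -- whether the block ended up on top of the other block
    """
    return -w_reach * reach_dist + w_lift * lift_height + w_stack * float(stacked)


# Early in training the task is never completed, so the sparse reward is flat,
# while the shaped reward still distinguishes "closer" from "further away".
print(sparse_reward(False), sparse_reward(False))
print(shaped_reward(0.3, 0.0, False), shaped_reward(0.1, 0.05, False))
```

Every weight in `shaped_reward` is a new place for the optimizer to find an unintended shortcut, which is the trade-off described above.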

For reference, here is one of the reward functions from the Lego stacking paper.

I don't know how much time was spent designing this reward, but judging by the number of terms and the number of different coefficients, I would guess "a lot."

In conversations with other RL researchers, I have heard several anecdotes about the unexpected behavior of models with poorly specified rewards.

- A colleague was teaching an agent to navigate a room. The episode ended if the agent went out of bounds, and no penalty was attached to that. By the end of training, the agent had learned suicidal behavior: negative reward was very easy to get and positive reward was too hard, so a quick death ending at 0 reward was preferable to a long life with a high risk of a negative result.

- A friend was training a robot arm to reach toward a point above a table. It turned out the point was defined relative to the table, and the table was not anchored to anything. The policy learned to slam the table very hard, making it fall over, which moved the target point too, and the target ended up next to the arm.

- A researcher described using RL to train a simulated robot arm to pick up a hammer and hammer in a nail. Initially, the reward was defined by how far the nail was pushed into the hole. Instead of picking up the hammer, the robot used its own limbs to drive the nail in. So they added a reward term to encourage picking up the hammer. The resulting policy did pick up the hammer ... and then threw it at the nail instead of actually using it.

Admittedly, these are all secondhand stories; I have not personally seen videos of any of these behaviors. But none of them sounds implausible to me. I have been burned by RL too many times to believe otherwise.

I know people who love telling stories about paperclip optimizers. Okay, I get it, honestly. But in truth I am sick of hearing these stories, because they always present some superhuman misaligned strong AI as if it were a real story. Why make one up when there are so many real stories happening every day?

Even with a good reward, it is difficult to avoid a local optimum.

The previous examples of RL are often called "reward hacking." To me, that implies a clever, out-of-the-box solution that earns more reward than the solution the problem designer intended.

Reward hacking is the exception. Poor local optima are much more common, and they come from getting the exploration-exploitation trade-off wrong.

Here is one of my favorite videos. It shows an implementation of Normalized Advantage Functions learning in the HalfCheetah environment. From the perspective of an outside observer, this is very, very stupid. But we only call it stupid because we are watching from the outside and already know that moving on your feet is better than lying on your back. RL does not know this! It sees a state vector, it sends action vectors, and it knows it is getting some positive reward. That's all.

Here is my most plausible guess at what happened during training.

- During random exploration, the policy found that falling forward was better than standing still.

- It did this often enough to "burn in" that behavior, so it now falls forward consistently.

- After falling forward, the policy learned that with a large enough burst of force it can do a backflip, which gives a bit more reward.

It is very funny, but it is obviously not what we want the robot to do.

Here is another failed run, this time in the Reacher environment (video). In this run, the random initial weights tended to output strongly positive or strongly negative values for the actions. Because of this, most actions were applied at the maximum or minimum possible acceleration. It is very easy to make the arm spin fast: just output a high-magnitude force at every joint. Once the robot is spinning, it is hard to leave that state in any meaningful way: to stop the uncontrolled rotation, the policy has to take several exploration steps. That is certainly possible. But it did not happen in this run.

In both cases we see the classic exploration-exploitation problem that has dogged reinforcement learning since time immemorial. Your data comes from your current policy. If your current policy explores too much, you get junk data and learn nothing. Exploit too much, and you "burn in" behavior that is not optimal.
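The trade-off is easy to reproduce on a toy problem. Below is a minimal sketch using epsilon-greedy action selection on a two-armed bandit; the payouts, step count, and seed are arbitrary assumptions chosen to make the effect visible, and epsilon-greedy is just one simple exploration scheme, not a method from any of the papers discussed here.

```python
import random

ARM_REWARDS = [1.0, 2.0]  # hypothetical deterministic payouts; arm 1 is better


def run_bandit(epsilon: float, steps: int = 1000, seed: int = 0):
    """Epsilon-greedy agent with sample-average value estimates."""
    rng = random.Random(seed)
    estimates = [0.0] * len(ARM_REWARDS)
    counts = [0] * len(ARM_REWARDS)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(ARM_REWARDS))  # explore: pick a random arm
        else:
            # exploit: pick the best-looking arm (ties go to the lowest index)
            arm = max(range(len(ARM_REWARDS)), key=lambda a: estimates[a])
        reward = ARM_REWARDS[arm]
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return total, counts


greedy_total, greedy_counts = run_bandit(epsilon=0.0)    # pure exploitation
mixed_total, mixed_counts = run_bandit(epsilon=0.1)      # mostly exploit, some exploration
random_total, random_counts = run_bandit(epsilon=1.0)    # pure exploration

print("greedy:", greedy_total, greedy_counts)
print("mixed: ", mixed_total, mixed_counts)
print("random:", random_total, random_counts)
```

The purely greedy agent tries arm 0 first, sees a positive reward, and never touches arm 1 again: it has burned in a suboptimal choice. The purely random agent samples both arms but never uses what it learns. Real environments are far harder than this, because there the useful exploration is a long sequence of actions rather than a single pull.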

There are several intuitively appealing ideas for addressing this: intrinsic motivation, curiosity-driven exploration, count-based exploration, and so on. Many of these approaches were first proposed in the 1980s or earlier, and several have since been revisited with deep learning models. But as far as I know, none of them works consistently across all environments. Sometimes they help, sometimes they don't. It would be nice if some exploration trick worked everywhere, but I doubt a silver bullet of that caliber will be found in the foreseeable future. Not because people aren't trying, but because exploration-exploitation is really, really, really, really hard. To quote the Wikipedia article on the multi-armed bandit:

Originally considered by Allied scientists in World War II, it proved so intractable that, according to Peter Whittle, it was proposed that the problem be dropped over Germany so that German scientists could also waste their time on it. (Source: Q-Learning for Bandit Problems, Duff 1995)

I have come to picture deep RL as a demon that deliberately misinterprets your reward and actively searches for the laziest possible local optimum. It is a bit ridiculous, but it has turned out to be a genuinely productive mindset.

Even when deep RL works, it may just be overfitting to strange behavior.

Deep RL is popular because it is the only area of machine learning where it is socially acceptable to train on the test set. (Source)

The upside of reinforcement learning is that if you want to do well in one specific environment, you are free to overfit like crazy. The downside is that if you need the model to generalize to any other environment, it will probably do poorly, because it overfit like crazy.

DQN can solve many Atari games, but it does so by focusing all of each model's training on a single goal: getting very good at one game. The final model will not generalize to other games, because it was not trained that way. You can fine-tune a trained DQN to a new Atari game (see Progressive Neural Networks (Rusu et al, 2016)), but there is no guarantee the transfer will happen, and people usually don't expect it to. This is not the wild success people see with pretrained ImageNet features.

To preempt some obvious comments: yes, in principle, training on a wide distribution of environments should make some of these issues go away. In some cases you get this kind of generalization for free. One example is navigation, where you can sample goal locations at random and use universal value functions to generalize (see Universal Value Function Approximators, Schaul et al, ICML 2015). I find this work very promising, and I give more examples of it later. But I don't think the generalization capabilities of deep RL are strong enough yet to handle a diverse set of tasks. Perception has become much better, but deep RL has yet to have its "ImageNet for control" moment. OpenAI Universe tried to spark this, but from what I have heard, it was too difficult to solve, so not much came of it.

Until we have that kind of generalization moment, we are stuck with policies that are surprisingly narrow in scope. As an example (and as an excuse to laugh at my own work), consider the paper Can Deep RL Solve Erdos-Selfridge-Spencer Games? (Raghu et al, 2017). We studied a two-player combinatorial game that has a closed-form analytic solution for optimal play. In one of our first experiments, we fixed player 1's behavior and then trained player 2 with RL. In that setup, you can treat player 1's actions as part of the environment. By training player 2 against an optimal player 1, we showed that RL can reach high performance. But when we deployed the same policy against a non-optimal player 1, its performance dropped, because it did not generalize to non-optimal opponents.

Lanctot et al, NIPS 2017 got a similar result. Here, two agents play laser tag. The agents are trained with multi-agent reinforcement learning. To test generalization, training was run with five random seeds. Here is a video of agents that were trained against each other.

As you can see, they learn to move toward each other and shoot. Then the authors took player 1 from one experiment and pitted it against player 2 from a different experiment. If the learned policies generalize, we should see similar behavior.

Spoiler: we don't.

This seems to be a common problem in multi-agent RL. When agents are trained against each other, a kind of co-evolution takes place. The agents get really good at beating each other, but when they face a player they have never met, their performance drops. I want to stress that the only difference between these videos is the random seed. Same learning algorithm, same hyperparameters. The difference in behavior comes purely from the randomness in the initial conditions.

That said, there are some impressive results from competitive self-play environments that seem to contradict this general thesis. The OpenAI blog has a nice post about some of their work in this area. Self-play is also an important part of AlphaGo and AlphaZero. My intuition is that if the agents learn at the same pace, they can continually challenge each other and speed up each other's learning, but if one of them learns much faster than the other, it exploits the weaker player too much and overfits. As you move from symmetric self-play to general multi-agent settings, it gets much harder to ensure that learning happens at the same speed.

Even setting generalization aside, the final results can be unstable and hard to reproduce.

Almost every machine learning algorithm has hyperparameters that affect the behavior of the learning system. Often they are picked by hand or by random search.

Supervised learning is stable. Fixed dataset, ground-truth targets. If you change the hyperparameters a little, performance will not change much. Not all hyperparameters work well, but with all the empirical tricks discovered over the years, many hyperparameter settings show signs of life during training. These signs of life are very important: they tell you that you are on the right track, that you are doing something sensible, and that it is worth investing more time.

Currently, deep RL is not stable at all, which is very annoying in the research process.

When I started working at Google Brain, one of the first things I did was implement the algorithm from the paper mentioned above, Normalized Advantage Functions (NAF). I figured it would take only two or three weeks. I had several things going for me: some familiarity with Theano (which carries over well to TensorFlow), some deep RL experience, and the fact that the first author of the NAF paper was interning at Brain, so I could pester him with questions.

In the end, it took me six weeks to reproduce the results, because of several bugs in the software. The question is, why did these bugs stay hidden for so long?

To answer that, consider the simplest continuous control task in OpenAI Gym: the Pendulum task. In this task, a pendulum is anchored at a point and gravity acts on it. The input state is three-dimensional. The action space is one-dimensional: the torque applied to the pendulum. The goal is to balance the pendulum perfectly upright.

This is a small problem, and it is made even easier by a well-shaped reward. The reward is defined in terms of the angle of the pendulum. Actions that bring the pendulum closer to vertical do not just give reward, they give increasing reward.
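For concreteness, here is a sketch of what such a reward looks like. The coefficients below are what I recall from the Gym Pendulum implementation (a quadratic cost on angle, angular velocity, and torque); treat them, and the function names, as assumptions rather than an authoritative copy of the environment.

```python
import math


def angle_normalize(theta: float) -> float:
    """Wrap an angle into [-pi, pi); 0 means perfectly upright."""
    return ((theta + math.pi) % (2 * math.pi)) - math.pi


def observation(theta: float, theta_dot: float):
    """Three-dimensional state: (cos(theta), sin(theta), angular velocity)."""
    return (math.cos(theta), math.sin(theta), theta_dot)


def pendulum_reward(theta: float, theta_dot: float, torque: float) -> float:
    """Assumed reward: negative quadratic cost, so the maximum is 0 at upright."""
    cost = (angle_normalize(theta) ** 2
            + 0.1 * theta_dot ** 2
            + 0.001 * torque ** 2)
    return -cost


# The reward rises smoothly as the pendulum approaches vertical.
for theta in (math.pi, 1.0, 0.5, 0.1, 0.0):
    print(round(theta, 3), pendulum_reward(theta, 0.0, 0.0))
```

Because the cost is smooth in the angle, every small step toward vertical is rewarded a little more than the last, which is exactly the kind of dense learning signal that makes this task about as friendly as continuous control gets.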

Here is a video of a policy that almost works. Although it does not bring the pendulum exactly upright, it outputs exactly the torque needed to counteract gravity.

Here is a plot of performance after I fixed all the bugs. Each line is the reward curve from one of ten independent runs. Same hyperparameters; the only difference is the random seed.

Seven of the ten runs worked. Three did not. A 30% failure rate counts as working.

Here is another plot, from the published paper "Variational Information Maximizing Exploration" (Houthooft et al, NIPS 2016). The environment is HalfCheetah. The reward was modified to be sparse, though the details are not too important. The y-axis is episode reward, the x-axis is the number of timesteps, and the algorithm used is TRPO.

The dark line is the median performance over ten random seeds, and the shaded region covers the 25th to the 75th percentile. Don't get me wrong, this plot is a good argument in favor of VIME. But on the other hand, the 25th percentile line is really close to zero. That means about 25% of runs fail simply because of the random seed.

Look, there is variance in supervised learning too, but it is not this bad. If my supervised learning code failed on 30% of runs with random seeds, I would be quite sure there was a bug in data loading or training. If my reinforcement learning code does no better than random, I have no idea whether it is a bug, bad hyperparameters, or just bad luck.