A Cloud Guru interview with Kelsey Hightower: on DevOps, Kubernetes, and serverless

Not everyone knows that, in terms of load and number of users, iFunny is a genuinely high-load service. The API serves about 15,000 requests per second at peak, the analytics system processes about 5 billion events per day, and up to 400 EC2 instances run to support the full functionality. A strong engineering team is therefore essential for the application. To solve the typical problems of high-load systems and improve them every day, the iFunny team is constantly looking for new tools and solutions. This time we could not pass up an interview with one of the leading contributors in the global IT community, Kelsey Hightower. We offer a faithful translation for your attention.

Many of you have heard the term serverless at least once. This paradigm, in which you do not need to maintain a pool of servers to run an application, is quickly gaining popularity in cloud computing. Discussions are already underway on how to properly use Function as a Service platforms (hereafter FaaS) and how the world is changing with the arrival of AWS Lambda and Google Cloud Functions on the market.

Riding the same wave of serverless hype, the words DevOps and Kubernetes keep popping up. Countless guides and experience reports appear online, and more and more job postings mention them. But which parts of this information noise will really be useful to engineers and managers? And how do you determine the value of emerging technologies in your daily work?

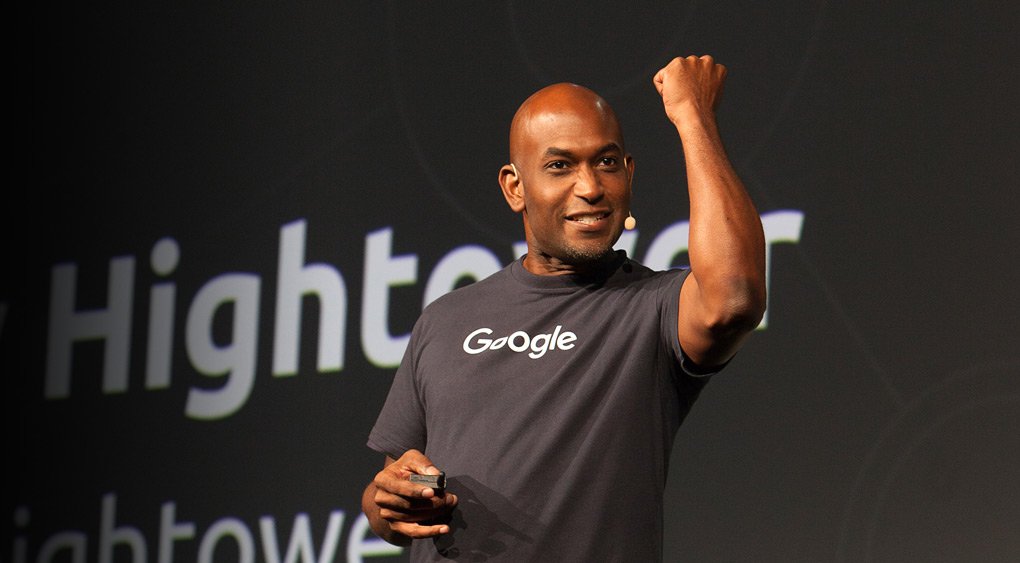

A Cloud Guru recently published an interview with Kelsey Hightower, a very well-known figure in the IT world.

Kelsey Hightower is currently a Developer Advocate at Google and one of the main contributors to the Kubernetes project. In the IT community he is known not only for his contributions to Kubernetes, but also for his regular talks at conferences in the United States and Europe. Kelsey's talks are usually filled with jokes and interesting live demos.

Kelsey, you are the leading expert on Kubernetes. How did you get into this project and what initially inspired you to work with Kubernetes?

- I worked as a system administrator in finance, at start-ups, in web hosting, and even in several data centers. I am familiar with the difficulties of managing infrastructure. I lived through the peaks of popularity of “write once, run anywhere”, virtualization, configuration management and the like, long before containers appeared.

When I moved over to development, it became clear to me how hard it is to ship code from a local environment while trying to reconcile the differences between development and operations engineers.

A few more years pass. I am working at CoreOS, and before my eyes Google launches Kubernetes at DockerCon 2014. By then they already had a repository on GitHub, but in practice the project was impossible to use, since there was no documentation.

I rolled up my sleeves and dove into the code base. In the end, I wrote the “very first” post on how to install Kubernetes and learn it on your laptop. The post sat at the top of Hacker News, even higher than the press release from Google! That is how CoreOS found its place in the Kubernetes community.

But at that time CoreOS did not show much interest in Kubernetes, so I began contributing on my own initiative. At night I fixed bugs, refactored tests, and restructured the code base. Before I started talking about Kubernetes at conferences, I had put a lot of effort into its development.

It inspired me because it really solved problems I had run into before. It was like an epiphany. Had it existed ten years earlier, life would have been much better. And I saw the potential Kubernetes has: it makes people's work easier not at some point in the future, but here and now.

So how does Kubernetes make life easier right now?

- Through its consistency. Kubernetes does what the best system administrator would do. With it, rolling out your code to the right environment becomes very easy.

Three or four years ago, before Kubernetes even had the concept of volumes or anything related to the network stack, its main advantage was already obvious: deploying containers and managing them.

Say you have several containers and a pool of machines. With a few lines of Kubernetes configuration you can say: “Launch this container”. Then you just watch it start. If you turn off one machine, Kubernetes will move the container to another. The mere fact that even today not everyone is able to do this shows how advanced Kubernetes is.
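The behaviour described above, declaring what should run and letting the system move it when a machine disappears, can be pictured with a toy scheduler. This is only a minimal Python sketch of the idea, not Kubernetes code or its API: the function `schedule`, the node names, and the "fewest containers first" rule are all assumptions made for illustration.

```python
def schedule(containers, nodes, placement=None):
    """Toy model: return a container -> node mapping that uses only healthy nodes.

    `nodes` maps a (hypothetical) node name to True if the node is up.
    `placement` is the previous mapping, if any; containers already on a
    healthy node stay where they are.
    """
    placement = dict(placement or {})
    healthy = [name for name, is_up in nodes.items() if is_up]
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    for container in containers:
        if placement.get(container) in healthy:
            continue  # already running on a healthy node, nothing to do
        # Count how many other containers each healthy node currently holds.
        load = {name: 0 for name in healthy}
        for other, node in placement.items():
            if other != container and node in load:
                load[node] += 1
        # Assumed rule: pick the least loaded node, ties broken by name.
        placement[container] = min(healthy, key=lambda name: (load[name], name))
    return placement


if __name__ == "__main__":
    nodes = {"node-a": True, "node-b": True}
    placement = schedule(["web"], nodes)
    print(placement)  # "web" is placed on a healthy node

    nodes["node-a"] = False  # simulate switching one machine off
    placement = schedule(["web"], nodes, placement)
    print(placement)  # "web" is moved to the remaining healthy node
```

Calling `schedule` again after every change in node health is the whole trick here: the caller states the desired set of containers, and the function repairs the placement.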

And now, three or four years later, Kubernetes has essentially swallowed the container world. But in parallel, serverless technologies and FaaS have appeared, and they solve some of the infrastructure management problems you are talking about. What do you think of FaaS as a concept?

- I first encountered FaaS when working with CGI. It worked like this: you wrote some PHP code and put it behind Apache, and Apache called the code whenever it received an HTTP request. Back then there were several serious limitations: no scaling, no concept of a “cloud”, and no clear API that would let you implement it in any language.

Now we see Amazon trying to implement this idea once again in Lambda. They say they can take your code and simply run it in their cloud, providing many of the components an application needs, such as authentication, a database, and an API gateway.

Lambda also differs from CGI scripts in its cost model. Do you see anything game-changing in per-function billing?

- I understand this cost model as “pay per call”. And this concept is spreading through many cloud resources.

Personally, I see FaaS as a development environment in the cloud. Look at it from the provider's side: “As a cloud provider, I have events, message queues, and other similar services that I offer. I am not going to charge you for the SDK you use to work with these services. So I do not want to create a high barrier to entry, since I aim to attract as many customers as possible.”

You can also run functions on Kubernetes clusters using Kubeless. Do you see this as a combination of the best of both concepts, containers and serverless?

- If your environment consists of a pool of virtual machines, then you need to do a lot of work before running your application on it. You need configuration management and a lot of similar tools before deployment.

Kubernetes raises the bar by providing more flexible abstractions: you just give it a container, say exactly how it should run, and you are done! But it still lacks some critical workflows that serverless platforms provide. If you need them, you can put them on top of Kubernetes by installing something like Kubeless.

Now you can upload a piece of code as a function and run it immediately. It is important to remember that all FaaS offerings use containers tailored to their own service. With Kubernetes, besides those same containers, you get an open-source product that gives you full visibility into and control over your platform.

FaaS provides a good abstraction for certain tasks, but not for all of them. Kubernetes gives a high-level abstraction that is understandable and convenient for many people (especially those who come from the world of virtual machines). Serverless is essentially the next level of abstraction.

If you have a Kubernetes cluster that has been evolving for several years, why not implement the functionality of AWS Lambda on top of it? You already have all the necessary abstractions, which makes Kubernetes a foundation for building new, higher-level workflows, serverless included.

Do you think Kubernetes will remain the most convenient abstraction for most engineers, or will many start moving higher and developing applications at the FaaS level?

- People should use the right abstraction for their tasks. For example, when I write an integration with Google Assistant, the only thing I want is to run a piece of code that responds to user requests. And I can run it on Google Cloud Functions. I do not need a container or data storage. I just want to run it as a function. And this is the best abstraction for my case.

Now, if I want to get into machine learning and explore TensorFlow, I need a dedicated container for my code so that I can mount a data volume into it and access a GPU, and a serverless platform is no longer the best place for that. In general, I believe people should move forward and use the highest level of abstraction that works in their particular situation.
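The first case in this answer, a piece of code that only has to respond to a request, can be pictured as a single function. The following is a plain Python sketch under stated assumptions, not the Google Cloud Functions or Google Assistant API: the name `handle_request` and the fields of the event and response dictionaries are hypothetical.

```python
def handle_request(event):
    """Hypothetical handler: take a request as a dict, return a response dict.

    There is no server, container, or storage in sight; the platform is
    assumed to call this function once per incoming request.
    """
    user = event.get("user", "there")  # assumed field, with a fallback
    return {"status": 200, "body": f"Hello, {user}!"}


if __name__ == "__main__":
    print(handle_request({"user": "Kelsey"}))
    print(handle_request({}))
```

Everything the function needs arrives in its argument, which is what makes it easy for a platform to run it on demand.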

We are moving further and further away from the skill set associated with DevOps. In my previous interview, Simon Wardley called DevOps a stage we have already passed. Does that match your experience?

- I agree with Simon. When we start learning something previously unknown, we have to call it something: “I am a system administrator. I do DevOps. I am an SRE.” The point is that as soon as we go beyond what is well known in our discipline and discover something new, that new thing needs its own name.

Once the discipline has a name, move on to the technology. There is no point in practicing DevOps for 40 years. As soon as we understand how to do it right, our ideas should take shape as technology.

That is how Kubernetes was born out of DevOps. Even though its origins go back to Google, Kubernetes is not an exact copy of what we use internally. It fits well with what Docker, Puppet, and Chef are working on.

With Kubernetes, configuration management for deploying applications is much simpler, because that knowledge and experience is built in. These are its default mechanisms. You no longer need to reinvent the wheel.

What will you do when a node fails? You would have to automate your service and provide failover to another machine in the resource pool. Kubernetes already implements this.

DevOps experience has shown that operations need components such as centralized logging and monitoring. In Kubernetes all of this comes as standard. We simply took what was right in DevOps and carried it over into a new technology. And when I say “we”, I mean all the contributors to the Kubernetes project.

If most DevOps skills are now automated, what is the next set of skills an engineer should master?

- Site reliability engineering. Suppose you have a system like Kubernetes or Lambda, but who watches the watchers? Even if the cloud provider does everything for me, I need to double-check what my service's response latency is right now and whether it suits my clients. The provider strives to give me, as its client, a high quality of service, but I will have problems if that is not enough for my own clients.

Why can this happen? Maybe I am using the wrong library or running database queries inefficiently. This is where the work of the SRE team has value. Who worries about deployments now? That problem has already been solved. But once the deployment is done, how do I tune things to what the client needs?

And here we can free ourselves from the old routine and do what was put on the back burner. Every DevOps engineer has such a list. “Someday we will set up centralized logging. Someday we will have CI/CD.” And now you can start working on these ideas.

What is it that Google Cloud Platform is doing with serverless and Kubernetes that inspires you most?

- We have been doing serverless for quite a long time. App Engine is exactly what AWS was talking about at the last re:Invent. Serverless is not just FaaS. We want to give people a painless experience of using the platform. We believe App Engine has been doing this for almost 8 years. Or take BigQuery: it is fully managed, and you simply run your queries and then decide how quickly they should be executed.

On the compute side, we have Cloud Functions, a FaaS service whose audience we are constantly expanding by adding support for new programming languages and other functionality, so that you can focus on the code and not on the infrastructure.

We have also built end-to-end solutions that not everyone may know about. For example, we integrated Cloud Functions with DialogFlow. If you want to work with something like Alexa or connect Google Actions with Google Assistant, you can use DialogFlow. When we create skills or actions, the whole ML training process can be seen in the browser, with GCP working in the background.

This is the main idea and value of serverless. You no longer need to configure your infrastructure to perform calculations.

Someone will probably be discouraged by this interview. They will think: “Kubernetes and serverless are very cool, but it is hard to find a use for them in my daily work. Am I late in adopting these tools in my organization?” What would you recommend to such people?

- I will say this: you already have the skills needed to adopt these technologies. You earned them with blood and sweat while managing the platforms that came before Kubernetes.

You know your organization better than anyone. So you will always be in demand, regardless of changes in technology. Be glad and start taking pride in it: nothing can replace that experience, neither serverless nor a cloud provider.

But you should also think through your actions and understand their value. This, in my opinion, is where people go wrong. As soon as they hear about Kubernetes or serverless, they immediately want to adopt them. Instead, ask yourself: “What does my team, or the organization as a whole, need?”

Perhaps you have a fellow developer who always complains about having to set up infrastructure before running anything. Offer to let him try it first. Let him roll out his code and just watch it deploy from scratch. If your colleagues see the benefit, they will support you. And only then do you tell them that it was actually Kubernetes, Lambda, or something else.

We need to be more business-savvy and think about why we need this or that system, rather than just walking around in a Kubernetes T-shirt saying, “Hey, I was at a conference and I think we should adopt this right now!”

Slow down! You know exactly what your business’s problems are. First solve them and only then start talking about Kubernetes.

Source: https://habr.com/ru/post/349716/