How not to go crazy developing reference data management systems. Stories from our projects

Whenever we took on large-scale automation projects or built new information systems, we had to implement a subsystem for reference books, classifiers, registers and similar objects that make up the customer's regulatory reference information (NSI). Over 15 years of working on NSI management systems at LANIT, we have met clients with the most diverse requirements, and all sorts of situations have come up along the way. Here are a few instructive stories from our practice. Many software developers will find useful examples in them, and those who work directly with NSI will find them even more interesting: as the Russian saying goes, your own shirt is closer to the body.

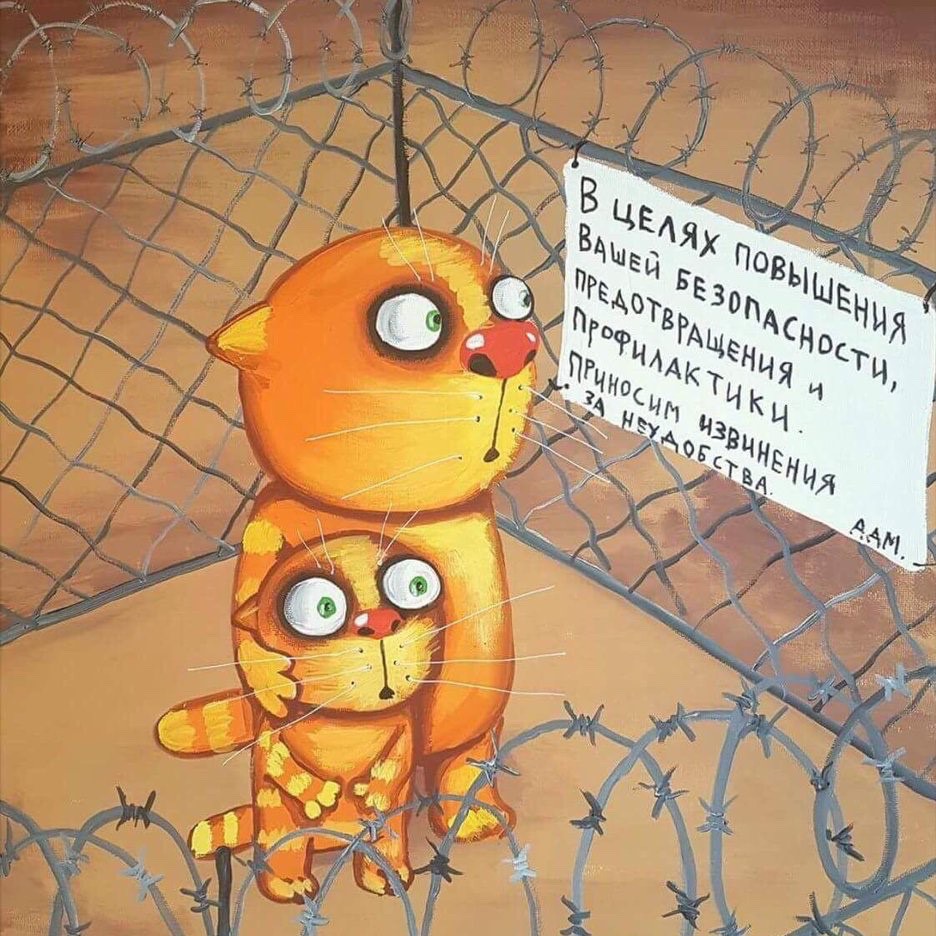

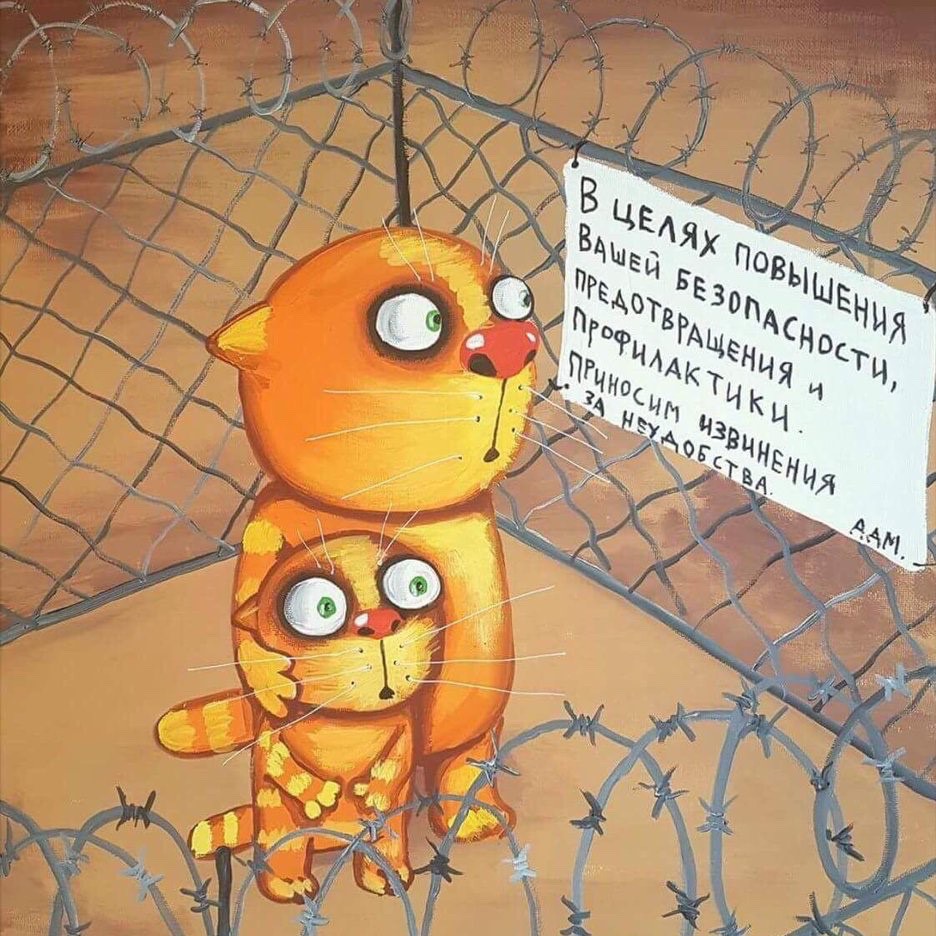

Special thanks to the wonderful artist Vasya Lozhkin for the illustrations.

Case one. A whole wagonload, plus a little cart

Building a unified counterparty management system for a large manufacturing company with many plants across the country and abroad.

The goal of the project was to create a single counterparty database for all divisions. Counterparty records are maintained through requests, each assigned a priority from low to urgent. NSI experts must process an urgent request within 2 hours, regardless of the time-zone difference between divisions.

What happened

The project had been agreed with all stakeholders (or so the customer's management assured us) and was delivered on schedule according to the approved requirements.

The presentation of the finished counterparty management system went smoothly until a formidable woman, the head of the Siberian branch, stood up and, in very vigorous and colorful Russian, informed the audience that when a railcar arrives to be loaded with finished products, she is not going to wait 2 hours while someone in Moscow reviews a request to add a buyer.

She is not going to pay for an idle railcar while the request is being approved. She will enter the customer data into the system as is and ship the goods, and the Moscow colleagues can sort out the customer information afterwards as much as they like.

Several other branch heads backed her, which all but demolished the centralized, request-based methodology for maintaining a single counterparty directory.

As a result, the project was reworked: all branches got direct access to the counterparty database and could make changes themselves. At the same time, the system automatically searched for similar records and showed them to the branch employee, who decided whether the entered data needed correcting. The changes were later reviewed by an expert group.
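
The branch-side duplicate check can be sketched roughly as follows. This is a minimal illustration, not NORMA's actual matching logic: it assumes candidates are compared by normalized company name only, uses Python's standard `difflib.SequenceMatcher` as the similarity measure, and the legal-form list and the 0.85 threshold are hypothetical values chosen for the example.

```python
import re
from difflib import SequenceMatcher

# Hypothetical legal-form tokens ignored during comparison (illustrative only).
LEGAL_FORMS = {"llc", "ltd", "jsc", "inc"}


def normalize(name: str) -> str:
    """Lowercase, drop punctuation and legal-form tokens, collapse whitespace."""
    tokens = re.findall(r"\w+", name.lower())
    return " ".join(t for t in tokens if t not in LEGAL_FORMS)


def find_similar(new_name: str, existing: list[str],
                 threshold: float = 0.85) -> list[tuple[str, float]]:
    """Return existing names at least `threshold` similar to new_name, best match first."""
    target = normalize(new_name)
    matches = []
    for name in existing:
        score = SequenceMatcher(None, target, normalize(name)).ratio()
        if score >= threshold:
            matches.append((name, round(score, 3)))
    return sorted(matches, key=lambda m: m[1], reverse=True)


# The branch employee would see these candidates before saving a new record.
candidates = find_similar('"Sibir Trade" LLC', ["Sibir-Trade Ltd", "Ural Steel JSC"])
print(candidates)  # [('Sibir-Trade Ltd', 1.0)]
```

The threshold trades false alarms against missed duplicates; the final decision stays with the branch employee and, later, the expert group, as described above.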

Lesson learned: do not take the word of the customer's managers and responsible persons that all decisions have been agreed, everyone is on board, and there are no objections. Identify all project stakeholders and try to find out the system requirements and constraints from them directly.

Case two. We'll use it however we like

Building a centralized client management system for an insurance company with many branches and agents across the country.

The goal of the project was to create a consolidated customer database for analytical applications. Data was gathered from all branches, verified and enriched, and duplicates were eliminated. A single branch has anywhere from a thousand to several million clients, and there is almost no overlap in clients between branches.

What happened

Once built, the consolidated database had to be periodically reconciled against the branch databases: differences were identified and processed, and the changes were loaded into the consolidated database. Between reconciliations, the customer base grew by several thousand records.

For this we built a dedicated reconciliation module. Its architecture was designed on the premise that it had to compare a large number of records quickly and produce a relatively small XML file with the changes to load. The XML format was the customer's choice.
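
The core comparison can be sketched as a keyed diff. In this hypothetical version, both sides are in-memory dictionaries mapping a client ID to its fields, `master` is assumed to be already filtered to the branch being reconciled (otherwise every other branch's clients would appear as removed), and the XML element and attribute names are invented for illustration; the article does not describe the actual file layout agreed with the customer.

```python
import xml.etree.ElementTree as ET


def reconcile(master: dict[str, dict], branch: dict[str, dict]) -> dict[str, list[str]]:
    """Classify client IDs into added, changed and removed relative to the master copy."""
    return {
        "added": sorted(k for k in branch if k not in master),
        "changed": sorted(k for k in branch if k in master and branch[k] != master[k]),
        "removed": sorted(k for k in master if k not in branch),
    }


def to_xml(diff: dict[str, list[str]], branch: dict[str, dict]) -> str:
    """Serialize added/changed records with their fields, plus the removed IDs."""
    root = ET.Element("changes")
    for op in ("added", "changed"):
        for key in diff[op]:
            record = ET.SubElement(root, "record", op=op, key=key)
            for field, value in branch[key].items():
                ET.SubElement(record, field).text = str(value)
    for key in diff["removed"]:
        ET.SubElement(root, "record", op="removed", key=key)
    return ET.tostring(root, encoding="unicode")


master = {"1": {"name": "Ivanov"}, "2": {"name": "Petrov"}}
branch = {"1": {"name": "Ivanov"}, "2": {"name": "Petrova"}, "3": {"name": "Sidorov"}}
diff = reconcile(master, branch)
print(diff)  # {'added': ['3'], 'changed': ['2'], 'removed': []}
print(to_xml(diff, branch))
```

This design holds both sides entirely in memory, which is fine for a delta of a few thousand records but is exactly the assumption that broke in this story.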

After the system went live, the customer reported that the reconciliation module was extremely slow and generated a huge load file for the consolidated database that they could not open at all.

What had happened? The customer's staff were responsible for the initial load of branch data into the consolidated directory. The experts found this work tedious and time-consuming, so they simply took the reconciliation module and fed it the complete data of a new branch that had never been loaded into the consolidated directory.

The reconciliation module, which under the terms of reference (TOR) was expected to find differences amounting to several thousand records, received two million records as input, none of which existed in the master directory.

After several hours of superhuman effort, the module did produce a load file containing all of the branch's data. And yes, that file was huge.

The customer had used the reconciliation module for something it was not intended for, but liked the fact that it could handle the initial load and intended to keep working this way. They only asked us to speed the module up significantly and do something about the output file so that it could be opened in a text editor.

When we objected that the reconciliation module was not intended for initial loading, the customer cheerfully produced the TOR and asked: where does it say that? We'll use it however we like!

In the end, we had to change the architecture of the reconciliation module so that it could process large data sets and produce its output in CSV format, since the customer flatly refused to give up such a convenient tool.
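
A common way to make such a comparison scale is a sorted-merge pass that streams both inputs and writes the CSV row by row, so memory use stays constant regardless of input size. This is a hedged sketch of that general technique, not a description of the module's actual new architecture: it assumes both inputs arrive sorted by the same key (for example via `ORDER BY` in the source queries), that keys compare consistently as strings, and that each record is flattened to a hypothetical `(key, payload)` pair.

```python
import csv
import io
from typing import Iterable, Iterator, TextIO

Record = tuple[str, str]  # hypothetical flattened record: (key, payload)


def merge_diff(master: Iterable[Record], branch: Iterable[Record]) -> Iterator[tuple[str, str, str]]:
    """Walk two key-sorted streams in lockstep and yield (op, key, payload)."""
    m_iter, b_iter = iter(master), iter(branch)
    m, b = next(m_iter, None), next(b_iter, None)
    while m is not None or b is not None:
        if b is None or (m is not None and m[0] < b[0]):
            yield ("removed", m[0], m[1])  # key only in master
            m = next(m_iter, None)
        elif m is None or b[0] < m[0]:
            yield ("added", b[0], b[1])  # key only in the branch
            b = next(b_iter, None)
        else:  # same key on both sides
            if m[1] != b[1]:
                yield ("changed", b[0], b[1])
            m, b = next(m_iter, None), next(b_iter, None)


def write_csv(rows: Iterable[tuple[str, str, str]], out: TextIO) -> int:
    """Write rows to `out` one at a time; return the number of data rows."""
    writer = csv.writer(out)
    writer.writerow(["op", "key", "payload"])
    count = 0
    for row in rows:
        writer.writerow(row)
        count += 1
    return count


master = [("1", "a"), ("2", "b"), ("4", "d")]
branch = [("1", "a"), ("2", "B"), ("3", "c")]
buffer = io.StringIO()
written = write_csv(merge_diff(master, branch), buffer)
print(written)  # 3
```

In a real deployment, `out` would be a file opened with `newline=""`, as the `csv` module documentation recommends, rather than an in-memory buffer.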

Lesson learned: always describe limitations in the TOR, that is, what your system is not supposed to do. Otherwise, build solutions that account for every possible usage scenario, which is much more expensive.
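
A limitation written into the TOR can also be enforced in code, so that misuse fails fast with a clear message instead of running for hours. A minimal sketch: the `ScopeError` class and the 10,000-record limit are hypothetical, loosely based on the "several thousand records" delta the TOR assumed in this case.

```python
class ScopeError(ValueError):
    """Raised when input falls outside the documented operating envelope."""


# Hypothetical limit derived from the TOR assumption of a delta of several thousand records.
MAX_DELTA = 10_000


def check_delta(added: int, changed: int, limit: int = MAX_DELTA) -> int:
    """Return the delta size, or raise ScopeError if it exceeds the documented limit."""
    delta = added + changed
    if delta > limit:
        raise ScopeError(
            f"delta of {delta} records exceeds the documented limit of {limit}; "
            "this looks like an initial load, not a reconciliation"
        )
    return delta
```

Whether to enforce such a limit or merely document it is a product decision; in this case the customer ultimately got the opposite, a module that accepts large inputs.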

Case three. Not a baby elephant but a full-grown one, and it has to fly

Building a centralized NSI management system for a financial institution.

The goal of the project was to create a centralized system for maintaining reference books and classifiers and distributing changes to dependent systems and databases, and to give external systems access to the directories through our system's web services.

Typically, a customer's directories hold from several hundred to several thousand records on average. Our previous record holder was a directory with 11 million records. This customer surprised us: their directory had over 100 million records. Loading it took more than a day, because numerous data checks ran during the initial load. That would not have been a big problem, except that the customer demanded the directory load in a few minutes.
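
A quick back-of-the-envelope calculation shows the size of the gap. Taking "more than a day" as exactly 24 hours and "a few minutes" as 5 minutes (both assumptions for illustration), the required throughput grows by a factor of at least 288:

```python
SECONDS_PER_DAY = 24 * 60 * 60
RECORDS = 100_000_000


def rate(records: int, seconds: float) -> float:
    """Records per second needed to load `records` in `seconds`."""
    return records / seconds


observed = rate(RECORDS, SECONDS_PER_DAY)  # upper bound, since the load took more than a day
target = rate(RECORDS, 5 * 60)  # "a few minutes", assumed here to be 5
print(f"observed <= {observed:,.0f} records/s")  # observed <= 1,157 records/s
print(f"target   >= {target:,.0f} records/s")  # target   >= 333,333 records/s
print(f"speed-up >= {target / observed:.0f}x")  # speed-up >= 288x
```

At over 300,000 records per second, each record gets about 3 microseconds of processing, which leaves little room for the per-record checks that slowed the initial load.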

As a result, we had to substantially change how the system works with this directory. In effect, it is now managed outside the system, and we only provide an interface for using it. We are currently developing new ways for our system to handle very large directories, and we hope the customer will like them.

Lesson learned: data volumes keep growing, and so does the rate of growth. A system must be ready for high loads even where none were originally anticipated. We keep developing our solution with current data growth trends and ever stricter processing-speed requirements in mind.

Case four. A sleight of hand with files

Building a centralized NSI management system for a large bank.

The goal of the project was to create a centralized system for maintaining reference books and classifiers and distributing changes to dependent systems and databases. What set this project apart were its very complex change propagation processes, which affected many systems.

Since I will need to refer to our own NSI management solution below, allow me a short digression.

More about the NORMA system

Our customers' tasks are similar in many respects, so to cut development costs and shorten project timelines we built our own universal platform for managing reference data and master data (Reference Data Management & Master Data Management). The system has existed for more than 10 years, and throughout that time we at LANIT have been actively developing it.

NORMA supports both centralized and distributed NSI. All data and metadata are stored with their full change history, and the system lets you view and modify the entire body of NSI as of an arbitrary date in the past or the future. Approval workflows for directory changes can be customized. The system includes a dedicated change distribution server that interacts with external systems through various interfaces and can run fairly complex integration business processes (a sort of mini BizTalk Server). Export/import packages load and unload reference data to and from databases and files in various formats. Recoding tables are maintained for external systems.
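
Viewing a directory "as of" a date, past or future, is commonly built on validity intervals. The sketch below shows the general idea only; the `Version` record shape, the half-open `[valid_from, valid_to)` interval convention and the sample branch entries are hypothetical, not NORMA's actual storage model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Version:
    key: str
    value: str
    valid_from: date
    valid_to: Optional[date]  # exclusive upper bound; None means open-ended


def as_of(history: list[Version], when: date) -> dict[str, str]:
    """Return the directory state on `when`: the value valid on that date for each key."""
    state: dict[str, str] = {}
    for v in history:
        if v.valid_from <= when and (v.valid_to is None or when < v.valid_to):
            state[v.key] = v.value
    return state


# Hypothetical sample data: one renamed entry and one scheduled for the future.
history = [
    Version("BR-01", "Omsk branch", date(2015, 1, 1), date(2020, 1, 1)),
    Version("BR-01", "Siberian branch", date(2020, 1, 1), None),
    Version("BR-02", "Tomsk branch", date(2030, 1, 1), None),
]
print(as_of(history, date(2018, 6, 1)))  # {'BR-01': 'Omsk branch'}
print(as_of(history, date(2031, 1, 1)))  # {'BR-01': 'Siberian branch', 'BR-02': 'Tomsk branch'}
```

Because nothing is overwritten, a change scheduled for a future date is simply a version whose `valid_from` lies ahead.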

NORMA includes a graphical query builder and a report designer. Besides its own directories, the system lets you view and modify, through its interface, directories stored in external databases, and use those directories in the query builder and in export/import packages.

In response to various system events, such as a change to a directory, the system can launch plug-in components written in C#, which can validate data and interact with external systems as well as with NORMA itself. Almost all system functions are available through web services.
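
The plug-in mechanism follows a familiar event-subscription pattern. NORMA's plug-ins are written in C# against its own API, which the article does not describe, so the following is only a language-neutral sketch in Python: the `EventBus` class, the `directory_changed` event name and the `tax_id` rule are all hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal registry: plug-ins subscribe to named events and run in order."""

    def __init__(self) -> None:
        self._handlers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def publish(self, event: str, payload: dict) -> None:
        # Handlers run in subscription order; an exception stops the remaining
        # handlers and propagates to the caller, which can then reject the change.
        for handler in self._handlers[event]:
            handler(payload)


def require_tax_id(record: dict) -> None:
    """Validation plug-in: reject counterparty records without a tax ID."""
    if not record.get("tax_id"):
        raise ValueError("counterparty record has no tax_id")


notified: list[str] = []
bus = EventBus()
bus.subscribe("directory_changed", require_tax_id)
# Stand-in for a plug-in that notifies an external system.
bus.subscribe("directory_changed", lambda r: notified.append(r["tax_id"]))
bus.publish("directory_changed", {"tax_id": "7700000000"})
```

Registering validators before notifiers means an invalid change is rejected before any external system hears about it.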

The system scales both vertically, by increasing the capacity of the application server and the database, and horizontally, by using a multi-node application server in which each node or group of nodes performs a separate function. Microsoft SQL Server, Oracle or PostgreSQL can be used to store the master data.

Typically, when setting up directories and change propagation processes, the customer consults our analysts on which system tool or combination of tools best suits a given task. This time, the customer said they would build the directories and processes on their own.

Some time later, one of the customer's specialists complained that data would not load into the system. As evidence, they sent us the data import package, a source file with the records being loaded, and an error message saying the data being loaded was of the wrong type.

We started investigating. We turned the package this way and that and tried different ways of presenting the source data, but could not reproduce the error. We asked the customer: maybe the import package has plug-in components attached, maybe the directory has additional constraints, maybe data also arrives from some other process? To every question the answer was the same: nothing of the sort, everything should load without problems, and it used to work before.

It turned out that this import package was only the tip of the iceberg. Briefly and greatly simplified, here is what happened. The import procedure loaded correct data from the source file into the directory, and the source file was then deleted. Our system then distributed the changes to several databases. One of them compared its own data with our changes and produced a discrepancy file, which was sent back to our system for loading. To load this file, the customer used the same import procedure as for the original source file, and it was this file, generated by the external system, that contained data of the wrong type. Naturally, analyzing the source file revealed no errors, and nobody had told us about the second file or the sprawling change propagation process.
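
One practical takeaway is to make import errors carry their context. The sketch below is hypothetical (the schema, column names and `RowTypeError` class are invented): it checks each column's type and reports which file and line failed, so an error like the one in this story could have pointed at the discrepancy file rather than the original source file.

```python
import csv
import io
from datetime import date
from typing import Callable, TextIO


class RowTypeError(ValueError):
    """A value could not be converted to the column's declared type."""


def non_empty(value: str) -> str:
    if not value:
        raise ValueError("empty value")
    return value


# Hypothetical directory schema: column name -> converter that raises on bad input.
SCHEMA: dict[str, Callable[[str], object]] = {
    "code": non_empty,
    "rate": float,
    "valid_from": date.fromisoformat,
}


def import_rows(source_name: str, stream: TextIO) -> list[dict]:
    """Convert CSV rows per SCHEMA, naming the file and line on the first failure."""
    rows = []
    # Line numbers assume one physical line per record (no embedded newlines).
    for line_no, raw in enumerate(csv.DictReader(stream), start=2):
        row = {}
        for column, convert in SCHEMA.items():
            value = raw.get(column)
            try:
                row[column] = convert(value)
            except (TypeError, ValueError) as exc:
                raise RowTypeError(
                    f"{source_name}, line {line_no}: column {column!r} "
                    f"has invalid value {value!r} ({exc})"
                ) from exc
        rows.append(row)
    return rows


print(import_rows("source.csv", io.StringIO("code,rate,valid_from\nUSD,92.5,2024-01-01\n")))
```

Passing the file name through every layer costs almost nothing and turns "wrong type" into "wrong type in this file, on this line".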

Lesson learned: always verify the information you receive, even when you are told “we have a small problem, and it's right here, I swear on my mother's life!” Analyze the problem in context.

Case five. Irreconcilable differences

Building a master data management system for a manufacturing company.

The goal of the project was to create an NSI management system for the managing company of a group with many branches, plants and design offices.

This time we never got past a few presentations. The technical staff really liked our NORMA system: it addressed all of their existing problems. Then it was time to show the system to senior management, and we got the letdown of the decade. The top manager looked, listened and said: “We all work on Apple products here. They have a certain style, and your system does not fit that style. We will not even consider it.”

Lesson learned: customers differ, and some of them you simply will not suit. Your styles just don't match.

Stories like these happen on all kinds of projects. What interesting things have happened in your projects? What lessons took you by surprise? Share in the comments.

By the way, we are looking for specialists to join our team.

Source: https://habr.com/ru/post/349710/
