HDR game analysis

I spent the last couple of weeks researching HDR images from various games.

When it comes to SDR video, you are probably familiar with RGB values in the range 0-255, where 0 is black and 255 is white.

HDR10 / Dolby Vision is different in this regard. It uses the range 0-1023, and these values represent not just a black-to-white (or color) gradient but a measure of luminance in nits, the unit of light brightness.


Unlike the values used in earlier video formats, these values are fixed and absolute. A value of 0 always means a complete absence of light (total black), a value of 1023 always represents 10,000 nits, and a value of 769 always denotes 1,000 nits.

In other words, if you send these values to a modern HDR TV, it should output exactly the amount of light the value describes.

Both HDR10 and Dolby Vision use this system. It is called PQ-based HDR, after the PQ (Perceptual Quantizer) transfer function.
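To make the absolute mapping concrete, here is a minimal Python sketch of the PQ curve as standardized in SMPTE ST 2084. It assumes full-range 10-bit code values (0-1023), which is what the numbers above use; real HDR10 video signals usually use limited range, so their code values differ somewhat. The function names are my own.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0
MAX_CODE = 1023  # full-range 10-bit assumed


def nits_to_code(nits: float) -> int:
    """Encode absolute luminance (nits) as a 10-bit PQ code value."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS  # normalize to 0..1
    yp = y ** M1
    e = ((C1 + C2 * yp) / (1 + C3 * yp)) ** M2     # PQ signal in 0..1
    return round(e * MAX_CODE)


def code_to_nits(code: int) -> float:
    """Decode a 10-bit PQ code value back to absolute luminance (nits)."""
    e = min(max(code, 0), MAX_CODE) / MAX_CODE
    ep = e ** (1 / M2)
    y = max(ep - C1, 0.0) / (C2 - C3 * ep)
    return PEAK_NITS * y ** (1 / M1)


for nits in (0, 100, 1000, 4000, 10000):
    print(nits, "nits ->", nits_to_code(nits))
```

With these constants, 1,000 nits encodes to code 769 and 10,000 nits to 1023, matching the values quoted above.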

At the moment there are no TVs that reach 10,000 nits; you are lucky if yours exceeds 1,500 nits.

When the incoming signal exceeds the hardware limits of the display, the TV itself decides how to handle it. Most manufacturers simply clip values above a level of their choosing. Others apply a smooth roll-off so that the transition into the clipped values is less noticeable.

To help with this, HDR10 and Dolby Vision content carries additional information about the image in the form of metadata. This metadata usually tells the display what the brightest value in the game (or movie) will be and what the average brightness of the content is. These values are determined by the display on which the content was mastered.

Most UHD content today is mastered on 1,000-nit or 4,000-nit displays.
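In HDR10 the static metadata typically consists of the mastering display's characteristics (SMPTE ST 2086) plus two content light levels: MaxCLL, the brightest single pixel in the content, and MaxFALL, the highest frame-average light level (strictly the brightest frame's average, not an average over all content). The sketch below computes both, assuming each frame is given as a list of linear (R, G, B) pixel values already converted to nits and taking a pixel's light level as its largest component. It is illustrative only, not a production metadata tool.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B in nits (assumed input format)


def pixel_light_level(p: Pixel) -> float:
    # A pixel's light level is taken as its brightest component.
    return max(p)


def content_light_levels(frames: Iterable[List[Pixel]]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) for a sequence of frames."""
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels = [pixel_light_level(p) for p in frame]
        if not levels:
            continue
        max_cll = max(max_cll, max(levels))                  # brightest pixel so far
        max_fall = max(max_fall, sum(levels) / len(levels))  # brightest frame average so far
    return max_cll, max_fall


frames = [
    [(50.0, 40.0, 30.0), (120.0, 110.0, 100.0)],     # dim frame
    [(900.0, 800.0, 700.0), (100.0, 100.0, 100.0)],  # frame with a highlight
]
print(content_light_levels(frames))  # (900.0, 500.0)
```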

The purpose of this metadata is to derive an SDR image (or something between SDR and HDR) from the original HDR content when it is viewed on a display that cannot reach the peak brightness of the display it was mastered on.

For example, if a movie was mastered on a 1,000-nit display and you have an OLED screen that peaks at 650 nits, the TV can use this metadata to decide how to show the information it physically cannot reproduce.

If your display matches or exceeds the peak brightness of the content, it does not need the metadata.
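The difference between clipping, a smooth roll-off and a metadata-aware mapping can be sketched in a few lines of Python. These curves are hypothetical and deliberately simple: the 75% knee, the exponential roll-off and the linear squeeze are my own illustrative choices, not any TV manufacturer's algorithm (ITU-R BT.2390 describes one published reference curve).

```python
import math


def hard_clip(nits: float, display_peak: float) -> float:
    """Clip everything above the display's peak (the simplest TV behavior)."""
    return min(nits, display_peak)


def soft_rolloff(nits: float, display_peak: float, knee_ratio: float = 0.75) -> float:
    """No metadata: pass values below a knee through, compress the rest.

    Above the knee the output approaches display_peak asymptotically,
    so highlights keep some gradation instead of flattening out.
    """
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    headroom = display_peak - knee
    return knee + headroom * (1.0 - math.exp(-(nits - knee) / headroom))


def map_with_metadata(nits: float, content_peak: float, display_peak: float,
                      knee_ratio: float = 0.75) -> float:
    """With metadata: squeeze [knee, content_peak] into [knee, display_peak].

    If the display can reach the content peak, the metadata is not needed
    and values pass through unchanged.
    """
    if display_peak >= content_peak:
        return min(nits, display_peak)
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    scale = (display_peak - knee) / (content_peak - knee)
    return min(knee + (nits - knee) * scale, display_peak)


for nits in (400, 800, 1000):
    print(nits, hard_clip(nits, 650),
          round(soft_rolloff(nits, 650), 1),
          round(map_with_metadata(nits, 1000, 650), 1))
```

With a 650-nit OLED and 1,000-nit content, the clipped version turns everything between 650 and 1,000 nits into the same flat white, while the metadata-aware version still tells 800 nits apart from 1,000.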

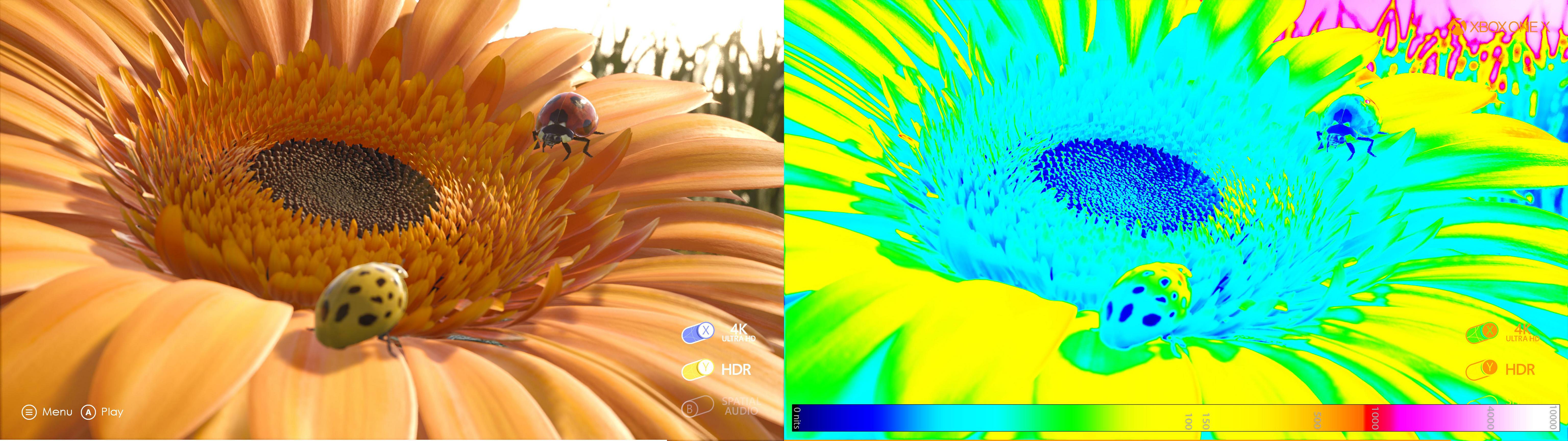

With that covered, let's look at how games are made, what options they give the user for adjusting the image, what exactly those options do, and how all of this relates to what HDR looks like.

Video games have a big advantage over movies: the picture is generated in real time, so it can be adjusted however you like.

Because of the nature of HDR content, this is very easy to measure: all we need is a screenshot or video capture without tone mapping, and from it we can read the code values used in different parts of the image.

We can check whether the game outputs anything other than true black (or uses a cinematic grade with raised blacks), and we can see the brightest value the game tries to use for objects like the sun.
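As a rough illustration of this kind of measurement, the sketch below decodes 10-bit PQ code values back to nits and summarizes a capture. It assumes the screenshot has already been reduced to one code value per pixel (for example a luminance or max-of-RGB channel); real HDR captures store full RGB. The decoding is the SMPTE ST 2084 inverse, and the 150-nit threshold, which echoes the SDR range mentioned below, is an arbitrary choice. The capture values are made up.

```python
from typing import Dict, List

# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_code_to_nits(code: int, max_code: int = 1023) -> float:
    """Decode a full-range PQ code value to absolute luminance."""
    ep = (min(max(code, 0), max_code) / max_code) ** (1 / M2)
    return 10000.0 * (max(ep - C1, 0.0) / (C2 - C3 * ep)) ** (1 / M1)


def measure(codes: List[List[int]], highlight_nits: float = 150.0) -> Dict[str, float]:
    """Summarize a capture given as rows of 10-bit PQ code values."""
    nits = [pq_code_to_nits(c) for row in codes for c in row]
    if not nits:
        raise ValueError("empty capture")
    return {
        "min_nits": min(nits),   # is there true black?
        "max_nits": max(nits),   # brightest value the game uses
        "share_above_highlight": sum(n > highlight_nits for n in nits) / len(nits),
    }


capture = [[0, 300, 520], [769, 1023, 400]]  # made-up code values
print(measure(capture))
```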

So I examined various Xbox games (the console can take HDR screenshots) to understand what the different in-game settings do and how to use them to get the best picture on your display.

The goal of HDR is to send more information to the display so that the brightest light can be reproduced in the parts of the image where it belongs. The brightest points are usually specular highlights, explosions and the sun.

Let's look at some really good examples of HDR in games. Each of them uses slightly different settings and a different approach to tone mapping.

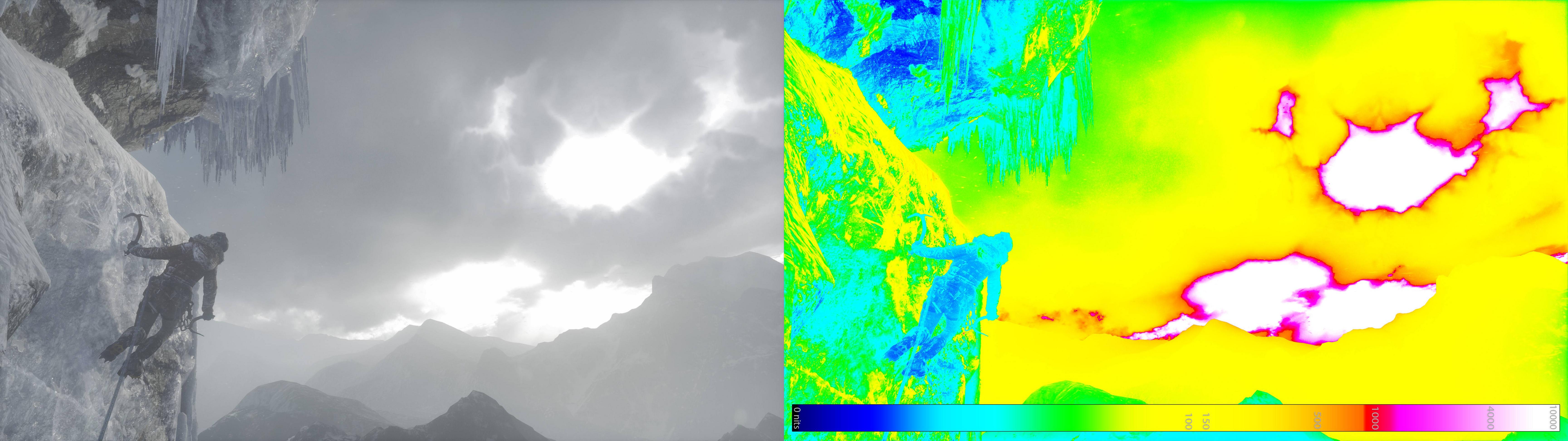

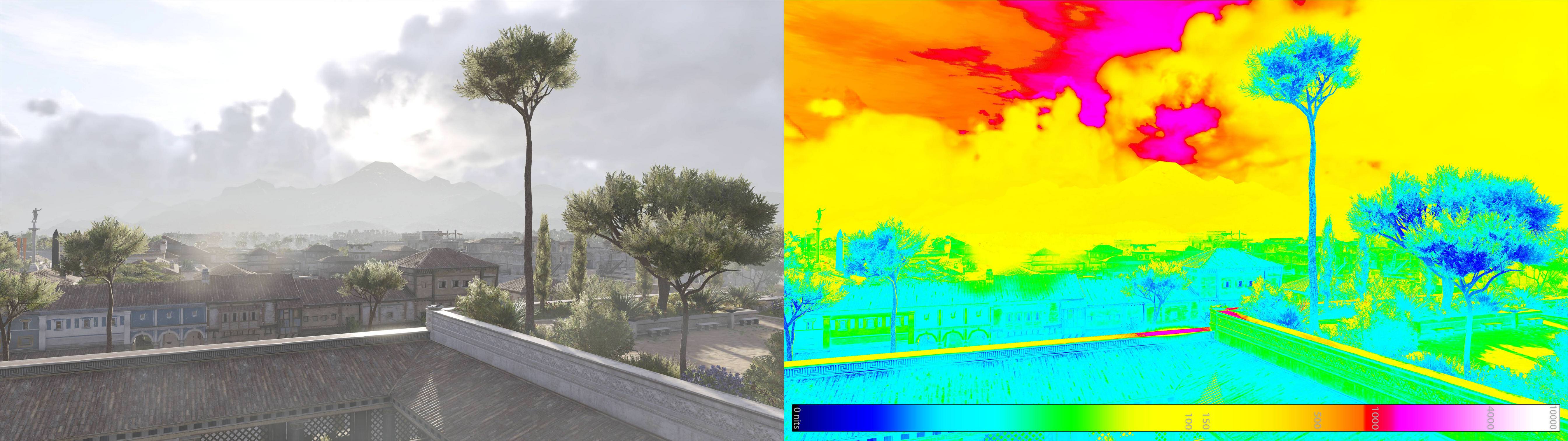

To visualize the game's output in a form that can be viewed without HDR10 (that is, in SDR), I came up with a method for creating irradiance maps.

Using this scale, we can easily see what in the picture is dark, what is bright and what is very bright.

Most of what we see is in the range of 0-150 nits; everything above that is the extra brightness HDR provides.
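A map like this is essentially a lookup from luminance to a color band. The band edges and labels below are hypothetical stand-ins, not the exact scale used in my images: values up to 150 nits get a gray ramp, and anything brighter is flagged by band.

```python
from typing import List, Tuple

# Hypothetical bands: (upper bound in nits, label). Not the exact scale above.
BANDS: List[Tuple[float, str]] = [
    (150.0, "gray"),      # roughly the SDR range, drawn as a gray ramp
    (1000.0, "yellow"),
    (4000.0, "orange"),
    (10000.0, "red"),
]


def heat_color(nits: float) -> str:
    """Return the band label for a luminance value in nits."""
    for upper, label in BANDS:
        if nits <= upper:
            return label
    return BANDS[-1][1]  # PQ tops out at 10,000 nits; clamp just in case


def gray_level(nits: float, sdr_top: float = 150.0) -> int:
    """Inside the SDR band, show luminance as an 8-bit gray level."""
    return round(255 * min(max(nits, 0.0), sdr_top) / sdr_top)


for n in (5, 120, 800, 3000, 10000):
    print(n, heat_color(n), gray_level(n))
```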

Star Wars: Battlefront 2

In fact, every HDR-capable Frostbite game I have looked at (Battlefield 1, Mass Effect) uses the same scheme.

The metadata is output as 10,000 nits, and the tone mapping itself is performed by the game using the HDR slider, where the leftmost position is 0 nits and the rightmost is 10k nits.

As we can see, the sun itself is output at 10k nits, while objects that should be completely dark stay dark. Specular highlights on the top of the weapon also fall in the 4,000-10,000 nit range.

DICE's Frostbite games are actually very interesting because you can move the HDR slider to one notch from the far left. This gives 100 or 200 nits (depending on the game), essentially compressing the game image to SDR. This makes it very easy to see what your cool new TV is capable of.

Another fun thing about DICE games is that you can move the slider to 0% and literally turn off all the lights. This shows that the lighting really is computed in real time: we have told the engine that the brightest light source should be 0.

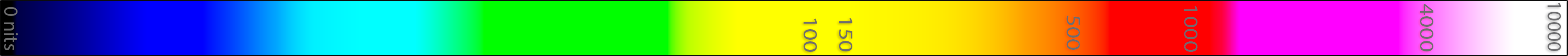

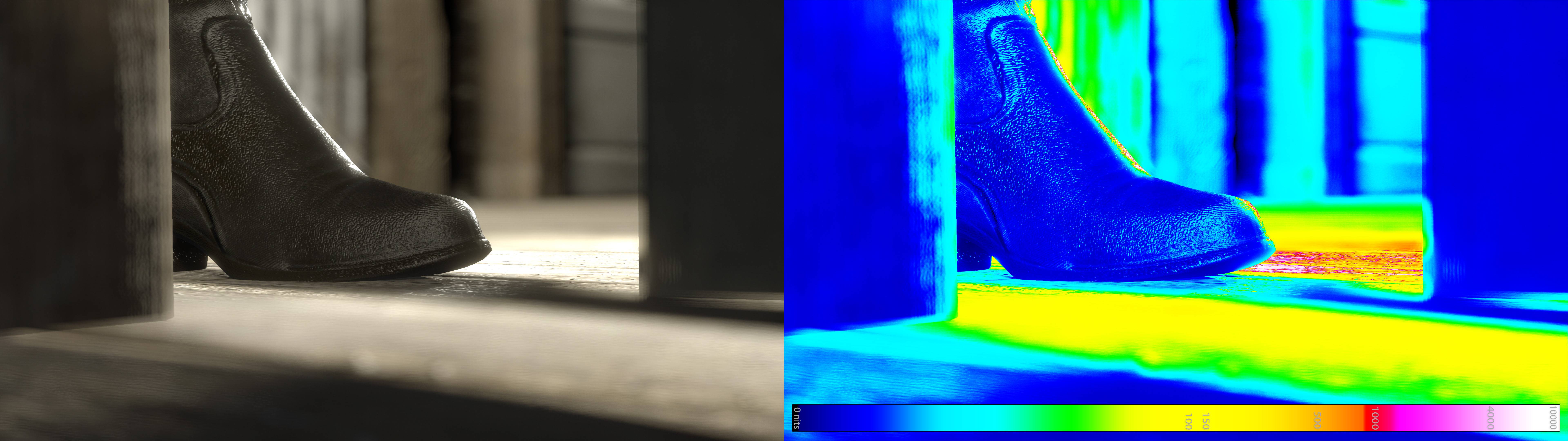
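The behavior of the DICE slider can be mimicked with a toy model: the slider picks a peak, and the game compresses its scene lighting so nothing exceeds that peak. Both the linear slider mapping and the `peak * (1 - exp(-x / peak))` curve are assumptions for illustration; I do not know Frostbite's actual curve. The sun value is roughly its real-world luminance.

```python
import math


def slider_to_peak(position: float, max_nits: float = 10000.0) -> float:
    """Map a slider position (0.0 = far left, 1.0 = far right) to a peak in nits.

    Assumes a linear slider; the real games' slider curves are unknown.
    """
    return max(0.0, min(position, 1.0)) * max_nits


def game_tonemap(scene_nits: float, peak: float) -> float:
    """Compress scene luminance so the output never exceeds `peak`.

    Nearly linear for dim values, saturating toward `peak` for bright ones.
    With peak = 0 every light source maps to 0: the whole scene goes black.
    """
    if peak <= 0.0:
        return 0.0
    return peak * (1.0 - math.exp(-scene_nits / peak))


sun = 1.6e9  # approximate luminance of the sun, in nits
for pos in (0.0, 0.01, 0.5, 1.0):
    peak = slider_to_peak(pos)
    print(f"slider {pos:.2f}: peak {peak:.0f} nits, sun -> {game_tonemap(sun, peak):.0f} nits")
```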

Rise of the Tomb Raider

This game uses a similar scheme: a brightness slider that controls the black point (move it all the way down), and a second HDR slider that controls peak brightness.

Tomb Raider is limited to 4,000 nits. As in the Frostbite games, you can either push the slider to the maximum so that the TV does the tone mapping, or follow the on-screen instructions, select your display's peak brightness and let the game do the tone mapping itself.

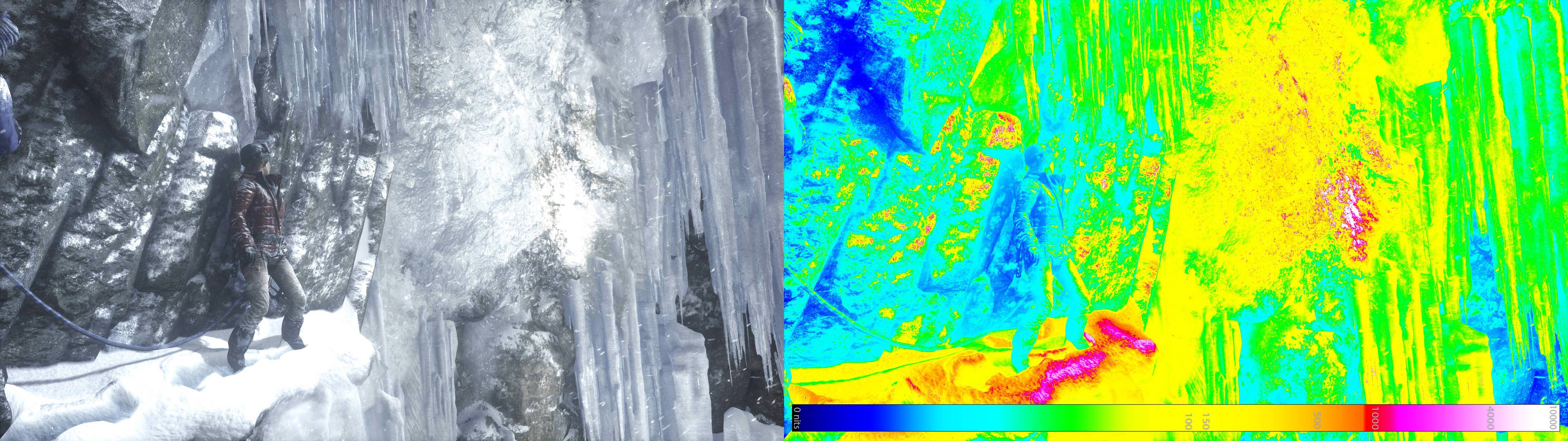

RotTR is especially interesting here because it has a lot of specular highlights, and not only where we usually expect them, such as glistening ice and sparkling snow reflecting sunlight...

...but also in low light and on less obviously “shiny” surfaces, such as this shoe, rendered in insanely high resolution.

It seems that once the output reaches 4,000 nits, anything above that jumps straight to 10k nits (which the display will clip anyway, since the metadata appears to tell it that the content peaks at 4,000 nits).

These values are not lost; they show up from time to time, which suggests this is an artistic decision or part of the game's HDR color grading.

Assassin's Creed Origins

AC: Origins is another game with well-implemented HDR. As in Tomb Raider, output is capped at 4,000 nits (shown in pink). The game has a brightness slider that should be lowered as far as the on-screen visibility test allows. In addition, the game has a Max Luminance option, conveniently labeled in nits.

It also has a “paper white” scale: besides the sliders for the darkest and brightest parts of the image, it lets you adjust one of the intermediate points, the brightness of a sheet of paper.

Ubisoft recommends the following setting for the white slider:

adjust the value so that the paper and hanging fabric in the image are almost white

However, as with the brightness slider, this lets you adapt the image to your viewing conditions: in a controlled lighting environment, set it to 80 nits; with brighter ambient light, you may prefer higher values.

Setting games up with technically correct parameters shows that HDR10 / Dolby Vision looks too dark to many consumers. You can also see that developers are still getting to grips with these technologies: with a properly calibrated picture, the HUD in AC becomes a bit too dark.

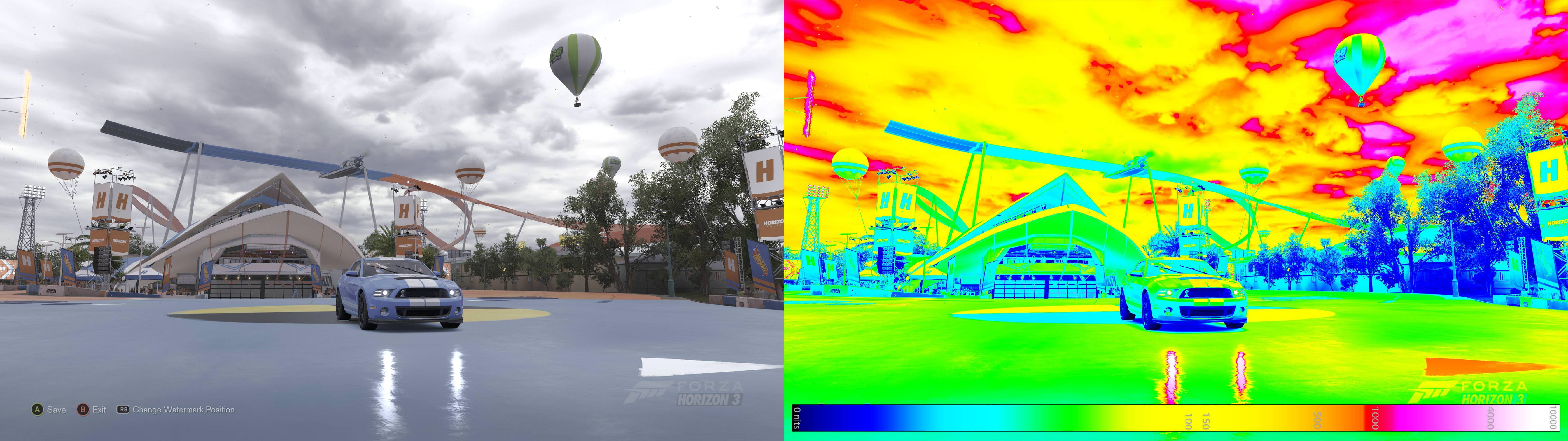
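Paper white is essentially a scale factor for SDR-referenced content such as paper, UI and HUD elements. A minimal sketch, assuming the game linearizes 8-bit SDR values with a plain 2.2 gamma (the game's real curve is unknown to me) and then scales them so that SDR white lands on the chosen paper-white level:

```python
def sdr_to_nits(code8: int, paper_white: float = 80.0, gamma: float = 2.2) -> float:
    """Place an 8-bit SDR value (e.g. a HUD pixel) at an absolute luminance.

    Assumes a simple power-law gamma; SDR white (255) maps exactly to
    `paper_white` nits, and everything darker scales with it.
    """
    linear = (max(0, min(code8, 255)) / 255) ** gamma
    return paper_white * linear


for pw in (80.0, 200.0):
    print(f"paper white {pw:.0f}: white -> {sdr_to_nits(255, pw):.1f} nits, "
          f"mid-gray -> {sdr_to_nits(128, pw):.1f} nits")
```

At 80 nits, the HUD's white tops out at 80 nits, which is one plausible reason the HUD looks dim after a technically correct calibration.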

Next, let's look at three Microsoft games that output up to the full 10,000 nits.

Forza Horizon 3

A wonderful example of the fact that HDR is not only for insanely bright spots like the sun, magic and fire.

On an overcast day the sky is still very bright, and photographers have to resort to tricks to deal with the contrast problems this causes. Forza really shines here: I can almost feel the cold, dull day as if I were seeing it with my own eyes.

As you can see, the sky reaches about 4,000 nits, the grille receives almost no light and stays dark, and the headlights hit the full 10k nits.

At night, the game makes full use of the dark end of the scale, while explosions and headlights are still lit realistically.

This is also a good example of how a very dark image that conforms to HDR10 standards will, just as in SDR, be perceived as too dark, muddy or simply black. As the irradiance map shows, all the details really are there, but the human eye cannot adapt to them right away. It needs about 10 minutes in low light for certain chemical reactions to take place in its cells. Obviously, this is a problem for many consumers, who are most likely not watching in conditions that allow this.

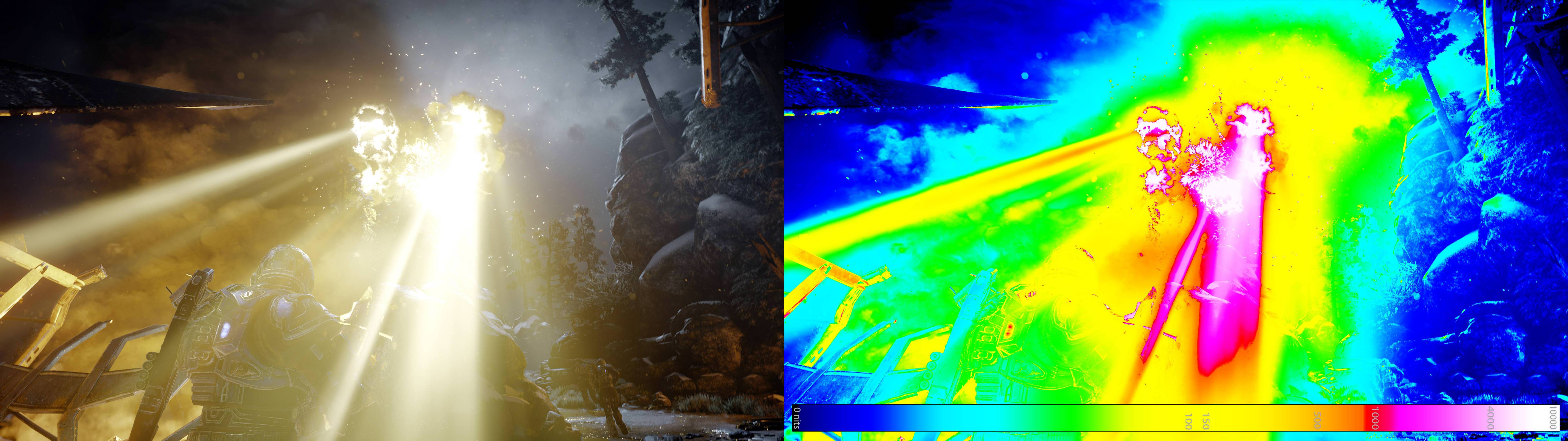

Gears of War 4

INSECTS

All three games use two sliders. The brightness slider controls black levels, but it also affects one aspect of contrast, raising the maximum output up to 10,000 nits. The second, HDR slider sets the maximum output, and the other slider then operates below that limit.

In Forza and Gears, these settings are simply called Brightness and HDR, but in INSECTS they are called HDR Contrast and HDR Brightness.

Let's now look at some other examples.

Shadow of Mordor

SoM uses a very simple approach: the game always outputs a maximum of 10k nits and leaves tone mapping to the TV. This is interesting, since we know the developers have never actually seen the game output at 10k nits, because no such displays exist.

The game has a traditional Brightness slider, which lets users adjust the black point to suit their taste and viewing conditions.

In the screenshot we see the full 10k nits in the obvious places, namely the sun and specular highlights. We also see that the side of the character in shadow is as dark as it should be.

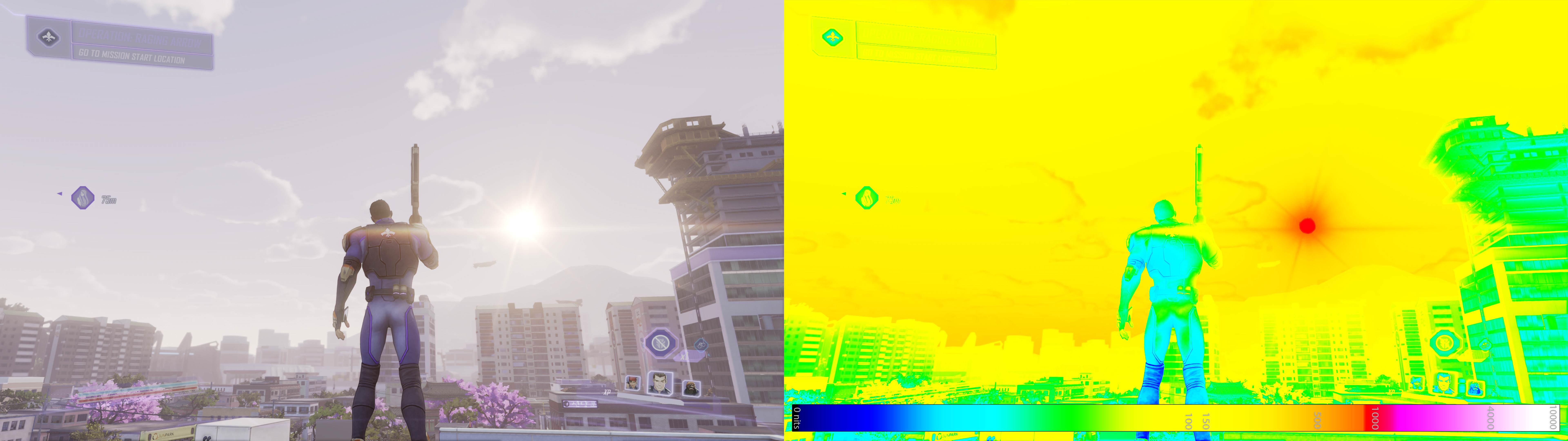

Agents of Mayhem

It uses a similar approach, except that output is capped at 1,000 nits, and the color grading / tone mapping was clearly done with this in mind, since 1,000 nits is a fairly achievable level for consumer displays. I don't think this is a coincidence: it is actually one of the best-looking HDR games.

Here again, as in other games, moving the slider to the left improves the black level.

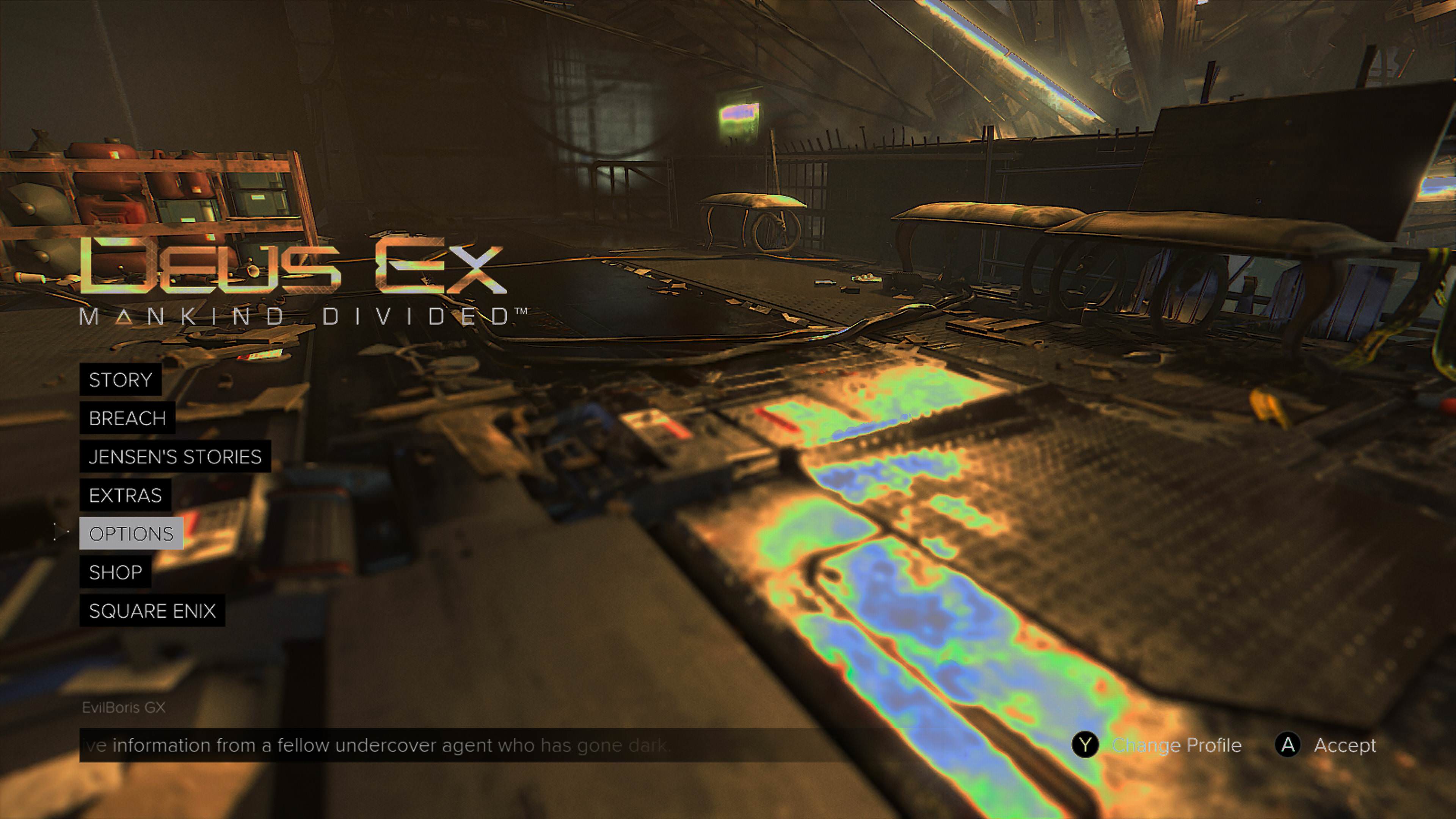

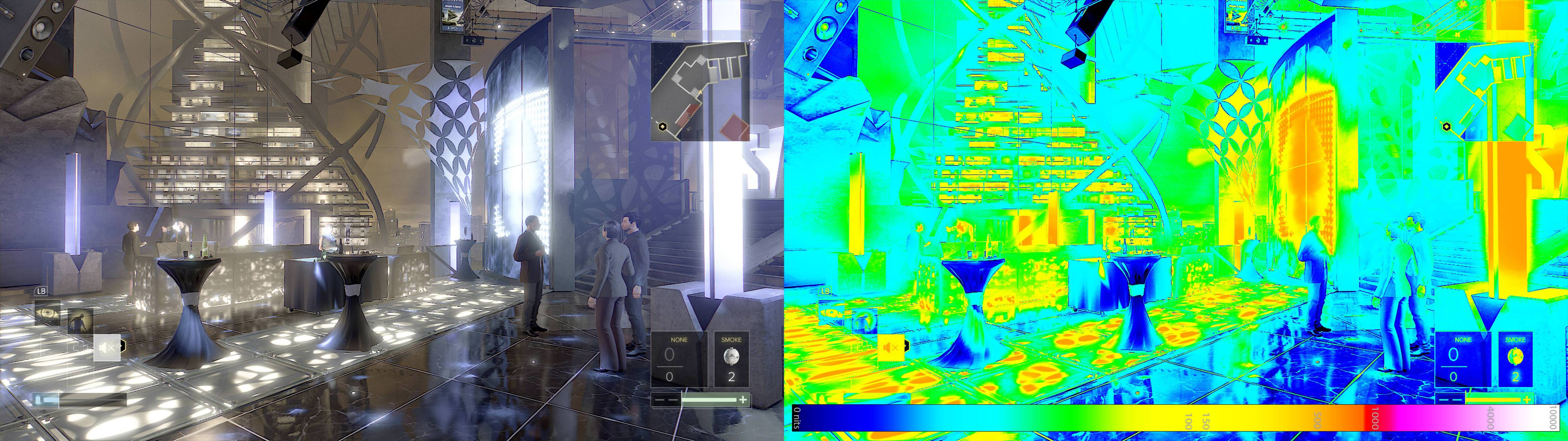

DEUS EX: Mankind Divided

Some of the raised black is probably an artistic choice, but there also appears to be a bug that completely breaks the color grading when the in-game brightness slider is lowered below 35%.

This looks like the result of some kind of incorrect curve adjustment.

Settings of 40-45% give an output of 1,000 nits without lifting the black level too much.

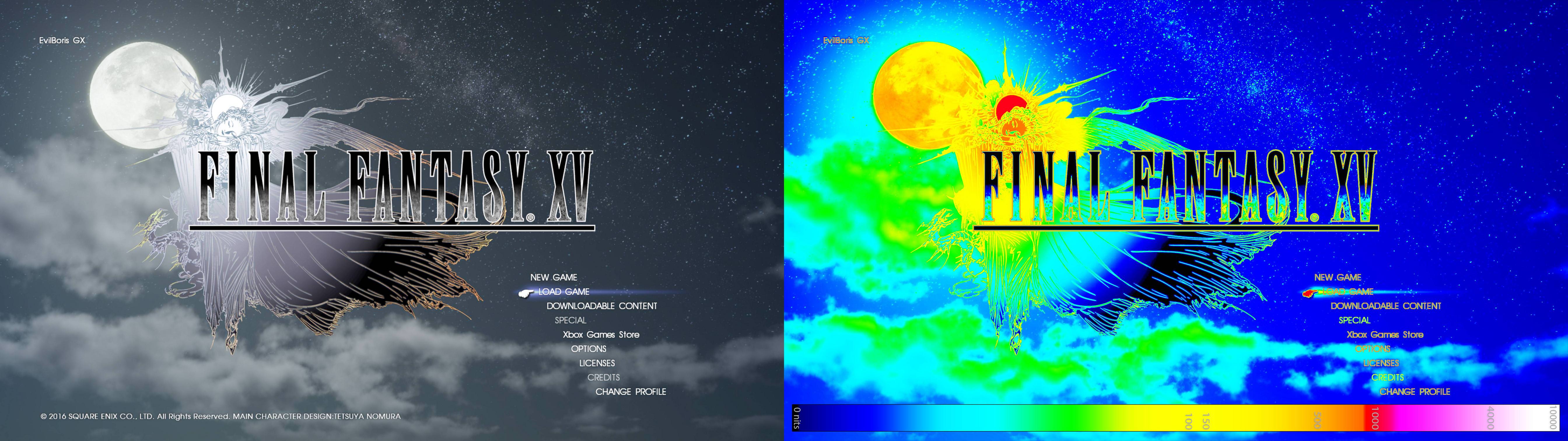

Final Fantasy XV

From "Square Enix, ugh" to "Square Enix, wow!"

A fixed maximum output of 1,000 nits and a simple brightness slider for lowering black levels. Fabulous color grading, including under different lighting conditions.

Even on the initial screen, there are 2D elements optimized for HDR.

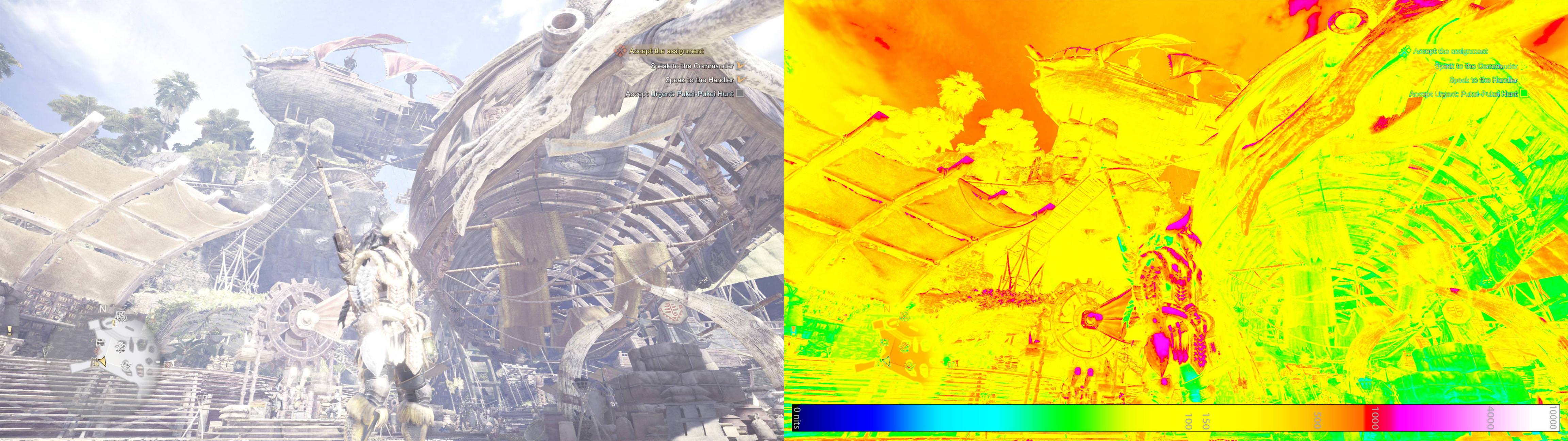

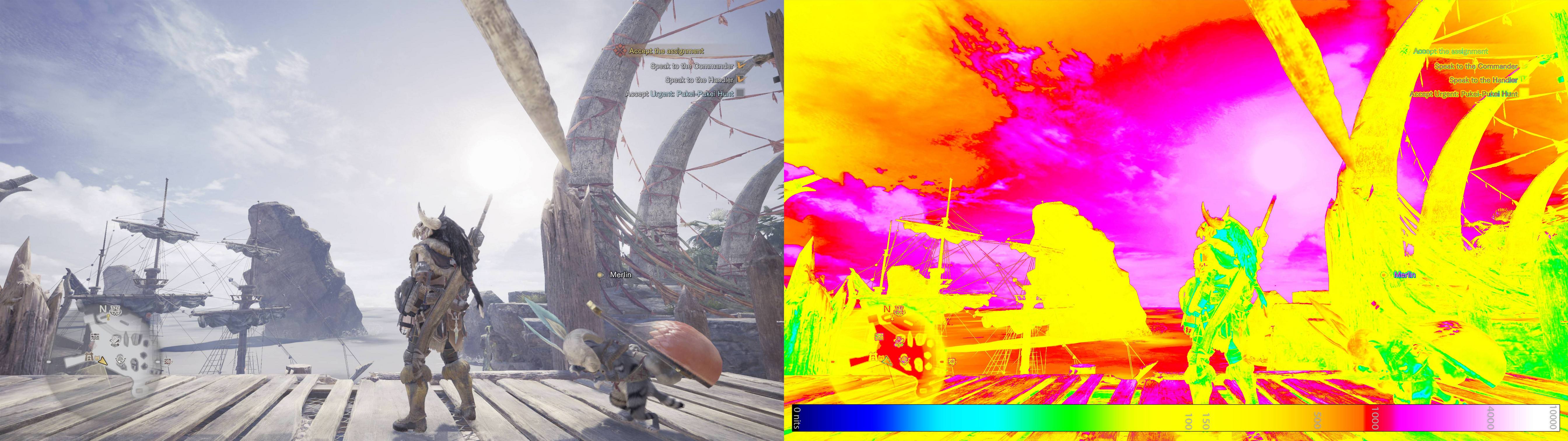

Monster Hunter World

Monster Hunter World seems to run at around 4,000 nits. However, much like Deus Ex, the game has serious black-level problems when HDR is enabled. With the default brightness setting, we get the following:

There are intermediate values and highlights, but where are all the shadows?

With a “quick and dirty” levels adjustment, we can strip out the extra HDR brightness and look at the histogram.

If we compare this with an SDR screenshot of the game taken a few seconds later

we see a significant difference between the SDR and HDR tone mapping. In HDR, contrast and black levels fall apart completely.
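The levels trick can be approximated in code: clip away everything above a chosen white point, histogram the rest, and compare the share of pixels in the darkest bins between the HDR and SDR captures. This is a hypothetical, simplified stand-in for an image editor's levels adjustment, and the nit values in the example are made up.

```python
from typing import List, Sequence


def levels_histogram(nits: Sequence[float], white: float = 100.0, bins: int = 10) -> List[int]:
    """Clip HDR highlights above `white` and histogram what remains.

    Everything above `white` lands in the top bin, so shadows and
    mid-tones can be compared with an SDR capture on the same scale.
    """
    counts = [0] * bins
    for n in nits:
        x = min(max(n, 0.0), white) / white  # 0..1 after clipping
        idx = min(int(x * bins), bins - 1)   # x == 1.0 goes to the last bin
        counts[idx] += 1
    return counts


def shadow_share(hist: List[int], shadow_bins: int = 2) -> float:
    """Fraction of pixels that fall into the darkest bins."""
    return sum(hist[:shadow_bins]) / sum(hist)


hdr_capture = [0.5, 1, 30, 60, 500, 4000]  # made-up values, nits
sdr_capture = [0.5, 1, 5, 15, 60, 100]
for name, capture in (("HDR", hdr_capture), ("SDR", sdr_capture)):
    hist = levels_histogram(capture)
    print(name, hist, f"shadows: {shadow_share(hist):.0%}")
```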

Lowering the brightness to the minimum helps a little, but not enough to make the picture fully correct. It seems this is at least partly due to some eye adaptation taking place.

Whew! A whole bunch of pictures!

We have looked at one side of how games are made, but not at the metadata the games output. I would not be surprised if some games send different metadata, but since it is static, as soon as you adjust the picture it becomes technically incorrect anyway.

Horizon Zero Dawn: Frozen Wilds

Even from this short clip, we can see how to implement HDR correctly: almost everything on screen is in the standard SDR range, but the flashes from Aloy's weapons glinting in the sun and the sparks running down her back approach 10k nits. The clouds are brightly lit, in the 1,000-4,000 nit range, while the sun itself approaches 10k.

Judging by what I saw, it exceeds 4,000 nits.

Uncharted 4

I recorded an HDR video of Uncharted 4, another game that goes past 4,000 nits and approaches 10k.

Source: https://habr.com/ru/post/349664/
