VLFs - Forgotten Enemy

Hi, Habr! I present to you the translation of the article "VLFs - The Forgotten Foe" by Monica Rathbun.

How many of you check the number of virtual log files (VLF) that are in your transaction logs?

Now, as a consultant, I see that it is often ignored by database administrators. This is not an easy task to maintain and yet many do not know how to do it. Storing this data can improve performance not only at startup, but also during insertion / update / deletion, as well as backup / restore operations. SQL Server works better with fewer virtual log files of the right size. I highly recommend that you add this to your servers.

Each transaction log consists of small segments called virtual log files (VLF). Each time a growth event occurs in new segments, virtual log files are created at the end of the transaction log file. Large amounts of VLF may slow down performance.

')

Because transactions cause the log file to grow in size, an incorrect log file size or automatic growth settings can lead to a large number of VLFs. Each event adds a VLF to the log file. The more often the events in combination occur, the greater the VLF in your transaction log.

If you increase your default log by 1 MB, you can get thousands of VLF, and increase the log by 1 GB. The MSDN is perfectly explained how the transaction logs work, which I recommend reading.

How do I know how many VLF files my log files have?

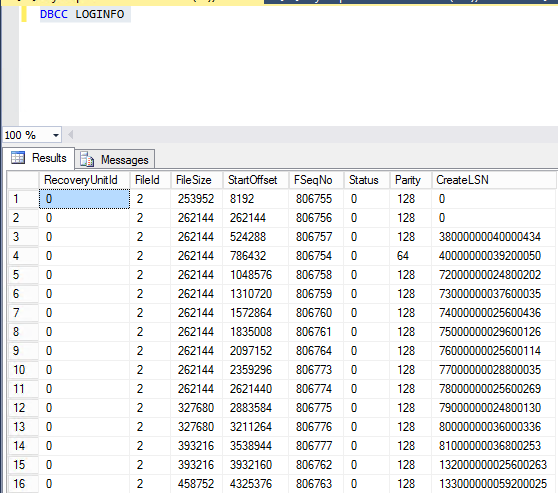

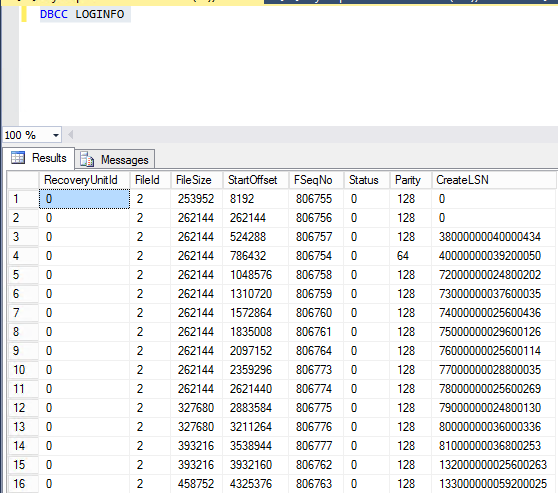

It is very easy to understand how many VLFs you have in your log file. Make sure you are in the context of the database in which you want to know it. In my case, this is TEMPDB and run the DBCC LOGINFO command.

The query will return the set of all LSNs created for this database, the COUNT of these rows is the number of VLFs that you have.

Now there are many ways in which you can get an idea of this with T-SQL, so keep this in mind. Write something that rolls through all your databases and gives you the number of records for each. There are many useful examples on the Internet.

The number of VLFs should be no more than 100, ideally, all of the above should be considered.

* New DMV ( Use SQL Server Dynamic Management Views and Functions ), which will give you an even easier way to get the VLF values sys.dm_db_log_stats (database_id).

These transaction log files should be reduced until there are only two VLFs and then increased to the current size.

Note. Increasing the log may result in a performance hit and transaction lock; be sure to do this during maintenance.

It's that easy, now take a look at your files. You may be surprised at what you find.

How many of you check the number of virtual log files (VLF) that are in your transaction logs?

Now, as a consultant, I see that it is often ignored by database administrators. This is not an easy task to maintain and yet many do not know how to do it. Storing this data can improve performance not only at startup, but also during insertion / update / deletion, as well as backup / restore operations. SQL Server works better with fewer virtual log files of the right size. I highly recommend that you add this to your servers.

What is a VLF?

Each transaction log consists of small segments called virtual log files (VLF). Each time a growth event occurs in new segments, virtual log files are created at the end of the transaction log file. Large amounts of VLF may slow down performance.

')

What is the reason for the growth of VLF?

Because transactions cause the log file to grow in size, an incorrect log file size or automatic growth settings can lead to a large number of VLFs. Each event adds a VLF to the log file. The more often the events in combination occur, the greater the VLF in your transaction log.

Example

If you increase your default log by 1 MB, you can get thousands of VLF, and increase the log by 1 GB. The MSDN is perfectly explained how the transaction logs work, which I recommend reading.

How do I know how many VLF files my log files have?

It is very easy to understand how many VLFs you have in your log file. Make sure you are in the context of the database in which you want to know it. In my case, this is TEMPDB and run the DBCC LOGINFO command.

USE tempdb DBCC LOGINFO The query will return the set of all LSNs created for this database, the COUNT of these rows is the number of VLFs that you have.

Now there are many ways in which you can get an idea of this with T-SQL, so keep this in mind. Write something that rolls through all your databases and gives you the number of records for each. There are many useful examples on the Internet.

The number of VLFs should be no more than 100, ideally, all of the above should be considered.

* New DMV ( Use SQL Server Dynamic Management Views and Functions ), which will give you an even easier way to get the VLF values sys.dm_db_log_stats (database_id).

SELECT name AS 'Database Name', total_vlf_count AS 'VLF count' FROM sys.databases AS s CROSS APPLY sys.dm_db_log_stats(s.database_id) WHERE total_vlf_count > 100; How to fix it?

These transaction log files should be reduced until there are only two VLFs and then increased to the current size.

- Run Shrink using DBCC SHRINKFILE

SHRINKFILE (N'Log_Logical_Name_Here', 0, TRUNCATEONLY); - Restore your journal with an increment that makes sense to your environment. However, if your file exceeds 8 GB, it is recommended to increase the block size to 8000 MB. The value of your increment should automatically be set to a lower value. There is no set rule for what these values should be, it may take a lot of trial and error to figure out what is best for your environment.

USE[master] GO ALTER DATABASE[tempdb] MODIFY FILE(NAME = N 'tempdev', SIZE = 8192000 KB) GO

Note. Increasing the log may result in a performance hit and transaction lock; be sure to do this during maintenance.

It's that easy, now take a look at your files. You may be surprised at what you find.

Source: https://habr.com/ru/post/349656/

All Articles