Conduit: a lightweight service mesh for Kubernetes

At the end of last year, Buoyant, already well known for Linkerd, one of the most popular solutions in the service mesh category (literally, a "mesh for services"), announced its second brainchild, Conduit. It may seem surprising that the new product is yet another open-source service mesh, but there are reasons for that.

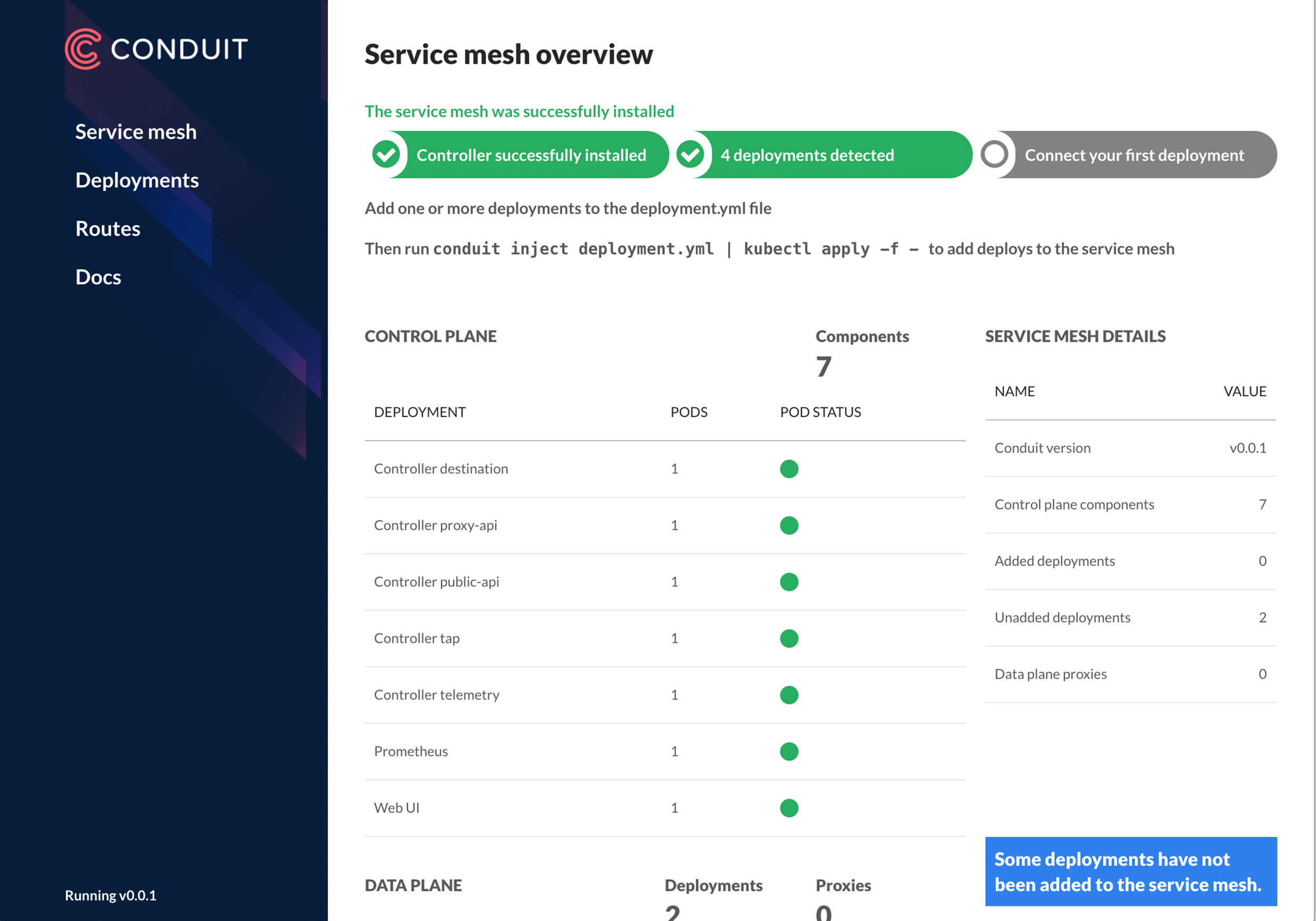

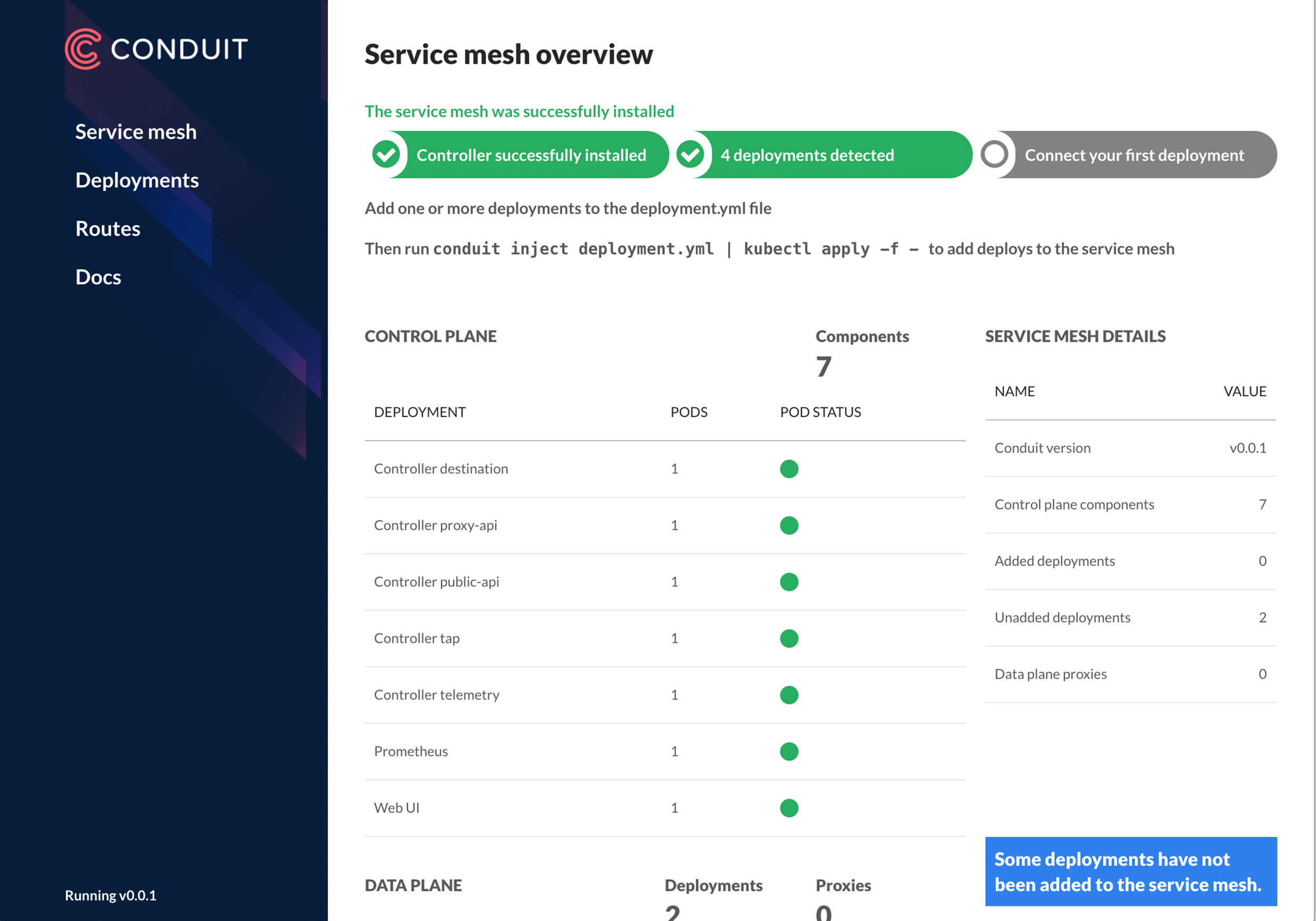

Why a new service mesh?

Clearly, Buoyant, which calls Linkerd "the most widely used production service mesh in the world," had good reasons to create a new product with a similar purpose (and therefore one that partly competes with it). The answer is simple: the company saw great potential for a service mesh in a specific niche:

"We found that there are types of installations in which Linkerd's resource consumption turns out to be unreasonably high. Linkerd is built on widely deployed, production-tested components such as Finagle, Netty, Scala, and the JVM. They allow it to scale up to huge workloads if given enough CPU and RAM, but they are not designed for the opposite: scaling down to resource-constrained environments, in particular Kubernetes installations based on sidecar containers."

The same can be said of Linkerd's main competitor, Istio, created by industry giants ... and likewise aimed at really large microservice installations.

Thus, the answer to the question of Conduit's purpose is: a service mesh for small (resource-constrained) microservice environments based on Kubernetes, and only Kubernetes. According to the authors, the arrival of this new product, which took about six months to build, changes practically nothing for Linkerd, because Conduit "is not focused on the wide variety of platforms and integrations supported by Linkerd", among which they list ECS, Consul, Mesos, ZooKeeper, Nomad, Rancher, and various complex environments. So what is Conduit good for?

Conduit: architecture and features

The main features of the product, as highlighted by its authors:

- lightweight and fast;

- end-to-end visibility (the ability to see the behavior of all components of the application without making changes to the code);

- security out of the box (TLS enabled by default);

- focus on Kubernetes.

How is this achieved? Conduit consists of two components: a data plane and a control plane.

Conduit's overall architecture (taken from Abhishek Tiwari's blog)

The data plane handles requests to services directly and manages the traffic this requires. It is implemented as a set of lightweight proxies deployed as sidecar containers (i.e., "additional" containers that live in the same pod alongside the main containers implementing the service itself), one for each service instance. To add a service to Conduit, its pods must be redeployed in Kubernetes so that each of them gets a Conduit data plane proxy.

Result of deploying the Conduit data plane
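To make the sidecar idea more concrete, here is a minimal sketch of what adding a proxy container to a Deployment's pod template amounts to. It is not Conduit's own injection logic: the function `inject_sidecar`, the container name `example-mesh-proxy`, and the image names are hypothetical placeholders, and the manifest is represented as a plain dictionary.

```python
import copy

# Hypothetical sidecar definition: the name and image are placeholders
# for illustration, not values taken from Conduit.
PROXY_SIDECAR = {
    "name": "example-mesh-proxy",
    "image": "example.invalid/mesh-proxy:latest",
}


def inject_sidecar(deployment, sidecar=PROXY_SIDECAR):
    """Return a copy of a Deployment manifest with the sidecar in its pod template."""
    patched = copy.deepcopy(deployment)
    containers = patched["spec"]["template"]["spec"].setdefault("containers", [])
    # Do not add the sidecar twice if the manifest was already patched.
    if all(c.get("name") != sidecar["name"] for c in containers):
        containers.append(copy.deepcopy(sidecar))
    return patched


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "template": {
            "spec": {
                "containers": [{"name": "web", "image": "example.invalid/web:1.0"}]
            }
        }
    },
}

patched = inject_sidecar(deployment)
print([c["name"] for c in patched["spec"]["template"]["spec"]["containers"]])
```

Because the original manifest is left untouched and the patched copy is what gets applied, every new pod of the Deployment starts with the proxy next to the application container.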

The documentation promises that Conduit "supports most applications without the need for configuration on your part," which relies on automatic detection of the protocol used on each connection. In some cases, however, this detection is not fully automatic: for example, for WebSockets without HTTPS, for HTTP proxying (using HTTP CONNECT), and for connections to MySQL and SMTP without TLS. Other limitations: applications that use gRPC via grpc-go must use a library version no lower than 1.3, and Conduit does not support external DNS queries (i.e., proxying such requests to third-party APIs).

The data plane provides communication for pods and implements supporting mechanisms such as request retries and timeouts, encryption (TLS), and policies for accepting or rejecting requests.
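The automatic protocol detection mentioned above can be pictured as inspecting the first bytes a client sends on a new connection. The sketch below is an assumption about how such detection might work in general, not Conduit's actual implementation; `detect_protocol` and its return values are hypothetical names. The HTTP/2 connection preface is the one defined by the HTTP/2 specification.

```python
# Client connection preface defined by the HTTP/2 specification.
HTTP2_PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

# Standard HTTP/1.x request methods.
HTTP1_METHODS = (
    b"GET", b"HEAD", b"POST", b"PUT", b"DELETE",
    b"CONNECT", b"OPTIONS", b"TRACE", b"PATCH",
)


def detect_protocol(first_bytes: bytes) -> str:
    """Guess the protocol from the first bytes a client sends on a connection."""
    if first_bytes.startswith(HTTP2_PREFACE):
        return "http2"
    # An HTTP/1.x request line starts with a method token followed by a space.
    for method in HTTP1_METHODS:
        if first_bytes.startswith(method + b" "):
            return "http1"
    # Anything else is treated as an opaque TCP stream.
    return "tcp"


print(detect_protocol(b"GET /api HTTP/1.1\r\nHost: web\r\n\r\n"))
print(detect_protocol(HTTP2_PREFACE))
print(detect_protocol(b"\x16\x03\x01"))
```

A scheme like this only works when the client speaks first. In MySQL and SMTP the server sends the first bytes, so a proxy waiting for client data has nothing to inspect, which is one plausible reason such connections need explicit handling.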

An important feature of this component is that, for the sake of high performance, it is written in Rust. To build it, the authors invested in the underlying open-source technologies: Tokio (an event-driven I/O platform for building asynchronous network applications) and Tower (succinctly described as fn(Request) → Future<Response>). This approach allowed the authors to achieve minimal latency ("sub-millisecond p99") and memory consumption ("10mb RSS").

The control plane is the second component of Conduit; it controls the behavior of the data plane proxies. It consists of a set of services, this time written in Go, that run in a separate Kubernetes namespace. Their functions include aggregating telemetry and providing APIs that allow both reading the collected metrics and changing the behavior of the data plane. The control plane APIs are used by both Conduit interfaces (the CLI and the web UI) and can also be used by third-party tools (for example, CI/CD systems).
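The fn(Request) → Future<Response> description of Tower quoted earlier means that a service is just an asynchronous function, and that mechanisms such as timeouts and retries can be added by wrapping one such function in another. The following is a rough Python analogy of that model using asyncio, not Tower's Rust API; `with_timeout`, `with_retries`, and `make_flaky_backend` are hypothetical names introduced for illustration.

```python
import asyncio
from typing import Awaitable, Callable

Request = str
Response = str
# A service is an async function from a request to a response.
Service = Callable[[Request], Awaitable[Response]]


def with_timeout(inner: Service, seconds: float) -> Service:
    """Wrap a service so that each call fails if it takes longer than `seconds`."""
    async def call(request: Request) -> Response:
        return await asyncio.wait_for(inner(request), timeout=seconds)
    return call


def with_retries(inner: Service, attempts: int) -> Service:
    """Wrap a service so that failed calls are tried up to `attempts` times in total."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    async def call(request: Request) -> Response:
        last_error = None
        for _ in range(attempts):
            try:
                return await inner(request)
            except Exception as error:
                last_error = error
        raise last_error
    return call


def make_flaky_backend(failures: int) -> Service:
    """Hypothetical backend that fails `failures` times before answering."""
    state = {"remaining": failures}

    async def call(request: Request) -> Response:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ConnectionError("backend unavailable")
        return f"ok: {request}"
    return call


# Layers compose like ordinary functions: retries around a per-call timeout.
service = with_retries(
    with_timeout(make_flaky_backend(failures=2), seconds=1.0), attempts=3
)
result = asyncio.run(service("GET /vote"))
print(result)
```

The caller sees a single function with the same shape as the backend, regardless of how many layers are stacked on top of it.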

General view of the Conduit web interface

Details of Conduit's internals and its components can be found in the developer documentation.

Integration with K8s and status

Conduit was designed from the start for use in existing Kubernetes installations. In particular, the conduit console utility assumes that kubectl is used throughout (for example, conduit install and conduit inject generate configurations that should be piped to kubectl apply -f). Conduit chose Deployments as its basic unit (they, rather than Services, are shown in the web UI) to avoid unnecessary complexity in traffic routing (individual pods can belong to an arbitrary number of Services).

Instructions for getting started with Conduit (including a Minikube option and a sample installation of the demo application) are available in the project documentation. The statistics for an application deployed with Conduit look like this:

    conduit stat deployments
    NAME              REQUEST_RATE  SUCCESS_RATE  P50_LATENCY  P99_LATENCY
    emojivoto/emoji   2.0rps        100.00%       0ms          0ms
    emojivoto/voting  0.6rps        66.67%        0ms          0ms
    emojivoto/web     2.0rps        95.00%        0ms          0ms

Conduit is currently in alpha. At present (the latest version is v0.2.0, dated February 1), all TCP traffic is proxied, and high-level metrics (success rate and latency; see the example above) are displayed for all HTTP, HTTP/2, and gRPC traffic. The project's roadmap to production readiness lists the following high-level goals:

- support for all kinds of inter-service communication (not only HTTP);

- inter-service authentication and confidentiality provided by default;

- an extension of the Kubernetes API to support a variety of real-world operational policies;

- the Conduit controller itself being a microservice that is extensible with plugins for environment-specific policies.
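Returning briefly to the conduit stat deployments output shown earlier in this section: since it is a plain whitespace-separated table, it is easy to post-process in scripts, for example in a CI/CD step. The sketch below parses the sample from this article and lists deployments below a chosen success rate. The helper names and the threshold are hypothetical, and the exact column layout is assumed from the sample and may differ between Conduit versions.

```python
# Sample output copied from the article, without the command line itself.
STAT_OUTPUT = """\
NAME              REQUEST_RATE  SUCCESS_RATE  P50_LATENCY  P99_LATENCY
emojivoto/emoji   2.0rps        100.00%       0ms          0ms
emojivoto/voting  0.6rps        66.67%        0ms          0ms
emojivoto/web     2.0rps        95.00%        0ms          0ms
"""


def parse_stat(output):
    """Parse whitespace-separated stat output into a list of dicts keyed by column."""
    lines = [line.split() for line in output.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def below_success_rate(records, threshold_percent):
    """Return names of deployments whose SUCCESS_RATE is below the threshold."""
    return [
        record["NAME"]
        for record in records
        if float(record["SUCCESS_RATE"].rstrip("%")) < threshold_percent
    ]


records = parse_stat(STAT_OUTPUT)
print(below_success_rate(records, 99.0))
```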

In the 2.5 months of its existence, the project has gathered more than a thousand stars on GitHub and 19 contributors. The source code is distributed under the Apache License v2.0 (some of the components used are under the MIT License).

Buoyant plans to work actively over the coming months on preparing Conduit for production use, and to provide commercial support.

P.S.

Read also in our blog:

- "What is a service mesh and why do I need it [for a cloud microservice application]?" (translation of an article from Buoyant, published on the occasion of the Linkerd 1.0 release);

- "Rook - a "self-service" data store for Kubernetes";

- "CoreDNS - DNS server for the cloud native world and Service Discovery for Kubernetes";

- "Container Networking Interface (CNI) - network interface and standard for Linux containers";

- "Infrastructure with Kubernetes as an affordable service".

Source: https://habr.com/ru/post/349496/
