TOP-10. A review of the best publicly available talks. Heisenbug 2017 Moscow

We follow the usual scheme: I watch 10 talks in a row for you and write a brief description of each, so that you can skip the ones that don't interest you. I also collect links to slides and descriptions from the conference sites. The results are sorted in order of increasing rating, so the coolest talk is at the bottom. The ratings are not YouTube likes but come from our own evaluation system, which is better than likes.

Previous parts: JBreak 2017, JPoint 2017 (both conferences were about Java).

This time the subject of the review is Heisenbug 2017 Moscow, a well-known conference for testers (as well as programmers and team managers, as the site's home page says).

This post contains an overwhelming number of pictures and links to YouTube. Watch your traffic!

Disclaimer: All descriptions are my personal opinion. Everything written here is the fruit of my sick imagination and not distorted quotes from the speakers (this warning is here so that the speakers won't beat me up). If anyone is accidentally offended, write me a private message and we'll sort it out. But on the whole, let's think of it this way: if BadComedian asked the Cinema Foundation every time what he may or may not say, would he have made even one video?

10. Selenide Puzzlers

Speakers: Alexey Vinogradov, Andrey Solntsev; rating: 4.31 ± 0.14. Link to the presentation.

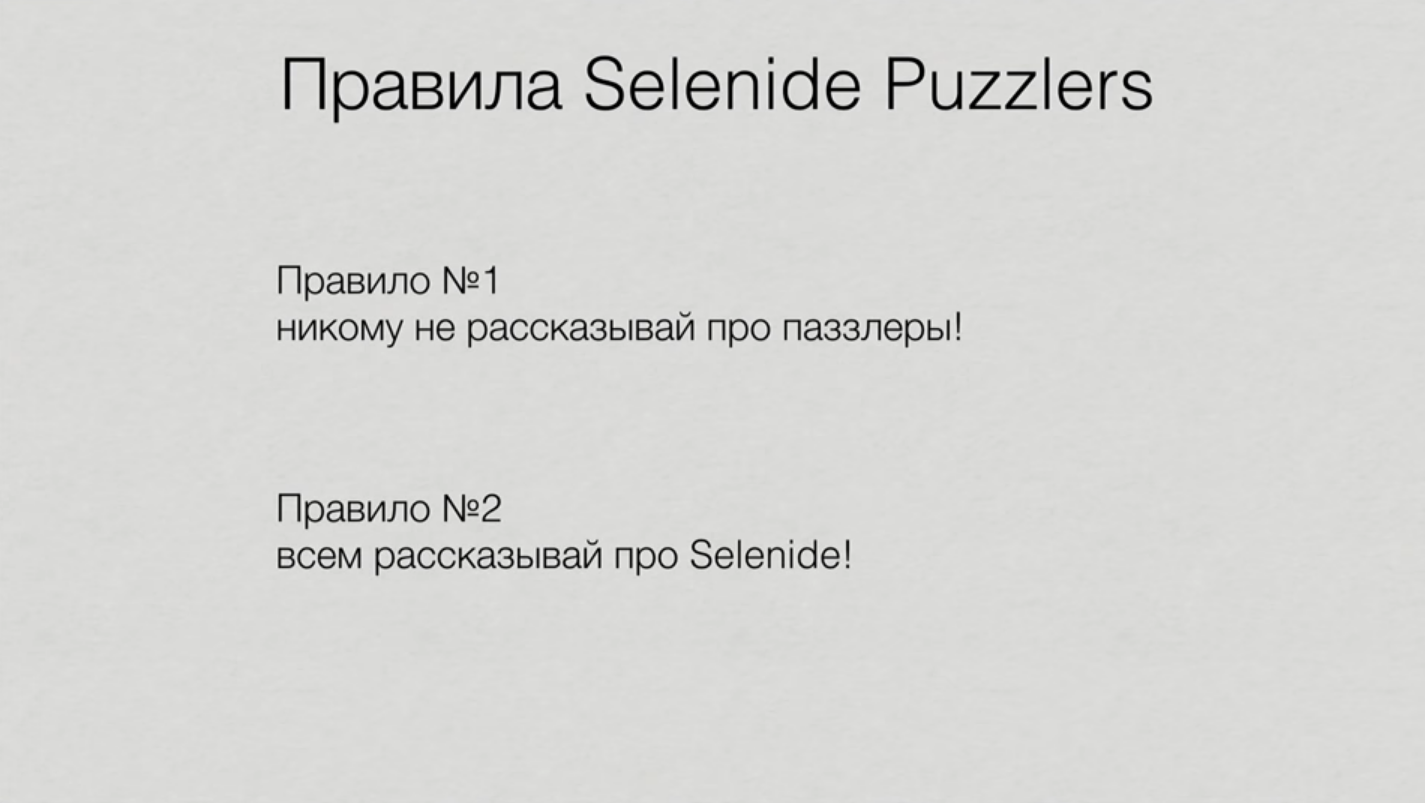

Puzzlers is a quiz-style talk in which every viewer becomes a direct participant in the show. The presenters come up with half a dozen interesting tasks, almost all taken from real development practice. For each task they show a list of possible answers and give convincing arguments in favor of each.

The theme of these puzzlers is the Selenide UI testing framework. The questions are accessible to beginners with superficial knowledge of the framework, but even professionals will break their teeth on some of them.

By the way, Andrey Solntsev is the creator of Selenide, and Alexey Vinogradov is the very same Vinogradov from Radio QA, which is a treat in itself.

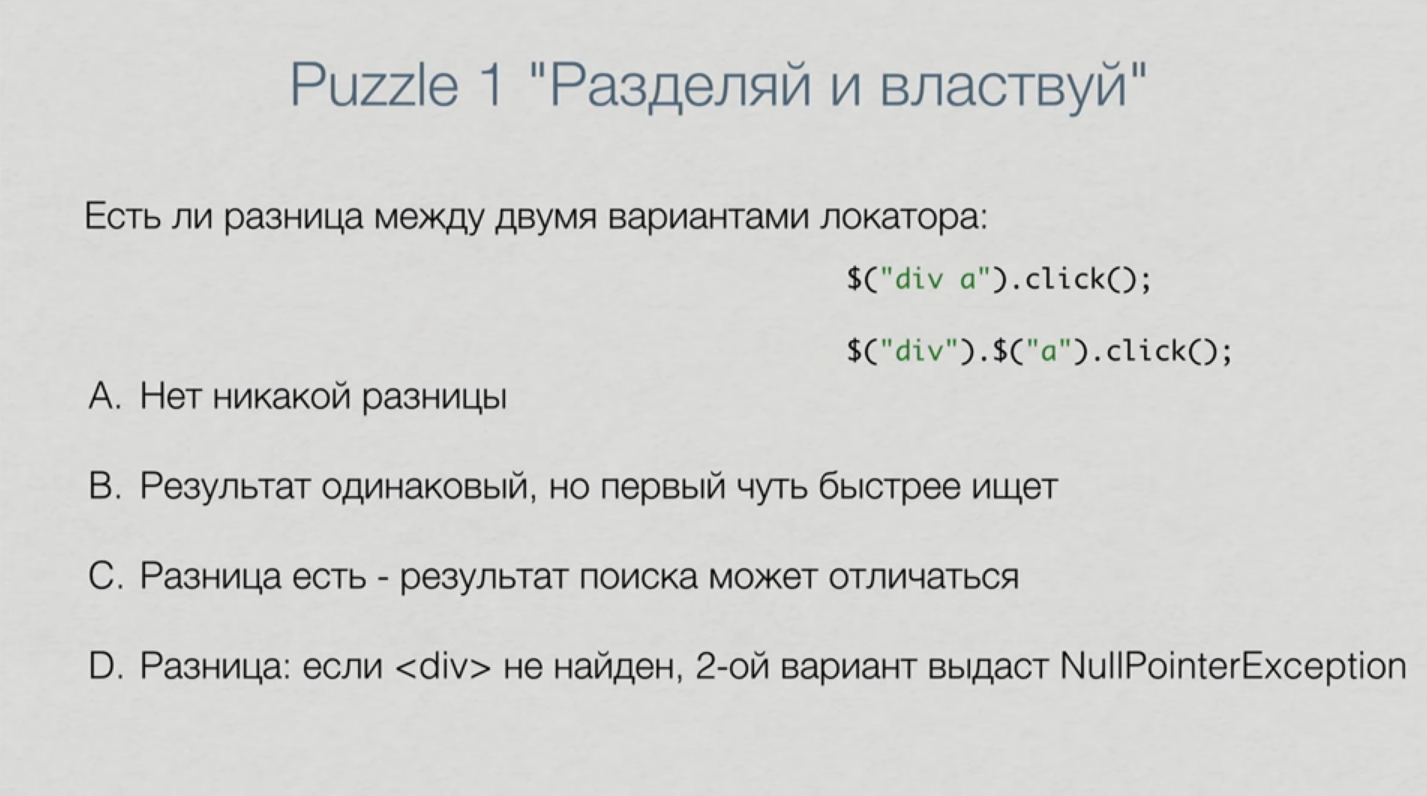

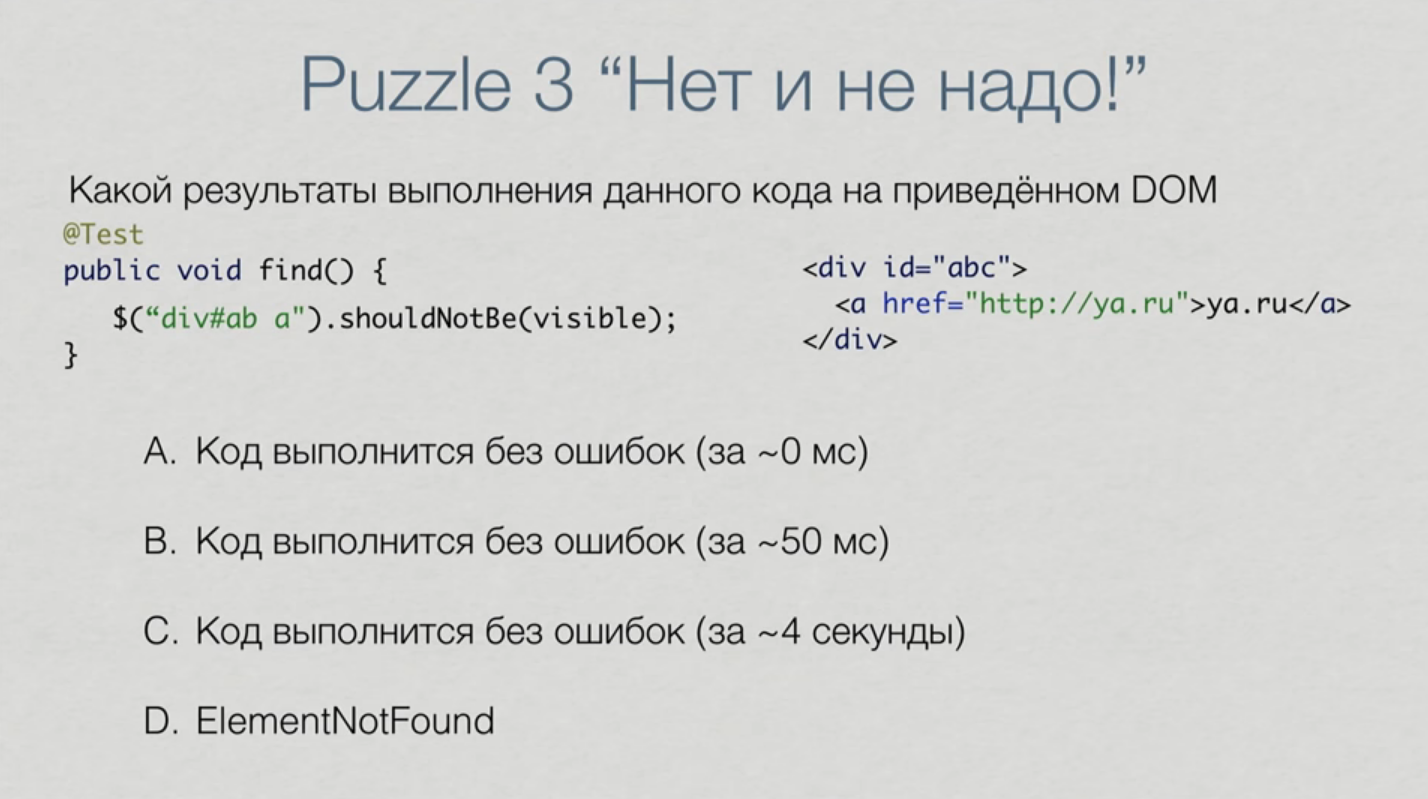

I won't spoil the answers to the puzzlers here, but I will show a couple of the tasks.

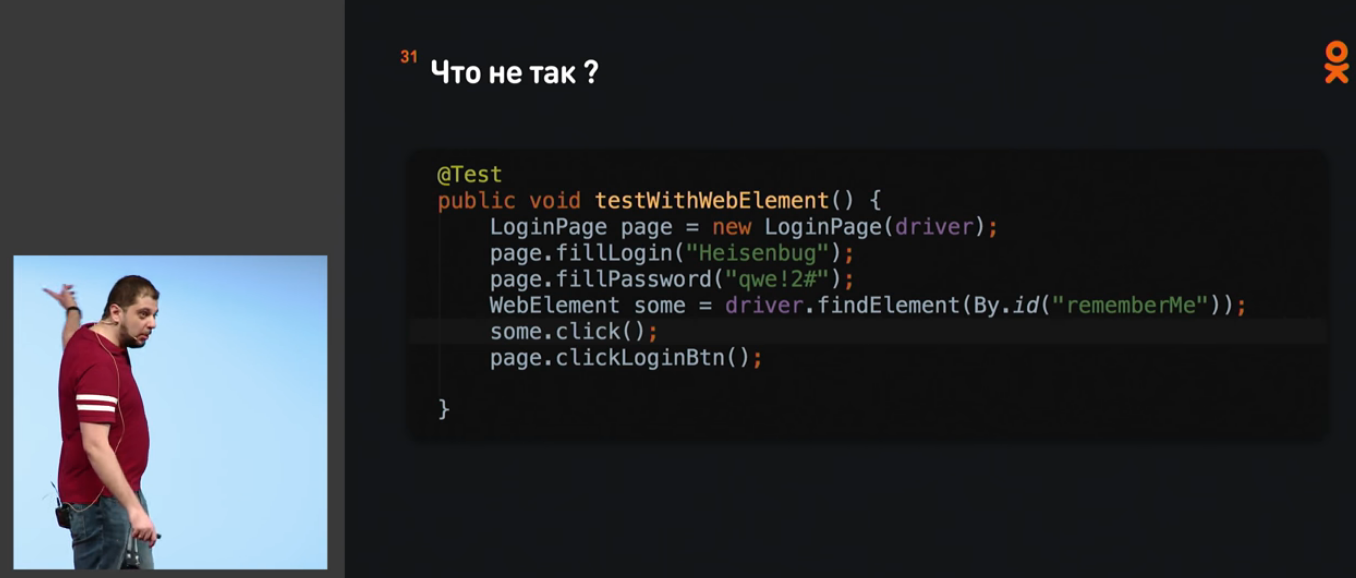

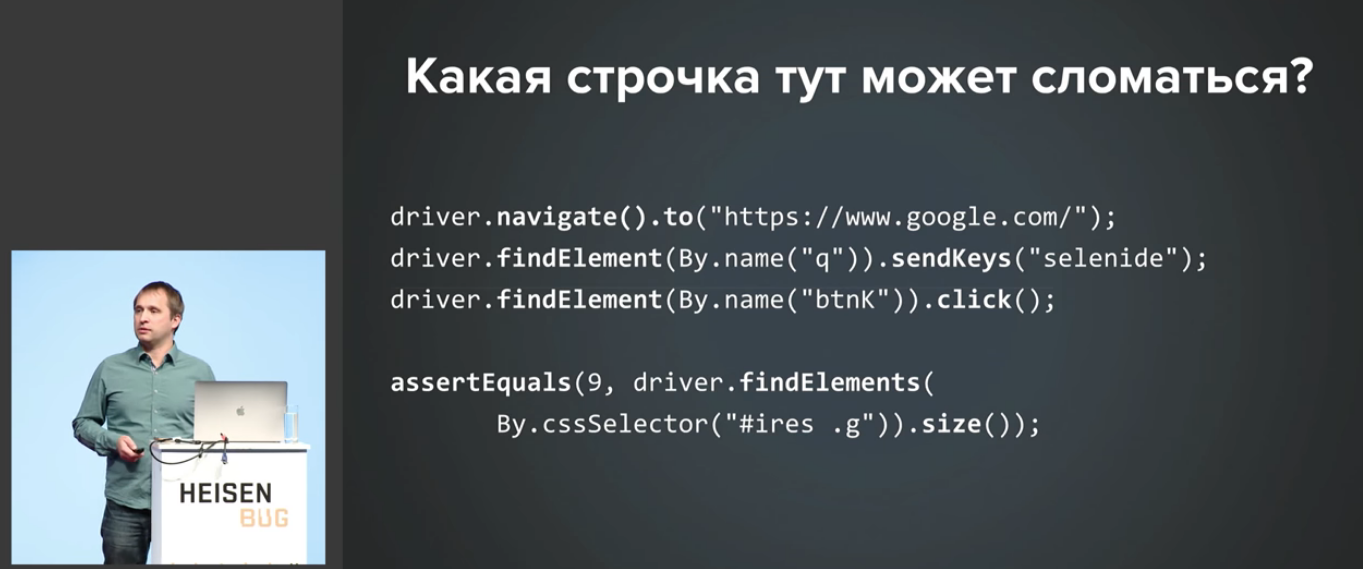

The very first puzzler is quite simple (once you have seen the answer):

But here things are not so obvious:

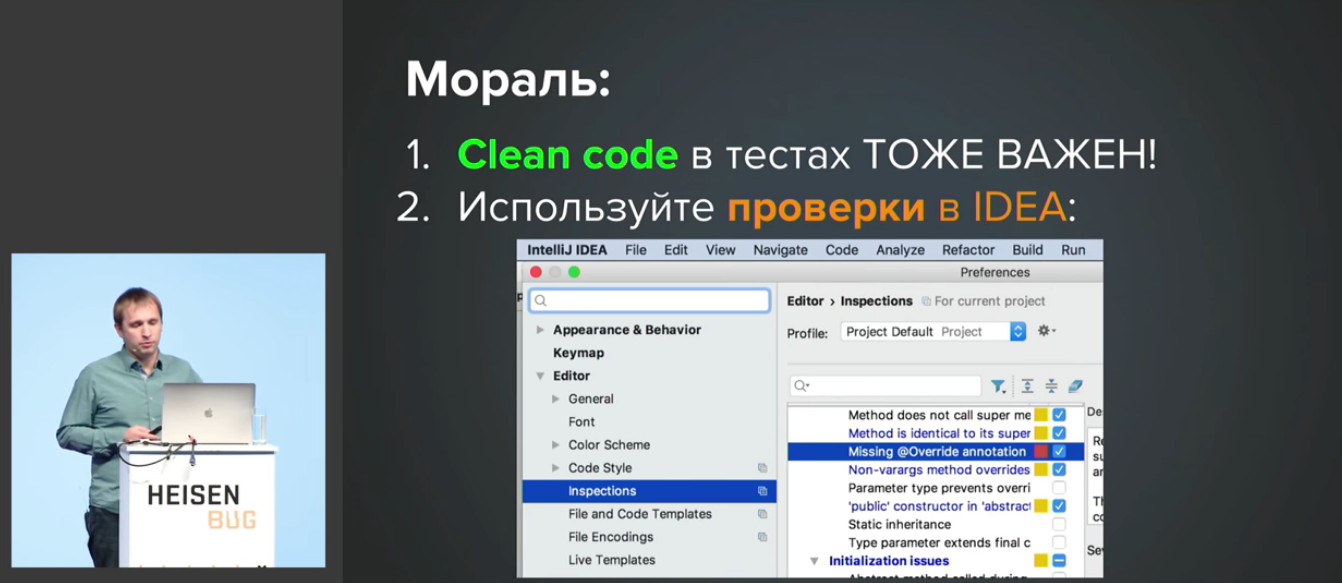

It is a pleasure to listen to it all to the very end. It's funny: at programming conferences puzzlers are often useless in practice (though knowing the magic gives programmers incredible pleasure), but this talk has direct practical value. It is immediately obvious that the authors did not just google the top 10 most bizarre questions on StackOverflow but took the tasks from their own practice.

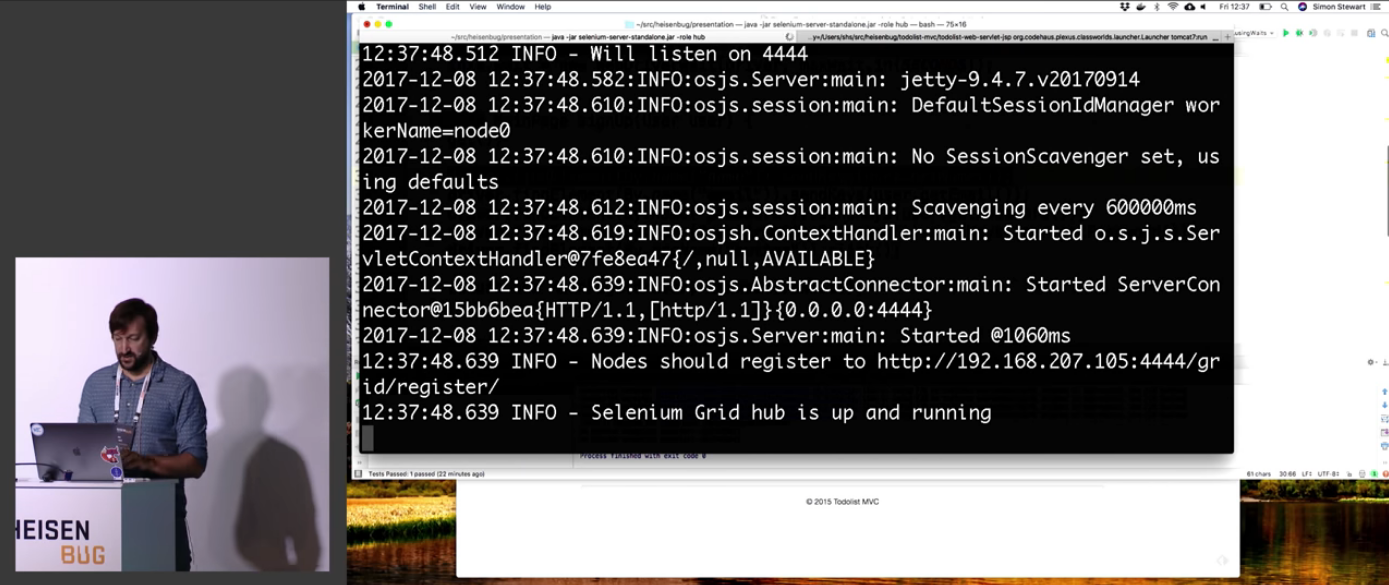

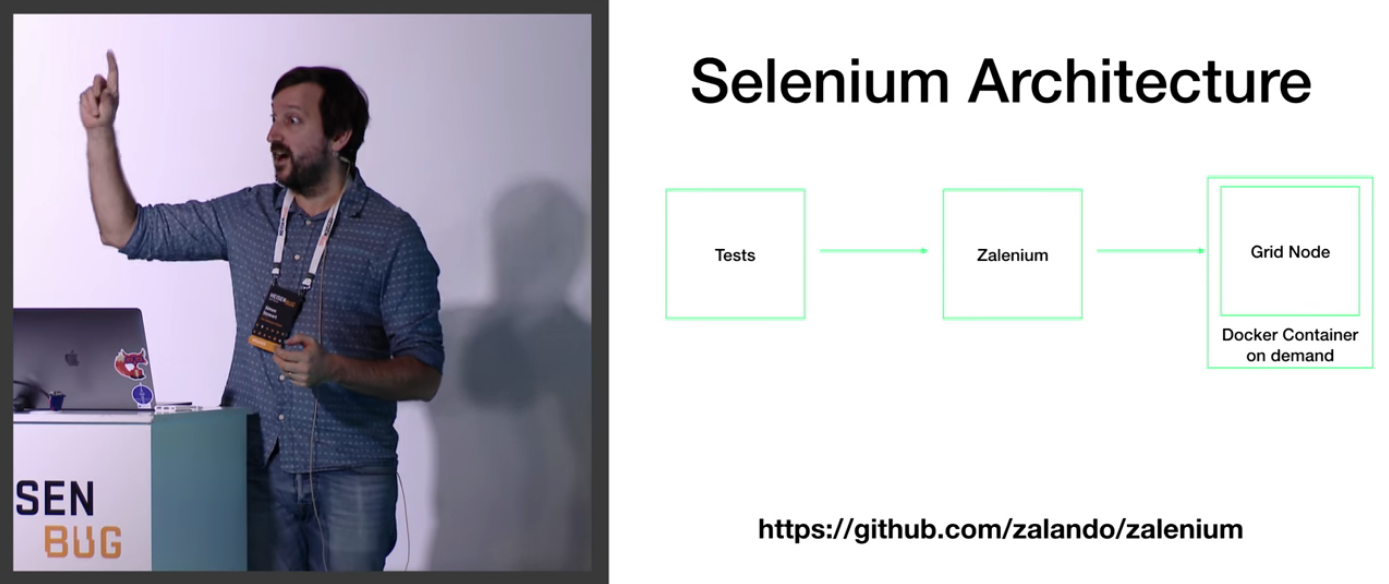

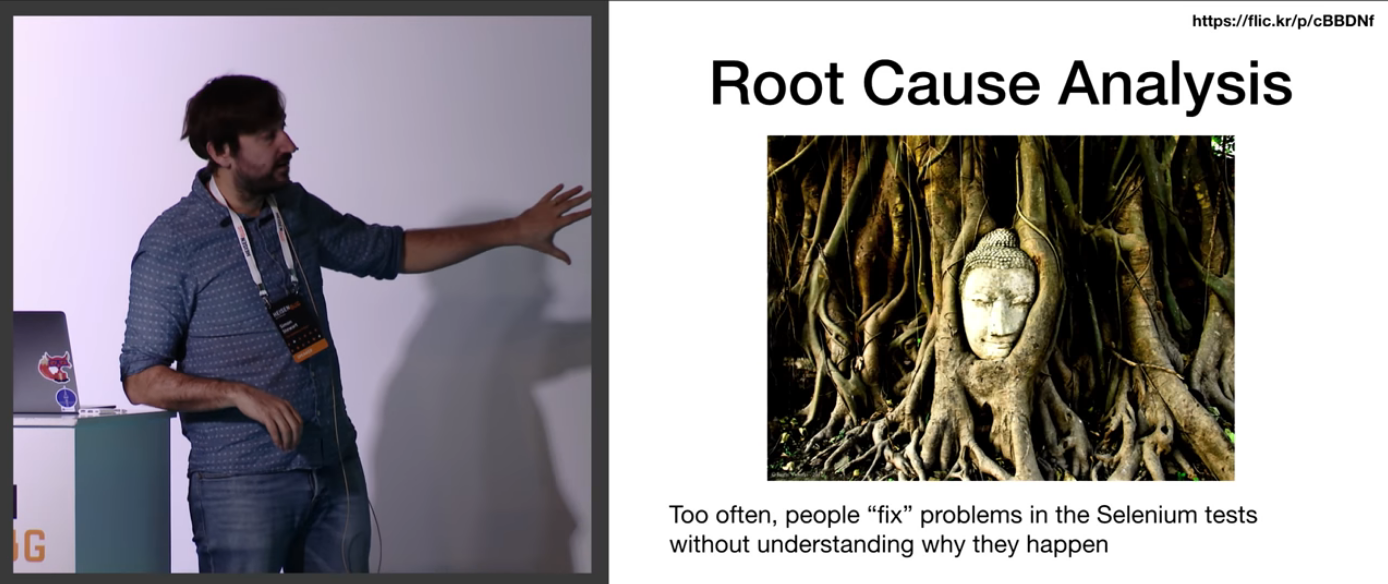

9. Scaling Selenium

Speaker: Simon Stewart; rating: 4.33 ± 0.13. Link to the presentation.

Simon Stewart is, to put it mildly, a famous person. It was he who originally created WebDriver, and he continues to carry Selenium on his shoulders.

The talk begins with some fairly basic things about locators, long XPath expressions and sleeps. Simon explains how to write both the test and the application so as to avoid typical problems.

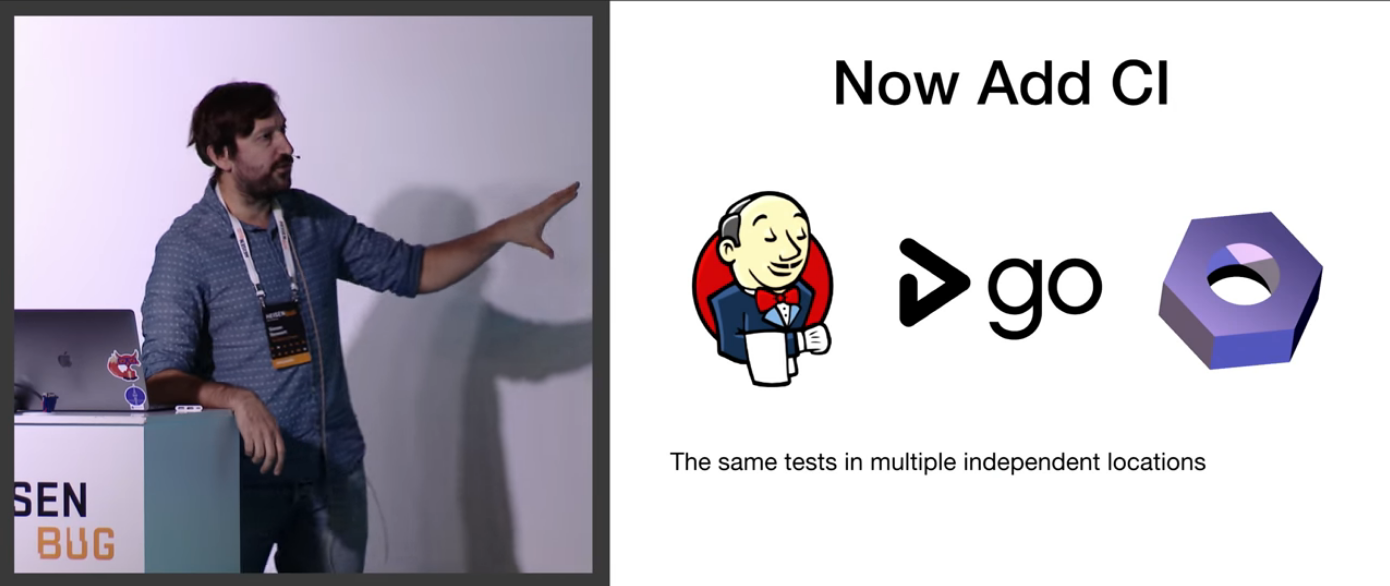

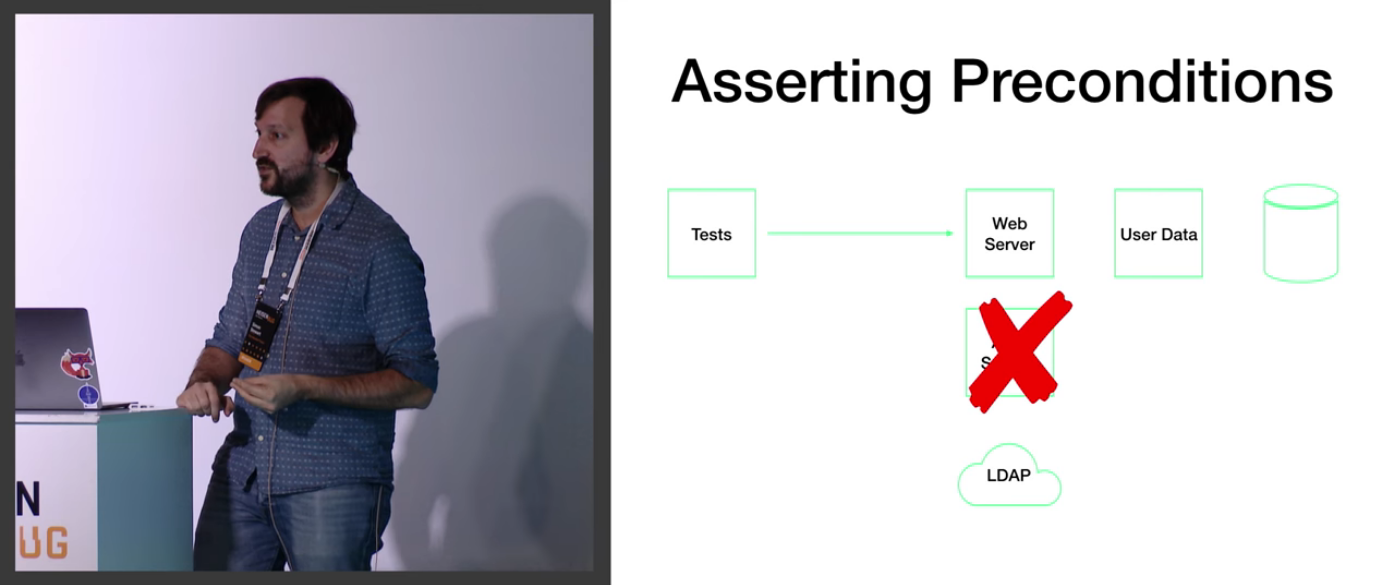

But right after that (at around the 24-minute mark), the story zeroes in on the main topic of the talk: scaling.

He explains why statics and ThreadLocals are bad, and why the code should be uncuffable and stateless. Moreover, these explanations come not from the usual perspective of writing high-performance code (where, if you try hard, you can argue for the benefit of statics) but specifically from the perspective of tests.
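
To make the point about shared state a bit more concrete, here is a minimal Python sketch of my own (it is not from the talk). `FakeDriver` is a hypothetical stand-in for a real browser session, and `run_test` and `run_all` are illustrative names. The idea is only that every test builds its own session, so tests can be handed to any worker in any order.

```python
from concurrent.futures import ThreadPoolExecutor


class FakeDriver:
    """Hypothetical stand-in for a browser session; it only records visited URLs."""

    def __init__(self):
        self.history = []

    def get(self, url):
        self.history.append(url)


def run_test(url):
    # Each test builds its own driver: no module-level or thread-local state is shared.
    driver = FakeDriver()
    driver.get(url)
    return driver.history


def run_all(urls, workers=4):
    # Because tests share nothing, they can be scheduled on any worker in any order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_test, urls))
```

If `FakeDriver` were kept in a module-level variable instead, the histories of parallel tests would get mixed together, which is the kind of failure that has nothing to do with the application under test.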

All this is supported by live demonstrations on his MacBook.

The author approaches the issue systematically and considers launching tests from independent locations. In general, it seems to me that such a question is perfect for an interview, to find out right away whether a person has only written Hello Worlds or has actually worked :-)

Using a typical web application as an example, he considers situations where tests may fail, but not at all for the reasons we set out to catch.

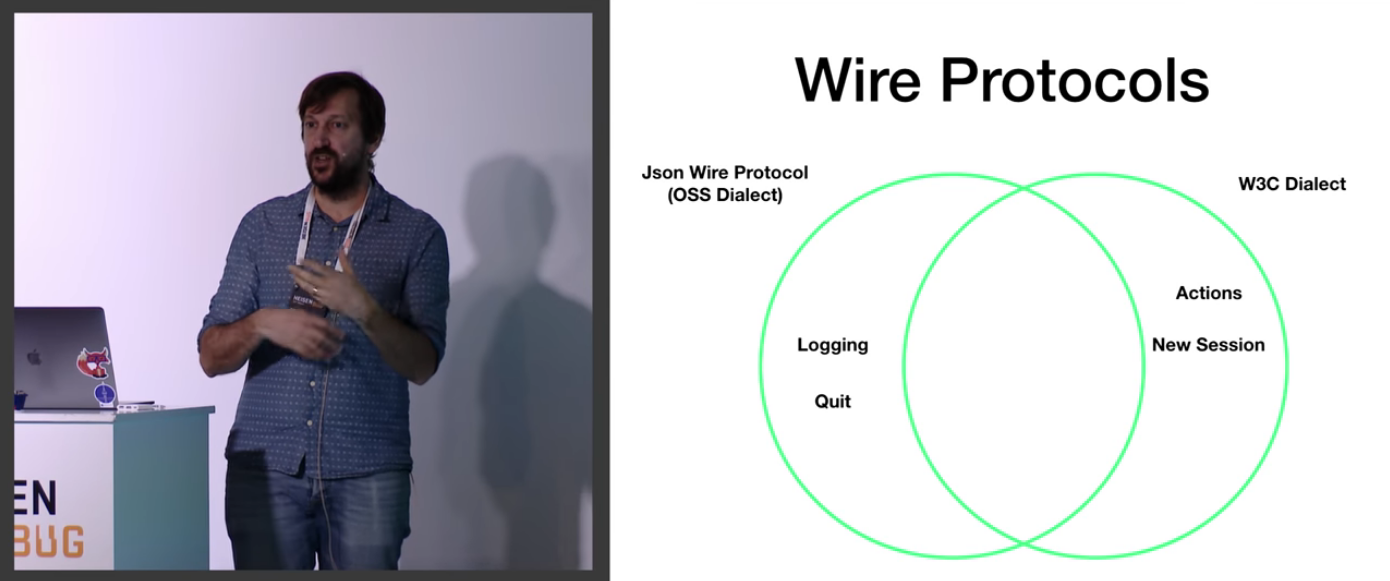

Along the way, Simon doesn't hesitate to go deep into the details of how the Selenium architecture can be applied. No wonder: he designed it.

So he leads us to Selenium Grid and shows it in real demos.

Well, Docker, of course. The first rule of Docker: always talk about Docker!

And it ends with cloud providers.

At the end of the talk there is a discussion of common mistakes.

In the past I worked on introducing DevOps, and the full-stack problems described in the talk felt very close and familiar. What I took away is Simon's official position (in effect, the position of the Selenium team) on a number of fundamental questions of scaling tests, which will let us use their tools more correctly in the future.

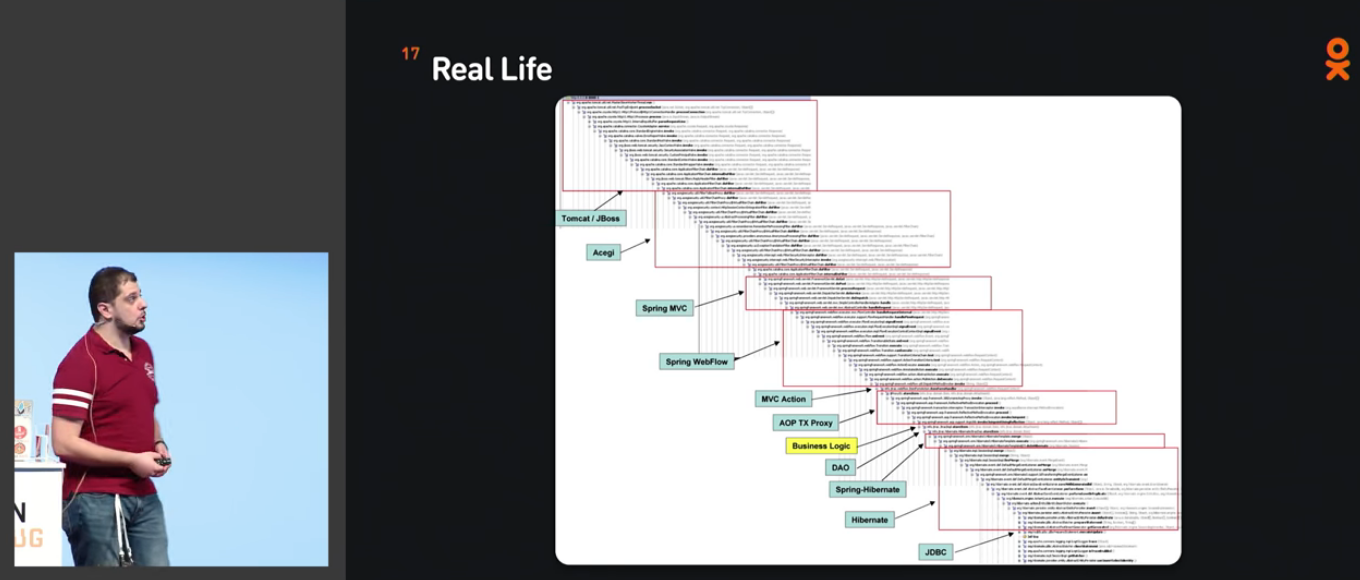

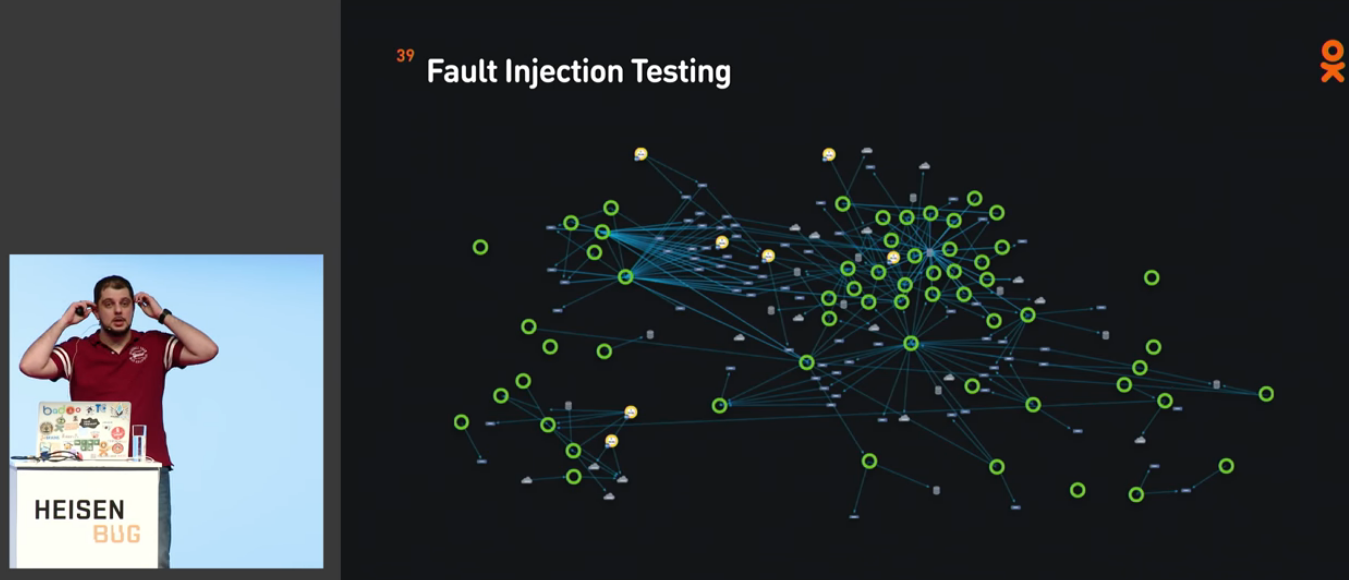

8. White Pandora's Box

Speaker: Nikita Makarov; rating: 4.34 ± 0.07. Link to the presentation.

Nikita Makarov is the head of the test automation group at Odnoklassniki. He is also a member of the Heisenbug Program Committee. We chat online from time to time, so it was especially interesting to see his talk.

First of all, the talk begins with Nikita having already published the presentation and showing a QR code for it. To say that this is awesome is to say nothing. IMHO, every speaker should do this. Although it is not so important for those of us watching the conference recording on YouTube.

In short, the testing industry talks a lot about the “black box” but only occasionally mentions the “white” one. This is partly because white-box testing has always been considered the prerogative of programmers.

This talk answers several burning questions:

- How to prevent programmers from writing the wrong code?

- How to inject failures into the code without it being excruciatingly painful?

- Why would you need source code parsing and what can you get from it?

- Social code analysis and coverage: what for and why?

At the very beginning, Nikita wonders why there is so little information about the white box. He describes the relevant historical context and his own research on the topic.

After that we turn to specifics. It's funny that Nikita uses the same screenshot of the call stack as I did in my last DevOps talk:

If you try to enter this chain of calls from the black-box point of view, there is a lot you cannot test. Every Java programmer knows this, and (I would like to hope) every Java tester, but it is something you usually don't want to think about.

That was the motivational part. Then comes the practice. Nikita warns in advance that there will be no ready-made solutions and starts explaining principles.

The following questions are illustrated with specific examples in Java code:

At one point, he even opened IDEA and began to show it all:

The talk covers many different types of testing and the tools for them:

And all this is illustrated by live demonstrations on the speaker's laptop. Retelling it is pointless, because the density of information is really high.

In fact, the talk is divided into "levels of immersion" in the subject. It starts with the simplest and ends with the most nightmarish. For example, it even covers source parsing and the Abstract Syntax Tree.

At the very end, Nikita sums up: the white box is a strategy, how we implement it is up to us, and it depends on the specific project. We have to use our heads.

And what I particularly liked: the talk ends on a life-affirming note. You need to read the code! (How to make testers do that, I still don't understand, though.)
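
To give a feel for what source parsing can buy you, here is a small sketch of my own. The talk works with Java; I use Python's standard `ast` module purely as a stand-in, and the check itself (looking for `except` blocks that silently swallow an error) is my own illustrative choice, not something taken from the slides.

```python
import ast


def find_swallowed_exceptions(source):
    """Return line numbers of `except` blocks whose body is only `pass`."""
    tree = ast.parse(source)
    lines = []
    for node in ast.walk(tree):
        if isinstance(node, ast.ExceptHandler):
            # A handler that contains a single `pass` hides the failure completely.
            if len(node.body) == 1 and isinstance(node.body[0], ast.Pass):
                lines.append(node.lineno)
    return sorted(lines)


SAMPLE = """
def load(path):
    try:
        return open(path).read()
    except OSError:
        pass
"""
```

Calling `find_swallowed_exceptions(SAMPLE)` points at the line of the offending `except`. A black-box test would never see this place, while a walk over the syntax tree finds it without running anything.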

7. Your A/B tests are broken

Speaker: Roman Poborchiy; rating: 4.36 ± 0.13. Link to the presentation.

Roman and I met during the preparations for the JBreak and JPoint conferences. Roman helped make my slides (and talks in general) less lame than they would have been if I had done them alone. It also turned out that Roman has an incredible depth of knowledge on topics such as A/B testing, and I was looking forward to a talk from him. So, here it is.

Roman talks about an area adjacent to testing. After you have checked that a feature is implemented correctly, it is rolled out as an experiment to find out whether users like the new version.

Have you noticed that the people responsible for experiments usually end up saying there is not enough data to decide? Often that is true, but often the real cause is breakage in the experiment system and in the accounting of user statistics.

The talk discusses the typical breakages that occur there, so that back at your workplace you can play data scientist a little and look for such errors in your own setup. Some of them are probably there.

In general, I am very weak in mathematics, and even more so in mathematical statistics, and I was afraid I wouldn't be able to take anything away from Roman's talk. Simply for lack of IQ. But no, in some inexplicable way the content turned out to be quite understandable and applicable in practice.

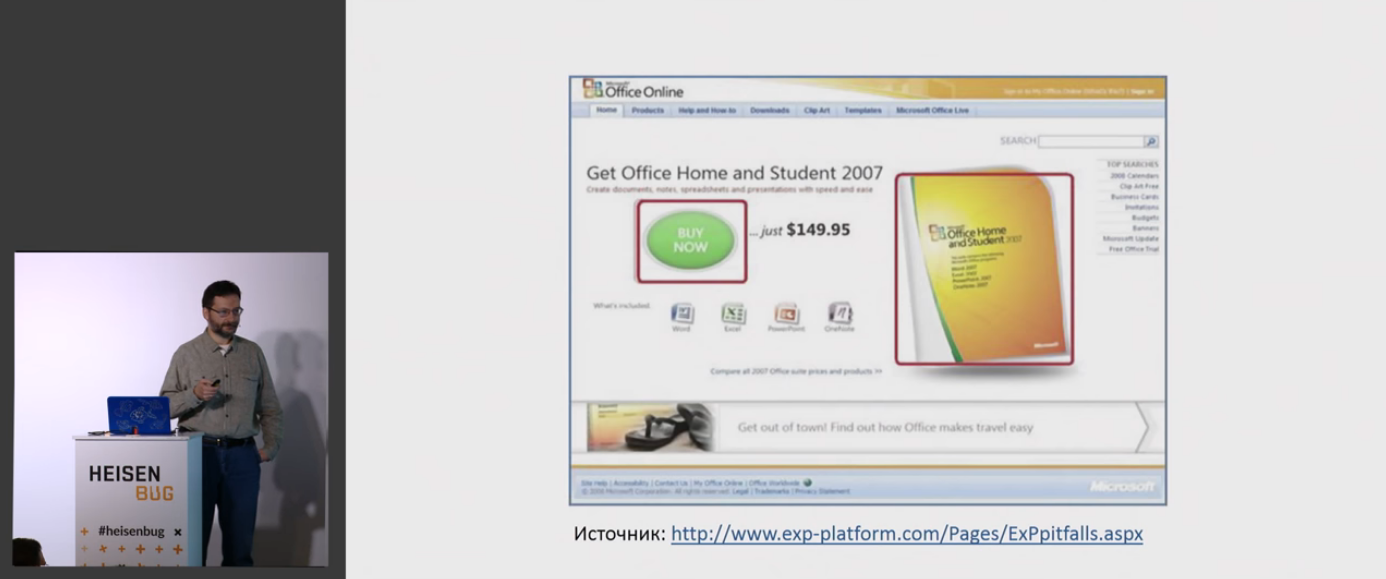

The talk begins with a story about one of Microsoft's tests from the days when the grass was greener:

The main mistake: if you run no tests at all, you can roll out something to production that you will regret very much later.

People often don't understand what could be difficult about it: split the users, show each group its own version, count the result, and that's it!

Actually, Roman's whole talk is about the fact that there can be a hell of a lot of difficulties there.
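
For reference, the "split the users" step is often done roughly like the sketch below. This is my own minimal illustration, not code from the talk: the function name `assign_variant`, the experiment name and the choice of SHA-256 are all assumptions.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically map a user to a variant of a given experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as an integer and take it modulo the number of variants.
    return variants[int(digest, 16) % len(variants)]
```

Hashing the experiment name together with the user id means that the same user always sees the same version within one experiment, while different experiments split the audience independently of each other.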

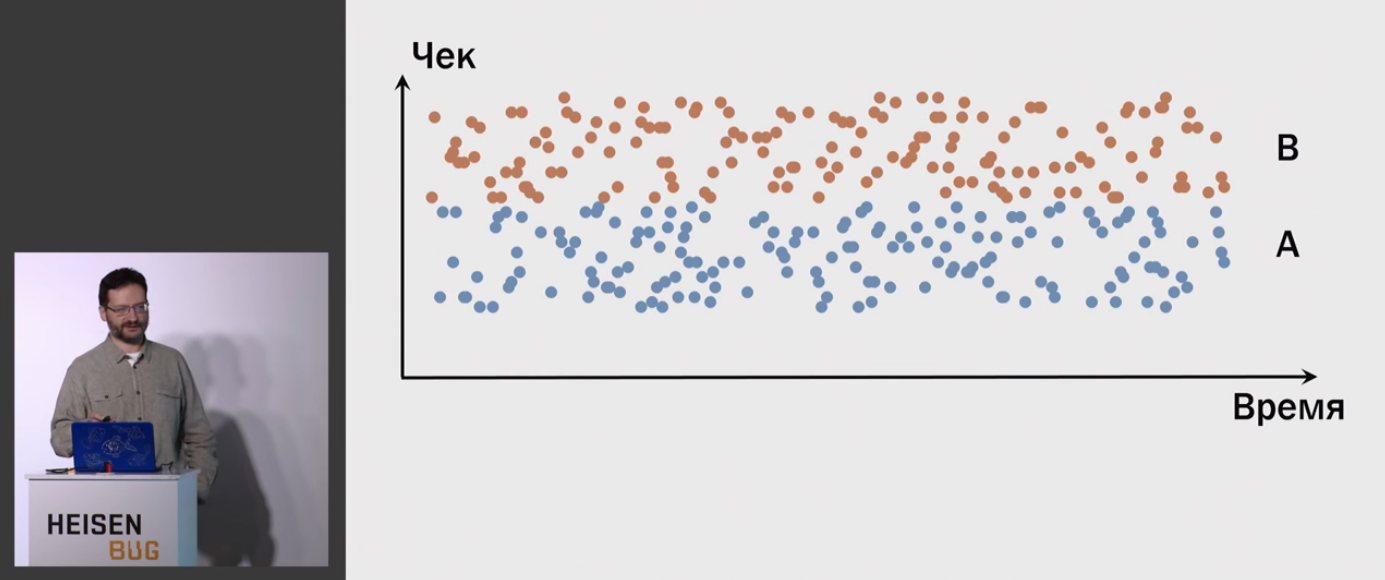

For example, a person depends more on their own habits than on some minor change in our service.

Therefore, the picture of the split does not look like this:

But rather like this:

The point is that only a limited number of people take part in our experiments, yet the results have to be applied to everyone. And this is a classic problem of statistics.
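
One textbook way to go from a sample to a statement about everyone is a two-proportion z-test on conversion rates. The sketch below is my own illustration under that assumption; I am not claiming it is the method from the talk, and the function and parameter names are made up.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference of two conversion rates.

    Assumes the pooled rate is strictly between 0 and 1, otherwise the
    standard error is zero and the test is undefined.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)
```

With 100 conversions out of 1000 in one group and 150 out of 1000 in the other, the p-value comes out well below 0.01. With identical groups it is exactly 1, that is, there is no evidence of any difference.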

The central thread of the talk is a few major mistakes:

- Not running experiments at all

- Brute-forcing parameter values on users

- Running too many bad experiments

- Not keeping experiment logs that allow the results to be recalculated

- Ignoring that the measured metric itself changes over time (this is called seasonality)

The conclusions are roughly as follows (and they follow from the problems stated):

For me, the talk was valuable not so much for the conclusions as for the coherent system of reasoning, which lets you take this material as a basis and keep thinking independently in this direction.

And of course, I still need to brush up on my mathematics.

If you tried to express the whole talk in one slide, it would probably look something like this:

6. Phone Testing with Arduino

Speakers: Alexey Lavrenyuk, Timur Torubarov; rating: 4.38 ± 0.14. Link to the presentation.

The next talk is by two developers from Yandex. Alexey works on things like Yandex.Tank, Pandora and Overload. Timur worked in telecom for 4 years and has spent the last 4 years at Yandex.

It was one of the few talks I managed to attend live. I came with an ulterior motive: to gloat over how modern applications drain the battery. Luckily, the talk is by the very people who heroically fight this across the whole company.

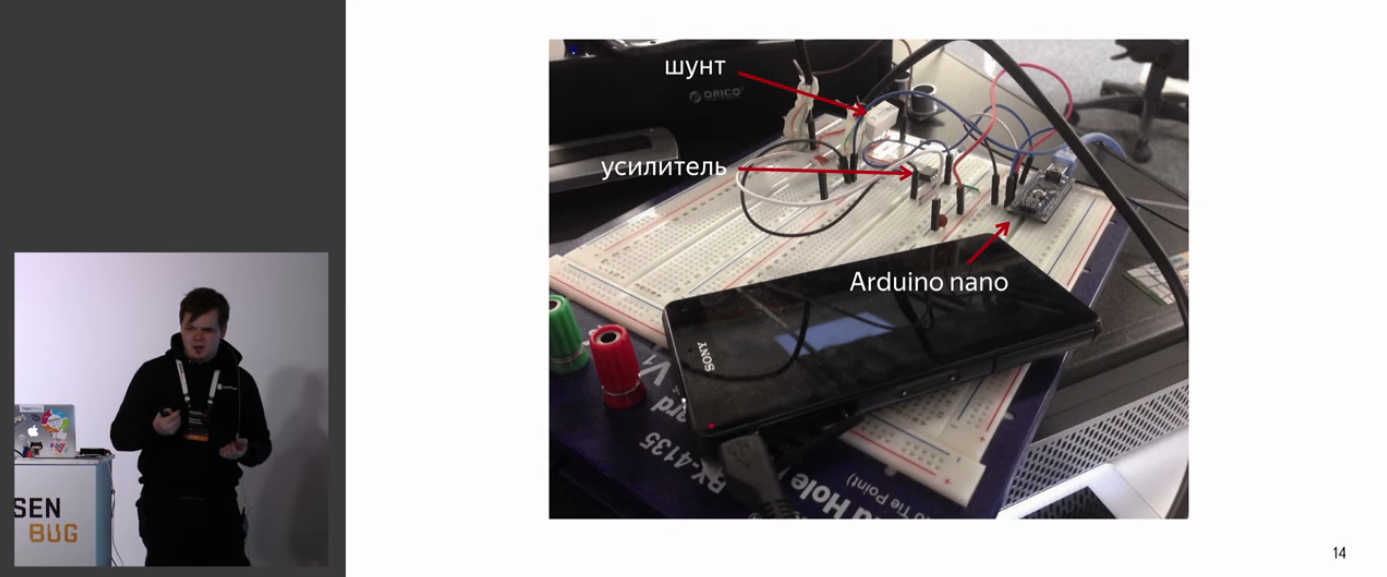

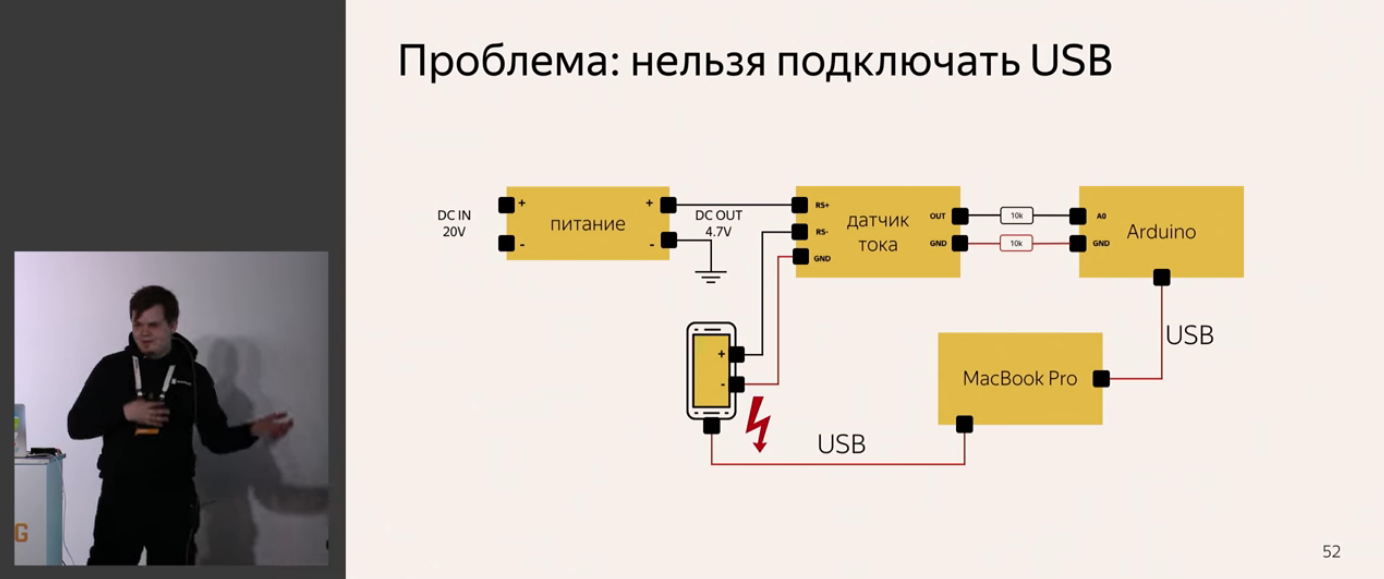

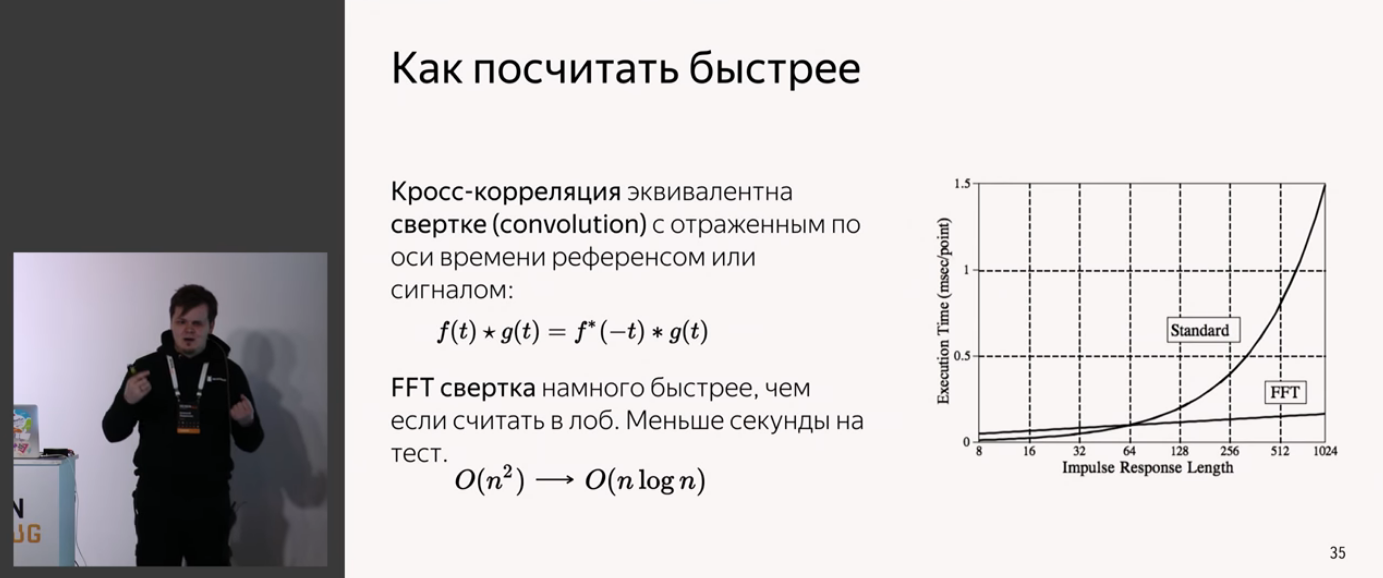

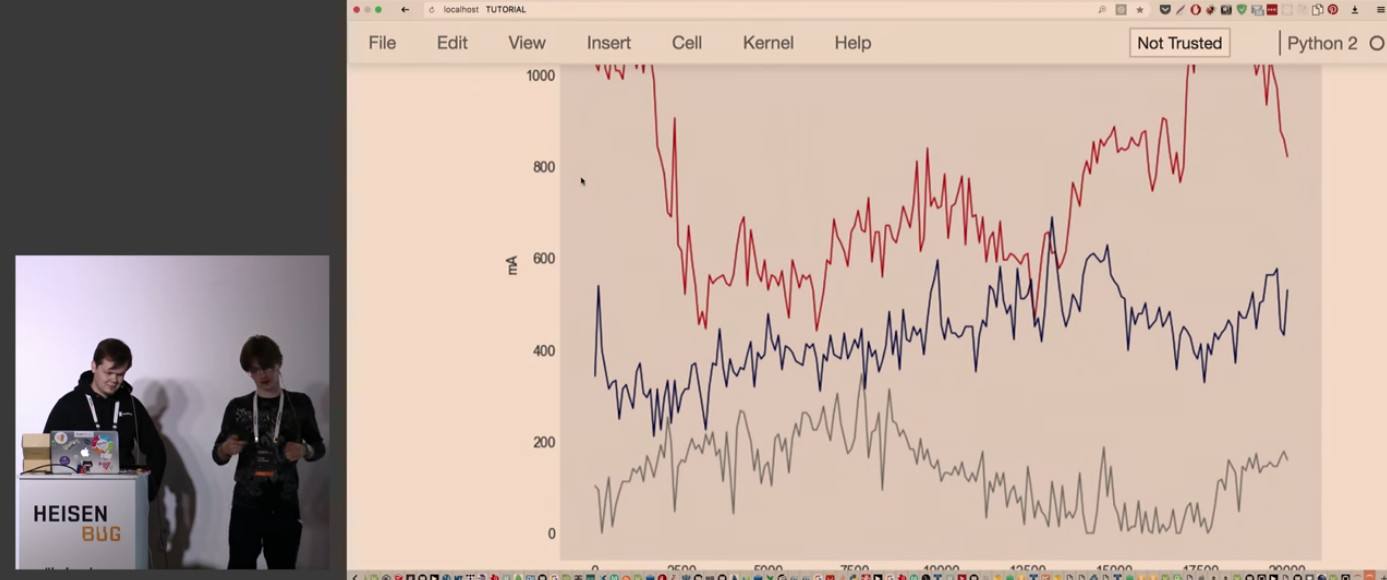

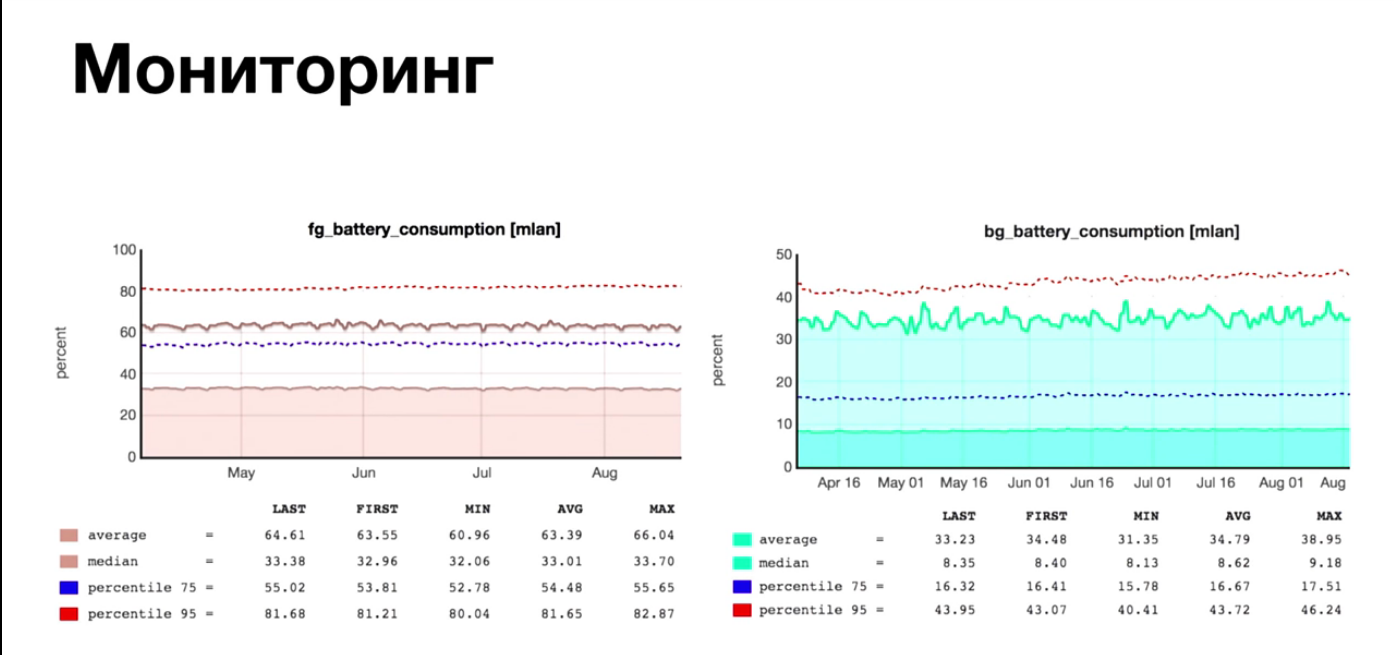

Roughly speaking, we used to test only the performance of server applications, but now the whole world has switched to mobile phones. So, among other things, Yandex measures the power consumption of phones. Alexey and Timur explain how they learned to collect the energy consumption metric in hardware: they assembled a small Arduino-based circuit that measures current and wrote a library to work with it. They published the library as open source. In addition, the talk describes how to prepare phones, assemble measurement boxes and use the library.

Of course, all this is interesting first of all to those who are good with their hands. I'm not sure I could really do such a thing in production myself. It takes remarkable persistence and talent. But marveling at how professionals do it is always interesting.

The talk begins with how hard everything gets when testing happens on real phones.

They did some research into who needs this at all and what characteristics their own power consumption solution should have.

The general outline of the talk is approximately as follows:

That is, first they explain why they did not just take a multimeter, and then Timur comes out and shows what is in the open source release and how to use it.
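
Just to show what "measuring current" gives you in the end, here is a tiny sketch of my own that turns a series of current samples into consumed charge. It is not the API of the speakers' library; the function names and the fixed sampling rate are assumptions for the sake of illustration.

```python
def consumed_charge_mah(samples_ma, sample_rate_hz):
    """Integrate current samples (in mA) taken at a fixed rate into charge in mAh."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    dt_hours = 1.0 / sample_rate_hz / 3600.0
    # Rectangle-rule integration: each sample is assumed to hold for one period.
    return sum(samples_ma) * dt_hours


def average_current_ma(samples_ma):
    """Mean current over the whole measurement, in mA."""
    if not samples_ma:
        raise ValueError("no samples")
    return sum(samples_ma) / len(samples_ma)
```

For example, one hour of samples at a constant 100 mA adds up to 100 mAh, which can then be compared between two builds of the same application.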

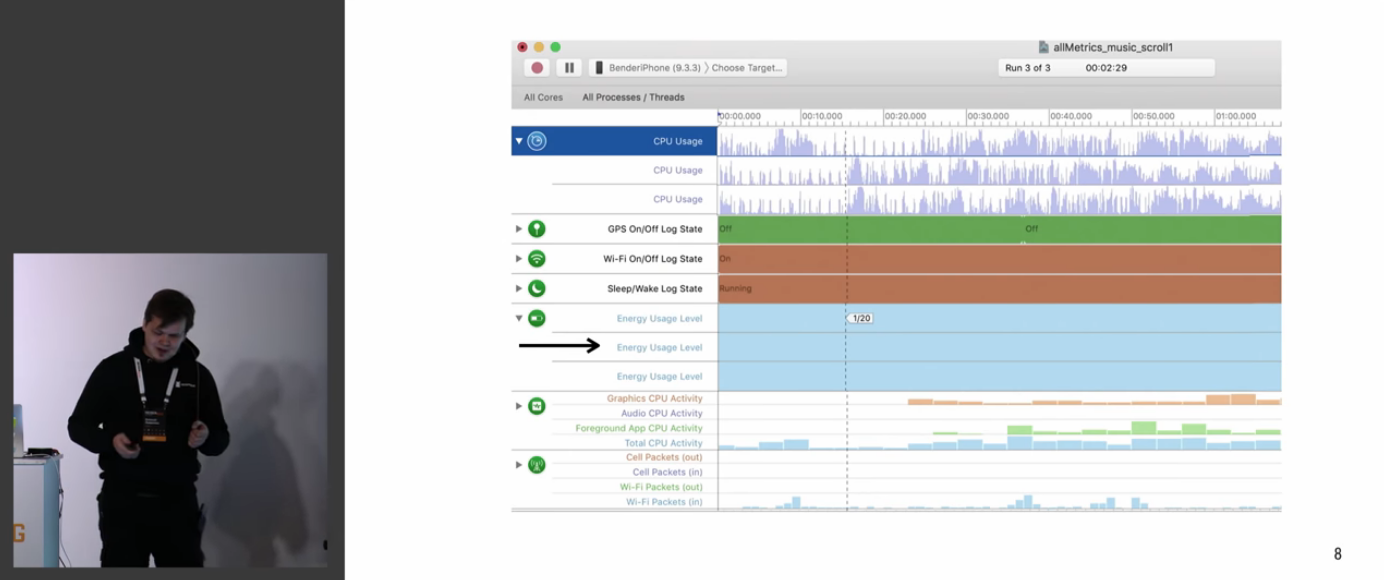

Then come some questions about what the phone can and cannot do (and what the 1/20 metric means for the iPhone).

The talk discusses how they studied off-the-shelf solutions.

Therefore, they set to work themselves!

It is not only the purely hardware issues that arose along the way that are discussed:

But also the problems of software development:

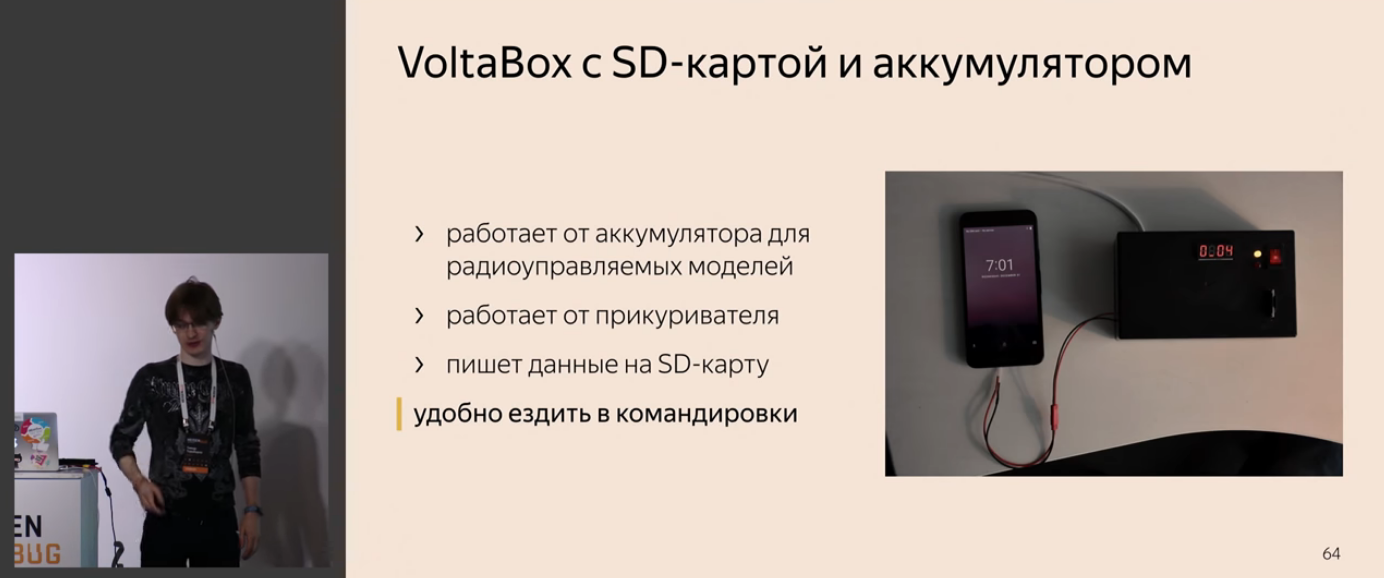

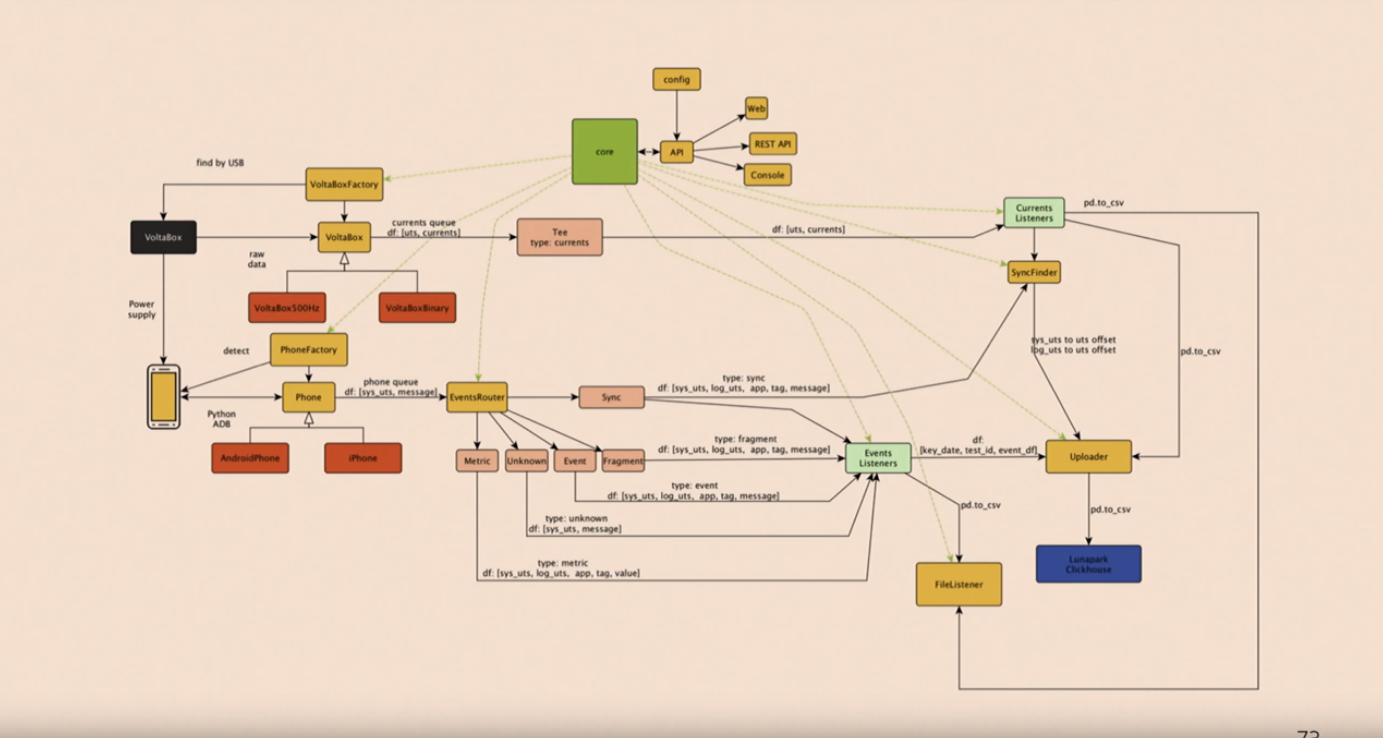

The second part of the talk is devoted to the ready-made solution: Volta and VoltaBox.

The software is installed with pip install volta. This is how it looks under the hood:

Actually, the diagram is here only to show that everything inside is very well thought out.

Timur shows how to actually configure and use all this. Everything runs right in a Jupyter Notebook, so watch the hands!

In general, the results look something like this:

The talk leaves the impression that measuring power consumption is not some fantasy from the distant future but something that can be done right now. And you don't need to be a doctor of physics and mathematics for it. Of course, this is only possible because people like Alexey and Timur did everything for us, and all we have to do is use the ready-made solution.

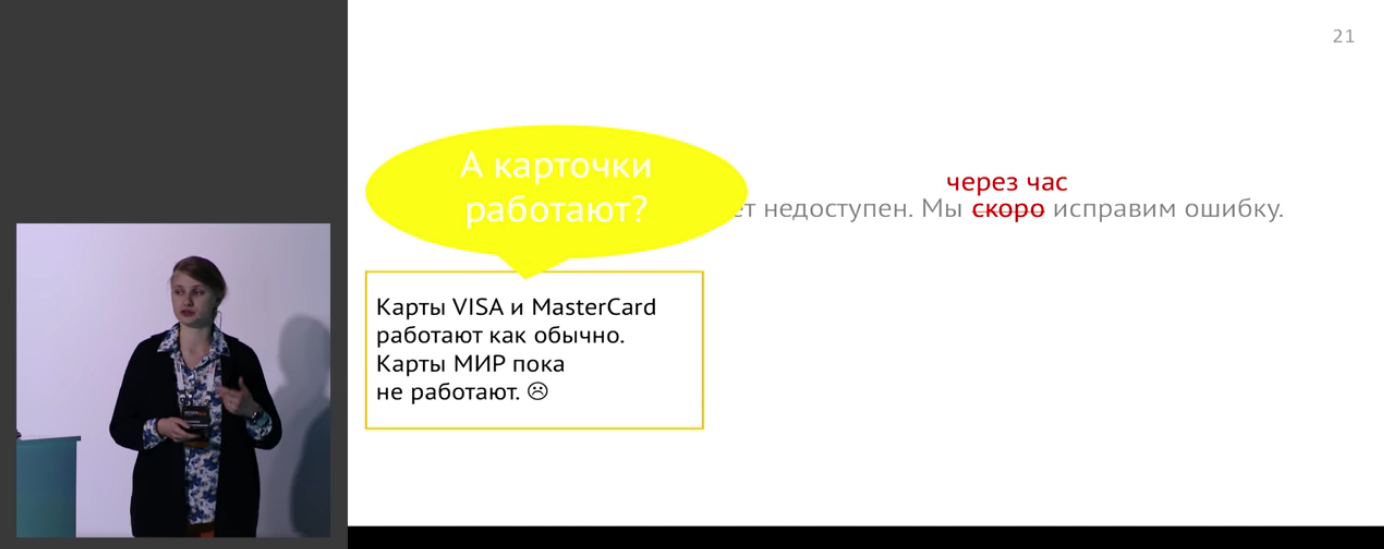

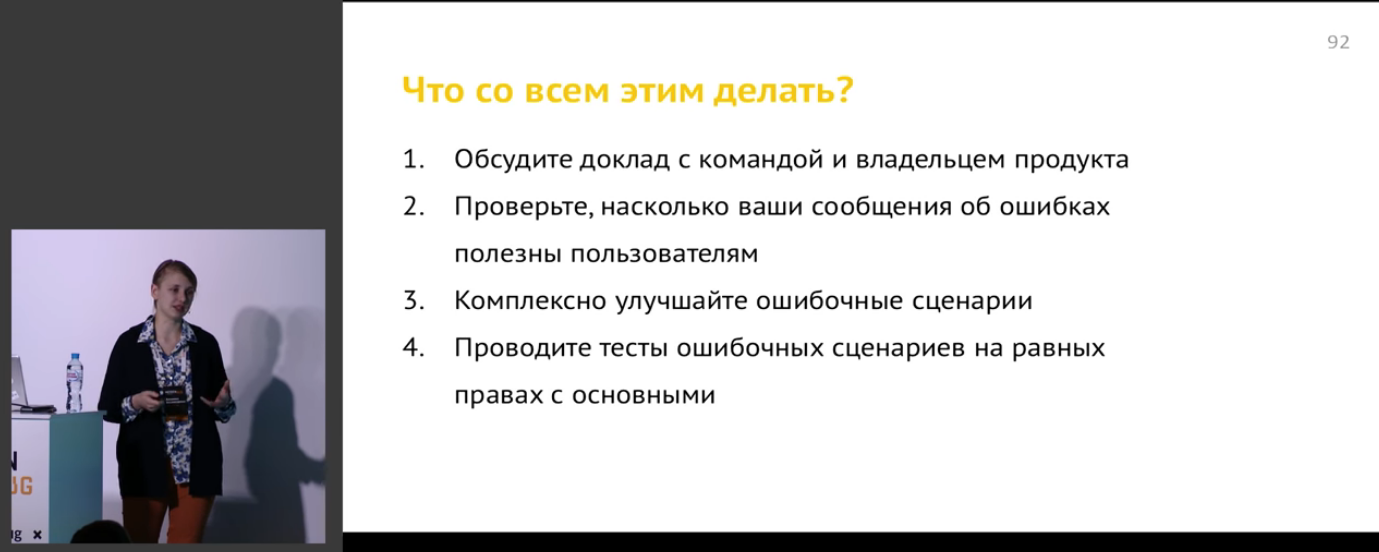

5. How to inform the user if “Oops, something went wrong”

Speaker: Antonina Hisametdinova; rating: 4.40 ± 0.10. Link to the presentation.

An ordinary programmer (and tester too) likes to test only the happy path. Anything that is not happy gets on their nerves too much. So error scenarios usually receive very little attention.

Many simply create uniform interface windows like “Error No. 392904” or “Oops, something went wrong” without thinking about how the user will feel. But the user may get upset and lose confidence in the product. Or catch up with you on the street and beat you up.

The talk shows errors through the eyes of ordinary people. Antonina explains how to teach the interface to report errors and failures correctly, so as not to annoy users.

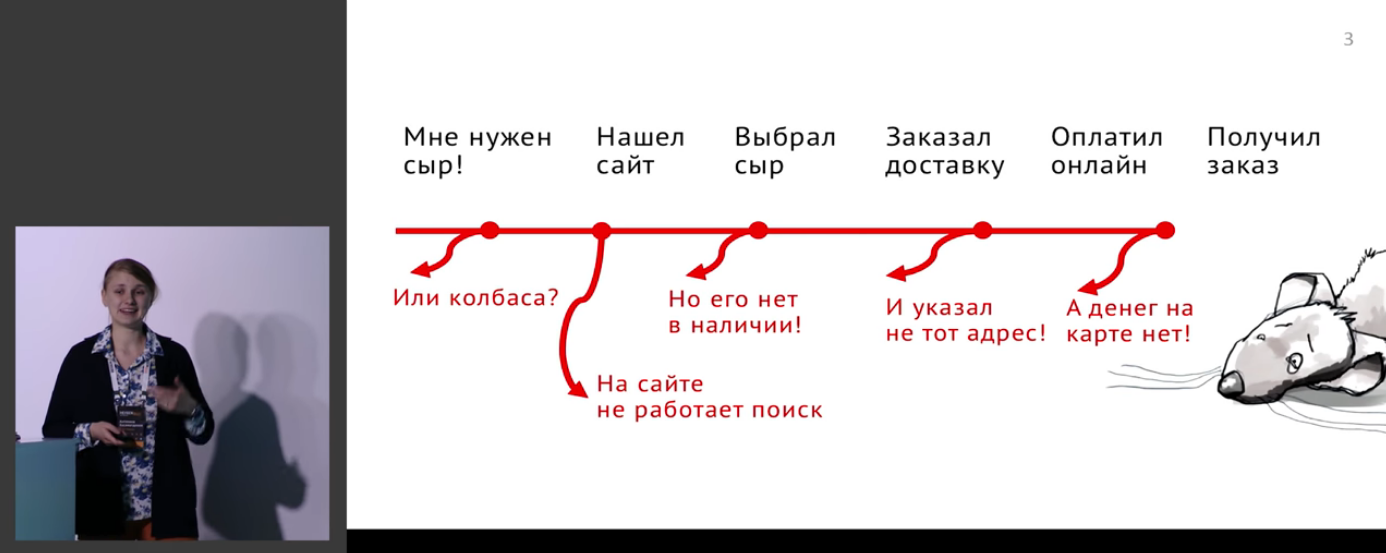

The story begins with a review of scenarios with errors.

A little about how to justify handling such scenarios to the business:

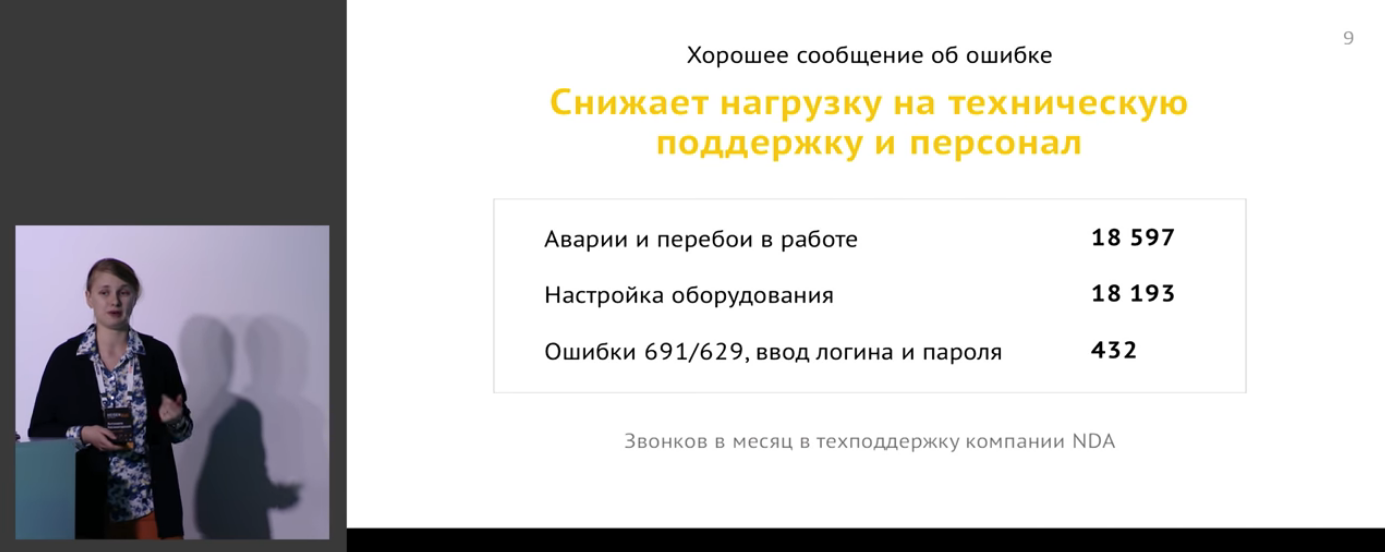

All this translates into real money:

That is, a good error message:

- Reduces the load on support

- Helps the user not to get lost in the conversion funnel

- Quickly teaches the user how to work with the product

- Helps maintain trust in the service in difficult times

Next, the talk systematically discusses the different types of errors, the user's reaction to them (including the example of World of Tanks!) and the nuances all this brings to writing an error message.

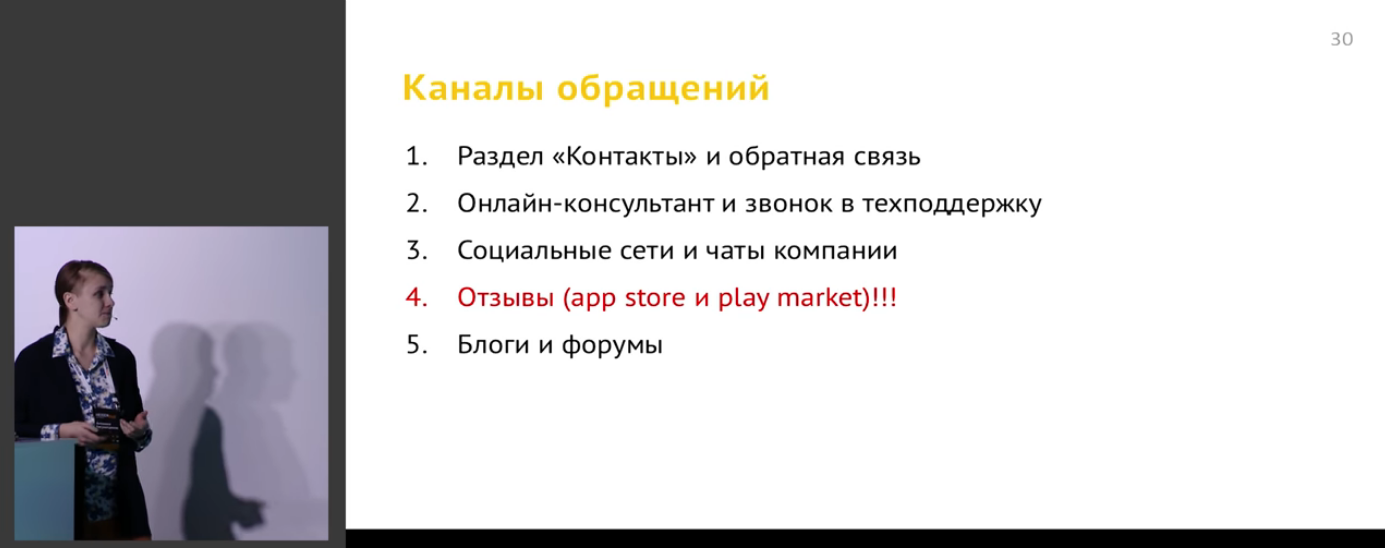

There is a little about the channels of user access and how to correctly inform users about errors:

There are very specific tips for improving applications in the form of checklists.

All this is illustrated on the insane amount of living examples:

Even such rather unusual applications as interfaces for the visually impaired are considered:

In general, the report is simply full of concentrated information, and I think it is impossible to convey everything in the form of this marc.

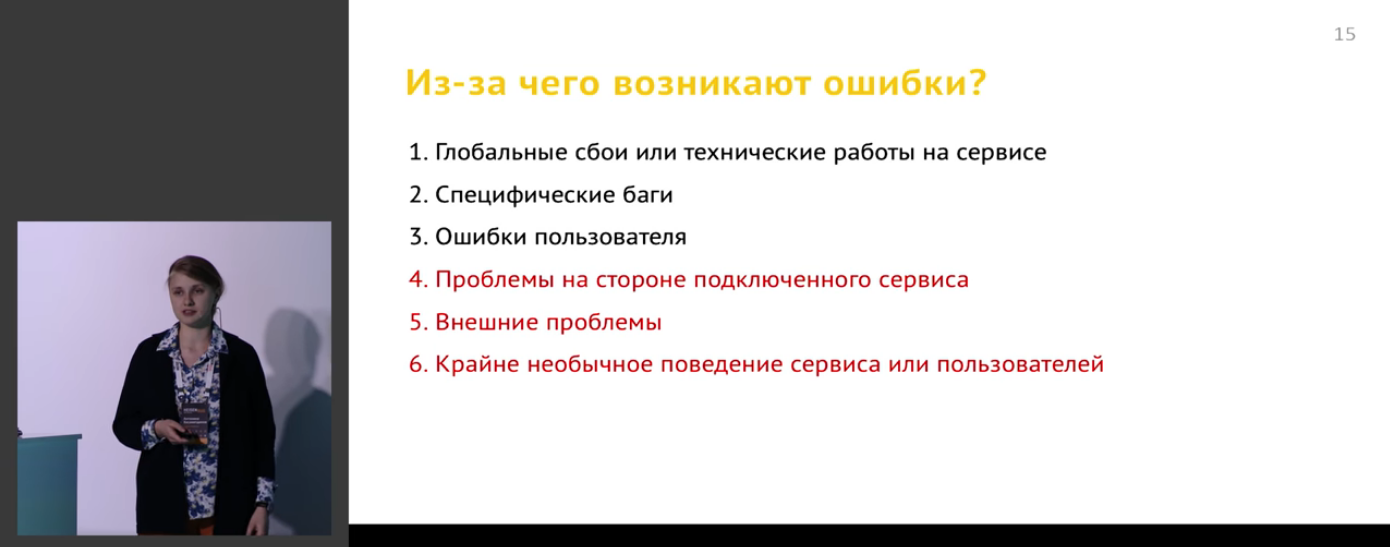

But you can throw some central plan:

- How to report global crashes?

- Specific bugs

- User errors

- Connected service issues

- External problems

- Extremely unusual user or service behavior.
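
In code, the idea of treating different kinds of errors differently might look roughly like the sketch below. It is my own illustration, not something from the slides: the three categories are a simplified subset of the outline above, and all names and message texts are made up.

```python
from dataclasses import dataclass
from enum import Enum


class ErrorKind(Enum):
    GLOBAL_OUTAGE = "global_outage"
    USER_INPUT = "user_input"
    EXTERNAL_SERVICE = "external_service"


@dataclass(frozen=True)
class UserMessage:
    what_happened: str
    what_to_do: str

    def render(self):
        # Say what happened first, then what the user can do about it.
        return f"{self.what_happened} {self.what_to_do}"


MESSAGES = {
    ErrorKind.GLOBAL_OUTAGE: UserMessage(
        "The service is temporarily unavailable.",
        "Your data is safe, please try again in a few minutes.",
    ),
    ErrorKind.USER_INPUT: UserMessage(
        "The e-mail address looks incomplete.",
        "Please check that it contains an @ sign and a domain.",
    ),
    ErrorKind.EXTERNAL_SERVICE: UserMessage(
        "The payment provider is not responding.",
        "No money was charged, please try again later.",
    ),
}

FALLBACK = UserMessage(
    "Something went wrong on our side.",
    "Please try again or contact support.",
)


def message_for(kind):
    """Pick a user-facing message for an error kind, with a generic fallback."""
    return MESSAGES.get(kind, FALLBACK)
```

The point is only that every message answers two questions, what happened and what to do next, and that a bare "Oops" remains the last resort rather than the default.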

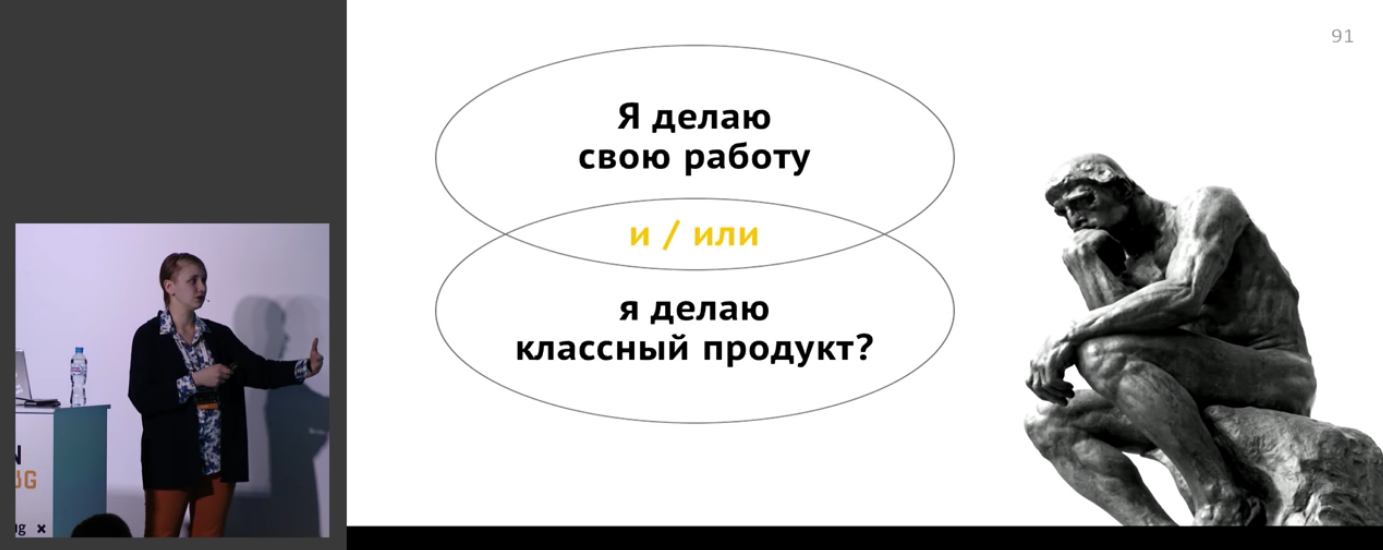

Towards the end, the ultimate question of life, the universe and everything comes up:

There is also a clear checklist of what to do with all this now and what to read on the topic:

The "What to read?" slide is the ninety-third. Ninety-three slides, Karl!

After watching the video, I had the feeling that this was not just a talk but a real book, clever and thoroughly worked out. Maybe someday she will write a book? It would be great.

And yes, absolutely everything said there is very useful, clear and to the point. One of the few talks that are 146% applicable.

4. Simplicity, trust, control - three pillars of web testing automation

Speaker: Artem Eroshenko; rating: 4.45 ± 0.08. Link to the presentation.

Artem is known as the author of Allure and HtmlElements. Having long worked on web test automation projects, he has formed a set of rules that ensure comfortable work for him and his team throughout the life of a project, from the first test to several thousand. This set of rules is conventionally divided into three groups: "Ease of development", "Trust in results" and "Quality control".

The talk covers the tools that let Artem and his team create and edit tests as simply and clearly as possible, the approaches that help the whole team trust the test results, and ways to monitor the quality of the tests.

It is most appropriate to present this talk as an outline.

The thing is, the talk uses more than 170 slides. If you started describing it in detail, you would need a separate Habr post, and perhaps more than one.

All together it looks something like this (sorry if I got the nesting levels mixed up somewhere, the narrative flows smoothly from one topic to the next):

- Simplicity

- Development tools

- Browser operation

- PageElements

- Element structure

- Page structure

- What does a test look like?

- Disadvantages of HtmlElements

- Pain with Ajax pages

- Side checks

- No parameterization

- No logging

- Interfaces instead of classes

- Parameterization

- Repeated checks

- Page Templates

- Item descriptor

- Steps are almost unnecessary

- Data preparation

- Test Configuration

- Running tests

- Test Configuration

- Configuration description

- Primitive types

- Extended types

- Lists and arrays

- Several sources

- Redefinition of values

- WebConfig Configuration

- ApiConfig configuration

- Configs initialization

- Use in tests

- Dependency injection

- Dependency graph

- Base class - bad

- Static fields are bad

- What do we really need?

- WebConfig Provider

- WebDriver Provider

- MainPage Provider

- Module configuration

- User Provider

- JUnit integration

- What does a test look like?

- Trust

- Team confidence in tests

- Short tests

- Example on charts

- What does the service look like

- How does the test run

- As a matter of fact

- Why it happens

- Different entry points

- How to act

- Example on charts

- Server "stubs"

- How to check ads

- How to check filters

- What implementations exist

- How the service works

- Server "stubs"

- Proxy mode

- How testing works

- What the code looks like in a test

- And what about multiple threads?

- Test ID

- Header in HTTP request

- Short tests

- Team confidence in tests

- Control

- Types of runs

- Grafana

- Graphic examples

- The number of tests

- Test run status

- Test statuses

- Test run time

- Number of restarts

- Restarts and status

- Restarts and time

- Startup problems

- 'Categories.json' file

- Problem categories

- How to setup?

- How it works?

- Export to InfluxDB

- InfluxDB data format

- Allure plugin
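
One idea from this outline that I want to illustrate is the pairing of server "stubs" with a test ID passed in an HTTP header, so that tests running in several threads don't step on each other. The sketch below is my own guess at how that can be arranged, not Artem's code: the `StubRegistry` class and the `X-Test-Id` header name are hypothetical.

```python
class StubRegistry:
    """In-memory store of canned responses keyed by (test id, request path)."""

    # Hypothetical header name; any agreed-upon header would do.
    TEST_ID_HEADER = "X-Test-Id"

    def __init__(self, default="default response"):
        self._responses = {}
        self._default = default

    def register(self, test_id, path, body):
        # Each test registers its own answer for a path before it starts.
        self._responses[(test_id, path)] = body

    def handle(self, headers, path):
        # The stub picks the answer by the test id carried in the request header.
        test_id = headers.get(self.TEST_ID_HEADER)
        return self._responses.get((test_id, path), self._default)
```

Two tests can then ask the same stub for the same path at the same time and still get different answers, because each request carries its own test id.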

In fact, this is one of the most comprehensive talks, practically a reference book on the topic, and there is nothing much to add here. It is a mega-checklist that you have to go through and work on item by item.

TOP-3

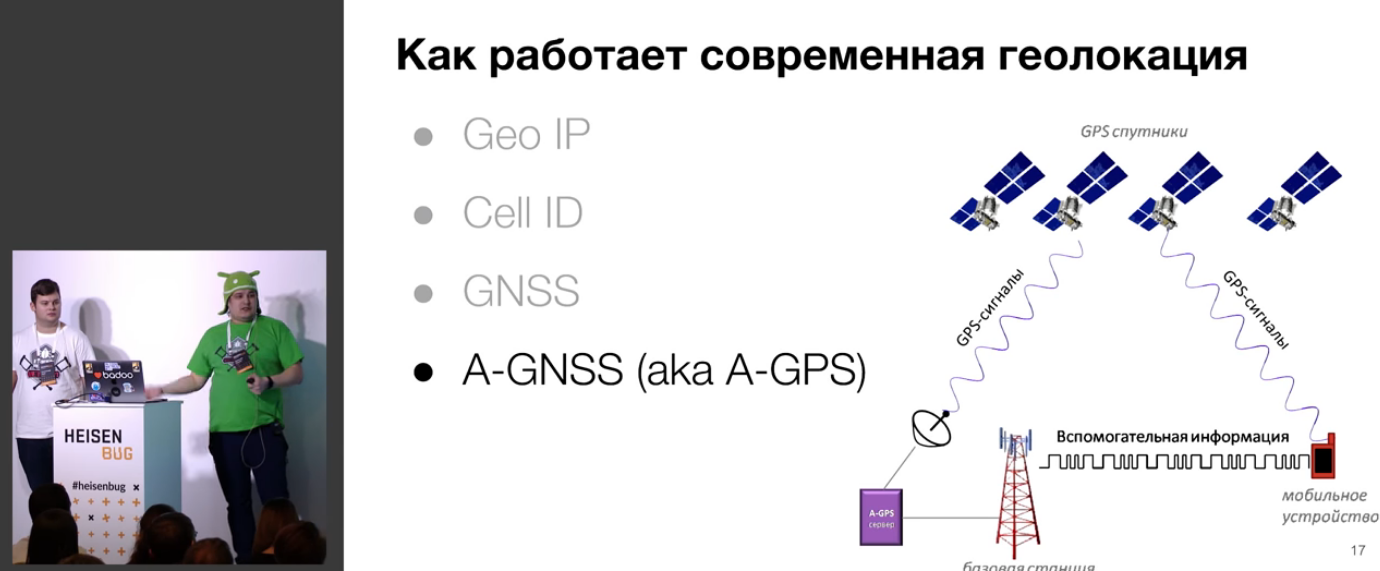

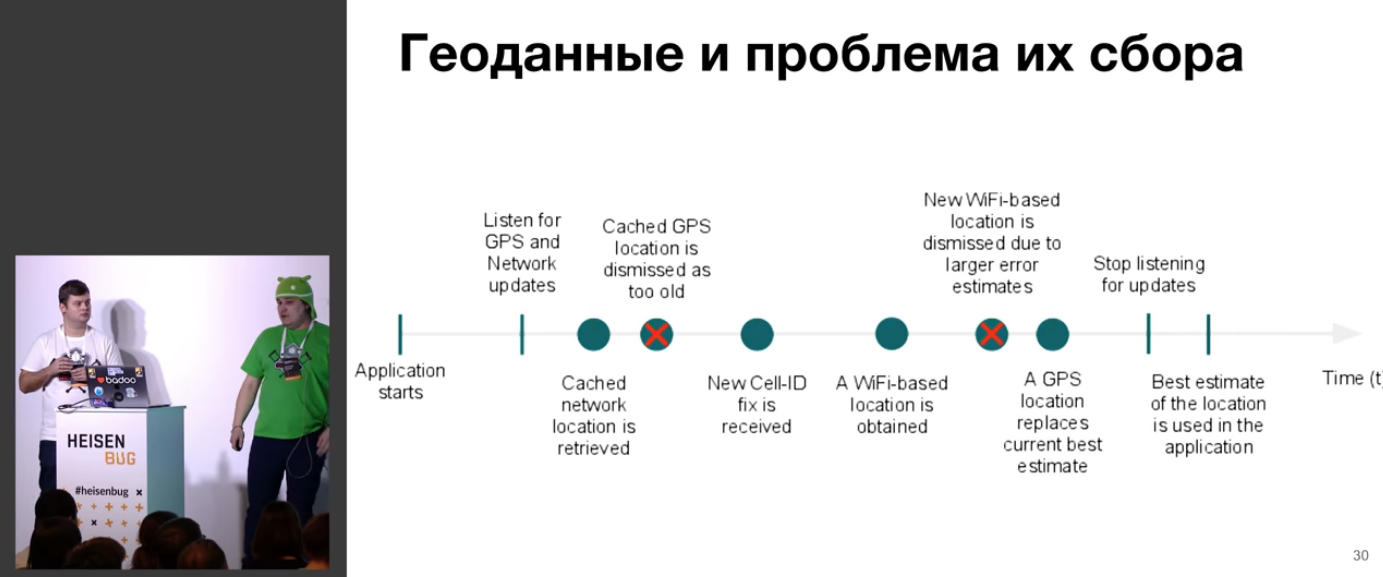

3. Testing geolocation in Badoo: cones, stones, crutches and selfie stick

Speakers: Alexander Host, Nikolay Kozlov; rating: 4.57 ± 0.10. Link to the presentation.

Working with location is quite nontrivial, and along the way there are many things that are hard to predict in advance. The speakers highlight the problems and nuances of the topic, hand out useful tips and talk about the tools they use. There is not much information on the topic, so the talk will be useful to almost anyone.

First, the speakers show off a bit about how cool and big Badoo is. I don't use the service myself, so the numbers put me in mild shock:

And all this is stored correctly, in a protected form.

What the talk is about:

It starts with a kind of introduction to how modern geolocation works:

Next, several tasks are set (like “process a ton of geodata with the lowest possible power consumption”) and a bunch of examples are shown of where this geodata is used.

The problem is that a while may pass between the start of the application and obtaining geodata of the required accuracy.

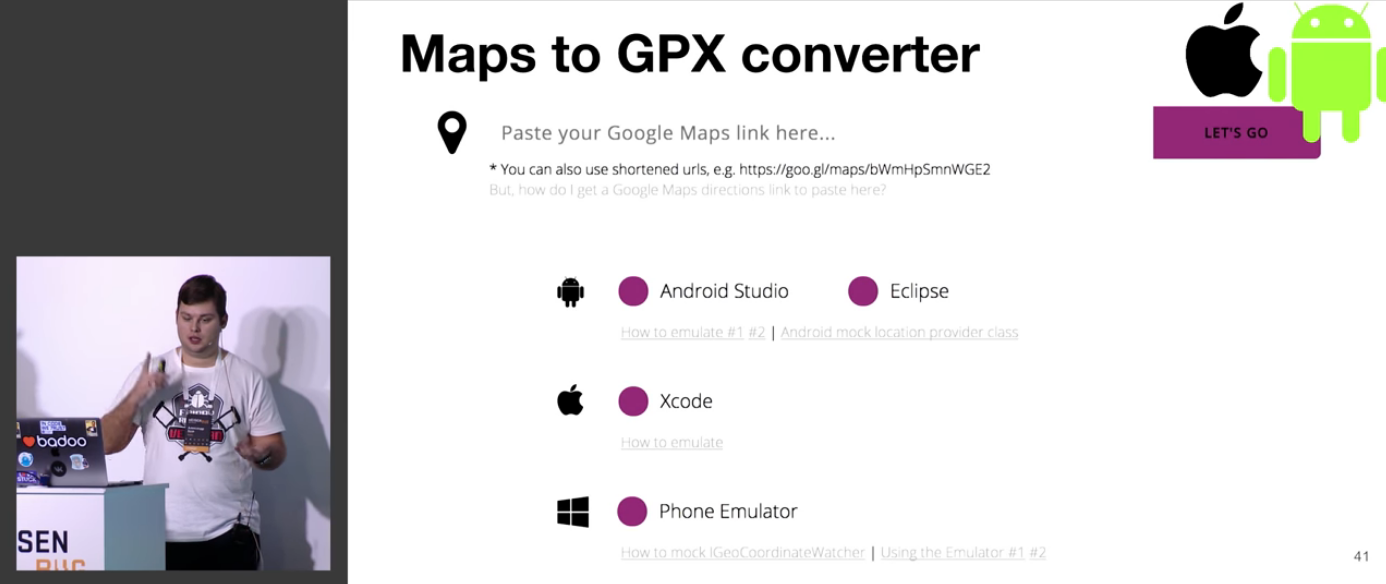

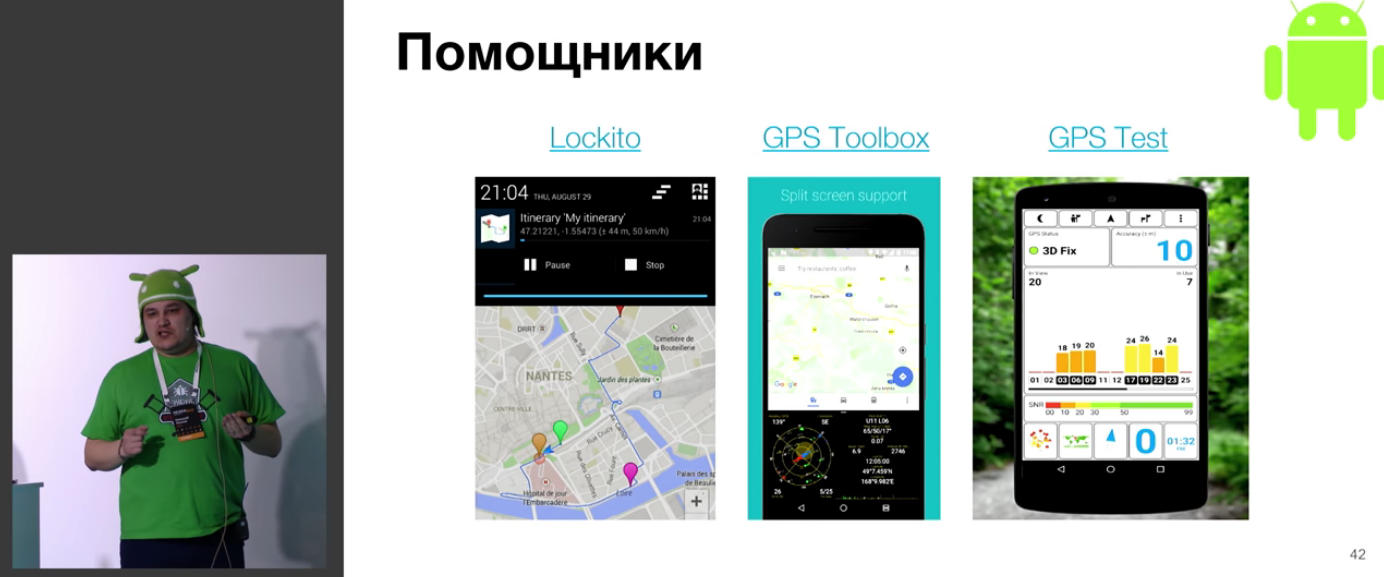

It is mentioned that the data obtained are not absolutely accurate, and that they need to be able to test. For testing, an android emulator and Xcode simulator are used, and there are bugs everywhere.
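
A test that has to live with inaccurate coordinates usually compares points with a tolerance rather than for equality. Here is a minimal sketch of my own using the haversine formula. It is not taken from the talk, and the function names and the spherical-Earth radius are assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def within_accuracy(expected, actual, accuracy_m):
    """True if `actual` lies within `accuracy_m` meters of `expected`."""
    return haversine_m(*expected, *actual) <= accuracy_m
```

A shift of 0.0001 degrees of latitude is about 11 meters, so it passes a 50-meter tolerance and fails a 5-meter one. The test then states the accuracy it expects explicitly instead of hoping for an exact match.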

Various interesting utilities:

Now the system is already receiving data (due to the above), and it is necessary to determine that it works at all somehow.

The problem of information noise is discussed, and sometimes it is not even clear which way to dig:

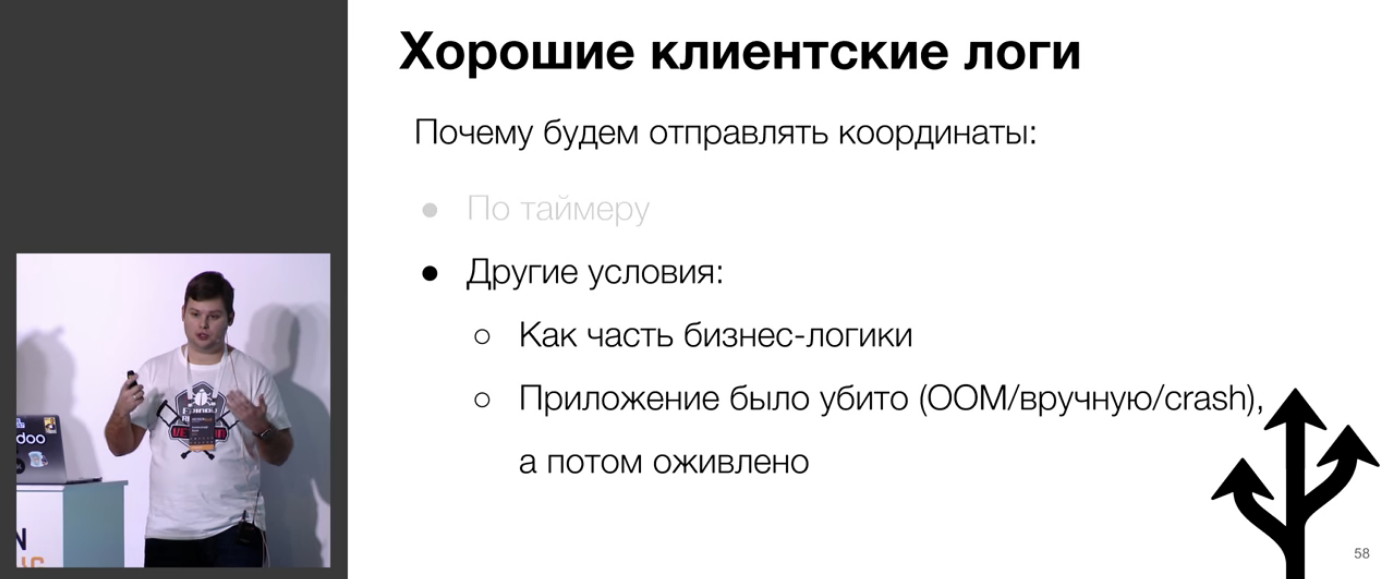

Naturally, here comes the turn of the logs. :

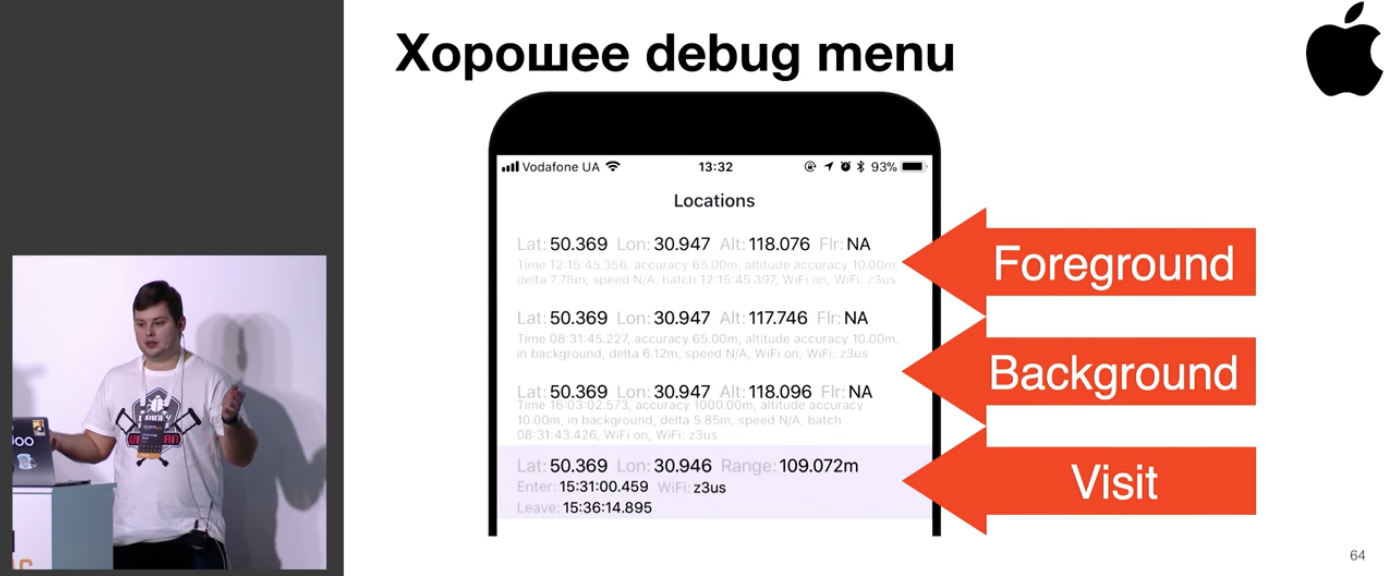

, , foreground, , , fused location data .

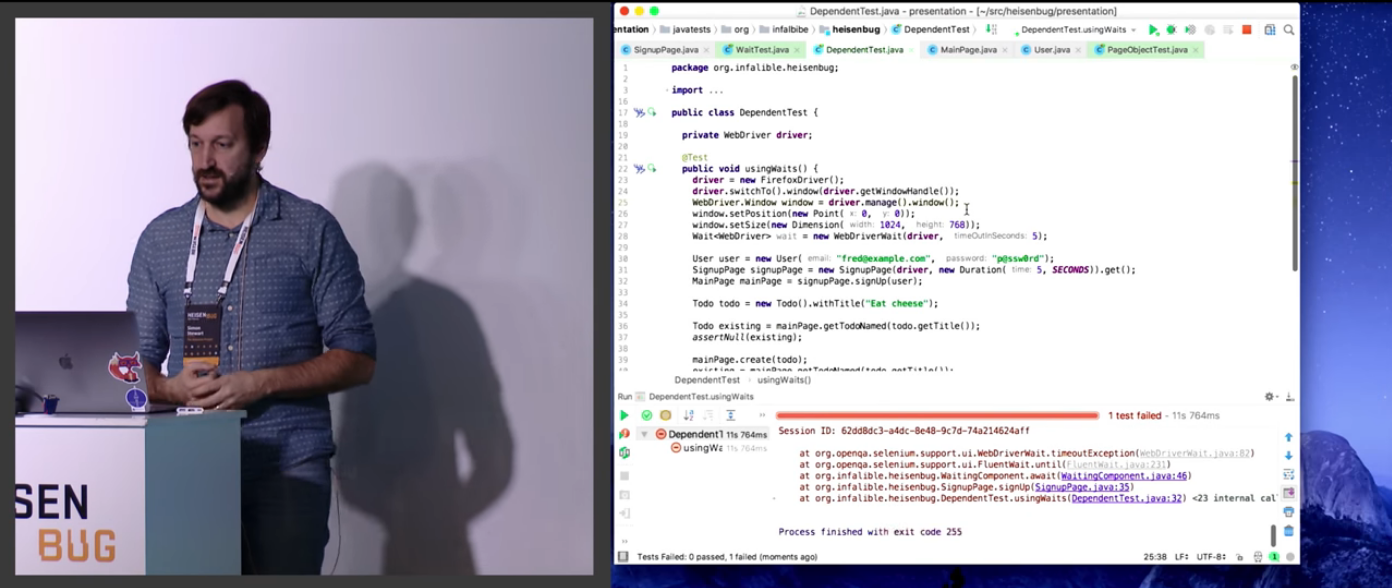

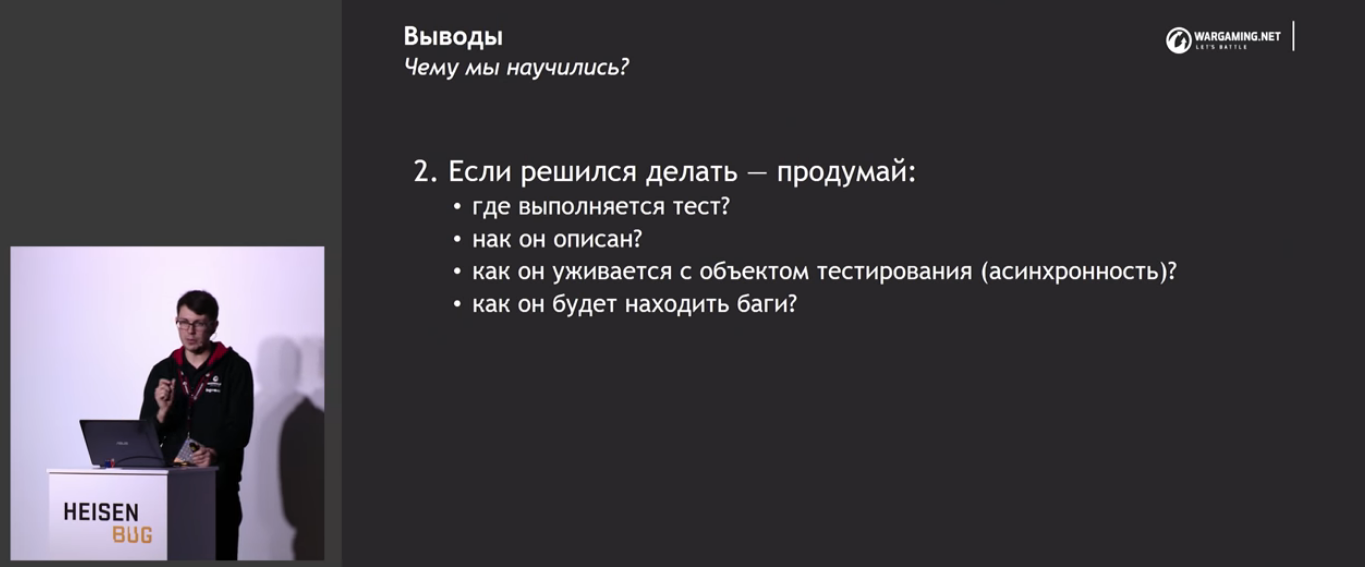

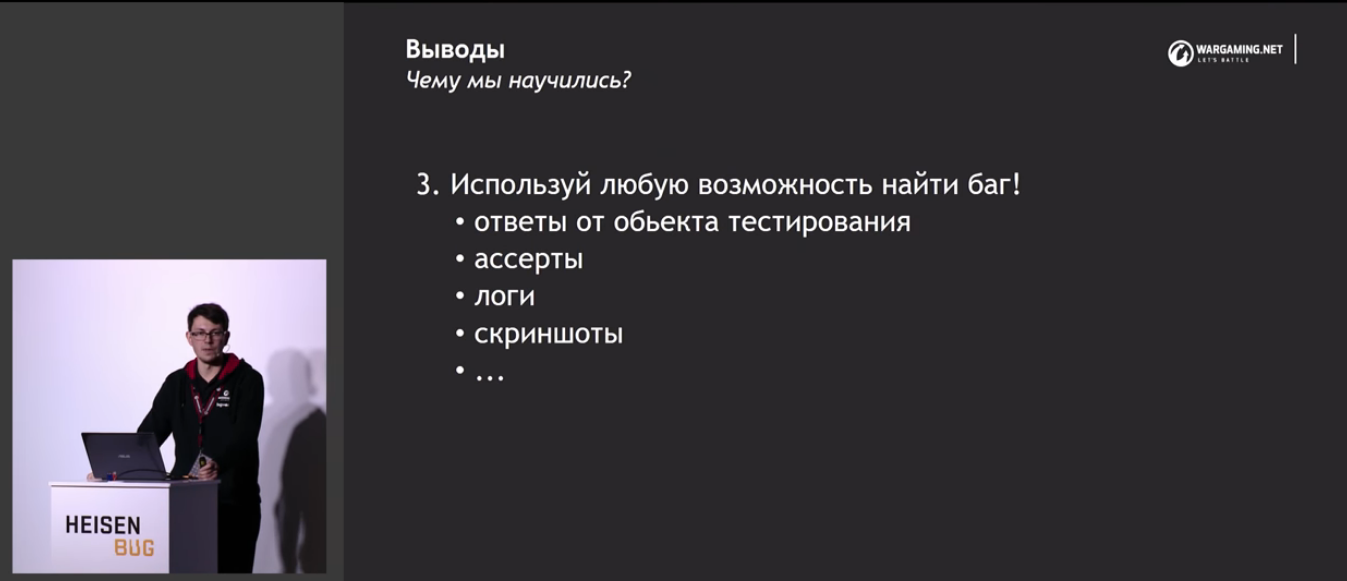

2. Flaky tests

Rating: 4.57 ± 0.06. Link to the presentation.

1. World of Tanks:

Rating: 4.61 ± 0.11. Link to the presentation.

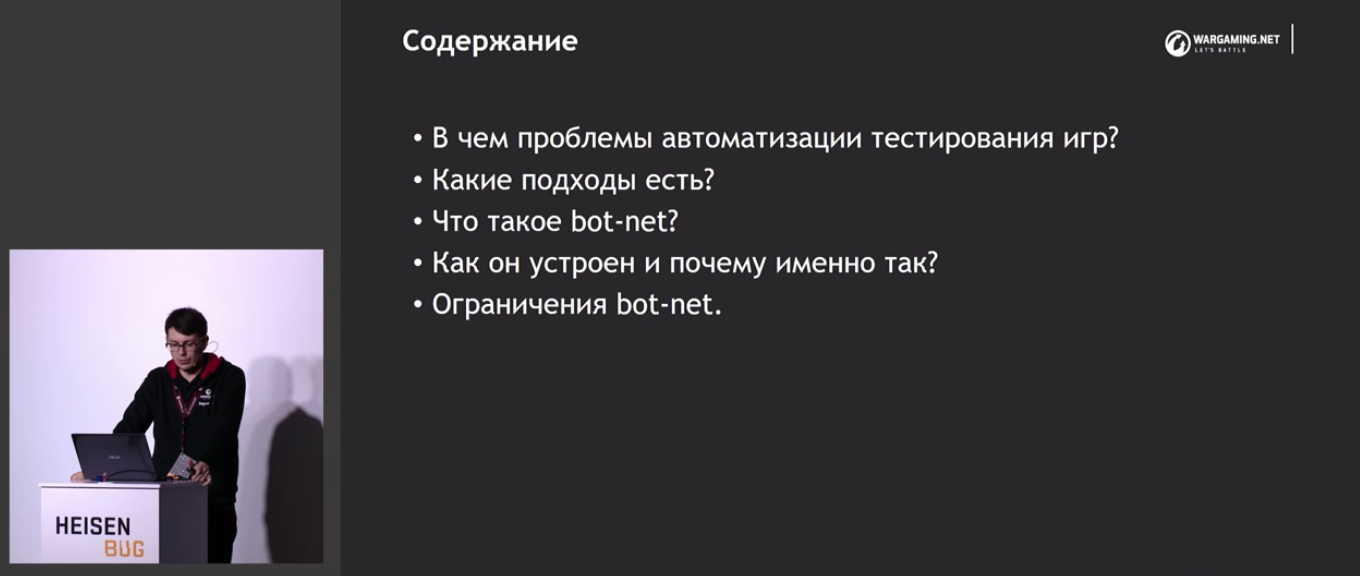

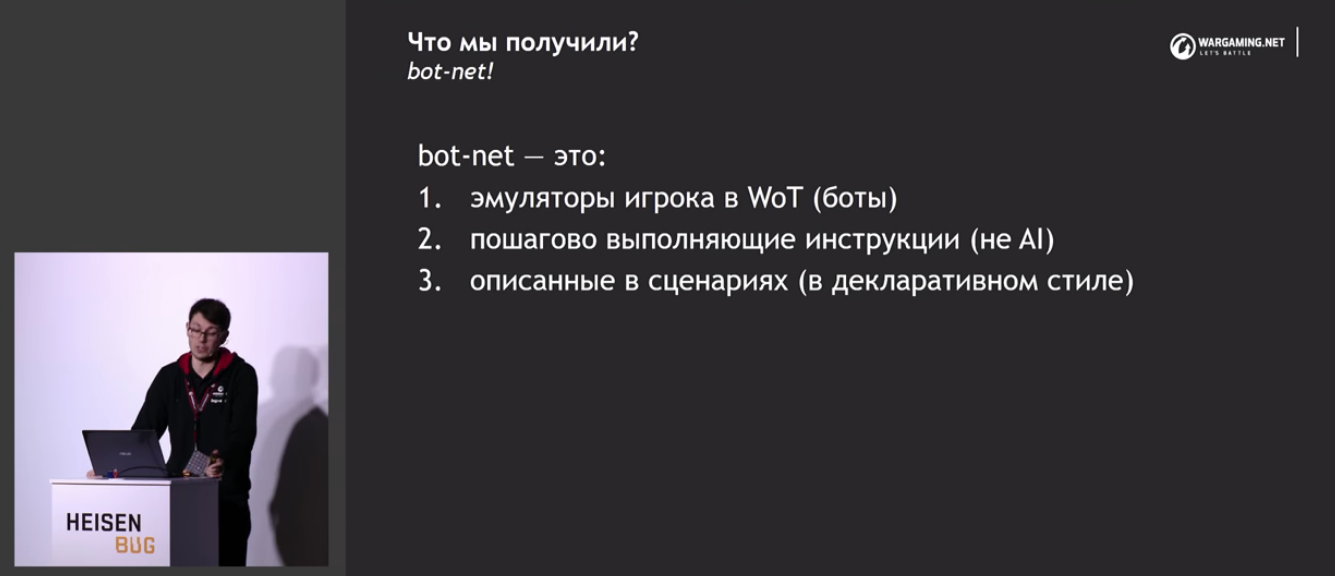

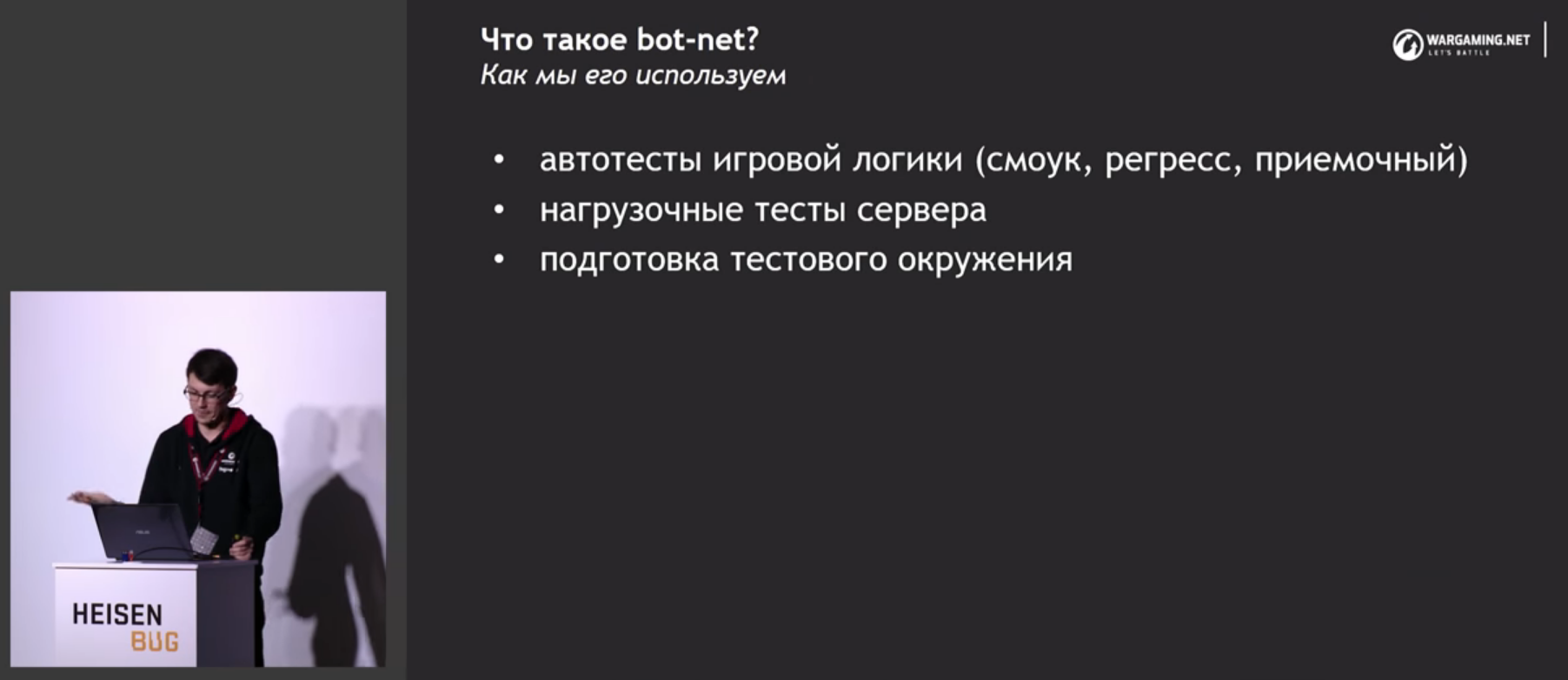

GameDev World of Tanks. , , ( -), «bot-net» – «World of Tanks» Python. .

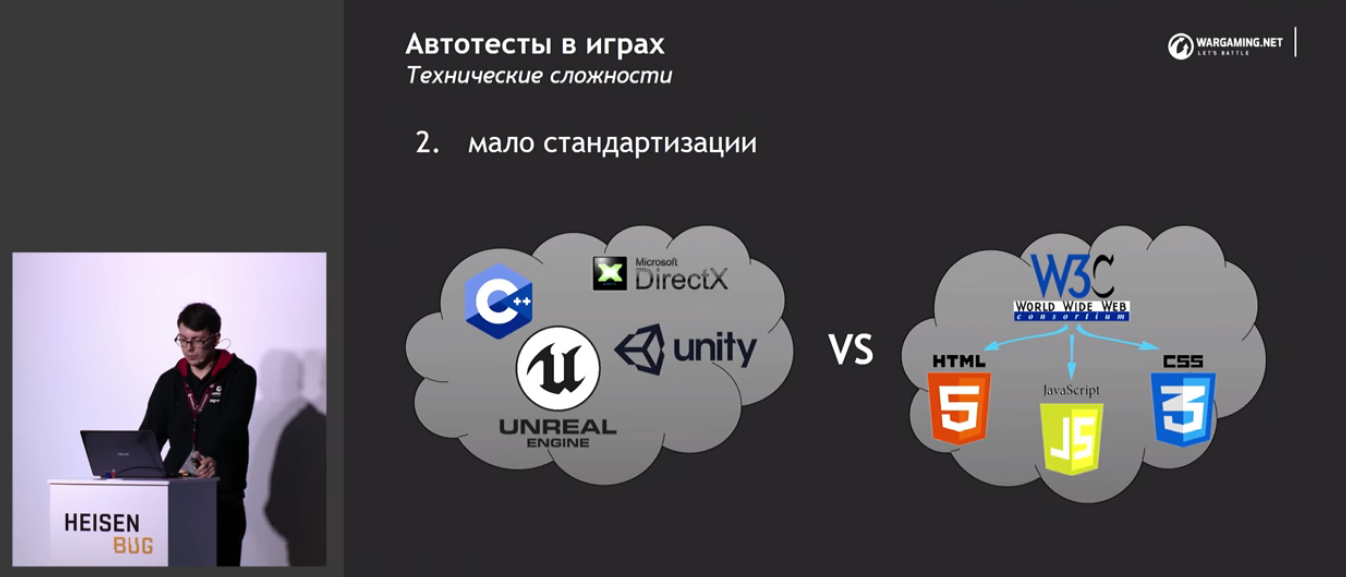

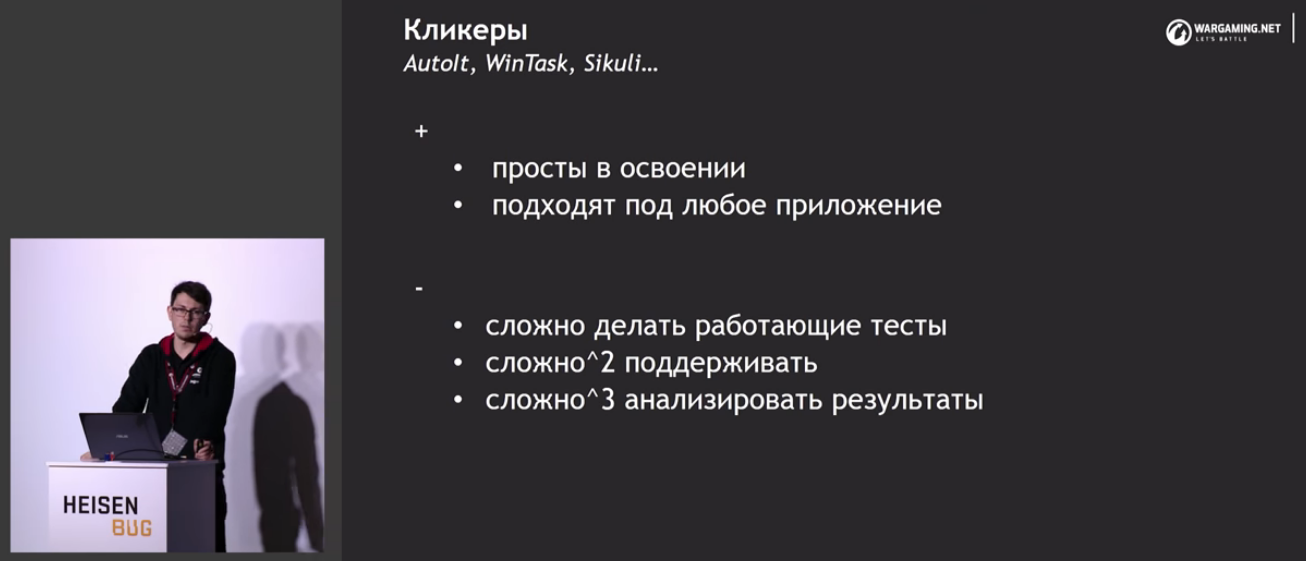

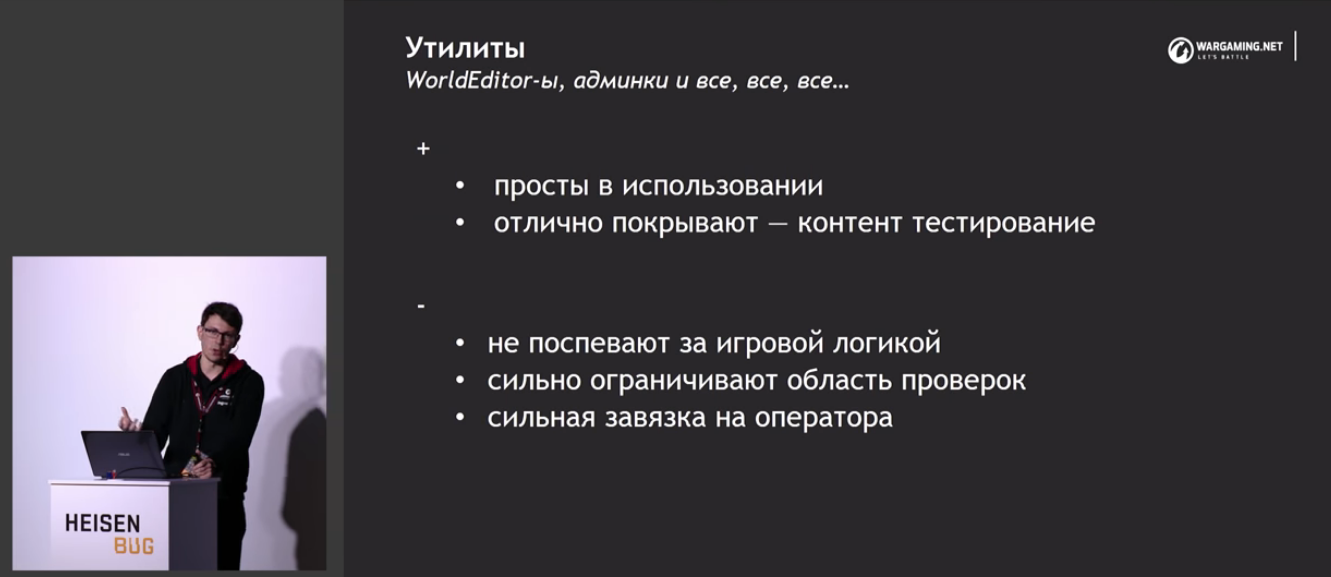

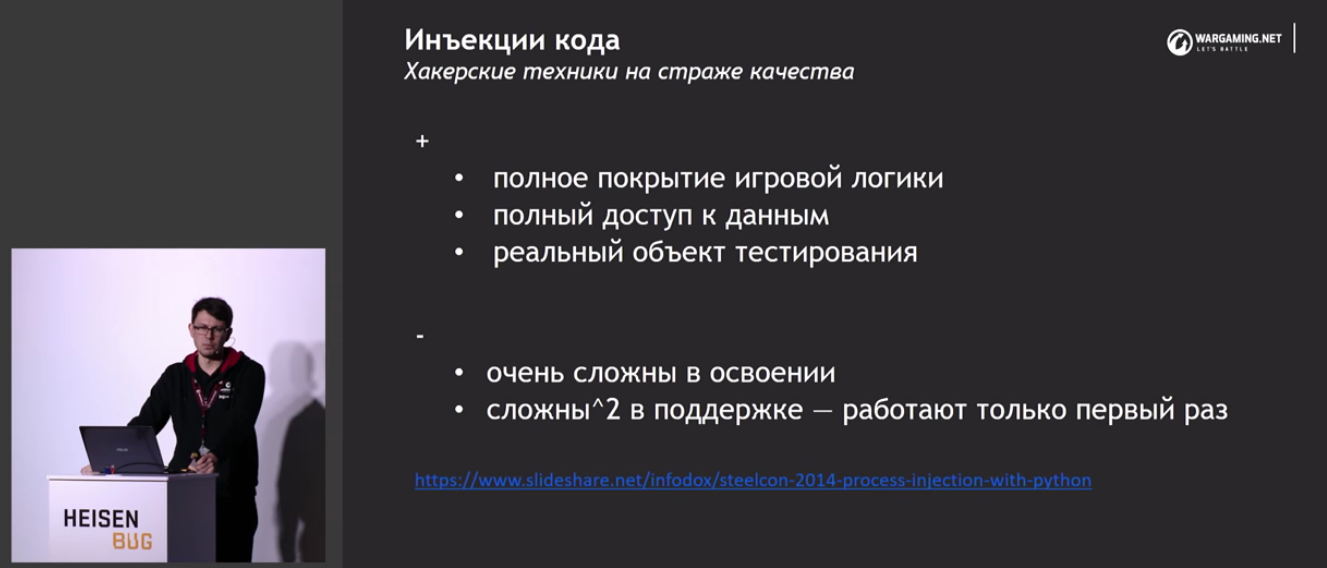

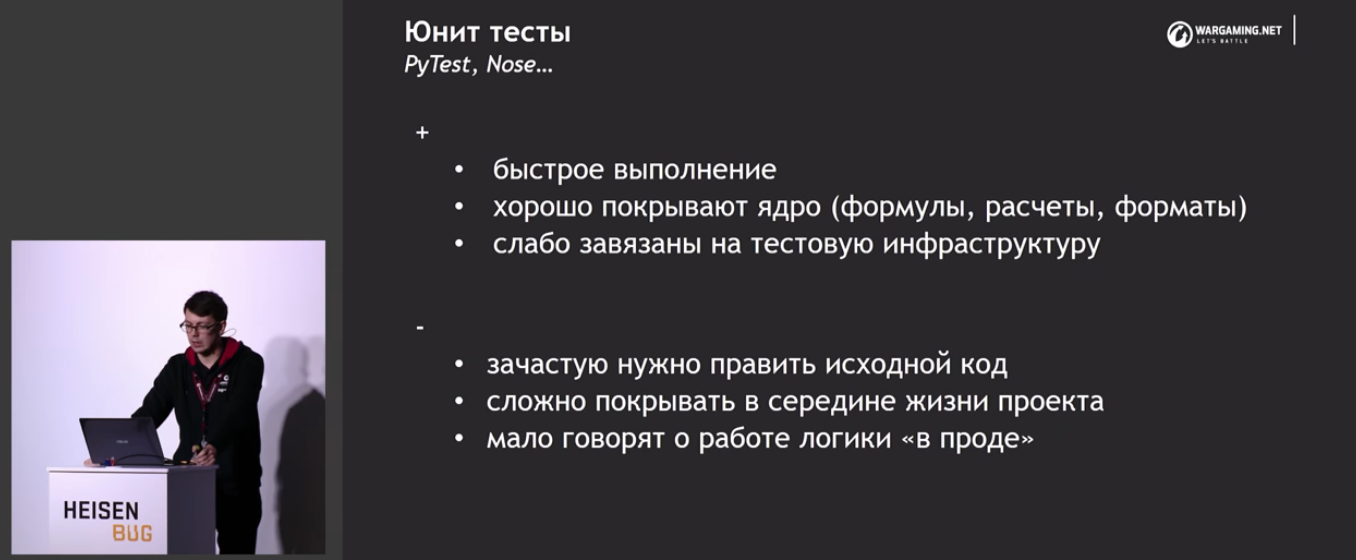

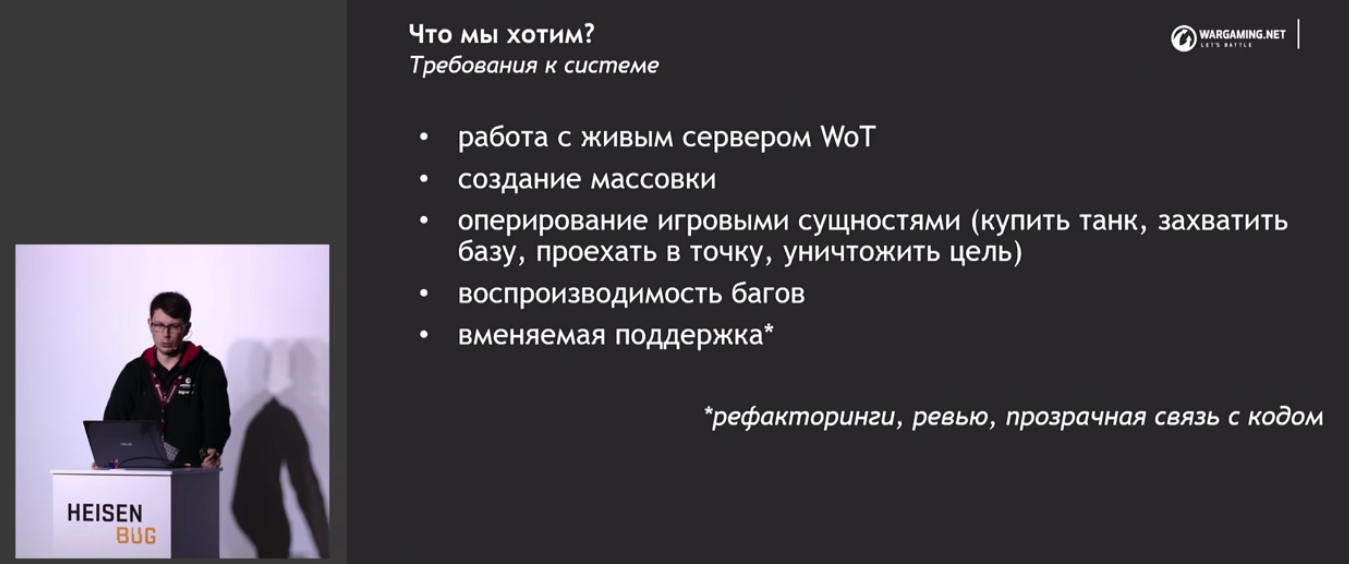

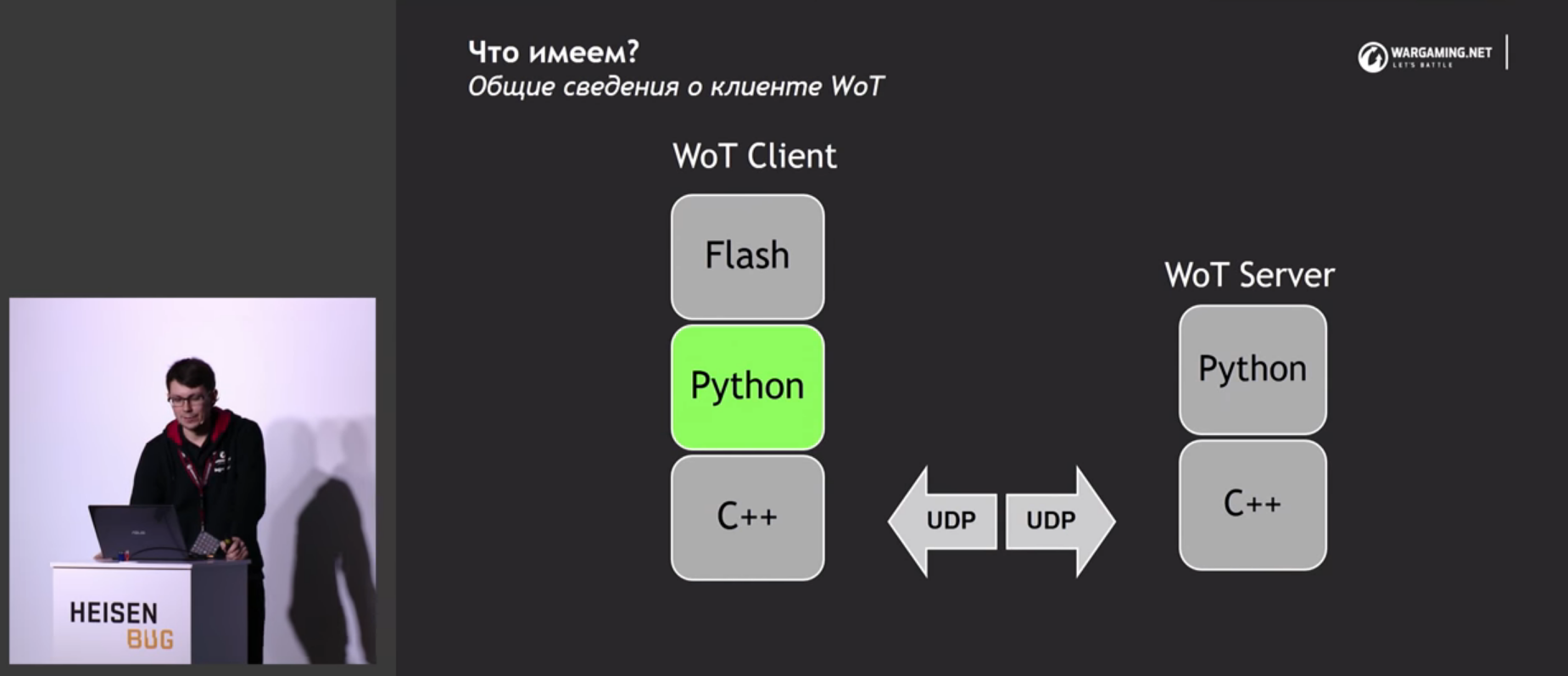

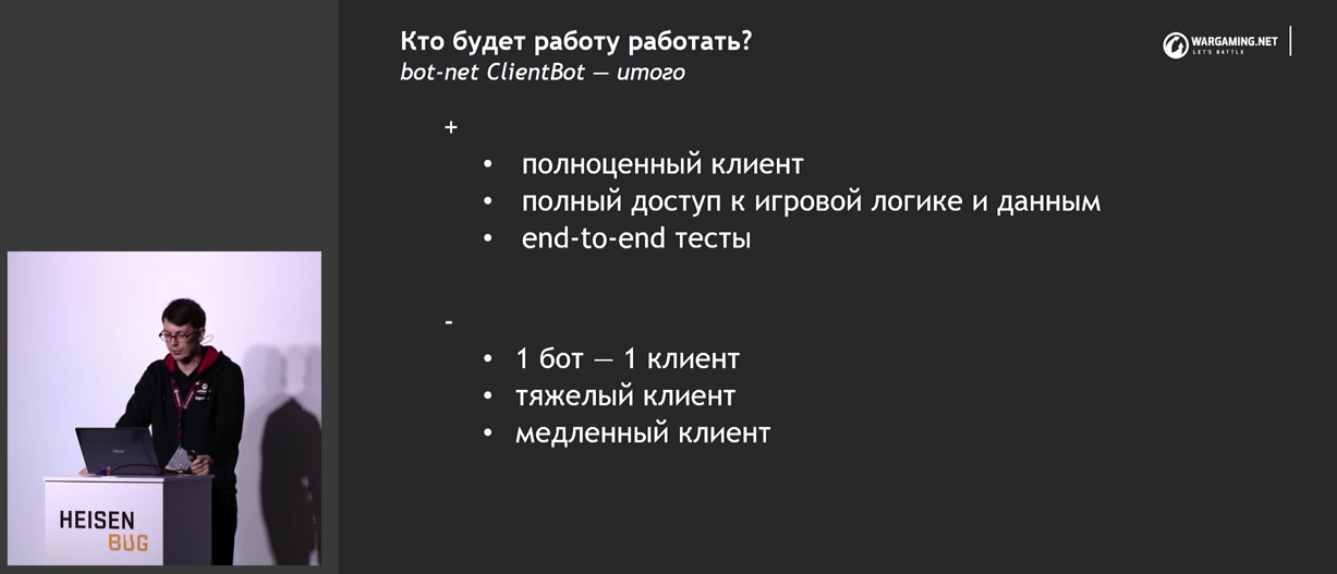

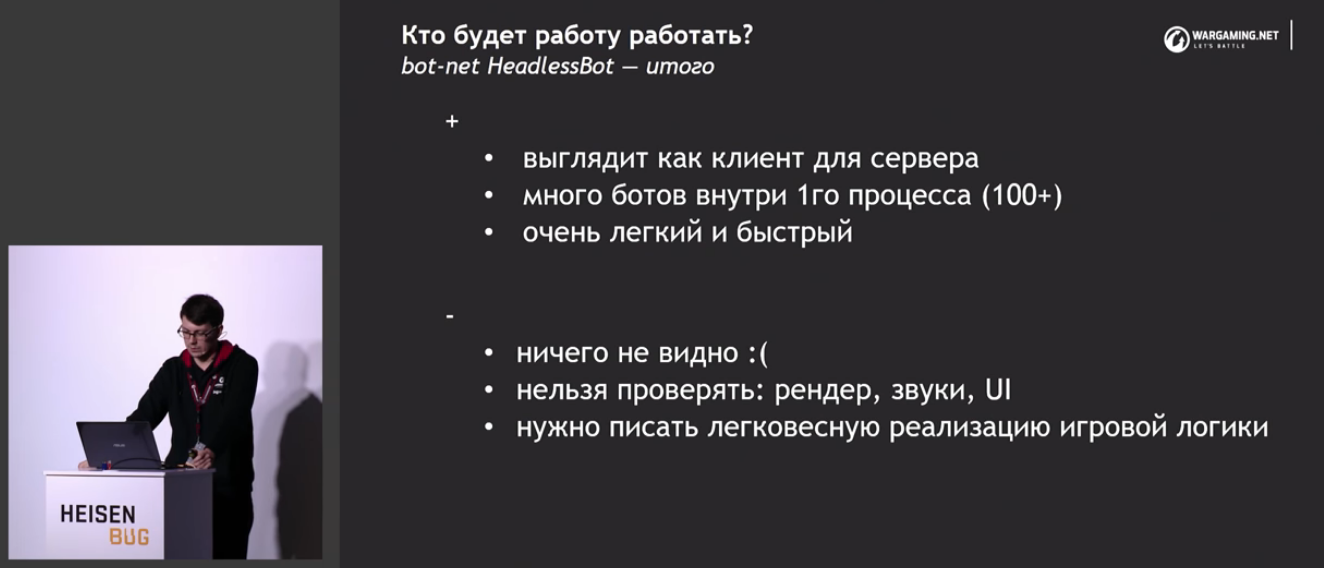

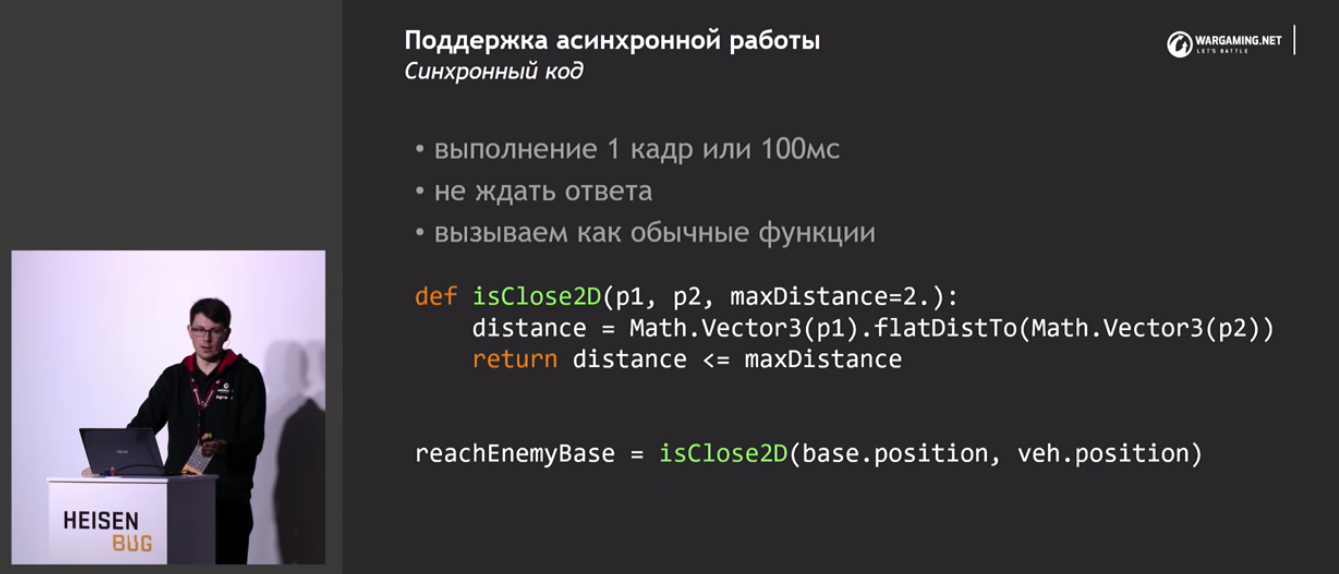

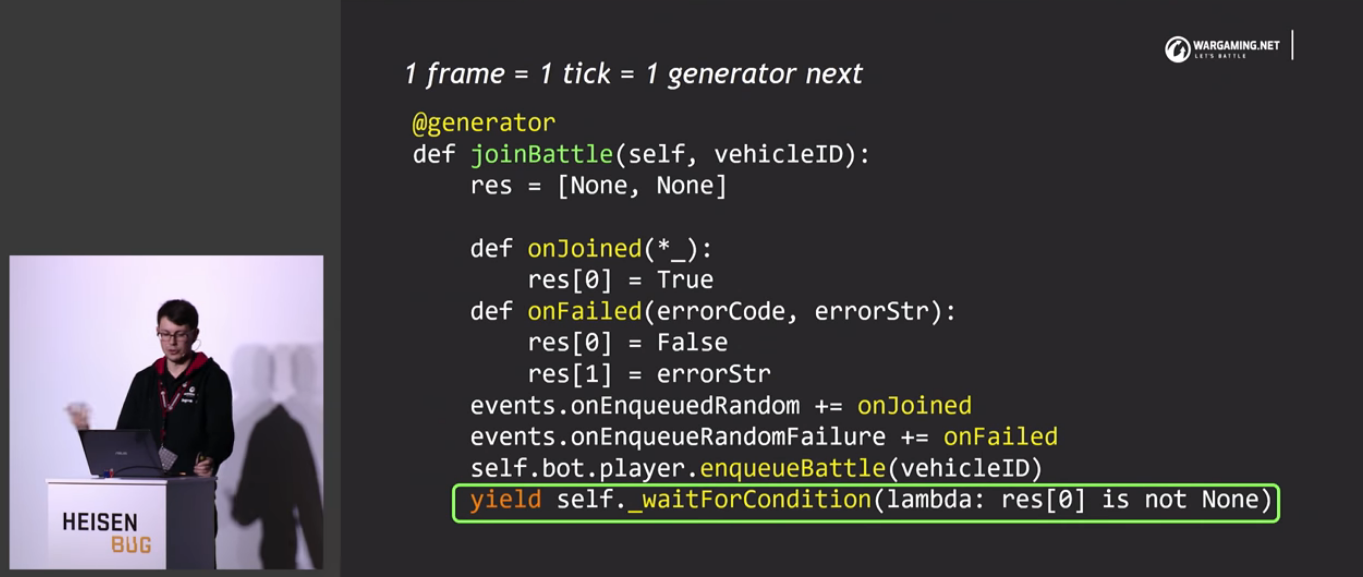

, ? XML, : C, C++, C#, Python, LUA.

That's all.

A minute of advertising. As you probably know, we run conferences, including Heisenbug 2018 Piter (17-18 … 2018). In short, come along, we are waiting for you.


Source: https://habr.com/ru/post/349144/
