How to manage an infrastructure of hundreds of servers and avoid problems: 5 tips from King Servers engineers

On our Habr blog we write a lot about building IT infrastructure; for example, we have covered how to choose data centers in Russia and the United States. King Servers now runs hundreds of physical and thousands of virtual servers. Today our engineers share tips on managing an infrastructure of this size.

Automation is very important

For an infrastructure of this scale, well-organized monitoring is one of the most important ingredients of success. It is physically impossible to watch this many servers by hand, even with a large staff of engineers, so automation is necessary: the system itself should find potential problems and send notifications about them. For such monitoring to be as effective as possible, all possible points of failure have to be anticipated. Problems should be detected automatically at an early stage, so that engineers have time to act in a normal working mode instead of restoring services that have already gone down.
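As an illustration of detecting problems at an early stage, here is a minimal sketch of a two-level threshold check. The names `Threshold`, `classify`, `disk_used_percent` and the 80% / 95% limits are assumptions made for this example, not values from our monitoring system; the only library call used is `shutil.disk_usage` from the Python standard library.

```python
import shutil
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warning: float   # notify engineers while there is still time to react
    critical: float  # the service is about to fail or has already failed


def classify(value, threshold):
    """Map a measured value to 'ok', 'warning' or 'critical'."""
    if value >= threshold.critical:
        return "critical"
    if value >= threshold.warning:
        return "warning"
    return "ok"


def disk_used_percent(path="/"):
    """Return the used share of the filesystem holding `path`, in percent."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total * 100


# Hypothetical limits: the warning level is what buys engineers time.
DISK_USAGE = Threshold(warning=80.0, critical=95.0)

if __name__ == "__main__":
    print(classify(disk_used_percent("/"), DISK_USAGE))
```

The point of the separate warning level is that a notification arrives while the disk is filling up, not after the service has stopped.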


It is also important to automate routine activities as much as possible. Installing support keys on 10 servers is one task; doing it on three hundred is a completely different one.

Keep in mind that infrastructure tends to grow over time, while the number of engineers in the company usually does not grow at the same pace. To avoid problems, introduce automation right away, even if it currently seems that you can cope by hand. A common mistake is to assume that an action repeated once a week does not need automating. In fact, the time spent writing a script soon pays for itself through the time saved on repetitive actions. This approach also builds up, over time, a library of scripts tailored to your infrastructure, and ready-made code fragments can be reused in new scripts, which saves even more time.
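The payback argument can be checked with simple arithmetic. The sketch below is only an illustration: the function name `break_even_runs` and the figures in the example (4 hours to write the script, 30 minutes per manual run, 2 minutes per automated run) are assumptions, not measurements from our infrastructure.

```python
import math


def break_even_runs(script_hours, manual_minutes, automated_minutes=0.0):
    """Number of runs after which writing the script has paid for itself."""
    saved_per_run = manual_minutes - automated_minutes
    if saved_per_run <= 0:
        raise ValueError("automation must save time on every run")
    return math.ceil(script_hours * 60 / saved_per_run)


if __name__ == "__main__":
    # Hypothetical weekly task: the script pays off after this many weeks.
    print(break_even_runs(script_hours=4, manual_minutes=30, automated_minutes=2))
```

With these assumed numbers a task performed once a week pays back the script in about two months, and the estimate does not count the reuse of code fragments in later scripts.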

And, of course, all automatically generated notifications should go to more than one engineer. Even with well-designed automation, a single engineer cannot effectively manage hundreds of services. The right setup is to have one of the engineers online at all times. In our experience, this requires at least 4 engineers.
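One way to keep somebody online at all times is a simple shift rotation. The following is a minimal sketch, assuming 8-hour shifts, four placeholder engineer names and an arbitrary rotation start date; none of these details describe our actual schedule.

```python
from datetime import datetime, timezone

# Placeholder names and parameters, chosen only for this example.
ENGINEERS = ["engineer-a", "engineer-b", "engineer-c", "engineer-d"]
SHIFT_HOURS = 8
ROTATION_START = datetime(2018, 1, 1, tzinfo=timezone.utc)


def on_call(now, engineers=ENGINEERS, shift_hours=SHIFT_HOURS):
    """Return the engineer whose shift covers the moment `now` (UTC-aware)."""
    elapsed = now - ROTATION_START
    shift_index = int(elapsed.total_seconds() // (shift_hours * 3600))
    return engineers[shift_index % len(engineers)]


if __name__ == "__main__":
    print(on_call(datetime.now(timezone.utc)))
```

With four people and three shifts per day, the shifts move around the clock from day to day, so nobody is permanently assigned to nights.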

Redundancy and backups save you in critical situations

With a large infrastructure, redundancy becomes even more important, because a failure affects a large number of users. It is worth making the following redundant:

- Server monitoring - it is not enough to build a system that monitors for failures; you also need tools for “monitoring the monitoring”.

- Management tools.

- Communication channels - if your data center has only one provider, a failure will cut all your hardware off from the world. We recently had a channel failure in our data center in the Netherlands: without channel redundancy, service for hundreds of our customers would have stopped, with corresponding consequences for the business.

- Power lines - modern data centers can bring 2 independent power lines to the racks. Do not neglect this, and do not save on additional power supplies for servers. If you have already bought a lot of servers that cannot take a spare power supply, you can install an automatic transfer switch (ATS), which is designed to connect equipment with a single power supply to 2 inputs.

  - Any equipment requires periodic maintenance, so the probability that the data center will at some point run with one of the power lines disconnected is 100%.

  - If you are lucky, the work will not be on your supply line, but you should not risk it.

  - Not only can services be unavailable for several hours, some servers may also fail to come back up after an abrupt power outage and require the intervention of engineers.

- Hardware - even high-quality equipment can fail, so the most important elements should be duplicated at the hardware level. For example, disks are what fails most often in servers, so RAID arrays with redundancy are a good idea, as is redundancy at the network level, where one server is connected to different switches so that no traffic is lost when one device fails. You also need at least a minimal stock of spare parts. Component failure in high-quality equipment is unlikely, but not impossible.
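The “monitoring the monitoring” item from the list above can be approached with a heartbeat: the monitoring system regularly touches a file, and an independent watchdog raises an alarm when the file stops being updated. This is a minimal sketch under assumptions; the function names, the file-based mechanism and the 300-second limit are illustrative and do not describe a specific tool.

```python
import time
from pathlib import Path

MAX_AGE_SECONDS = 300.0  # hypothetical limit for this example


def record_heartbeat(path):
    """Called by the monitoring system itself on every successful cycle."""
    Path(path).touch()


def heartbeat_is_stale(path, now=None, max_age_seconds=MAX_AGE_SECONDS):
    """Called by an independent watchdog, ideally from another host."""
    now = time.time() if now is None else now
    try:
        last_beat = Path(path).stat().st_mtime
    except FileNotFoundError:
        # No heartbeat was ever written: treat monitoring as down.
        return True
    return now - last_beat > max_age_seconds
```

The watchdog is useful only if it does not share the failure points of the system it watches, so it should run on separate hardware and send alerts through a separate channel.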

Regular backups, and regular tests of restoring from them, are also absolutely essential for managing any infrastructure. When you have to back up a lot of data and do it often, do not forget to monitor the performance of the server that stores the backups. It may well turn out that there is already so much data that one backup has not finished when the next one starts.
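One common way to notice overlapping backups is a lock file that a backup job must create before it starts. The sketch below assumes a job wrapped in Python; `backup_lock` and `BackupAlreadyRunning` are names invented for this example. It relies on `os.open` with `O_CREAT | O_EXCL`, which fails if the file already exists.

```python
import os
from contextlib import contextmanager


class BackupAlreadyRunning(RuntimeError):
    """Raised when the previous backup still holds the lock."""


@contextmanager
def backup_lock(lock_path):
    try:
        # O_EXCL makes creation fail if the lock file already exists.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        raise BackupAlreadyRunning(f"lock {lock_path} is held") from None
    try:
        # Store the PID so an engineer can see which process holds the lock.
        os.write(fd, str(os.getpid()).encode())
        yield
    finally:
        os.close(fd)
        os.remove(lock_path)
```

A job would run its work inside `with backup_lock(path):` and report `BackupAlreadyRunning` to monitoring instead of starting a second copy. Note that a lock left behind by a crashed job has to be cleaned up separately; this sketch does not handle that case.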

Documentation and logs

When a project is supported by several engineers, it is very important to document workflows as the infrastructure is upgraded. From the very beginning, you should create your own knowledge base and, as new features are introduced, write documentation on how to work with them, even if everyone currently supporting the project already knows how everything works. When the engineering team grows, well-written documentation speeds up the onboarding of new team members.

Do not forget that writing scripts is also software development. When several people work on a script, you need a version control system to track changes, so that if problems arise you can quickly roll back to the previous version.

When several people are working on the same task, it is important to pass on information about what has already been done. Keeping detailed work logs automatically and storing them on a central server saves considerable time. It also simplifies handing the task over to another person: there is no need to ask which actions were taken, because everything is in the log file.
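Work logs can be kept automatically by wrapping maintenance functions in a decorator. This is a minimal sketch using the standard `logging` module; the logger name `worklog`, the decorator `logged_action` and the placeholder `restart_service` are assumptions for illustration. To ship records to a central server, a handler such as `logging.handlers.SysLogHandler` could be attached to the logger; the address of that server is deliberately left out here.

```python
import functools
import logging

work_log = logging.getLogger("worklog")
work_log.setLevel(logging.INFO)


def logged_action(func):
    """Record the start, the outcome and the arguments of every call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        work_log.info("start %s args=%r kwargs=%r", func.__name__, args, kwargs)
        try:
            result = func(*args, **kwargs)
        except Exception:
            work_log.exception("failed %s", func.__name__)
            raise
        work_log.info("done %s", func.__name__)
        return result
    return wrapper


@logged_action
def restart_service(host, service):
    # Placeholder body: a real script would perform the restart here.
    return f"{service} restarted on {host}"
```

Because the logging happens in the decorator, engineers do not have to remember to write down what they did, and the next person can read the sequence of actions from the log.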

Analyze the response time of data center engineers

All decent modern data centers offer a remote hands service: if a problem arises, the server owner can ask on-site engineers to perform the necessary actions on the equipment. But the service is not always delivered well. There can be many reasons; among the most frequent are a heavy workload on the specialists or the absence of engineers on site (one data center in the US offered us exactly this option: an engineer who comes out only during the working day). In critical situations, however, response time is very important, and problems do not arise only during working hours.

Therefore, you should not move the entire infrastructure to a single data center at once. It is better to move in stages, which lets you identify possible problems before taking any countermeasures becomes too difficult and expensive.
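During a staged move, the response times of remote hands requests can be recorded and summarized before more equipment is committed to the site. The sketch below is an assumption about how such an analysis might look: `summarize_response_times` is a name invented here, the 95th percentile uses the nearest-rank method, and the sample numbers are made up.

```python
import math
from statistics import median


def summarize_response_times(minutes):
    """Summarize how long remote hands requests waited, in minutes."""
    if not minutes:
        raise ValueError("need at least one recorded request")
    ordered = sorted(minutes)
    # Nearest-rank 95th percentile: the smallest value that covers 95% of requests.
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "median": median(ordered),
        "p95": ordered[rank - 1],
        "worst": ordered[-1],
    }


if __name__ == "__main__":
    # Made-up sample: four quick responses and one request that waited 4 hours.
    print(summarize_response_times([10, 15, 20, 25, 240]))
```

The median alone can look fine while the slowest requests, often the ones made outside working hours, are the ones that matter in a critical situation, which is why the sketch also reports the tail.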

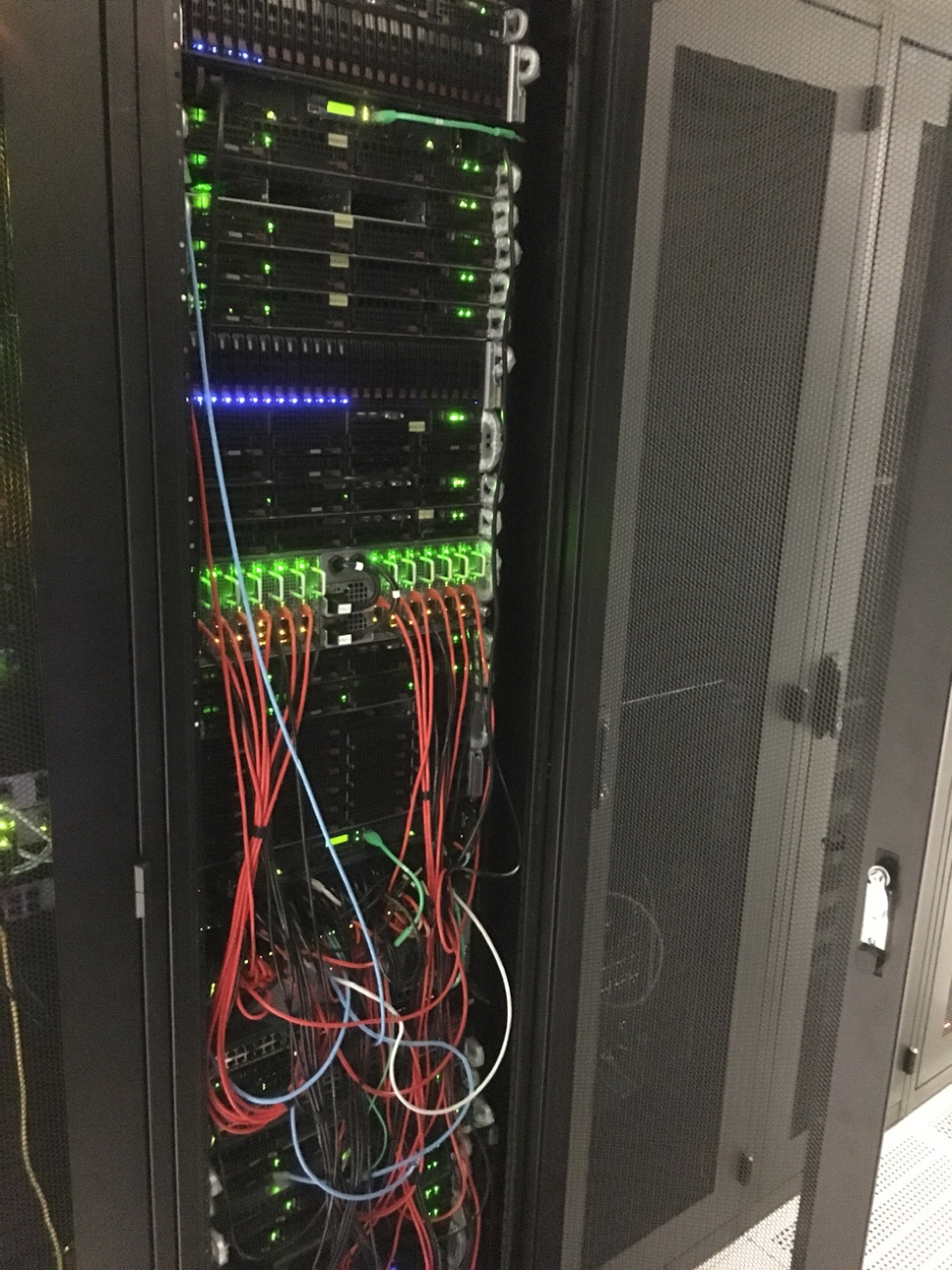

It may also turn out that after you have transported the equipment and carefully laid all the cables, after a couple of years and several dozen requests for assistance as part of the remote hands service, the picture will look like this:

Then, during maintenance, you run into the problem that, because of the tangle of cables, one server cannot be removed from the rack without disrupting the others. It is important to check the state of the racks periodically and ask the data center staff to send photographs.

Do not skimp on hardware, and experiment carefully

Study the characteristics of the equipment carefully; this lets you predict possible problems more accurately. For example, with SSDs, a little extra time spent on analysis can get you hardware that will live much longer than drives bought in a hurry.

Be prepared for the fact that this approach will not let you save money here and now. In the long run, saving on hardware leads to losses: a lower price is paid for with lower reliability, and repairing and replacing hardware often ends up costing more than buying more expensive equipment that lives longer.

In our project we have been using SuperMicro products for many years, and these servers have had no serious unexplained failures. Not so long ago we also began, very cautiously, to work with Dell hardware. The company's products have an excellent reputation, but sharply increasing the amount of work done on new hardware is always risky, so we are moving gradually.

As for components, experiments are just as inappropriate here: it is better to be paranoid and buy only from trusted suppliers, even if that does not let you save money here and now. Likewise, it is absolutely not worth using desktop-class components in servers, even in the smallest amount; these are completely different classes of devices designed for radically different loads.

This will not end well, especially if the infrastructure is used by external customers. In the hosting industry it is common for a user to buy a virtual server for a business but forget to make backups or never test restoring from them (this is what small businesses saving on administrators looks like). If the physical server is also half assembled from desktop components, then very soon there will be a failure with complete data loss, which can simply kill someone's business. This must not be allowed to happen.

More useful links and materials from King Servers:

- Free infrastructure test from King Servers

- How and why we created a knowledge base

- How to choose a data center for an IT project in Russia: uptime, money and overall adequacy

- Popularity statistics of operating systems in IaaS: Ubuntu is still number one, CentOS popularity is growing

- When the “cloud” breaks down: what can be done in this situation?

Source: https://habr.com/ru/post/348798/
