Gesturizer: 3D Touch and gestures in iOS apps

Depending on the context and task, gestures performed with a stylus or finger on a mobile device can be more effective and convenient than standard interactions with buttons and menus. However, the need to memorize gestures and the commands they trigger causes some difficulty. In addition, the limited screen space on mobile devices and the presence of clickable and moving elements in the UI complicate gesture recognition. To address these problems, I would like to introduce the iOSGesturizer library.


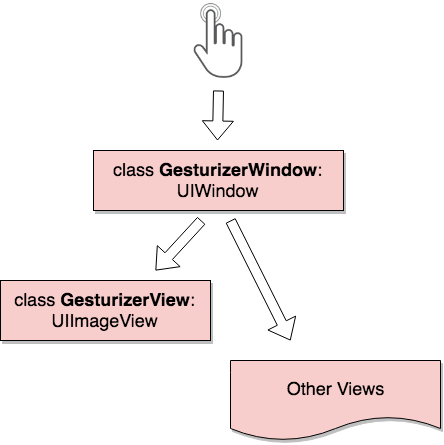


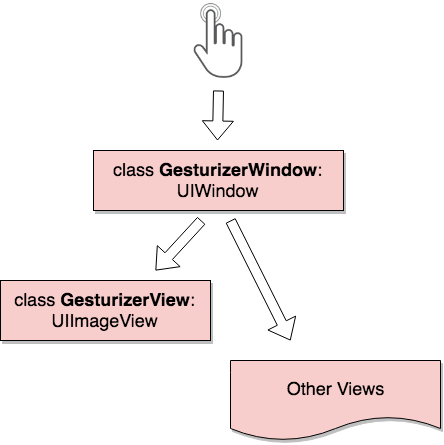


In short, this library lets you learn and use different types of one-finger gestures across the entire display area, in any iOS application, on devices with 3D Touch support. Let's take a closer look at an example.

Take Apple's simple, ready-made FoodTracker project, which is used in their educational materials. After adding the library to this project, either through CocoaPods or by copying the files in manually, open two files from the project.

In the AppDelegate.swift file, we initialize the window: UIWindow variable as an instance of the GesturizerWindow class:

```swift
// Use GesturizerWindow instead of a plain UIWindow so it sees every touch first.
var window: UIWindow? = GesturizerWindow()
```

Now open the MealTableViewController.swift file and add viewDidAppear(_ animated: Bool):

```swift
override func viewDidAppear(_ animated: Bool) {
    super.viewDidAppear(animated)

    // The key window is the GesturizerWindow created in AppDelegate.swift.
    let window = UIApplication.shared.keyWindow! as! GesturizerWindow
    let view = GesturizerView()

    // Called with the index of the recognized gesture.
    view.gestureHandler = { index in
        // UIAlertView is deprecated since iOS 9; it is kept here as in the original example.
        let alert = UIAlertView()
        alert.message = view.names[index]
        alert.addButton(withTitle: "OK")
        alert.show()
    }

    window.setGestureView(view: view)
}
```

Through gestureHandler, we define what happens when a gesture is recognized. In this case, the name of the gesture (cut, copy, or paste) is displayed in a pop-up window. Run the project and you get the following:

As you can see, the application recognizes three types of gestures. To display the available gestures (the example on the left), press hard, wait one second, and then make the movement you want with your finger. Once you have learned the gestures, you can perform them without waiting (the example on the right).
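The timing rule described above can be sketched as a small pure function. This is only an illustration, not the library's actual code: the `PressSample` type, the `GestureMode` cases, and the 0.5 force ratio are assumptions made for the example. Only the one-second delay comes from the article. The `force` and `maximumForce` fields stand in for the values that `UITouch.force` and `UITouch.maximumPossibleForce` report on 3D Touch devices.

```swift
// Hypothetical model of a single press; not part of iOSGesturizer.
struct PressSample {
    let force: Double         // stands in for UITouch.force
    let maximumForce: Double  // stands in for UITouch.maximumPossibleForce
    let heldFor: Double       // seconds the finger rested before it started moving
}

enum GestureMode: Equatable {
    case passThrough  // ordinary touch, left to the app's own UI
    case training     // show the gesture guide, then recognize
    case direct       // recognize immediately, without the guide
}

// Assumed threshold for a "hard" press, chosen for illustration only.
let hardPressRatio = 0.5
// The one-second wait mentioned in the article.
let trainingDelay = 1.0

func mode(for sample: PressSample) -> GestureMode {
    // Light touches (or devices reporting no force range) are not gestures.
    guard sample.maximumForce > 0,
          sample.force / sample.maximumForce >= hardPressRatio else {
        return .passThrough
    }
    // A hard press held long enough switches to training mode.
    return sample.heldFor >= trainingDelay ? .training : .direct
}
```

Keeping the decision in a pure function like this makes the thresholds easy to tune and to test without a device.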

From an architectural point of view, the whole thing looks like this:

GesturizerWindow handles all screen touches first and decides whether to send each event to GesturizerView or to the views beneath it. Note that GesturizerView is needed only for drawing gestures in training mode; measuring the press force and recognizing gestures both take place in GesturizerWindow. Fortunately, GesturizerWindow does not introduce delays in the application, because gestures are recognized with the fast and simple $1 Recognizer algorithm, which requires no training or training data.
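To give a feel for why the $1 Recognizer is cheap, here is a simplified sketch of its core steps: resample the stroke, rotate it by its indicative angle, scale it to a square, move its centroid to the origin, and pick the template with the smallest average point-to-point distance. This is not iOSGesturizer's implementation, and it omits the golden-section search over rotation angles that the full algorithm uses. All type and function names, the 64-point count, and the 250-unit square are assumptions for the example.

```swift
import Foundation

struct Point { var x: Double; var y: Double }
struct Template { let name: String; let points: [Point] }

func distance(_ a: Point, _ b: Point) -> Double {
    return hypot(b.x - a.x, b.y - a.y)
}

func centroid(_ points: [Point]) -> Point {
    let sumX = points.reduce(0.0) { $0 + $1.x }
    let sumY = points.reduce(0.0) { $0 + $1.y }
    return Point(x: sumX / Double(points.count), y: sumY / Double(points.count))
}

// Resample the stroke into `n` points spaced evenly along its path.
func resample(_ input: [Point], to n: Int) -> [Point] {
    guard input.count > 1 else {
        return Array(repeating: input.first ?? Point(x: 0, y: 0), count: n)
    }
    var points = input
    let total = zip(points, points.dropFirst()).reduce(0.0) { $0 + distance($1.0, $1.1) }
    let interval = total / Double(n - 1)
    var accumulated = 0.0
    var result = [points[0]]
    var i = 1
    while i < points.count {
        let d = distance(points[i - 1], points[i])
        if d > 0, accumulated + d >= interval {
            let t = (interval - accumulated) / d
            let q = Point(x: points[i - 1].x + t * (points[i].x - points[i - 1].x),
                          y: points[i - 1].y + t * (points[i].y - points[i - 1].y))
            result.append(q)
            points.insert(q, at: i)  // q starts the next segment
            accumulated = 0
        } else {
            accumulated += d
        }
        i += 1
    }
    // Rounding can leave the result one point short; pad with the last point.
    while result.count < n { result.append(points[points.count - 1]) }
    return Array(result.prefix(n))
}

// Rotate, scale, and translate so strokes can be compared point by point.
func normalize(_ raw: [Point], size: Double = 250, count: Int = 64) -> [Point] {
    var points = resample(raw, to: count)
    let c = centroid(points)
    // Indicative angle: from the centroid to the first point.
    let angle = atan2(c.y - points[0].y, c.x - points[0].x)
    points = points.map { p in
        let dx = p.x - c.x, dy = p.y - c.y
        return Point(x: dx * cos(-angle) - dy * sin(-angle) + c.x,
                     y: dx * sin(-angle) + dy * cos(-angle) + c.y)
    }
    let xs = points.map { $0.x }, ys = points.map { $0.y }
    let width = max(xs.max()! - xs.min()!, 1e-9)
    let height = max(ys.max()! - ys.min()!, 1e-9)
    points = points.map { Point(x: $0.x * size / width, y: $0.y * size / height) }
    let origin = centroid(points)
    return points.map { Point(x: $0.x - origin.x, y: $0.y - origin.y) }
}

func makeTemplate(_ name: String, _ raw: [Point]) -> Template {
    return Template(name: name, points: normalize(raw))
}

// Return the name of the template closest to the stroke, if any.
func recognize(_ raw: [Point], among templates: [Template]) -> String? {
    let candidate = normalize(raw)
    var best: (name: String, score: Double)?
    for t in templates {
        let sum = zip(candidate, t.points).reduce(0.0) { $0 + distance($1.0, $1.1) }
        let score = sum / Double(candidate.count)
        if let current = best, current.score <= score { continue }
        best = (t.name, score)
    }
    return best?.name
}

// Usage: three made-up templates and a larger, shifted "v" stroke.
let templates = [
    makeTemplate("v", [Point(x: 0, y: 0), Point(x: 50, y: 100), Point(x: 100, y: 0)]),
    makeTemplate("square", [Point(x: 0, y: 0), Point(x: 100, y: 0), Point(x: 100, y: 100),
                            Point(x: 0, y: 100), Point(x: 0, y: 0)]),
    makeTemplate("z", [Point(x: 0, y: 0), Point(x: 100, y: 0), Point(x: 0, y: 100),
                       Point(x: 100, y: 100)])
]
let stroke = [Point(x: 10, y: 20), Point(x: 110, y: 220), Point(x: 210, y: 20)]
print(recognize(stroke, among: templates) ?? "no match")
```

Each template is stored as one pre-normalized point list, so recognition is a single pass over a fixed number of points per template. That is what makes this family of recognizers suitable for running inside touch handling.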

If the idea interests you, pull requests are welcome.

P.S. In the future, I plan to add functionality for customizing gestures and other parameters.

Source: https://habr.com/ru/post/348766/
